Abstract

Machine Learning (ML) is increasingly used to predict fuel spray characteristics, but ML conventionally requires large datasets for training. In the field of synthetic fuel sprays, training data are limited. One solution is to reproduce experimental results using Computational Fluid Dynamics (CFD) and then to augment or replace experimental data with more abundant CFD output data. However, this approach can obscure the relationship of the neural network to the training data by introducing new factors, such as CFD grid design, turbulence model, near-wall treatment, and the particle tracking approach. This paper argues that CFD can be eliminated as a data augmentation tool in favour of a systematic treatment of the uncertainty in the neural network training. Confidence intervals are calculated for neural network outputs, and these encompass both (1) uncertainty due to errors in experimental measurements of the neural networks’ training data and (2) uncertainty due to under-training the neural networks with limited experimental data. This approach potentially improves the usefulness of artificial neural networks for predicting the behaviour of sprays in engineering applications. Confidence intervals represent a more rigorous and trustworthy measure of the reliability of a neural network’s predictions than a conventional validation exercise. Furthermore, when data are limited, the best use of all available data is to improve the training of a neural network and our confidence in that training, rather than to reserve data for ad-hoc testing, an exercise that can at best approximate a confidence interval.

Keywords

- Artificial neural networks

- Confidence intervals

- Uncertainty

- Machine learning

- Fuel spray penetration

- Synthetic fuel

- Polyoxymethylene dimethyl ethers (OME)

- Diesel

6.1 Introduction

Machine learning is increasingly used to predict fuel spray characteristics, such as spray penetration [1, 2]. However, the cost of fuel spray experiments limits the available training data. When a neural network is trained on limited data, there is a trade-off between over-fitting the data and over-simplifying the neural network (providing too few nodes or layers within the network). A nonlinear phenomenon, such as spray breakup, requires a degree of neural network complexity that cannot be trained reliably with the quantity of experimental spray penetration data typically available. To get around this, researchers have augmented experimental spray results with complementary computational fluid dynamics (CFD) studies [3]. However, CFD occupies time and resources and risks distorting the original experimental data. There is the potential to remove CFD from the process and to train neural networks directly on experimental data [4]. This study proposes to encompass predictive uncertainty due to neural network under-training (due to limited training data) within confidence intervals around neural network predictions. The aim is to improve the practical usefulness of neural networks as predictive tools in engineering design, in cases where they are trained incompletely due to insufficient training data.

Confidence intervals for neural networks are not new. Chryssolouris et al. [5] derived confidence intervals for a neural network from fundamental statistics. To use this approach, the experimenter must assess the confidence intervals of each of the neural network’s training inputs and the sensitivity of each neural network output to variations in each input. Confidence intervals are typically available for spray data, because once a new experimental condition is created, experiments are easily repeated. A matrix of dm⁄dn sensitivities sized m outputs by n inputs must be manipulated (transposed and multiplied) to calculate confidence intervals for the neural network. Trichakis et al. [6] reviewed numerical methods of estimating the confidence intervals on neural networks and applied the bootstrap method of Efron [7] to estimate confidence intervals on a neural network predicting the hydraulic head of aquifers. Although beyond the scope of Efron’s original proposal, Trichakis et al. showed that the bootstrap method is a practical way to estimate confidence intervals for perceptron neural networks. Confidence intervals have been used with some success in other applications of neural networks, including applications related to solar power generation [8].

The present study applies the bootstrap method to calculate confidence intervals on spray penetration predictions. The novel contribution of the present study is to incorporate within the confidence intervals not only (1) uncertainty arising from sampling error on measured input values but also (2) residual random errors in the neural network due to initialisation and incomplete training. Because of the limited data available for training, the neural network training algorithm does not converge reliably. This means that neural network output contains random error due to residual randomness from the initialisation state. We allow this random error to combine with variations that arise from resampling inputs (due to errors in experimental measurements). The confidence intervals produced by this method thus incorporate both sources of error. Thus, we prefer repeated, abortive attempts at neural network training, with suitable confidence intervals, over a single neural network that over-fits the data. We recognise that in general simpler neural networks (containing fewer nodes) can be trained on less abundant data, but there is typically a lower limit on neural network complexity whereby an over-simple neural network introduces model bias (a situation in which the neural network is insufficiently complex to represent adequately the output despite successful training). The methods suggested in this paper offer a means of training suitably complex neural networks from limited experimental data without relying on CFD or any data augmentation techniques. By providing confidence intervals, the methods suggested in this paper support the judicious application of neural networks to practical problems in engineering.

6.2 Methodology

This investigation uses liquid and vapour spray penetration data from Honecker et al. [9]. The authors began with a common rail injector having specific flow rate of 310 mL/30 s at 100 bar from 8 holes of 125 µm diameter. They modified the injector to have only three holes, and they measured the injection plume from only the “downward facing” hole. Measurement was by means of three cameras with no filter, 308 nm filter and 520 nm filter, respectively. Images were processed in Matlab to identify contrast thresholds in each camera view representing the boundaries of the liquid and vapour phases.

The researchers ran diesel and polyoxymethylene dimethyl ethers (OME) fuel injection events across three different injection pressure conditions and captured images at 100 ns intervals. For this work we have used the OME data only. For each injection pressure condition, 20 injection events were measured, resulting in a total of 1260 images. For each group of 20 images having the same injection pressure condition and time stamp, the experimenters produced an average vapour penetration length with standard deviation and, where available, an average liquid penetration length with standard deviation. This resulted in 63 average penetration length data points for the vapour phase and 57 for the liquid phase. Wishing to use the raw, resampled data but having access only to the mean and standard deviation figures published by Honecker et al., the present study calculates a normally distributed population of 20 penetration lengths for every experimental condition using the normdist (normal distribution) function in Microsoft Excel [ver. 16.61.1 (2022)]. This resulted in 63 × 20 data points for the vapour phase and 57 × 20 for the liquid phase.
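The reconstruction of repeat samples from a published mean and standard deviation can be sketched as follows. This is a minimal illustration in Python rather than the Excel workflow used in the study; the function name `synthesise_samples` and the numerical values are hypothetical, and the sketch assumes independent normal draws for each experimental condition.

```python
import random
import statistics


def synthesise_samples(mean, std, n=20, rng=None):
    """Draw n values from a normal distribution with the given mean and std."""
    rng = rng or random.Random()
    return [rng.gauss(mean, std) for _ in range(n)]


# Hypothetical summary statistics for one (injection pressure, time) condition, in mm.
rng = random.Random(0)  # fixed seed so the sketch is repeatable
samples = synthesise_samples(mean=25.0, std=1.5, n=20, rng=rng)

print(len(samples))
print(statistics.mean(samples), statistics.stdev(samples))
```

With only 20 draws, the sample mean and standard deviation will approximate, but not exactly reproduce, the published values.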

We build 100 separate training data sets of 57 data points each from the available liquid phase data, and again (100 training sets of 63 points each) from the vapour phase data. The choice of 100 is arbitrary, but it is intentionally larger than the number of repeat samples of each experimental data point. We use the resulting 2 × 100 data sets to train 100 neural networks on the liquid phase data and 100 neural networks on the vapour phase data. Each of the 2 × 100 data sets contains one each of the permutations of injection pressure and time from the original experimental study (all 63 vapour phase permutations from the vapour phase or all 57 from the liquid phase). However, each of the data points in each of the 2 × 100 sets is selected randomly from among the 20 available samples for that data point.
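The construction of the training sets described above might look like the following sketch. The dictionary layout, the function name `build_training_sets`, and the even split of 19 time stamps per injection pressure are assumptions made for illustration; the source states only that there are 57 liquid phase conditions with 20 samples each.

```python
import random


def build_training_sets(population, n_sets=100, rng=None):
    """Build n_sets training sets with one randomly chosen sample per condition.

    population maps a condition key, here (pressure_bar, time_index),
    to the list of repeat samples available for that condition.
    """
    rng = rng or random.Random()
    conditions = sorted(population)
    return [
        [(cond, rng.choice(population[cond])) for cond in conditions]
        for _ in range(n_sets)
    ]


# Hypothetical liquid phase population: 3 pressures x 19 time stamps = 57 conditions.
rng = random.Random(1)
population = {
    (pressure, t): [rng.gauss(10.0 + 0.5 * t, 1.0) for _ in range(20)]
    for pressure in (700, 1000, 1500)
    for t in range(19)
}

training_sets = build_training_sets(population, n_sets=100, rng=rng)
print(len(training_sets), len(training_sets[0]))
```

Every training set covers all conditions exactly once, so no single network sees more than 57 (or 63) points; the repeat samples appear only as variation between sets.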

Each of the 2 × 100 neural networks trained in this way involves just 8 nodes and 15 training epochs. Training is stopped at 15 epochs, with more typical convergence criteria suppressed. These hyperparameter values are informed by sensitivity studies (ranging from 2 to 16 nodes and 10 to 20 epochs). Because the neural networks produced within these ranges of hyperparameter values are undertrained, we observe variability in the results of the sensitivity study. The values were chosen subjectively after repeated runs, but more systematic approaches are suggested in the ‘Discussion’ section below.

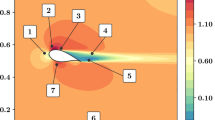

We use Matlab’s Neural Network Toolbox [ver. R2022a (2022)]. We create feed-forward neural networks with one hidden layer of 8 nodes, represented in Fig. 6.1. We use the sigmoid activation function, ϕ. Inputs are normalised (φ) and outputs are denormalised (φ′). We use the Levenberg–Marquardt training algorithm with a fixed learning rate of 0.001 to set weights w, which are initialised randomly with a mean value of 0.5 and a normal distribution. Training stops at 15 epochs, ensuring that the networks do not over-fit the limited available training data but also ensuring that every network is under-trained. The 2 × 100 neural networks thus trained are expected to differ because they each retain some of the randomness introduced by the initialisation of weights. We do not consider alternative activation functions, because, in a single-layer network, saturated weights and vanishing gradients are not expected [10]. We do not consider alternative initialisation strategies.
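The structure of such a network (normalised inputs, one hidden layer of 8 sigmoid nodes, denormalised output) can be sketched in plain Python. This is not the Matlab toolbox implementation and it omits Levenberg–Marquardt training entirely. The linear output node, the [0, 1] normalisation range, the spread of the initial weights (`std=0.1`), and the input and output ranges in the usage lines are all assumptions for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(n_inputs=2, n_hidden=8, rng=None, mean=0.5, std=0.1):
    """Randomly initialise weights from a normal distribution centred on 0.5."""
    rng = rng or random.Random()

    def draw():
        return rng.gauss(mean, std)  # std is an assumed value

    return {
        "w_hidden": [[draw() for _ in range(n_inputs)] for _ in range(n_hidden)],
        "b_hidden": [draw() for _ in range(n_hidden)],
        "w_out": [draw() for _ in range(n_hidden)],
        "b_out": draw(),
    }


def normalise(x, lo, hi):
    return (x - lo) / (hi - lo)


def denormalise(y, lo, hi):
    return lo + y * (hi - lo)


def predict(net, inputs, in_ranges, out_range):
    """Forward pass: normalise inputs, apply hidden sigmoid layer, denormalise output."""
    x = [normalise(v, lo, hi) for v, (lo, hi) in zip(inputs, in_ranges)]
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
        for ws, b in zip(net["w_hidden"], net["b_hidden"])
    ]
    y = sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"]
    return denormalise(y, *out_range)


# Two untrained networks with different seeds give different outputs for the
# same (pressure in bar, time in ms) query; ranges here are hypothetical.
in_ranges = [(700.0, 1500.0), (0.0, 2.0)]
out_range = (0.0, 60.0)
net_a = init_network(rng=random.Random(2))
net_b = init_network(rng=random.Random(3))
y_a = predict(net_a, (1000.0, 0.5), in_ranges, out_range)
y_b = predict(net_b, (1000.0, 0.5), in_ranges, out_range)
print(y_a, y_b)
```

The difference between `y_a` and `y_b` is the initialisation randomness that early stopping leaves only partly removed.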

To reiterate: the 20 repeat samples of each of the 63 + 57 data points are not aggregated to create a larger data set. Rather, each neural network is trained on a set of distinct data points (63 for the vapour phase or 57 for the liquid phase). The repeat sampling is reflected not in the training data for any one neural network but rather in the profusion of neural networks, each trained on a different data set wherein each point was selected randomly from among a population of 20 repeat samples of that data point.

After training a family of neural networks to predict spray penetration, the entire population of neural networks may be queried for any desired set of input values. The result of this query is a set of predictions having a mean and standard deviation. Thus, the family of neural networks collectively offers a prediction with confidence intervals corresponding to any input condition.
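Querying the family of networks can be sketched as below. The trained networks are replaced here by simple hypothetical callables, and the function name `ensemble_predict` is illustrative; the band of one standard deviation either side of the mean follows the convention used for the figures in this paper.

```python
import statistics


def ensemble_predict(models, x):
    """Return the mean and sample standard deviation of all model predictions at x."""
    predictions = [model(x) for model in models]
    return statistics.mean(predictions), statistics.stdev(predictions)


# Hypothetical stand-ins for trained networks: each maps time (ms) to penetration (mm).
models = [lambda t, k=k: (20.0 + 0.1 * k) * t for k in range(5)]

mean, std = ensemble_predict(models, 1.0)
lower, upper = mean - std, mean + std
print(mean, lower, upper)
```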

6.3 Results and Discussion

The mean outputs of the family of 100 × 2 neural networks are shown in Fig. 6.1a, b with the experimental data (mean values) from Honecker et al. [9]. The same mean predictions are shown together with their upper and lower confidence intervals (single standard deviation) in Figs. 6.2, 6.3, and 6.4 for injection pressures of 700 bar, 1000 bar, and 1500 bar, respectively.

The figures show wider confidence intervals on neural network outputs in conditions where the experimental data of Honecker et al. [9] showed wider confidence intervals: in the vapour phase more than in the liquid phase, and in the spray breakup region of the liquid phase curves. Figure 6.6 reproduces graphs of experimental data from Honecker et al. [9].

Furthermore, the results show wider confidence intervals at the maximum penetration length of both liquid and vapour phases—conditions where the experimental data did not exhibit similarly wide confidence intervals. Thus, there is a source of uncertainty captured in this study that is not directly related to the uncertainty in the input data (Fig. 6.5).

Fig. 6.6 Experimental data from Honecker et al. [9]

This paper documents the application of bootstrap confidence intervals (following the method of Efron [7]) to neural networks, extending the work of Trichakis et al. [6] to deal with data sets that are limited in relation to the complexity of the neural network required to fit the data. The spray penetration data of Honecker et al. [9] are used to train a family of neural networks, each incorporating randomness from (1) random sampling of redundant input data per the bootstrap method and (2) residual randomness from initialisation of the neural networks (persisting in networks because of undertraining). With every set of predictions made by the family of neural networks, there is a mean and a confidence interval associated with that set.

This study does not select the node number rigorously. Training stopped according to an epoch number limit, and again this was not assessed rigorously. Had this study sought to produce a rigorously optimised neural network, then a better approach would have been to produce a different family of neural networks representing bootstrapped confidence intervals for each permutation of hyperparameters. Rather than reserving precious data for the purpose of validating the selection of hyperparameters, the full population of families of neural networks may be examined to identify the set of hyperparameter values offering the narrowest confidence intervals. Rather than comparing the average size (across the predictive domain) of confidence intervals for each hyperparameter value, researchers may go a step further to compare weighted average standard deviations, where the weights are specified to give greater importance to the areas of interest within the curves, such as the droplet breakup region in the case of liquid spray penetration curves.
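The weighted comparison suggested above might be implemented along the following lines. The standard deviations, weights, and hyperparameter pairs are invented for illustration and are not results from this study; the function name `weighted_mean_std` is likewise hypothetical.

```python
def weighted_mean_std(stds, weights):
    """Weighted average of per-point ensemble standard deviations."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(stds, weights)) / total


# Hypothetical ensemble standard deviations (mm) at three query points for two
# hyperparameter settings, keyed by (hidden nodes, training epochs).
std_by_setting = {
    (8, 15): [0.6, 1.2, 0.7],
    (16, 20): [0.4, 1.5, 0.5],
}

# The middle point stands for the region of interest (e.g. droplet breakup)
# and is given three times the weight of the others.
weights = [1.0, 3.0, 1.0]

scores = {hp: weighted_mean_std(s, weights) for hp, s in std_by_setting.items()}
best = min(scores, key=scores.get)
print(scores, best)
```

In this invented example an unweighted average would favour the (16, 20) setting, whereas the weighting toward the region of interest favours (8, 15), which shows how the choice of weights can change the selected hyperparameters.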

6.4 Conclusion

We have developed and applied a novel machine learning technique to predict synthetic fuel spray penetration from limited experimental data without computational fluid dynamics. When experimental data are scarce, confidence intervals on a neural network’s predictions are much more useful than confirming the neural network’s performance with reference to a set of reserved test data. As the quantity of test data approaches infinity, the performance against test data approaches a confidence interval, but this is not an efficient use of data in the sense that it does not represent, for each data point, the greatest contribution of that data point toward improving the neural network’s predictive accuracy. The bootstrap method applies every data point to the related (by statistical theory) tasks of minimising the error and quantifying the error. Thus, the bootstrap approach is preferable when data are limited.

The bootstrap confidence intervals produced in this study reflect both (1) the randomness associated with the input data, as measured by the confidence intervals on that data or the repeat sampling results of that data and (2) the residual randomness due to the random initialisation of the neural networks, surviving in the ‘trained’ neural networks because of under-training. It is not necessary that the training data incorporates repeat sampling or confidence intervals of its own; the method could be applied to show confidence intervals that arise entirely from under-training rather than from both sources of error. Likewise, the bootstrap method could be applied where randomness exists in the training data but under-training is not a factor, as in the study by Trichakis et al. [6].

We propose that, when data are limited, the best application of all the available data is toward the better training of neural networks and the narrowing of the confidence intervals around their predictions. When applied ad infinitum (with unlimited data), a validation study for a neural network approaches an accurate representation of a confidence interval on neural network predictions. Far better, we propose, when data are limited, to apply all data to the narrowing of confidence intervals which are calculated from theory or from a demonstrably sound numerical approximation of the relevant theory (such as the bootstrap method). These considerations are offered in the hope of improving the application of machine learning to limited data sets and the assessment of the uncertainty that arises from so doing.

References

Y. Ikeda, D. Mazurkiewicz, Application of Neural Network Technique to Combustion Spray Dynamics Analysis, in Progress in Discovery Science. ed. by S. Arikawa, A. Shinohara (Springer, New York, 2002), pp.408–425

J. Tian et al., Experimental study on the spray characteristics of octanol diesel and prediction of spray tip penetration by ANN model. Energy 239, 121920 (2022)

P. Koukouvinis, C. Rodriguez, J. Hwang, I. Karathanassis, M. Gavaises, L. Pickett, Machine learning and transcritical sprays: a demonstration study of their potential in ECN Spray-A. Int. J. Engine Res. (2021). 14680874211020292

J. Hwang, et al., A new pathway for prediction of gasoline sprays using machine-learning algorithms, SAE Technical Paper, (2022) 2022-01-0492

G. Chryssolouris, M. Lee, A. Ramsay, Confidence interval prediction for neural network models. IEEE Trans. Neural Netw. 7, 1 (1996)

I. Trichakis, I. Nikolos, G.P. Karatzas, Comparison of bootstrap confidence intervals for an ANN model of a karstic aquifer response. Hydrol. Process 25, 2827–2836 (2011)

B. Efron, Bootstrap methods: another look at the jackknife. Ann. Stat. 7(1), 1–26 (1979)

H.Y. Lee, B.-T. Lee, Confidence-aware deep learning forecasting system for daily solar irradiance. IET Renew. Power Gener. 13(10), 1681–1689 (2019)

C. Honecker, M. Neumann, S. Gluck, M. Schoenen, et al., Optical spray investigations on OME3–5 in a constant volume high pressure chamber. SAE Technical Paper, 2019-24-0234. (2019)

A. Géron, Hands-on machine learning with Scikit-Learn, Keras & TensorFlow, O’Reilly, Sebastopol, CA. (2019), pp. 331–373

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2023 The Author(s)

About this paper

Cite this paper

Richards, B., Emekwuru, N. (2023). Using Machine Learning to Predict Synthetic Fuel Spray Penetration from Limited Experimental Data Without Computational Fluid Dynamics. In: Nixon, J.D., Al-Habaibeh, A., Vukovic, V., Asthana, A. (eds) Energy and Sustainable Futures: Proceedings of the 3rd ICESF, 2022. ICESF 2022. Springer Proceedings in Energy. Springer, Cham. https://doi.org/10.1007/978-3-031-30960-1_6

DOI: https://doi.org/10.1007/978-3-031-30960-1_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-30959-5

Online ISBN: 978-3-031-30960-1

eBook Packages: Energy, Energy (R0)