Abstract

Magnets are at the core of both circular and linear accelerators. The main function of a magnet is to guide the charged particle beam by virtue of the Lorentz force, given by the expression $\mathbf{F} = q\,\mathbf{v} \times \mathbf{B}$, where q is the electrical charge of the particle, v its velocity, and B the magnetic field induction. The trajectory of a particle in the field hence depends on the particle velocity and on the space distribution of the field. The simplest case is that of a uniform magnetic field with a single component and velocity v normal to it, in which case the particle trajectory is a circle. A uniform field thus has a pure bending effect on a charged particle, and the magnet that generates it is generally referred to as a dipole.

8.1 Magnets, Normal and Superconducting

8.1.1 Introduction

Magnets are at the core of both circular and linear accelerators. The main function of a magnet is to guide the charged particle beam by virtue of the Lorentz force, given by the following expression:

$$\mathbf{F} = q\,\mathbf{v} \times \mathbf{B}$$

where q is the electrical charge of the particle, v its velocity, and B the magnetic field induction. The trajectory of a particle in the field hence depends on the particle velocity and on the space distribution of the field. The simplest case is that of a uniform magnetic field with a single component and velocity v normal to it, in which case the particle trajectory is a circle. A uniform field thus has a pure bending effect on a charged particle, and the magnet that generates it is generally referred to as a dipole.

By equating the Lorentz force to the centripetal force, we obtain the bending radius ρ of the motion of a particle of charge q and momentum p under the action of a magnetic field B perpendicular to the motion:

$$\rho = \frac{p}{qB}$$

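By expressing the momentum p in practical units [GeV/c], we can write:

$$B\rho\ [\mathrm{T\,m}] = \frac{10^{9}}{c}\,\frac{p\ [\mathrm{GeV}/c]}{Z} \approx 3.3356\,\frac{p\ [\mathrm{GeV}/c]}{Z}$$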

where Z is the charge number of the particle, with q = Ze.

The product Bρ is known as magnetic rigidity and provides the link between dipole strength and length based on the momentum of a charged particle in a circular accelerator. Note how the formula shows clearly the trade-off between the bending magnetic field B and the size of the machine (related to ρ).
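As an illustration of this trade-off, the following minimal Python sketch evaluates the magnetic rigidity and the bending radius from the relation Bρ [T m] ≈ 3.3356 p [GeV/c] / Z. The function names and the example numbers (7000 GeV/c protons in an 8.33 T dipole field) are illustrative assumptions, not values prescribed by this section.

```python
C_LIGHT = 299_792_458.0  # speed of light in m/s


def magnetic_rigidity(p_gev_c: float, charge_number: int = 1) -> float:
    """Return B*rho in T*m for a momentum in GeV/c and charge number Z."""
    return 1.0e9 / C_LIGHT * p_gev_c / charge_number


def bending_radius(p_gev_c: float, b_tesla: float, charge_number: int = 1) -> float:
    """Return the bending radius in m in a uniform dipole field b_tesla."""
    return magnetic_rigidity(p_gev_c, charge_number) / b_tesla


if __name__ == "__main__":
    # Illustrative (assumed) numbers: 7000 GeV/c protons, 8.33 T dipole field.
    print(f"B*rho = {magnetic_rigidity(7000.0):.0f} T m")
    print(f"rho   = {bending_radius(7000.0, 8.33):.0f} m")
```

Halving the field at fixed momentum doubles the bending radius, which is the size-versus-field trade-off expressed by the formula.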

Besides bending magnets, a number of other field shapes are required to focus and control the beam. Most important are magnets that generate a pure gradient field, i.e. a field that is zero on the axis of the magnet and grows linearly with distance. This type of magnet is referred to as a quadrupole and is used to focus the particles on the central trajectory of the accelerator. The strength of a quadrupole is customarily quoted in terms of the field gradient G, in units of [T/m]. A normalised quadrupole strength for a quadrupole of length l is defined as the ratio of the integrated quadrupole gradient to the beam rigidity, or: K = Gl/(Bρ). The angular deflection α (in radians) of a particle passing at a distance x from the centre of a quadrupole can be computed using the normalised quadrupole strength as:

$$\alpha = K x$$

which shows that a particle on the quadrupole axis (x = 0) has a straight trajectory, while a particle off-axis receives a kick proportional to its distance from the centre, i.e. the expected focussing effect. Higher order gradient fields, such as sextupoles, or octupoles, behave similarly and provide further non-linear means to control, correct and stabilize the dynamics of the motion of the particles, as described elsewhere in this handbook.
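The kick relation can be checked numerically with a short Python sketch. It assumes the thin-lens form α = Kx with K = Gl/(Bρ) as defined above; the function names and the numerical values are hypothetical and chosen only for illustration.

```python
def normalised_strength(gradient_t_per_m: float, length_m: float,
                        rigidity_t_m: float) -> float:
    """Return K = G*l/(B*rho), in 1/m."""
    return gradient_t_per_m * length_m / rigidity_t_m


def kick_angle(k_per_m: float, x_m: float) -> float:
    """Return the angular deflection in rad at a distance x from the axis."""
    return k_per_m * x_m


if __name__ == "__main__":
    # Assumed example: G = 20 T/m, l = 1 m, beam rigidity of 100 T m.
    k = normalised_strength(20.0, 1.0, 100.0)
    for x_mm in (0.0, 1.0, 2.0):
        print(f"x = {x_mm} mm -> kick = {kick_angle(k, x_mm * 1e-3):.2e} rad")
```

The kick vanishes on axis and doubles when the offset doubles, which is the linear focusing behaviour described in the text.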

The accelerator magnets considered here typically have slender, long apertures (the space available for the beam), where the magnetic field has components only in the plane of the magnet cross section. In this planar, 2-D configuration, the most compact representation of the magnetic field shape in the magnet aperture is provided by the complex formalism [1] and its multipole expansion. Defining the complex variable z = x + iy, where the plane (x,y) is that of the magnet cross section, the function $B_y + iB_x$ of the two non-zero components of the magnetic field is expanded in series:

$$B_y + iB_x = \sum_{n=1}^{\infty} \left(B_n + iA_n\right) z^{n-1}$$

The coefficients $B_n$ and $A_n$ of the series expansion are the multipoles of the field, and determine the shape of the field lines. As an example, a magnet in which only the term $B_1$ is non-zero corresponds to a magnetic field:

$$B_x = 0, \qquad B_y = B_1$$

i.e. a perfect dipole field (constant in amplitude and direction) oriented in the y direction. If the y direction is taken perpendicular to the plane of the accelerator (e.g. vertical), this is usually called a normal dipole, which provides bending in the plane of the accelerator (e.g. horizontal). A magnet in which only $A_1$ is non-zero results in a perfect skew dipole field:

$$B_x = A_1, \qquad B_y = 0$$

which is in the plane of the accelerator (e.g. horizontal) and provides bending perpendicular to it (e.g. vertical). Multipoles $B_2$ and $A_2$ correspond to magnets generating a pure normal and skew quadrupole field. Higher order gradients (sextupole, octupole, etc.) are obtained by simple analogy in the continuation of the series.

The explicit expressions of the field components corresponding to the first four multipoles, and a sketch of the corresponding field lines, are reported in Table 8.1. It is useful to remark that the coefficients $B_n$ and $A_n$ appearing in Table 8.1 have units of [T/m$^{n-1}$] and are hence the generalized normal and skew gradients of order n. Specifically, $B_2$ corresponds to the normal quadrupole gradient G discussed earlier. To complete this short review of field configurations, we use the complex expansion to evaluate the modulus of the field B in the case of a pure normal multipole (chosen for convenience; the case of a pure skew multipole field yields identical results). Simple algebra, writing $z = R e^{i\theta}$ where R is the modulus and θ is the argument of z, gives the following result:

$$|B| = \sqrt{B_x^2 + B_y^2} = B_n R^{n-1}$$

which shows that the field strength in a pure multipole field of order n is proportional to the generalized gradient and grows with the power n−1 of the distance from the magnet centre. This extends the case of the dipole (n = 1, constant field), and quadrupole (n = 2, linear field), to higher order multipoles such as the sextupole (n = 3, quadratic field profile), octupole (n = 4, cubic field profile), and so on.
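The multipole expansion lends itself to a direct numerical evaluation with complex arithmetic. The sketch below assumes the series form $B_y + iB_x = \sum_n (B_n + iA_n) z^{n-1}$; the function name `field_components` and the coefficient values are hypothetical, chosen only to show the scaling of the field modulus with $R^{n-1}$.

```python
import cmath
import math


def field_components(z: complex, normal, skew=()):
    """Return (B_x, B_y) in T at z = x + iy (in m).

    normal[n-1] holds B_n and skew[n-1] holds A_n, both in T/m^(n-1).
    """
    total = 0j
    for n, b_n in enumerate(normal, start=1):
        a_n = skew[n - 1] if n - 1 < len(skew) else 0.0
        total += complex(b_n, a_n) * z ** (n - 1)
    # The series gives B_y + i*B_x: real part is B_y, imaginary part is B_x.
    return total.imag, total.real


if __name__ == "__main__":
    # Assumed pure normal sextupole, B_3 = 100 T/m^2, sampled on two radii.
    for radius in (0.01, 0.02):
        z = cmath.rect(radius, 0.7)
        bx, by = field_components(z, [0.0, 0.0, 100.0])
        print(f"R = {radius} m -> |B| = {math.hypot(bx, by):.4f} T")
```

Doubling the radius multiplies the sextupole field modulus by four, consistent with the quadratic profile stated for n = 3.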

Besides its compact form, the complex notation is useful because there is a direct relation between multipoles (order and strength) and beam properties. This is why accelerator magnets are often characterised using their harmonic content in terms of the $B_n$ and $A_n$ coefficients of the field expansion. Indeed, the pure multipolar fields discussed so far can only be approximated to a suitable degree in real magnets. The field generated by a real magnet then contains all multipoles, normal and skew, i.e. a dense harmonic spectrum. Symmetries cause cancellation effects, resulting in low (ideally zero) non-allowed multipoles when compared to the multipoles allowed by the magnet symmetry. Selected multipoles can be further reduced by using design features such as optimization of the coil and iron geometry, or corrections such as passive and active magnetic shims.

We define the field quality of an accelerator magnet as the relative difference between the field produced and the ideal field distribution, usually a pure multipole, in the region of interest for the beam, which is generally referred to as the good field region. Depending on the shape of the good field region, it may be convenient to quote field quality as an overall homogeneity (i.e. ΔB/B, typically done for magnets with a rectangular or elliptic aperture), or by providing the spectrum of multipoles other than the one corresponding to the main magnet function (ratio of $A_n$ and $B_n$ to the main field strength, typically done for magnets with round bore). Whichever the form, the field quality of accelerator magnets is generally required to be in the range of a few $10^{-4}$. To maintain practical orders of magnitude, field errors are then quoted in relative units of $1 \times 10^{-4}$ of the main field, or simply units.
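A minimal Python sketch of the conversion to units follows. Since multipoles of different order have different dimensions, the sketch compares field contributions at a reference radius `r_ref` inside the good field region; the reference radius, the function name and the numbers are assumptions introduced for illustration and are not defined in this section.

```python
def error_in_units(c_n: float, n: int, b_main: float, m: int, r_ref: float) -> float:
    """Return the field error of order n relative to the main multipole of order m.

    c_n and b_main are generalized gradients in T/m^(n-1) and T/m^(m-1);
    both are evaluated at the (assumed) reference radius r_ref in m.
    The result is expressed in units of 1e-4 of the main field.
    """
    error_field = c_n * r_ref ** (n - 1)
    main_field = b_main * r_ref ** (m - 1)
    return 1.0e4 * error_field / main_field


if __name__ == "__main__":
    # Assumed example: 8.33 T dipole with a sextupole error of 2.88 T/m^2,
    # evaluated at a reference radius of 17 mm.
    print(f"b3 = {error_in_units(2.88, 3, 8.33, 1, 0.017):.2f} units")
```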

Gradient magnets (i.e. quadrupole and higher order) are also characterised by a magnetic axis, which is usually taken as the locus of the points in the magnet aperture where the field is zero. Magnets are aligned with respect to their axis (or an average of the locus when it deviates from a straight line) to the specified beam trajectory to avoid unwanted feed-down effects. Typical alignment tolerances in circular machines range from a few tens of μm in synchrotron light sources to fractions of a mm in large colliders (e.g. the LHC). Linear colliders are more demanding, with typical tolerances at the sub-μm level.

Dipoles and quadrupoles are the main elements of the linear optics in modern synchrotrons. Depending on the beam specifications, any residual field and alignment imperfections, as well as drift in magnet properties, may require active correction to ensure stable and efficient operation. This is done using corrector magnets that are powered using information established from previous knowledge of the main magnets, or parameters measured on the beam, or both. Corrector magnets are often designed to generate a single multipole, so as to act on the beam as an orthogonal knob, thus making the correction easier to execute.

8.1.2 Normal Conducting Magnets

“Normal conducting”, and alternatively “resistive”, “warm” or “conventional” magnets, are electro-magnets in which the magnetic field is generated by conductors like copper or aluminium, which oppose an electrical resistance to the flow of current. The magnetic field induction provided in the physical aperture of these magnets rarely exceeds 1.7 to 2.0 T, such that the working point of the ferromagnetic yoke remains below saturation. In these conditions, the yoke provides a closure of the magnetic path with small use of magneto-motive force, and its pole profile determines the magnetic field quality.

The integral form of the static part of the last Maxwell equation, Ampère's law, provides a simple analytical expression for the relationship between magnetic field and magneto-motive force in most of the magnet configurations used in particle accelerators.

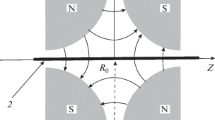

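As an example, we illustrate in Fig. 8.1 a non-saturated C-type dipole magnet, made of two coils of N/2 turns each, connected in series and supplied by a current I. Applying Ampère's law along a closed flux path of length $l_{iron}$ in the yoke and $l_{air}$ in the gap, and assuming the same field induction B in both, gives:

$$NI = \oint \mathbf{H} \cdot d\mathbf{l} = \frac{B}{\mu_0 \mu_r}\, l_{iron} + \frac{B}{\mu_0}\, l_{air}$$

If $\mu_r \gg l_{iron}/l_{air}$ we can neglect the magneto-motive force “used” in the iron and obtain:

$$B \approx \frac{\mu_0 N I}{l_{air}}$$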

If part of the iron is saturated, its permeability will be lower and part of the ampere-turns NI will be used to magnetize the iron as discussed later.
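The effect of a finite iron permeability can be sketched in a few lines of Python. The example assumes a simple series magnetic circuit with the same field induction in iron and air, i.e. NI = B l_iron/(μ0 μr) + B l_air/μ0; the function name, the path lengths and the permeability values are hypothetical.

```python
import math

MU_0 = 4.0e-7 * math.pi  # vacuum permeability in T*m/A


def gap_field(ampere_turns: float, l_air: float,
              l_iron: float = 0.0, mu_r: float = math.inf) -> float:
    """Return the gap field in T for a series circuit of iron and air.

    With mu_r = inf the iron term vanishes and B = mu_0*N*I/l_air.
    """
    return MU_0 * ampere_turns / (l_air + l_iron / mu_r)


if __name__ == "__main__":
    # Assumed example: 50 mm gap, 2 m iron path, about 39.8 kA-turns.
    ni = 1.0 * 0.05 / MU_0  # ampere-turns for 1 T with ideal iron
    for mu_r in (math.inf, 1000.0, 100.0):
        print(f"mu_r = {mu_r}: B = {gap_field(ni, 0.05, 2.0, mu_r):.3f} T")
```

As the permeability drops (iron approaching saturation), a growing share of the ampere-turns is spent in the iron and the gap field falls below the ideal value.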

In case the magnetic field exceeds a value of typically about 1.5 T along the path corresponding to $l_{iron}$, the magneto-motive force used in the iron may no longer be negligible with respect to that used in the air. As the iron yoke also gathers the stray field, the field induction in the iron poles is always higher than the one between the poles. To reduce the iron portion working at fields above 1.5 T, the iron pole can be tapered. This allows designing iron-dominated magnets capable of producing magnetic field intensities in their physical aperture rather close to the saturation limit of the iron, i.e. up to about 1.7–2.0 T. A quantitative example of the effect of saturated iron in a dipole magnet is given in Fig. 8.2.

8.1.2.1 Magnetic Design

Transfer function (the ratio of the magnetic field intensity in the magnet aperture to the supply current) and inductance can be computed starting from Ampère's law and considering the relationship between magnet inductance (L), current (I) and energy (E) as $E = \tfrac{1}{2} L I^2$.

In practical cases the theoretical transfer function of an ideal magnetic circuit is reduced by an “efficiency” η, typically of the order of η = 0.95…0.98, which depends on the length, stacking factor and working conditions of the magnetic yoke. The formulas in Table 8.2 provide an analytical formulation of the inductance values for different magnet configurations.

The inductance depends on how the pole geometry is trimmed (shims, tapered poles, chamfers) and on saturation. For quadrupole and sextupole magnets different tapering of the poles can strongly modify the inductance. For such magnets these simplified formulas can cover only standard designs.

The field homogeneity typically required of an accelerator magnet within its good field region is of the order of a few $10^{-4}$. Transfer line and corrector magnets may be specified with lower homogeneity.

Field quality in a given volume is determined by several factors:

- the size of the magnet aperture with respect to the good field region
- the shape of the iron poles
- manufacture and assembly tolerances
- the position of active conductors (coils), in particular in window-frame magnets
- the ferromagnetic properties at the working conditions of the steel used for the yoke
- dynamic effects

Field quality is optimized by considering all the above aspects. In particular, magnets operating below 2 T, such as the ones treated in this chapter, are also described as iron-dominated magnets because the shape of the magnetic field induction is dominated by the shape of the ferromagnetic poles. At the interface between the magnet aperture and the poles, i.e. between steel and air, the component of the magnetic field induction $B_\perp$ perpendicular to the interface surface is the same in both media. The tangential component of the magnetic field $H_t$ also remains the same if no surface currents are present: this corresponds to a change of the tangential component $B_t$ of the field induction by the ratio of the magnetic permeabilities of the two media. As a result, in the case of infinite permeability the direction of the magnetic field induction in the air at the exit of a magnet pole is always perpendicular to the pole surface.

An example of trimming field quality with pole shims in a dipole magnet is shown in Fig. 8.3.

8.1.2.2 Coils

The coils generate the magneto-motive force necessary to produce the required magnetic field induction in the magnet physical aperture. Coils produce losses due to their electrical resistance.

The power dissipated in a conductor of electrical resistivity ρ, volume V, and effective current density $J_{rms}$ is:

$$P = \rho\, J_{rms}^2\, V = \rho\, \frac{l}{S}\, I_{rms}^2 = R\, I_{rms}^2$$

where S is the conductor section, l its length, $I_{rms} = S J_{rms}$ the effective current, and R the electrical resistance. In case the current is not uniformly distributed in the conductor section, in particular for fast transients where the penetration depth is smaller than the conductor size, an effective section has to be considered.

We recall the resistivity of copper and aluminium as a function of the temperature T:

The effective current density $J_{rms}$ determines coil size, power consumption and cooling: the designer shall consider the right balance between requirements, technological limits, investment and operation cost.

Typical values of $J_{rms}$ are around 5 A/mm² for water cooled magnets, and around 1 A/mm² for air cooled magnets. Air cooled coils with favourable configurations (large heat exchange surface with respect to their section) can be operated at higher current densities.

Introducing $l_{av}$ as the average coil turn length, and with N the total number of turns, it is easy to show that the power dissipated in a magnet is:

$$P = \rho\, J_{rms}\, N I_{rms}\, l_{av}$$
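The two forms of the dissipated power, $P = \rho J_{rms}^2 V$ for a conductor and $P = \rho J_{rms} N I_{rms} l_{av}$ for a coil of N turns, can be cross-checked with a short Python sketch. The copper resistivity of 1.72 × 10⁻⁸ Ωm (a typical room-temperature value) and all coil parameters are assumptions made for the example.

```python
RHO_COPPER = 1.72e-8  # assumed copper resistivity near 20 degC, in Ohm*m


def conductor_power(resistivity: float, j_rms: float, volume: float) -> float:
    """Return P = rho * J_rms^2 * V in W (SI units: Ohm*m, A/m^2, m^3)."""
    return resistivity * j_rms ** 2 * volume


def coil_power(resistivity: float, j_rms: float,
               ampere_turns_rms: float, l_av: float) -> float:
    """Return P = rho * J_rms * (N*I_rms) * l_av in W."""
    return resistivity * j_rms * ampere_turns_rms * l_av


if __name__ == "__main__":
    # Assumed coil: 100 turns, 400 A rms, 5 A/mm^2, 3 m average turn length.
    turns, current, j_rms, l_av = 100, 400.0, 5.0e6, 3.0
    section = current / j_rms              # conductor section in m^2
    volume = turns * section * l_av        # total conductor volume in m^3
    print(conductor_power(RHO_COPPER, j_rms, volume))
    print(coil_power(RHO_COPPER, j_rms, turns * current, l_av))
```

Both expressions give the same power because the conductor volume equals $N S l_{av}$ and $S J_{rms} = I_{rms}$.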

In case of water-cooled magnets, heat is removed by water circulating in the coil (hollow) conductors.

The choice of cooling parameters and number of circuits is based on a few main principles: setting the water flow corresponding to the allowed temperature rise for a given power to be removed; having a moderately turbulent flow to provide efficient cooling; keeping the water velocity within reasonable limits to avoid erosion-corrosion and impingement of the cooling pipes and of the junctions; and keeping the pressure drop across the circuit within reasonable limits (typically within 10–15 bar). Properties of water cooling circuits are provided in Table 8.3.
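The first of these principles is a simple heat balance, sketched below in Python: the flow follows from the power to be removed and the water temperature rise, and the velocity from the flow and the cooling channel bore. The water properties (density 1000 kg/m³, specific heat 4186 J/(kg K)), the function names and the numerical example are assumptions, not values taken from Table 8.3.

```python
import math

WATER_DENSITY = 1000.0  # assumed, kg/m^3
WATER_CP = 4186.0       # assumed specific heat, J/(kg*K)


def flow_m3_per_s(power_w: float, delta_t_k: float) -> float:
    """Return the volume flow that removes power_w with a rise delta_t_k."""
    return power_w / (WATER_DENSITY * WATER_CP * delta_t_k)


def flow_l_per_min(power_w: float, delta_t_k: float) -> float:
    """Same flow expressed in litres per minute."""
    return flow_m3_per_s(power_w, delta_t_k) * 1000.0 * 60.0


def water_velocity(power_w: float, delta_t_k: float, bore_diameter_m: float) -> float:
    """Return the mean water velocity in m/s in a round cooling channel."""
    area = math.pi * bore_diameter_m ** 2 / 4.0
    return flow_m3_per_s(power_w, delta_t_k) / area


if __name__ == "__main__":
    # Assumed example: 10 kW removed with a 20 K rise through a 5 mm bore.
    print(f"{flow_l_per_min(10e3, 20.0):.2f} l/min")
    print(f"{water_velocity(10e3, 20.0, 5e-3):.2f} m/s")
```

If the resulting velocity or pressure drop is too high for a single circuit, the power can be split over several parallel cooling circuits, each carrying a fraction of the flow.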

Finally, we remark that coils are submitted to forces: their own weight and the electromagnetic forces produced by the interaction between magnetic field and current.

The interaction between a moving charge and the magnetic field is described by the so-called Lorentz force, which in the macroscopic form for a wire carrying a current I is referred to as the Laplace force:

$$\mathbf{F} = I\,\mathbf{l} \times \mathbf{B}$$

where the length l is oriented in the direction of the current flow. For example, 1.5 m of straight coil immersed in an average magnetic field component perpendicular to the coil of 0.5 T, carrying a total current of 60,000 ampere-turns, is subjected to a force of F = 60,000 × 1.5 × 0.5 = 45,000 N = 45 kN.
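The same numerical example can be reproduced with a small Python sketch of the vector form F = I l × B. The function name and the choice of coordinate axes are assumptions made for illustration.

```python
def laplace_force(current_a, length_vec_m, b_vec_t):
    """Return the force vector F = I * (l x B) in N.

    length_vec_m points along the current flow; b_vec_t is the field in T.
    """
    lx, ly, lz = length_vec_m
    bx, by, bz = b_vec_t
    cross = (ly * bz - lz * by, lz * bx - lx * bz, lx * by - ly * bx)
    return tuple(current_a * component for component in cross)


if __name__ == "__main__":
    # 60,000 ampere-turns along x over 1.5 m, in a 0.5 T field along y.
    fx, fy, fz = laplace_force(60_000.0, (1.5, 0.0, 0.0), (0.0, 0.5, 0.0))
    print(f"F = ({fx:.0f}, {fy:.0f}, {fz:.0f}) N")
```

The force is perpendicular to both the conductor and the field; a field component parallel to the conductor contributes nothing.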

8.1.2.3 Yoke

The magnet yoke has the function of directing and shaping the magnetic field generated by the coils. While magnets operated in persistent mode can be built either with solid or with laminated steel, the yokes of cycled magnets are composed of laminations electrically insulated from each other to reduce the eddy currents generated by the change of magnetic field with time. This electrical insulation can be inorganic (oxidation, phosphating, Carlite) or organic (epoxy). Epoxy coating in a B-stage form can be used to glue laminations together, a technique widely used for small to medium magnets, possibly reinforced by welded bars on the yoke periphery.

The magnetic properties of steel depend on the chemical composition and on the temperature/mechanical history of the material. Important parameters for accelerator magnets are the coercive field $H_c$ and the saturation induction. The coercive field has an impact on the reproducibility of the magnetic field at low currents. A typical requirement for the steel used in accelerator magnets is $H_c$ < 80 A/m. Tighter constraints ($H_c$ < 20 A/m) apply when the operation covers a large field factor starting from low field inductions (a few hundred gauss). The saturation induction is highest with low carbon steel (carbon content in the final state <0.006%). It is common to specify points along the normal magnetization curve, with the condition that the magnetic induction B shall exceed specification values at given field levels H.

To increase its electrical resistivity and at the same time narrow the hysteresis cycle, laminated steel used in cycled magnets usually contains 2…3% of silicon.

With 3% of Si the electrical resistivity increases from $\rho = 2 \times 10^{-7}$ Ωm to $\rho = 5 \times 10^{-7}$ Ωm.

The work performed during the hysteresis cycle and the eddy currents produce losses in cycled magnets. An estimate of hysteresis losses can be obtained by the Steinmetz law:

where f is the frequency and η = 0.01…0.1, about 0.02 for silicon steel, and of eddy current losses, for silicon steel, by:

where $d_{lam}$ is the lamination thickness in mm.

8.1.2.4 Costs

We can distinguish:

- fixed costs: design, coil tooling (winding, molding), yoke tooling (punching, stacking), quality assurance (including tools for specific measurements/checks, such as magnetic measurements if requested);
- unitary costs: main materials (conductor, insulation, steel), manufacture of parts (coil, laminations, yoke), final assembly, ancillaries (connectors, interlocks, hoses), tests (mechanical, electrical, magnetic);
- other systems: cooling, power converters, controls and interlocks, electrical distribution. These parameters have to be taken into account at the magnet design phase: for example, for cycled magnets a low inductance can minimize the voltage levels, but the corresponding higher current would require larger supply cables from the power converters to the magnets;
- running costs: electric power, maintenance over the life of the project.

A compromise between capital and operational cost is typically found with magnets operating with:

- current densities of about 5 A/mm²: higher current densities correspond to smaller coils and consequently smaller and cheaper yokes, lower current densities correspond to lower power consumption (less electricity, smaller cooling plant) but to larger magnets;
- field induction levels in the region between 1.2 T and 1.7 T: a given required integrated strength can be provided by short magnets with high field induction, long magnets with low field induction, or a compromise between the two. Since, below saturation, the pole width depends essentially on the good field region size and not on the field induction level, the highest possible field and the corresponding lowest magnetic length represent in most cases a cost-optimized yoke design.

8.1.2.5 Undulators, Wigglers, Permanent Magnets

Wigglers and undulators produce a periodic field variation along the beam trajectory causing relativistic charged particles to wiggle emitting electromagnetic radiation with special properties, in particular with a small angle α = 1/γ where γ is the relativistic factor.

To a first approximation, these magnets produce a series of dipole fields with alternated directions, of period λ. This is typically obtained with conventional electromagnets when the period is relatively large allowing sufficient space for the coils, and with permanent magnets for shorter periods. Superconducting windings, in general cryo-cooled, are used in case the required field exceeds 2 T and/or for small periods where a high current density is needed.

The difference between wigglers and undulators is in the nature of the radiation produced by the particle. When the amplitude of the beam excursion expressed in meters is small with respect to the angle of the synchrotron radiation emission expressed in radians, the device is called undulator: the emitted radiation is concentrated in a small opening angle and the radiation produced by the different periods interferes coherently producing sharp peaks at harmonics of a fundamental wavelength. Wigglers on the contrary produce particle displacements of larger amplitude: the emitted radiation is similar to the continuous spectrum generated by bending magnets, with in addition the effect coming from the incoherent superposition of radiation from individual poles.

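It is useful to introduce the deflection parameter $K = \delta_0/\alpha$ as the ratio between the maximum trajectory deflection $\delta_0$ (in meters) and the emission angle α (in radians). For electrons:

$$K = \frac{e B_0 \lambda}{2\pi m_e c} \approx 0.934\; B_0\,[\mathrm{T}]\; \lambda\,[\mathrm{cm}]$$

where $B_0$ is the peak field, λ the period, e and $m_e$ the electron charge and mass, and c the speed of light.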

In case K < 1 the device is an undulator, in case K >> 1 the device is a wiggler.
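A minimal Python sketch evaluates the deflection parameter for electrons from the standard expression K = e B0 λ/(2π m_e c) ≈ 0.934 B0[T] λ[cm], with B0 the peak field and λ the period. The function name and the two example devices are hypothetical; only the K < 1 criterion for undulators is taken from the text.

```python
import math

E_CHARGE = 1.602176634e-19     # elementary charge in C
M_ELECTRON = 9.1093837015e-31  # electron mass in kg
C_LIGHT = 299_792_458.0        # speed of light in m/s


def deflection_parameter(b_peak_t: float, period_m: float) -> float:
    """Return the dimensionless deflection parameter K for electrons."""
    return E_CHARGE * b_peak_t * period_m / (2.0 * math.pi * M_ELECTRON * C_LIGHT)


if __name__ == "__main__":
    # Assumed devices: (peak field in T, period in m).
    for name, b_peak, period in (("short period", 0.5, 0.015),
                                 ("long period", 1.8, 0.10)):
        k = deflection_parameter(b_peak, period)
        regime = "undulator (K < 1)" if k < 1.0 else "K above 1"
        print(f"{name}: K = {k:.2f} -> {regime}")
```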

As anticipated, these magnets are often built with the use of permanent magnets.

Two types of high performance permanent magnet materials, both composed of rare earth elements, are available: Neodymium-Iron-Boron (NdFeB) and Samarium-Cobalt (in the form SmCo₅ or Sm₂Co₁₇, also referred to as SmCo 1:5 and SmCo 2:17).

NdFeB materials show the highest remanent induction, up to $B_r \approx 1.4$ T, and the highest energy product, up to $(BH)_{max} \approx 50$ MGOe. They are ductile, but they require coating to avoid corrosion and have a relatively low stability versus temperature. Their relative change of remanent field induction with temperature (temperature coefficient) is $\Delta B_r/B_r \approx -0.11\%$ per °C: field induction decreases when temperature increases.

SmCo magnets show a lower remanent induction, up to $B_r \approx 1.1$ T, and are brittle, but they are corrosion and radiation resistant. Furthermore, their temperature coefficient is about −0.03% per °C, lower in magnitude than that of NdFeB.
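To give a feeling for these temperature coefficients, the Python sketch below applies them as a linear correction to the remanent induction. Linearity over the temperature interval is an assumption of the sketch, as are the function name and the 10 °C temperature rise; the remanence values and coefficients are the upper figures quoted above.

```python
def remanence_at(b_r_ref_t: float, coeff_percent_per_c: float,
                 delta_t_c: float) -> float:
    """Return B_r in T after a temperature change delta_t_c (linear model)."""
    return b_r_ref_t * (1.0 + coeff_percent_per_c / 100.0 * delta_t_c)


if __name__ == "__main__":
    # Assumed temperature rise of 10 degC for both materials.
    print(f"NdFeB: {remanence_at(1.4, -0.11, 10.0):.4f} T")
    print(f"SmCo:  {remanence_at(1.1, -0.03, 10.0):.4f} T")
```

For the same temperature rise the relative field change of NdFeB is almost four times that of SmCo, which is why temperature stabilisation or compensation matters more for NdFeB devices.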

The use of permanent magnets in particle accelerators is not limited to wigglers and undulators. For example, the 3.3 km long recycler ring at FNAL, commissioned in May 1999, stands as a pioneer, as it is solely composed of permanent magnet dipoles and quadrupoles [2]. More recently, permanent magnets have been used in compact quadrupoles for LINAC4 at CERN [3], in the main bending magnets of the ESRF-EBS light source [4], and even as spectrometers, for example in the nTOF experiment at CERN [5].

8.1.2.6 Solenoids

Solenoids are made of electrical conductors wound in the form of a helix. The magnetic field induction inside an ideal solenoid is parallel to the longitudinal axis and its intensity is $B = \mu_0 N I / l$, where NI is the total number of ampere-turns and l the solenoid length. By introducing the coil thickness t, the formula can be written as $B = \mu_0 J t$, where J is the current density.
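The equivalence of the two forms of the ideal solenoid formula can be verified with a short Python sketch. The function names and the numerical example are illustrative assumptions; the ideal (infinitely long, thin) solenoid approximation is the one stated in the text.

```python
import math

MU_0 = 4.0e-7 * math.pi  # vacuum permeability in T*m/A


def solenoid_field_from_turns(ampere_turns: float, length_m: float) -> float:
    """Return B = mu_0 * N*I / l in T for an ideal solenoid."""
    return MU_0 * ampere_turns / length_m


def solenoid_field_from_density(j_a_per_m2: float, thickness_m: float) -> float:
    """Return B = mu_0 * J * t in T for an ideal solenoid."""
    return MU_0 * j_a_per_m2 * thickness_m


if __name__ == "__main__":
    # Assumed coil: J = 10 A/mm^2, 0.1 m thick, 1 m long.
    j, t, length = 1.0e7, 0.1, 1.0
    ampere_turns = j * t * length  # total current through the coil section
    print(f"{solenoid_field_from_turns(ampere_turns, length):.4f} T")
    print(f"{solenoid_field_from_density(j, t):.4f} T")
```

The two results coincide because the ampere-turns per unit length NI/l equal the current density times the coil thickness.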

Solenoids can be built as ironless magnets or can have an external ferromagnetic yoke, used for shielding and to increase the magnetic field uniformity particularly at the solenoid extremities.

The design and construction of solenoids, thanks to their use in many electrical and electro-mechanical devices, has been well established for more than a century. A comprehensive treatment of solenoid electromagnets was compiled by C. Underhill as early as 1910. The treatment published by Montgomery in 1969 is still a reference [6], in spite of the new materials now available, in particular for wire dielectric insulation and for the containment of stresses.

In solenoids the electromagnetic forces produced by the interaction between the magnetic field and the currents in the coils can reach extremely high values capable of breaking the wires or even, especially for pulsed magnets, leading to an explosion of the device. Their design should consider conductor characteristics and reinforcements, winding tension during manufacture and the containment structure.

8.1.3 Superconducting Magnets

Superconducting magnet technology has been instrumental to the realization of the largest particle accelerators on Earth. Table 8.4 reports the main characteristics of the four large scale hadron accelerators built and operated since the beginning of superconducting magnet technology for accelerators. In parallel, superconductivity has fostered the construction of high field and large volume detector magnets that have become commonplace in high energy physics. The first such detector magnet was installed at Argonne National Laboratory, and operated in the mid 1960s as an instrument in the Zero Gradient Synchrotron (ZGS) [11]. ATLAS [12] and CMS [13] at the LHC are the latest and most impressive examples of superconducting detector magnets.

The prime difference between superconducting and normal conducting magnets is in the way the magnetic field induction is generated. While in normal conducting magnets the field is dominated by the magnetization of the iron yoke, in their superconducting “siblings” the field is generated by a suitable distribution of currents, properly arranged around the beam aperture. This is possible because a superconducting material can carry large currents with no loss, and ampere-turns become cheap, thus opening the way to magnetic fields much above saturation of ferromagnetic materials. Very schematically, superconducting magnets for large scale accelerators consist of a coil wound with highly compacted cables, tightly packed around the bore that delimits a vacuum chamber hosting the beam. The coil shape is optimized to maximize the bore field and achieve acceptable field quality, as described later. The large forces that are experienced by the coil (several tens to hundreds of tons/m) cannot be reacted by the winding alone, which has the characteristic shape of a slender racetrack. The force is hence transferred to a structure that guarantees mechanical stability and rigidity. The iron yoke that surrounds this assembly closes the magnetic circuit, yields a marginal gain of magnetic field in the bore, and shields the surroundings from stray fields. Finally, the magnet is enclosed in a cryostat that provides the thermal barrier features necessary to cool the magnet to the operating temperature, which is in the cryogenic range (1.9 to 4.5 K for accelerators built to date). Various implementations of this basic concept can be seen in Fig. 8.4, which shows the cross sections of the superconducting dipoles of the four large superconducting hadron accelerators listed in Table 8.4.

Superconducting magnet technology relies heavily on the ability to produce technical superconducting materials in the form of high current cables. Beyond considerations on the magnetic field that are specific to the arrangements described above, the design of superconducting magnets must take into account issues related to the superconductor, such as stability, quench protection, magnetization and AC loss. The magnet powering requires warm-to-cold transitions capable of transporting large currents, in the range of a few to a few tens of kA, with minimal thermal loss. The construction of the magnet, and in particular the insulation, coil winding, assembly in the mechanical structure and yoke, and the placement in a cryostat necessary for thermal management, all have aspects specific to superconducting magnet technology. Finally, cooling by helium is a science in itself. In the following sections we will discuss some of the main principles, without entering into detail, and provide extensive references on the above matters. The bibliography in the references directs the reader to excellent books on superconducting magnet engineering and science, especially [14,15,16,17,18].

8.1.3.1 Superconducting Materials

A superconductor is such only if it operates below the critical surface, a combination of temperature T, magnetic field induction B and current density J that delimits the boundary between the superconducting and normal-conducting phases. This surface is best expressed using a function $J_C(B,T)$, the critical current density, which is the main engineering characteristic of a superconductor. A good example is the critical current density for the highly optimised Nb-47%Ti alloy used for the production of the LHC magnets, shown in Fig. 8.5. When cooled to 4.2 K, this superconductor can carry a current density up to 3000 A/mm² in a background field of 5 T. Indeed, this is the order of magnitude of current density that is of interest to make the design of a superconducting accelerator competitive. As we see from Fig. 8.5, higher fields or higher temperatures result in a reduction of the critical current density, while a decrease of either yields an increase in $J_C$.

Fig. 8.5 Critical current density of Nb-47%Ti representative of the LHC production. The superconductor carries 3000 A/mm² at a temperature of 4.2 K and in a background field of 5 T (marked point). The LHC dipoles operate at 1.9 K, taking advantage of the increase of $J_C$ at lower temperature to reach a nominal field of 8.33 T.

Table 8.5 reports a summary of the critical temperature $T_C$ and critical field $B_C$ for the technical superconducting materials that have found practical applications over the past 50 years, as well as materials that are expected to come into use in a few years. The materials are generally classified as low-temperature superconductors (LTS) and high-temperature superconductors (HTS). This classification was originally based on the temperature of the superconducting transition $T_C$, but now it refers rather to the different mechanisms that explain the existence of a superconducting phase. According to this classification, the alloy of Niobium and Titanium (Nb-Ti), the inter-metallic compounds of Niobium and Tin or Aluminium (Nb₃Sn, Nb₃Al) and of Magnesium and Boron (MgB₂) are LTS materials. On the other hand, the Perovskites formed by Bismuth, Strontium, Calcium and Copper oxide (conventionally referred to as BSCCO) and a Rare Earth (e.g. Yttrium), Barium and Copper oxide (referred to as REBCO) are HTS materials. The details of the material composition and production route influence the values of $T_C$ and $B_C$. For this reason the values quoted in the table should be regarded as indicative, especially for developmental materials such as MgB₂, BSCCO and REBCO.

Note for completeness that many other elements and compounds become superconducting when cooled to sufficiently low temperatures and, in some cases, when submitted to large pressure. The family of superconductors is hence still developing. The recent discovery of a new class of HTS, the iron based superconductors [25] (also named IBS, FeSC, or ferropnictides), bears the promise of understanding the theory of high-temperature superconductivity, as well as the potential development of a new class of technical materials for high field applications. At the time of writing, the search for the highest possible $T_C$ has reached the maximum verified value of 203 K in hydrogen sulphide under a pressure of the order of 155 GPa [26].

Though exciting and promising, these recent developments are still far from being ripe for applications. In the following sections we will focus on the main characteristics of the technical superconductors of Table 8.5, which are at present the only ones available in sufficient quantity and quality for magnet applications.

8.1.3.1.1 Nb-Ti

The alloy of Nb-47%Ti [19] is undoubtedly the most successful practical superconductor, and has been used in all superconducting particle accelerators built to date. Nb-Ti is a ductile and tough material, easily available in long lengths (a few km piece length) in the form of multi-filamentary wires where the superconductor is dispersed in a copper matrix of high purity and low electrical resistivity. Nb-Ti at 4.2 K has a critical current density of about 1500 A/mm² at 7.5 T. Cooling it to 1.9 K shifts this point up to 10.5 T. This field range represents the upper (quench) limit for the use of Nb-Ti in accelerator magnets. Standard industrial production yields filament sizes of a few μm (5 to 10), which is beneficial to reduce the field perturbations induced by persistent currents (see later). Smaller filaments (1 to 3 μm) have been produced to reduce magnetization and losses, but these R&D products are not industrial standards. Homogeneity of the production is at the level of a few % for key parameters such as critical current, magnetization, wire composition and geometry, which demonstrates the maturity of the technology. Figure 8.6 shows multi-filamentary Nb-Ti strands used in the LHC.

8.1.3.1.2 Nb3Sn

The inter-metallic compound Nb3Sn [20] is the second LTS material that found its way from material research to large-scale applications. Nb3Sn is a brittle and fragile compound, which is why, after an initial success in the high field solenoids of the 1960s, attaining record fields of 10 T, it was quickly replaced by the ductile Nb-Ti for more modest field values. All manufacturing routes involve assembly of the precursor elements Nb and Sn into large size billets that are extruded and/or drawn to the final wire diameter. The Nb3Sn is then formed by a chemical reaction induced by a heat treatment at temperatures in the range of 650 °C for durations of a few tens to a few hundred hours. Various manufacturing routes have been established industrially, resulting in wires with different properties of critical current density and filament size. The most common fabrication methods are based on the so-called “bronze-route”, or “internal-tin”, which distinguish themselves by the way that the relatively mobile Sn is made available for reaction with Nb. The interest in Nb3Sn comes from the improved T C and B C with respect to Nb-Ti, resulting in record critical current density exceeding 1500 A/mm² at 15 T and 4.2 K. This potential is commonly exploited in commercial solenoids built for NMR spectroscopy or other laboratory applications, but has to date found no accelerator applications due to the technical difficulties associated with the heat treatment and the handling of a fragile magnet coil. In addition, high J C Nb3Sn presently has a larger filament size than Nb-Ti, in the range of 50 to 100 μm. This material is nonetheless very relevant to extend the reach of present accelerators such as the LHC beyond the limit of Nb-Ti (e.g. the High-Luminosity upgrade of the LHC and the R&D on magnet technology for a Future Circular Collider), or to build compact accelerators that could be of interest for industrial and medical applications (e.g. high-field compact cyclotrons for proton therapy).

8.1.3.1.3 MgB2

The most recent of the technical superconductors [22], the inter-metallic compound MgB2 is a relatively inexpensive material obtained from readily available precursor elements. Superconducting MgB2 wire can be produced through the powder-in-tube (PIT) process. Variants of this process exist, depending on whether the MgB2 is formed at the end of the wire processing from Mg and B precursors (in-situ variant), or rather powders of pre-reacted MgB2 are sintered in the finished wire (ex-situ variant). In both cases, a heat treatment is required in the range of 600 °C to 1000 °C. As for Nb3Sn, the wires and tapes of MgB2 are fragile and require careful handling to limit deformation. In spite of a high critical field, which in thin films has reached values above 70 T, the bulk material becomes irreversible at much smaller applied fields. MgB2 is hence of interest in the range of low to medium field applications (presently up to a maximum 5 T range), but for operating temperatures up to 20 K, i.e. well above boiling helium conditions. The main technical realizations presently based on MgB2 are open MRI systems at modest magnetic field (0.5 T), and cables for power transmission planned for the High Luminosity upgrade of the LHC (the so called SC links) or electric energy distribution. This material is to date still in the developmental state, with specific interest in enlarging the field range for magnet applications. While the main motivation remains helium-free MRI magnets, MgB2 could be an option for accelerator magnets subjected to radiation loads that require high operating temperature and energy margin (see later).

8.1.3.1.4 Cuprate Superconductors

The cuprates BSCCO and REBCO are the two HTS materials that seem to bear the largest potential for future use in accelerator magnets, mainly because of the high critical field at low operating temperature that makes them the ideal candidates to break the 20 T barrier. Both BSCCO and REBCO are ceramic compounds obtained respectively by chemical synthesis of Bi, Sr, Ca, Cu and O, or Y, Ba, Cu and O. As for all other HTS materials, their production is a complex process that may involve assisted crystal growth, at high temperature, and under very well controlled chemical conditions. BSCCO and REBCO still have unresolved engineering issues, are fragile, and have comparatively high material and production costs. One of the main limitations to critical current in HTS materials comes from the effect of grain boundaries. Mis-aligned grain boundaries are a barrier to the free flow of the super-currents. In principle, this means that HTS conductors would require solving the tantalizing task of growing single crystals of km length. Different ways have been found to deal with this limitation in BSCCO and REBCO. BSCCO is manufactured using a Powder in Tube (PIT) technique, filling Ag tubes with a precursor BSCCO oxide powder. Two different techniques are used to produce the 2212 and 2223 variants of the BSCCO compound. In the case of BSCCO-2212, the tubes are stacked and drawn to wires that can be wound, cabled and finally reacted, much like Nb3Sn, albeit at higher temperature (900 °C) and under controlled oxygen atmosphere. BSCCO-2212 undergoes a melt process, critical to produce the connected grain structure required for high critical current. For BSCCO-2223 the common form is tape. An initial liquid-mediated reaction of the precursor is used to form the 2223 compound. In this case the required grain alignment cannot be obtained upon heat treatment, but can be induced by mechanical deformation. This is why reacted BSCCO-2223 is mechanically deformed to the final tape dimension, and undergoes a final sintering heat treatment. REBCO is also manufactured in the form of tape. A robust substrate, such as stainless steel or Hastelloy, is polished and prepared with a series of buffer layers that imprint a crystal texture to a thin layer of REBCO superconductor. This is the crucial step to induce aligned crystal growth of the superconducting phase. The superconductor growth can be achieved by various chemical or physical deposition techniques. The tape is then capped with a sealing Ag layer, finally adding Cu as a stabilizer. When cooled down to 4.2 K, REBCO and BSCCO attain exceptional current densities at high field, surpassing the performance of LTS materials at fields of 10 to 15 T. In spite of the early phase of development, the HTS BSCCO-2223 has already found a large-scale application in the current leads of the LHC, where the gain in operating efficiency and margin has offset the additional investment.

8.1.3.2 Superconducting Cables

Wires and tapes manufactured with the LTS and HTS materials listed above carry currents in the range of a few hundred A, and are appropriate to wind small magnets, where the magnet inductance and stored energy are not an issue (see later discussion on protection). On the other hand, the large-scale dipole and quadrupole magnets of an accelerator are connected in km-long strings, with a stored energy that can reach hundreds of MJ. To decrease their inductance and limit the operating voltage, it is mandatory to use cables made of several wires in parallel, able to carry much larger currents, typically in the range of 10 kA. An additional benefit of a cable is to provide parallel paths for the current in case of local wire defects. Such cables must ensure good current distribution through transposition, combined with precisely controlled dimensions necessary to obtain coils of accurate geometry, as well as good winding characteristics. These properties are characteristic of the flat cable invented at the Rutherford Laboratory in England [27]. A typical Rutherford cable, shown in Fig. 8.7, is composed of fully transposed twisted wires (Nb-Ti in Fig. 8.7). The transposition length, also referred to as twist pitch, is usually kept short, a few cm. To improve winding properties the cable is slightly keystoned, i.e. the cable thickness is not constant from side to side. The angle formed by the planes of the cable upper and lower faces is called the keystone angle, usually in the range of 1 to 2 degrees. A summary of cable characteristics for the major superconducting accelerator projects is reported in Table 8.6.
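The link between operating current, inductance and voltage can be made concrete with a back-of-the-envelope estimate. The sketch below assumes a circuit of fixed stored energy E = L I²/2, discharged on a dump resistor R = L/τ with time constant τ, so that the peak voltage is R·I = 2E/(Iτ). The function names and the numerical values (500 MJ, 100 s, 300 A and 10 kA) are illustrative assumptions, not data from the text.

```python
def inductance_for_energy(energy_j, current_a):
    """Inductance [H] that stores energy_j [J] at current_a [A], from E = L I^2 / 2."""
    return 2.0 * energy_j / current_a ** 2


def peak_dump_voltage(energy_j, current_a, tau_s):
    """Peak voltage [V] across a dump resistor R = L / tau at the start of the discharge."""
    inductance = inductance_for_energy(energy_j, current_a)
    dump_resistance = inductance / tau_s
    return dump_resistance * current_a


# Illustrative values only: 500 MJ stored energy, 100 s discharge time constant.
for current in (300.0, 10.0e3):
    L = inductance_for_energy(500.0e6, current)
    V = peak_dump_voltage(500.0e6, current, 100.0)
    print(f"I = {current:8.0f} A   L = {L:10.1f} H   V = {V:8.0f} V")
```

For the same stored energy and discharge time, the voltage scales as 1/I and the inductance as 1/I², which is why a 10 kA cable is so much easier to protect than a single wire carrying a few hundred A.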

The Rutherford cable for the inner layer of the LHC dipoles, showing the Nb-Ti filaments in a few etched strands. The schematic in the inset shows the definition of the quantities reported in Table 8.6

The concept of Rutherford cables can be easily applied to LTS materials that come in the form of round wires and has been recently extended to round BSCCO-2212 HTS wires. The rectangular geometry of the cable provides high strand packing and a flexible cable for winding magnet coils of various geometries. The cabling process is invariably associated with large deformations at the edges of the cable, where the wires are plastically deformed. This is necessary to achieve mechanical stability of the cable, but can lead to a degradation of the critical current of the superconductor. Optimization of this delicate balance between limited wire deformation and desired cable compaction is done empirically, and the I C degradation in an optimized cable is in the range of a few %.

The electro-dynamic and mechanical requirements for a cable are essentially independent of the superconducting material, at least to first order, and apply both to LTS and HTS. Cabling of HTS materials, however, and in particular those only available in the form of tapes, is far from being standard practice. Several configurations are under development, from compact stacks and Roebel bars, to twisted assemblies of tapes, or tapes wound around cores. Indeed, HTS cabling is an R&D topic that will need to be resolved before these materials can become a viable superconductor for accelerator magnets.

8.1.3.3 Stability and Margins, Quench and Protection

We have already remarked that a superconductor is such only when it operates below its critical surface. Once in normal conducting state, e.g. because of a sudden temperature increase caused by internal mechanical energy release or a beam loss, the superconductor generates resistive power, causing a thermal runaway, i.e. an unstable behaviour. It is for this reason that the operating point of current density J op, field B op and temperature T op are chosen by design well inside the allowable envelope, i.e. with proper margins that ensure stability at the operating point. The typical metrics used for operating margins are:

- Critical current margin i, expressed as the operating fraction of the critical current density i = J op/J C(B op,T op), where the critical current is evaluated at the operating field and temperature;

- Margin along the loadline f, expressed as the ratio of operating to critical current f = J op/J C(t J C,T op), where the critical current J C is evaluated at the intersection of the magnet loadline, i.e. the straight line B = tJ with slope t = B/J, and the critical surface;

- Temperature margin ΔT, given by the difference in temperature from operating conditions T op to current sharing conditions T CS, evaluated at the operating field and current density ΔT = T CS(J op,B op) − T op. The current sharing temperature is defined as the temperature at which the operating current density equals the critical current, or J op = J C(B op,T CS).

The margins defined above are shown graphically in Fig. 8.8. Representative values for the design of the large-scale Nb-Ti accelerator dipoles listed earlier are i ≈ 0.5, f ≈ 0.8, and ΔT = 0.5…1.5 K.
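The three margins can be evaluated numerically once a critical surface J C(B,T) is available. The sketch below uses a deliberately crude, hypothetical critical surface (critical current density linear in field, with an upper critical field that falls with temperature); the constants `T_C0`, `B_C20`, `C0` and the operating point are assumptions chosen so that i and f land near the representative values quoted above, not a fit to any real conductor.

```python
T_C0 = 9.2     # K, assumed critical temperature at zero field
B_C20 = 14.5   # T, assumed upper critical field at zero temperature
C0 = 300.0     # A/mm^2 per T, hypothetical slope of J_C versus field


def b_c2(temp):
    """Assumed temperature dependence of the upper critical field [T]."""
    return B_C20 * (1.0 - (temp / T_C0) ** 1.7)


def j_c(field, temp):
    """Hypothetical critical current density [A/mm^2], linear in field."""
    return max(0.0, C0 * (b_c2(temp) - field))


def bisect(func, lo, hi, tol=1e-9):
    """Root of func in [lo, hi]; func(lo) and func(hi) must differ in sign."""
    f_lo = func(lo)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        f_mid = func(mid)
        if (f_mid > 0.0) == (f_lo > 0.0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def margins(j_op, b_op, t_op):
    """Return (i, f, delta_T) for the operating point (j_op, b_op, t_op)."""
    i = j_op / j_c(b_op, t_op)
    # Loadline B = t * J through the origin and the operating point.
    slope = b_op / j_op
    j_max = bisect(lambda j: j_c(slope * j, t_op) - j, 0.0, j_c(0.0, t_op))
    f = j_op / j_max
    # Current sharing temperature: J_C(b_op, T_CS) = j_op.
    t_cs = bisect(lambda t: j_c(b_op, t) - j_op, t_op, T_C0)
    return i, f, t_cs - t_op


i, f, delta_t = margins(j_op=800.0, b_op=8.3, t_op=1.9)
print(f"i = {i:.2f}, f = {f:.2f}, dT = {delta_t:.2f} K")
```

Note that i is always smaller than f: moving along the loadline raises the field together with the current, so the critical surface is reached sooner than at constant field.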

An additional quantity that measures the stability of the operating point is the energy margin, i.e. the quantity of heat necessary to drive the superconductor normal. The energy margin depends on the time and space structure of the heat deposition. A lower bound of the energy margin is given by the enthalpy difference between operating and current sharing conditions ΔH = H(T CS) − H(T op). A robust magnet design is such that the energy margin is larger than the expected amplitude of perturbation over the whole spectrum of operating conditions and characteristic times, which is the basic idea behind all stabilization strategies adopted.

In spite of good design, an irreversible transition to the normal conducting state is always possible, resulting in a thermal runaway process that is referred to as a quench. Superconducting magnets in general, and more specifically the highly compact accelerator magnets, tend to have large stored magnetic energy density. Local dissipation of this energy has the potential to lead to material damage and cause loss of electrical insulation. For this reason all superconducting magnets must be protected against quench by detecting any irreversible resistive transition (quench detection electronics) and discharging the magnet. The peak temperature T hot reached during a quench can be estimated by equating the Joule heat produced during the discharge to the enthalpy of the conductor, or H(T hot) − H(T op) = ∫ρJ² dt, where ρ is the specific resistance of the superconductor composite in normal conducting state. We see from the above concept, borrowed from the design of electrical fuses, that it is always advantageous to reduce the normal state resistance and the time of the discharge. The normal state resistance is decreased by backing the superconductor with a matrix of a material with good conductivity properties (e.g. copper, aluminium or silver). The discharge can be made faster by reducing the inductance of the magnet, which is the reason why large scale magnets are wound using cables with large operating current in place of single wires. There are various possibilities to dump the magnetic energy, based on one or more of the following standard strategies:

- Energy extraction, in which the magnetic energy is extracted from the magnet and dissipated in an external circuit (e.g. a dump resistor or diode);

- Coupling to a secondary circuit, in which the magnet is coupled inductively to a secondary that absorbs and dissipates a part of the magnetic energy;

- Subdivision, a partition of the magnet in sections, with each section shunted by an alternative current path (resistance or diode) in case of quench;

- Active quench initiation, which relies most commonly on heaters embedded in the winding pack, fired at the moment a quench is detected to spread the normal zone over the whole magnet mass and thus reduce the peak temperature. Alternative means to actively initiate quench have been revisited, based on over-current or fast field changes induced by capacitive discharge in the coil.
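The adiabatic hot-spot balance quoted above, H(T hot) − H(T op) = ∫ρJ² dt, can be turned into a small estimate. The sketch below is a minimal illustration under strong assumptions that are not in the text: a constant normal-state resistivity, an exponential current decay J(t) = J0 exp(−t/τ) (so that ∫J² dt = J0² τ/2), and a user-supplied volumetric heat capacity. All numerical values are placeholders.

```python
def joule_energy_density(rho, j0, tau):
    """Integral of rho * J^2 dt [J/m^3] for J(t) = j0 * exp(-t / tau), constant rho."""
    return rho * j0 ** 2 * tau / 2.0


def hot_spot_temperature(heat_capacity, t_op, energy, dt=0.01, t_max=2000.0):
    """Temperature [K] at which the enthalpy rise from t_op equals energy.

    heat_capacity(T) is the volumetric heat capacity [J/(m^3 K)].
    The enthalpy integral is accumulated with the midpoint rule in steps of dt.
    """
    temp, enthalpy = t_op, 0.0
    while temp < t_max:
        step = heat_capacity(temp + 0.5 * dt) * dt
        if enthalpy + step >= energy:
            # Linear interpolation inside the last temperature step.
            return temp + dt * (energy - enthalpy) / step
        enthalpy += step
        temp += dt
    return t_max


# Placeholder inputs: constant heat capacity and resistivity, not material data.
energy = joule_energy_density(rho=5.0e-10, j0=5.0e8, tau=0.2)
t_hot = hot_spot_temperature(lambda temp: 1.0e6, t_op=1.9, energy=energy)
print(f"Joule energy = {energy:.3e} J/m^3, T_hot = {t_hot:.1f} K")
```

In this simplified picture the deposited energy is proportional to both ρ and τ, which is the quantitative content of the statement that a low normal-state resistance and a fast discharge are always advantageous.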

8.1.3.4 Magnetization, Coupling and AC Loss

An ideal superconductor (type-I) tends to exclude field variations from its bulk, i.e. it shows perfect diamagnetic behaviour. In practice, in the superconducting materials listed above (type-II) the magnetic field can penetrate the bulk, still resulting in partial diamagnetism. Seen macroscopically, a field change induces shielding currents, which, in a superconductor, do not decay. For this reason these currents are referred to as persistent. The magnetic moment per unit volume M associated with persistent currents is proportional to the current density J C of the shielding currents, and the characteristic size D of the superconductor, i.e. M ≈ J C D. This magnetic moment can attain large values, perturb the field generated by the magnet, and lead to instabilities in case the magnetic energy inside the superconductor is dissipated in a process referred to as flux-jump. For this reason, the superconductor in wires and tapes is subdivided in fine filaments that have a characteristic dimension in the range of 10 to 100 μm. Persistent currents and the associated magnetization produce a significant field perturbation in accelerator magnets, and must be subject to optimization and tight control.
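The scaling M ≈ J C D gives a quick feeling for why fine filaments matter. The short sketch below evaluates it for two filament sizes; the current density (1500 A/mm², reused from the Nb-Ti figure quoted earlier purely as an illustrative number) and the two diameters are assumptions, and geometric factors of order one are ignored.

```python
import math

MU0 = 4.0e-7 * math.pi  # vacuum permeability [T m / A]


def magnetization(j_c, size):
    """Order-of-magnitude magnetization M ~ J_C * D [A/m]."""
    return j_c * size


j_c = 1.5e9  # A/m^2, illustrative value only
for diameter in (6.0e-6, 50.0e-6):
    m = magnetization(j_c, diameter)
    print(f"D = {diameter * 1e6:4.0f} um   M = {m:8.0f} A/m   mu0*M = {MU0 * m * 1e3:5.1f} mT")
```

At equal current density the magnetization, and hence the associated field perturbation, grows linearly with the filament size.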

Similar to the bulk behaviour described above, field variations also induce shielding currents between the superconducting filaments. These currents couple the filaments electromagnetically by finding a return path crossing the wire matrix. The amount of filament coupling depends on the resistivity of the matrix, which has to be low for good protection, and the geometry of the current loop. In the extreme case of wires and tapes with untwisted filaments, coupling currents could travel along long lengths (e.g. the km length in a magnet) and find a low cross-resistance. The net effect would be that the multi-filamentary matrix would respond to field changes as a single bulk filament, losing the advantage of fine subdivision. Decoupling of the filaments is achieved by shortening the current loop, twisting the wire with typical pitch in the range of few mm. This procedure cannot be applied in tapes that thus suffer from a higher degree of coupling. Similar reasoning applies to the superconducting cable, which explains why the strands in the cable must be transposed by twisting them. In addition, the cross resistance can be controlled in cables by applying resistive coating to the strands, or inserting resistive barriers (sheets, wraps) in the cable itself.

A typical value of magnetization due to persistent and coupling currents is shown in Fig. 8.9 for an LHC Nb-Ti strand. The magnetic moment has a hysteresis, and the area of the loop is proportional to the energy density dissipated during a powering cycle. This means that in superconducting magnets a field ramp is invariably associated with an energy loss, which is referred to as AC loss. When compared to other heat loads on the magnet, AC losses become relevant only at high ramp-rates, of the order of 1 T/s, which is of interest for fast cycled accelerators.

8.1.3.5 Magnetic Design of Superconducting Accelerator Magnets

Field calculations for superconducting magnets are very different from those described earlier for iron dominated normal-conducting magnets. The task in this case is to find the current distribution that generates the desired multipole magnetic field. Among the many possible solutions, the cos(nθ) and intersecting ellipses of Beth [1] and Halbach [28] are the most instructive examples, and the reader should become familiar with their theory. These ideal current distributions are, however, not practical for winding coils with cables of the type described earlier. A good approximation of a coil cross section is obtained considering sectors of current shown in Fig. 8.10. The sectors have uniform current density J, a high degree of symmetry, but produce only an approximate multipolar field. The configuration depicted in Fig. 8.10a generates an approximate dipole B 1, with higher order field errors. Because of symmetry, the only field errors produced (allowed multipoles) are normal multipoles of order (2n + 1), i.e. B 3, B 5, B 7… Similarly, the configuration of Fig. 8.10b produces an approximate quadrupole B 2 with normal higher order multipoles of order 2(2n + 1), i.e. B 6, B 10, B 14… The strength of field and field errors are reported in Table 8.7, which can be used as a starting point for an analytical design of multipole coils.

Examining the equations in Table 8.7a for the field of a sector coil dipole, we see that the strength of the field produced is directly proportional to the current density J and the coil width (R out-R in). In order to keep the coil cross section as small as practically feasible, it is always beneficial to maximise the coil current density, compatibly with mechanical limits (stress) and quench protection (heating rate in case of quench). A maximum current density results in the smallest possible coil, which is associated with minimum overall magnet dimension and cost. This is the simple and clear explanation for the push towards high current density in accelerator magnets.

Similar considerations also hold for a quadrupole, as we see from the expression of the field gradient in Table 8.7b. In this case, however, it is interesting to note that while the quadrupole gradient is proportional to the current density, as for the dipole, the dependence on the coil width is logarithmic. This limits the interest of increasing the coil size in the case of a quadrupole, and makes the role of current density even more prominent.
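The different scaling of dipole field and quadrupole gradient with coil width can be checked numerically. Table 8.7 is not reproduced here, so the sketch below uses the standard expressions for uniform current density sector coils without iron, which are assumed to correspond to those of the table: B 1 = (2μ0 J/π)(R out − R in) sin φ for the dipole and G = (2μ0 J/π) ln(R out/R in) sin 2φ for the quadrupole (magnitudes only, sign conventions dropped). The current density and radii are illustrative assumptions.

```python
import math

MU0 = 4.0e-7 * math.pi  # vacuum permeability [T m / A]


def sector_dipole_field(j, r_in, r_out, phi_deg=60.0):
    """Bore field magnitude [T] of a sector dipole coil, no iron."""
    phi = math.radians(phi_deg)
    return 2.0 * MU0 * j / math.pi * (r_out - r_in) * math.sin(phi)


def sector_quadrupole_gradient(j, r_in, r_out, phi_deg=30.0):
    """Gradient magnitude [T/m] of a sector quadrupole coil, no iron."""
    phi = math.radians(phi_deg)
    return 2.0 * MU0 * j / math.pi * math.log(r_out / r_in) * math.sin(2.0 * phi)


j = 4.0e8      # A/m^2 (400 A/mm^2), illustrative overall current density
r_in = 0.028   # m, illustrative inner coil radius
for width in (0.015, 0.030, 0.060):
    b = sector_dipole_field(j, r_in, r_in + width)
    g = sector_quadrupole_gradient(j, r_in, r_in + width)
    print(f"width = {width * 1e3:4.0f} mm   B1 = {b:5.2f} T   G = {g:6.1f} T/m")
```

Doubling the coil width doubles the dipole field but gains noticeably less than a factor of two in gradient, whereas both quantities remain strictly proportional to J.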

With respect to field quality, we note further that a choice of φ = 60° in the sector dipole coil cancels the sextupole error b 3. The first non-zero multipole error is then the decapole b 5. For typical coil dimensions, the b 5 error is a few percent, i.e. much larger (two orders of magnitude) than the acceptable field quality specification in an accelerator magnet. Better field quality can be obtained by segmenting the sectors using insulating wedges, and using two (or more) nested layers. This adds degrees of freedom in the coil geometry that can be used to improve the field homogeneity, at the cost of an increased complexity of the winding. In Fig. 8.11 we show the coil cross sections of the world's four large-scale superconducting synchrotrons. It is evident from this how the coils have evolved into complex structures to follow the increased demand of field quality. Similar considerations, and optimization, are valid in the case of a quadrupole magnet.
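The cancellation of the sextupole at φ = 60° follows from the angular factor sin(nφ) of the allowed harmonics. The sketch below assumes the standard line-current integration result for a single-layer sector dipole without iron, B n ∝ R ref^(n−1) sin(nφ)/[n(2−n)] (R out^(2−n) − R in^(2−n)), which is not quoted in the text; only ratios to B 1 are used, so the overall sign convention does not matter. The coil radii and the reference radius (about two thirds of the inner radius) are illustrative assumptions.

```python
import math

MU0 = 4.0e-7 * math.pi  # vacuum permeability [T m / A]


def sector_dipole_harmonic(n, j, r_in, r_out, r_ref, phi_deg):
    """Allowed harmonic B_n [T] at r_ref for odd n (overall sign is a convention)."""
    if n % 2 == 0:
        raise ValueError("only odd orders are allowed by the dipole symmetry")
    phi = math.radians(phi_deg)
    radial = (r_out ** (2 - n) - r_in ** (2 - n)) / (2 - n)
    return 2.0 * MU0 * j / math.pi * r_ref ** (n - 1) * math.sin(n * phi) / n * radial


def normalized_harmonic(n, r_in, r_out, r_ref, phi_deg):
    """b_n = B_n / B_1, independent of the current density."""
    b_n = sector_dipole_harmonic(n, 1.0, r_in, r_out, r_ref, phi_deg)
    b_1 = sector_dipole_harmonic(1, 1.0, r_in, r_out, r_ref, phi_deg)
    return b_n / b_1


# Illustrative geometry: 28 mm inner radius, 30 mm wide coil, 18.7 mm reference radius.
for order in (3, 5, 7):
    b = normalized_harmonic(order, 0.028, 0.058, 0.0187, 60.0)
    print(f"b{order} = {b:+.2e}")
```

With this geometry b 3 vanishes to rounding error while b 5 remains at the percent level, which is the reason for introducing wedges and additional layers.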

Considering further field quality, it is important to recall that the magnetic moment associated with persistent and coupling currents produces field errors that are typically in the range of 10⁻⁴ to 10⁻³. These field errors are not easy to predict and control in production, can exhibit large non-linearity and time dependence, and hence require due attention through direct measurement and appropriate corrections in accelerator operation.

The forces reported in Table 8.7 are intended as resultants on a coil quadrant (for a dipole) or octant (for a quadrupole). We see that the forces generally scale with the square of the current density, and hence with the square of the field both in a dipole and in a quadrupole (i.e. proportional to the magnetic pressure). The same holds for the magnetic energy per unit length, scaling with the square of the field in the bore. Practical values for the loads seen by the coils of accelerator dipoles are compiled in Fig. 8.12, which reports the Lorentz forces in the plane of the coil (referred to a coil quadrant) for the dipoles of the large scale accelerators discussed. We clearly see in Fig. 8.12 the progression in the level of electromagnetic forces and stored energy, from the modest field values of RHIC (3.5 T) to the state-of-the-art of the LHC (8.3 T). Large forces and stored energy are indeed the main engineering challenges of superconducting accelerator magnets, with increasing challenges in the mechanics (supporting structures and internal stress) and quench protection (quench detection and dump time) as the field is pushed to higher values.
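The quadratic scaling with field can be illustrated with the magnetic pressure p = B²/(2μ0), evaluated below for the two bore fields quoted above. This is only a scale for the electromagnetic loads, not the resultant forces of Table 8.7 or Fig. 8.12.

```python
import math

MU0 = 4.0e-7 * math.pi  # vacuum permeability [T m / A]


def magnetic_pressure(field):
    """Magnetic pressure B^2 / (2 mu0) [Pa] for a field in tesla."""
    return field ** 2 / (2.0 * MU0)


for name, field in (("RHIC", 3.5), ("LHC", 8.3)):
    print(f"{name:5s} B = {field:3.1f} T   p = {magnetic_pressure(field) / 1e6:5.1f} MPa")
```

Going from 3.5 T to 8.3 T multiplies the magnetic pressure by (8.3/3.5)², i.e. by more than a factor of five.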

Finally, the expressions of Table 8.7 do not take into account the presence of magnetic iron, that surrounds the coil and produces an additional contribution to the field and field errors. The magnitude of the iron contribution is usually small (in the range of 10 to 20%, with the exception of super-ferric magnets, described later) compared to the field generated by the coil current. When the iron is not saturated, its contribution can be approximated analytically using the method of images, which is simple in the case of a round iron cavity. A compact treatment of this method can be found in [17]. For complex geometries, or in the presence of saturation, it is mandatory to resort to computer codes to perform the appropriate calculations and optimizations.

8.1.3.6 Current Leads

The powering of superconducting magnets is done via current leads from room temperature, where the warm power cables are connected, to the cold mass at cryogenic temperature, where the magnets are operated. Current leads are often the dominant source of heat leak into the cryogenic environment because of thermal conduction under a large temperature gradient, as well as ohmic loss. The goal of a current lead design is the minimization of these losses, aiming at the optimum geometry which enables stable operation with a minimum heat in-leak.

Conventional current leads are made from metal, and are cooled either by conduction or by heat exchange to the cryogen (commonly helium) boil-off. The heat leak to cryogenic temperature for optimized conduction-cooled leads at operating current is about 47 W/kA, while leads cooled by helium boil-off have a much lower value of 1.1 W/kA. These values are representative of the minimum heat leak that can be achieved. They are independent of the properties of the material chosen because of the proportionality relation between electrical conductivity (which governs Joule dissipation) and thermal conductivity (which rules heat in-leak) established by the Wiedemann-Franz law, which most metals and alloys obey. The geometry of the lead that corresponds to the optimum performance (length and cross section) is however strongly dependent on the materials chosen.
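The value quoted for conduction-cooled leads can be recovered from the Wiedemann-Franz law. The sketch below uses the standard result for an optimized conduction-cooled lead, Q/I = √(L0 (T warm² − T cold²)), with L0 the Lorenz number; this expression is not given in the text and the end temperatures (300 K and 4.2 K) are assumptions. The helium boil-off case requires a numerical integration along the lead and is not covered.

```python
import math

LORENZ_NUMBER = 2.44e-8  # W Ohm / K^2, Wiedemann-Franz constant


def conduction_cooled_heat_leak(t_warm, t_cold):
    """Minimum heat leak per unit current [W/A] of an optimized conduction-cooled lead."""
    return math.sqrt(LORENZ_NUMBER * (t_warm ** 2 - t_cold ** 2))


q = conduction_cooled_heat_leak(t_warm=300.0, t_cold=4.2)
print(f"Minimum heat leak = {q * 1e3:.1f} W/kA")
```

No material property other than the Lorenz number appears in the result, consistent with the statement that the minimum heat leak does not depend on the metal chosen.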

The loss of a conventional lead can be further decreased replacing the cold part with High Temperature Superconducting (HTS) material, which is characterized by low thermal conductivity and zero electrical resistivity. The use of HTS leads was first pioneered on large scale at the LHC machine [29], where more than 1000 HTS leads operate at currents ranging from 600 A to 13,000 A and power the superconducting magnet circuits. The high temperature superconductor incorporated in the LHC leads is the Bi-2223 tape with a gold-doped silver matrix [30]. The HTS operates in a temperature range spanning from 50 K to 4.5 K, while a resistive heat exchanger, cooled by helium gas, provides the link between 50 K and the room temperature. In the LHC leads, the heat load into the helium bath is reduced by a factor 10 with respect to conventional self-cooled leads [29]. An additional heat load appears at intermediate temperature, which can however be removed with much better thermodynamic efficiency. The typical gain on the overall heat balance that can be achieved by proper use of high temperature superconductor in current leads is then a factor of 3 with respect to the optimized values quoted earlier.

8.1.3.7 Mechanics, Insulation, Cooling and Manufacturing Aspects

The performance of a superconducting magnet is invariably determined by proper consideration of the material physics and engineering aspects discussed earlier. Good material and magnet design, however, are not sufficient, and success relies heavily on a sound mechanical concept and an adapted manufacturing technology. The main issue in the mechanics of a superconducting magnet is how to support the electromagnetic force in situations of both large forces and large force density, at cryogenic conditions, maintaining the conductors in their nominal position, and avoiding excessive stress on superconducting cables, insulating materials and the structure itself. This task can be addressed using different strategies that depend on the level of field and electromagnetic force, on space and other operation constraints, on material selection, and, not least, on the specific choice of the magnet designer.

A few general lessons have been learned in the past 40 years of development, and are common practice in superconducting magnet engineering. It is important to constrain the winding pack in all directions, with a force that is greater than the Lorentz load, including an appropriate safety factor. This is done to reduce the energy inputs of mechanical origins that can trigger instabilities. For simple magnetic configurations (e.g. a solenoid) the coil itself can be the bearing structure. In the more complex situations encountered in accelerator magnets, the winding must always be in contact with a force-bearing surface. An initial load is applied on this surface, sometimes just a few MPa sufficient to remove the fluff. Caution must be used to avoid cracking or tearing at the interfaces, and in particular those bonded or glued during an impregnation process. In some cases, it may be of advantage to intentionally remove the bond between the windings and the surfaces that are not supporting the Lorentz load, especially at the surfaces that tend to separate. In this case the coil would be allowed to move as much as required, e.g. by field quality considerations. These principles may be difficult to apply in cases where the force distribution is complex, e.g. in high order field configurations such as nested multipole corrector magnets of particle accelerators. These magnets are then designed with larger operating margin to cope with the increased perturbation energy spectrum.

Coils can be dry-wound, in which case the conductor has free surfaces and can be permeated by the cryogen. Alternatively, they can be impregnated with a polymer resin that fills the coil spaces and once cured provides mechanical strength but prevents direct contact with the coolant. Common resins do not have sufficient mechanical strength to withstand thermal and mechanical stresses, and are loaded with fibers (e.g. glass). It is important to avoid volumes of unloaded resin, as these tend to crack and release energy that can lead to magnet quenches. Nb-Ti based conductors, ductile and strain tolerant, are well adapted to both techniques, while impregnation is favoured in the case of Nb3Sn or HTS based conductors that are strain sensitive and require stress homogenization in the winding. Coil support is usually achieved using a stiff clamping system. For magnets working at moderate fields (up to about 5 T) a simple structure acting on the coil (referred to as collars), and locked by dowels or keys, may be adequate. This is the type of structure used for the Tevatron dipole (see Fig. 8.4), in which the collared coil assembly is enclosed in a cryostat (for thermal management) and centred in the warm iron yoke by means of spring-loaded bolts. Higher fields require additional force transfer structures, for example the collared coil can be further clamped inside the magnetic yoke, thus increasing rigidity, as in the case of the RHIC, HERA and LHC dipoles, also shown in Fig. 8.4. In this case the collared coil assembly has a well defined outer surface that mates with the inner surface of the iron yoke. The iron yoke, assembled from packs, is held by an outer shell that takes part of the mechanical load.

Force transfer from cold to warm parts is usually kept to a minimum, and possibly reduced to the bare support of gravity loads. This is because any massive mechanical component also acts as a thermal bridge, affecting heat loads and cooling efficiency. Due to differential thermal contraction, any warm-to-cold transition is also subjected to relative movements, which must be accommodated by adjusted kinematics or flexibility.

One of the recurrent issues in the manufacture of a superconducting magnet is the choice of electrical insulation between coil turns and between coil and ground. It is most important to include in the consideration of insulation all coil discontinuities (e.g. terminations, contact surfaces, coil heaters) as well as the instrumentation. The insulation of accelerator magnets is subjected to moderate dielectric stresses (in the range of a few hundred V to a kV), but extremely high mechanical stresses at cryogenic temperatures (in the range of a few tens to a hundred MPa), and possibly in a radiation environment. Good coil insulation must rely on materials capable of retaining their dielectric and mechanical properties at cryogenic temperatures, such as polyimide tapes, cryogenic-grade epoxy resins and relevant glass-fiber composites. The dielectric strength of impregnated coils can be as good as for resistive magnets. In the case of insulation porous to the coolant, such as obtained by dry-winding the coil, the dielectric strength depends on the properties of the coolant itself. Liquid helium has a high breakdown strength, about 30 kV/mm, which is one order of magnitude larger than that of dry air. Gaseous helium, on the contrary, has a much lower breakdown voltage, typically one tenth of that of air at the same pressure, while at sub-atmospheric pressures it decreases to a Paschen minimum of about 150 V [31]. In this case it is important to consider all possible operating conditions (e.g. the decrease of helium density during a magnet quench).

A further issue in the design of superconducting magnets is cooling. Any heat load of internal or external origin (e.g. particle energy deposition, heating at resistive splices, heat conduction and radiation to the cold mass, AC loss), of both steady state and transient nature, must be removed to a suitable cryogenic installation that provides the heat sink at the lowest temperature of the system. Magnets subjected to small heat loads (in the range of a few mW) can be indirectly cooled by thermal conduction. This is the case of small-size magnets, operated at low current, and well shielded. Recent advances in cryo-coolers have made cryogen-free operation a convenient solution for this class of instrumentation magnets. At the other extreme, large cold masses and high current cables, subjected to much larger heat loads (a few W to a few tens of W), require the direct use of the coolant as a thermal vector. The magnet can then be cooled by immersion in a bath of liquid, either normal or superfluid helium, or by force-flow cooling. A further option in the case of force-flow is either to cool the magnet as a whole, or to distribute the cooling channel within the coil using, as an example, internally cooled cables. An important aspect of any cooling method, either direct or indirect, is the temperature gradient that is established under the heat load between the superconductor and the heat sink. This temperature gradient affects the temperature margin discussed earlier. With the exception of internally cooled cables, the temperature gradient is directly proportional to the thermal resistance of the coil. Low thermal resistance insulation schemes can be obtained by proper choice of insulation thickness and overlap, as demonstrated by recent work [32] devoted to the upgrade of the quadrupoles for the inner triplet of the LHC.

The fabrication of a superconducting magnet relies on the standard techniques, tools and instruments used in electrical and heavy industries, adapted to the specificities of the materials used. Particular attention is devoted to preserving the physical properties of the conductor throughout the coil fabrication and handling, as these can be degraded by excessive strain during the winding or by heat treatments (e.g. curing of the resin). The case of Nb3Sn magnets fabricated by the wind-and-react technique poses additional constraints on the structural and insulating materials. These need to withstand the high temperature heat treatment required for the formation of the superconducting phase (approximately 700 °C for a few hours to a few days). When compared to standard electrical equipment, superconducting coils have very tight manufacturing tolerances, originating from demands of field quality (recall that the field is dominated by the current distribution) and from the need to avoid movements during energization that could trigger quenches (recall the discussion on stability). Indeed, the level of accuracy demanded is often beyond the standard experience in electromechanical constructions, which is why trained personnel and field experience are often critical to the successful performance of a superconducting magnet.

8.1.3.8 Super-Ferric Magnets

Super-ferric magnets are a special case of electromagnets, where the ferro-magnetic yoke, producing the dominant portion of the magnetic field in the useful aperture, is magnetized by superconducting coils. These electromagnets resemble the normal conducting magnets described earlier, apart from their cryogenic features. The prime interest of super-ferric magnets is to profit from the power advantage of superconductors (no ohmic loss). Provided the design is well optimized, the cost of cryogenic cooling is smaller than the cost of powering the resistive coils, resulting in a net gain. Part of this optimization is the trade-off between a cold or warm yoke, affecting the amount of required cooling power at cryogenic temperatures. In addition, super-ferric magnets provide operational flexibility. In particular, steady operation at nominal field is possible with no significant overhead. By contrast, high field operation can be very power-intensive, and costly, in normal conducting magnets. Finally, the high current density which can be achieved in superconducting coils is more than one order of magnitude larger than in normal conducting coils. This can bring additional benefits in the reduction of the overall magnet dimension, also thanks to a reduced yoke reluctance.

The limitation of super-ferric magnets is similar to that of normal conducting electromagnets, namely that the field is limited by the saturation of the ferro-magnetic material of the yoke. In fact, super-ferric magnets for accelerators, such as the Nuclotron [33] and FAIR [34] dipoles, achieve a maximum field in the aperture of the order of 2 T. Super-ferric correctors are a good niche application, because the peak field at the pole tip remains modest. Recent examples of these magnets are the super-ferric high-order correctors of the new interaction regions of the High-Luminosity upgrade of the LHC [35].

8.2 RF Cavities

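The acceleration of charged particles is possible with electromagnetic fields thanks to the Lorentz force F = q(E + v × B). Since the increase of particle energy is given by

\[ \Delta W=\int \boldsymbol{F}\cdot d\boldsymbol{s}=q\int \boldsymbol{E}\cdot d\boldsymbol{s}+q\int \left(\boldsymbol{v}\times \boldsymbol{B}\right)\cdot \boldsymbol{v}\, dt=q\int \boldsymbol{E}\cdot d\boldsymbol{s}, \tag{8.14} \]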

neither the magnetic field nor the transverse components of the electric field contribute to energy exchange with the particle. Only the longitudinal electric field component can be used to accelerate particles to higher energies; RF cavities for acceleration thus must provide a longitudinal electric field.

In addition to the necessity that the electric field must have a longitudinal component, a second condition is that the net electric field (or force) integrated through the RF cavity on the path of the particle trajectory (taking its finite speed into account) must not vanish. For substantial acceleration, this latter condition naturally leads to the need for a time-varying field, as can be concluded from the following line of thought: For field quantities constant in time, it follows from Maxwell’s equations that the electric field is the gradient of a potential; to increase the kinetic energy of a charged particle, it will thus have to pass through a potential difference, which for technical (and safety) reasons will be limited to a few MV at best, which in turn will limit the possible energy gain to a few MeV just once, i.e. without the possibility to add stages. To reach larger energy gains, time-varying fields are necessary. RF cavities provide time-varying fields at high frequencies (radio frequency = RF, typically ranging from a few kHz to some 10 GHz).

However, when averaging over one period of the RF, the fields at any one location inside an RF cavity average out to zero; so if a particle travels a large distance through the time-varying fields in an RF cavity, it may experience both accelerating and decelerating fields, which will lead to a reduction of the net acceleration. For this reason, RF cavities are designed to concentrate the accelerating field over a relatively short distance (the accelerating gap). Cavities may have more than one gap, but in this case the distance between gaps must be adjusted to the particle velocity (see Sect. 8.2.6 below).
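The loss of net acceleration in a long gap can be made concrete with a small numerical sketch. It assumes, purely for illustration, an idealized gap of length g with a spatially uniform longitudinal field oscillating at frequency f, crossed by a particle of constant speed βc that passes the gap centre when the field is at its crest. The ratio between the resulting energy gain and the gain in a static field of the same amplitude is commonly called the transit-time factor; for this idealized field it equals sin(x)/x with x = πfg/(βc). The function name and the numerical values (200 MHz, β = 1) are assumptions, not taken from the text.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def transit_time_factor(freq_hz, gap_m, beta=1.0, steps=20_000):
    """Energy gain in a uniform-field gap relative to a static field.

    Assumes a field E0*cos(omega*t) that is uniform over the gap and a
    particle of constant speed beta*c crossing the gap centre at t = 0.
    The integral over the gap is evaluated with the midpoint rule.
    """
    omega = 2.0 * math.pi * freq_hz
    dz = gap_m / steps
    total = 0.0
    for i in range(steps):
        z = -gap_m / 2.0 + (i + 0.5) * dz  # position inside the gap
        t = z / (beta * C)                 # time at which the particle is at z
        total += math.cos(omega * t) * dz  # field seen by the particle
    return total / gap_m


if __name__ == "__main__":
    f = 200e6               # assumed RF frequency (hypothetical value)
    wavelength = C / f
    for fraction in (0.1, 0.25, 0.5, 1.0):
        g = fraction * wavelength
        factor = transit_time_factor(f, g)
        print(f"gap = {fraction:4.2f} wavelengths -> factor = {factor:+.3f}")
```

In this idealized model the factor stays close to one for gaps much shorter than the RF wavelength and vanishes for a gap of one full wavelength, where the particle sees as much decelerating as accelerating field.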

Since the particle beam is normally travelling in a vacuum pipe, the RF cavity must be compatible with this requirement as well. This can be done by using an insulating, vacuum-tight tube inserted into the beam pipe at the location of the accelerating gap; this insulating tube is often itself referred to as the gap. Gaps are typically made of ceramic or glass (Fig. 8.13). Another possibility to make the cavity compatible with the vacuum requirements is to use the cavity itself as a vacuum vessel. In this case, the RF power has to be coupled into the cavity through a vacuum-tight feed-through. The design of high power RF couplers is a complex task and has almost become a discipline of its own.

Since RF electromagnetic fields radiate, RF cavities must be entirely closed on their outside with a well-conducting shield to prevent both power loss and electromagnetic interference. For this reason, cavities are normally fabricated from metal. This RF shield continues around the power coupler, the feeder cable and the RF amplifier. The beam pipe does not break this RF shield since, owing to its diameter, it acts as a hollow waveguide well below cut-off, through which electromagnetic fields cannot propagate at the cavity operating frequency. A generic RF cavity (see Fig. 8.14) thus forms almost naturally an enclosed volume around the accelerating gap; the name “cavity” derives of course from this very property.
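How strongly a beam pipe below cut-off suppresses leakage can be estimated with the textbook formulas for a circular waveguide. The sketch below assumes an ideal, perfectly conducting round pipe of radius a, whose lowest mode is TE11 with cut-off frequency f_c = 1.8412 c/(2πa); for f < f_c the field amplitude decays along the pipe as exp(−αz) with α = (2π/c)·sqrt(f_c² − f²). The function names, the pipe radius, the cavity frequency and the pipe length are hypothetical values chosen only for illustration.

```python
import math

C = 299_792_458.0   # speed of light in m/s
X_TE11 = 1.8412     # first zero of the derivative of J1, sets the TE11 cut-off


def te11_cutoff_hz(radius_m):
    """Cut-off frequency of the TE11 mode of an ideal circular pipe."""
    return X_TE11 * C / (2.0 * math.pi * radius_m)


def attenuation_db(freq_hz, radius_m, length_m):
    """Field attenuation in dB over length_m for a frequency below cut-off."""
    f_c = te11_cutoff_hz(radius_m)
    if freq_hz >= f_c:
        raise ValueError("frequency is not below cut-off; the mode propagates")
    # Evanescent decay constant in 1/m
    alpha = 2.0 * math.pi / C * math.sqrt(f_c**2 - freq_hz**2)
    # Convert the amplitude ratio exp(alpha * length) to decibels
    return 20.0 * math.log10(math.e) * alpha * length_m


if __name__ == "__main__":
    radius = 0.05    # assumed beam pipe radius: 5 cm
    f_cavity = 400e6  # assumed cavity frequency: 400 MHz
    length = 0.3     # assumed length of pipe considered: 30 cm
    print(f"TE11 cut-off: {te11_cutoff_hz(radius) / 1e9:.2f} GHz")
    print(f"attenuation:  {attenuation_db(f_cavity, radius, length):.0f} dB")
```

With these assumed numbers the cut-off lies at about 1.76 GHz, well above the cavity frequency, and the field is attenuated by more than 90 dB over the 30 cm of pipe.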

8.2.1 Parameters of a Cavity

If the metallic enclosure that forms the RF cavity were a perfect conductor and the cavity volume did not contain any lossy material, there would exist solutions to Maxwell’s equations with non-vanishing fields even without any excitation. These so-called eigensolutions are also known as the cavity (oscillation) modes. Each mode is characterized by its (eigen-)frequency and its characteristic field distribution inside the cavity. If the cavity walls are made of a good rather than a perfect conductor, modes still exist and are useful to characterize the cavity, but their eigenfrequencies will become complex, describing damped oscillations, so each mode will be characterized by its frequency and its decay rate. If the field amplitudes of a mode decay as \( \propto {e}^{-\alpha t} \), the stored energy decays as \( \propto {e}^{-2\alpha t} \). The quality factor Q is defined as

\[ Q=\frac{\omega_n W}{-\frac{dW}{dt}}. \tag{8.15} \]

Here \( \omega_n \) denotes the eigenfrequency and W the stored energy. The expression −dW/dt in the denominator of Eq. (8.15) describes the power that is lost into the cavity walls (or any other loss mechanism); it is equally the power that will have to be fed into the cavity in order to keep the stored energy at a constant value W. It is clear that the larger Q, the smaller the power necessary to compensate for cavity losses. In other words, one can design the cavity to be operated at or near one of its eigenfrequencies (often the lowest order mode, which is the one with the lowest eigenfrequency) and thus make use of the high Q by using the resonance phenomenon that will lead to large fields.
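The link between decay rate, quality factor and the power needed to sustain the field can be illustrated with a few lines of arithmetic. If the field amplitudes decay as exp(−αt), the stored energy follows W(t) = W0 exp(−2αt), hence −dW/dt = 2αW and the definition of Q reduces to Q = ω_n/(2α). The sketch below uses this relation; the function names and all numerical values (a 400 MHz mode, 1 J of stored energy, and two Q values of the order typical for normal conducting and superconducting cavities) are assumptions made for illustration.

```python
import math


def quality_factor(omega_n, alpha):
    """Q = omega_n * W / (-dW/dt) for W ~ exp(-2*alpha*t), i.e. omega_n / (2*alpha)."""
    return omega_n / (2.0 * alpha)


def power_to_sustain(omega_n, stored_energy_j, q):
    """Power in W that must be fed in to keep the stored energy constant."""
    return omega_n * stored_energy_j / q


def field_decay_time(omega_n, q):
    """Time constant 1/alpha of the field amplitude decay, in seconds."""
    return 2.0 * q / omega_n


if __name__ == "__main__":
    omega = 2.0 * math.pi * 400e6  # assumed eigenfrequency: 400 MHz
    stored = 1.0                   # assumed stored energy: 1 J
    for q in (3e4, 1e9):           # assumed, illustrative quality factors
        p = power_to_sustain(omega, stored, q)
        tau = field_decay_time(omega, q)
        print(f"Q = {q:.0e}: power = {p:.3g} W, field decay time = {tau:.3g} s")
```

The two cases differ only in Q, so the power needed to hold the same stored energy scales inversely with Q, while the field decay time scales proportionally to it.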

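We define the “accelerating voltage” of a cavity (or more precisely of one cavity oscillation mode) as the integrated change of the kinetic energy of a traversing particle divided by its charge:

\[ {V}_{acc}=\frac{1}{q}\int q\left(\boldsymbol{E}+\boldsymbol{v}\times \boldsymbol{B}\right)\cdot d\boldsymbol{s}, \tag{8.16} \]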

where ds denotes integration along the particle trajectory, taking the fields at the actual position of the particle at the time of passage. With the fields varying at a single frequency ω and particles moving with the speed of light in the direction z, this expression simplifies to

\[ {\underset{\_}{V}}_{acc}={\int}_{-\infty}^{\infty }{\underset{\_}{E}}_z\left(x,y,z\right){e}^{j\frac{\omega }{c}z}\, dz. \tag{8.17} \]

The underscore now denotes that we understand the field as the complex amplitude of the field of the cavity oscillation mode, with the real fields oscillating as \( \operatorname{Re}\left\{\underset{\_}{\boldsymbol{E}}\left(x,y,z\right){e}^{j\omega t}\right\} \) in time. The exponential accounts for the movement of the particle with speed c through the cavity while the fields continue to oscillate. It is clear that the expression (8.17) is generally complex; the phase angle accounts for the phase difference between the RF field and the bunches of the passing beam; the complex amplitude is generally referred to as accelerating voltage. Since the energy W stored in the cavity is proportional to the square of the field (and thus the square of the accelerating voltage), it can be used to conveniently normalize the accelerating voltage; this leads to the definition of the quantity R-upon-Q:

\[ \frac{R}{Q}=\frac{{\left|{\underset{\_}{V}}_{acc}\right|}^2}{2{\omega}_nW}. \tag{8.18} \]

The R-upon-Q thus simply quantifies how effectively the cavity converts stored energy into acceleration. Note that R-upon-Q is uniquely determined by the geometry of the cavity and not by the loss mechanism that leads to a finite Q. Multiplying the R-upon-Q with the quality factor Q, one obtains the shunt impedance R, which describes how effectively the cavity converts the input power P = −dW/dt into accelerating voltage, as long as beam loading can be neglected:

\[ R=\frac{R}{Q}\,Q=\frac{{\left|{\underset{\_}{V}}_{acc}\right|}^2}{2P}. \tag{8.19} \]

Following this line of thought, the R-upon-Q may be considered a fundamental quantity and the shunt impedance R a derived quantity, in spite of the names that suggest otherwise. Note that there are a number of different definitions for these quantities in the technical literature. The definition given here is often used for synchrotrons, while in the definition used for linacs (Eq. 7.7), the factor 2 on the right hand side of Eqs. (8.18) and (8.19) is missing (“Linac-Ohms”).
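A short numerical sketch may help to keep the two conventions apart. It assumes that the factor 2 of the synchrotron convention sits in the denominator, i.e. R/Q = |V_acc|²/(2ω_n W) and R = |V_acc|²/(2P), so that the same cavity expressed in “Linac-Ohms” has twice the numerical value. The function names and the example values (R/Q = 100 Ω, Q = 3·10⁴, 1 MV, 400 MHz) are hypothetical and serve only as an illustration.

```python
import math


def r_upon_q(v_acc, omega_n, stored_energy_j):
    """R/Q in ohms, synchrotron convention (factor 2 in the denominator)."""
    return abs(v_acc) ** 2 / (2.0 * omega_n * stored_energy_j)


def shunt_impedance(r_over_q, q):
    """Shunt impedance R in ohms as the product of R/Q and Q."""
    return r_over_q * q


def power_for_voltage(v_acc, r_shunt):
    """Input power in W for a given |V_acc|, beam loading neglected."""
    return abs(v_acc) ** 2 / (2.0 * r_shunt)


def to_linac_ohms(r_sync):
    """Same cavity in the linac convention, which omits the factor 2."""
    return 2.0 * r_sync


if __name__ == "__main__":
    r_q = 100.0   # assumed R/Q in ohms
    q = 3e4       # assumed quality factor
    v = 1e6       # assumed accelerating voltage: 1 MV
    omega = 2.0 * math.pi * 400e6  # assumed eigenfrequency: 400 MHz

    r = shunt_impedance(r_q, q)
    stored = v**2 / (2.0 * omega * r_q)  # stored energy implied by R/Q
    print(f"R = {r / 1e6:.1f} MOhm ({to_linac_ohms(r) / 1e6:.1f} MOhm in linac convention)")
    print(f"power for 1 MV: {power_for_voltage(v, r) / 1e3:.1f} kW")
    print(f"stored energy:  {stored:.2f} J")
```

Because v_acc enters only through its magnitude, the functions also accept a complex accelerating voltage.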