Abstract

In this article, we propose an analysis of polysemy and coercion phenomena using a syntax-semantics interface which combines Lexicalized Tree Adjoining Grammar with frame semantics and Hybrid Logic. We show that this framework allows a straightforward and explicit description of selectional mechanisms as well as coercion processes. We illustrate our approach by applying it to examples discussed in Generative Lexicon Theory [23, 25]. This includes the modeling of dot objects and associated coercion phenomena in our framework, as well as cases of functional coercion triggered by transitive verbs and adjectives.

This work was supported by the CRC 991 “The Structure of Representations in Language, Cognition, and Science” funded by the German Research Foundation (DFG). The first author was financially supported during his stay in Düsseldorf by ENS Cachan, Université Paris-Saclay. We would like to thank the three anonymous reviewers for their helpful comments.


Keywords

- Systematic polysemy

- Coercion

- Lexical semantics

- Frame semantics

- Hybrid logic

- Lexicalized tree adjoining grammars

- Hole semantics

- Underspecification

- Syntax-semantics interface

- Generative lexicon theory

1 Introduction

Any compositional model of the syntax-semantics interface has to cope with polysemy and coercion phenomena. Well-known examples of inherent systematic polysemy are the varying sortal characteristics of physical carriers of information such as book: books can be bought, read, understood, put away, and remembered, and thus can refer to physical objects or abstract, informational entities, depending on the context of use. The question is then how to represent such potential meaning shifts in the lexicon and how to integrate the respective meaning components compositionally within the given syntagmatic environment. A different but related phenomenon is selectional polysemy [25], where an apparent selectional mismatch is resolved by coercion mechanisms that go beyond referential shifts provided by lexical polysemy. Examples are given by expressions like Mary began the book and John left the party, where the aspectual verb begin selects for an event argument (here, an activity with the book as an undergoer), and leave selects for an argument of type location.

There is a considerable body of work on the compositional treatment of polysemy and coercion. One important strand of research in this domain is the dot type and qualia structure approach as part of Generative Lexicon Theory developed by James Pustejovsky and his colleagues [6, 22, 23, 25, 26]. A more recent development in this direction is Type Composition Logic [3–5, 7], which introduces an elaborate system of complex types and rules for them. The approach presented in the following takes a model-oriented perspective in that it asks for the semantic structures in terms of semantic frames that underlie the phenomena in question. We propose a compositional framework in which syntactic operations formulated in Lexicalized Tree Adjoining Grammar drive the semantic composition. On the semantic side, we use underspecified Hybrid Logic formulas for specifying the associated semantic frames.

The rest of the paper is structured as follows: Sect. 2 introduces the general model of the syntax-semantics interface adopted in this paper. Its main components are a formal model of semantic frames, a slightly adapted version of Hybrid Logic for describing such frames, and a version of Lexicalized Tree Adjoining Grammar which combines elementary trees with underspecified Hybrid Logic formulas. Section 3 shows how this framework can be fruitfully employed for a detailed modeling of systematic polysemy and coercion phenomena. It is shown how dot objects can be represented in frame semantics and how various cases of argument selection and coercion can be formally described. Section 4 gives a brief summary and lists some topics of current and future research.

2 The Formal Framework

We follow [16] in adopting a framework for the syntax-semantics interface that pairs a Tree Adjoining Grammar (TAG) with semantic frames. More concretely, every elementary syntactic tree is paired with a frame description formulated in Hybrid Logic (HL) [2]. In the following, we briefly introduce this framework; see [14, 16] for more details.

2.1 Frames

Frames [8, 10, 18] are semantic graphs with labeled nodes and edges, as in Fig. 1, where nodes correspond to entities (individuals, events, ...) and edges to (functional or non-functional) relations between these entities. In Fig. 1 all relations except part-of are meant to be functional.

Frames can be formalized as extended typed feature structures [15, 21] and specified as models of a suitable logical language. In order to enable quantification over entities or events, [16] propose to use Hybrid Logic, an extension of modal logic.

Fig. 1. Frame for the meaning of the man walked to the house (adapted from [15])

2.2 Hybrid Logic and Semantic Frames

Before giving the formal definition of Hybrid Logic (HL) as used in this paper, let us illustrate its use for frames with some examples. Consider the frame in Fig. 1. The types in frames are propositions holding at single nodes; the formula \(\textit{motion}\), for instance, is true at the node \(n_0\) but false at all other nodes of our sample frame. Furthermore, we can talk about the existence of an attribute for a node. This corresponds to stating that there exists an outgoing edge at this node using the \(\Diamond \) modality in modal logic. In frames, there may be several relations, hence several modalities, denoted by \(\left\langle R \right\rangle \) with R the name of the relation. For example, \(\langle \textsc {agent}\rangle \textit{man}\) is true at the motion node \(n_0\) in our frame because there is an agent edge from \(n_0\) to some other node where \(\textit{man}\) holds. (Note that HL does not distinguish between functional and non-functional edge labels. That is, functionality has to be enforced by additional constraints.) Finally, we can have conjunction, disjunction, and negation of these formulas. E.g., \(\textit{motion} \wedge \langle \textsc {agent}\rangle \textit{man}\) is also true at the motion node \(n_0\).

HL extends this with the possibility to name nodes in order to refer to them, and with quantification over nodes. We use a set of nominals (unique node names) and a set of node variables. \(n_0\) is such a nominal; the node assigned to it is the motion node in our sample frame. x, y, ... are node variables. The truth of a formula is given with respect to a specific node w in a frame, an assignment V from nominals to nodes in the frame, and an assignment g which maps variables to nodes in the frame.

There are different ways to state existential quantifications in HL, namely \(\exists \phi \) and \(\exists x.\phi \). \(\exists \phi \) is true at w if there exists a node \(w'\) at which \(\phi \) holds. In other words, we move to some node \(w'\) in the frame and there \(\phi \) is true. For instance, \(\exists \,\textit{motion}\) is true at any node in our sample frame. As usual, we define \(\forall \phi \equiv \lnot \exists (\lnot \phi )\) and \(\phi \rightarrow \psi \equiv \lnot \phi \vee \psi \). In contrast to \(\exists \phi \), \(\exists x.\phi \) is true at w if there is a \(w'\) such that \(\phi \) is true at w under an assignment of x to \(w'\). In other words, there is a node that we name x but for the evaluation of \(\phi \), we do not move to that node. E.g., the formula \(\exists x.\langle \textsc {agent}\rangle (x \wedge \textit{man})\) is true at the motion node in our sample frame.

Besides quantification, HL also allows us to use nominals or variables to refer to nodes via the @ operator: \(@_n \phi \) specifies moving to the node w denoted by n before evaluating \(\phi \); n can be either a nominal or a variable. The \(\mathrel {\downarrow \!}\) operator allows us to assign the current node to a variable: \(\mathrel {\downarrow \!}x.\phi \) is true at w if \(\phi \) is true at w under the assignment \(g_w^x\). I.e., we call the current node x, and, under this assignment, \(\phi \) is true at that node. E.g., \(\mathrel {\downarrow \!}x.@_x \textit{motion}\) is true at the motion node in our frame.

To summarize this, our HL formulas have the following syntax: Let \(\mathsf {Rel}=\mathsf {Func}\cup \mathsf {PropRel}\) be a set of functional and non-functional relation symbols, \(\mathsf {Type}\) a set of type symbols, \(\mathsf {Nom}\) a set of nominals (node names), and \(\mathsf {Nvar}\) a set of node variables, with \(\mathsf {Node}=\mathsf {Nom}\cup \mathsf {Nvar}\). Formulas are defined as:

\[\mathsf {Forms} ::= \top \mid p \mid n \mid \lnot \phi \mid \phi _1 \wedge \phi _2 \mid \langle R \rangle \phi \mid \exists \phi \mid @_n \phi \mid \mathrel {\downarrow \!}x.\phi \mid \exists x.\phi \]

where \(p\in \mathsf {Type}\), \(n\in \mathsf {Node}\), \(x\in \mathsf {Nvar}\), \(R \in \mathsf {Rel}\) and \(\phi , \phi _1, \phi _2 \in \mathsf {Forms}\). For more details and the formal definition of satisfiability as explained above see [14, 16].
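The truth conditions described in this section can be made concrete with a small model checker. The following Python sketch is an illustration only and not part of the framework in [14, 16]: the tuple encoding of formulas, the `Frame` class and the function names `holds` and `resolve` are assumptions made here, and the two-node toy frame reproduces only what the text states about Fig. 1 (a motion node \(n_0\) with an agent edge to a man node).

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    types: dict                      # node -> set of type names true at that node
    edges: dict                      # (node, relation) -> set of target nodes
    nominals: dict = field(default_factory=dict)  # nominal -> node (assignment V)

    @property
    def nodes(self):
        return set(self.types)


def resolve(frame, name, g):
    """Look up a node name: variables (in g) take precedence over nominals."""
    return g[name] if name in g else frame.nominals[name]


def holds(frame, w, phi, g=None):
    """Truth of formula phi at node w under the variable assignment g."""
    g = g or {}
    op = phi[0]
    if op == "top":
        return True
    if op == "type":                 # p: a type holding at the current node
        return phi[1] in frame.types[w]
    if op == "node":                 # n: true exactly at the node named n
        return resolve(frame, phi[1], g) == w
    if op == "not":
        return not holds(frame, w, phi[1], g)
    if op == "and":
        return holds(frame, w, phi[1], g) and holds(frame, w, phi[2], g)
    if op == "dia":                  # <R>phi: some R-edge leads to a phi-node
        targets = frame.edges.get((w, phi[1]), set())
        return any(holds(frame, v, phi[2], g) for v in targets)
    if op == "somewhere":            # variable-free existential: move to some node
        return any(holds(frame, v, phi[1], g) for v in frame.nodes)
    if op == "at":                   # @_n phi: jump to the node named n
        return holds(frame, resolve(frame, phi[1], g), phi[2], g)
    if op == "bind":                 # down-arrow x.phi: name the current node x
        return holds(frame, w, phi[2], {**g, phi[1]: w})
    if op == "exists":               # exists x.phi: name some node x, stay at w
        return any(holds(frame, w, phi[2], {**g, phi[1]: v}) for v in frame.nodes)
    raise ValueError(f"unknown operator: {op}")


# Toy frame (assumption): only the motion node n0 and its agent edge to a man node.
frame = Frame(
    types={"n0": {"motion"}, "m": {"man"}},
    edges={("n0", "agent"): {"m"}},
    nominals={"n0": "n0"},
)

agent_man = ("dia", "agent", ("type", "man"))
print(holds(frame, "n0", agent_man))
print(holds(frame, "m", ("somewhere", ("type", "motion"))))
print(holds(frame, "n0", ("bind", "x", ("at", "x", ("type", "motion")))))
```

The two quantifiers differ only in where the body is evaluated: `"somewhere"` moves to the chosen node, whereas `"exists"` merely extends the assignment and stays at the current node. All three calls print `True` on this toy frame.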

2.3 LTAG and Hybrid Logic

A Lexicalized Tree Adjoining Grammar (LTAG; [1, 12]) consists of a finite set of elementary trees. Larger trees are derived via substitution (replacing a leaf with a tree) and adjunction (replacing an internal node with a tree). An adjoining tree has a unique foot node (marked with an asterisk), which is a non-terminal leaf labeled with the same category as the root of the tree. When adjoining such a tree to some node n of another tree, in the resulting tree, the subtree with root n from the original tree is attached at the foot node of the adjoining tree.
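The two tree-combining operations can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Node` class, the function names, and the example trees for John, pizza and quickly are hypothetical (only the verb ate appears in the text), and feature structures are left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str
    children: list = field(default_factory=list)
    subst: bool = False   # substitution slot (non-terminal leaf)
    foot: bool = False    # foot node of an adjoining tree


def substitute(tree, initial):
    """Copy of tree with its leftmost matching substitution slot replaced by initial."""
    done = False

    def walk(n):
        nonlocal done
        if not done and n.subst and n.label == initial.label:
            done = True
            return initial
        return Node(n.label, [walk(c) for c in n.children], n.subst, n.foot)

    return walk(tree)


def plug_foot(aux, subtree):
    """Copy of the adjoining tree aux with subtree attached at its foot node."""
    if aux.foot:
        return subtree
    return Node(aux.label, [plug_foot(c, subtree) for c in aux.children],
                aux.subst, aux.foot)


def adjoin(tree, path, aux):
    """Adjoin aux at the node reached from the root via the child indices in path."""
    if not path:
        if tree.label != aux.label:
            raise ValueError("category mismatch at adjunction site")
        return plug_foot(aux, tree)
    kids = list(tree.children)
    kids[path[0]] = adjoin(kids[path[0]], path[1:], aux)
    return Node(tree.label, kids, tree.subst, tree.foot)


def leaves(n):
    """Left-to-right sequence of leaf labels (the yield of the tree)."""
    if not n.children:
        return [n.label]
    return [w for c in n.children for w in leaves(c)]


# Hypothetical elementary trees; only the verb "ate" comes from the text.
ate = Node("S", [Node("NP", subst=True),
                 Node("VP", [Node("V", [Node("ate")]), Node("NP", subst=True)])])
john = Node("NP", [Node("John")])
pizza = Node("NP", [Node("pizza")])
quickly = Node("VP", [Node("Adv", [Node("quickly")]), Node("VP", foot=True)])

derived = substitute(substitute(ate, john), pizza)
print(leaves(derived))
print(leaves(adjoin(derived, [1], quickly)))
```

Adjunction at the VP node (child index 1 of the root) wraps the adjoining tree around the original VP subtree, so the original subtree reappears below the foot node, as described above.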

The non-terminal nodes in LTAG are usually enriched with feature structures [27]. More concretely, each node has a top and a bottom feature structure (except substitution nodes, which have only a top). Nodes in the same elementary tree can share features. Substitutions and adjunctions trigger unifications in the following way: In a substitution step, the top of the root of the new tree unifies with the top of the substitution node. In an adjunction step, the top of the root of the adjoining tree unifies with the top of the adjunction site and the bottom of the foot of the adjoining tree unifies with the bottom of the adjunction site. Furthermore, in the final derived tree, top and bottom must unify in all nodes.

Our framework for the syntax-semantics interface follows previous LTAG semantics approaches in pairing each elementary tree with a semantic representation that consists of a set of HL formulas, which can contain holes and which can be labeled. In other words, we apply hole semantics [9] to HL and link these underspecified formulas to the elementary trees. Composition is then triggered by the syntactic unifications arising from substitution and adjunction, using interface features on the syntactic trees, very similar to [11, 13, 17].

As a basic example consider the derivation given in Fig. 2 where the two NP trees are substituted into the two argument slots in the ate tree. The interface features i on the NP nodes make sure that the contributions of the two arguments feed into the agent and theme nodes of the frame. Furthermore, an interface feature mins is used for providing the label of the formula contributed by ate as minimal scope to a possible quantifier. The feature unifications on the syntactic tree lead to identities between the interface variables of the elementary trees. As a result, when collecting all formulas, we obtain the underspecified representation in (2).

The relation \(\lhd ^*\) links holes to labels: \(h \lhd ^* l\) signifies that the formula labeled l is a subformula of h or, to put it differently, is contained in h. In (2), the formula contributed by ate, labeled \(l_1\), has to be part of the nuclear scope of the quantifier, which is given by a hole. Disambiguating such underspecified representations consists of “plugging” the labeled formulas into the holes while respecting the given constraints. Such a plugging amounts to finding an appropriate bijection from holes to labels. (2) has a unique disambiguation. This leads to (3), which is then interpreted conjunctively.
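The search for pluggings can be illustrated by brute force. The following Python sketch is a hypothetical example and does not reproduce representation (2): the labels, the holes and the two constraints are assumptions chosen to mirror the description above (a verb formula `l1`, a quantifier `l2` with a restriction hole `h1` and a nuclear-scope hole `h2`, a noun formula `l3`, and a top hole `h0`).

```python
from itertools import permutations


def dominated(plugging, holes_in, hole):
    """Labels that end up inside `hole` once the plugging is applied."""
    label = plugging[hole]
    found = {label}
    for inner in holes_in[label]:
        found |= dominated(plugging, holes_in, inner)
    return found


def pluggings(top, holes_in, constraints):
    """All bijections from holes to labels that satisfy every h <|* l constraint."""
    labels = sorted(holes_in)
    holes = [top] + [h for label in labels for h in holes_in[label]]
    if len(holes) != len(labels):
        return []
    solutions = []
    for perm in permutations(labels):
        plugging = dict(zip(holes, perm))
        # Every label must be reachable from the top hole (one connected formula).
        if dominated(plugging, holes_in, top) != set(labels):
            continue
        if all(l in dominated(plugging, holes_in, h) for h, l in constraints):
            solutions.append(plugging)
    return solutions


# Hypothetical underspecified representation: label -> holes occurring in its formula.
holes_in = {"l1": [], "l2": ["h1", "h2"], "l3": []}
# Noun formula inside the restriction, verb formula inside the nuclear scope.
constraints = [("h1", "l3"), ("h2", "l1")]

print(pluggings("h0", holes_in, constraints))
```

With both constraints the sketch finds exactly one plugging; dropping them leaves two, which shows how the \(\lhd ^*\) constraints narrow down the readings. Enumerating all permutations is exponential and only meant for tiny examples like this one.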

3 Application to Coercion

3.1 Dot Objects in Frame Semantics

In order to capture the full complexity of concepts while modeling them, we need a way to represent the phenomenon of inherent polysemy, that is, the phenomenon that certain concepts integrate two or more different and apparently contradictory senses. Consider for instance the following two sentences:

Both sentences use book in the common way, but while in (4a) the adjective heavy applies to a physical object, the adjective interesting in (4b) requires its object to be of type information. It thus appears that book carries two different aspects, which are arguably incompatible. However, this contradiction reveals an underlying structure in which these aspects are linked to each other. This structure appears in Pustejovsky’s work [23] under the name of dot object. Following this approach, our frame definition of book encodes the lexical structure proposed by Pustejovsky by taking two nodes with types information and phys-obj respectively, to represent both aspects, and defining an explicit relation between them, which is quite similar to what Pustejovsky calls the formal component of the concept. In the traditional definition of frames, one node should be marked as the referential, or central one, the others being connected to it by functional edges (see e.g. [21]). The necessity of fixing a referent for sense determination was also proposed in [19]. We have therefore chosen to take the physical aspect of book as the referential node; and since its two aspects are linked by the “has information content” relation, we define a content attribute to connect the physical object to the information it carries (see Note 1). We thus get the following formula to express the semantics for book:

To ensure that the type book is permitted where a phys-obj is required, we assume general constraints which, among other things, express that books are entities of type phys-obj. Furthermore, we introduce a type info-carrier for information carrying physical objects, and therefore build our constraints in two steps:

The purpose of the type info-carrier is to provide a stage between specific types like book and more general ones like phys-obj, to which other concepts can be linked. For instance, a complex word like newspaper should have a type which implies the type info-carrier [6, 20, 24]. Note that we can easily deduce the following constraint from (6a) and (6b):

This constraint will be very useful to simplify formulas where the type book is involved.
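The deduction of the combined constraint from the two-step constraints can be sketched as a simple closure computation. The displayed constraints are not reproduced here, so the encoding below is an assumption based on the prose: book implies info-carrier, and info-carrier implies phys-obj together with a content attribute whose value is of type information. The names `CONSTRAINTS` and `entailed` are hypothetical.

```python
# Assumed reading of the two-step constraints:
# type -> list of (attribute path, type) consequences.
CONSTRAINTS = {
    "book": [((), "info-carrier")],
    "info-carrier": [((), "phys-obj"), (("content",), "information")],
}


def entailed(type_name, constraints):
    """All (attribute path, type) facts that follow for a node of the given type."""
    facts = {((), type_name)}
    agenda = [((), type_name)]
    while agenda:
        path, t = agenda.pop()
        for rel_path, consequence in constraints.get(t, []):
            fact = (path + rel_path, consequence)
            if fact not in facts:
                facts.add(fact)
                agenda.append(fact)
    return facts


for path, t in sorted(entailed("book", CONSTRAINTS)):
    print("/".join(path) or ".", t)
```

Under this reading, a node of type book is also of type phys-obj and has a content value of type information, which is the kind of shortcut the deduced constraint provides.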

3.2 Coercion, Selection and Dot Objects

Let us start with the case of read, which has been described in [23]. The verb read allows for the direct selection of the dot object book as complement, as illustrated in (8a), but also enables coercion of its complement from type information in (8b) as well as from type phys-obj in (8c). The distinction between all these concepts can be explained as follows: although books and stories are informational in nature, a story does not need a physical realisation, whereas a book does, and although books and blackboards are physical objects, a blackboard does not necessarily contain information. The constraints for the associated types are defined in (9).

The semantics for read has to encode the direct selection of a dot object as a complement. In [23], the verb read is analysed with two distinct events linked by a complex relation expressing the fact that the reader first sees the object before reaching its informational content. We want to keep a similar analysis here; we build our semantic definition of read by taking an event node of type reading with two attributes, namely perceptual-component and mental-component, whose values are respectively of type perception and comprehension (see Note 2). These nodes are meant to represent the decomposition of the activity of reading into two subevents, the action of looking at a physical object (the perception) and the action of processing the provided information (the comprehension). These two events are linked by a non-functional temporal relation inspired by the one proposed by Pustejovsky: we call it ordered-overlap, and it expresses the fact that the perception starts before the comprehension and that these two subevents (typically) overlap. For the sake of simplicity, we encode the central part of this semantics into the definition of reading with the following constraint:

Moreover, the perception node has an attribute stimulus describing the role of its object, which has to be of type phys-obj, and the comprehension node has an attribute content which refers to the information that was read. We also explicitly add in our semantics the requirement that the value of stimulus has a content attribute whose value is the same as that of the content attribute of the comprehension node. Furthermore, since the argument contributed by the object can be either the stimulus of the perception (phys-obj) or its content, we add a disjunction of these two possibilities. We therefore obtain the formula represented in Fig. 3, with the associated elementary tree (see Note 3). In this formula, the interface variable is intended to unify with a node variable when the direct object gets inserted (i.e., it is provided as value of the feature i in the object node of the associated lexicalized tree for read), and the process of rewriting and simplifying the final formula will allow us to identify either x or y with the variable of the direct object, depending on whether this is of type phys-obj or information.

We can now use this elementary tree-frame pair to achieve a derivation for (8a), which is represented in Fig. 4. The HL formula coming with read is now labeled and its label is provided as potential minimal scope for quantifiers at the NP slots. Concerning the entry of the, we simplify here and treat it as an existential quantifier, disregarding the presuppositions it carries. The book formula is also labeled, and the label is made available via an interface feature p (for “proposition”) (see Note 4). Due to the two scope constraints, this proposition will be part of the restriction of the quantifier (i.e., part of the subformula at the restriction hole) while the read formula will be part of the nuclear scope, i.e., part of the subformula at the nuclear-scope hole. Substitutions and adjunctions lead to unifications on the interface features. As a result, we obtain the underspecified representation in (11).

The representation in (11) has only one disambiguation, which leads to (12):

Furthermore, due to the constraint (7) and due to the incompatibility of information and phys-obj (9c), we can deduce that \(z\leftrightarrow x\) and \(\lnot (z\leftrightarrow y)\). Consequently, we can simplify our formulas by omitting the \(\exists x\) quantification and replacing every x with z. Putting these things together leads to the representation (13):

The frame shown in Fig. 5 is a minimal model for (13) which also takes (10) into account, i.e., it is the smallest frame graph satisfying (13) and (10).

The semantic representations of (8b) and (8c) can be derived in a similar way, except that for (8b), the variable z introduced by the quantifier will be equivalent to the information variable y in the contribution of read. The interesting point in these cases is that the final semantic formula involves a node which reflects respectively that there is an implicit material on which the story is written (8b) and that implicit contents are written on the blackboard (8c). The analysis of (8a) differs from that of (8c) in that the semantics of book always brings a content attribute of type information, which is merged with the constraints contributed by the semantics of read. In (8c), by contrast, the content attribute of the blackboard is contributed solely by the verb.
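The resolution of the disjunction contributed by read can be sketched as follows. This Python fragment is an illustration under assumptions, not the formal derivation: the node names e, p and c, the dictionaries and the function names are hypothetical, the subtype facts are read off the prose (book is an info-carrier and hence a phys-obj, story is information, blackboard is a phys-obj), and the non-functional ordered-overlap relation is left out.

```python
SUPERTYPES = {
    "book": {"info-carrier"},
    "info-carrier": {"phys-obj"},
    "story": {"information"},
    "blackboard": {"phys-obj"},
}
INCOMPATIBLE = [{"phys-obj", "information"}]


def closure(t):
    """The type t together with all its supertypes."""
    seen, agenda = {t}, [t]
    while agenda:
        for s in SUPERTYPES.get(agenda.pop(), ()):
            if s not in seen:
                seen.add(s)
                agenda.append(s)
    return seen


def consistent(types):
    """A set of types is consistent if it contains no incompatible pair."""
    return not any(pair <= types for pair in INCOMPATIBLE)


def read_frame(noun):
    """Frame for 'read the <noun>': the object node merges with x or with y."""
    types = {"e": {"reading"}, "p": {"perception"}, "c": {"comprehension"},
             "x": {"phys-obj"}, "y": {"information"}}
    edges = {("e", "perc-comp"): "p", ("e", "ment-comp"): "c",
             ("p", "stimulus"): "x", ("c", "content"): "y",
             ("x", "content"): "y"}
    noun_types = closure(noun)
    # The object variable is identified with whichever of x, y is type-compatible.
    target = next(v for v in ("x", "y") if consistent(types[v] | noun_types))
    types[target] = types[target] | noun_types
    return target, types, edges


for noun in ("book", "story", "blackboard"):
    target, types, _ = read_frame(noun)
    print(noun, "->", target, sorted(types["x"]), sorted(types["y"]))
```

For story the object merges with y, so x remains an unspecified physical carrier; for blackboard it merges with x, and y remains an unspecified content node contributed by the verb alone.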

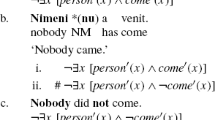

It is also worth asking how to handle cases where the verb does not select a dot object as for read, but rather a simple type. Indeed, although the dot object book has the properties of physical objects and of information, there are some verbs which do not allow book as a complement but select a pure informational argument. These verbs actually provide no possibilities of coercion: their argument has to be of the specified type to allow a direct selection. This kind of selection is referred to as passive selection, in opposition to the active selection which enables coercion and type accommodation [23]. To understand this phenomenon, consider the following sentences (those in (15) are taken from [23]):

The verbs believe and tell both require their argument to be of type information; however, the verb believe accepts the dot object book as its argument whereas tell does not: the sentence (15b) seems to be incorrect. Thus the examples in (14) illustrate a case of active selection, with a coercion of the complement in (14b), and those in (15) show a case of passive selection.

With our semantics for read, the way to build the semantics for these two verbs is quite straightforward. In comparison to read, we only need in each case a single node to represent the activity, respectively of believing and of telling. But the really interesting point is about the selection of the variable provided by the semantics of the argument. In the case of read, we had a disjunctive subformula whose interface variable was to be unified with the variable contributed by the direct object, regardless of its type. For believe, we need a similar subformula that allows for the object variable to be either of type information, or to have a content attribute with a value of type information; cf. (16a). For tell, however, the object variable has to be unified directly with the theme of telling, which is of type information; cf. (16b).

In this way, active and passive selections differ in that in the case of active selection, we have an additional subformula that handles coercion possibilities (see Note 5).

3.3 Other Cases of Coercion

Coercion is not limited to dot objects: it can occur for many other concepts with a simple type. We will discuss here a few more examples of coercion, and present ways to handle them within our framework. We will thus show that many different cases of coercion can be solved in similar ways. We start here with a sentence taken from [25]:

The verb leave requires its object to be of type location while in (17), the noun party is provided, which is of type event and does not carry a dot type. Here, the coercion relies on the fact that party, like every event, has an associated location, which is basically where the party takes place. The application of leave to the party therefore involves a transfer of meaning from the direct sense to a related one. This phenomenon is referred to as attribute functional coercion [25, 26] because it operates on concepts which can serve as types as well as attributes.

Our framework is capable of handling such cases without problems. Indeed, the basis of frame semantics is to work with attribute-value descriptions, and the coercion which occurs here shifts from one sense to another by following an attribute to get to the required concept type. Hence we naturally define a type location and an attribute location to represent the dual nature of the concept of location. As previously for book, we need to assume the general constraints in (18) to link party with these new elements:

It remains to define the semantics for leave in such a way that it enables coercion to the value of the attribute location when the given argument is not of the required type. This can be done in a similar way to what we did in the case of believe in (16a), leading to the formula in Fig. 6, where the interface variable is intended to be unified with the node variable from the direct object argument. By following the steps described in Sect. 3.2, we can easily produce a derivation for (17). As a starting point, we consider the yield of the syntactic unifications and the mapping of holes to formulas, which gives the following result:

With the constraints in (18), we can conclude that \(\lnot (z\leftrightarrow x)\) and consequently, we obtain the following semantics for (17):

There is only a slight difference between functional coercion of the kind just described and the treatment of dot objects shown before: frame semantics allows us to process both types of coercion phenomena in a similar way because of the underlying attribute-value structure. A further example is given by the dot object speech, which combines the types event and information [25]. Speech has the two attributes content and location, among others. More precisely, the dot type speech is characterized by the constraint in (21a), which, together with (18b) repeated here as (21b) gives rise to the constraint in (21c). Note that the latter constraint makes no difference between the two attributes – although they have different “levels” of origin, as content is a direct consequence of speech whereas location is implied by the type event, which is entailed by speech.

Two further examples, adapted from [6], are considered in (22) below. Their purpose is to show how adjectival modification which enables coercion can be handled in our framework.

In (22a), both heavy and on magic act as modifiers of book, but the former modifier acts on the phys-obj component of the dot object while the latter modifier acts on the information component. In (22b), on the other hand, the adjective readable coerces screen from the simple type phys-obj to a dot type, with a new informational component.

Let us start with the sentence in (22a). The most interesting parts of its derivation are represented in Fig. 7. We define the semantics of heavy by assuming that it directly selects a physical object (thus deliberately setting any other meaning aside). The semantics of magic is simply regarded as sortal for the purposes of the present example. As for on, its semantic representation includes a disjunction to allow for the identification with a node of the required type, using a similar technique as in the representation of believe above (see Note 6). Moreover, we introduce a type knowledge, which is intended to be a subtype of information, and which has a topic attribute describing what field the knowledge is about. That is, we have the constraint in (23).

The substitutions and adjunctions in Fig. 7 trigger unifications of the interface variables, which lead to the HL formula in (24):

Formula (24) can be simplified due to the fact that book and knowledge are incompatible; therefore the first element of the disjunction \(x \vee \langle \textsc {content}\rangle x\) (which is evaluated in the book node) cannot be true. Consequently, we reduce the disjunction to \(\langle \textsc {content}\rangle x\).

It is also worth noticing that the verb master seems to require an object of type knowledge and not merely information. Indeed, the use of this verb with another subtype of information as in sentence (25b) seems unacceptable, while (25a) involving a pure knowledge concept is fully acceptable. The sentence in (25c) shows that master is able to coerce at least certain types of arguments.

The selectional mechanism is therefore more complex for this verb. Nevertheless, as the type knowledge provided by on magic overwrites the information value in the relevant example (22a), book has already a coerced type for its content in this case, which allows us to leave a more general analysis of master for future work and to assume for now the same behavior for this verb as for believe. A derivation for the sentence in (22a) leads thus, after unification and simplification, to the following semantic representation:

The case in (22b) is very similar to the coercion of blackboard to a dot object by read. The semantics of the adjective readable does nothing more than add a content attribute with an information value to a physical object. This translates into the logical formula in (27a). Moreover, screen is considered as a subtype of phys-obj, and we assume here a simple semantics for break, given in (27b). Finally, (27c) recalls the semantics for every (see Note 7).

The derivation for (22b) therefore leads to:

The foregoing examples have shown that our formal framework allows us to solve a large variety of coercion problems in similar ways, building on constraint-based semantic representations combining frames and HL.

4 Conclusion

In this paper, we presented a model of coercion mechanisms for the case of verbs and adjectives which select nominal arguments within a syntax-semantics interface based on frame semantics using LTAG and HL. We also provided a frame-semantic representation of Pustejovsky’s dot objects which keeps the notion of referential meaning and explicitly includes the relations between the different aspects of a concept. Frame semantics is well-suited to handle such mechanisms since type shifting can simply be modeled by moving along an attribute relation from a given meaning to the coerced one. Furthermore, the approach with HL and hole semantics in the composition process allows us to implement precisely the argument selection mechanisms into the model, using a disjunction of type shifting possibilities in the logical representation of a predicate.

Another interesting feature of this model is that it handles different cases of coercion in similar ways, thus avoiding the need for more complex structures when polysemous concepts are involved. We also think that coercion phenomena in sentences like Mary began the book, in which aspectual verbs with a nominal argument are involved, could be modeled using the same kind of representation. Indeed, in Pustejovsky’s analysis the underspecified information is encoded in the lexicon by a qualia structure, where qualia are partial functions describing the roles that a concept can have [23]. It therefore seems possible to represent these qualia by attribute-value pairs, and modeling this kind of coercion could then follow the approach presented in this paper. Moreover, the general constraints in HL that are used in our framework could be extended by contextual constraints as well: we would be able to change the intended qualia of a word depending on the context, and also to handle cases of metaphoric readings by adding some temporary constraints if the previous selection mechanism fails.
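To make the suggestion about qualia slightly more concrete, the following sketch encodes two qualia of book as attribute-value pairs and lets a context-dependent preference order decide which one supplies the event argument. The attribute names telic and agentive follow Generative Lexicon terminology; the dictionary encoding, the activity values, and the function `coerce_to_event` are assumptions made for this illustration and do not correspond to an analysis worked out in this paper.

```python
# Hypothetical frame for "book" with two qualia encoded as attributes.
BOOK = {
    "type": "phys-obj",
    "telic": {"type": "event", "activity": "read"},
    "agentive": {"type": "event", "activity": "write"},
}


def coerce_to_event(frame, quale_order=("telic", "agentive")):
    """Return the first quale (in the given order) whose value is an event.

    quale_order stands in for contextual constraints: changing the
    order changes which reading is selected.
    """
    for quale in quale_order:
        value = frame.get(quale)
        if isinstance(value, dict) and value.get("type") == "event":
            return quale, value
    return None


default_reading = coerce_to_event(BOOK)
author_context = coerce_to_event(BOOK, quale_order=("agentive", "telic"))
no_event = coerce_to_event({"type": "phys-obj"})
```

With the default order the sketch selects the reading activity for an expression like Mary began the book, whereas a context that prefers the agentive quale yields the writing activity.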

Notes

- 1.

One of the reviewers raised the question on what grounds phys-obj is preferred over information as the primary lexical meaning facet of book and, more importantly, of how to decide this question for related terms like novel and for dot types in general. We regard this as an empirical issue which falls ultimately into the realm of psycholinguistic research. As a first approximation, we tend to rely on the information provided by monolingual dictionaries. For instance, the Longman Dictionary of Contemporary English tells us that a book is “a set of printed pages that are held together in a cover”.

- 2.

In the following, we will abbreviate these attributes by perc-comp and ment-comp, respectively.

- 3.

The constraint (10) should be applied here, but for reasons of space, we do not list all the conjuncts contributed by it.

- 4.

Note that in Fig. 4, we have already applied (6).

- 5.

As pointed out by one of the reviewers, having the attribute content in the disjunction in (16a) imposes specific constraints on the semantic structure of the argument. We leave it as a question for future research whether constraints of this type are overly restrictive when moving from selected examples to large-scale applications.

- 6.

The given semantic representation for on is considerably simplified. A more precise representation should include a selection between two effects depending on the type of the argument of on (unified with the corresponding variable in Fig. 7), as the preposition can also occur in phrases like the book on the table, where a physical object is involved: in this case, a more elaborate subformula with a location attribute would replace the corresponding subformula.

- 7.

Lack of space prevents us from showing the associated elementary syntactic trees.

References

Abeillé, A., Rambow, O.: Tree adjoining grammar: an overview. In: Abeillé, A., Rambow, O. (eds.) Tree Adjoining Grammars: Formalisms, Linguistic Analysis and Processing, pp. 1–68. CSLI Press, Stanford (2000)

Areces, C., ten Cate, B.: Hybrid logics. In: Blackburn, P., Benthem, J.V., Wolter, F. (eds.) Handbook of Modal Logic, pp. 821–868. Elsevier, Amsterdam (2007)

Asher, N.: A Web of Words: Lexical Meaning in Context. Cambridge University Press, Cambridge (2011)

Asher, N.: Types, meanings and coercions in lexical semantics. Lingua 157, 66–82 (2015)

Asher, N., Luo, Z.: Formalization of coercions in lexical semantics. In: Proceedings of Sinn und Bedeutung, vol. 17, pp. 63–80 (2012)

Asher, N., Pustejovsky, J.: Word meaning and commonsense metaphysics. Semant. Arch. (2005). http://semanticsarchive.net/

Asher, N., Pustejovsky, J.: A type composition logic for generative lexicon. J. Cogn. Sci. 6, 1–38 (2006)

Barsalou, L.W.: Frames, concepts, and conceptual fields. In: Lehrer, A., Kittay, E.F. (eds.) Frames, Fields, and Contrasts, pp. 21–74. Lawrence Erlbaum, Mahwah (1992)

Bos, J.: Predicate logic unplugged. In: Dekker, P., Stokhof, M. (eds.) Proceedings of the 10th Amsterdam Colloquium, pp. 133–142 (1995)

Fillmore, C.J.: Frame semantics. In: Linguistics in the Morning Calm, pp. 111–137. Hanshin Publishing Co., Seoul (1982)

Gardent, C., Kallmeyer, L.: Semantic construction in feature-based TAG. In: Proceedings of the 10th Meeting of the European Chapter of the Association for Computational Linguistics (EACL), pp. 123–130 (2003)

Joshi, A.K., Schabes, Y.: Tree-adjoining grammars. In: Rozenberg, G., Salomaa, A.K. (eds.) Handbook of Formal Languages, vol. 3, pp. 69–123. Springer, Heidelberg (1997)

Kallmeyer, L., Joshi, A.K.: Factoring predicate argument and scope semantics: underspecified semantics with LTAG. Res. Lang. Comput. 1(1–2), 3–58 (2003)

Kallmeyer, L., Lichte, T., Osswald, R., Pogodalla, S., Wurm, C.: Quantification in frame semantics with hybrid logic. In: Cooper, R., Retoré, C. (eds.) Proceedings of the ESSLLI 2015 Workshop on Type Theory and Lexical Semantics, Barcelona, Spain (2015)

Kallmeyer, L., Osswald, R.: Syntax-driven semantic frame composition in lexicalized tree adjoining grammars. J. Lang. Model. 1(2), 267–330 (2013)

Kallmeyer, L., Osswald, R., Pogodalla, S.: Progression and iteration in event semantics - an LTAG analysis using hybrid logic and frame semantics. In: Piñón, C. (ed.) Empirical Issues in Syntax and Semantics, vol. 11 (2016)

Kallmeyer, L., Romero, M.: Scope and situation binding in LTAG using semantic unification. Res. Lang. Comput. 6(1), 3–52 (2008)

Löbner, S.: Evidence for frames from human language. In: Gamerschlag, T., Gerland, D., Osswald, R., Petersen, W. (eds.) Frames and Concept Types. Applications in Language and Philosophy, pp. 23–67. Springer, Heidelberg (2014)

Nunberg, G.: The non-uniqueness of semantic solutions: polysemy. Linguist. Philos. 3(2), 143–184 (1979)

Nunberg, G.: Transfers of meaning. J. Seman. 12(2), 109–132 (1995)

Petersen, W.: Representation of concepts as frames. In: The Baltic International Yearbook of Cognition, Logic and Communication, vol. 2, pp. 151–170 (2006)

Pustejovsky, J.: The Generative Lexicon. MIT Press, Cambridge (1995)

Pustejovsky, J.: The semantics of lexical underspecification. Folia Linguistica 32(3–4), 323–348 (1998)

Pustejovsky, J.: A survey of dot objects. Manuscript (2005)

Pustejovsky, J.: Coercion in a general theory of argument selection. Linguistics 49(6), 1401–1431 (2011)

Pustejovsky, J., Rumshisky, A.: Mechanisms of sense extension in verbs. In: de Schryver, G.-M. (ed.) A Way with Words: Recent Advances in Lexical Theory and Analysis, pp. 67–88. Menha Publishers, Kampala (2010)

Vijay-Shanker, K., Joshi, A.K.: Feature structures based tree adjoining grammar. In: Proceedings of the 12th International Conference on Computational Linguistics (COLING), pp. 714–719 (1988)

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution-NonCommercial 2.5 International License (http://creativecommons.org/licenses/by-nc/2.5/), which permits any noncommercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2016 The Author(s)

About this paper

Cite this paper

Babonnaud, W., Kallmeyer, L., Osswald, R. (2016). Polysemy and Coercion – A Frame-Based Approach Using LTAG and Hybrid Logic. In: Amblard, M., de Groote, P., Pogodalla, S., Retoré, C. (eds) Logical Aspects of Computational Linguistics. Celebrating 20 Years of LACL (1996–2016). LACL 2016. Lecture Notes in Computer Science, vol 10054. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-53826-5_2

DOI: https://doi.org/10.1007/978-3-662-53826-5_2

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-53825-8

Online ISBN: 978-3-662-53826-5

eBook Packages: Computer Science (R0)
