Abstract

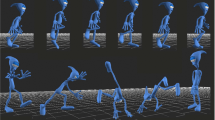

Recent work on human action recognition has focused on representing and classifying articulated body motion. These methods require detailed knowledge of how an action is composed in both the spatial and temporal domains, which is difficult to obtain, most notably under real-time conditions. As such, there has been a recent shift towards the exemplar paradigm as an efficient, low-level and invariant modelling approach. Motivated by this recent success, we believe a real-time solution to the problem of human action recognition can be achieved. In this work, we present an exemplar-based approach in which only a single action sequence is used to model an action class. Notably, the rotations for each pose are parameterised in Exponential Map form. Delegate exemplars are selected using k-means clustering, where the clustering criterion is selected automatically. For each cluster, a delegate is identified by means of a similarity function and denoted as the exemplar. The number of exemplars adapts to the complexity of the action sequence. For recognition, Dynamic Time Warping and template matching are employed to measure the similarity between a streamed observation and the action model. Experimental results using motion capture demonstrate that our approach is superior to the current state of the art, with the additional ability to handle large and varied action sequences.
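The recognition step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes each pose is a plain feature vector (for example, concatenated exponential-map joint rotations), uses Euclidean distance as a stand-in for the similarity function, which the abstract does not specify, and the names `dtw_distance` and `classify` are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length pose vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(seq_a, seq_b, dist=euclidean):
    """Classic Dynamic Time Warping cost between two sequences of pose vectors."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] is the best alignment cost of seq_a[:i] against seq_b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = dist(seq_a[i - 1], seq_b[j - 1])
            # Extend the cheapest of the three allowed predecessor alignments.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(observation, action_models):
    """Template matching: return the label whose exemplar sequence is closest under DTW.

    action_models maps a label to one exemplar sequence (hypothetical structure).
    """
    return min(action_models, key=lambda label: dtw_distance(observation, action_models[label]))


# Toy one-dimensional "poses" purely for illustration.
models = {
    "wave": [[0.0], [1.0], [0.0]],
    "walk": [[0.0], [2.0], [4.0]],
}
observed = [[0.0], [0.9], [1.0], [0.1]]
label = classify(observed, models)
```

In this sketch each action class is represented by a single exemplar sequence, mirroring the single-sequence modelling mentioned in the abstract. A streaming variant would recompute or incrementally extend the cost table as new poses arrive.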

This research is funded by the John Dalton Institute.

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Leightley, D., Li, B., McPhee, J.S., Yap, M.H., Darby, J. (2014). Exemplar-Based Human Action Recognition with Template Matching from a Stream of Motion Capture. In: Campilho, A., Kamel, M. (eds) Image Analysis and Recognition. ICIAR 2014. Lecture Notes in Computer Science, vol 8815. Springer, Cham. https://doi.org/10.1007/978-3-319-11755-3_2

DOI: https://doi.org/10.1007/978-3-319-11755-3_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-11754-6

Online ISBN: 978-3-319-11755-3

eBook Packages: Computer Science, Computer Science (R0)