Abstract

Collaborative Human-Robot workcells introduce robot-assisted operations in small-volume production or assembly processes, where conventional automation is not competitive. Unfortunately, humans and robots sharing the same work area and/or working on the same assembly operation may pose unprecedented problems and failure risks. Failure Mode, Effects and Criticality Analysis (FMECA) is a popular tool for designing reliable processes; it investigates potential failure modes from the perspectives of severity, occurrence and detection. The traditional FMECA approach requires failure modes to be assessed collectively by a group of experts. In the field of Human-Robot collaboration, however, experts are often unlikely to agree in their judgments, because historical records are almost nonexistent. Additionally, the traditional approach is not appropriate for decentralized production/assembly processes.

The paper revisits the traditional approach and integrates it with the ZMII-technique – i.e., a recent aggregation technique developed by the authors – which overcomes some limitations, including but not limited to: (i) arbitrary categorization and questionable aggregation of the expert judgments, (ii) disregarding the variability in these judgments, and (iii) disregarding the result uncertainty. The description is supported by a real-life application example.


1 Introduction and Literature Review

Human-Robot Collaboration (HRC) is one of the most significant enabling technologies in the Industry 4.0 framework. Several countries have adopted supporting policies to boost the upgrade of existing machine tools and robots with new collaborative models compliant with Industry 4.0 guidelines [1].

The technical issues raised by bringing humans and robots into the same workspace have been solved by robot manufacturers. Safety standards specific to HRC have been defined (ISO 10218 and ISO/TS 15066) [18, 19]. The precision, accuracy and repeatability of HRC are in line with most industrial requirements [2].

Nevertheless, some unsolved problems remain that are strictly linked to the concept of HRC. First, there is a widespread lack of confidence in the robot as a teammate. Furthermore, errors in the communication between human and robot may be an unprecedented source of problems and failure risks for the process. It is therefore necessary to develop error-proofing methods that neutralize the most critical problems.

Common risk analysis methods applied to robotic workcells are Fault Tree Analysis (FTA) and Failure Mode, Effects and Criticality Analysis (FMECA) [3]. There is consensus among authors that neither is immediately applicable, because the information on risks cannot be estimated at this stage. Additionally, FTA can only be applied with the support of historical data from similar previous processes. This is not the case in this study, as HRC is a new process that has not been experimented with before.

This paper focuses on FMECA, a very popular technique for improving the reliability of products, services and manufacturing processes by analyzing failure scenarios before they occur and preventing the causes or mechanisms of failure [4]. Applied to manufacturing processes, FMECA helps improve reliability and safety and provides a useful basis for planning predictive maintenance [5].

The FMECA is carried out by a cross-functional and multidisciplinary team of experts (typically composed of engineers and technicians specialized in design, testing, reliability, quality, maintenance, manufacturing, safety, etc.), coordinated by a team leader. The experts must overcome conflicting situations and converge towards a shared agreement.

The most critical activity concerns the prioritization of failure modes/causes, based on the Risk Priority Number (RPN), a composite indicator given by the product of the three dimensions of occurrence (O), severity (S) and detection (D). Each of these dimensions is determined by collective judgment, using a conventional ordinal scale from 1 to 10. Failure modes with higher RPNs are considered more critical and deserve priority for risk mitigation actions: since the resources (time and money) available for corrective actions are by definition limited, it is reasonable to concentrate them where they are most needed and to tolerate the minor failure modes.
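As a minimal illustration of the traditional prioritization, the Python sketch below computes the RPN as the product S·O·D and sorts the failure modes by decreasing RPN. The failure-mode names and scores are hypothetical, not taken from the case study.

```python
# Hypothetical S, O, D scores on the 1-10 scale for three failure modes
# (illustrative values only, not taken from the case study).
failure_modes = {
    "f_a": (7, 4, 3),
    "f_b": (5, 6, 6),
    "f_c": (9, 2, 2),
}


def rpn(s, o, d):
    """Traditional Risk Priority Number: product of severity, occurrence and detection."""
    return s * o * d


# Sort failure modes from most to least critical (highest RPN first).
ranking = sorted(failure_modes, key=lambda f: rpn(*failure_modes[f]), reverse=True)

for f in ranking:
    print(f, rpn(*failure_modes[f]))  # f_b 180, then f_a 84, then f_c 36
```

Note that f_c, which has the highest severity, ends up last: in the product, high occurrence and detection scores can outweigh a high severity.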

The traditional method for prioritizing failure modes has important shortcomings, extensively debated in the scientific literature [6,7,8], including but not limited to:

- Use of arbitrary reference tables for assigning scores to the three dimensions S, O and D.
- The three dimensions S, O and D are arbitrarily considered equally important.
- Since S, O and D are evaluated on ordinal scales, their product is not a meaningful measure according to measurement theory [9].
- The degree of disagreement among the team members in formulating collective judgments is not taken into account.
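The third shortcoming can be made concrete. An ordinal scale only fixes the order of its labels, so any strictly increasing relabelling (here, the hypothetical map x → x + 10) carries exactly the same information. The sketch below, with made-up scores, shows that such a relabelling can reverse which failure mode has the larger S·O·D product.

```python
from math import prod

# Two hypothetical failure modes scored (S, O, D) on a 1-10 ordinal scale
# (illustrative values, not taken from the case study).
scores = {"A": (10, 1, 1), "B": (3, 3, 2)}


def identity(x):
    return x


def shift(x):
    """An order-preserving relabelling of the scale: 1 -> 11, ..., 10 -> 20."""
    return x + 10


def rpn(s_o_d, transform=identity):
    """Product of the (optionally relabelled) S, O and D scores."""
    return prod(transform(v) for v in s_o_d)


def most_critical(transform=identity):
    return max(scores, key=lambda k: rpn(scores[k], transform))


# Original labels: A -> 10, B -> 18, so B is ranked first.
print({k: rpn(v) for k, v in scores.items()}, most_critical())
# Relabelled scale: A -> 2420, B -> 2028, so A is ranked first.
print({k: rpn(v, shift) for k, v in scores.items()}, most_critical(shift))
```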

It is particularly challenging to assess the role of FMECA in the current globalised scenario, which is increasingly characterised by distributed manufacturing, i.e., a form of decentralized manufacturing in which enterprises use a network of geographically dispersed facilities that are supposed to be flexible, reconfigurable and coordinated through information technology. Unfortunately, decentralized production hampers the application of the traditional FMECA in several ways. Firstly, a larger number of experts may increase the chances of conflict [10]. Secondly, generally only a few of them have expertise in HRC. Thirdly, there is little prior experience to rely on.

The purpose of this paper is to revisit the traditional FMECA approach, making it reliable even when there is substantial disagreement among experts on the potential problems and failure risks of a process. The revisited approach aggregates the experts' individual judgments through a recent aggregation technique, called ZMII, which combines Thurstone's Law of Comparative Judgment (LCJ) and the Generalized Least Squares (GLS) method [11,12,13].

The remainder of the paper is organized into four sections. Section 2 introduces a real-life case study that will accompany the explanation of the proposed approach. Section 3 briefly recalls the ZMII-technique. Section 4 illustrates the proposed methodology in detail, exemplifying its application to the above case study. Finally, Sect. 5 summarizes the original contributions of this paper, its practical implications, limitations and suggestions for future research.

2 Case Study

An important multinational company (anonymous for reasons of confidentiality) designs, develops, manufactures and markets seats for a number of applications, ranging from cars to aircraft. Since the relevant assembly operations are complex and require a relatively high level of dexterity, they are largely manual. Collaborative robots are introduced to support operators in critical manual tasks and reduce the possibility of error. The case study is the assembly of a seat-frame component, which consists of fixing different flanges on a common base. Figure 1a shows the flowchart of human and robot interaction through a Human Robot Interaction System (HRIS). Figure 1b shows a simplified part designed for laboratory tests. The most frequent collaborative task is the one in which the robot holds a flange in position and the operator fixes it with screws, as illustrated in Fig. 1c.

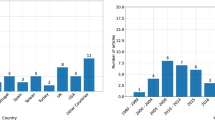

The company carries out this manufacturing process in four plants located in four countries (Germany, Poland, United States and China). Since the equipment employed is almost equivalent, equivalent processes can reasonably be expected to be subject to the same failure modes/causes. It would therefore be appropriate to share the experience accumulated in the various production facilities.

The four processes are managed by a total of twenty engineers/technicians, hereinafter referred to as "experts". Given the great difficulty of bringing all the experts together and making them interact to reach shared decisions, the traditional FMECA approach would be extremely difficult to manage, especially the activities concerning the formulation of collective judgments.

The initial activities of data collection, process analysis and determination of failure modes/causes are coordinated by a team leader, who collects information and technical indications received from other experts, processing and organizing them appropriately.

The results of the initial activities are summarised in the (incomplete) FMECA table in Fig. 2, in which twelve failure mode-cause combinations (f1 to f12) have been identified, to be prioritized according to the three factors of interest. Interestingly, the potential (negative) effects of the failure modes mainly concern interruptions/slowdowns in assembly operations, with no real safety risks. This is not surprising, given the relatively stringent safety standards for collaborative robots [19].

The collective assignment of the S, O and D scores and their aggregation into the RPN will be completed using the revisited FMECA.

3 ZMII-Technique

The ZMII-technique can be used more generally for any large group-decision problem in which a number of judges express individual judgments on certain objects, based on the degree of specific attributes [14, 16]. In the case study of Sect. 2, we can identify three separate decision-making problems in which:

- the judges are the twenty experts (e1 to e20) affiliated with the four production plants of the company;
- the objects are the failure mode-cause combinations (f1 to f12) in Fig. 2; for the sake of simplicity, these objects will hereafter be referred to as "failure modes";
- the attributes are S for the first problem, O for the second and D for the third.

The ZMII-technique can be seen as a black box that transforms specific input data, i.e., judgments on n failure modes formulated by m experts, into specific output data, i.e., a ratio-scale evaluation of the failure modes with an associated uncertainty estimate. Precisely, for each (i-th) failure mode, the ZMII-technique produces an estimate of (1) the (mean) ratio-scale value yi and (2) the corresponding standard deviation \( \sigma_{y_{i}} \).

A prerequisite of the ZMII-technique is that each expert formulates a ranking of the failure modes, i.e., an ordered sequence in which those with the highest degree of the attribute occupy the top positions and those with the lowest degree occupy the bottom ones. In the case study of Sect. 2, for example, the failure modes are ranked according to the degree of each dimension of interest (i.e., S, O or D).

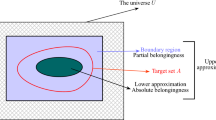

Apart from the regular failure modes, experts may also include two fictitious (dummy) failure modes in their rankings: one (fZ) corresponding to the absence of the attribute of interest, and one (fM) corresponding to the maximum imaginable degree of the attribute. In the case study, fZ corresponds to a fictitious failure mode of absent severity/occurrence/detection (e.g., a failure mode with score S = 1/O = 1/D = 1 [15]), while fM corresponds to a fictitious failure mode of the maximum imaginable severity/occurrence/detection (e.g., a failure mode with score S = 10/O = 10/D = 10).
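Values produced by Thurstone-type scaling have an arbitrary origin and unit (an interval scale), while the output described above is a ratio scale. One plausible way to see how the two dummy failure modes can close this gap is a linear rescaling that pins fZ to 0 and fM to a fixed top value. The sketch below is hypothetical: the function name, the top value of 10 and the neglect of the anchors' own uncertainty are our assumptions, not necessarily the exact ZMII transformation.

```python
def to_ratio_scale(x, sd, i_zero, i_max, top=10.0):
    """Hypothetical sketch: rescale interval-scale values x (with standard
    deviations sd) so that the dummy f_Z maps to 0 and the dummy f_M maps
    to `top`. The uncertainty of the two anchors is ignored, so each
    standard deviation is simply multiplied by the same scale factor."""
    span = x[i_max] - x[i_zero]
    if span <= 0:
        raise ValueError("f_M must lie above f_Z on the interval scale")
    factor = top / span
    y = [(xi - x[i_zero]) * factor for xi in x]
    sd_y = [s * factor for s in sd]
    return y, sd_y


# Illustrative interval-scale values: index 0 is f_Z, index 3 is f_M.
y, sd_y = to_ratio_scale([-1.0, 0.5, 1.0, 2.0], [0.0, 0.3, 0.3, 0.0], i_zero=0, i_max=3)
print(y)  # f_Z -> 0, f_M -> 10, regular failure modes in between
```

Once the zero is anchored to a "true" absence of the attribute, ratios such as "twice as severe" become meaningful, which is what the subsequent multiplicative aggregation requires.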

In the best cases, experts formulate complete rankings, characterised by relationships of strict dominance (e.g., “fi > fj”) or indifference (e.g., “fi ~ fj”) among the possible pairs of failure modes [17]. The formulation of these rankings may be problematic when the number of failure modes is large. To overcome this obstacle, a flexible response mode that tolerates incomplete rankings can be adopted.

Returning to the case study, each of the experts formulates his/her own three distinct (subjective) rankings of the failure modes, based on the three dimensions S, O and D; results are shown in Fig. 3. It can be noted that most of the experts have opted for the formulation of incomplete rankings, probably because they are simpler and faster. This kind of response mode may also favour data reliability since, in case of indecision, experts are not necessarily forced to provide complete and falsely precise responses [17].
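Before any scaling, rankings like these must be reduced to pairwise information. The sketch below shows one possible way to do this under our own conventions (tiers listed best first, omitted failure modes generating no comparisons, ties counted as half a preference); the function name is hypothetical and this is not necessarily how the ZMII-technique handles incomplete rankings internally.

```python
from collections import defaultdict
from itertools import combinations


def pairwise_proportions(rankings):
    """Hypothetical sketch. Each expert's (possibly incomplete) ranking is a list
    of tiers, best first: [["f3"], ["f1", "f5"], ["f2"]] means f3 > f1 ~ f5 > f2.
    Failure modes an expert leaves out generate no comparisons for that expert.
    Returns {(a, b): p_ab}: the share of experts comparing a and b who put a
    above b, counting indifference as half a preference for each side."""
    wins = defaultdict(float)
    counts = defaultdict(int)
    for tiers in rankings:
        level = {obj: k for k, tier in enumerate(tiers) for obj in tier}
        for a, b in combinations(sorted(level), 2):
            counts[(a, b)] += 1
            if level[a] < level[b]:      # a is in a higher tier: a > b
                wins[(a, b)] += 1.0
            elif level[a] == level[b]:   # same tier: a ~ b
                wins[(a, b)] += 0.5
    return {pair: wins[pair] / n for pair, n in counts.items()}


# Two illustrative (not real) rankings; the second one is incomplete.
rankings = [
    [["f3"], ["f1", "f5"], ["f2"]],
    [["f5"], ["f3"]],
]
p = pairwise_proportions(rankings)
print(p[("f3", "f5")], p[("f1", "f5")], p[("f2", "f3")])  # 0.5 0.5 0.0
```

Pairs on which all responding experts agree give proportions of exactly 0 or 1, whose normal quantiles are infinite; they need special handling (e.g., clipping) before any Thurstone-type scaling.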

The rankings indicate significant inter-expert disagreement; e.g., while several experts place failure mode f5 among the top positions of their S-rankings, others place it among the bottom positions. This reflects the experts' actual difficulty in converging towards a collective judgment.

The mathematical formalization of the problem relies on the postulates and simplifying assumptions of the Law of Comparative Judgment (LCJ) by Thurstone [11], who postulated the existence of a psychological continuum, i.e., an abstract and unknown unidimensional scale, in which objects are positioned depending on the degree of a certain attribute. The position of a generic i-th object (fi) is postulated to be distributed normally, in order to reflect the intrinsic expert-to-expert variability: \( f_{i}\,{\sim}\, N(x_{i} , \sigma_{i}^{2} ) \), where xi and \( \sigma_{i}^{2} \) are the unknown mean value and variance related to the degree of the attribute of that object.

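Considering two generic objects, fi and fj, and introducing further simplifying hypotheses [4] (e.g., lack of correlation, \( \sigma_{i}^{2} = \sigma^{2} \;\forall i, \ldots \)), the difference fi − fj is normally distributed with mean xi − xj and variance 2σ², so it can be asserted that:

\[ x_{i} - x_{j} = \sqrt{2}\,\sigma \cdot z_{ij}, \qquad z_{ij} = \Phi^{-1}(p_{ij}) \qquad (1) \]

where \( p_{ij} \) is the proportion of experts who place fi above fj and \( \Phi^{-1} \) is the inverse of the standard normal cumulative distribution function.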

Extending the reasoning to all possible pairs of objects yields an over-determined system of equations (similar to that in Eq. 1), which can be solved by the Generalized Least Squares (GLS) method [12]. This gives an estimate of the mean degree of the attribute of each failure mode, \( X = [\ldots, x_{i}, \ldots]^{T} \), expressed on an arbitrary interval scale, together with an estimate of its dispersion.
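The sketch below illustrates these two steps under Thurstone's Case V assumptions (uncorrelated positions with equal variance): each pair of objects gives one equation x_i − x_j = √2·σ·z_ij, with z_ij the standard normal quantile of the proportion of experts preferring f_i over f_j, and the stacked system is solved by GLS. The function name, pinning object 0 to zero, the default σ = 1/√2 (so that √2·σ = 1) and the clipping of proportions are our assumptions; this is not the authors' ZMII implementation, which includes further steps such as the dummy failure modes. It relies on NumPy and Python's `statistics.NormalDist`.

```python
import numpy as np
from statistics import NormalDist


def thurstone_gls(p, cov=None, sigma=1 / np.sqrt(2), eps=0.01):
    """Sketch of Thurstone Case V scaling solved by generalized least squares.

    p[i][j] is the proportion of experts ranking object i above object j.
    Every pair i < j yields one equation x_i - x_j = sqrt(2) * sigma * z_ij,
    with z_ij the standard-normal quantile of p[i][j]. Object 0 is pinned to
    x_0 = 0 to remove the arbitrary origin of the interval scale. `cov` is
    the covariance matrix of the equation errors (identity if omitted, which
    reduces GLS to ordinary least squares). Proportions are clipped to
    [eps, 1 - eps] so that unanimous pairs do not give infinite z values."""
    p = np.asarray(p, dtype=float)
    n = p.shape[0]
    std_normal = NormalDist()
    rows, b = [], []
    for i in range(n):
        for j in range(i + 1, n):
            pij = min(max(p[i, j], eps), 1 - eps)
            row = np.zeros(n)
            row[i], row[j] = 1.0, -1.0
            rows.append(row)
            b.append(np.sqrt(2) * sigma * std_normal.inv_cdf(pij))
    A = np.array(rows)[:, 1:]  # drop the column of object 0 (x_0 = 0)
    b = np.array(b)
    omega = np.eye(len(b)) if cov is None else np.asarray(cov, dtype=float)
    w = np.linalg.inv(omega)
    cov_x = np.linalg.inv(A.T @ w @ A)  # (A' W A)^-1, covariance of the estimates
    x = cov_x @ A.T @ w @ b             # GLS estimate (A' W A)^-1 A' W b
    x_full = np.concatenate([[0.0], x])
    sd_full = np.concatenate([[0.0], np.sqrt(np.diag(cov_x))])
    return x_full, sd_full


# Check on synthetic proportions generated from known scale values (0, 1, 2).
nd = NormalDist()
true_x = [0.0, 1.0, 2.0]
p = [[nd.cdf(true_x[i] - true_x[j]) for j in range(3)] for i in range(3)]
x, sd = thurstone_gls(p)
print(np.round(x, 6))  # recovers the generating values 0, 1, 2
```

With `cov` left as the identity, the estimate reduces to ordinary least squares and the returned standard deviations are meaningful only up to the unknown error variance; passing the actual covariance of the z_ij terms gives the GLS estimate proper.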

4 Proposed Methodology

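The experts' rankings for each dimension (S, O and D) are aggregated separately through the ZMII-technique, giving for each (i-th) failure mode the ratio-scale values Si, Oi and Di. These are then combined through the classic multiplicative model of the RPN [4]:

\[ RPN_{i} = S_{i} \cdot O_{i} \cdot D_{i} \]

The uncertainty of the RPNi values can be determined by applying the delta method, also referred to as the law of propagation of uncertainty or error transmission formula [17]. Neglecting correlations among Si, Oi and Di, the first-order approximation gives:

\[ \sigma_{RPN_{i}}^{2} \approx (O_{i} D_{i})^{2} \sigma_{S_{i}}^{2} + (S_{i} D_{i})^{2} \sigma_{O_{i}}^{2} + (S_{i} O_{i})^{2} \sigma_{D_{i}}^{2} \]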

where \( \sigma_{S_{i}}^{2} \), \( \sigma_{O_{i}}^{2} \) and \( \sigma_{D_{i}}^{2} \) are the variances associated with the Si, Oi and Di values of the i-th failure mode.
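A minimal Python sketch of this first-order propagation for the product Si·Oi·Di, assuming the three dimensions are uncorrelated so that only the variances above enter. The function name and input values are illustrative, not those of Table 1.

```python
import math


def rpn_with_uncertainty(s, sd_s, o, sd_o, d, sd_d):
    """RPN = S * O * D and its first-order (delta-method) standard deviation,
    assuming S, O and D are uncorrelated (no covariance terms)."""
    value = s * o * d
    var = (o * d) ** 2 * sd_s ** 2 + (s * d) ** 2 * sd_o ** 2 + (s * o) ** 2 * sd_d ** 2
    return value, math.sqrt(var)


# Illustrative ratio-scale values and standard deviations (not from Table 1).
value, sd = rpn_with_uncertainty(2.0, 0.1, 3.0, 0.1, 4.0, 0.1)
print(value, round(sd, 4))  # 24.0 1.562
```

Each partial derivative of S·O·D is the product of the other two dimensions, which is why every variance term is weighted by that product squared.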

The results of applying the proposed methodology to the case study are shown in Table 1 and summarised in the Pareto chart of Fig. 4. The most critical failure modes are those with the highest RPNi values. The relatively wide uncertainty bands (depicting the expanded-uncertainty values \( \pm 2 \cdot \sigma_{RPN_{i}} \)) indicate that the RPNi alone is a "myopic" indicator, since it may suggest differences that are statistically unfounded. For instance, while it makes sense to say that f3 is more critical than f10 or f11 (since their uncertainty bands do not overlap), it cannot necessarily be said that f10 deserves priority over f11. These considerations give the team more freedom in choosing corrective actions, for example by taking into account external constraints (such as cost, technical difficulty, time required, etc.).

Finally, we note that failure modes with higher RPNi values tend to have higher dispersion. This heteroscedasticity stems from the multiplicative aggregation model of S, O and D, in which each variance term is scaled by the squared product of the other two dimensions. The model could be replaced by other models (e.g., additive ones), in which appropriate weights could be introduced to weight the three dimensions.
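The comparison rule described above can be sketched as follows: sort failure modes by RPN (the Pareto order) and, for each pair, check whether their ±k·σ bands (k = 2 here) are disjoint. The function name and values are hypothetical; like the visual reading of the chart, this is a conservative rule of thumb, not a formal hypothesis test.

```python
from itertools import combinations


def prioritize(results, k=2.0):
    """results maps each failure mode to (RPN, standard deviation).
    Returns the Pareto order (highest RPN first) and, for every pair (a, b)
    with a ahead of b in that order, whether their RPN +/- k*sd bands are
    disjoint, i.e. whether a can be said to deserve priority over b."""
    order = sorted(results, key=lambda f: results[f][0], reverse=True)
    separated = []
    for a, b in combinations(order, 2):
        (ra, sa), (rb, sb) = results[a], results[b]
        separated.append((a, b, ra - k * sa > rb + k * sb))
    return order, separated


# Hypothetical values (not the case-study results).
order, separated = prioritize({"fA": (60.0, 5.0), "fB": (30.0, 4.0), "fC": (27.0, 3.0)})
print(order)      # ['fA', 'fB', 'fC']
print(separated)  # [('fA', 'fB', True), ('fA', 'fC', True), ('fB', 'fC', False)]
```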
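The sketch below contrasts the two aggregation models under equal input uncertainty. The multiplicative RPN uses the delta-method standard deviation; the weighted additive index, with hypothetical weights 0.5/0.3/0.2, is only an example of the kind of model mentioned above, not the model the authors plan to adopt.

```python
import math


def multiplicative(s, o, d, sd):
    """RPN = S*O*D with delta-method sd; the same sd is assumed for S, O and D."""
    var = ((o * d) ** 2 + (s * d) ** 2 + (s * o) ** 2) * sd ** 2
    return s * o * d, math.sqrt(var)


def weighted_additive(s, o, d, sd, w=(0.5, 0.3, 0.2)):
    """Hypothetical alternative: weighted sum wS*S + wO*O + wD*D.
    Being linear, its sd (uncorrelated inputs) does not depend on the level."""
    ws, wo, wd = w
    value = ws * s + wo * o + wd * d
    return value, sd * math.sqrt(ws ** 2 + wo ** 2 + wd ** 2)


m2, m6 = multiplicative(2.0, 2.0, 2.0, 0.5), multiplicative(6.0, 6.0, 6.0, 0.5)
a2, a6 = weighted_additive(2.0, 2.0, 2.0, 0.5), weighted_additive(6.0, 6.0, 6.0, 0.5)
print("multiplicative:", m2, m6)
print("additive:", a2, a6)
```

Tripling the level of all three dimensions (from 2 to 6) multiplies the standard deviation of the multiplicative RPN by nine, whereas the additive index keeps the same standard deviation.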

5 Conclusions

The paper has illustrated a novel approach to FMECA for HRC in distributed manufacturing environments. The approach has important implications that make it more suitable than the traditional FMECA for this practical context, in which experts find it harder to converge towards a collective decision.

Among the advantages: the method does not require experts to meet physically and make collective decisions; it includes a flexible response mode; it provides an estimation of the uncertainty of the results.

Although there is no absolute reference ("gold standard") to evaluate the validity of the proposed approach with respect to the traditional one, we believe that it is conceptually superior, as it overcomes some widely debated shortcomings of the classic FMECA (e.g., it does not require arbitrary reference tables for S, O and D and it does not introduce any undue "promotion" of the judgment scales).

Among the limitations: the proposed response mode, although flexible, is a novelty that could cause difficulties, especially for experienced users accustomed to the traditional procedure; moreover, as in the traditional procedure, the three dimensions S, O and D are treated as equally important.

As future work, we plan to replace the classic multiplicative RPN model with a new one that allows (1) weighting the contributions of S, O and D and (2) visualizing their contributions to the uncertainty of the resulting RPN values.

References

Almada-Lobo, F.: The industry 4.0 revolution and the future of manufacturing execution systems (MES). J. Innov. Manag. 3(4), 16–21 (2016)

Antonelli, D., Astanin, S.: Qualification of a collaborative human-robot welding cell. Procedia CIRP 41, 352–357 (2016)

Gopinath, V., Johansen, K.: Risk assessment process for collaborative assembly – a job safety analysis approach. Procedia CIRP 44, 199–203 (2016)

Stamatis, D.H.: Failure Mode and Effect Analysis: FMEA from Theory to Execution. ASQ Quality Press, Milwaukee (2003)

Johnson, K.G., Khan, M.K.: A study into the use of the process failure mode and effects analysis (PFMEA) in the automotive industry in the UK. J. Mater. Process. Technol. 139(1–3), 348–356 (2003)

Certa, A., Hopps, F., Inghilleri, R., La Fata, C.M.: A Dempster-Shafer theory-based approach to the failure mode, effects and criticality analysis (FMECA) under epistemic uncertainty: application to the propulsion system of a fishing vessel. Reliab. Eng. Syst. Saf. 159, 69–79 (2017)

Liu, H.C., You, J.X., Shan, M.M., Su, Q.: Systematic failure mode and effect analysis using a hybrid multiple criteria decision-making approach. Tot. Qual. Manag. Bus. Excellence 30(5–6), 537–564 (2019)

Das Adhikary, D., Kumar Bose, G., Bose, D., Mitra, S.: Multi criteria FMECA for coal-fired thermal power plants using COPRAS-G. Int. J. Qual. Reliab. Manag. 31(5), 601–614 (2014)

Franceschini, F., Galetto, M., Maisano, D.: Designing Performance Measurement Systems: Theory and Practice of Key Performance Indicators. Management for Professionals. Springer, Berlin (2019). https://doi.org/10.1007/978-3-030-01192-5

Cai, C.G., Xu, X.H., Wang, P., Chen, X.H.: A multi-stage conflict style large group emergency decision-making method. Soft. Comput. 21(19), 5765–5778 (2017)

Thurstone, L.L.: A law of comparative judgment. Psychol. Rev. 34(4), 273 (1927)

Kariya, T., Kurata, H.: Generalized Least Squares. Wiley, New York (2004)

Franceschini, F., Maisano, D.: Fusion of partial orderings for decision problems in quality management. In: Proceedings of ICQEM 2018, Barcelona, 11–13 July 2018 (2018)

Franceschini, F., Maisano, D.: Fusing incomplete preference rankings in design for manufacturing applications through the ZMII-technique. Int. J. Adv. Manuf. Technol. (2019, to appear). https://doi.org/10.1007/s00170-019-03675-5

AIAG (Automotive Industry Action Group) and VDA (Verband der Automobilindustrie): Failure Mode and Effects Analysis – Design FMEA and Process FMEA Handbook, Southfield, MI (2019)

JCGM 100:2008: Evaluation of Measurement Data - Guide to the Expression of Uncertainty in Measurement. BIPM, Paris (2008)

Franceschini, F., Galetto, M.: A new approach for evaluation of risk priorities of failure modes in FMECA. Int. J. Prod. Res. 39(13), 2991–3002 (2001)

ISO 10218:2011: Robots and Robotic Devices – Safety Requirements for Industrial Robots. ISO, Geneva (2011)

ISO/TS 15066:2016: Robots and Robotic Devices – Collaborative Robots. ISO, Geneva (2016)


Copyright information

© 2019 IFIP International Federation for Information Processing

About this paper

Cite this paper

Maisano, D.A., Antonelli, D., Franceschini, F. (2019). Assessment of Failures in Collaborative Human-Robot Assembly Workcells. In: Camarinha-Matos, L.M., Afsarmanesh, H., Antonelli, D. (eds) Collaborative Networks and Digital Transformation. PRO-VE 2019. IFIP Advances in Information and Communication Technology, vol 568. Springer, Cham. https://doi.org/10.1007/978-3-030-28464-0_49


DOI: https://doi.org/10.1007/978-3-030-28464-0_49


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-28463-3

Online ISBN: 978-3-030-28464-0

eBook Packages: Computer Science, Computer Science (R0)