Abstract

Industry 4.0 and advanced technology, such as sensors and human–machine cooperation, provide new possibilities for infusing intelligence into failure analysis. Failure analysis is the process of identifying (potential) failures and determining their causes and effects to enhance reliability and manufacturing quality. Proactive methodologies, such as failure mode and effects analysis (FMEA), and reactive methodologies, such as root cause analysis (RCA) and fault tree analysis (FTA), are used to analyze failures before and after their occurrence, respectively. This paper reviews the literature on making failure analysis methodologies intelligent, as applied to FMEA, RCA, and FTA, to provide insights into expert-driven, data-driven, and hybrid intelligence failure analysis advancements. The types of data needed to establish intelligent failure analysis, tools for finding a failure’s causes and effects, e.g., Bayesian networks, and managerial insights are discussed. This literature review, along with the analyses within it, assists failure and quality analysts in developing effective hybrid intelligence failure analysis methodologies that leverage the strengths of both proactive and reactive methods.

Introduction

Failure analysis entails activities to identify, categorize, and prioritize (potential) failures and to determine the causes and effects of each failure, as well as failure propagation and interdependencies (Rausand & Øien, 1996). The significance of failure analysis in manufacturing has grown since Industry 3.0, as it helps mitigate defects and/or failures in production processes, thereby maximizing reliability and quality and minimizing production interruptions, associated risks, and costs (Wu et al., 2021; Ebeling, 2019).

Failure analysis methodologies have been supported by mathematical, statistical, and graph theories and tools, including MCDM theory, fuzzy theory, six-sigma, SPC, DOE, simulation, Pareto charts, and analysis of mean and variance (Oliveira et al., 2021; Huang et al., 2020; Tari & Sabater, 2004). Industry 4.0 is driven by (real-time) data from sensors, the Internet of Things (IoT), such as Internet-enabled machines and tools, and artificial intelligence (AI). Advances in artificial intelligence theory and technology have brought new tools to strengthen failure analysis methodologies (Oztemel & Gursev, 2020). Examples of tools include Bayesian networks (BNs), case-based reasoning (CBR), neural networks, classification and clustering algorithms, principal component analysis (PCA), deep learning, decision trees, and ontology-driven methods (Zheng et al., 2021). These Industry 4.0 advancements enable more efficient data collection and analysis, enhancing predictive capabilities, increasing efficiency and automation, and improving collaboration and knowledge sharing.

Failure analysis methodologies can be categorized into expert-driven, data-driven, and hybrid ones. Expert-driven failure analysis methods rely on experts’ knowledge and experience (Yucesan et al., 2021; Huang et al., 2020). This approach is useful when the data is limited or when there is a high degree of uncertainty. Expert-driven methods are also useful when the failure structure is complex and difficult to understand. However, this approach is limited by the availability and expertise of the experts, and is prone to bias and subjective interpretations (Liu et al., 2013).

Data-driven failure analysis methods, on the other hand, rely on statistical analysis and machine learning algorithms to identify patterns in the data and predict the causes of the failure (Zhang et al., 2023; Mazzoleni et al., 2017). This approach is useful when there is a large amount of data available and when the failure structure is well-defined. However, data-driven methods are limited by the quality and completeness of the data (Oliveira et al., 2021).

Until recently, most tools have focused on replacing humans with artificial intelligence (Yang et al., 2020; Filz et al., 2021b), which causes them to remove human intellect and capabilities from intelligence systems. Hybrid intelligence creates hybrid human–machine intelligence systems, in which humans and machines collaborate synergistically, proactively, and purposefully to augment human intellect and capabilities rather than replace them with machine intellect and capabilities to achieve shared goals (Akata et al., 2020).

Collaboration between humans and machines can enhance the failure analysis process, allowing for analyses that were previously unattainable by either humans or machines alone. Thus, hybrid failure analysis provides a more comprehensive analysis of the failure by incorporating strengths of both expert-driven and data-driven approaches to identify the most likely causes and effects of failures (Dellermann et al., 2019; van der Aalst, 2021).

Benefits from a smart failure analysis may include reduced costs and production stoppages, improved use of human resources, improved use of knowledge, improved identification of failures, root causes, and effects, and real-time failure analysis. Yet, only a few studies have specifically addressed hybrid failure analysis (Chhetri et al., 2023). A case example of hybrid expert data-driven failure analysis involves using data from similar product assemblies to construct a Bayesian network for process failure mode and effects analysis (pFMEA), while also incorporating expert knowledge as constraints based on the specific product being analyzed (Chhetri et al., 2023).

Over the past few years, several literature reviews, as reported in Section Literature review, have been accomplished under different outlooks in relation to different failure analysis methodologies including failure mode and effects analysis (FMEA), root cause analysis (RCA), and fault tree analysis (FTA). Currently, most existing literature does not systematically summarize the research status of these failure analysis methodologies from the perspective of Industry 4.0 and (hybrid) intelligence failure analysis with the benefits from new technologies. Therefore, this study aims to review, categorize, and analyze the literature of these three general failure analysis methodologies in production systems. The objective is to provide researchers with a comprehensive overview of these methodologies, with a specific focus on hybrid intelligence, and its benefits for quality issues in production. We address two questions "How can failure analysis methodologies benefit from hybrid intelligence?" and "Which tools are suitable for a good fusion of human and machine intelligence?" Consequently, the main contributions of this study to the failure analysis literature are as follows:

1. Analysis of 86 papers out of 7113 papers from FMEA, RCA, and FTA with respect to methods and data types that might be useful for a hybrid intelligence failure analysis.
2. Identification of data and methods to construct and detect multiple failures within different research related to FMEA, RCA, and FTA methodologies.
3. Identification of the most effective methods for analyzing failures, identifying their sources and effects, and assessing related risks.
4. Proposal of a categorization of research based on the levels of automation/intelligence, along with the identification of limitations in current research in this regard.
5. Provision of hybrid intelligent failure analysis future research, along with other future directions such as future research on failure propagation and correlation.

The plan of this paper is as follows. Section Literature review briefly introduces related literature reviews on FMEA, RCA, and FTA. A brief description of other failure analysis methodologies is also provided. Section Research methodology presents our review methodology, including the review scope and protocols, defining both our primary and secondary questions, and the criteria for selecting journals and papers to be reviewed. A bibliography summary of the selected papers is provided. The literature is categorized in Section Literature categorization based on the four general steps of a failure analysis methodology, involving failure structure detection, failure event probability detection, failure risk analysis, and outputs. Managerial insights, limitations, and future research are discussed in Section Managerial insights, limitations, and future research. This discussion helps researchers understand applications and complexity, levels of intelligence, and how knowledge is introduced into failure analysis. A more in-depth discussion of hybrid intelligence, failure propagation and correlation, hybrid methodologies, and other areas of future research is also included. Conclusions are presented in Section Conclusion.

Literature review

General and industry/field-specific failure analysis methodologies have been developed over the last few decades. In this section, we provide useful review papers regarding FMEA, RCA, and FTA, which are the focus of our paper. Additionally, some other general and industry/field-specific failure analysis methodologies are briefly discussed.

FMEA is one of the most commonly used bottom-up, proactive, qualitative methodologies for potential quality failure analysis (Huang et al., 2020; Stamatis, 2003). Among its extensions, process FMEA (pFMEA) proactively identifies potential quality failures in production processes such as assembly lines (Johnson & Khan, 2003). Typically, (p)FMEA uses expert knowledge to determine potential failures, effects, and causes, and to prioritize the failures based on the risk priority number (RPN). RPN is the product of the severity, occurrence, and detection ratings of each failure (Wu et al., 2021). FMEA shortcomings include being time-consuming and subjective, and an inability to capture multiple failures, failure propagation, and interdependency (Liu et al., 2013).
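To make the RPN prioritization concrete, the following minimal Python sketch computes the RPN as the product of the three ratings and ranks failure modes by it. The failure modes, their ratings, and the 1 to 10 rating scale are hypothetical and chosen only for illustration; they are not taken from the reviewed studies.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int    # assumed scale: 1 (negligible) .. 10 (catastrophic)
    occurrence: int  # assumed scale: 1 (rare) .. 10 (frequent)
    detection: int   # assumed scale: 1 (easily detected) .. 10 (hard to detect)

    @property
    def rpn(self) -> int:
        # RPN is the product of the three ratings.
        return self.severity * self.occurrence * self.detection


def rank_by_rpn(modes):
    """Return failure modes sorted from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Hypothetical assembly-line failure modes.
modes = [
    FailureMode("loose bolt", severity=7, occurrence=4, detection=3),
    FailureMode("missing seal", severity=9, occurrence=2, detection=6),
    FailureMode("scratched housing", severity=3, occurrence=5, detection=2),
]
ranked = rank_by_rpn(modes)
for m in ranked:
    print(f"{m.name}: RPN = {m.rpn}")
```

In this example the rarely occurring "missing seal" ranks first because its high severity and poor detectability dominate the product, which illustrates why the RPN ordering depends heavily on subjective expert ratings.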

RCA is a bottom-up reactive quantitative methodology that determines the causal mechanism behind a failure to prevent the recurrence of the failure in manufacturing processes (Oliveira et al., 2023). To locate, identify, and/or explain the reasons behind the occurrence of root causes, RCA utilizes statistical analysis tools, such as regression, statistical process control (SPC), design of experiments (DOE), PCA, and cause-effect diagram (Williams, 2001). Limited ability to predict future failures and difficulty in identifying complex or systemic issues are among RCA limitations (Yuniarto, 2012).

FTA is a top-down reactive graphical method to model failure propagation through a system, i.e., how component failures lead to system failures (Kumar & Kaushik, 2020). FTA uses qualitative data to model the structure of a system and quantitative data, including probabilities and graph methods such as minimal cut/path sets, binary decision diagrams, simulation, and BNs, to model failure propagation. The need for extensive data, a limited ability to identify contributing factors, and being time-consuming are among the FTA limitations (Ruijters & Stoelinga, 2015).
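As an illustration of how component failures propagate to a system failure in a fault tree, the sketch below evaluates AND and OR gates under the simplifying assumption that basic events are statistically independent. The tree structure, event names, and probabilities are hypothetical.

```python
from math import prod


def and_gate(probs):
    """Output event occurs only if all input events occur (independent inputs)."""
    return prod(probs)


def or_gate(probs):
    """Output event occurs if at least one input event occurs (independent inputs)."""
    return 1.0 - prod(1.0 - p for p in probs)


# Hypothetical tree: TOP = OR(pump_fails, AND(sensor_fails, backup_fails))
p_pump, p_sensor, p_backup = 0.01, 0.05, 0.10
p_top = or_gate([p_pump, and_gate([p_sensor, p_backup])])
print(f"P(top event) = {p_top:.5f}")
```

In this tree the minimal cut sets are {pump_fails} and {sensor_fails, backup_fails}. When basic events are correlated or repeated across branches, this simple gate-by-gate evaluation no longer holds, which is one motivation for the binary decision diagram and BN approaches mentioned above.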

In recent years, several literature reviews have been conducted on failure analysis methodologies, exploring various perspectives and approaches. Liu et al. (2013) reviewed FMEA risk evaluation tools including rule-based systems, mathematical programming, and multi-criteria decision-making (MCDM). They concluded that artificial intelligence and MCDM tools, particularly fuzzy rule base systems, grey theory, and cost-based models, are the most cited tools to prioritize risks in FMEA. Liu et al. (2019a) and Dabous et al. (2021) reviewed the application of MCDM tools to FMEA. Papers from different areas, including automotive, electronics, machinery and equipment, and steel manufacturing, were considered. The most used MCDM tools, namely technique for order of preference by similarity to ideal solution (TOPSIS), analytic hierarchy process (AHP), decision-making trial and evaluation laboratory (DEMATEL), and grey theory, were identified.

Spreafico et al. (2017) provided a critical review of FMEA and failure mode, effects, and criticality analysis (FMECA) by classifying their limitations and issues and reviewing suggested improvements and solutions. FMEA issues were classified into four groups: applicability, cause and effect analysis, risk analysis, and problem-solving. The main problems (and solutions) in these groups are, respectively, being time-consuming (integration with design tools, using more structured templates, and automation), lack of secondary effects modeling (integration with other tools such as FTA, BN, and Petri net), being too subjective (using statistical evaluation and cost-based approaches), and a lack of evaluation of the implementation of a solution (using improved presentation of the results and integration with other tools such as maintenance management tools). Huang et al. (2020) provided a bibliographic analysis of FMEA and its applications in manufacturing, marine, healthcare, aerospace, and electronics. Wu et al. (2021) sorted out potential failure mode identification approaches, such as analyzing entry points for system failure mode identification, failure mode recognition tools, and failure mode specification description. A review of FMEA risk assessment tools was then provided.

Oliveira et al. (2023) reviewed automatic RCA literature in manufacturing. The data types that are usually used, namely location-time, physical, and log-action, were identified. Industries with the most use of RCA were ranked: semiconductor, chemical, automotive, and others. Then different tools used to automate RCA, including decision trees, regression models, classification methods, clustering methods, neural networks, BNs, PCA, statistical tests, and control charts, were discussed. Ruijters and Stoelinga (2015) provided FTA qualitative and quantitative analysis methods. Different types of FTA, namely standard FTA, dynamic FTA, and other extensions, were also discussed. Zhu and Zhang (2022) also reviewed dynamic FTA. Cai et al. (2017) reviewed the application of BN in fault diagnosis. First, an overview of BN types (static, dynamic, and object-oriented), structure modeling, parameter modeling, and inference was provided. Then, the applicability of BN for fault identification in process, energy, structural, manufacturing, and network systems was discussed, and BN verification and validation methods were provided. Finally, future prospects were identified, including integration of big data with BN, real-time fault diagnosis BN inference algorithms, and hybrid fault diagnosis methods. More relevant BN reviews include BN application in reliability (Insua et al., 2020) and safety and risk assessments (Kabir & Papadopoulos, 2019).

The integration of FMEA, RCA, and FTA holds immense potential for quality and production managers to minimize failures and enhance system efficiency. By capitalizing on the unique strengths of each approach, the integration of these failure analysis methodologies enables a more comprehensive and effective examination of failures. However, existing studies and literature reviews have predominantly focused on individual methodologies, leading to a lack of integration and limited familiarity with all three approaches among engineers and industry experts. To address this gap and promote their integration, this study aims to review the progress of intelligence failure analysis within FMEA, RCA, and FTA.

Other general failure analysis methodologies include, but are not limited to, the following methodologies. Event Tree Analysis, similar to FTA, is a graphical representation that models the progression of events following an initiating event, helping to analyze the potential consequences (Ruijters & Stoelinga, 2015). Bow-Tie Analysis, usually used in risk management, visualizes the relationship between different potential causes of a hazard and their possible consequences (Khakzad et al., 2012). Human Reliability Analysis focuses on assessing the probability of human error and its potential impact on systems and processes (French et al., 2011). The Fishbone Diagram visually represents potential causes of a problem to identify root causes by categorizing them into specific factors like people, process, equipment, materials, etc.

There are also industry-specific methodologies, including but not limited to the following ones. Electrostatic Discharge (ESD) Failure Analysis focuses on identifying failures caused by electrostatic discharge, a common concern in the electronics industry. Hazard and Operability Study is widely used in the chemical industry to examine deviations from the design intent and identify potential hazards and operability issues. Incident Response and Post-Incident Analysis, in the IT industry, is used for analyzing and responding to security incidents, with a focus on preventing future occurrences. Hazard Analysis and Critical Control Points is a systematic preventive approach to food safety that identifies, evaluates, and controls hazards throughout the production process. Maximum credible accident analysis assesses and mitigates the most severe accidents that could occur in high-risk industries. For more information on industry-specific methodologies, the interested reader may consult the literature of the relevant industry, as these methodologies are broad and beyond the scope of this paper.

Our review focuses on the historical progress of (hybrid) intelligence failure analysis to identify and classify methodologies and tools used within them. In Industry 4.0, (hybrid) intelligence failure analysis can contribute to improving quality management and automating quality through an improved human cyber-physical experience. Different from the abovementioned reviews, the purpose of our study is to provide a rich and comprehensive understanding of the recent developments in these methodologies from the perspectives of Industry 4.0 and hybrid intelligence, the benefits of making them intelligent, i.e., (augmented) automatic and/or data-driven, and their limitations.

Research methodology

A systematic literature review analyses a particular knowledge domain’s body of literature to provide insights into research and practice and identify research gaps (Thomé et al., 2016). This section discusses our review scope and protocols, defining both our primary and secondary questions, and the criteria for selecting journals and papers to be reviewed. A bibliography analysis of the selected papers is also presented, including distributions by year, affiliation, and journals.

Review scope and protocol

We follow the 8-step literature review methodology of Thomé et al. (2016) to ensure a rigorous literature review of intelligent, i.e., automated/data-driven, failure analysis methodologies for Industry 4.0.

In Step 1, our (hybrid) intelligence failure analysis problem is planned and formulated by identifying the needs, scope, and questions for this research. Our initial need for this literature review comes from a relevant industrial project entitled "assembly quality management using system intelligence" which aims to reduce the quality failures in assembly lines. The trend towards automated and data-driven methodologies in recent years signifies the need for this systematic literature review. Thus, three general failure analysis methodologies, FMEA, RCA, and FTA, are reviewed with respect to tools to make them intelligent and to derive benefits from hybrid intelligence.

Our primary questions are as follows. (i) What are the general failure analysis methodologies and what tools have been used to make them intelligent? (ii) How may these methodologies benefit from hybrid intelligence? (iii) What are the strengths and weaknesses of these methodologies and tools? Our secondary questions are as follows. (i) How intelligent are these tools? (ii) What types of data do they use? Which tools allow a good fusion of human and machine intelligence? (iii) How well do they identify the root causes of failures? (iv) What are the possible future prospects?

Step 2 concerns searching the literature by selecting relevant journals, databases, keywords, and criteria to include or exclude papers. We select the SCOPUS database to scan the relevant papers from 1990 to the first half of 2022. SCOPUS contains all high-quality English publications and covers other databases such as ScienceDirect and IEEE Xplore. A two-level keyword structure is used. The first level retrieves all papers that have either failure mode and effect analysis, FMEA, failure mode and effects and criticality analysis, FMECA, fault tree analysis, FTA, event tree analysis, ETA, root cause analysis, RCA, failure identification, failure analysis, or fault diagnosis in the title, abstract, and/or keywords. The second level limits the papers retrieved by the first-level keywords to those that have either Bayesian network, BN, automated, automatic, automation, smart, intelligence, or data-driven in the title, abstract, and/or keywords.
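The two-level keyword structure can be sketched as a simple filter over bibliographic records. The snippet below is only an illustration of the logic (a record passes if it matches at least one first-level term and at least one second-level term); it is not the SCOPUS query actually used, and the record fields, function names, and sample records are hypothetical.

```python
import re

LEVEL_1 = [
    "failure mode and effect analysis", "fmea",
    "failure mode and effects and criticality analysis", "fmeca",
    "fault tree analysis", "fta", "event tree analysis", "eta",
    "root cause analysis", "rca", "failure identification",
    "failure analysis", "fault diagnosis",
]
LEVEL_2 = [
    "bayesian network", "bn", "automated", "automatic", "automation",
    "smart", "intelligence", "data-driven",
]


def contains_any(text, terms):
    """True if any term occurs in text as a whole word or phrase (case-insensitive)."""
    lowered = text.lower()
    return any(re.search(r"\b" + re.escape(t) + r"\b", lowered) for t in terms)


def passes_two_level_filter(record):
    """Apply level 1, then level 2, to the title, abstract, and keywords."""
    searchable = " ".join(
        record.get(field, "") for field in ("title", "abstract", "keywords")
    )
    return contains_any(searchable, LEVEL_1) and contains_any(searchable, LEVEL_2)


# Hypothetical records used only to exercise the filter.
r1 = {"title": "Automated root cause analysis for assembly lines"}
r2 = {"title": "Fault tree analysis of a pump", "abstract": "A manual study."}
r3 = {"title": "Data-driven pricing"}
print([passes_two_level_filter(r) for r in (r1, r2, r3)])
```

Whole-word matching is used here so that short acronyms such as BN or ETA do not match inside unrelated words; a real database search engine may tokenize and stem terms differently.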

To ensure the scientific rigor of our literature review process, we removed papers that met at least one of the following criteria: (i) publications with overly concise and/or ambiguous information that would make it impossible to re-implement the tools and methodologies described in the paper; (ii) publications in low-level journals, i.e., journals in the third quartile (Q3) or lower in the Scimago Journal & Country Rank; and (iii) papers with subject areas that are irrelevant to our research topic, such as physics and astronomy.

Steps 3 and 4 involve gathering data and evaluating data quality. We download papers and check their sources according to exclusion criteria. Step 5 concerns data analysis. Step 6 focuses on interpreting the data. The final selected papers are analyzed and interpreted in Section Managerial insights, limitations, and future research. Step 7 involves preparing the results and report. Step 8 requires the review to be updated continuously.

Discussion and statistical analysis

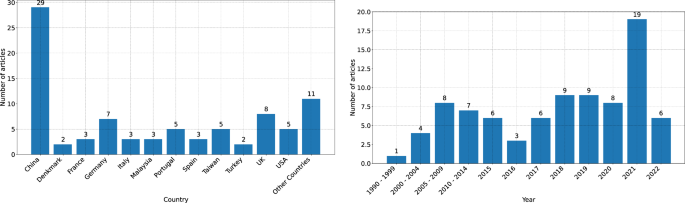

Here is a bibliometric analysis of our literature review. About 15,977 papers were found in our first search. By applying the exclusion criteria, we narrowed the search to 7113 papers. Then, we checked the titles of these 7113 papers, comprising 4359 conference and 2754 journal papers. We downloaded 1,203 papers to read their abstracts and skim their bodies. Then, 1114 low-quality/irrelevant papers were excluded. The remaining 86 high-quality papers were examined for this study.

Distributions of papers by year and affiliation are shown in Fig. 1. In total, 28 countries have contributed. Most affiliations are in advanced countries, including China, Germany, and the UK. Surprisingly, we found no publications from Japan and only five from the USA. Only one paper was published between 1990 and 1999 because of limited data and technology, e.g., sensors and industrial cameras. The slow growth observed between 2000 and 2014 coincides with technological advancement and the emergence of Industry 4.0. Advanced technology and researchers’ focus on Industry 4.0 have led to significant growth every year since 2015. It is worth noting that the 2022 information is incomplete because this research was conducted in the middle of 2022. We expect the number of publications for 2022 to be at least equal to that of 2021.

The distribution of papers by journal is shown in Fig. 2. In total, 58 journals and conferences have contributed. Journals with a focus on production and quality, e.g., International Journal of Production Research, have published most papers. Technology-focused journals, e.g., IEEE Access, have also contributed.

Literature categorization

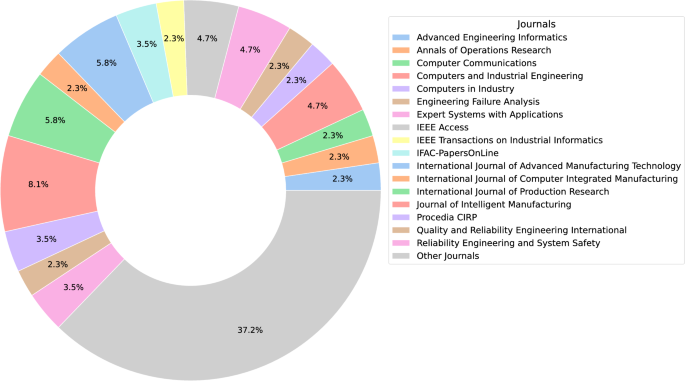

Selected papers are now categorized based on the four general steps of a failure analysis methodology, involving failure structure detection, failure event probabilities detection, failure risk analysis, and outputs. Then, a statistical analysis of these categorizations is provided.

These four steps of a failure analysis methodology are illustrated in Fig. 3. The first two steps deal with input data. In step 1, the failure structure is identified, encompassing all (possible) failures, the failure propagation structure, failure interdependency, and causes and effects. Step 2 involves detecting event probabilities in a failure structure. For example, classical FMEA scores each failure with severity, occurrence, and detection rates.

To analyze failures in a (production) system, data should be collected to identify the failure structure and detect failures. Reactive methodologies, such as RCA, are data-driven and typically gather available data in a system, while proactive methodologies, such as FMEA, are expert-driven and gather data through expert knowledge. However, a (hybrid) intelligence failure analysis methodology should take advantage of both advanced technologies, such as sensors and Internet-enabled machines and tools, and experts to automatically gather required data, combining proactive and reactive approaches, and providing highly reliable analyses and solutions.

In step 3, all input data are processed to determine the associated risk value with each failure, and the most probable causes (usually based on an observed or potential effect). Typically, a main tool, such as Bayesian networks, neural rule-based systems, statistical analysis, or expert analysis, is used to determine root causes, classify failures, and/or rank failures.

Step 4 outputs results that may include failures and sources, reasons behind the sources, and mitigation actions. The output of this tool is post-processed to provide possible solutions and information that is explainable and easy to use for both humans and machines.

Step 1: failure structure

Failure structure identification is the first step in a failure analysis methodology. (Potential) failures, causes, effects, and/or failure interdependency are identified. We categorize the literature to develop a (hybrid) intelligence failure methodology to identify failure structure, causes, effects, interdependencies, and relationships between failures, failures and causes, and failures and effects.

Data

Traditionally, experts have defined failure structures by analyzing causes, effects, and the interdependency of failures. However, recent studies have explored alternative approaches to identifying failure structures, leveraging available data sources such as problem-solving databases, design forms, and process descriptions. Problem-solving databases include quality issue records, maintenance records, failure analysis records, and CBR databases. These records could be stored in structured databases and sheets, or unstructured texts. Design forms may include design FMEA forms, reliability characteristics, and product quality characteristics. Process descriptions may include operations, stations, and key operational characteristics. Moreover, simulation can be used to generate failures, causes, and effects (Snooke & Price, 2012). Design forms and process descriptions are generated by experts, usually for other purposes, and are re-used for failure analysis. Problem-solving databases could be generated by experts, such as previous FMEAs, or by an automated failure analysis methodology, such as automated RCA. Table 1 classifies studies based on the data sources used to identify the failure structure.

Data processing methods

To define failure structure from operational expert-driven data, no specific tool has been used. In the industry, failure structures are typically defined by an expert (or group of experts). When expert-driven or data-driven historical data and/or design forms and process descriptions are available, several approaches have been suggested: ontology-driven algorithms, including heuristics (Sayed & Lohse, 2014; Zhou et al., 2015; Steenwinckel et al., 2018; Xu & Dang, 2023) and SysML modeling language (Hecht & Baum, 2019); process/system decomposition (the operation, the station, and the key characteristics levels) (Zuo et al., 2016; Khorshidi et al., 2015; Zhou et al., 2015); rule-based algorithms that use CBR (Yang et al., 2018; Liu & Ke, 2007; Xu & Dang, 2023; Oliveira et al., 2022, 2021); and FTA/BN modeling from FMEA/expert data (Yang et al., 2022; Steenwinckel et al., 2018; Palluat et al., 2006) and from Petri nets (Yang & Liu, 1998). Rivera Torres et al. (2018) divided a system into components and related failures to each of the components to make a tree of components and failures.

A component-failure matrix is generated by mining unstructured quality problem texts from historical documents such as bills of material and failure analysis records. Apriori algorithms were used to find synonyms in the set of failure modes (Xu et al., 2020). The 8D method is used to describe a failure. Ontology was used to store and retrieve data in a knowledge base CBR system.
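A component-failure matrix of the kind described above can be approximated by counting how often a component term and a failure-mode term appear in the same text record. The sketch below uses plain substring matching with hypothetical vocabularies and records; it is a simplification and does not reproduce the text mining or Apriori-based synonym detection of the cited work.

```python
from collections import Counter


def component_failure_matrix(records, components, failure_modes):
    """Count record-level co-occurrences of component and failure-mode terms.

    Rows follow `components`, columns follow `failure_modes`.
    """
    counts = Counter()
    for text in records:
        lowered = text.lower()
        for comp in components:
            if comp not in lowered:
                continue
            for mode in failure_modes:
                if mode in lowered:
                    counts[(comp, mode)] += 1
    return [[counts[(c, m)] for m in failure_modes] for c in components]


# Hypothetical quality-problem texts and vocabularies.
records = [
    "Bearing showed wear after 2000 h",
    "Gear crack found; bearing wear also noted",
    "Gear wear on tooth flank",
]
components = ["bearing", "gear"]
failure_modes = ["wear", "crack"]
matrix = component_failure_matrix(records, components, failure_modes)
print(matrix)
```

Because co-occurrence is counted per record, the second record also links "bearing" to "crack" even though the text attributes the crack to the gear. This kind of spurious association is one reason expert review or finer-grained text parsing is typically still needed.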

Yang et al. (2022), Leu and Chang (2013), and Waghen and Ouali (2021) have suggested building a BN structure from the FTA model. Wang et al. (2018) have proposed using the fault feature diagram, a fault-labeled transition system based on the Kripke structure, to describe the system behavior. The MASON (manufacturing semantic ontology) has been used to construct the structure of the failure class by Psarommatis and Kiritsis (2022). Teoh and Case (2005) have developed a functional diagram to construct a failure structure between components of a system and to identify causes and effect propagation. Yang et al. (2018) used an FMEA-style CBR to collect failures and search for similarity. They then used CBR to build a BN using a heuristic algorithm.
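One common way to picture the mapping from a fault tree to a BN is to turn each gate into a node whose conditional probability table (CPT) is deterministic: an AND gate fails only when all parents fail, and an OR gate fails when any parent fails. The sketch below follows this general idea under the assumption of independent root events; it is not the specific procedure of any of the cited studies, and the function names are hypothetical.

```python
from itertools import product


def gate_cpt(gate, n_inputs):
    """Deterministic CPT: maps each tuple of parent states to P(gate output fails)."""
    if gate == "AND":
        combine = all
    elif gate == "OR":
        combine = any
    else:
        raise ValueError(f"unsupported gate: {gate}")
    return {
        states: 1.0 if combine(states) else 0.0
        for states in product((False, True), repeat=n_inputs)
    }


def marginal_failure(cpt, parent_fail_probs):
    """P(gate output fails), summing over parent states (independent parents)."""
    total = 0.0
    for states, p_fail in cpt.items():
        weight = 1.0
        for failed, p in zip(states, parent_fail_probs):
            weight *= p if failed else 1.0 - p
        total += weight * p_fail
    return total


or_cpt = gate_cpt("OR", 2)
and_cpt = gate_cpt("AND", 2)
print(marginal_failure(or_cpt, [0.1, 0.2]))   # P(at least one parent fails)
print(marginal_failure(and_cpt, [0.1, 0.2]))  # P(both parents fail)
```

Once the structure is expressed as CPTs, the 0/1 entries can be relaxed to intermediate probabilities, which is one way a BN can represent uncertain or noisy gate behavior that a classical fault tree cannot.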

Step 2: failure detection

Failure detection data are gathered to determine the strength of relationships among failures, causes, and effects.

Data

Failure detection can be based on operational or historical expert-driven data, as well as data-driven historical and/or real-time data obtained from sensors. Such data can come from a variety of sources, including design and control parameters (such as machine age or workpiece geometry), state variables (such as power demand), performance criteria (such as process time or acoustic emission), and internal/external influencing factors (such as environmental conditions) (Filz et al., 2021b; Dey & Stori, 2005). These data are usually used to determine occurrence probability of failures. To determine the severity and detection probabilities of failures, conditional severity utility data/tables may be used (Lee, 2001). Simulation can also be used to determine occurrence, severity, and detection (Price & Taylor, 2002). Table 2 summarizes types of data that are usually used to detect failures in the literature.

Data processing methods

Processing data refers to the transformation of raw data into meaningful information. A data processing tool is needed that provides accurate and complete information about the system and relationships between data and potential failures.

First, data from different sources should be pre-processed. In a data pre-processing step, data is cleaned, edited, reduced, or wrangled to ensure or enhance performance, such as replacing a missing value with the mean value of the entire column (Filz et al., 2021b; Schuh et al., 2021; Zhang et al., 2023; Musumeci et al., 2020; Jiao et al., 2020; Yang et al., 2015; Chien et al., 2017).
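The mean-imputation example mentioned above can be sketched in a few lines of Python. Missing values are represented by `None`, and the data layout (a list of rows) and the function name are assumptions made for illustration.

```python
from statistics import mean


def impute_column_mean(rows):
    """Replace None entries with the mean of the observed values in the same column.

    A column with no observed values is left unchanged.
    """
    n_cols = len(rows[0])
    col_means = []
    for j in range(n_cols):
        observed = [row[j] for row in rows if row[j] is not None]
        col_means.append(mean(observed) if observed else None)
    return [
        [col_means[j] if row[j] is None else row[j] for j in range(n_cols)]
        for row in rows
    ]


# Hypothetical sensor readings with two missing values.
rows = [[1.0, 10.0], [None, 20.0], [3.0, None]]
filled = impute_column_mean(rows)
print(filled)
```

Mean imputation is simple but shrinks the variance of the affected column, so it is usually reasonable only when few values are missing.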

Data then may need to be processed according to the tools used in Step 3. Common data processing methods between all tools include data normalization using the min-max method (Filz et al., 2021b; Musumeci et al., 2020) and other methods (Yang et al., 2018; Schuh et al., 2021; Jiao et al., 2020; Sariyer et al., 2021; Chien et al., 2017).
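Min-max normalization rescales each value x to (x - min) / (max - min), so that the smallest observation maps to 0 and the largest to 1. A minimal sketch follows; returning zeros for a constant column is a convention chosen here, not something prescribed by the cited studies, and the sample values are hypothetical.

```python
def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant column: no spread to normalize (assumed convention).
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical process temperatures.
scaled = min_max_scale([20.0, 25.0, 30.0, 40.0])
print(scaled)
```

Because the scaling depends on the observed minimum and maximum, a single outlier can compress all other values into a narrow band, which is worth checking before feeding the data to the analysis tool in Step 3.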

Feature selection/extraction algorithms have been used to select the most important features of data (Filz et al., 2021b; Xu & Dang, 2020; Mazzoleni et al., 2017; Duan et al., 2020; Schuh et al., 2021; Zhang et al., 2023; Musumeci et al., 2020; Yang et al., 2015; Sariyer et al., 2021).

For BN-based failure analysis, maximum entropy theory is proposed to calculate failure probabilities from expert-based data (Rastayesh et al., 2019). Fuzzy methods have also been used to convert linguistic terms to occurrence probabilities (Yucesan et al., 2021; Wan et al., 2019; Nie et al., 2019; Nepal & Yadav, 2015; Ma & Wu, 2020; Li et al., 2013; Duan et al., 2020). A Euclidean distance-based similarity measure (Chang et al., 2015), a fuzzy rule base RPN model (Tay et al., 2015), heuristic algorithms (Brahim et al., 2019; Dey & Stori, 2005; Yang et al., 2022), and a fuzzy probability function (Khorshidi et al., 2015) have been suggested to build failure probabilities.
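To illustrate how linguistic terms can be turned into numbers, the sketch below represents each term as a triangular fuzzy number, averages the judgments of several experts, and defuzzifies the result with the centroid of the triangle. The linguistic scale, its numeric values, and the interpretation of the crisp score as an occurrence probability are assumptions for illustration; the cited studies may use different membership functions and conversion formulas.

```python
# Hypothetical linguistic scale: term -> triangular fuzzy number (a, b, c).
SCALE = {
    "very low": (0.0, 0.0, 0.1),
    "low": (0.0, 0.1, 0.3),
    "medium": (0.3, 0.5, 0.7),
    "high": (0.7, 0.9, 1.0),
    "very high": (0.9, 1.0, 1.0),
}


def aggregate(terms):
    """Average the expert judgments vertex by vertex."""
    triangles = [SCALE[t] for t in terms]
    n = len(triangles)
    return tuple(sum(tri[i] for tri in triangles) / n for i in range(3))


def centroid(triangle):
    """Centroid defuzzification of a triangular fuzzy number."""
    a, b, c = triangle
    return (a + b + c) / 3.0


# Three hypothetical experts rate the occurrence of one failure.
crisp = centroid(aggregate(["low", "medium", "medium"]))
print(round(crisp, 4))
```

Equal expert weights are assumed here; weighting experts by experience would only change the averaging step.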

Failure analysis data may be incomplete, inaccurate, imprecise, and limited. Therefore, several studies have used tools to deal with uncertainty in data. The most commonly used methods are fuzzy FMEA (Yang et al., 2022; Nepal & Yadav, 2015; Ma & Wu, 2020), fuzzy BN (Yucesan et al., 2021; Wan et al., 2019; Nie et al., 2019), fuzzy MCDM (Yucesan et al., 2021; Nie et al., 2019; Nepal & Yadav, 2015), fuzzy neural network (Tay et al., 2015; Palluat et al., 2006), and fuzzy evidential reasoning and Petri nets (Shi et al., 2020).

Step 3: analysis

A failure analysis tool is essential for conducting any failure analysis. Table 3 categorizes various data-driven tools, such as BNs, Clustering/Classification, Rule-based Reasoning, and other tools used in the literature and the aspects they support.

BNs model probabilistic relationships among failure causes, modes, and effects using directed acyclic graphs and conditional probabilities. Pieces of evidence, i.e., known variables, are propagated through the graph to evaluate unobserved variables (Cai et al., 2017). For example, Rastayesh et al. (2019) applied BNs for FMEA to perform a risk analysis of a proton exchange membrane fuel cell. Various elements and levels of the system were identified along with possible routes of failure, including failure causes, modes, and effects. A BN was constructed to perform the failure analysis. Other examples of BN applications include an assembly system (Sayed & Lohse, 2014), kitchen equipment manufacturing (Yucesan et al., 2021), and Auxiliary Power Unit (APU) fault isolation (Yang et al., 2015).
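
To illustrate how evidence propagates in such a network, the sketch below builds a three-node chain (cause, failure mode, effect) and computes the posterior probability of the cause once the effect is observed, using exact inference by enumeration. All probabilities are invented for illustration and do not come from any cited study.

```python
from itertools import product

# Hypothetical conditional probability tables for the chain cause -> mode -> effect.
P_CAUSE = 0.10                                    # P(cause present)
P_MODE_GIVEN_CAUSE = {True: 0.80, False: 0.05}    # P(mode present | cause)
P_EFFECT_GIVEN_MODE = {True: 0.90, False: 0.02}   # P(effect present | mode)


def bern(p, value):
    """Probability that a binary variable with P(True) = p takes `value`."""
    return p if value else 1.0 - p


def joint(cause, mode, effect):
    """Joint probability given by the chain factorization of the network."""
    return (bern(P_CAUSE, cause)
            * bern(P_MODE_GIVEN_CAUSE[cause], mode)
            * bern(P_EFFECT_GIVEN_MODE[mode], effect))


def posterior_cause_given_effect(effect=True):
    """P(cause present | effect), summing out the unobserved failure mode."""
    numerator = sum(joint(True, m, effect) for m in (True, False))
    evidence = sum(joint(c, m, effect)
                   for c, m in product((True, False), repeat=2))
    return numerator / evidence


posterior = posterior_cause_given_effect(True)
```

With these assumed numbers, observing the effect raises the probability of the cause from its prior of 0.10 to roughly 0.56. Enumeration is practical only for small networks; larger models typically rely on dedicated inference algorithms.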

Classification assigns predefined labels to input data based on learned patterns, whereas clustering organizes data into groups based on similarities. Neural networks are commonly used for failure classification and have been employed in most studies. Hence, we separated these studies from those that used other clustering/classification tools. Neural networks consist of layers of interconnected nodes, with an input layer receiving data, one or more hidden layers for processing, and an output layer providing the final classification (Jiang et al., 2024). For example, Ma and Wu (2020) applied neural networks to assess the quality of 311 apartments in Shanghai, China, for FMEA. The input included various APIs collected for the apartments, and the output was the risk rate of each apartment. In another study, Ma et al. (2021) applied neural networks for RCA to predict the root causes of multiple quality problems in an automobile factory. Other examples of neural network applications include industrial valve manufacturing (Pang et al., 2021), complex cyber–physical systems (Liu et al., 2021), and an electronic module designed for use in a medical device (Psarommatis & Kiritsis, 2022).

Other clustering/classification tools include evolving tree (Chang et al., 2015), reinforced concrete columns (Mangalathu et al., 2020), K-means, random forest algorithms (Xu & Dang, 2020; Chien et al., 2017; Oliveira et al., 2022, 2021), contrasting clusters (Zhang et al., 2023), K-nearest neighbors (Ma et al., 2021), self-organizing maps (Gómez-Andrades et al., 2015), and Naive Bayes (Schuh et al., 2021; Yang et al., 2015).

Rule-based reasoning represents knowledge in the form of "if-then" rules. It involves a knowledge base containing the rules and a reasoning engine that applies these rules to incoming data or situations. For instance, Jacobo et al. (2007) utilized rule-based reasoning for analyzing failures in mechanical components. This approach serves as a knowledgeable assistant, offering guidance throughout the failure analysis process to less experienced users with foundational knowledge in materials science and related engineering fields. The application of rule-based reasoning to wind turbine FMEA was studied by Zhou et al. (2015).
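
The split between a knowledge base and a reasoning engine can be sketched with a small forward-chaining loop. The rules and fact names below are hypothetical examples written for illustration; they are not the rules used by Jacobo et al. (2007) or Zhou et al. (2015).

```python
# Knowledge base: each rule is (set of conditions, conclusion). All names are hypothetical.
RULES = [
    ({"fracture_surface_beach_marks"}, "fatigue_suspected"),
    ({"fatigue_suspected", "cyclic_loading"}, "fatigue_failure"),
    ({"surface_pitting", "corrosive_environment"}, "corrosion_failure"),
]


def infer(facts, rules=RULES):
    """Reasoning engine: fire rules repeatedly until no new fact is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


observations = {"fracture_surface_beach_marks", "cyclic_loading"}
conclusions = infer(observations) - observations
```

Because rules are stored as plain data, a domain expert can add or correct a rule without touching the engine, which is one reason such systems are considered easy for humans to inspect.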

Other tools include gradient-boosted trees, logistic regression (Filz et al., 2021b), CBR (Tönnes, 2018; Camarillo et al., 2018; Jacobo et al., 2007), analyzing sensitivities of the machining operation by the stream of variations and errors probability distribution determination (Zuo et al., 2016), causal reasoning (Teoh & Case, 2005), probabilistic Boolean networks with interventions (Rivera Torres et al., 2018), principal component analysis (PCA) (Duan et al., 2020; Zhang et al., 2023; Jiao et al., 2020; Sun et al., 2021), factor ranking algorithms (Oliveira et al., 2022, 2021), heuristics and/or new frameworks (Camarillo et al., 2018; Yang et al., 2009, 2020; Snooke & Price, 2012; Xu & Dang, 2023; Rokach & Hutter, 2012; Wang et al., 2018; Hecht & Baum, 2019; Yang & Liu, 1998; Liu & Ke, 2007), and mathematical optimization methods (Khorshidi et al., 2015).

These tools may be integrated with other tools, including sequential state switching and artificial anomaly association in a neural network (Liu et al., 2021), MCDM/optimization (Yucesan et al., 2021; Jomthanachai et al., 2021; Ma et al., 2021; Sun et al., 2021), game theory (Mangalathu et al., 2020), fuzzy evidential reasoning and Petri nets (Shi et al., 2020), and maximum spanning tree, conditional Granger causality, and multivariate time series (Chen et al., 2018).

Step 4: output

A data analysis process can benefit not only humans but also machines and tools in a hybrid intelligence failure analysis methodology. Therefore, the output information should be carefully designed. Table 4 ranks the output data, and the list of studies for each output is available in Online Appendix EC.1. Most studies have focused on automatically identifying the root causes of failures, which is the primary objective of a failure analysis methodology. In addition, researchers have also focused on failure occurrence rating, ranking, and classification. While automatically finding the root causes of failures is important, a hybrid intelligence failure analysis process needs to interpret the related data and information and automatically provide mitigation actions for both operators and machines. However, only a few studies have proposed tools to automatically find possible mitigation actions, usually based on CBR databases and only readable for humans. Therefore, future studies may focus on finding possible automated mitigation actions for failures and developing a quality inspection strategy.

Data post-processing

A data post-processing step transforms data from the main tool into readable, actionable, and useful information for both humans and machines. Adapting solutions from similar failures in a database (i.e., CBR) to propose a solution for a detected failure has been proposed by Tönnes (2018), Camarillo et al. (2018), Hecht and Baum (2019), Jacobo et al. (2007), Liu and Ke (2007) and Ma et al. (2021). Simulation to analyze different scenarios (Psarommatis & Kiritsis, 2022; Jomthanachai et al., 2021; Chien et al., 2017; Oliveira et al., 2022), mathematical optimization model (Khorshidi et al., 2015; Ma et al., 2021) and self-organizing map (SOM) neural network (Chang et al., 2017) to automatically select the best corrective action have also been proposed. Also, fuzzy rule-based systems to obtain RPN (Nepal & Yadav, 2015) and visualisation (Xu & Dang, 2020; Yang et al., 2009) are discussed.

Discussion and statistical analysis

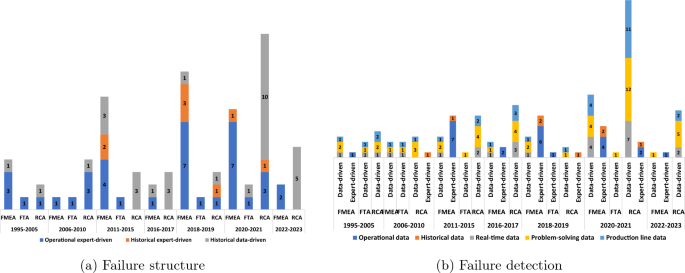

The statistical analysis of the paper reveals that most FMEA-based studies rely solely on expert-based information to construct failure structures, while RCA-based papers tend to use a hybrid of problem-solving and system-related data. This is depicted in Fig. 4, which shows the distribution of papers by data used over time. FMEA is used to identify potential failures when there is not enough data available to construct a failure structure based on system-based data. The trend shows some effort to use data, instead of expert knowledge, to construct failure structures, using data from similar products/processes. RCA and FTA are reactive methodologies that analyze more information than FMEA. Advances in data mining techniques, along with increased data availability, have led to a growing trend of using data to construct failure structures. For a comprehensive and reliable intelligence failure analysis, a combination of all kinds of data is necessary. It is worth noting that Waghen and Ouali (2021) proposed a heuristic method to augment failure structure identification that uses expert and historical data. They suggested engaging expert knowledge when historical data are insufficient to identify a failure structure and/or the reliability of a failure structure is low. Other studies have solely focused on failure identification through expert knowledge or historical data, without considering the potential benefits of combining different types of data.

While most FMEA-based papers use only expert-based data to determine failure probability, there is a significant growth in the utilization of problem-solving data and a hybrid of problem-solving and system-related data, i.e., production line data, over time. RCA and FTA usually tend to use more problem-solving and system-related data. Moreover, Fig. 4 and Fig. 5 show that the literature on RCA has been growing in recent years, while the trend for FMEA has remained the same over time. We found that Filz et al. (2021b), Mazzoleni et al. (2017), Ma and Wu (2020), and Yang et al. (2015) improved FMEA to use a combination of expert-based, problem-solving, and system-related data to determine potential failures and their causes. They analyzed these data using deep learning, classification, and neural networks, respectively. Duan et al. (2020) and Ma et al. (2021) tried to use the benefits of both expert-based data and problem-solving and system-related data in the RCA context. They analyzed the root cause of failures using neural networks.

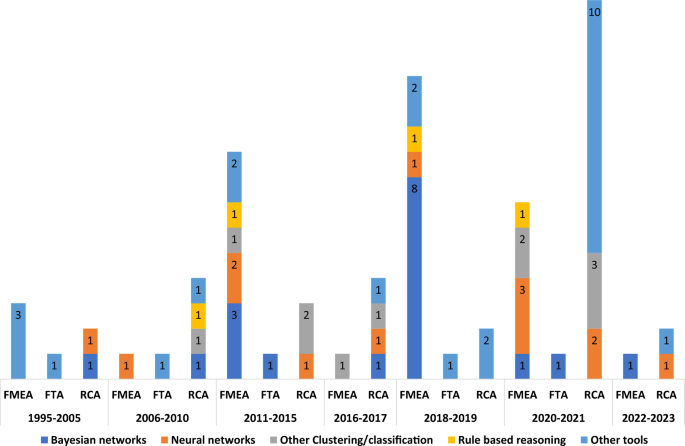

The distribution of papers by the tools used is shown in Fig. 5. BNs have been mainly used within the context of FMEA methodologies, with a growing trend in recent years, while RCA researchers have used them less frequently. BNs have the potential to model failure propagation, multi-failure scenarios, and solution analysis to propose potential solutions. However, all of the studies reviewed in this paper only used BNs to identify the root causes of failures. BNs offer a clear graphical representation of failures, their causes, and their effects, which facilitates the interpretation of results by humans. They also provide an easy way for humans to intervene, analyze the sensitivity of results, and correct processed data if it appears unrealistic. BNs are a well-developed tool and can work with expert-based, historical, and system-based data, even when data is fuzzy or limited. Developing methodologies that leverage the advantages of BNs seems promising for FMEA, RCA, and FTA.

RCA and FTA have relied on various tools over time, such as PCA and regression, with no trend toward a specific tool, owing to their need for a large amount of data. However, these methods have limitations in incorporating both human and machine intelligence and mostly rely on machine intelligence. Although neural networks and classification algorithms have gained attention in both FMEA and RCA during the last few years, they are black boxes and difficult for humans to modify. Also, classification algorithms typically do not address failure propagation or multi-failure modes. BNs offer a promising alternative, as they can model failure propagation and multiple failures and provide a clear graphical representation of failures, causes, and effects. Furthermore, BNs can incorporate both expert-based and historical data, making them well-suited for FMEA, RCA, and FTA. Therefore, developing methodologies that fully leverage the benefits of BNs in these domains would be valuable.

Managerial insights, limitations, and future research

In this section, we discuss managerial insights, limitations, and future research related to different aspects of a hybrid intelligence failure analysis methodology. The aim is to assist researchers in focusing on relevant recommendations. Section Applications and complexity delves into the applications and complexity of each study, and provides examples for each tool. Section Levels of automation/intelligence presents the levels of intelligence for a failure analysis methodology. Section Introducing knowledge into tools discusses how knowledge is introduced into the failure analysis tools for an effective failure analysis. A more in-depth discussion of hybrid intelligence is in Section Hybrid intelligence. The last three sections provide insights into failure propagation and correlation, hybrid methodologies, and other areas of future research.

Applications and complexity

Intelligent FMEA, RCA, and FTA have been applied to various applications, including production quality management, computer systems, reliability and safety, chemical systems, and others. Table 5 presents the distribution of reviewed papers by application. The list of studies per application is available in Online Appendix EC.2. Production quality management has been the most common application of intelligent failure analysis methodologies due to the significant costs associated with quality assurance. Smart failure analysis methodologies have also been impacted by the increased use of sensors and IoT to collect precise data from machines, tools, operators, and stations, as well as powerful computers to analyze the data. Computer systems failure analysis and system reliability and safety rank second, while chemical systems rank third, as these systems often require specific methodologies, such as hazard and operability analysis.

We checked every paper's dataset to find information about the complexity of its case study and the reasons behind its good results, to help readers select a study validated on a large set of data. Enriched datasets of problem-solving data are used by Xu et al. (2020), Du et al. (2012), Oliveira et al. (2021), Gómez-Andrades et al. (2015), Leu and Chang (2013), Price and Taylor (2002), Sariyer et al. (2021), Gomez-Andrades et al. (2016) and Xu and Dang (2023). Enriched datasets of historical problem-solving and sensor data are used by Filz et al. (2021b), Sun et al. (2021), Mazzoleni et al. (2017), Hireche et al. (2018), Yang et al. (2015), Demirbaga et al. (2021), Waghen and Ouali (2021), Zhang et al. (2023), and Oliveira et al. (2022). Data from the system and processes are used by Teoh and Case (2005), Ma et al. (2021), Schuh et al. (2021), and Waghen and Ouali (2021). Other studies demonstrated their methodology on a small problem.

Levels of automation/intelligence

Failure analysis intelligence can be divided into five levels based on the data used. Level 1 involves analyzing failures using expert-based data with the use of intelligence tools. This level can be further improved by incorporating fuzzy-based tools, such as fuzzy BNs, fuzzy neural networks, and fuzzy rule-based systems. If the amount of historical data can be increased over time, we suggest using BNs in a heuristic-based algorithm, as they have the capability to work with all possible data, resulting in fewer modifications in the failure analysis methodology over time. Good examples for Level 1 include Yucesan et al. (2021) and Brahim et al. (2019).

Level 2 involves analyzing failures using experts to identify failure structures and problem-solving and system-related data to determine failure probabilities. This level can be used by a professional team who can correctly and completely identify the failure structure. It can also be used by those who work with variable structures where updating the structure requires a lot of data modification. Identifying failure structures and analyzing failures are both automated at Level 3. This level is the most applicable when a good amount of data is available. BNs, classification algorithms, and neural networks are among the best tools to analyze failures within RCA, FMEA, and FTA methodologies. Studies such as Filz et al. (2021b), Zuo et al. (2016), Dey and Stori (2005), Mangalathu et al. (2020), Yang et al. (2015) and Ma et al. (2021) are good examples for Levels 2 and 3.

In Level 4, mitigation actions are also determined automatically. This level represents full automation of failure analysis. BNs are among the few tools that can encompass all steps of failure analysis. As such, we suggest using them. BNs can use CBR databases together with system-based data to provide possible corrective actions. Tönnes (2018), Zuo et al. (2016) and Hecht and Baum (2019) are among the good studies for Level 4. Chang et al. (2017) focused on automating and visualizing corrective actions using a self-organizing map (SOM) neural network in an FMEA methodology. Future research should concentrate on the development of an automated FMEA that dynamically updates the current RPN (Risk Priority Number). This can aid in predicting failures in parts or components of a system using a "Live RPN." The predictive capability of such a tool can be utilized to optimize the overall system. It enables the transformation of a manufacturing system into a self-controlling system, allowing adjustments based on current parameters (Filz et al., 2021b).
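
In conventional FMEA, the RPN is the product of the severity, occurrence, and detection ratings, each typically given on a 1 to 10 scale. One possible reading of a "Live RPN" is to recompute the occurrence rating from current failure counts while severity and detection stay fixed. The sketch below follows that reading; the threshold table and function names are assumptions made for illustration and are not the method of Filz et al. (2021b).

```python
from bisect import bisect_right

# Hypothetical failure-rate thresholds separating occurrence ratings 1..10.
OCCURRENCE_THRESHOLDS = [1e-6, 1e-5, 1e-4, 5e-4, 1e-3, 5e-3, 1e-2, 5e-2, 1e-1]


def occurrence_rating(failures, units):
    """Map an observed failure rate to an occurrence rating between 1 and 10."""
    rate = failures / units
    return bisect_right(OCCURRENCE_THRESHOLDS, rate) + 1


def rpn(severity, occurrence, detection):
    """Risk Priority Number: severity x occurrence x detection."""
    for rating in (severity, occurrence, detection):
        if not 1 <= rating <= 10:
            raise ValueError("ratings must lie between 1 and 10")
    return severity * occurrence * detection


# Hypothetical live update: 3 failures observed in the last 1000 produced units.
live_occurrence = occurrence_rating(failures=3, units=1000)
live_rpn = rpn(severity=7, occurrence=live_occurrence, detection=4)
```

Calling `occurrence_rating` on a rolling window of production data would let the RPN follow the current state of the line instead of a value fixed at design time.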

Level 5 is a hybrid intelligence approach to failure analysis that encompasses all other levels and can be implemented within FMEA, RCA, and FTA methodologies when a limited amount of historical and system-based data is available until a comprehensive CBR database is built. BNs provide a good graphical representation and can work with all possible data types. The advantages of BNs are significant enough to be suggested for hybrid intelligence failure analysis. However, we did not find any comprehensive study for this level. A combination of studies that proposed methods to use integrated expert-based, problem-solving, and system-based data, such as Waghen and Ouali (2021) and Filz et al. (2021b), is suggested. Nonetheless, this level remains open and needs to be the focus of future research by scholars. To facilitate the implementation of hybrid intelligence failure analysis, a user-friendly interface is crucial for operators to interact with. Several studies have proposed user-interface applications for this purpose (Chan & McNaught, 2008; Camarillo et al., 2018; Li et al., 2013; Jacobo et al., 2007; Yang et al., 2009, 2020; Demirbaga et al., 2021; Snooke & Price, 2012; Palluat et al., 2006).

Introducing knowledge into tools

In this section, we analyze which types of knowledge, expert-driven, data-driven, or a hybrid of both, are usually used with which tools and what the implications are for providing insights on suitable tools for hybrid intelligence failure analyses.

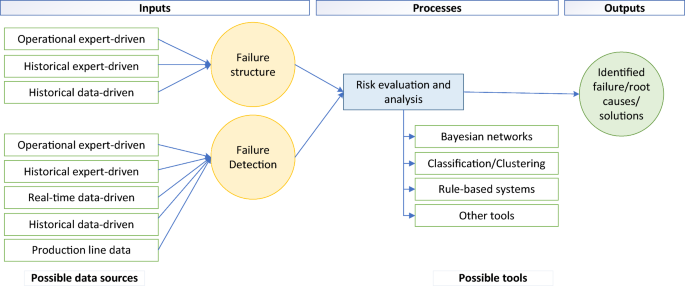

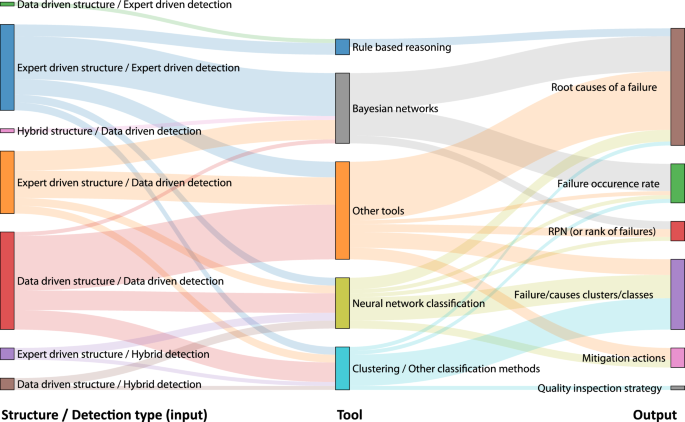

Figure 6 shows the distribution of literature based on the input data, tools, and outputs (four general steps of a failure analysis methodology in Fig. 3). The first column of nodes shows various combinations of types of knowledge, expert-driven, data-driven, or a hybrid of both, that are usually used in the literature to identify the structure of failure and to detect the probability of failures. The second column of nodes shows various tools that are used to analyze the failure. The third column of nodes shows outputs of a failure analysis. The number of studies with each particular focus is shown by the thickness of an arrow. Details are in Appendix EC.1.

The following studies have tried to introduce knowledge and data from expert- and data-based sources to a failure analysis methodology. Filz et al. (2021b) utilized expert knowledge to identify the structure of failure, the components involved, and the necessary sensors to be used. They then employed sensors to capture data and leveraged problem-solving data from the recorded expert archive to identify failures in a deep learning model. Similarly, Musumeci et al. (2020) used supervised algorithms to classify failures. Mazzoleni et al. (2017) used data from sensors to select the most effective features related to a failure, and subsequently employed sensor data and failure expert datasets within a gradient boosting tree algorithm to identify the possibility of the failure. Duan et al. (2020) used data from different sources in a similar way for a neural network to identify the root cause of a failure. Ma and Wu (2020) utilized expert knowledge to identify failures in construction projects. Subsequently, expert datasets were employed in conjunction with project performance indices to predict the possibility of a failure and determine the root cause of the failure using a neural network tool.

Hireche et al. (2018) and Yang et al. (2015) gathered data from sensors to determine the conditions of each failure/component node. Then, a BN was used to identify the risks and causes. A multi-level tree was developed by Waghen and Ouali (2021). Each level contains a solution, pattern, and condition level. Solutions are retrieved from a historical failure database as a combination of certain patterns. The pattern in each problem is identified and related to the solution using a supervised machine-learning tool. Each level is linked to the next level until the root cause of a failure is correctly identified.

Other useful tips for introducing knowledge from different sources to a failure analysis methodology can be found in the following studies. Zuo et al. (2016) divided a multi-operation machining process into operation, station, and key characteristics levels. Stream of variations (SoV) was used to evaluate the sensitivities of the machining operations level by level. Results were used to find the sources affecting the quality. Distribution techniques for each quality precision were chosen using multi-objective optimization. Dey and Stori (2005) used a message-passing method (Pearl, 1988) to update a BN using data from sensors to estimate the condition of the system and update the CPTs, with each sensor output considered as a node in the BN. Chan and McNaught (2008) also used sensor data to change the probabilities in a BN. A user interface was also developed to make inferences and present the results to operators.

Rokach and Hutter (2012) used the sequence of machines and a commonality graph of steps and failure causes data to cluster failures to find commonalities between them. A GO methodology is used by Liu et al. (2019b) to model the system and a heuristic is used to construct BN structure and probabilities from the GO methodology model. Teoh and Case (2005) developed an objective-oriented framework that considers conceptual design information. A hierarchy of components, an assembly tree, and a functional diagram are built to capture data from processes and feed it to FMEA. Bhardwaj et al. (2022) used historical data from a similar system to estimate failure detection probabilities. Hecht and Baum (2019) used SysML to describe components and failures.

Zhou et al. (2015) used a tree of a system. Two classes of knowledge, shallow knowledge and deep knowledge, were gathered to generate rules for failure analysis. The former indicates the experiential knowledge of domain experts, and the latter is the knowledge about the structure and basic principle of the diagnosis system. Liu and Ke (2007) used CBR to find similar problems and solutions, text mining to find key concepts of the failure in the historical failure record texts, and rule mining to find hidden patterns among system features and failures. Filz et al. (2021a) gathered process parameters after each station using a quality check station. Then a self-organizing map was used to find failure propagation and cause and effect. Ma et al. (2021) used data from the system to determine features of problems, products, and operators. Data from problem-solving databases were used to find new failures and classify them using the features and historical data.

Psarommatis and Kiritsis (2022) developed a methodology that uses data-driven and knowledge-based approaches, with an ontology based on the MASON ontology to describe the production domain and enrich the available data. Wang et al. (2018) developed a data acquisition system including monitor, sensor, and filter modules. A fault diagram models failure propagation. They extended the Kripke structure by proposing the feature-labeled transition system, which is used to distinguish the behavior of the transition relationship by adding a signature to the transition relationship.

This section highlights that in the realm of failure analysis, a majority of research papers have utilized a hybrid approach, combining expert and data knowledge for tasks such as failure detection, classification, and feature selection. However, to achieve real-time failure analysis, a more effective integration of these two sources is crucial. This integration should enable operators and engineers to provide timely input to the system and observe immediate results. Furthermore, only a limited number of studies have specifically focused on the identification of failure structures using either data or a hybrid of expert and data knowledge.

The use of BNs has emerged as a highly promising approach for achieving real-time input and structure identification in the field of failure analysis. By leveraging both expert knowledge and data sources, BNs have the capability to effectively incorporate expert knowledge as constraints within structure identification algorithms. Unlike traditional classification algorithms that are primarily designed for continuous data, BNs are versatile in handling both discrete and continuous data types. Moreover, BNs possess several strengths that make them particularly suitable for failure analysis. They excel at performing real-time inferences, engaging in counterfactual reasoning, and effectively managing confounding factors. Given these advantages, it is essential to allocate more attention to the application of BNs in hybrid intelligence failure analysis. This involves further exploration of their capabilities and conducting comparative analyses with other tools to assess their effectiveness in various scenarios. By focusing on BNs and conducting comprehensive evaluations, researchers can enhance the understanding and adoption of these powerful tools for improved failure analysis in real-time settings.

Hybrid intelligence

A collaborative failure analysis methodology is needed, in which artificial intelligence tools, machines, and humans can communicate. While hybrid intelligence has gained attention in various fields, literature on the subject for failure analysis is still limited. For example, Piller et al. (2022) discussed methods to enhance productivity in manufacturing using hybrid intelligence. They explored considerations such as task allocation between humans and machines and the degree of machine intelligence integrated into manufacturing processes. Petrescu and Krishen (2023) and references within have delved into the benefits and future directions of hybrid intelligence for marketing analytics. Mirbabaie et al. (2021) reviewed challenges associated with hybrid intelligence, focusing particularly on conversational agents in hospital settings. Ye et al. (2022) developed a parallel cognition model. This model draws on both a psychological model and user behavioral data to adaptively learn an individual’s cognitive knowledge. Lee et al. (2020) combined a data-driven prediction model with a rule-based system to benefit from the combination of human and machine intelligence for personalized rehabilitation assessment.

An artificial intelligence tool should not only provide its final results but also provide its reasoning. A human can then analyze the tool's reasoning through a user-interface application and correct possible mistakes instantly and effortlessly. To enable this capability, the use of a white-box artificial intelligence tool, such as Bayesian networks, is essential. Explainable AI aids in comprehending and trusting the decision-making process of the hybrid intelligence system by providing the reasoning behind it (Confalonieri et al., 2021). Moreover, a machine should be able to interpret and implement the solutions of an artificial intelligence tool and/or a human. Artificial intelligence tools, machines, and humans can learn from mistakes (Correia et al., 2023).

To fully exploit the complementarity in human–machine collaborations and effectively utilize the strengths of both, it is important to recognize and understand their roles, limitations, and capabilities in the context of failure analysis. Future research should focus on developing a clear plan for their teamwork and joint actions, including determining the optimal sensor types and locations, quality inspection stations, and human/machine analysis processes. In other words, the question of how to design a decision support system that integrates both human knowledge and machine intelligence with respect to quality management should be answered. Additionally, tools should be developed to propose possible mitigation actions based on the unique characteristics of the system, environment, humans, and machines. To achieve this, system-related data along with CBR data can be analyzed to find potential mitigation actions.

A general framework for human–machine fusion could involve the following steps: identifying applicable human knowledge and machine data for the problem, determining machine intelligence tools that facilitate the integration of human–machine elements like BNs, identifying the suitable points in the decision-making process to combine human knowledge and machine intelligence effectively, designing the user interface, and incorporating online learning using input from human knowledge (Jarrahi et al., 2022). However, human–machine fusion is not an easy task due to the complexity of human–machine interaction, the need for effective and online methods to work with both human and machine data, and the challenge of online learning from human knowledge. For instance, while ChatGPT interacts well with humans, it currently does not update its knowledge using human knowledge input for future cases (Dellermann et al., 2019; Correia et al., 2023).

Failure propagation and correlation

Most FMEA papers concentrated on analyzing failures in individual products, processes, or machines. It is essential to acknowledge that production processes and machines are interconnected, leading to the correlation and propagation of failures among them. Consequently, it becomes crucial to address the challenge of analyzing failures in multiple machines. To effectively tackle this issue, a holistic approach is necessary. Rather than focusing solely on individual machines, analysts should take a broader perspective and consider the entire production system to identify the interdependencies and interactions among different machines, multiple processes, and within the system.

For an intelligence failure analysis, it is necessary to exploit detailed system-related data to carefully and comprehensively identify the relations between different parts of a system, product, and/or process. Some papers have suggested methods to identify failure propagation and correlation (Wang et al., 2021; Zhu et al., 2021; Chen et al., 2017). They usually proposed methods to analyze correlations only between failures or risk criteria using MCDM or statistical methods. However, an intelligence failure analysis should go beyond this and identify failure propagation and correlation among parts of a system.

In the literature, Chen and Jiao (2017) proposed finite state machine (FSM) theory to model the interactive behaviors between the components, constructing the transition process of fault propagation through the extraction of the state, input, output, and state function of the component. Zuo et al. (2016) used SoV to model propagation of variations from station to station and operation to operation. A propagation from one station (operation) to the next station (operation) was modeled using a regression-like formula. Ament and Goch (2001) used quality check data after each station to train a neural network for failure propagation and estimated the relationships between failures in stations using a regression model to find patterns in quality check data. Ma et al. (2021) used patterns in data to classify failures and identify causes.
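
The station-to-station propagation idea can be sketched with a simple linear recursion in which the deviation leaving a station is a weighted carry-over of the incoming deviation plus the station's own error contribution. This scalar form and all coefficients are assumptions chosen for illustration; they are not the formula or data of Zuo et al. (2016).

```python
def propagate(initial_deviation, stations):
    """Propagate a quality deviation through a sequence of stations.

    Each station is a tuple (carry_over, sensitivity, local_error) and applies
    x_k = carry_over * x_{k-1} + sensitivity * local_error.
    Returns the deviation after every station.
    """
    deviation = initial_deviation
    history = []
    for carry_over, sensitivity, local_error in stations:
        deviation = carry_over * deviation + sensitivity * local_error
        history.append(deviation)
    return history


# Hypothetical three-station line: (carry_over, sensitivity, local_error).
stations = [
    (1.0, 0.5, 0.2),   # station 1 introduces a fixturing error
    (0.5, 1.0, 0.1),   # station 2 halves the incoming deviation, adds its own
    (1.0, 2.0, 0.0),   # station 3 adds nothing and passes the deviation on
]
deviations = propagate(0.0, stations)
```

In practice the coefficients would be estimated from measurements taken after each station, and the state would usually be a vector of key characteristics rather than a single number.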

To conduct an intelligence failure analysis, it is important to identify every part involved, their roles, characteristics, and states. The analysis should include the identification of failure propagation and effects on functions, parts, and other failures. One approach to analyzing failures is through simulation, which can help assess the changes in the characteristics of every part of a system, including humans, machines, and the environment. To analyze the complexity of failure propagation and mutual interactions among different parts of a system, data-driven tools and heuristic algorithms need to be developed. These tools should be capable of managing a large bill of materials and analyzing the failure structure beyond the traditional statistical and MCDM methods. Rule mining can be a useful tool for detecting failure correlation and propagation, especially in situations where there is limited data available, and human interpretation is crucial.
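As a minimal sketch of how rule mining might surface failure correlation, the example below scans a small set of failure records and keeps pairwise rules "A implies B" that meet support and confidence thresholds. The records, event names, and thresholds are hypothetical, and the brute-force pair search is only one simple variant of association rule mining, not the method of any cited paper.

```python
from itertools import permutations


def mine_pair_rules(records, min_support=0.3, min_confidence=0.7):
    """Return rules (antecedent, consequent, support, confidence).

    records: list of sets, each holding the failure events observed together.
    support = share of records containing both events;
    confidence = share of records with the antecedent that also contain the consequent.
    """
    n = len(records)
    if n == 0:
        return []
    events = sorted(set().union(*records))
    rules = []
    for a, b in permutations(events, 2):
        count_a = sum(1 for r in records if a in r)
        count_ab = sum(1 for r in records if a in r and b in r)
        if count_a == 0:
            continue
        support = count_ab / n
        confidence = count_ab / count_a
        if support >= min_support and confidence >= min_confidence:
            rules.append((a, b, support, confidence))
    return rules


# Hypothetical inspection records (illustrative only).
RECORDS = [
    {"bearing_wear", "vibration"},
    {"bearing_wear", "vibration", "misalignment"},
    {"vibration"},
    {"misalignment"},
    {"bearing_wear", "vibration"},
]

if __name__ == "__main__":
    for antecedent, consequent, support, confidence in mine_pair_rules(RECORDS):
        print(f"{antecedent} -> {consequent}: support={support:.2f}, confidence={confidence:.2f}")
```

Because each rule is a readable statement with two interpretable scores, an expert can review and accept or reject it, which suits settings with limited data.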

Hybrid methodologies

FMEA, RCA, and FTA methodologies are all complementary and can improve each other’s performance. Furthermore, the availability of data, advanced tools to process data, and the ability to gather online data may lead to a unified FMEA, RCA, and FTA methodology. The reason is that, although FMEA tries to find potential failures while RCA and FTA try to find the root causes of failures, all three use similar data and similar tools to analyze them.

In the literature, FTA has been used as an internal part of FMEA by Steenwinckel et al. (2018) and Palluat et al. (2006), and of RCA by Chen et al. (2018). Using automated mappings from FMEA data to a domain-specific ontology and rules derived from a constructed FTA, Steenwinckel et al. (2018) annotated and reasoned on sensor observations. Palluat et al. (2006) used FTA to illustrate the failure structure of a system within an FMEA methodology and developed a neuro-fuzzy network to analyze failures. Chen et al. (2018) used FTA and graph theory tools, such as the maximum spanning tree, to find the root cause of failures in an RCA methodology. However, further studies are needed on integrating these methodologies with respect to the availability of data, tools, and applications, so that their advantages can be combined within a unified methodology that detects potential failures, finds root causes and effects, and improves the system.

Other future research

Several promising future research directions can be pursued. Cost-based and economic quantification approaches can be integrated into intelligent methodologies to enable more informed decision-making related to failures, their effects, and corrective actions. Additionally, incorporating customer satisfaction criteria, such as using the Kano model, can be useful in situations where there are several costly failures in a system, and budget constraints make it necessary to select the most effective corrective action. This approach has been successfully applied in previous studies (Madzík & Kormanec, 2020), and can help optimize decision-making in complex failure scenarios.

Data management is a critical aspect of intelligence methodologies, given the large volume and diverse types of data that need to be processed. Therefore, it is important to design reliable databases that can store and retrieve all necessary data. Ontology can be a valuable tool to help integrate and connect different types of data (Rajpathak & De, 2016; Ebrahimipour et al., 2010). However, it is also essential to consider issues such as data obsolescence and updates, especially when corrective actions are taken and root causes are removed. Failure to address these issues can lead to incorrect analysis and decision-making.

Traditionally, only single failures were considered in analysis because analyzing a combination of multiple failures was impossible. However, in a system, two or more failures may occur simultaneously or sequentially. It is also possible that a failure occurs as a consequence of another failure. These circumstances are complicated because each failure can have several root causes, and another failure is only one of its causes. Therefore, a clear and powerful tool, such as Bayesian Networks (BNs), should be used to analyze failures and accurately identify possible causes.
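To make the multiple-cause situation concrete, the following minimal sketch builds a three-node Bayesian network in which failure B can be caused either by an upstream failure A or by a local cause D, and answers diagnostic queries by enumerating all states. The structure, the probabilities, and the names are assumptions chosen for illustration. Plain enumeration is used here instead of a BN library and only scales to very small networks.

```python
from itertools import product

# Hypothetical prior probabilities (illustrative only).
P_A = 0.10  # upstream failure A occurs
P_D = 0.20  # local cause D is present

# Hypothetical conditional probability of failure B given (A, D).
P_B_GIVEN = {(0, 0): 0.01, (1, 0): 0.70, (0, 1): 0.50, (1, 1): 0.95}


def joint(a, d, b):
    """Joint probability P(A=a, D=d, B=b) for the network A -> B <- D."""
    pa = P_A if a else 1 - P_A
    pd = P_D if d else 1 - P_D
    pb = P_B_GIVEN[(a, d)] if b else 1 - P_B_GIVEN[(a, d)]
    return pa * pd * pb


def posterior(query, evidence):
    """Return P(query = 1 | evidence) by enumerating every joint state."""
    numerator = 0.0
    denominator = 0.0
    for a, d, b in product((0, 1), repeat=3):
        world = {"A": a, "D": d, "B": b}
        if any(world[name] != value for name, value in evidence.items()):
            continue  # state contradicts the observed evidence
        p = joint(a, d, b)
        denominator += p
        if world[query] == 1:
            numerator += p
    return numerator / denominator


if __name__ == "__main__":
    print("P(A | B observed)       =", round(posterior("A", {"B": 1}), 4))
    print("P(D | B observed)       =", round(posterior("D", {"B": 1}), 4))
    print("P(A | B and D observed) =", round(posterior("A", {"B": 1, "D": 1}), 4))
```

In this toy setting, confirming the local cause D lowers the probability that the upstream failure A is responsible for B, which illustrates how a BN weighs another failure as only one of several possible causes.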

The traditional failure analysis methodologies had limitations such as repeatability, subjectivity, and time consumption, which have been addressed by intelligence failure analysis. However, there is a need for more focus on explainability, objective evaluation criteria, and results reliability as some intelligent tools, such as neural networks, act as black boxes. Therefore, suitable tools, such as BNs, should be well-developed and adapted for (hybrid) intelligence failure analysis. Details such as the time and location of the detected failure, possible factors of the causes, such as location, time, conditions, and description of the cause, and reasons behind the causes, such as human fatigue, should be considered within a methodology. These can help to go beyond the CBR and propose intelligence solutions based on the reasons behind a cause. While RCA has implemented these data to a limited extent, FMEA lacks such implementation.

Conclusion

This paper has collected information on both proactive and reactive failure analysis methodologies from 86 papers that focus on FMEA, RCA, or FTA. The goal was to identify areas for improvement, trends, and open problems regarding intelligent failure analysis. This information can help researchers learn the benefits of both types of methodology, use their tools, and integrate them to strengthen failure analysis. Each paper was read and analyzed to extract the data and tools it used and their benefits. It was observed that the literature on the three methodologies, FMEA, RCA, and FTA, is diverse. In Industry 4.0, the availability of data and advances in technology are helping these methodologies benefit from the same tools, such as BNs and neural networks, and are making them more integrated.

The literature was classified based on the data needed for a (hybrid) intelligence failure analysis methodology and the tools used for failure analysis to be data-driven and automated. In addition, trends to make these methodologies smart and possible future research in this regard were discussed.

Two main classes of data, failure structure data and failure detection data, are usually needed for a failure analysis methodology, and each can be classified as expert-driven or data-driven. However, a combination of all types of data can lead to more reliable failure analysis. Most papers focused on operational and historical expert-driven and/or data-driven problem-solving data. Among the tools used within FMEA, RCA, and FTA methodologies, BNs have the capability to make a methodology smart and to interact with both humans and machines to benefit from hybrid intelligence. BNs can not only analyze failures to identify root causes but also analyze possible solutions to provide the necessary actions to prevent failures. BNs are also capable of real-time inference, counterfactual reasoning, and managing confounding factors. BNs handle both discrete and continuous data types, unlike traditional classification algorithms. Besides BNs, classification by neural networks, other classification tools, rule-based algorithms, and other tools have been proposed in the literature.

Finally, managerial insights and future research are provided. Most studies have focused on the determination of root causes. It is also necessary to automatically find possible mitigation and corrective actions. This step of a failure analysis methodology needs more interaction with humans, so the benefits of hybrid intelligence can be more evident here. It is imperative for humans and machines to work together to properly identify and resolve failures. System-related data should be analyzed to find possible corrective actions. This data is usually available for both proactive and reactive methodologies. Our study showed that an effective tool to integrate knowledge from experts and sensors is needed, enabling operators and engineers to provide timely input and observe immediate results. There is a need to identify failure structures using a hybrid approach that combines expert and data knowledge. Real-time input and structure identification can be achieved through the use of Bayesian networks. Further exploration of BNs and comparative analyses with other tools are necessary to enhance understanding and adoption of the best tools for a hybrid intelligence failure analysis in real-time scenarios to prevent failures.

Data availability

There is no data related to this paper.

References

Agrawal, V., Panigrahi, B. K., & Subbarao, P. (2016). Intelligent decision support system for detection and root cause analysis of faults in coal mills. IEEE Transactions on Fuzzy Systems, 25(4), 934–944.

Akata, Z., Balliet, D., De Rijke, M., Dignum, F., Dignum, V., Eiben, G., Fokkens, A., Grossi, D., Hindriks, K., Hoos, H., et al. (2020). A research agenda for hybrid intelligence: Augmenting human intellect with collaborative, adaptive, responsible, and explainable artificial intelligence. Computer, 53(08), 18–28.

Al-Mamory, S. O., & Zhang, H. (2009). Intrusion detection alarms reduction using root cause analysis and clustering. Computer Communications, 32(2), 419–430.

Ament, C., & Goch, G. (2001). A process oriented approach to automated quality control. CIRP Annals, 50(1), 251–254.

Bhardwaj, U., Teixeira, A., & Soares, C. G. (2022). Bayesian framework for reliability prediction of subsea processing systems accounting for influencing factors uncertainty. Reliability Engineering & System Safety, 218, 108143.

Brahim, I. B., Addouche, S. A., El Mhamedi, A., & Boujelbene, Y. (2019). Build a Bayesian network from FMECA in the production of automotive parts: Diagnosis and prediction. IFAC-PapersOnLine, 52(13), 2572–2577.

Cai, B., Huang, L., & Xie, M. (2017). Bayesian networks in fault diagnosis. IEEE Transactions on Industrial Informatics, 13(5), 2227–2240.

Camarillo, A., Ríos, J., & Althoff, K. D. (2018). Knowledge-based multi-agent system for manufacturing problem solving process in production plants. Journal of Manufacturing Systems, 47, 115–127.

Chan, A., & McNaught, K. R. (2008). Using Bayesian networks to improve fault diagnosis during manufacturing tests of mobile telephone infrastructure. Journal of the Operational Research Society, 59(4), 423–430.

Chang, W. L., Pang, L. M., & Tay, K. M. (2017). Application of self-organizing map to failure modes and effects analysis methodology. Neurocomputing, 249, 314–320.

Chang, W. L., Tay, K. M., & Lim, C. P. (2015). Clustering and visualization of failure modes using an evolving tree. Expert Systems with Applications, 42(20), 7235–7244.

Chen, H. S., Yan, Z., Zhang, X., Liu, Y., & Yao, Y. (2018). Root cause diagnosis of process faults using conditional Granger causality analysis and maximum spanning tree. IFAC-PapersOnLine, 51(18), 381–386.

Chen, L., Jiao, J., Wei, Q., & Zhao, T. (2017). An improved formal failure analysis approach for safety-critical system based on MBSA. Engineering Failure Analysis, 82, 713–725.

Chen, X., & Jiao, J. (2017). A fault propagation modeling method based on a finite state machine. Annual Reliability and Maintainability Symposium (RAMS), 2017, 1–7.

Chhetri, T. R., Aghaei, S., Fensel, A., Göhner, U., Gül-Ficici, S., & Martinez-Gil, J. (2023). Optimising manufacturing process with Bayesian structure learning and knowledge graphs. Computer Aided Systems Theory - EUROCAST, 2022, 594–602.

Chien, C. F., Liu, C. W., & Chuang, S. C. (2017). Analysing semiconductor manufacturing big data for root cause detection of excursion for yield enhancement. International Journal of Production Research, 55(17), 5095–5107.

Clancy, R., O’Sullivan, D., & Bruton, K. (2023). Data-driven quality improvement approach to reducing waste in manufacturing. The TQM Journal, 35(1), 51–72.

Confalonieri, R., Coba, L., Wagner, B., & Besold, T. R. (2021). A historical perspective of explainable artificial intelligence. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery, 11(1), e1391.

Correia, A., Grover, A., Schneider, D., Pimentel, A. P., Chaves, R., De Almeida, M. A., & Fonseca, B. (2023). Designing for hybrid intelligence: A taxonomy and survey of crowd-machine interaction. Applied Sciences, 13(4), 2198.

Dabous, S. A., Ibrahim, F., Feroz, S., & Alsyouf, I. (2021). Integration of failure mode, effects, and criticality analysis with multi-criteria decision-making in engineering applications: Part I- manufacturing industry. Engineering Failure Analysis, 122, 105264.

Dellermann, D., Ebel, P., Söllner, M., & Leimeister, J. M. (2019). Hybrid intelligence. Business & Information Systems Engineering, 61, 637–643.

Demirbaga, U., Wen, Z., Noor, A., Mitra, K., Alwasel, K., Garg, S., Zomaya, A. Y., & Ranjan, R. (2021). AutoDiagn: An automated real-time diagnosis framework for big data systems. IEEE Transactions on Computers, 71(5), 1035–1048.

Dey, S., & Stori, J. (2005). A Bayesian network approach to root cause diagnosis of process variations. International Journal of Machine Tools and Manufacture, 45(1), 75–91.

Du, S., Lv, J., & Xi, L. (2012). A robust approach for root causes identification in machining processes using hybrid learning algorithm and engineering knowledge. Journal of Intelligent Manufacturing, 23(5), 1833–1847.

Duan, P., He, Z., He, Y., Liu, F., Zhang, A., & Zhou, D. (2020). Root cause analysis approach based on reverse cascading decomposition in QFD and fuzzy weight ARM for quality accidents. Computers & Industrial Engineering, 147, 106643.

Ebeling, C. E. (2019). An introduction to reliability and maintainability engineering. Waveland Press.

Ebrahimipour, V., Rezaie, K., & Shokravi, S. (2010). An ontology approach to support FMEA studies. Expert Systems with Applications, 37(1), 671–677.