Abstract

A dual-process model is suggested for the processing of words with emotional meaning in the cerebral hemispheres. While the right hemisphere and valence hypotheses have long been used to explain the results of research on emotional stimulus processing, including nonverbal and verbal stimuli, data on emotional word processing are mostly inconsistent with both hypotheses. Three complementary lines of research data from behavioral, electrophysiological, and neuroimaging studies seem to suggest that both hemispheres have access to the meanings of emotional words, although their time course of activation may be different. The left hemisphere activates these words automatically early in processing, whereas the right hemisphere gains access to emotional words slowly when attention is recruited by the meaning of these words in a controlled manner. This processing dichotomy probably corroborates the complementary roles the two hemispheres play in data processing.

Introduction

In everyday life, we are constantly surrounded by words conveying emotions. The information conveyed about internal states, beliefs, attitudes, motivations, values, and behaviors makes us feel satisfied or causes us to feel tension or resentment. Words such as thrifty versus cheap, traditional versus old-fashioned, or eccentric versus strange have similar definitions but carry somewhat opposite emotional meanings. The choice of emotional words completes communication, since it expresses the speaker’s opinions and feelings, which adds to the semantic referent of a given word, making communication more human. Sometimes, these words grab our attention and move us from one opinion to another. The two features that characterize emotional words are valence and arousal. Valence, or evaluation, varies from negative to positive and is defined as a measure of how pleasant or unpleasant a stimulus is [Footnote 1], whereas arousal, or activation, ranges from calm to highly arousing and is defined as a measure of how intensely a person would want to approach or flee from a stimulus [Footnote 2] (Bradley, Greenwald, Petry, & Lang, 1992; Osgood, Suci, & Tannenbaum, 1957). Research suggests some differences in the processing of emotional words, as compared with neutral words. Taking into consideration the fact that the left hemisphere (LH) is more efficient at language processing and the right hemisphere (RH) is often reported to be involved in the processing of emotions, the question addressed in this article is the following: How do the cerebral hemispheres contribute to the processing of emotional words, which have the attributes both of being a part of language and of being emotional? If both hemispheres are involved in the processing of emotional words, what is the role of each one, assuming that they make complementary contributions?

Since the role of the cerebral hemispheres in the processing of emotional words as part of the semantic system is still unclear and disputable in many respects, the present review will summarize existing behavioral, electrophysiological, and neuroimaging studies related to the processing of emotional words in neurologically intact individuals, with an emphasis on identifying the contributions of the LH and RH to this type of processing. To this end, the first section reviews behavioral data in the field of emotional word processing, followed by the findings of semantic studies that suggest the possibility of a different time course of activation for some parts of the semantic system, due to the automatic versus controlled nature of processing governed by the LH and RH. The next two sections report electrophysiological data that tend to support the dual-process model of automatic versus controlled processing and neuroimaging data that appear to clarify the role of the hemispheres in this dichotomy.

Behavioral data on emotional word processing

Lateralized emotion hypotheses

In the past decades, there has been a growing interest in the role that channels for conveying emotions (i.e., facial, prosodic, and verbal) play in human communication. A review of the literature on the lateralized effects of these channels indicates that research results in all three areas have largely been compared with two hypotheses: the RH hypothesis and the valence hypothesis. The RH hypothesis, which has been supported especially in studies in which the performance of brain-damaged individuals has been compared with that of normal controls, suggests that the RH has a greater role in the processing of emotional information than does the LH (e.g., Adolphs, Damasio, Tranel, & Damasio, 1996; Atchley, Ilardi, & Enloe, 2003; Borod, Andelman, Obler, Tweedy, & Welkowitz, 1992; Cicero et al., 1999; Sim & Martinez, 2005; Windmann, Daum, & Gunturkun, 2002; see also Borod, 1992, for a review), whereas the valence hypothesis states that hemispheric biases in the processing of emotional information may depend on the valence of the emotion conveyed by that information (e.g., Davidson, 1992; Jansari, Tranel, & Adolphs, 2000; Silberman & Weingartner, 1986; Sutton & Davidson, 1997; Van Strien & Morpurgo, 1992). In general, while the RH hypothesis attributes comprehension, experience, and expression of emotions to the RH irrespective of valence, the valence hypothesis holds that there is differential hemispheric specialization for these processes, with the LH being more involved in positive emotions and the RH in negative emotions.

It should be mentioned here that a third theory of emotion, sometimes referred to as circumplex theory, comes into play as a synthesis of the RH and valence hypotheses. The main feature of this theory is that it considers not only the effect of valence, but also the level of arousal that an emotional stimulus can raise (Russell, 1980, 2003). Researchers investigating this view, who map the neural systems involved in emotions by employing methodologies other than the behavioral, such as the electrophysiological, have proposed that the frontal regions of both hemispheres and the parietotemporal region of the RH constitute a system involved in the experience of the valence and the arousal aspects of emotions, respectively (e.g., Heller, 1993). The anterior regions are also involved in the expression of emotions according to valence theory; this theory attributes the comprehension of emotions to the RH (Davidson, 2003). The next part indicates whether behavioral data on emotional word processing fit with the predictions of the lateralized emotion hypotheses.

Inconsistent results of emotional word processing

While the RH and the valence hypotheses appear to provide a good framework for explaining the results regarding the nonverbal components of emotional communication (i.e., facial expressions and prosody; see, e.g., Adolphs et al., 1996; Bryson, McLaren, Wadden, & MacLean, 1991; Carmon & Nachshon, 1973; Etcoff, 1989; Kimura, 1964; Ley & Bryden, 1979; Mahoney & Sainsbury, 1987), they do not seem to adequately explain what occurs in the area of emotional word processing, as indicated by studies whose results do not fit with the predictions of the RH and valence hypotheses (e.g., Ali & Cimino, 1997; Eviatar & Zaidel, 1991; S. D. Smith & Bulman-Fleming, 2005, 2006; Strauss, 1983).

The first attempt to study the lateralized processing of emotional words was made by Graves, Landis, and Goodglass (1981) in the early 1980s. These researchers addressed the processing of emotional words in neurologically intact individuals using the divided visual field (DVF) paradigm. In the DVF paradigm, stimuli are presented for a brief duration (normally less than 200 ms) to the right visual field (RVF) or the left visual field (LVF) and are considered to be initially processed by the LH and the RH, respectively. The logic underlying this technique is based on the main feature of the visual system: The primary pathways from each visual field are crossed and reach the opposite hemisphere. In Graves et al.’s study, emotional words (e.g., fear, love) presented for 150 ms in the RVF (LH) or in the LVF (RH) were processed more accurately than neutral words in the LVF. This finding seems to be in favor of the RH hypothesis.

Strauss (1983) examined the two hemispheres’ contributions to the processing of emotional words in two experiments. Because the preliminary data showed relatively accurate responses with shorter exposure durations, Strauss decreased the exposure duration to 25 ms in the first experiment and 50 ms in the second experiment. In both experiments, emotional and neutral words were recognized more accurately when presented to the RVF. Strauss interpreted this finding as supporting the view that the LH plays a greater role in the processing of verbal information, regardless of the emotional content.

In contrast to the two above-mentioned studies, Eviatar and Zaidel (1991) failed to demonstrate a significant difference in processing in favor of either hemisphere with an exposure duration of 80 ms. Thus, the results of the latter two studies fitted neither the RH hypothesis nor the valence hypothesis. Supposedly, relatively short exposure durations (25 and 50 ms) showed an LH role (Strauss, 1983), while a relatively longer exposure duration (150 ms) yielded an RH effect (Graves et al., 1981); with an intermediate duration (80 ms), the effect of the hemispheres was balanced (Eviatar & Zaidel, 1991).

Similarly, a study carried out by Ali and Cimino (1997) yielded results that were not completely consistent with either hypothesis. In a lexical decision task, emotional words (positive and negative), neutral words, and nonwords were presented for 150 ms to either visual field (i.e., perception task); after a 20-min delay, participants were asked to recall as many real words as they could (i.e., recall task). After that, participants were given a list of all the real words intermixed with the same number of new words and were asked to circle the words that they had seen previously (i.e., recognition task).

The results of the perception task indicated greater accuracy only for positive words in the RVF, but not for negative words in the LVF. Analysis of the correct responses to the recall task also showed greater recall of positive words in the RVF, but not of negative words in the LVF. In the recognition task, however, accuracy for positive words was higher in the RVF, and accuracy for negative words was higher in the LVF. Since only the results of the recognition task were consistent with the valence hypothesis, the authors suggested caution when interpreting the results in favor of this hypothesis.

These data seem to suggest that the lateralized emotion hypotheses are insufficient in explaining the results of emotional word processing.

Recent research on emotional word processing

In the past decade, in order to explain the inconsistent results of behavioral research, researchers attempted to demonstrate that both hemispheres are involved in emotional word processing, but in varying ways (M. A. Collins & Cooke, 2005; Nagae & Moscovitch, 2002). Accordingly, Nagae and Moscovitch argued that memory of emotional words emerges in the RH, whereas perception of these words occurs in the LH. They believed that lateralization studies based on the most common method for implementing the DVF paradigm, in which participants respond to each stimulus immediately after it is presented, examine primarily the perception of these words. This methodology would obscure the RH’s contribution to the memory of emotional words.

In an attempt to separate the effects of memory from those of perception, Nagae and Moscovitch (2002) employed a DVF task in which a number of words were presented successively for 180 ms to participants in each block; at the end of the block, participants were asked to recall the words (Experiment 1). This method was compared with a more standard DVF paradigm in which stimuli were presented for 40 ms to each visual field (Experiment 2). The results of Experiment 1 revealed the same accuracy rate for emotional words across visual fields, along with a larger difference between the accuracy of emotional and neutral words in the LVF. That is, the modified methodology yielded a larger emotional/neutral accuracy difference only in the LVF, which was due to the worse recall of neutral words presented in the LVF. The results of Experiment 2 demonstrated better performance in the RVF for both emotional and neutral words. Therefore, the results did not support the idea that hemispheric asymmetry during emotional word processing is due to the emergence of these words’ perception in the LH and memory in the RH.

Following Nagae and Moscovitch’s (2002) idea that the LH and RH contribute to emotional word processing at two different levels, M. A. Collins and Cooke (2005) aimed to separate the effect of conceptual processing of emotional words, occurring in the RH, from that of perceptual processing, emerging in the LH. After encoding the surface features (Experiment 1) and semantic features (Experiment 2) of emotional words, participants completed a lexical decision task incorporating words from the encoding task along with some new words. Thus, for the perceptual-encoding task, participants first counted the number of long straight-lined strokes in each word and then performed a lexical decision task in which emotional and neutral words that had been presented in the encoding task and an equal number of unprimed words were presented to each visual field.

For the conceptual-encoding task, participants first generated a word from the cues provided in a sentence that described that word (e.g., jail) and then performed a lexical decision task in which reaction times to the associates of the encoded words (e.g., prison) were contrasted with reaction times to an equal number of new words. The results of the perceptual experiment indicated an overall RVF advantage in reaction times to primed emotional words, relative to unprimed words. In the conceptual experiment, however, there was a processing advantage only for primed positive, but not negative, words in the LVF. The latter result, therefore, did not support the role of the RH in the conceptual processing of emotional words, as hypothesized by the authors.

A review of behavioral research does not identify any further DVF studies that sought to clarify cerebral contributions to the processing of emotional words. Although the idea of Nagae and Moscovitch (2002) on the contribution of the two hemispheres is relatively innovative, a distinction between perception and memory or perceptual processing and conceptual processing does not seem to effectively capture the differential roles of the two hemispheres in emotional word processing. Yet, one implication of their idea is that the RH comes into play later than the LH. This means that time itself may be a factor that differentiates between the roles of the cerebral hemispheres in emotional word processing. The next section offers insights on the slow activation of the RH in the processing of semantic information.

Since emotional words are part of the semantic system, research findings in the field of semantics may offer some insight into the role of each hemisphere in emotional word processing. According to the results of semantic research, instead of one semantic system shared by the two hemispheres, each hemisphere has its own semantic system, which is activated in different circumstances (see Chiarello, 1991, 1998, for reviews). If it is the case that each hemisphere has its own semantic system, this may be the reason why the RH and the valence hypotheses, which attribute the processing of emotional words to one of the two hemispheres, are inadequate in explaining the results of this line of research. Therefore, to resolve the discrepancies in the research results, it may be necessary to consider a different theoretical perspective that reflects different profiles of the two hemispheres during emotional word processing. A review of semantic research may point toward such a perspective.

Hemispheric asymmetries in semantic processing

Semantic systems in the LH and RH

Lateralized semantic research suggests the idea that the LH and RH represent parallel and distinct information-processing systems, each contributing in some way to nearly all linguistic behaviors (see Chiarello, 2003, for a review). The most compelling evidence supporting this idea comes from the study of split-brain patients (Sperry, Gazzaniga, & Bogen, 1969). The real breakthrough supported by these studies was to conclusively demonstrate that each isolated hemisphere represents a complete information-processing system; that is, each isolated hemisphere has its own perceptual experiences, memories, semantic system, and the ability to select appropriate behavioral responses (Sperry et al., 1969; Sperry, Zaidel, & Zaidel, 1979).

Likewise, numerous studies with normal participants, using the DVF priming paradigm, have demonstrated that word meanings are accessed within each hemisphere, but not necessarily in the same way (see Chiarello, 1991, 1998, for reviews). In the DVF priming paradigm, a prime and a target word are presented to the visual fields, and a priming effect consists in faster processing of the target when it is related to the prime. The main feature of this paradigm is that, by manipulating the time elapsed between the presentation of the prime and the target, namely the stimulus onset asynchrony (SOA), information on the activation of the target at different times during processing can be extracted. This line of research seems to suggest that, for some parts of the semantic system, time is a factor that differentiates the activation patterns of words in the cerebral hemispheres.
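The logic of the DVF priming paradigm can be made concrete with a minimal sketch. It assumes that a priming effect is scored as the mean reaction time to unrelated targets minus the mean reaction time to related targets, computed separately for each hemisphere and SOA. The function name `priming_effect`, the trial fields, and all reaction times are hypothetical and were invented purely for illustration; they are not data from any of the studies reviewed here.

```python
from statistics import mean

# Crossed visual pathways: each visual field projects first to the opposite hemisphere.
HEMISPHERE = {"RVF": "LH", "LVF": "RH"}

def priming_effect(trials):
    """Return {(hemisphere, soa_ms): mean unrelated RT - mean related RT} in ms."""
    cells = {}
    for t in trials:
        key = (HEMISPHERE[t["field"]], t["soa_ms"])
        cell = cells.setdefault(key, {"related": [], "unrelated": []})
        cell["related" if t["related"] else "unrelated"].append(t["rt_ms"])
    # Only report cells that contain both related and unrelated trials.
    return {
        key: mean(cell["unrelated"]) - mean(cell["related"])
        for key, cell in cells.items()
        if cell["related"] and cell["unrelated"]
    }

# Hypothetical reaction times (ms), made up for this example.
trials = [
    {"field": "RVF", "soa_ms": 200, "related": True, "rt_ms": 520},
    {"field": "RVF", "soa_ms": 200, "related": False, "rt_ms": 560},
    {"field": "LVF", "soa_ms": 200, "related": True, "rt_ms": 600},
    {"field": "LVF", "soa_ms": 200, "related": False, "rt_ms": 600},
]
effects = priming_effect(trials)
```

A positive value in a cell indicates facilitation of related targets in that hemisphere at that SOA; comparing cells across SOAs is what allows the time course of activation to be traced.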

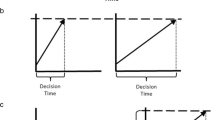

To explore the availability of the dominant meaning (e.g., money as target) and subordinate meaning (e.g., river as target) of ambiguous words (e.g., bank as prime) in the cerebral hemispheres, Burgess and Simpson (1988) used SOAs of 35 and 750 ms. Although they found equal priming of dominant meanings at both SOAs across hemispheres, subordinate meanings were primed at the 35-ms SOA only in the LH and at the 750-ms SOA only in the RH. Burgess and Simpson described this pattern of data on the basis of the dual-process model of word processing that differentiates between automatic and controlled processing (A. M. Collins & Loftus, 1975; Posner & Snyder, 1975). That is, subordinate meanings become activated in the LH automatically, but this activation is suppressed over time. After that, activation occurs in the RH, probably because some time is required to allocate attention to meanings for which the RH is responsible.

Later research revealed a similar pattern of activation for the processing of distantly related category pairs (e.g., deer–pony). First, Chiarello, Burgess, Richards, and Pollock (1990), using a relatively long SOA (575 ms), found priming effects only in the RH. Conversely, in the study carried out by Abernethy and Coney (1993), priming of such pairs occurred only in the LH at an SOA of 250 ms. In their study, a bilateral pattern of priming effects was observed when an intermediate SOA of 450 ms was employed. When Abernethy and Coney (1996) further examined their results with the 250-ms SOA (Abernethy & Coney, 1993) through cross-hemispheric presentation of stimuli, they found that presentation of a prime to the LH facilitated processing of a target subsequently presented to the RH, whereas presentation of a prime to the RH did not facilitate processing of a target subsequently presented to the LH. This finding indicated that the RH is slow at activating distantly related category pairs. The next part shows how these pieces of evidence are combined in a framework that implicates a shift from automatic processing in the LH to controlled processing in the RH.

The time course hypothesis

The above-mentioned pattern of priming at the 450-ms SOA added to the previous data on the possibility of shifting activation from the LH to the RH over the course of processing. Namely, for this part of the semantic system (i.e., distantly related category pairs), the time course of activation seems to be different in the LH and RH. A more conclusive comparison of short, intermediate, and long time courses, however, was presented in the study carried out by Koivisto (1997), which employed four different SOAs: 165, 250, 500, and 750 ms. This study showed priming effects only in the LH at the shortest SOA (165 ms) and only in the RH at the longest SOA (750 ms). Moreover, at the 250-ms SOA, there was nonsignificant priming in the LH and no priming in the RH, while at the 500-ms SOA, there was less priming in the LH than in the RH. Koivisto (1997) interpreted the results as indicating a difference between the time courses of activation in the cerebral hemispheres for distantly related category pairs.

The above-mentioned pattern of priming has been described in the time course hypothesis (e.g., Koivisto, 1997, 1998); it seems to have implications for emotional word processing. Namely, semantic research suggests that, in the processing of some parts of the semantic system, the time course of activation is a determining factor that differentiates automatic processing in the LH from controlled processing in the RH. As a piece of evidence, the automatic evaluation hypothesis suggests that the emotional value of words is extracted quickly and automatically (Fazio, Sanbonmatsu, Powell, & Kardes, 1986); this automatic stage is probably followed by a later stage in which attention is directed to the emotional content to guarantee an adaptive behavior (Naumann, Bartussek, Diedrich, & Laufer, 1992).

Thus, it is possible that the dual-process nature of automatic versus controlled processing governs emotional word processing in the cerebral hemispheres. To be clearer about the two key concepts, automatic processing, which likely occurs in the LH (Koivisto, 1997, 1998), activates nodes in memory but does not modify long-term memory. Hence, it does not place much demand on processing resources (Fisk & Schneider, 1984). In contrast, controlled processing, which probably takes place in the RH (Koivisto, 1997, 1998), is slow and sensitive to task difficulty. Thus, it includes effortful memory search and is under one’s active control (Schneider & Shiffrin, 1977). Due to the depth of processing and contribution of processing resources, the concepts of early and later stages of processing are also used for automatic and controlled processing, respectively (Posner & Petersen, 1990). In the next two sections, we present electrophysiological and neuroimaging data for emotional word processing that seem to offer insight into the dichotomy of automatic versus controlled processing and the hemisphere where each process likely takes place.

Electrophysiological bases of the emotional processing of words

Event-related brain potentials (ERPs) constitute valuable tools for assessing how fast the emotional content of words is processed (e.g., Bernat, Bunce, & Shevrin, 2001; Scott, O’Donnell, Leuthold, & Sereno, 2009). This technique is capable of reflecting different stages of processing in real time, with millisecond resolution. Presumably, ERP studies that have used emotional words as their stimuli have not sought evidence supporting the RH or the valence hypotheses. They have mostly not been intended to reveal lateralized effects either (see Kissler, Assadollahi, & Herbert, 2006, for a review). ERP studies likely use emotional words because these words are processed quickly and their meaning strongly captures participants’ attention. To be explicit, the most significant contribution of ERP findings is to provide evidence for the dichotomy of automatic versus controlled processing in emotional word processing (e.g., Franken, Gootjes, & van Strien, 2009; Van Hooff, Dietz, Sharma, & Bowman, 2008; see also Kissler et al., 2006, for a review). Although stimuli in this method are mostly presented centrally, this line of research seems to attribute lateralized effects of automatic versus controlled processing, when available, to the left and right scalp regions, respectively (e.g., Bernat et al., 2001; Kissler, Herbert, Peyk, & Junghofer, 2007).

Most ERP deflections are labeled with a leading letter, P or N, which indicates polarity, followed by a number that indicates either a peak’s position within a waveform (e.g., P3) or its latency in milliseconds (e.g., P300). ERP components of emotional words can be categorized into (1) early components that appear within 300 ms of the stimulus onset and (2) later components that appear more than 300 ms from the stimulus onset. While earlier components appear automatically upon presentation of these words (e.g., Bernat et al., 2001; Scott et al., 2009; Van Hooff et al., 2008), later components are mostly the hallmark of attention to the content of emotional words (e.g., Fischler & Bradley, 2006; Naumann et al., 1992; Naumann, Maier, Diedrich, Becker, & Bartussek, 1997). In this section, a description of later components, which have received more research attention, is followed by that of early components.
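The naming convention and the early/later split described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `parse_component` and `stage` are hypothetical, the rule that numbers of 10 or more denote latencies (and smaller numbers denote ordinal peak positions) is a simplifying heuristic rather than an established standard, and a latency of exactly 300 ms is counted as later because the P300 is treated as a later component in this review.

```python
import re

# Boundary between early and later components, as given in the text (ms).
EARLY_LATER_BOUNDARY_MS = 300

def parse_component(label):
    """Split a label such as 'P300' or 'N1' into polarity and number."""
    match = re.fullmatch(r"([PN])(\d+)", label)
    if match is None:
        raise ValueError(f"not a P/N component label: {label!r}")
    polarity = "positive" if match.group(1) == "P" else "negative"
    number = int(match.group(2))
    # Assumption: large numbers are latencies in ms, small ones are peak positions.
    if number >= 10:
        return {"polarity": polarity, "latency_ms": number, "ordinal": None}
    return {"polarity": polarity, "latency_ms": None, "ordinal": number}

def stage(latency_ms):
    """Classify a latency as 'early' or 'later' (exactly 300 ms counts as later)."""
    return "early" if latency_ms < EARLY_LATER_BOUNDARY_MS else "later"

p300 = parse_component("P300")
n1 = parse_component("N1")
```

Labels that do not follow the letter-plus-number pattern, such as LPC or EPN, are deliberately rejected by `parse_component`; their latencies would have to be supplied to `stage` directly.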

Later ERPs and attention to emotional meaning

The P300 is a component that has also been reported in ERP studies of emotional words. This component, which is greater along the midline centroparietal scalp region, typically emerges when participants attend to and discriminate stimuli, whether emotional or neutral, which are different in some aspect (e.g., De Pascalis, Strippoli, Riccardi, & Vergari, 2004; Isreal, Chesney, Wickens, & Donchin, 1980; Naumann et al., 1992; Naumann et al., 1997).

Related to the P300 component is the late positive component (LPC), which appears during the processing of emotional words around 500 ms after the stimulus onset; for instance, it is seen when a negative word is embedded in a sequence of positive words, but not when a positive word is embedded in a sequence of positive words, and the stimuli are presented one by one to the participant. The participant’s task is to respond to the emotional feature of stimuli by, for instance, counting the number of negative words (e.g., Cacioppo, Crites, Gardner, & Berntson, 1994). The distribution of the LPC has been shown to be more extensive over the right scalp regions (Cacioppo, Crites, & Gardner, 1996). Attention recruited by the emotional feature (Cuthbert, Schupp, Bradley, Birbaumer, & Lang, 2000), mental imagery activated by emotional words (Kanske & Kotz, 2007), and semantic cohesion of the category of emotional words (Dillon, Cooper, Grent-‘t-Jong, Woldorff, & LaBar, 2006) have been suggested to contribute to producing the LPC effect. The differential effect of the LPC, as compared with the P300, supposedly demonstrates that emotional words recruit attention differently from neutral words (Compton et al., 2003).

The N400 is also a later ERP correlate, but with a negative deflection that is generally greater over centroparietal regions of the RH (e.g., Kutas & Hillyard, 1980, 1984). Although the N400 is an appropriate ERP correlate for assessing semantic processing, it has not been widely reported in the processing of emotional words. As stated by Kanske and Kotz (2007), this is probably because the N400 emerges when new semantic information is integrated into a memory context. Where a smaller amplitude means facilitation of a process, decreased amplitude of the N400 likely indicates facilitated processing of emotional words due to their emotional content. It is reasonable, however, to think that incongruency of emotional valence, such as what occurs in the affective priming paradigm, would result in the N400 component.

The affective-priming paradigm is a variant of the semantic-priming paradigm in which a target word with emotional meaning is preceded by an emotional prime word. Behaviorally, affective priming is indicated if the time needed to evaluate the target is significantly shorter when the prime and the target share the same valence (i.e., congruent pairs: crime–death) than when prime and target are of opposite valence (i.e., incongruent pairs: crime–reward) (Fazio et al., 1986). Similar to the LPC effect, ERP evidence from the affective priming paradigm has taken the form of an extended latency of the N400 (around 600 ms) in RH electrode sites in response to incongruent pairs (Zhang, Lawson, Guo, & Jiang, 2006). This observation suggests the more intense effect of the violation of expectations in the affective-priming paradigm, as compared with the semantic-priming paradigm (e.g., Holcomb, 1988).

Research suggests that late ERP effects are subject to interference from task demands (e.g., Fischler & Bradley, 2006; Naumann et al., 1992; Naumann et al., 1997). This characteristic is considered to be evidence of the controlled nature of the later components. For instance, Fischler and Bradley contrasted the effects of different encoding tasks in a series of five experiments and observed a different pattern of ERP effects for each one. When the task was the evaluation task (i.e., identifying whether the word was unpleasant or pleasant), the LPC emerged in response to both negative and positive words. When an emotional decision task was employed (i.e., identifying whether the word was emotional or not), the N400 and the LPC were observed in response to both negative and positive words.

When the task was changed to a silent-reading task, the magnitude of the LPC decreased, but it was still significant in response to both negative and positive words. With a semantic category task (i.e., the emotionality of stimuli was not the focus), the LPC was found only for negative words, and with a lexical decision task, no ERP effect was detected for any of the stimuli. According to Fischler and Bradley (2006), the semantic task probably yielded the ERP effect for negative words because it tapped a rather deeper level of stimulus analysis and, therefore, the negative words’ greater ability to attract attention was retained. In contrast, in the lexical decision task, in which a relatively superficial level of semantic analysis is required, the effect of emotionality was undetected. Thus, the later ERPs that signify a capture of attention by the meaning of emotional words are subject to interference from task demands.

Early ERPs and automaticity of processing

ERP studies of emotional words have also reported components that occur within 100 ms of stimulus onset (e.g., Bernat et al., 2001; Scott et al., 2009; Van Hooff et al., 2008). In fact, a traditional view in the ERP literature holds that no meaning-related deflection appears within the first 200 ms after word onset (see Kissler et al., 2006, for a review). Accordingly, some researchers have argued that the ERP effects of emotional words that appear within the first 100 ms of stimulus onset—that is, the P1 and N1—are particularly controversial (e.g., Herbert, Junghofer, & Kissler, 2008; Kissler, Herbert, Winkler, & Junghofer, 2009). Kissler et al. (2006) believed that the earliest effects are more pronounced in clinical populations such as depressed individuals, who are more sensitive to unpleasant materials.

Yet, in studies of emotional words, there are reports that the ERP correlates appear even within the first 100 ms poststimulus onset in healthy individuals (e.g., Bernat et al., 2001; Scott et al., 2009; Van Hooff et al., 2008). A combination of high arousal, negative valence, and high frequency causes the earliest effects to appear readily in a nondemanding task such as a lexical decision task. Supposedly, high-arousal stimuli that are frequent are processed faster than low-arousal frequent stimuli, and valence gives priority to negative stimuli, probably because of the unpleasant consequences that negative material tends to have in the real world (Scott et al., 2009). This finding also supports the automatic evaluation hypothesis that states that emotional words are processed early and automatically (Fazio et al., 1986).

One more ERP effect that demonstrates the early activation of emotional words is the early posterior negativity (EPN), which has recently been reported by several studies (e.g., Franken et al., 2009; Herbert et al., 2008; Kissler et al., 2007; Kissler et al., 2009; Scott et al., 2009). This negative potential occurs in posterior scalp regions, 200 to 300 ms after word onset, for both negative and positive high-arousal words. It has been attributed to the arousal feature of emotional words and appears predominantly in the left occipitotemporal region (Kissler et al., 2007). In most of the studies, the EPN occurred during silent reading (that is, when emotional words were viewed passively, without any explicit instruction to attend to their emotional content), which suggests that this component is automatic: the emotional attributes of words are processed automatically upon presentation. This component has also been shown to be robust against a competing grammatical task, which further supports its automaticity (Kissler et al., 2009).

A stronger piece of evidence for the automatic processing of emotional words, as indicated by the appearance of early components such as P1, N1, and EPN, seems to be provided by the emotional Stroop task (Footnote 3), which primarily taps an attentional process (Franken et al., 2009; Van Hooff et al., 2008). In the emotional Stroop task, naming the color of an emotional word takes longer than naming the color of a neutral word, which reflects the fact that attention is captured by the emotional content of words (Williams, Mathews, & MacLeod, 1996). Thus, in such a task in which the emergence of the later components is the anticipated outcome, the appearance of the early, along with the later, ERP components validates the dual-process nature of emotional word processing: Both automatic and controlled processes are involved in this process.

Among the ERP data on emotional words, there are also clues to a shift of activation from the LH to the RH (Bernat et al., 2001; Ortigue et al., 2004). One study that offers spatiotemporal evidence for such a shift was conducted by Ortigue et al.; it employed a DVF technique along with a go/no-go lexical detection task. A word (either emotional or neutral) and a nonword, or else two nonwords, were presented to the visual fields, and participants were asked to respond when they saw a word in either visual field. Overall, performance with emotional words presented in the RVF was better than that with emotional words presented in the LVF or neutral words presented in either visual field. Early differentiation of emotional versus neutral word processing occurred over the 100- to 140-ms poststimulus period when the scalp topography of emotional words presented to the RVF demonstrated bilateral activity of lateral-occipital substrates with more activation in the RH. The scalp topography of other conditions (i.e., emotional words presented to the LVF and neutral words presented to either visual field), however, revealed activity in similar substrates, but mainly in the LH. This finding seems compatible with the predictions of the time course hypothesis, which implies a shift of activation from the LH to the RH while semantic information is processed (e.g., Burgess & Simpson, 1988; Koivisto, 1997, 1998).

In summary, ERP studies that use emotional words as stimuli suggest the involvement of an early automatic and a later attention-demanding stage in the course of emotional word processing. While later components that are mostly the hallmark of attention to the content of emotional words are subject to interference from task demands, earlier components appear automatically upon presentation of these words. This processing pattern is consistent with the dual-process model mentioned in the time course hypothesis—namely, early automatic versus later controlled processing. Although the lateralized data provided by ERP methodology mostly show that the earlier and later components can be linked to the left and right electrode sites, respectively, neuroimaging data provided by techniques such as functional magnetic resonance imaging (fMRI) and positron emission tomography should offer further insight into the locations where the automatic and controlled processes might take place.

Neuroimaging data on emotional word processing

Neuroimaging techniques detect metabolic/hemodynamic changes in the brain and, consequently, localize the regions involved in a neural process. In this method, the brain cannot be scanned faster than once every 2–3 s. However, taking into account the role that the type of task (e.g., implicit tasks such as silent reading vs. attention-grabbing tasks such as the emotional Stroop) plays in instigating a particular level of processing, imaging data may be able to differentiate between the structures that are involved in the automatic versus controlled stages of processing and whether the enhanced activation is lateralized to the LH or RH. The basic idea is that implicit tasks discourage the analysis of word meanings and, therefore, if increased activation is obtained in this condition, it likely derives from automatic activation of word meanings. Explicit tasks, in contrast, encourage a deep level of processing that involves the processing of the semantic aspects of presented words (Chwilla, Brown, & Hagoort, 1995). In this section, a description of the LH structures that are probably involved in the automatic processing of emotional words is followed by that of the RH structures that likely play a role in controlled processing of emotional words.

LH structures and automatic processing

In general, increased neural activity in an extensive part of the brain is a normal outcome when words, including emotional words, are presented. One observation in neuroimaging studies that compare the neural substrates involved in the processing of emotional and neutral words is enhanced activation in the areas of the LH that are primarily associated with the semantic properties of words. The inferior frontal gyrus (i.e., Brodmann areas 44 and 45) is an example of the regions that respond to almost all words (Nakic, Smith, Busis, Vythilingam, & Blair, 2006). Enhanced activation in the left temporal and occipital regions is also an inseparable part of word recognition (Beauregard et al., 1997). This is especially important because the LH is dominant in word processing and emotional words, like neutral words, are part of the semantic system. This observation, hence, challenges the idea that the RH is solely responsible for emotional word processing (i.e., the RH hypothesis).

However, emotional words trigger activation in other areas of the LH, such as the amygdala, orbitofrontal cortex, and posterior cingulate gyrus, as well. These regions have been claimed to be part of the limbic system (see Fig. 1), which plays a key role in emotion processing (Beauregard et al., 1997). In view of this fact, imaging studies of emotional word processing probably demonstrate intercorrelation between neural structures whose activation is caused by semantic features of emotional words and neural structures whose activation is triggered primarily in response to these words’ emotional attributes. These structures appear to come into play earlier in processing with less demanding tasks, and their activation is most likely lateralized to the LH (e.g., Costafreda, Brammer, David, & Fu, 2008; Kuchinke et al., 2005; Luo et al., 2004).

Among the structures of the limbic system, the amygdala is the most studied structure that plays a pivotal role in the processing of emotional stimuli. Since studies that examined the processing of nonverbal emotional stimuli (e.g., facial expressions, pictures) have repeatedly claimed a central role for the amygdala in negative emotions such as fear (e.g., LeDoux, 2000), some emotional word studies have simply focused on the neural activity changes in the amygdala in response to words with negative meanings (e.g., Isenberg et al., 1999; Strange, Henson, Friston, & Dolan, 2000; Tabert et al., 2001). However, the association between the amygdala and negative words may not be exclusive, as indicated by studies that have reported reliable activation of the amygdala in response to positive words (Garavan, Pendergrass, Ross, Stein, & Risinger, 2001; Hamann & Mao, 2002; Herbert et al., 2009). This finding probably corroborates the role of the arousal dimension in engaging activation in the amygdala (Elliott, Rubinsztein, Sahakian, & Dolan, 2000). The disproportionately high response to negative stimuli probably occurs because negative and positive stimuli differ in their functional significance; in other words, a fast reaction to negative stimuli has vital consequences for the organism (Smith, Cacioppo, Larsen, & Chartrand, 2003).

The available data most likely suggest that this structure becomes activated upon presentation of emotional words. As one piece of evidence for this claim, research has shown a strong correlation between the pattern of activation in the amygdala and the occipital cortex during silent reading (Tabert et al., 2001). This observation probably supports the notion that the amygdala modulates the processing of visual information in the occipital cortex via feedback projections. Indeed, some researchers believe that the amygdala influences emotional processes via projections back to all levels of the visual cortex that exceed the effects of the emotional input received by the visual cortex. These connections may have the advantage of making the visual cortex more sensitive to emotional stimuli (Amaral, Price, Pitkanen, & Carmichael, 1992).

Further evidence suggesting that activation in the amygdala occurs without placing much demand on processing resources has come from studies that did not report activation in this structure when attention-demanding tasks were performed. For example, during a go/no-go evaluation task in which participants responded to, for instance, positive words and inhibited their responses to negative words, activation in the amygdala was reported to be negligible (e.g., Elliott et al., 2000). In a similar vein, in a meta-analysis study carried out by Costafreda et al. (2008), implicit tasks were associated with a higher probability of activation in the amygdala than were explicit tasks, suggesting the role of this structure in early processing of emotional stimuli.

Concerning the amygdala’s laterality, although a right-lateralized pattern of activation (Maddock, Garrett, & Buonocore, 2003) and also a bilateral pattern (Isenberg et al., 1999) have been reported, what has been mostly detected is a left-lateralized effect (e.g., Hamann & Mao, 2002; Maratos, Dolan, Morris, Henson, & Rugg, 2001; Strange et al., 2000). Two recent meta-analyses of emotional stimuli processing have also provided support for a pattern of left-lateralized activation in the amygdala (Baas, Aleman, & Kahn, 2004; Wager, Phan, Liberzon, & Taylor, 2003). Since a pattern of increased activation in the left amygdala has also been observed in nonverbal emotional studies (e.g., Hamann, 2001), Hamann and Mao argued that the left-lateralized effect of the amygdala during emotional word processing is more likely due to an advantage for the processing of emotional stimuli, in general, rather than the verbal aspect of emotional words. Costafreda et al.’s (2008) meta-analysis, in contrast, raises the possibility that the left-sided advantage of the amygdala is due to the verbal nature of emotional words. This discrepancy remains an open question for future research.

The likely role of the left amygdala in automatic processing of emotional words can also be deduced from the way high-frequency, high-arousal words are processed. As was mentioned above, enhanced activation in the left inferior frontal lobe has been observed in response to almost all words. The exception is high-frequency, high-arousal words. That is, the left inferior frontal region responds to low-frequency, high-arousal words, but not to high-frequency, high-arousal words. This finding suggests that the semantic representations of high-frequency, high-arousal words receive sufficient augmentation from the amygdala that additional augmentation by the inferior frontal lobe is unnecessary (Nakic et al., 2006). Bearing in mind from the ERP findings that high-frequency, high-arousal negative words are responded to within 100 ms after word onset (Scott et al., 2009), the role of the left amygdala in the automatic stage of processing, in which activation occurs rapidly and without much effort, becomes more likely. Thus, the LH structures seem to be involved primarily in the automatic processing of emotional words.

RH structures and controlled processing

The privileged processing status of emotional words, such as the role that their meanings play in boosting memory (e.g., Kensinger & Corkin, 2003), seems to call on more structures. Indeed, the limbic system, which forms the inner border of the cortex, includes portions of all the lobes of the cerebral hemispheres. If this system contributes to various functions, including interpreting emotional responses, recruiting attention, storing memories, and learning, it is probably due to some additional connections that serve to regulate emotional and cognitive processes (see Bush, Luu, & Posner, 2000, for a review). These connections may conceivably receive their activation later in processing via controlled attention when the role of the RH becomes more prominent (Strange et al., 2000).

Presumably, a connection between emotion-related structures of the limbic system such as the amygdala, on one side, and attention-related structures such as the anterior cingulate cortex (ACC) and dorsolateral prefrontal cortex (DLPFC), on the other side, is essential in the elaborated processing of emotional words. Accordingly, the ACC and DLPFC are considered as parts of the cognitive system (see Vuilleumier, 2002, for a review). For instance, the ACC is part of the prefrontal cortex and has two subdivisions: cognitive and affective. Recent research demonstrates that cognitive and emotional information are processed in this structure separately but interdependently. The cognitive subdivision is part of a distributed attentional network that has reciprocal connections with the lateral prefrontal cortex, parietal cortex, and supplementary motor cortex and plays a role in the modulation of attention. In contrast, the affective subdivision (i.e., the posterior cingulate cortex) contributes to the detection of the emotional features of input. Thus, the type of arrangement of the two parts of the ACC implies, to some extent, a neural basis for the interaction between emotion and attention (see Bush et al., 2000, for a review).

By attention, we mean active attention, which is a controlled process and is guided by concentration, interest, and needs. This type of attention involves effort and includes selecting what we should attend to and ignoring what we do not want to attend to (Gaddes & Edgell, 1994). Accordingly, it is likely that in emotional word processing, the role of attention is to encourage a later stage of processing by enhancing the effect of an earlier stage (Lane, Chua, & Dolan, 1999). This may be the reason why the activation shifts from the LH to the RH (Koivisto, 1997, 1998).

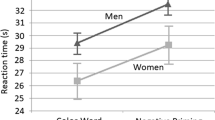

As was previously mentioned, the interaction between emotion and attention has generally been studied by using the emotional Stroop task (Williams et al., 1996). Imaging studies have reported enhanced activation of the right ACC during performance of this task (Casey et al., 1997; Pardo, Pardo, Janer, & Raichle, 1990). This finding is also consistent with the right-lateralized effect of the emotional Stroop task indicated by the behavioral methodology (Compton, Heller, Banich, Palmieri, & Miller, 2000). Research has even demonstrated the engagement of a common system in the RH for maintaining attention to the color of emotional words and incongruent color words in the emotional Stroop task and the standard Stroop task, respectively. This finding seems to support the role of the RH in attention-demanding tasks in general (Compton et al., 2003).

Likewise, there are extensive data in the literature concerning the right-lateralized effect of attention-demanding tasks. For instance, research demonstrates improved levels of activity in structures such as the right prefrontal and superior parietal regions when participants focus and maintain their attention on sensory signals that are input into the brain (e.g., Anderer, Saletu, Semlitsch, & Pascual-Marqui, 2003; Molina et al., 2005; Pardo, Fox, & Raichle, 1991; Strange et al., 2000). In addition, neglect that is claimed to be the result of damage to the attentional system in the brain occurs primarily after lesions to the RH (e.g., Driver & Vuilleumier, 2001; Heilman & Van den Abell, 1980; Heilman, Watson, & Valenstein, 1985). These findings not only are consistent with the notion that components of human attention are right lateralized (e.g., Whitehead, 1991), but also raise the possibility of a correlation between the RH’s role in the processing of emotions repeatedly reported in literature (i.e., the RH hypothesis) and its role in attention-demanding processes (e.g., Casey et al., 1997; Compton et al., 2003; Compton et al., 2000).

Taken together, the enhanced brain activation over the course of processing of emotional words demonstrated by neuroimaging methodology appears to occur in both the LH and RH. In the LH, the processing of emotional words creates activation not only in the semantic areas, but also in the structures of the limbic system, such as the amygdala, for which there is a greater number of reports in favor of the LH. This level of activation is probably automatic, because it is instigated primarily by implicit tasks. On the other hand, explicit processing of emotional words is likely accompanied by enhanced activation in some other structures such as the ACC and DLPFC, which are considered parts of the attention system in the brain. The activation in these structures seems to be right-lateralized and occurs when attention is directed to the content of emotional words. Overall, the left- versus right-lateralized processing of emotional words seems consistent with the predictions of the time course hypothesis that assigns automatic processing of semantic information to the LH and controlled processing to the RH.

Conclusion

The purpose of this article was to provide a comprehensive and critical synthesis of current knowledge of the neurocognitive and neurofunctional bases of the processing of emotional words. The data from a large body of research based on behavioral, electrophysiological, and neuroimaging methodologies appear to converge in indicating that both hemispheres are involved in the processing of words with emotional meaning, albeit in different, and probably complementary, ways. Consistent with the time course hypothesis, this is probably due to the fact that the two hemispheres do not react similarly and at the same “micropace” to the processing of emotional words. Thus, emotional words appear first to be processed automatically in the LH and only later in a controlled manner in the RH. This distinctive, but complementary, processing in the LH and RH is also compatible with evidence provided by research on the processing of facial expressions (see Vuilleumier, 2002, for a review). Taking into consideration the dominant role of the LH in language processing, the semantic feature of emotional words is probably one factor triggering the left-lateralized activation. However, the processing advantage of emotional words is also due to, on the one hand, emotion-related structures of the limbic system that become activated automatically earlier in processing and whose activation is likely left-lateralized and, on the other hand, attention-related structures that likely come into play later in processing and whose activation is presumably right-lateralized. The attention-grabbing quality of emotional words is perhaps the factor that causes activation to shift from the LH to the RH. Accordingly, the dual-process model of automatic versus controlled processing of emotional words corroborates the complementary roles of the two hemispheres of the brain in data processing.

Perhaps the greatest challenge to the area of emotional word processing in the upcoming decades will be to determine whether the attention-grabbing quality of emotional words is the underlying mechanism for the role of the RH in emotional word processing. This area of research also needs to determine to what extent the role of the LH depends on each of the semantic and emotional features of these words. The use of words with different degrees of emotionality may guide research in this direction. Also, lateralized presentation of emotional words in ERP and imaging studies along with instructions that stimulate superficial versus deep processing of the stimuli should be helpful in differentiating automatic and controlled levels of processing in the cerebral hemispheres.

Notes

Sample words with negative valence: lonely, poverty, neglect; sample words with positive valence: bless, reward, elegant.

Sample high-arousal words that are negative in valence: assault, betray, horror; sample high-arousal words that are positive in valence: miracle, thrill, passion; sample low-arousal words that are negative in valence: bored, gloom, obesity; sample low-arousal words that are positive in valence: secure, wise, cozy.

The emotional Stroop task is a version of the standard Stroop task (Stroop, 1935), in which participants are required to respond to the ink color of a color word while ignoring its meaning (e.g., the word green written in red ink). Since reading is an automatic process, naming the color in which a word is written requires the allocation of attention and, hence, causes longer naming times (the Stroop effect). Similarly, in the emotional Stroop task, naming the color of an emotional word takes longer than naming the color of a neutral word (the emotional Stroop effect). This effect reflects the fact that attention is captured by the emotional content of words (Williams, Mathews, & MacLeod, 1996).

References

Abernethy, M., & Coney, J. (1993). Associative priming in the hemispheres as a function of SOA. Neuropsychologia, 31, 1397–1409.

Abernethy, M., & Coney, J. (1996). Semantic category priming in the left cerebral hemisphere. Neuropsychologia, 34, 339–350.

Adolphs, R., Damasio, H., Tranel, D., & Damasio, A. R. (1996). Cortical systems for the recognition of emotion in facial expressions. Journal of Neuroscience, 16, 7678–7687.

Ali, N., & Cimino, C. R. (1997). Hemispheric lateralization of perception and memory for emotional verbal stimuli in normal individuals. Neuropsychology, 11, 114–125.

Amaral, D. G., Price, J. L., Pitkanen, A., & Carmichael, S. T. (1992). Anatomical organization of the primate amygdaloid complex. In J. P. Aggleton (Ed.), The amygdala: Neurobiological aspects of emotion (pp. 1–66). New York: Wiley-Liss.

Anderer, P., Saletu, B., Semlitsch, H. V., & Pascual-Marqui, R. D. (2003). Non-invasive localization of P300 sources in normal aging and age-associated memory impairment. Neurobiology of Aging, 24, 463–479.

Atchley, R. A., Ilardi, S. S., & Enloe, A. (2003). Hemispheric asymmetry in the processing of emotional content in word meanings: The effect of current and past depression. Brain and Language, 84, 105–119.

Baas, D., Aleman, A., & Kahn, R. S. (2004). Lateralization of amygdala activation: A systematic review of functional neuroimaging studies. Brain Research Reviews, 45, 96–103.

Beauregard, M., Chertkow, H., Bub, D., Murtha, S., Dixon, R., & Evans, A. (1997). The neural substrate for concrete, abstract, and emotional word lexica: A positron emission tomography study. Journal of Cognitive Neuroscience, 9, 441–461.

Bernat, E., Bunce, S., & Shevrin, H. (2001). Event-related brain potentials differentiate positive and negative mood adjectives during both supraliminal and subliminal visual processing. International Journal of Psychophysiology, 42, 11–34.

Borod, J. C. (1992). Interhemispheric and intrahemispheric control of emotion: A focus on unilateral brain damage. Journal of Consulting and Clinical Psychology, 60, 339–348.

Borod, J. C., Andelman, F., Obler, L. K., Tweedy, J. R., & Welkowitz, J. (1992). Right hemisphere specialization for the identification of emotional words and sentences: Evidence from stroke patients. Neuropsychologia, 30, 827–844.

Bradley, M. M., Greenwald, M. K., Petry, M. C., & Lang, P. J. (1992). Remembering pictures: Pleasure and arousal in memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18, 379–390.

Bryson, S. E., McLaren, J., Wadden, N. P., & MacLean, M. (1991). Differential asymmetries for positive and negative emotion: Hemisphere or stimulus effects? Cortex, 27, 359–365.

Burgess, C., & Simpson, G. B. (1988). Cerebral hemispheric mechanisms in the retrieval of ambiguous word meanings. Brain and Language, 33, 86–103.

Bush, G., Luu, P., & Posner, M. I. (2000). Cognitive and emotional influences in anterior cingulate cortex. Trends in Cognitive Sciences, 4, 215–222.

Cacioppo, J. T., Crites, S. L., Jr., & Gardner, W. L. (1996). Attitudes to the right: Evaluative processing is associated with lateralized late positive event-related brain potentials. Personality and Social Psychology Bulletin, 22, 1205–1219.

Cacioppo, J. T., Crites, S. L., Jr., Gardner, W. L., & Berntson, G. G. (1994). Bioelectrical echoes from evaluative categorizations: I. A late positive brain potential that varies as a function of trait negativity and extremity. Journal of Personality and Social Psychology, 67, 115–125.

Carmon, A., & Nachshon, I. (1973). Sensory input competition in the visual fields and cerebral dominance. Israel Journal of Medical Sciences, 9, 85–91.

Casey, B. J., Trainor, R., Giedd, J., Vauss, Y., Vaituzis, C. K., Hamburger, S., et al. (1997). The role of the anterior cingulate in automatic and controlled processes: A developmental neuroanatomical study. Developmental Psychobiology, 30, 61–69.

Chiarello, C. (1991). Lateralization of lexical processes in normal brain: A review of visual half-field research. In H. H. Whitaker (Ed.), Contemporary reviews in neuropsychology (pp. 59–69). New York: Springer.

Chiarello, C. (1998). On codes of meaning and the meaning of codes: Semantic access and retrieval within and between hemispheres. In M. Beeman & C. Chiarello (Eds.), Right hemisphere language comprehension: Perspective from cognitive neuroscience (pp. 141–160). Mahwah, NJ: Erlbaum.

Chiarello, C. (2003). Parallel systems for processing language: Hemispheric complementarity in the normal brain. In M. T. Banich & M. Mack (Eds.), Mind, brain, and language: Multidisciplinary perspectives (pp. 229–247). Mahwah, NJ: Erlbaum.

Chiarello, C., Burgess, C., Richards, L., & Pollock, A. (1990). Semantic and associative priming in the cerebral hemispheres: Some words do, some words don't … sometimes, some places. Brain and Language, 38, 75–104.

Chwilla, D. J., Brown, C. M., & Hagoort, P. (1995). The N400 as a function of the level of processing. Psychophysiology, 32, 274–285.

Cicero, B. A., Borod, J. C., Santschi, C., Erhan, H. M., Obler, L. K., Agosti, R. M., et al. (1999). Emotional versus nonemotional lexical perception in patients with right and left brain damage. Neuropsychiatry, Neuropsychology, and Behavioral Neurology, 12, 255–264.

Collins, M. A., & Cooke, A. (2005). A transfer appropriate processing approach to investigating implicit memory for emotional words in the cerebral hemispheres. Neuropsychologia, 43, 1529–1545.

Collins, A. M., & Loftus, E. F. (1975). A spreading-activation theory of semantic processing. Psychological Review, 82, 407–428.

Compton, R. J., Banich, M. T., Mohanty, A., Milham, M. P., Herrington, J., Miller, G. A., et al. (2003). Paying attention to emotion: An fMRI investigation of cognitive and emotional Stroop tasks. Cognitive, Affective, & Behavioral Neuroscience, 3, 81–96.

Compton, R. J., Heller, W., Banich, M. T., Palmieri, P. A., & Miller, G. A. (2000). Responding to threat: Hemispheric asymmetries and interhemispheric division of input. Neuropsychology, 14, 254–264.

Costafreda, S. G., Brammer, M. J., David, A. S., & Fu, C. H. (2008). Predictors of amygdala activation during the processing of emotional stimuli: A meta-analysis of 385 PET and fMRI studies. Brain Research Reviews, 58, 57–70.

Cuthbert, B. N., Schupp, H. T., Bradley, M. M., Birbaumer, N., & Lang, P. J. (2000). Brain potentials in affective picture processing: Covariation with autonomic arousal and affective report. Biological Psychology, 52, 95–111.

Davidson, R. J. (1992). Anterior cerebral asymmetry and the nature of emotion. Brain and Cognition, 20, 125–151.

Davidson, R. J. (2003). Affective neuroscience and psychophysiology: Toward a synthesis. Psychophysiology, 40, 655–665.

De Pascalis, V., Strippoli, E., Riccardi, P., & Vergari, F. (2004). Personality, event-related potential (ERP) and heart rate (HR) in emotional word processing. Personality and Individual Differences, 36, 873–891.

Dillon, D. G., Cooper, J. J., Grent-'t-Jong, T., Woldorff, M. G., & LaBar, K. S. (2006). Dissociation of event-related potentials indexing arousal and semantic cohesion during emotional word encoding. Brain and Cognition, 62, 43–57.

Driver, J., & Vuilleumier, P. (2001). Perceptual awareness and its loss in unilateral neglect and extinction. Cognition, 79, 39–88.

Elliott, R., Rubinsztein, J. S., Sahakian, B. J., & Dolan, R. J. (2000). Selective attention to emotional stimuli in a verbal go/no-go task: An fMRI study. NeuroReport, 11, 1739–1744.

Etcoff, N. L. (1989). Asymmetry in recognition of emotion. In F. Boller & J. Grafman (Eds.), Handbook of neuropsychology (Vol. 3, pp. 363–382). Amsterdam: Elsevier.

Eviatar, Z., & Zaidel, E. (1991). The effects of word length and emotionality on hemispheric contribution to lexical decision. Neuropsychologia, 29, 415–428.

Fazio, R. H., Sanbonmatsu, D. M., Powell, M. C., & Kardes, F. R. (1986). On the automatic activation of attitudes. Journal of Personality and Social Psychology, 50, 229–238.

Fischler, I., & Bradley, M. (2006). Event-related potential studies of language and emotion: Words, phrases, and task effects. Progress in Brain Research, 156, 185–203.

Fisk, A. D., & Schneider, W. (1984). Memory as a function of attention, level of processing, and automatization. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10, 181–197.

Franken, I. H., Gootjes, L., & van Strien, J. W. (2009). Automatic processing of emotional words during an emotional Stroop task. NeuroReport, 20, 776–781.

Gaddes, W. H., & Edgell, D. (1994). Learning disabilities and brain function: A neuropsychological approach. New York: Springer.

Garavan, H., Pendergrass, J. C., Ross, T. J., Stein, E. A., & Risinger, R. C. (2001). Amygdala response to both positively and negatively valenced stimuli. NeuroReport, 12, 2779–2783.

Graves, R., Landis, T., & Goodglass, H. (1981). Laterality and sex differences for visual recognition of emotional and non-emotional words. Neuropsychologia, 19, 95–102.

Hamann, S. (2001). Cognitive and neural mechanisms of emotional memory. Trends in Cognitive Sciences, 5, 394–400.

Hamann, S., & Mao, H. (2002). Positive and negative emotional verbal stimuli elicit activity in the left amygdala. NeuroReport, 13, 15–19.

Heilman, K. M., & Van den Abell, T. (1980). Right hemisphere dominance for attention: The mechanism underlying hemispheric asymmetries of inattention. Neurology, 30, 327–330.

Heilman, K. M., Watson, R. T., & Valenstein, E. (1985). Neglect and related disorders. In K. M. Heilman & E. Valenstein (Eds.), Clinical neuropsychology (2nd ed., pp. 243–293). New York: Oxford University Press.

Heller, W. (1993). Neuropsychological mechanisms of individual differences in emotion, personality, and arousal. Neuropsychology, 7, 476–489.

Herbert, C., Ethofer, T., Anders, S., Junghofer, M., Wildgruber, D., Grodd, W., et al. (2009). Amygdala activation during reading of emotional adjectives—an advantage for pleasant content. Social Cognitive and Affective Neuroscience, 4, 35–49.

Herbert, C., Junghofer, M., & Kissler, J. (2008). Event related potentials to emotional adjectives during reading. Psychophysiology, 45, 487–498.

Holcomb, P. J. (1988). Automatic and attentional processing: An event-related brain potential analysis of semantic priming. Brain and Language, 35, 66–85.

Isenberg, N., Silbersweig, D., Engelien, A., Emmerich, S., Malavade, K., Beattie, B., et al. (1999). Linguistic threat activates the human amygdala. Proceedings of the National Academy of Sciences, 96, 10456–10459.

Isreal, J. B., Chesney, G. L., Wickens, C. D., & Donchin, E. (1980). P300 and tracking difficulty: Evidence for multiple resources in dual-task performance. Psychophysiology, 17, 259–273.

Jansari, A., Tranel, D., & Adolphs, R. (2000). A valence-specific lateral bias for discriminating emotional facial expressions in free field. Cognition and Emotion, 14, 341–353.

Kanske, P., & Kotz, S. A. (2007). Concreteness in emotional words: ERP evidence from a hemifield study. Brain Research, 1148, 138–148.

Kensinger, E. A., & Corkin, S. (2003). Memory enhancement for emotional words: Are emotional words more vividly remembered than neutral words? Memory & Cognition, 31, 1169–1180.

Kimura, D. (1964). Left–right differences in the perception of melodies. Quarterly Journal of Experimental Psychology, 16, 355–358.

Kissler, J., Assadollahi, R., & Herbert, C. (2006). Emotional and semantic networks in visual word processing: Insights from ERP studies. Progress in Brain Research, 156, 147–183.

Kissler, J., Herbert, C., Peyk, P., & Junghofer, M. (2007). Buzzwords: Early cortical responses to emotional words during reading. Psychological Science, 18, 475–480.

Kissler, J., Herbert, C., Winkler, I., & Junghofer, M. (2009). Emotion and attention in visual word processing: An ERP study. Biological Psychology, 80, 75–83.

Koivisto, M. (1997). Time course of semantic activation in the cerebral hemispheres. Neuropsychologia, 35, 497–504.

Koivisto, M. (1998). Categorical priming in the cerebral hemispheres: Automatic in the left hemisphere, postlexical in the right hemisphere? Neuropsychologia, 36, 661–668.

Kuchinke, L., Jacobs, A. M., Grubich, C., Vo, M. L., Conrad, M., & Herrmann, M. (2005). Incidental effects of emotional valence in single word processing: An fMRI study. NeuroImage, 28, 1022–1032.

Kutas, M., & Hillyard, S. A. (1980). Reading senseless sentences: Brain potentials reflect semantic incongruity. Science, 207, 203–205.

Kutas, M., & Hillyard, S. A. (1984). Brain potentials during reading reflect word expectancy and semantic association. Nature, 307, 161–163.

Lane, R. D., Chua, P. M., & Dolan, R. J. (1999). Common effects of emotional valence, arousal and attention on neural activation during visual processing of pictures. Neuropsychologia, 37, 989–997.

LeDoux, J. E. (2000). Emotion circuits in the brain. Annual Review of Neuroscience, 23, 155–184.

Ley, R. G., & Bryden, M. (1979). Hemispheric differences in processing emotions and faces. Brain and Language, 7, 127–138.

Luo, Q., Peng, D., Jin, Z., Xu, D., Xiao, L., & Ding, G. (2004). Emotional valence of words modulates the subliminal repetition priming effect in the left fusiform gyrus: An event-related fMRI study. NeuroImage, 21, 414–421.

Maddock, R. J., Garrett, A. S., & Buonocore, M. H. (2003). Posterior cingulate cortex activation by emotional words: fMRI evidence from a valence decision task. Human Brain Mapping, 18, 30–41.

Mahoney, A. M., & Sainsbury, R. S. (1987). Hemispheric asymmetry in the perception of emotional sounds. Brain and Cognition, 6, 216–233.

Maratos, E. J., Dolan, R. J., Morris, J. S., Henson, R. N., & Rugg, M. D. (2001). Neural activity associated with episodic memory for emotional context. Neuropsychologia, 39, 910–920.

Molina, V., Sanz, J., Muñoz, F., Casado, P., Hinojosa, J. A., Sarramea, F., et al. (2005). Dorsolateral prefrontal cortex contribution to abnormalities of the P300 component of the event-related potential in schizophrenia. Psychiatry Research, 140, 17–26.

Nagae, S., & Moscovitch, M. (2002). Cerebral hemispheric differences in memory of emotional and nonemotional words in normal individuals. Neuropsychologia, 40, 1601–1607.

Nakic, M., Smith, B. W., Busis, S., Vythilingam, M., & Blair, R. J. (2006). The impact of affect and frequency on lexical decision: The role of the amygdala and inferior frontal cortex. NeuroImage, 31, 1752–1761.

Naumann, E., Bartussek, D., Diedrich, O., & Laufer, M. E. (1992). Assessing cognitive and affective information processing functions of the brain by means of the late positive complex of the event-related potential. Journal of Psychophysiology, 6, 285–298.

Naumann, E., Maier, S., Diedrich, O., Becker, G., & Bartussek, D. (1997). Structural, semantic, and emotion-focused processing of neutral and negative nouns: Event-related potential correlates. Journal of Psychophysiology, 11, 158–172.

Ortigue, S., Michel, C. M., Murray, M. M., Mohr, C., Carbonnel, S., & Landis, T. (2004). Electrical neuroimaging reveals early generator modulation to emotional words. NeuroImage, 21, 1242–1251.

Osgood, C. E., Suci, G. J., & Tannenbaum, P. H. (1957). The measurement of meaning. Urbana: University of Illinois Press.

Pardo, J. V., Fox, P. T., & Raichle, M. E. (1991). Localization of a human system for sustained attention by positron emission tomography. Nature, 349, 61–64.

Pardo, J. V., Pardo, P. J., Janer, K. W., & Raichle, M. E. (1990). The anterior cingulate cortex mediates processing selection in the Stroop attentional conflict paradigm. Proceedings of the National Academy of Sciences, 87, 256–259.

Posner, M. I., & Petersen, S. E. (1990). The attention system of the human brain. Annual Review of Neuroscience, 13, 25–42.

Posner, M. I., & Snyder, C. R. R. (1975). Attention and cognitive control. In R. L. Solso (Ed.), Information processing and cognition (pp. 55–85). Hillsdale, NJ: Erlbaum.

Russell, J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39, 1161–1178.

Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychological Review, 110, 145–172.

Schneider, W., & Shiffrin, R. M. (1977). Controlled and automatic human information processing: I. Detection, search, and attention. Psychological Review, 84, 1–66.

Scott, G. G., O'Donnell, P. J., Leuthold, H., & Sereno, S. C. (2009). Early emotion word processing: Evidence from event-related potentials. Biological Psychology, 80, 95–104.

Silberman, E. K., & Weingartner, H. (1986). Hemispheric lateralization of functions related to emotion. Brain and Cognition, 5, 322–353.

Sim, T. C., & Martinez, C. (2005). Emotion words are remembered better in the left ear. Laterality, 10, 149–159.

Smith, S. D., & Bulman-Fleming, M. B. (2005). An examination of the right-hemisphere hypothesis of the lateralization of emotion. Brain and Cognition, 57, 210–213.

Smith, S. D., & Bulman-Fleming, M. B. (2006). Hemispheric asymmetries for the conscious and unconscious perception of emotional words. Laterality, 11, 304–330.

Smith, N. K., Cacioppo, J. T., Larsen, J. T., & Chartrand, T. L. (2003). May I have your attention, please: Electrocortical responses to positive and negative stimuli. Neuropsychologia, 41, 171–183.

Sperry, R. W., Gazzaniga, M. S., & Bogen, J. E. (1969). Interhemispheric relationships: The neocortical commissures; Syndromes of hemispheric disconnection. In P. J. Vinken & G. W. Bruyn (Eds.), Handbook of clinical neurology (Vol. 4, pp. 273–290). Amsterdam: North-Holland.

Sperry, R. W., Zaidel, E., & Zaidel, D. (1979). Self recognition and social awareness in the deconnected minor hemisphere. Neuropsychologia, 17, 153–166.

Strange, B. A., Henson, R. N., Friston, K. J., & Dolan, R. J. (2000). Brain mechanisms for detecting perceptual, semantic, and emotional deviance. NeuroImage, 12, 425–433.

Strauss, E. (1983). Perception of emotional words. Neuropsychologia, 21, 99–103.

Stroop, J. R. (1935). Studies of interference in serial verbal reactions. Journal of Experimental Psychology, 18, 643–662.

Sutton, S. K., & Davidson, R. J. (1997). Prefrontal brain asymmetry: A biological substrate of the behavioral approach and inhibition systems. Psychological Science, 8, 204–210.

Tabert, M. H., Borod, J. C., Tang, C. Y., Lange, G., Wei, T. C., Johnson, R., et al. (2001). Differential amygdala activation during emotional decision and recognition memory tasks using unpleasant words: An fMRI study. Neuropsychologia, 39, 556–573.

Van Hooff, J. C., Dietz, K. C., Sharma, D., & Bowman, H. (2008). Neural correlates of intrusion of emotion words in a modified Stroop task. International Journal of Psychophysiology, 67, 23–34.

Van Strien, J., & Morpurgo, M. (1992). Opposite hemispheric activations as a result of emotionally threatening and non-threatening words. Neuropsychologia, 30, 845–848.

Vuilleumier, P. (2002). Facial expression and selective attention. Current Opinion in Psychiatry, 15, 291–300.

Wager, T. D., Phan, K. L., Liberzon, I., & Taylor, S. F. (2003). Valence, gender, and lateralization of functional brain anatomy in emotion: A meta-analysis of findings from neuroimaging. NeuroImage, 19, 513–531.

Whitehead, R. (1991). Right hemisphere processing superiority during sustained visual attention. Journal of Cognitive Neuroscience, 3, 329–334.

Williams, J. M., Mathews, A., & MacLeod, C. (1996). The emotional Stroop task and psychopathology. Psychological Bulletin, 120, 3–24.

Windmann, S., Daum, I., & Gunturkun, O. (2002). Dissociating prelexical and postlexical processing of affective information in the two hemispheres: Effects of the stimulus presentation format. Brain and Language, 80, 269–286.

Zhang, Q., Lawson, A., Guo, C., & Jiang, Y. (2006). Electrophysiological correlates of visual affective priming. Brain Research Bulletin, 71, 316–323.

Acknowledgments

This review was financially supported by a Bourses de fin d’études doctorales scholarship (Faculté des études supérieures et postdoctorales, Université de Montréal) awarded to the first author.

Cite this article

Abbassi, E., Kahlaoui, K., Wilson, M.A. et al. Processing the emotions in words: The complementary contributions of the left and right hemispheres. Cogn Affect Behav Neurosci 11, 372–385 (2011). https://doi.org/10.3758/s13415-011-0034-1

DOI: https://doi.org/10.3758/s13415-011-0034-1