Abstract

Background

Little research has focused on implementing electronic patient-reported outcomes (e-PROs) for meaningful use in patient management in ‘real-world’ oncology practices. Our quality improvement collaborative used multifaceted implementation strategies to facilitate integration of e-PRO data at multiple sites in Ontario and Quebec, Canada, for personalized management of generic and targeted symptoms of pain, fatigue, and emotional distress (depression, anxiety). These strategies included audit and feedback, disease-site champions and practice coaching, core training of clinicians in a person-centered clinical method for using e-PROs in shared treatment planning and patient activation, ongoing educational outreach, and shared collaborative learning.

Patients and methods

We used a mixed-methods (qualitative and quantitative) program evaluation design to assess process and implementation outcomes, including e-PRO completion rates, acceptability and use from the perspective of patients and clinicians, and patient experience (surveys, qualitative focus groups). As secondary outcomes, we explored impact on symptom severity, patient activation, and healthcare utilization (Ontario sites only) by comparing a pre-implementation population cohort (not exposed) with a post-implementation population cohort (exposed to our implementation intervention) using Mann-Whitney U tests. We hypothesized that the iPEHOC intervention would reduce symptom severity and healthcare utilization and increase patient activation. We also identified the implementation strategies that sites perceived as most valuable for uptake, as well as barriers.

Results

Over 6000 patients completed e-PROs, with sites reaching population completion rates of 51%–95% depending on initial readiness. e-PROs were acceptable to patients for communicating symptoms (76%) and to clinicians for treatment planning (80%). Patient experience was better than the provincial average. Compared with the pre-implementation population, we observed a significant reduction in levels of anxiety (p = 0.008), higher levels of patient activation (p = 0.045), and reduced hospitalization rates (12.3% not exposed vs 10.1% exposed, p = 0.034). A trend towards reduced emergency department visit rates (14.8% not exposed vs 12.8% exposed, p = 0.081) was also noted.

Conclusion

This large-scale pragmatic quality improvement project demonstrates the impact of implementation strategies and a collaborative improvement approach on the acceptability of using PROs in clinical practice, and their potential to reduce anxiety and healthcare utilization and to improve patient experience and patient activation when implemented in ‘real-world’ multi-site oncology practices.


Background

Personalized medicine is changing the landscape of cancer care [1]. Cherny et al. propose that personalized medicine should encompass biologically personalized therapeutics, as well as “individually tailored whole-person care that is at the bedrock of what people want and need when they are ill” [2]. Patient reported outcomes (PROs) are an important aspect of personalized medicine [3] that can enable person-centered ‘whole’ person care and improve health outcomes when they are used by clinicians [4, 5]. Indeed, systematic reviews of randomized clinical trials have shown that PROs improve patient/provider communication and may improve other health outcomes, such as quality of life, and reduce emergency department visits [6,7,8,9,10]. A survival advantage has also been shown in randomized controlled trials (RCTs) for electronic PROs (e-PROs) when clinicians are prompted by alerting systems to address adverse events between clinic visits [11]. If we are to realize the benefits of PROs for health outcomes on a larger scale, we need to move beyond RCTs and drive optimal uptake of PRO data for clinically meaningful use in healthcare decisions and person-centered patient management [12,13,14]. Unfortunately, little evidence has been generated with regard to implementation of PROs in ‘real-world’ settings, and it is unclear which implementation strategies work best to facilitate uptake in practice [15, 16].

Despite a decade of experience in deploying e-PROs for distress screening [17, 18] in 14 Regional Cancer Centers (RCCs) in Ontario, Canada, using the Edmonton Symptom Assessment System revised version (ESAS-r) [19], the use of this data in patient management is sub-optimal [20, 21]. This is not surprising, as PRO implementation in ‘real-world’ cancer care is complex, requiring reconfiguration of clinic workflow and changes in both clinicians’ practice behaviors and multidisciplinary team collaboration to address PRO scores [22]. Strategies are required to overcome the multiple implementation barriers (e.g., lack of perceived value, difficulty in interpreting PRO data, poor integration in clinical workflow) that can impede a quality response to PRO data [23,24,25]. Thus, it is recommended that best practices in knowledge translation and implementation science methods be used to promote uptake and integration of PRO data in clinical practice [26, 27].

We initiated a Quality Improvement Collaborative, the Improving Patient Experience and Health Outcome Collaborative (iPEHOC), to drive uptake of e-PRO data by clinicians for person-centered management of symptoms in multi-site oncology practices in Ontario and in Montreal, Quebec. Although the evidence for Quality Improvement Collaborative approaches has been equivocal, they have strong face validity for improving targeted clinical processes and a range of health outcomes such as symptom severity [28]. We are unaware of other studies that have used this approach for PROs implementation in multi-site practices. Our aims were to: 1) evaluate uptake of e-PROs, measured as the percentage of completed e-PROs from baseline to project end and displayed as run charts; acceptability and use from the perspective of patients and clinicians; and changes in patient experience of care; 2) explore impact on symptom severity, patient activation, and emergency department (ED) visit and hospitalization (H) rates (Ontario only), with the hypothesis that the iPEHOC intervention would reduce symptom severity and healthcare utilization and be associated with higher levels of patient activation; and 3) identify implementation strategies considered by sites as essential for successful uptake of e-PROs in clinical practice.

Methods

We used a mixed-methods (quantitative surveys and qualitative data) program evaluation design to evaluate change in care processes. Qualitative focus groups of patients and clinicians at each site were conducted post-intervention to obtain their perspectives on the e-PROs and their use in clinical care (reported in a separate paper). To explore impact on health and system outcomes, we compared a pre-implementation population cohort (not exposed to iPEHOC) with a post-implementation population cohort (iPEHOC exposed). Participating regional cancer centres and disease site clinics in Ontario included: 1) Princess Margaret Cancer Centre (PM), a comprehensive RCC in an urban setting, in lung and sarcoma disease site clinics; 2) Northeast Cancer Centre (NECC), serving rural and remote regions, in the chemotherapy, radiotherapy, supportive care and palliative care clinics; and 3) Juravinski Cancer Centre (JCC), a midsized RCC serving urban and rural populations, in central nervous system and gynecology clinics. RCCs in Montreal, Quebec included: 1) Saint Mary’s Hospital Centre (SMHC), a small community hospital, in medical oncology clinics; 2) the Segal Cancer Centre, a comprehensive regional cancer centre at the Jewish General Hospital (JGH), in gynecologic clinics; and 3) McGill University Health Centre (MUHC), a large academic RCC, in lung clinics. Ethics approval for the multi-site study was obtained from the University Health Network Research Ethics Board (REB) (REB #14–8525-CE), followed by approvals at all regional cancer centres in both provinces.

iPEHOC implementation intervention

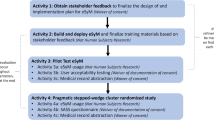

Implementation is defined as the use of specific activities and strategies that promote the adoption and integration of evidence-based interventions and change practice [29]. We used a three-phase implementation approach (Fig. 1, Table 1) guided by integrated knowledge translation (iKT) [30], the Knowledge-to-Action (KTA) framework [31], and principles of a collaborative QI approach [28]. Integrated knowledge translation is defined as an ongoing relationship between researchers and decision-makers to foster uptake of innovations in practice [30].

Phase 1 (3 months: pre-implementation, setting the stage)

Technical considerations

We built on an existing ESAS-r electronic platform and added four psychometrically valid and reliable, pan-Canadian-endorsed e-PRO measures for multidimensional assessment of the targeted symptoms: pain (Brief Pain Inventory, BPI) [32], fatigue (Cancer Fatigue Scale, CFS) [33], depression (Patient Health Questionnaire, PHQ-9) [34], and anxiety (Generalized Anxiety Disorder scale, GAD-7) [35] (Additional File 1, iPEHOC measurement system). Internal logic was built into the platform to trigger the multidimensional e-PROs based on previously established ESAS-r cut-scores: ESAS-r anxiety > 3 triggered the GAD-7, ESAS-r depression > 2 triggered the PHQ-9 [36], ESAS-r pain > 4 triggered the BPI, and ESAS-r fatigue > 4 triggered the CFS [37]. We also built time-frame logic into the system for triggering these e-PROs at 21 days for anxiety and depression and at 7 days for pain and fatigue, based on clinician consensus on the appropriate time frame for observing a change after a treatment plan. A single-item Quality of Life scale [38] was also included, as was the Social Difficulties Inventory-21 (SDI-21) [39] at Princess Margaret and the Quebec sites.
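
The branching and time-frame logic described above can be sketched as a small rule table. This is a minimal illustration, not the iPEHOC platform's code: the names `TRIGGER_RULES` and `measures_to_trigger` are hypothetical, and we assume that the 21-day and 7-day time frames act as a minimum interval before a measure can be re-triggered, which is one reading of the text.

```python
from datetime import date, timedelta
from typing import Dict, List

# ESAS-r item -> (triggered measure, cut-score, time frame in days).
# Cut-scores and time frames follow the text; treating the time frame as a
# minimum gap before re-triggering is an assumption of this sketch.
TRIGGER_RULES = {
    "anxiety": ("GAD-7", 3, 21),
    "depression": ("PHQ-9", 2, 21),
    "pain": ("BPI", 4, 7),
    "fatigue": ("CFS", 4, 7),
}


def measures_to_trigger(
    esas_scores: Dict[str, int],
    last_completed: Dict[str, date],
    today: date,
) -> List[str]:
    """Return the multidimensional e-PROs to administer at this visit (hypothetical helper)."""
    triggered = []
    for item, (measure, cut_score, frame_days) in TRIGGER_RULES.items():
        score = esas_scores.get(item)
        # Trigger only when the ESAS-r score is strictly above the cut-score.
        if score is None or score <= cut_score:
            continue
        previous = last_completed.get(measure)
        # Skip if the measure was already completed within its time frame.
        if previous is not None and today - previous < timedelta(days=frame_days):
            continue
        triggered.append(measure)
    return triggered


# Anxiety 5 (> 3) triggers the GAD-7; pain 6 (> 4) would trigger the BPI, but a
# BPI was completed 4 days earlier, inside its 7-day time frame.
print(measures_to_trigger(
    {"anxiety": 5, "depression": 2, "pain": 6, "fatigue": 3},
    {"BPI": date(2016, 3, 1)},
    date(2016, 3, 5),
))  # ['GAD-7']
```

Keeping the rules in a table means a site could adjust a cut-score or time frame without touching the control flow.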

e-PROs were collected on stationary kiosks or tablets, with clinic receptionists and/or volunteers prompting patients to complete them at clinic registration. e-PRO data was scored in real time and fed back to clinicians (and patients) as a printed summary report of severity scores for the nine ESAS-r symptoms plus the targeted iPEHOC symptoms, for use in person-centered communication during the clinical encounter (Additional File 2, iPEHOC symptom report); a graph of scores over time was also accessible in the electronic medical record.

An initial galvanizing meeting was held with Collaborative members (provincial quality cancer agency leads and decision-makers for Ontario and Quebec, clinicians, patients, disease site leads), followed by meetings with disease site teams and patient partners at each site to build a compelling vision for the change (i.e., key evidence of benefits). At the initial collaborative meeting, sites worked with disease site teams to develop an implementation blueprint incorporating recommended strategies to facilitate uptake of PROs in routine clinical care. Implementation teams were formed at each site to: 1) facilitate practice change using champions and case-based educational outreach sessions; 2) devise a change plan tailored to site enablers and barriers identified at baseline through team completion of an adapted version of the Organizational Readiness Survey (ORS) [40]; 3) map current workflow and reconfigure clinical processes (i.e., workflow and team collaboration) to integrate e-PRO data in clinic encounters; and 4) share learnings in monthly collaborative meetings to spread successful implementation strategies, based on social learning and diffusion of innovation theories [41].

Phase 2 (6 months: active implementation)

In this phase, disease-site champions (identified by disease site leads as early adopters of ESAS-r in practice and respected by peers) worked alongside project coordinators and site implementation teams to facilitate practice change for use of e-PRO data in patient management. Champions help to facilitate and catalyze change through persuasive communication and interpersonal skills [42, 43]. During this phase, we used evidence-informed, multifaceted implementation strategies [44, 45], including core training of all clinicians (target: at least 70% trained), monthly case-based educational outreach sessions, and audit and feedback reports, and we tracked progress with monthly run charts showing e-PRO completion rates in each RCC.

Core training included sessions on 1) interpretation of e-PRO scores and the benefits of their use, and 2) case-based video simulations in which clinicians paired with standardized patients modelled a person-centered clinical method [46] for embedding e-PRO data in the clinical encounter. The videos demonstrate use of e-PRO scores to open the dialogue with patients; to develop a shared agenda and treatment plan based on problems that patients prioritized as “mattering most” and that were important for clinicians to address at that visit; to guide intervention selection and manage problems according to pan-Canadian evidence-based practice guidelines [47,48,49]; and to advise patients on actions for symptom self-management. Additionally, to foster patient activation, we partnered with the Canadian Partnership Against Cancer to develop and disseminate videos for patients on how to interpret e-PRO scores and use e-PRO reports in communicating with clinicians [50], and we distributed patient-facing symptom management guidelines for use in site-based patient education [51].

Audit and feedback reports were tailored to each site but shared common data elements (e-PRO completion rates, the percentage of patients who met ESAS-r cut-offs and were therefore required to complete the multidimensional e-PROs, and symptom change scores) for discussion at monthly disease site meetings (Additional File 3: Audit and feedback reports). Audit and feedback data stimulate change in clinician behaviour through peer pressure [52]. Typically, audit and feedback data are reported to individual clinicians with comparison to peers, but participating sites preferred an overall disease site performance report. To monitor implementation fidelity, iPEHOC sites tracked monthly, in Excel spreadsheets, the number of educational sessions delivered, staff attendance by discipline, and use of implementation strategies (educational outreach, audit and feedback, etc.).
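
The common data elements of the audit and feedback reports can be computed per disease site from visit-level records. The sketch below is hypothetical: the `VisitRecord` fields and the `audit_summary` function are illustrative names, and we assume that the cut-off percentage uses patients who completed the ESAS-r as its denominator, which the text does not specify.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class VisitRecord:
    disease_site: str
    completed_esas: bool                  # patient completed the ESAS-r at this eligible visit
    met_cutoff: bool = False              # any ESAS-r item above its trigger cut-score
    change_score: Optional[float] = None  # later score minus earlier score, if available


def audit_summary(records: List[VisitRecord]) -> Dict[str, dict]:
    """Aggregate hypothetical visit records into one report per disease site."""
    by_site: Dict[str, List[VisitRecord]] = defaultdict(list)
    for record in records:
        by_site[record.disease_site].append(record)

    report = {}
    for site, site_records in by_site.items():
        completed = [r for r in site_records if r.completed_esas]
        changes = [r.change_score for r in completed if r.change_score is not None]
        report[site] = {
            # Completed e-PROs / eligible patients, as a percentage.
            "completion_pct": 100.0 * len(completed) / len(site_records),
            # Share of completers who screened above a cut-off (assumed denominator).
            "met_cutoff_pct": (100.0 * sum(r.met_cutoff for r in completed) / len(completed)
                               if completed else 0.0),
            # Mean symptom change score; negative values mean lower (better) ESAS-r scores.
            "mean_change": mean(changes) if changes else None,
        }
    return report


records = [
    VisitRecord("lung", True, True, -2.0),
    VisitRecord("lung", True, True, -1.0),
    VisitRecord("lung", True, False),
    VisitRecord("lung", False),
]
print(audit_summary(records)["lung"])  # completion_pct 75.0, met_cutoff_pct ~66.7, mean_change -1.5
```

Reporting at the disease-site level rather than per clinician mirrors the preference the participating sites expressed.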

Phase 3 (3 months: making it stick, embedding and sustaining use in practice)

In this phase, case-based, interactive educational outreach sessions continued, to further embed e-PRO data use in clinical practice and to sustain the practice change. Interactive educational sessions work by sustaining momentum, changing health professionals’ awareness and beliefs about current practice and perceived subjective norms, and building their self-efficacy (confidence) and skills [53].

Process and exploration of impact on outcomes

Aim 1

The e-PRO completion rate (number of completed e-PROs in participating clinics divided by the number of patients eligible to complete them) was tracked from baseline to project end in monthly run charts. Descriptive statistics were used to summarize acceptability and use of e-PROs from surveys of patients (Patient Acceptability Survey, PAS) and clinicians (Clinician Satisfaction Survey, CSS). These surveys were developed specifically for iPEHOC based on items in other surveys [54]. Patient experience was assessed using two items from the Ambulatory Oncology Patient Satisfaction Survey (AOPPS) [55] on satisfaction with care received for managing emotional concerns and physical symptoms. The PAS was distributed in the waiting rooms of participating iPEHOC clinics over a 14-day period at 4 months (mid-point) and post-implementation. A target of at least 50 completed surveys per site was set in advance, based on sampling for QI purposes [56]. Clinicians were invited to complete the CSS at the mid-point and end of implementation through an email from the site lead containing a link to the survey, followed by a reminder email 7 days later, based on a modified Dillman survey methodology [57].
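
A run chart of the completion rate plots each month's rate against the median of all plotted months. The sketch below is illustrative: the function names are hypothetical, and the shift rule it checks (six or more consecutive points on the same side of the median, ignoring points that fall on the median) is a commonly used run-chart rule that the text does not say the sites applied.

```python
from statistics import median
from typing import List


def monthly_completion_rates(completed: List[int], eligible: List[int]) -> List[float]:
    """Completion rate (%) per month: completed e-PROs / eligible patients."""
    if len(completed) != len(eligible):
        raise ValueError("completed and eligible must cover the same months")
    return [100.0 * c / e for c, e in zip(completed, eligible)]


def has_shift(rates: List[float], run_length: int = 6) -> bool:
    """True if `run_length` or more consecutive points fall on one side of the median."""
    centre = median(rates)
    run, last_side = 0, 0
    for rate in rates:
        if rate == centre:
            continue  # points on the median neither extend nor break a run
        side = 1 if rate > centre else -1
        run = run + 1 if side == last_side else 1
        last_side = side
        if run >= run_length:
            return True
    return False


# Hypothetical site whose completion rate rises partway through the project.
completed = [30, 32, 31, 33, 60, 62, 65, 70, 72, 75, 78, 80]
eligible = [100] * 12
rates = monthly_completion_rates(completed, eligible)
print(median(rates), has_shift(rates))  # 63.5 True
```

A shift flags a non-random change in the series; it does not by itself attribute that change to any particular implementation strategy.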

Aim 2

To explore impact on symptom severity, intra-individual change scores derived from ESAS-r data for a 6-month pre-implementation population cohort (not iPEHOC exposed) were compared with those for an ESAS-r plus iPEHOC (exposed) population cohort during the final 6 months of implementation (Ontario sites only). Each patient's symptom scores were rank ordered by time of occurrence, and a symptom change slope was generated by linear regression of score on time, to account for systematic person-specific deviations such as serial correlation, time-varying medical events, and irregular measurement times. The mean slopes were compared using ANOVA for unequal sample sizes, with RCC site and observation window as categorical variables. Over a similar timeframe, a Mann-Whitney U test was used to evaluate change in levels of patient activation on the brief Patient Activation Measure (PAM) [58]. The PAM measures knowledge, skills, and confidence for self-management and segments patients into one of four progressively higher levels of activation: Level 1 (lacks knowledge and confidence for managing health), Level 2 (knowledgeable but unsure about actions to take), Level 3 (knowledgeable and initiating health self-management skills), and Level 4 (using health behaviours but struggling under stress).
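
The two analyses above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the study's analysis code: `change_slope` fits an ordinary least-squares slope of score on measurement rank for one patient, and `mann_whitney_u` computes the U statistic with a two-sided p-value from the normal approximation without a tie correction (a simplification; PAM data with many tied scores would normally call for a tie-corrected variance or a statistics package). Both function names are hypothetical, and the subsequent ANOVA across sites and observation windows is not shown.

```python
import math
from typing import List, Tuple


def change_slope(scores: List[float]) -> float:
    """OLS slope of symptom score on rank order (1, 2, ...) for one patient."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two measurements to fit a slope")
    ranks = range(1, n + 1)
    mean_x = (n + 1) / 2
    mean_y = sum(scores) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(ranks, scores))
    var = sum((x - mean_x) ** 2 for x in ranks)
    return cov / var


def mann_whitney_u(a: List[float], b: List[float]) -> Tuple[float, float]:
    """U statistic for sample `a` and a two-sided p-value (normal approximation, no tie correction)."""
    n1, n2 = len(a), len(b)
    # Count the pairs in which the value from `a` exceeds the value from `b`; ties count one half.
    u = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))
    return u, p


# A patient whose ESAS-r anxiety falls by one point per assessment.
print(change_slope([5, 4, 3, 2]))  # -1.0
```

Using rank order rather than calendar dates as the time axis matches the description above; it makes each slope a change per assessment rather than per day.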

In Ontario sites only, we compared the percentage of the population in the baseline observation window cohort (90 days pre-iPEHOC, non-exposed) with the percentage in the post-implementation observation window cohort (90 days post-iPEHOC implementation, exposed) who visited the ED or were hospitalized (H) within 30 days of an e-PRO report in that timeframe. Data sources for health utilization outcomes included the Symptom Management Reporting Database (SMRD) [59], which captures e-PRO data for Ontario RCCs; the Canadian Institute for Health Information (CIHI) National Ambulatory Care Reporting System (NACRS) [60] and Discharge Abstract Database (DAD) [61]; and the Activity Level Reporting (ALR) database of Cancer Care Ontario (CCO) [62]. NACRS records ED visits and DAD records hospitalizations, whereas the ALR database captures all visits to regional cancer programs (RCPs) in Ontario and was used for visit identification.
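
The 30-day outcome definition can be expressed as a simple window check. In this sketch the data layout, the function names, and the choice to count events on the day of the report (0 to 30 days inclusive) are assumptions; the text does not specify how same-day events or multiple reports per patient were handled.

```python
from datetime import date
from typing import Dict, List, Tuple


def event_within_window(reports: List[date], events: List[date], window_days: int = 30) -> bool:
    """True if any ED visit or admission occurs 0-30 days after any e-PRO report."""
    return any(0 <= (event - report).days <= window_days for report in reports for event in events)


def cohort_event_rate(cohort: Dict[str, Tuple[List[date], List[date]]]) -> float:
    """Percentage of patients in a cohort with at least one event in the window."""
    flagged = sum(event_within_window(reports, events) for reports, events in cohort.values())
    return 100.0 * flagged / len(cohort)


# Hypothetical cohort: patient id -> (e-PRO report dates, ED visit dates).
cohort = {
    "p1": ([date(2016, 1, 4)], [date(2016, 1, 20)]),  # 16 days after report: counted
    "p2": ([date(2016, 1, 4)], [date(2016, 3, 1)]),   # 57 days after: not counted
    "p3": ([date(2016, 2, 1)], []),                   # no ED visit
    "p4": ([date(2016, 2, 1)], [date(2016, 1, 15)]),  # before the report: not counted
}
print(cohort_event_rate(cohort))  # 25.0
```

Each patient is counted at most once, so the result is a percentage of patients rather than a count of visits.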

Results

Completion rates

Sites had varying baseline rates of e-PRO use before iPEHOC implementation. In Ontario, baseline ESAS-r completion rates were 75% at NECC, 37% at JCC, and 86% at PM. Completion rates increased over time across the six sites, or were maintained where they were already high at project start (Fig. 2). Completing the ESAS-r plus all four e-PROs took on average 9 min 56 s (tracked electronically in the platform). Overall, 6000 e-PROs were completed across sites.

Acceptability/use and patient experience

Results from the PAS (Ontario sites, n = 182; Montreal sites, n = 54) indicated that 67% of Ontario patients and 79% of Montreal patients rated the e-PROs as acceptable for enabling communication about symptoms with their health care team. For the two AOPPS items, patient experience shifted from pre- to post-implementation relative to average population rates in Ontario (Table 2). Of the 62 clinicians (50% nurses, 26% physicians, 36% allied health) who completed the CSS, slightly more than half (58%) felt the e-PROs had value and were used for symptom management in clinic visits, and most (75–85%) were very satisfied with their ability to respond (data not shown). Slightly more than a third (36%) thought e-PROs prolonged clinic visit times. However, only 25% of respondents from NECC specialty clinics reported that e-PROs had value, because clinicians felt the e-PROs duplicated comprehensive assessments they already performed.

Symptom severity

We examined slopes of intra-individual change scores for all targeted e-PRO symptoms (fatigue, pain, depression, anxiety) in Ontario sites only, but significant differences in slopes were observed only for anxiety. A significantly larger reduction in anxiety was observed in the iPEHOC-exposed population than in the pre-iPEHOC non-exposed cohort (p = 0.004; Fig. 3). This finding was less marked at PM, where the GAD-7 was already in use before implementation, whereas the marked reduction in the anxiety slope at NECC and JCC may indicate the value added by the ESAS-r plus iPEHOC e-PROs at these sites.

Patient activation

A shift to higher levels of activation and a small but statistically significant increase in median Patient Activation Measure scores from baseline to end-point were observed in Ontario sites combined (p = 0.045) but not in Montreal sites (Fig. 4). This difference may reflect greater exposure to ESAS-r in Ontario, where it has been in use since 2007, and the small sample size in Montreal.

Health care utilization

For all Ontario sites combined, the hospitalization rate within 30 days of completing an e-PRO report fell slightly but significantly, from 12.3% (n = 299) in the pre-implementation (non-exposed) population to 10.1% (n = 162) in the post-implementation (exposed) population (p = 0.034). A trend towards significance was also observed for emergency department visit rates, from 14.8% (n = 359) in the pre-implementation population to 12.8% (n = 205) in the post-implementation population (p = 0.081) (Table 3). The greatest contributions came from the targeted disease site clinics at the Juravinski Cancer Centre, for both ED visits (20.1% to 12.7%, p = 0.051) and hospitalizations (11.8% to 4.9%, p = 0.014), and from the lung and sarcoma cancer population at the Princess Margaret Cancer Centre for hospitalizations (14.8% to 10.6%, p = 0.041).

Implementation strategies

Implementation strategies identified by sites as key for facilitating uptake of e-PROs are shown in Table 4. Sites identified the following as key factors for successful uptake: a supportive leadership structure that establishes PRO use as a performance metric; building clinician capacity and confidence in interpreting and responding to PRO data through case-based education and educational outreach; adaptive technology that triggers multidimensional e-PROs when patients screen positive on the ESAS-r; and output reports that are easy to interpret. Broad engagement of all stakeholders, high contact with practices, ongoing monitoring and use of audit and feedback, respected peers as champions, and site coordinators skilled in knowledge translation and facilitating practice change were also considered key to successful implementation. Sites also found the collaborative approach helpful for sharing ideas and gaining support in dealing with resistance to practice change. Our iPEHOC implementation methods toolkit is available online, and its recommendations were integrated into the Ontario provincial e-PROs framework to guide implementation steps in other PROs work [63]. A checklist was developed as part of the iPEHOC toolkit to guide implementation in other organizations (Additional File 4: iPEHOC implementation checklist).

Discussion

Globally, greater attention has been focused on the use of e-PROs in health care organizations to achieve person-centered and tailored supportive care [64]. If improved health outcomes are to be achieved, embedding PROs for guiding healthcare decision-making and patient management requires implementation strategies that facilitate practice change and redesign of care processes and workflow [65]. The American Society of Clinical Oncology (ASCO) has recommended routine use of e-PROs as a health policy priority for oncology practices [66], yet beyond passive dissemination of e-PRO information systems, little evidence has been generated on how to embed this data for use in ‘everyday’ oncology practices. A recent review identified only 3 reports of ‘real-world’ implementation of e-PROs in clinical practice, and none of these studies used knowledge translation or implementation strategies to facilitate integration of PROs for personalized patient management [15]. Our study makes a novel contribution to the literature by identifying a collaborative approach and a person-centred clinical training method for embedding e-PRO data in the clinical encounter for patient management and for patient activation in symptom management. Additionally, we identified key implementation strategies that promote successful uptake and applied them in diverse disease sites and in urban, regional, and remote cancer settings. Like most other studies, we found that e-PROs were acceptable to patients because they give patients a ‘voice’ to communicate their experience of the impact of cancer and treatment [67]. A shift in patient experience regarding emotional concerns and symptoms may indicate changes in care processes and uptake of the e-PROs in patient care.

Clinical trial data show that e-PROs, when used in clinical care, improve quality of life, prolong time on chemotherapy, and reduce health care use, and may improve survival if they are monitored and responded to between visits; however, real-world evidence of impact is still needed [68].

Despite the limitations of small sample sizes, heterogeneity, and possible within-site clustering in the pre/post population cohort comparisons, we demonstrated the potential of multifaceted implementation strategies to reduce anxiety and health care utilization; however, future large-scale trials are needed. The reduction in anxiety shown for iPEHOC may suggest that patients felt more confident that clinicians using e-PRO data would address their symptoms. This effect was not found for the other targeted symptoms of pain, depression, and fatigue, which likely require more targeted interventions [69]. The positive changes in emergency department visit and hospitalization rates, which have also been found for use of ESAS-r alone [70], suggest that early management of symptoms and emotional distress may mitigate escalation [71].

Although we noted a shift towards higher levels of patient activation in pre/post population comparisons, we relied on passive dissemination of information about PROs to patients, and on participatory communication approaches emphasized in clinician training, to promote patient activation. Greater attention is needed to the use of PROs for activating patients in symptom self-management, as an essential component of PROs implementation [72].

Implementation problems have been described as messy, complex, and wicked [73]. Our experience echoes this sentiment: facilitating implementation across multiple disease site teams was challenging because each team functions as its own microsystem within the larger Regional Cancer Centre (meso-level program system) and the provincial cancer system (macro-level system), each of which has its own local barriers to uptake of e-PROs. Additionally, our measurement of outcomes was affected by the ‘noise’ of implementation and by ‘real-world’ problems such as simultaneous health system restructuring in Montreal, Quebec. Not surprisingly, the complexity of implementing PROs for use in the ‘everyday’ practice of clinicians in cancer settings has been described in other demonstration projects as “easier said than done” [74].

Conclusion

Successful implementation of e-PROs can transform health care towards better health outcomes [75], but this requires knowledge translation and implementation science methods for integrating e-PROs into workflow and embedding them in the ‘everyday’ practice of clinicians for personalized patient management. Future large-scale pragmatic trials are needed to assess the effectiveness, long-term sustainability, and cost-effectiveness of PRO use in patient management. Implementation of e-PROs for patient management may be facilitated if their use is identified as a performance metric [76] and linked to payment for performance in value-based care [77].

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available because they are owned by the respective provincial authorities and are accessible only through them; restrictions may apply.

Abbreviations

- AOPPS: Ambulatory oncology patient satisfaction survey
- ALR: Activity level reporting
- ASCO: American Society of Clinical Oncology
- BPI: Brief pain inventory
- CFS: Cancer fatigue scale
- CSS: Clinician satisfaction survey
- DAD: Discharge abstract database
- ED: Emergency department
- ESAS-r: Edmonton symptom assessment system-revised
- GAD-7: Generalized anxiety disorder questionnaire
- iPEHOC: Improving patient experience and health outcomes collaborative
- iKT: Integrated knowledge translation
- H: Hospitalized
- JCC: Juravinski Cancer Centre
- JGH: Jewish General Hospital
- KTA: Knowledge to action framework
- QI: Quality improvement
- MUHC: McGill University Health Centre
- NACRS: National ambulatory care reporting system
- NECC: Northeast Cancer Centre
- ORS: Organizational readiness survey
- PAS: Patient acceptability survey
- PAM: Patient activation measure
- PHQ-9: Patient health questionnaire
- PM: Princess Margaret Cancer Centre
- PROs: Patient reported outcomes and electronic PROs (e-PROs)
- RCT: Randomized controlled trial
- RCC: Regional cancer centre
- SDI-21: Social difficulties inventory-21
- SMHC: Saint Mary’s Hospital Centre
- SMRD: Symptom management reporting database

References

Jackson, S. E., & Chester, J. D. (2014). Personalized cancer medicine. Int J Cancer, 137(2), 262–266.

Cherny, et al. (2014). Words matter: Distinguishing personalized medicine and biologically personalized therapeutics. JNCI, 106(12), 1–5.

Alemayehu, D., & Cappelleri, J. C. (2012). Conceptual and analytic considerations toward the use of patient-reported outcomes in personalized medicine. Am Health Drug Benefits, 5(5), 310–317.

Gensheimer, S. G., Wu, A. W., & Snyder, C. F. (2018). Oh, the places we’ll go: Patient reported outcomes and electronic health records. Patient, 11(6), 591–598.

Canadian Institute for Health Information. (2015). PROMs background document. PROMs Forum, February 2015. https://www.cihi.ca/sites/default/files/document/proms_background_may21_en-web.pdf

Cheville, A. L., Alberts, S. R., Rummans, T. A., et al. (2015). Improving adherence to cancer treatment by addressing quality of life in patients with advanced gastrointestinal cancers. J Pain Symptom Manag, 50(3), 321–327.

Chen, J., Ou, L., & Hollis, S. J. (2013). A systematic review of the impact of routine collection of patient reported outcome measures on patients, providers and health organizations in an oncologic setting. BMC Health Serv Res, 13, 211.

Yang, L., Manhas, D., Howard, A., et al. (2014). Patient-reported outcome use in oncology: A systematic review of the impact on patient-clinician communication. Support Care Canc, 26, 41–60.

Kotronoulas, G., Kearney, N., Maguire, R., et al. (2014). What is the value of the routine use of patient-reported outcome measures toward improvement of patient outcomes, processes of care, and health service outcomes in cancer care? A systematic review of controlled trials. JCO, 32(14), 1480–1501.

Basch, E., Deal, A. M., Kris, M. G., et al. (2016). Symptom monitoring with patient-reported outcomes during routine cancer treatment: A randomized controlled trial. JCO, 34, 557–565.

Basch, E., Deal, A. M., Dueck, A. C., et al. (2017). Overall survival results of a trial assessing patient reported outcomes for symptom monitoring during routine cancer treatment. JAMA, 318, 197–198.

Stover, A., Irwin, D. E., Chen, R. C., et al. (2015). Integrating patient-reported measures into routine cancer care: Cancer patients’ and clinicians’ perceptions of acceptability and value. Generating Evid Methods Improv Patient Outcomes, 3(1), 17.

Howell, D., & Liu, G. (2012). Can routine collection of patient reported outcome data actually improve person-centered health? Healthcare Papers, 17(4), 42–47.

Basch, E., Barbera, L., Kerrigan, C. L., & Velikova, G. (2018). Implementation of patient-reported outcomes in routine medical care. Am Soc Clin Oncol Educ Book. Alexandria, VA: ASCO.

Anachkova, M., Donelson, S., Skalicky, A. M., et al. (2018). Exploring the implementation of patient-reported outcome measures in cancer care: Need for more ‘real-world’ evidence results in the peer reviewed literature. J Patient-Reported Outcomes, 2(64), 1–21.

Mitchell, A. J. (2013). Screening for cancer-related distress: When is implementation successful and when is it unsuccessful? Acta Oncol, 52(2), 216–224.

Gilbert, J. E., Howell, D., King, S., et al. (2012). Quality improvement in cancer symptom assessment and control: The provincial palliative care integration project (PPCIP). J Pain Symptom Manag, 43(4), 663–678.

Dudgeon, D., King, S., Howell, D., et al. (2011). Cancer Care Ontario’s experience with implementation of routine physical and psychological symptom distress screening. Psychooncology, 21(4), 357–364.

Watanabe, S. M., Nekolaichuk, C., Beaumont, C., et al. (2011). A multicenter study comparing two numerical versions of the Edmonton symptom assessment system in palliative care patients. J Pain Symptom Manag, 41, 456–468.

Pereira, J., Green, E., Molloy, S., et al. (2014). Population-based standardized symptom screening: Cancer Care Ontario’s Edmonton symptom assessment system and performance status initiatives. JOP, 10(3), 212–214.

Seow, H., Sussman, J., Martelli-Reid, L., et al. (2012). Do high symptom scores trigger clinical actions? An audit after implementing electronic symptom screening. JOP, 8(6), e142–e148.

Greenhalgh, J., Long, A. F., & Flynn, R. (2005). The use of patient reported outcome measures in routine clinical care: Lack of impact or lack of theory? Soc Sci Med, 60, 833–843.

Damschroder, L. J., Aron, D. C., Keith, R. E., et al. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implement Sci, 4, 50.

Van Der Wees, P. J., Nijhuis-Van Der Sanden, M. W. G., Ayanian, J. Z., et al. (2014). Integrating the use of patient-reported outcomes for both clinical practice and performance measurement: Views of experts from 3 countries. Milbank Q, 92(4), 754–775.

Howell, D., Molloy, S., Wilkinson, K., Green, E., Orchard, K., Wang, K., & Liberty, J. (2015). Patient-reported outcomes in routine cancer clinical practice: A scoping review of use, impact on health outcomes, and implementation factors. Ann Oncol, 26(9), 1846–1858.

Mitchell, S., & Chambers, D. (2017). Leveraging implementation science to improve cancer care delivery and patient outcomes. JOP, 13(8), 523–539.

Howell, D., Hack, T. F., Green, E., et al. (2014). Cancer distress screening data: Translating knowledge into clinical action for a quality response. Palliat Support Care, 12(1), 39–51.

Wells, S., Tamir, O., Gray, J., et al. (2018). Are quality improvement collaboratives effective? A systematic review. BMJ Qual Saf, 27(3), 226–240.

Fixsen, D. L., Naoom, S. F., Blase, K. A., et al. (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231).

Gagliardi, A. R., Berta, W., Kothari, A., et al. (2016). Integrated knowledge translation (IKT) in health care: A scoping review. Implement Sci, 11(38), 1–12.

Graham, I., Logan, J., Harrison, M., Straus, S., Tetroe, J., Caswell, W., & Robinson, N. (2006). Lost in knowledge translation: Time for a map? J Contin Educ Health Prof, 26, 13–24.

Daut, R. L., Cleeland, C. S., & Flanery, R. C. (1983). Development of the Wisconsin brief pain questionnaire to assess pain in cancer and other diseases. Pain, 17, 197–210.

Okuyama, T., Akechi, T., Kugaya, A., et al. (2000). Development and validation of the cancer fatigue scale: A brief, three-dimensional, self-rating scale for assessment of fatigue in cancer patients. J Pain Symptom Manag, 19(1), 5–14.

Kroenke, K., Spitzer, R. L., & Williams, J. B. W. (2001). The PHQ-9: Validity of a brief depression severity measure. JGIM, 16, 606–613.

Spitzer, R. L., Kroenke, K., Williams, J. B., & Löwe, B. (2006). A brief measure for assessing generalized anxiety disorder: The GAD-7. Arch Intern Med, 166(10), 1092–1097.

Bagha, S. M., Macedo, A., Jacks, L. M., et al. (2013). The utility of the Edmonton symptom assessment system in screening for anxiety and depression. Eur J Cancer Care, 22(1), 60–69.

Oldenmenger, W. H., de Raaf, P. J., de Klerk, C., & van der Rijt, C. C. (2013). Cut points on 0–10 numeric rating scales for symptoms included in the Edmonton symptom assessment scale in cancer patients: A systematic review. J Pain Symptom Manag, 45(5), 1083–1093.

Spitzer, W. O., Dobson, A. J., Hall, J., et al. (1981). Measuring the quality of life of cancer patients: A concise QL-index for use by physicians. J Chronic Dis, 34, 585–597.

Wright, E. P., Kiely, M., Johnston, C., et al. (2005). Development and evaluation of an instrument to assess social difficulties in routine oncology practice. Qual Life Res, 14, 373–386.

Helfrich, C. D. (2009). Organizational readiness to change assessment (ORCA): Development of an instrument based on the promoting action on research in health services (PARIHS) framework. Implement Sci, 4, 38. https://doi.org/10.1186/1748-5908-4-38

Mittman, B. S. (2004). Creating the evidence base for quality improvement collaboratives. Ann Intern Med, 140, 897–901.

Thomson O’Brien, M. A., Oxman, A. D., Haynes, R. B., et al. (2000). Local opinion leaders: Effects on professional practice and health care outcomes. Cochrane Database Syst Rev, 2, CD003030.

Miech, E. J., Rattray, N. A., Flanagan, M. E., Damschroder, L., Schmid, A. A., & Damush, T. M. (2018). Inside help: An integrative review of champions in healthcare-related implementation. SAGE Open Med, 6, 1–11.

Grol, R., Wensing, M., Eccles, M., & Davis, D. (2013). Improving patient care: The implementation of change in clinical practice (2nd ed.). Oxford, UK: Wiley Blackwell.

Powell, B. J., McMillen, J. C., Proctor, E. K., Carpenter, C. R., Griffey, R. T., Bunger, A. C., et al. (2011). A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev, 69(2). https://doi.org/10.1177/1077558711430690.

Levenstein, J. H., McCracken, E. C., McWhinney, I. R., et al. (1986). The patient-centered clinical method. A model for the doctor-patient interaction in family medicine. Fam Pract, 3(1), 24–30.

Howell, D., Keshavarz, H., Esplen, M. J., Hack, T., Hamel, M., et al. (2015). Pan-Canadian practice guideline: Screening, assessment and management of psychosocial distress, depression and anxiety in adults with cancer, version 2, 2015. Toronto: Canadian Partnership Against Cancer and the Canadian Association of Psychosocial Oncology. https://capo.ca/resources/Documents/Guidelines/3APAN-~1.PDF. Accessed Jan 2015.

Howell, D., Keshavarz, H., Broadfield, L., Hack, T., Hamel, M., et al. (2015). A pan-Canadian practice guideline for screening, assessment, and management of cancer-related fatigue in adults, version 2, 2015. Toronto: Canadian Partnership Against Cancer and the Canadian Association of Psychosocial Oncology. https://capo.ca/resources/Documents/Guidelines/3APAN-~1.PDF.

Sawhney, M., Fletcher, G. G., Rice, J., Watt-Watson, J., & Rawn, T. (2017). Guidelines on management of pain in cancer and/or palliative care. Program in Evidence-Based Care evidence summary no. 18-14. Toronto, ON: Cancer Care Ontario; 2017 Sep 22.

Canadian Partnership Against Cancer. (2015). Person-centered perspective: Patient reported outcomes video for patients. https://youtu.be/GtshFS1p70w. Accessed 1 Sept 2015.

Guidelines and advice, managing symptoms, side-effects & well-being. Symptom management guidelines for patients. www.cancercare.on.ca/symptoms. Accessed 1 Sept 2015.

Ivers, N., Jamtvedt, G., Flottorp, S., et al. (2012). Audit and feedback: Effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev, (6), CD000259. https://doi.org/10.1002/14651858.CD000259.pub3

O’Brien, M. A., Oxman, A. D., Davis, D. A., et al. (2000). Educational outreach visits: Effects on professional practice and health care outcomes. Cochrane Database Syst Rev, (2), CD000409.

Snyder, C. F., Blackford, A. L., Wolff, A. C., et al. (2013). Feasibility and value of PatientViewpoint: A web system for patient-reported outcomes assessment in clinical practice. Psychooncology, 22(4), 895–901.

NRC+Picker. (2003). Development and validation of the Picker Ambulatory Oncology Survey Instrument in Canada. Markham, ON: NRC+Picker [cited 2007 Jan 26]. Available from: http://www.cancercare.on.ca/qualityindex2006/download/FinalOncologyMaskedJuly11.pdf.

Provost, L. P., & Murray, S. (2011). The health care data guide: Learning from data for improvement (pp. 10–12). San Francisco: Jossey-Bass.

Dillman, D. A. (2007). Mail and internet surveys: The tailored design method (2nd ed.). Hoboken, NJ: Wiley.

Hibbard, J. H., Stockard, J., Mahoney, E. R., & Tusler, M. (2004). Development of the patient activation measure (PAM): Conceptualizing and measuring activation in patients and consumers. Health Serv Res, 39(4 Pt 1), 1005–1026.

Guidelines and advice, managing symptoms, side-effects & well-being. Symptom management guidelines and algorithms for clinicians. www.cancercare.on.ca/symptoms. Accessed 1 Sept 2015.

Canadian Institute for Health Information. (2007). CIHI Data Quality Study of Ontario Emergency Department Visits for Fiscal Year 2007. Ottawa, Ontario.

Canadian Institute for Health Information. (2012). Data quality documentation, Discharge Abstract Database (DAD): Current-year information. Ottawa, ON: CIHI. http://www.cihi.ca. Accessed April 2016.

Cancer Care Ontario Data Book 2017-2018, 2019-2020. Activity Level Reporting (ALR). www.ccohealth.ca/en/access-data. Accessed 1 Sept 2017.

iPEHOC toolkit http://ocp.cancercare.on.ca/cms/One.aspx?portalId=327895&pageId=351050. Accessed January 20, 2020.

Baumhauer, J. F. (2017). Patient-reported outcomes: Are they living up to their potential? NEJM, 377(1), 6–9.

Proctor, E., Silmere, H., Raghavan, R., et al. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health, 38(2), 65–76.

Stover, A. M., Chiang, A. C., & Basch, E. M. (2016). ASCO PRO work group update: Patient reported outcome measures as a quality indicator. JCO, 34(7), 276.

LeBlanc, T. W., & Abernethy, A. P. (2017). Patient-reported outcomes in cancer care: Hearing the patient voice at greater volume. Nat Rev Clin Oncol, 14(12), 763–772.

Rivera, S. C., Kyte, D. G., Aiyegbusi, O. L., Slade, A. L., McMullan, C., & Calvert, M. J. (2019). The impact of patient reported outcome (PRO) data from clinical trials: A systematic review and critical analysis. Health Qual Life Outcomes, 17, 156.

Meyer, T. J., & Mark, M. M. (1995). Effects of psychosocial interventions with adult cancer patients: A meta-analysis of randomized experiments. Health Psychol, 14, 101–108.

Barbera, L., Sutradhar, R., Howell, D., Sussman, J., Seow, H., et al. (2015). Does routine symptom screening with ESAS decrease ED visits in breast cancer patients undergoing adjuvant chemotherapy? Support Care Cancer, 23, 3025–3032.

Mitchell, A. J., Vahabzadeh, A., & Magruder, K. (2011). Screening for distress and depression in cancer settings: 10 lessons from 40 years of primary care research. Psychooncology, 20, 572–584.

Greene, J., & Hibbard, J. (2012). Does patient activation matter? An examination of the relationships between patient activation and health-related outcomes. J Gen Intern Med, 27(5), 520–526.

Lavery, J. (2012). “Wicked problems”, community engagement and the need for an implementation science for research ethics. BMJ, 74, 1477–1485.

Li, M., Macedo, A., Crawford, S., et al. (2016). Easier said than done: Keys to successful implementation of the distress assessment and response tool (DART) program. JOP, 12(5), e513–e526.

Black, N. (2013). Patient reported outcome measures could help transform healthcare. BMJ, 346, 167.

Basch, E., Snyder, C., McNiff, K., et al. (2014). Patient-reported outcome performance measures in oncology. JOP, 10(3), 209–211.

Petersen, L. A., Woodard, L. D., Urech, T., Daw, C., & Sookanan, S. (2006). Does pay-for-performance improve the quality of health care? Ann Intern Med, 145(4), 265–272.

Acknowledgements

All members of the iPEHOC Collaborative Team contributed to the study; they include:

Katherine George, Research Associate, Health Sciences North, Sudbury;

Zahra Ismail, Manager, Patient Reported Outcomes & Symptom Management, CCO;

Adriana Krasteva, Project Manager, St. Mary’s Hospital Centre, Montreal;

Ashley Kushneryk, Symptom Management Coordinator, Rossy Cancer Network;

Lorraine Martelli, APN Lead, Juravinski Cancer Centre, Hamilton;

Alyssa Macedo, Co-Site Lead, Princess Margaret Cancer Centre, Toronto;

Julia Park, Project Coordinator, Princess Margaret Cancer Centre, Toronto;

Lesley Moody, Interim Director, Clinical Programs, Person-Centered Care, CCO (now Clinical Director, Princess Margaret Cancer Centre);

Lisa Barbera, Clinical Lead, Patient-Reported Outcomes, CCO (now at Alberta Health Services);

Pat Giddings, Patient Family Advisory Council (PFAC) member, Northeast Cancer Centre;

Subhash Bhandari, PFAC member from Mississauga;

Linda Tracey and Julie Szasz, Patient Advisors from Montreal.

Funding

Funding support was received from the Canadian Partnership Against Cancer, with additional in-kind support and resources from Cancer Care Ontario, Toronto, ON, and the Rossy Cancer Network, Montreal, Quebec, Canada.

Author information

Contributions

DH, ML, ZR conceptualized and designed the study. DH drafted the manuscript. ML, ZR contributed to conceptualization and provided critical revisions to the manuscript. ML, ZR, CM, AS, NM, MM, RF, MH, DBL contributed to analysis, edited the manuscript for intellectual content and contributed site study data. All authors reviewed and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Ethics approval for the multi-site study was obtained from the University Health Network Research Ethics Board (REB #14–8525-CE), followed by approvals from all participating regional cancer centres in Ontario and Quebec.

Consent for publication

Not applicable.

Competing interests

Doris Howell is a member of the Scientific Advisory Board and has received fees for consultation to CAREVIVE Inc. No other conflicts of interest are declared. The content of the manuscript was not influenced by the funder.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Howell, D., Rosberger, Z., Mayer, C. et al. Personalized symptom management: a quality improvement collaborative for implementation of patient reported outcomes (PROs) in ‘real-world’ oncology multisite practices. J Patient Rep Outcomes 4, 47 (2020). https://doi.org/10.1186/s41687-020-00212-x

DOI: https://doi.org/10.1186/s41687-020-00212-x