Abstract

Background

Patients frequently access online health information and use it to guide treatment decisions. Few studies have assessed online information about obstructive sleep apnea (OSA), and none have examined resources on surgical treatment for these patients.

Methods

This was a cross-sectional analysis. “Sleep surgery” and “sleep apnea surgery” were entered into Google, MSN Bing, and Yahoo! search engines. The first 25 results of each individual search were evaluated. Each unique site was assessed for content quality, accessibility, usability, reliability, and readability using validated instruments. The date of last update for each site was also documented.

Results

“Sleep surgery” was searched for an average of 1,703,991 (SD = 166,585) times per month from June 2015 to June 2016. Thirty-three unique websites were identified. Sites were most often academically/government affiliated (10/33, 30.3%), health information sites (8/33, 24.2%), or non-profit/hospital related (8/33, 24.2%). The mean overall DISCERN score for quality was “good,” at 56.6 (range, 22–79). The mean overall LIDA score for accessibility, usability, and reliability was “moderate,” at 123.9 (range, 97–152). The mean Flesch Reading Ease (FRE) score for readability was 49.77 (range, 22.7–74.3); 7/33 (21.2%) scored above 60, the recommended range for average visitors. Of the sites, 20/33 (60.6%) had been updated since January 1, 2014. There was no significant correlation between a website’s position in a search engine’s results and its DISCERN, LIDA, FRE, or total score.

Conclusions

With patients’ increasing reliance on Internet information, efforts to understand and improve websites’ quality and usefulness present unique opportunities in OSA surgery and beyond.

Background

The Internet is an increasingly important resource for patients seeking health information. An estimated 8 of 10 Internet users pursue online medical education, with 85% utilizing search engines to find it (Fox S. Health topics. Pew Internet and American Life Project. http://pewinternet.org/Reports/2011/Health Topics.aspx. Viewed Feb 27 2016; Ybarra and Suman 2006). However, studies have shown poor quality and inaccurate information on websites regarding numerous different medical and surgical conditions (Biermann et al. 2000; Impicciatore et al. 1997; Soot et al. 1999). This is concerning in an environment where nearly three-fourths of patients using the Internet say their findings influence their treatment decisions (Rainie and Fox S. The Online Health Care Revolution: The Internet’s powerful influence on “health seekers”. http://www.pewinternet.org/2000/11/26/the-online-health-care-revolution. Viewed March 3 2016).

Obstructive sleep apnea (OSA) is a major public health burden (Yaggi et al. 2005; Peker et al. 2002) with substantial socioeconomic impact (Kapur 2010; Mulgrew et al. 2007; Omachi et al. 2009). Patients with OSA are treated by a variety of specialists including internists, neurologists, psychiatrists, and otolaryngologists. The most commonly prescribed initial treatment is continuous positive airway pressure (CPAP), but more than 50% of patients fail, which suggests surgery as a promising alternative (Gay et al. 2006). Indeed, sleep surgery is being performed at increasing rates nationwide (Ishman et al. 2014). Due to the risk and variability in outcomes of different management options for OSA, the potential harms from misinformation can be catastrophic. To date, no studies have examined the quality of web-based information related to OSA surgery.

The main objective of this study was to assess, using several validated instruments, the websites that patients encounter when searching online for OSA surgery information. Our hypothesis was that high-quality information on this topic would be scarce and that useful resources would be lacking overall. Identifying such gaps and focusing on areas of need would be valuable in setting future priorities for patient education efforts. Additionally, this analysis would provide guidance for providers and patients on suggested websites for OSA treatment information.

Methods

This study received approval by the Northwestern University Institutional Review Board.

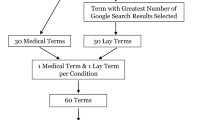

Search engine query

“Sleep surgery” was chosen as the initial search term and entered into Google, MSN Bing, and Yahoo! search engines. Utilizing Google AdWords (Google AdWords Keyword Planner. https://adwords.google.com/KeywordPlanner. Viewed January 1 2015), the most commonly associated search term “sleep apnea surgery” was identified and also entered into the three search engines to allow for a broad review of sites patients would encounter. Inclusion in the study required that a website be free, written in English, non-duplicate, and a source of online health information. Search engine query and collection of websites were performed on June 1, 2016.

The first 25 results of each search engine query were screened against the inclusion criteria. Each unique site was assessed for content quality, accessibility, usability, reliability, and readability using validated instruments. The date of last update for each site was also documented.

Validated assessment instruments

For quality, the DISCERN instrument was used (Charnock et al. 1999; Shepperd et al. 2002). This 16-item questionnaire is a validated tool that measures quality of online health information (Kaicker et al. 2010; Batchelor and Ohya 2009). Example questions include “is it clear what sources of information were used to compile the publication?” and “does it describe the benefits/risks of treatments?” Each question is rated on a 5-point scale, for a maximum DISCERN score of 80. Each website was categorized as “excellent” (68–80), “good” (55–67), “fair” (42–54), “poor” (29–41), or “very poor” (16–28).
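The banding described above can be sketched in a few lines of Python. This is only an illustration of the cut-offs stated in the text, not the authors' scoring procedure; the function names are hypothetical, and treating each lower bound as inclusive for fractional (rater-averaged) totals is an assumption.

```python
# Category cut-offs as stated in the text (total score range 16-80).
# Lower bounds are treated as inclusive; how fractional, rater-averaged
# totals between bands were handled is an assumption of this sketch.
DISCERN_BANDS = [
    (68, "excellent"),
    (55, "good"),
    (42, "fair"),
    (29, "poor"),
    (16, "very poor"),
]


def discern_total(item_ratings):
    """Sum the sixteen item ratings, each on a 1-5 scale."""
    if len(item_ratings) != 16:
        raise ValueError("DISCERN has 16 items")
    if any(r < 1 or r > 5 for r in item_ratings):
        raise ValueError("each item is rated from 1 to 5")
    return sum(item_ratings)


def discern_category(total):
    """Map a total DISCERN score to the category label used in the text."""
    if not 16 <= total <= 80:
        raise ValueError("total must lie between 16 and 80")
    for lower_bound, label in DISCERN_BANDS:
        if total >= lower_bound:
            return label
```

For instance, a site rated 4 on every item would total 64 and fall in the “good” band.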

For accessibility, usability, and reliability, the LIDA instrument was used. This 41-item questionnaire is a validated tool to examine these three domains of quality for Internet resources (Minervation. The Minervation validation instrument for healthcare websites. Available at: http://www.minervation.com/lida-tool/. Accessed March 20 2015). Questions 1–6 pertain to accessibility and assess a site’s code and setup for compliance with World Wide Web Consortium standards, as well as need for registration. Usability is assessed in questions 7–24, which examine website clarity, consistency of layout, and browsing/interactive abilities. Lastly, questions 25–41 assess reliability with questions focusing on frequency of updates, conflicts of interest, and accuracy of content. The maximum score for each domain is 60, 54, and 51 respectively. Each question assessed by raters is rated on a 0 (“Never”) to 3 (“Always”) scale. The raw scores for each category were converted into percentages, and were classified as “high” (>90%), “moderate” (50–90%), or “low” (< 50%).
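The conversion from raw LIDA domain scores to percentages and classes can be sketched as follows. The domain maxima and thresholds are those stated in the text; the function and dictionary names are hypothetical, and this is an illustration rather than the instrument's official scoring code.

```python
# Maximum raw score per LIDA domain, as stated in the text.
LIDA_MAX = {"accessibility": 60, "usability": 54, "reliability": 51}


def lida_percent(domain, raw_score):
    """Convert a raw domain score to a percentage of that domain's maximum."""
    maximum = LIDA_MAX[domain]
    if not 0 <= raw_score <= maximum:
        raise ValueError("raw score outside the domain's range")
    return 100.0 * raw_score / maximum


def lida_class(percent):
    """Classify a percentage: high (>90), moderate (50-90), or low (<50)."""
    if percent > 90:
        return "high"
    if percent >= 50:
        return "moderate"
    return "low"
```

A raw usability score of 27 out of 54, for example, converts to 50% and sits at the lower edge of the “moderate” class.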

For readability, the Flesch Reading Ease (FRE) score was used. Each site’s text was copied and pasted into a Microsoft Word 2010 (Microsoft Corp., Redmond, Washington) document (Microsoft Corporation 2010). All efforts were made to remove author names, hyperlinks, non-standard text formatting, dates, and abbreviations to avoid artificially lowering scores (Goslin and Elhassan 2013). Word’s grammar check function calculates the FRE score on a 0–100 scale, with higher scores indicating greater ease of reading.
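For readers who want to see what the FRE score measures, the standard Flesch formula is 206.835 − 1.015 × (words per sentence) − 84.6 × (syllables per word). The sketch below applies it in Python. It is not Microsoft Word's implementation: the syllable counter is a rough vowel-group heuristic introduced here for illustration, so its scores will differ somewhat from those Word reports.

```python
import re


def flesch_reading_ease(words, sentences, syllables):
    """Standard Flesch Reading Ease formula applied to raw counts."""
    if words <= 0 or sentences <= 0:
        raise ValueError("need at least one word and one sentence")
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def count_syllables(word):
    """Rough heuristic (an assumption): count runs of consecutive vowels."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Drop a likely silent final 'e' (but not '-le', as in "table").
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def fre_from_text(text):
    """Estimate FRE for plain text using simple regex tokenization."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return flesch_reading_ease(len(words), len(sentences), syllables)
```

Because the formula penalizes syllables per word heavily, polysyllabic medical terms pull scores down even when sentences are short.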

Data collection and analysis

After the search engine query was performed, two authors (C.G. and H.Q.) evaluated each website independently. The scores for each question were then averaged to give an overall score that was utilized for results and statistical analysis. SPSS version 21 (SPSS Inc., Chicago, IL) was used for summary data and statistical analysis. Inter-observer reliability was measured separately for the DISCERN and LIDA instruments using Cohen’s weighted kappa coefficient, with agreement considered significant at kappa > 0.6. Differences in mean scores between types of websites were analyzed using the Wilcoxon rank sum test, with threshold for significance set at p < 0.05. The correlation of DISCERN, LIDA, FRE, and total score with the position at which a website appeared in each search engine’s results was analyzed using the Spearman correlation coefficient.
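The analysis itself was run in SPSS. Purely as an illustration of two of the statistics named above, the following dependency-free Python sketch computes a weighted kappa and a Spearman coefficient. The linear disagreement weights are an assumption (the weighting scheme is not stated in the text), and all function names are hypothetical. The Wilcoxon rank sum test is not sketched here.

```python
def weighted_kappa(rater_a, rater_b, categories):
    """Cohen's weighted kappa with linear weights (weighting is an assumption)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty rating lists")
    k = len(categories)
    if k < 2:
        raise ValueError("need at least two ordered categories")
    index = {c: i for i, c in enumerate(categories)}
    n = len(rater_a)
    # Observed proportion of items in each (rater A, rater B) cell.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1.0 / n
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    disagree_obs = 0.0
    disagree_exp = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)  # 0 on the diagonal, 1 at the extremes
            disagree_obs += weight * observed[i][j]
            disagree_exp += weight * row[i] * col[j]
    if disagree_exp == 0:
        raise ValueError("kappa is undefined when both raters use one category")
    return 1.0 - disagree_obs / disagree_exp


def average_ranks(values):
    """Rank values from 1, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2.0 + 1.0
        for pos in range(start, end + 1):
            ranks[order[pos]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5
```

With search position as `x` and a site's score as `y`, a `spearman_rho` near zero would correspond to the absence of a monotonic relationship between ranking and score.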

Results

“Sleep surgery” was searched for an average of 1,703,991 (SD = 166,585) times per month from June 2015 to June 2016. The most commonly associated search term “sleep apnea surgery” was searched an average of 1,818,541 (SD = 159,541) times per month from June 2015 to June 2016.

Of the 150 websites identified using the Google, MSN Bing, and Yahoo! search engines, 33 (22.0%) unique websites met inclusion criteria. Reasons for exclusion of the other 117 websites included duplicate results (n = 88, 75.2%), news stories (n = 15, 12.8%), advertisements/commercials (n = 5, 4.3%), online videos (n = 3, 2.6%), non-functioning or hacked sites (n = 3, 2.6%), research journal websites (n = 2, 1.7%), and online discussion forums (n = 1, 0.9%). Most websites were academically/government affiliated (10/33, 30.3%), health information sites (8/33, 24.2%), or non-profit/hospital related (8/33, 24.2%), as shown in Fig. 1.

Inter-observer reliability for the DISCERN instrument was significant (k = 0.688). The mean overall DISCERN score for quality was “good,” at 56.6 (range, 22–79; SD = 14.0). The DISCERN instrument rated 7/33 (21.2%) websites as “excellent,” 13/33 (39.4%) as “good,” 9/33 (27.3%) as “fair,” 2/33 (6.1%) as “poor,” and 2/33 (6.1%) as “very poor.” Figure 2 displays the number of websites in each DISCERN category.

Inter-observer reliability for the LIDA instrument was significant (k = 0.814). The mean overall LIDA score for accessibility, usability, and reliability was “moderate,” at 123.9 (75.1%; range, 97–152; SD = 14.2). The distribution of LIDA scores for accessibility, usability, and reliability for all 33 websites is shown in Fig. 3.

The mean score for accessibility was “moderate” at 48.2 (80.3%) (SD = 4.4). Only 2/33 (6.1%) had “high” LIDA accessibility scores and the remaining 31/33 (93.9%) had “moderate” scores. The mean score for usability was “moderate” at 42.3 (78.3%) (SD = 7.3) for all 33 websites. There were 5/33 (15.2%) websites with “high” LIDA usability scores, 27/33 (81.8%) with “moderate” scores, and 1/33 (3.0%) with “low” usability scores. The mean score for reliability for all 33 websites was “moderate” at 33.4 (65.5%) (SD = 10.7). There were 5/33 (15.2%) websites with “high” LIDA reliability scores, 21/33 (63.6%) with “moderate” scores, and 7/33 (21.2%) with “low” reliability scores. The number of websites rated “high,” “moderate,” or “low” in each LIDA instrument category is displayed in Fig. 4.

The mean FRE score for readability was 49.8 (range 22.7–74.3, SD 13.3). Only 7/33 (21.2%) websites scored above 60, the recommended range for average visitors (Van der Marel et al. 2009; D’Alessandro et al. 2001). Overall, 20/33 (60.6%) sites had been updated since January 1, 2014.

The websites with the top 5 aggregate totals of the DISCERN, LIDA, and FRE scores are listed in Table 1. Two of these five websites are academic/government-sponsored sites, whereas the remaining three are health information sites. There were no significant differences between mean scores for academic versus non-academic websites in DISCERN score (p = 0.98), LIDA score (p = 0.50), FRE score (p = 0.57), or aggregate total score (p = 0.92). There was no significant correlation between a website’s rank in each search engine’s results and its DISCERN score, LIDA score, FRE score, or aggregate total.

Discussion

An estimated one-third of all adults and children with sleep disorders first present to an otolaryngologist, and many of them are diagnosed with OSA (Yaremchuk and Wardrop 2010). These patients utilize Internet resources in important ways: more than half seek contact with a medical professional because of information they have found online (Ybarra and Suman 2006; Pusz and Brietzke 2012) and more than 70% report it significantly influences their treatment decisions (Rainie and Fox S. The Online Health Care Revolution: The Internet’s powerful influence on “health seekers”. http://www.pewinternet.org/2000/11/26/the-online-health-care-revolution. Viewed March 3 2016). Search engines are the mode of choice for patients seeking information on the web (Ybarra and Suman 2006), but at present there are no studies examining the quality and usefulness of these resources for sleep surgery. This is important as surgical procedures for OSA carry unique benefits and risks, and are being performed at record levels nationwide (Ishman et al. 2014).

In our study examining online resources for OSA surgery, there is, unsurprisingly, significant heterogeneity in the quality and utility of websites. Overall, though, the results of this study are encouraging. Quality was ‘excellent’ or ‘good’ for the majority of websites studied (20/33, 60.6%), and at least ‘fair’ for 29/33 (87.9%). The average DISCERN score of 56.6 is higher than that reported for any other topic examined within the otolaryngology literature, including OSA (Goslin and Elhassan 2013; Pusz and Brietzke 2012; Langille et al. 2012; Alamoudi and Hong 2015; McKearney 2013).

These encouraging results carried over to non-quality measures as well. The majority of sites studied had at least “moderate” accessibility, usability, and reliability. This compares favorably to a previous study examining LIDA in other otolaryngologic disorders (Goslin and Elhassan 2013). That study also found that the vast majority of sites had moderate ratings for all three non-quality measures, with reliability having the widest range of scores. This reflects the homogeneous nature of sites when it comes to user interface and interactivity, but heterogeneity in frequency of updates and sources of information. No studies examining LIDA or similar measures in OSA specifically have been performed previously.

There remain several shortcomings of online resources for OSA surgery. Seven of the 33 sites in our study scored “low” for reliability. The average readability score was 49.8, and fewer than one-fourth of sites had a readability score above 60, which is often cited as the minimum recommended for patients (Van der Marel et al. 2009; D’Alessandro et al. 2001). Further, nearly 40% (13/33) of sites had not been updated since January 1, 2014. These are concerning findings: even the most comprehensive, high-quality online materials will be unhelpful and potentially dangerous if they are outdated or cannot be understood by patients. This is especially true in sleep surgery, as it is an evolving field in which new paradigms emerge frequently (Lin et al. 2008; Kezirian 2011).

Why are top search results not scoring well on quality and adjunctive measures of usefulness? The Wikipedia page for “sleep surgery” was one of the top 2 results in all search engine queries, yet it was not one of the top-scoring websites. In this site’s score breakdown, its LIDA score is close to those of the top websites, but its quality score (56) is much lower. Comparing individual domain scores, the site suffered from not having a more thorough discussion of treatment options, especially of surgical risks, medical management, and overall impact on quality of life. It is worth noting that on a more contemporary review of the website, some of these issues seem to have improved. When we examined sites that we, anecdotally, often recommend to patients (entnet.org, sleepeducation.org), we found that they suffered mostly from lower LIDA scores for usability and reliability compared with our top-scoring websites. We continue to feel there is utility to all of these resources individually, but this highlights the challenges clinicians and patients face when utilizing online information.

This study has several limitations. First, we chose the term ‘sleep surgery’ by consensus of the authors. It was felt that this would represent a simple, common-language phrase that would be frequently used by patients seeking further information about surgery for OSA. Consideration was given to selecting ‘sleep apnea treatment’ or simply ‘sleep apnea,’ but the authors felt these terms would return resources focusing on non-surgical issues related to OSA, or those favoring CPAP therapy. A previous study examining quality in OSA sites found lower overall quality, though more frequent updates (Langille et al. 2012). In order to best include other sites these patients may encounter, Google AdWords was used to find the most commonly associated search term, which was ‘sleep apnea surgery.’ Given the significant amount of website overlap between these terms, our findings likely represent the majority of information sources patients would find in their searches. We executed our search with only 3 search engines, although they represent nearly all United States activity (Top 15 Most Popular Search Engines: EBiz. www.ebizmba.com/articles/search-engines. Viewed February 24 2016). Searches were limited to the first 25 sites from each search because nearly all users click within the first 2–3 results pages (Goslin and Elhassan 2013).

Our study utilized the DISCERN, LIDA, and FRE instruments to measure quality, accessibility, usability, reliability, and readability, and each has shortcomings in its respective domain. However, they are among the few tested and validated tools for analyzing online health resources. In particular, the DISCERN and LIDA instruments rely on subjective scoring; however, our study’s significant kappa coefficients on these respective tests are reassuring. The FRE has inherent limitations in examining medical websites, as many complex jargon terms cannot simply be replaced, which skews scores lower. This applies to all websites evaluated, however, so comparisons remain helpful. Other scoring systems, like the Flesch-Kincaid Grade Level or Gunning Fog Index, can be used, but they correlate highly with FRE and have similar limitations. These instruments were chosen to provide a broad overview of website quality and adjunctive measures on the topic of OSA surgery, and we feel that they are effective in this.

The present study provides a useful overview of online health resources for patients pursuing sleep surgery. Overall, the quality and utility of these websites are strong, and greater than those reported in prior investigations in other fields of otolaryngology. Future research is needed to determine the cause of this finding, and to assess its impact on patient experience and outcomes. This information can help guide physicians when discussing OSA surgery with patients, as well as healthcare providers developing online medical education.

Conclusions

Patients utilize online resources for information on their health conditions and treatment options. On the topic of OSA surgery, the quality and usefulness of current online materials are good overall, and rated higher than other otolaryngologic topics analyzed with the same metrics. Physicians must stay abreast of Internet content to assist in patient education and guidance.

References

Alamoudi U, Hong P. Readability and Quality assessment of websites related to microtia and aural atresia. IJPO. 2015;79(2):151–6.

Batchelor JM, Ohya Y. Use of the DISCERN instrument by patients and health professionals to assess information resources on treatments for asthma and atopic dermatitis. Allergol Int. 2009;58:141–5.

Biermann JS, Golladay GJ, Greenfield MLVH, Baker LH. Evaluation of cancer information on the Internet. Cancer. 2000;86:381–90.

Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. 1999;53:105–11.

D’Alessandro DM, Kingsley P, Johnson-West J. The readability of pediatric patient education materials on the World Wide Web. Arch Pediatr Adolesc Med. 2001;155:807–12.

Fox S. Health topics. Pew Internet and American Life Project. http://pewinternet.org/Reports/2011/Health Topics.aspx. Viewed Feb 27, 2016.

Gay P, Weaver T, Loube D, et al. Evaluation of positive airway pressure treatment for sleep related breathing disorders in adults. Sleep. 2006;29(3):381–401.

Google AdWords Keyword Planner. https://adwords.google.com/KeywordPlanner. Viewed January 1, 2015.

Goslin RA, Elhassan HA. Evaluating Internet Health Resources in Ear, Nose, and Throat Surgery. Laryngoscope. 2013;123:1626–31.

Impicciatore P, Pandolfini C, Casella N, Bonati M. Reliability of health information for the public on the World Wide Web: systematic survey of advice on managing fever in children at home. BMJ. 1997;314:1875–9.

Ishman SL, Ishii LE, Gourin CG. Temporal trends in sleep apnea surgery: 1993-2010. Laryngoscope. 2014;124(5):1251–8.

Kaicker J, Debono VB, Dang W, et al. Assessment of the quality and variability of health information on chronic pain websites using the DISCERN instrument. BMC Med. 2010;8:59.

Kapur VK. Obstructive sleep apnea: diagnosis, epidemiology, and economics. Respir Care. 2010;55:1155–67.

Kezirian EJ. Nonresponders to pharyngeal surgery for obstructive sleep apnea: insights from drug-induced sleep endoscopy. Laryngoscope. 2011;121(6):1320–6.

Langille M, Veldhuyzen S, Shanavaz SA, Massoud E. Systematic evaluation of obstructive sleep apnea websites on the internet. J Otolaryngol Head Neck Surg. 2012;41(4):265–72.

Lin HC, Friedman M, Chang HW, Gurpinar B. The efficacy of multilevel surgery of the upper airway in adults with obstructive sleep apnea/hypopnea syndrome. Laryngoscope. 2008;118(5):902–8.

McKearney TC, McKearney RM. The quality and accuracy of internet information on the subject of ear tubes. IJPO. 2013;77:894–7.

Microsoft Corporation. Microsoft Office 2010 Standard Edition: Word [computer program]. Redmond, WA: Microsoft Corp; 2010.

Minervation. The Minervation validation instrument for healthcare websites. Available at: http://www.minervation.com/lida-tool/. Accessed March 20, 2015.

Mulgrew AT, Ryan CF, Fleetham JA, et al. The impact of obstructive sleep apnea and daytime sleepiness on work limitation. Sleep Med. 2007;9:42–53.

Omachi TA, Claman DM, Blanc PD, Eisner MD. Obstructive sleep apnea: a risk factor for work disability. Sleep. 2009;32(6):791–8.

Peker Y, Hedner J, Norum J, Kraiczi H, Carlson J. Increased incidence of cardiovascular disease in middle-aged men with obstructive sleep apnea: a 7-year follow-up. Am J Respir Crit Care Med. 2002;166:159–65.

Pusz MD, Brietzke SE. How good is Google? The quality of otolaryngology information on the internet. Otolaryngol Head Neck Surg. 2012;147(3):462–5.

Rainie L, Fox S. The Online Health Care Revolution: The Internet’s powerful influence on “health seekers”. http://www.pewinternet.org/2000/11/26/the-online-health-care-revolution. Viewed March 3, 2016.

Shepperd S, Charnock D, Cook A. A 5-star system for rating the quality of information based on DISCERN. Health Info Libr J. 2002;19:201–5.

Soot LC, Goneta GL, Edwards JM. Vascular surgery and the internet: a poor source of patient-oriented information. J Vasc Surg. 1999;30:84–91.

Top 15 Most Popular Search Engines: EBiz. www.ebizmba.com/articles/search-engines. Viewed February 24, 2016.

Van der Marel S, Duijvestein M, Hardwick JC, et al. Quality of web-based information on inflammatory bowel diseases. Inflamm Bowel Dis. 2009;15:1891–6.

Yaggi HK, Concato J, Kernan WN, Lichtman JH, Brass LM, Mohsenin V. Obstructive sleep apnea as a risk factor for stroke and death. N Engl J Med. 2005;353:2034–41.

Yaremchuk K, Wardrop PA. Sleep Medicine. San Diego, CA: Plural Publishing Inc; 2010.

Ybarra ML, Suman M. Help seeking behavior and the Internet: a national survey. Int J Med Inform. 2006;75:29–41.

Acknowledgements

We would like to thank Amy Yang M.S. of the Northwestern University Biostatistics Collaboration Center for her assistance with statistical analysis.

Funding

The authors have no external funding sources responsible for this research.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Authors’ contributions

CG conceived the study idea and was involved in data collection, data analysis, manuscript preparation, and final manuscript approval. HQ was involved in data collection, data analysis, and final manuscript approval. RK was involved in study creation, data analysis, and final manuscript approval. SL and RC were involved in study creation, data analysis, manuscript preparation, and final manuscript approval.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

This study received approval by the Northwestern University Institutional Review Board. Consent to participate has been obtained from all authors.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Gouveia, C.J., Qureshi, H.A., Kern, R.C. et al. An assessment of online information related to surgical obstructive sleep apnea treatment. Sleep Science Practice 1, 6 (2017). https://doi.org/10.1186/s41606-016-0007-y

DOI: https://doi.org/10.1186/s41606-016-0007-y