Abstract

Introduction

The abstracts of a conference are important for informing the participants about the results that are communicated. However, there is poor reporting in conference abstracts in disability research. This paper aims to assess the reporting in the abstracts presented at the 5th African Network for Evidence-to-Action in Disability (AfriNEAD) Conference in Ghana.

Methods

This descriptive study extracted information from the abstracts presented at the 5th AfriNEAD Conference. Three reviewers independently reviewed all the included abstracts using a predefined data extraction form. Descriptive statistics were used to analyze the extracted information, using Stata version 15.

Results

Of the 76 abstracts assessed, 54 met the inclusion criteria, while 22 were excluded. More than half of all the included abstracts (32/54; 59.26%) were studies conducted in Ghana. Some of the included abstracts did not report on the study design (37/54; 68.5%), the type of analysis performed (30/54; 55.56%), the sampling (27/54; 50%), and the sample size (18/54; 33.33%). Almost all the included abstracts did not report the age distribution and the gender of the participants.

Conclusion

The study findings confirm that there is poor reporting of methods and findings in conference abstracts. Future conference organizers should critically examine abstracts to ensure that these issues are adequately addressed, so that findings are effectively communicated to participants.

Introduction

An abstract is a condensed version of a full scientific paper that describes the aim of a study, the methods employed, the results, and the conclusions, including implications for policy and practitioners [1]. The abstract of every article is important to inform the reader about the results that are communicated [2]. In particular, the abstract is relevant as readers often make their preliminary assessment of the study at this stage. In fact, some readers, particularly clinicians, may use information from abstracts to inform their clinical decisions, due to their having limited time and resources [3].

Moreover, some researchers may never publish their studies as full journal articles, and so the only published record of a study might be the abstract in the conference proceedings. Presenting an abstract at a conference often yields insights, questions, and interpretations that can alter and improve the final manuscript, should the authors decide to publish the study in a peer-reviewed journal. In particular, effective abstracts describe the importance of the scientific research performed [1, 4]. The participants in a conference usually make their preliminary assessment of a study using the information presented in the conference abstract. However, abstracts presented at conferences have largely been criticized as poor [1, 2], particularly in disability research. Poor reporting in conference abstracts may have several implications, notably the communication of incomplete information on findings and conclusions.

Recently, several studies have been undertaken on reporting in abstracts in disability research [5,6,7,8,9]. These studies have largely focused on poor reporting of the methods employed, including sampling, sample size selection, design, and ethical considerations [7, 8, 10]. However, none of these studies attempted to assess poor reporting in conference abstracts. A literature search identified only a few reviews and commentaries on abstracts, and these focused on the reporting quality of abstracts of randomized controlled trials in psychiatry [3] and on practical lessons for writing conference abstracts [1, 2, 4]. None of them attempted to assess reporting in abstracts from a scientific conference on disability.

The African Network for Evidence-to-Action in Disability (AfriNEAD), a stakeholder group in disability that works to strengthen evidence-based interventions and policies, has organized a series of expert meetings and symposia in different settings in Africa. In previous symposia, the network upgraded the medium into a scientific conference, so as to strengthen collaboration and transform evidence into action. The College of Health Sciences at Kwame Nkrumah University of Science and Technology collaborated with the University of Stellenbosch to host the fifth scientific AfriNEAD conference in Ghana in 2017.

This study aims to assess incomplete reporting in abstracts presented at the 5th AfriNEAD Conference in Ghana. In particular, the study assesses the content of abstracts in relation to information on the methods used, the results, and the conclusions, as well as how the abstracts meet the standards for reporting in abstracts. The study was facilitated by the following standards for reporting in abstracts: Strengthening the Reporting of Observational studies in Epidemiology (STROBE) Statement—Items to be included when reporting observational studies in a conference abstract [11, 12], as well as previous literature addressing methodological issues in abstracts [13,14,15].

Methods

Eligibility criteria

The study employed a descriptive design to assess the reporting in abstracts presented at the 5th AfriNEAD Conference, held on 7–9 August 2017 in Ghana. The study assessed the content of the abstracts against the standards for reporting [11, 12]. Abstracts included in the study were those that focused on one of the conference sub-themes, namely the following: children and youth with disability; education: early to tertiary; economic empowerment; development process in Africa: poverty, politics, and indigenous knowledge; health and HIV/AIDS; systems of community-based rehabilitation; holistic wellness, sport, recreation, sexuality, and spirituality; and research evidence and utilization, as well as abstracts of side events. The included abstracts were either structured or unstructured. However, both structured and unstructured abstracts were required to contain adequate information covering the background to the study, the methods used, the results, and the conclusions. Unstructured abstracts that merely gave a brief narrative about the study, without adequately capturing the background, the methods, the results, and the conclusions, were excluded.

Selection of the included abstracts

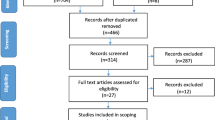

Three reviewers independently reviewed the titles and the content of the printed conference proceedings, and then agreed on those that met the selection criteria. All the conference abstracts that were agreed on were included in the study. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow chart for systematic reviews [16] was used to illustrate the selection process (see Fig. 1).

Data extraction

A data extraction form was developed to extract information from all the included abstracts (see Additional file 1). The data extraction form was developed using the following reporting standards: Strengthening the Reporting of Observational studies in Epidemiology (STROBE) Statement—Items to be included when reporting observational studies in a conference abstract [11, 12], and variables of interest that have been captured in previous literature [13,14,15]. The data extraction form was divided into subsections, and it covered information on the background of the authors, the sub-themes, the objective of the study, the methodological issues, and the results. Three reviewers were involved in the extraction of data from all the included abstracts.

Data synthesis

Descriptive statistics, including frequencies, means, standard deviations, and percentages, were used to present the findings. Tables and figures were used to present the results. The analysis was performed using Stata version 15.
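As a rough illustration of the kind of frequency and percentage summary described above, the following Python sketch tabulates one extracted item. The study itself used Stata version 15, so this is not the authors' code; the function name `frequency_table` and the category labels are hypothetical, and the counts (36 reported, 18 not reported, out of 54) are taken from the sample size item in the Results.

```python
from collections import Counter


def frequency_table(values):
    """Return {category: (count, percent)}, percent rounded to 2 decimals."""
    counts = Counter(values)
    total = sum(counts.values())
    return {cat: (n, round(100 * n / total, 2)) for cat, n in counts.items()}


# Hypothetical coding of one extraction-form item across the 54 included abstracts
sample_size_item = ["reported"] * 36 + ["not reported"] * 18

table = frequency_table(sample_size_item)
for category, (n, pct) in table.items():
    print(f"{category}: {n}/{len(sample_size_item)} ({pct}%)")
```

The same function could be applied to any other categorical item on the extraction form, such as study design or sampling technique.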

Results

Description of the abstracts reviewed

The study screened a total of 76 titles of conference abstracts. Of these, 59 met the inclusion criteria, while 17 were excluded. After a review of the full abstracts, a further five were excluded. Overall, 54 abstracts were included in the study (see Fig. 1).
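The selection counts above can be checked with simple arithmetic. The following is a minimal Python sketch; the variable names are illustrative and are not part of the study.

```python
# Counts reported for the two screening stages
titles_screened = 76
excluded_at_title_stage = 17
excluded_at_full_abstract_stage = 5

# Abstracts remaining after each stage
eligible_after_title_stage = titles_screened - excluded_at_title_stage
included = eligible_after_title_stage - excluded_at_full_abstract_stage
total_excluded = excluded_at_title_stage + excluded_at_full_abstract_stage

print(f"Eligible after title screening: {eligible_after_title_stage}")
print(f"Included in the study: {included}")
print(f"Excluded in total: {total_excluded}")
```

The total of excluded abstracts across both stages corresponds to the figure given in the abstract of this paper.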

Characteristics of the included abstracts

More than half of all the included abstracts (32/54; 59.26%) were studies that reported findings from Ghana. About a third of the included abstracts (16/54; 29.63%) focused on the sub-theme “education: early to tertiary,” while more than a tenth each focused on the sub-themes “holistic wellness, sport, recreation, sexuality, and spirituality” (8/54; 14.81%), “children and youth with disability” (7/54; 12.96%), and “health and HIV/AIDS” (7/54; 12.96%). More than two fifths (24/54; 44.44%) of the abstracts targeted people with disabilities, 17/54 (31.48%) used professionals (nurses, doctors, teachers, and stakeholders, including education directors and coordinators), and 5/54 (9.26%) used parents and caregivers (see Table 1).

The reporting of methods in the conference abstracts

Two thirds (36/54; 66.67%) of the included abstracts reported the sample size, while 18/54 (33.33%) had no information on sample size (see Fig. 2). Most of the included abstracts (37/54; 68.52%) did not report the study design. Of the 17 abstracts that reported the study design, almost half (8/17; 47.06%) used a descriptive design (see Table 2). Most of the abstracts (45/54; 83.33%) reported the methods employed, while 9/54 (16.67%) had no information on the methods employed. Of the abstracts that reported the methods, 35/45 (77.78%) stated that qualitative methods were used (see Table 2).

The study showed that half of the included abstracts (27/54; 50%) did not report the sampling techniques used. Of the abstracts that reported the sampling, 18/27 (66.67%) used purposive sampling (see Table 2). More than half of the abstracts (30/54; 55.56%) did not report the type of analysis performed. However, of the abstracts that reported such information, 17/24 (70.83%) reported thematic analysis.
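Because some of these percentages use all 54 included abstracts as the denominator while others use only the abstracts that reported a given item, it may help to make the denominators explicit. The Python sketch below does this for the sampling and analysis items; the helper `percent` and the variable names are hypothetical, and only the counts come from the text.

```python
def percent(numerator, denominator):
    """Percentage rounded to two decimal places."""
    return round(100 * numerator / denominator, 2)


total_included = 54

# Sampling technique: the conditional denominator is the reporting subset
sampling_not_reported = 27
sampling_reported = total_included - sampling_not_reported
purposive = 18

# Type of analysis: same pattern
analysis_not_reported = 30
analysis_reported = total_included - analysis_not_reported
thematic = 17

print("Sampling not reported (% of all):", percent(sampling_not_reported, total_included))
print("Purposive (% of those reporting):", percent(purposive, sampling_reported))
print("Analysis not reported (% of all):", percent(analysis_not_reported, total_included))
print("Thematic (% of those reporting):", percent(thematic, analysis_reported))
```

Stating both the numerator and the denominator next to each percentage, as the text does, lets readers see which subset a figure refers to.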

The majority of the included abstracts (50/54; 92.59%) did not report the analysis software used for the study. Only a few of the abstracts (4/54; 7.41%) reported SPSS as the statistical tool for the analysis. None of the included abstracts reported the date of conducting the study in the abstract.

The reporting of findings in the conference abstracts

The study extracted information about the results reported in the abstracts (see Table 3). None of the included abstracts reported the age distribution of participants in the abstracts. Similarly, most of the included abstracts (53/54; 98.15%) did not report information about the gender of the participants. Most of the included abstracts (37/54; 68.52%) reported results thematically, while a few (7/54; 12.96%) used descriptive statistics (see Table 3).

The majority of the included abstracts (48/54; 88.89%) did not report quantitative information that can be used to establish associations between the dependent and the independent variables. Of the six included abstracts that were eligible to report such information, only one abstract reported such associations. Most of the included abstracts (43/54; 79.63%) were eligible to report on the primary outcome of the participants. Of the abstracts that were eligible to report on the primary outcome, 39/43 (90.70%) reported on such an outcome, while 4/43 (9.30%) did not (see Table 3).

Discussion

Strengths and limitations

Our study has some strengths and limitations, which need to be explained. In terms of strengths, the study developed a data extraction form to extract information. Also, the authors followed due process, to ensure that adequate information was gathered and that the information was checked, so as to limit the risk of bias in the reporting of findings (see Table 4). Three reviewers independently reviewed the included abstracts. The reporting of the abstracts confirmed the findings of previous studies on methodological issues in disability research.

Our study has several limitations, however, which are mostly associated with the scope and type of the included abstracts. The study was limited to abstracts from one AfriNEAD conference. This means that the sample may be too small to support inferences about disability research in general. Limiting the review to one AfriNEAD conference also means that similar incomplete reporting in past AfriNEAD symposia could not be assessed.

The reporting of methods and results in the conference abstracts

In the current study, 68.5% of the included abstracts lacked information on the study design, while 14.8% did not report the type of data. This finding implies that there is poor reporting of methodological information, namely study design and type of data used. The incomplete reporting in abstracts implies that readers may have difficulty understanding how the study was conceptualized, as well as the type of data that was used to achieve the results. In particular, reporting study design and methods in conference abstracts is important to inform readers about the broader picture of the study, including the mix of data that is required to achieve the study objective [2]. Omission of such information at the abstract level may create uncertainty among readers. Poor reporting of methods means that readers cannot make concrete and firm conclusions about the subject. This finding can inform future conference organizers on effective ways to address methodological issues. In particular, future scientific abstracts should adequately highlight the relevant methodological issues, such as study design and methods to effectively communicate the findings [2].

The study highlighted that two thirds of the included abstracts reported the sample size, while a third did not report such information. Reporting sample size in the abstract is relevant to provide evidence about the participants. Reporting sample size further enables the reader to better understand the representativeness and generalizability of the findings. Although most of the included abstracts reported the sample size, the 33.3% that lacked information on the sample size could provide misleading information to readers. This implies that readers may not be adequately informed about the findings presented in the abstracts. The abstracts that lacked information on sample size demonstrate poor reporting. This finding confirms the findings of earlier studies on incomplete reporting [1, 2, 4]. Conference abstracts, particularly in disability research, should therefore adequately report the sample size, so as to inform readers. Scientific committees of conferences, particularly in disability research, should ensure that the sample size of participants is captured in the abstracts, to effectively communicate the findings.

In addition, reporting of the sampling technique used in abstracts is relevant to inform readers about the representativeness of participants, so as to avoid bias. However, half (50%) of the included abstracts did not report on the sampling technique. Lack of information on sampling technique in the abstract implies that readers may not be able to judge whether the findings reported in the abstract can be generalized. This finding confirms earlier incomplete reporting in disability research [7, 8, 10]. In particular, the poor reporting in previous disability research is mostly associated with poor reporting of sampling. Our finding demonstrates that conference abstracts should aim to report information on the sampling approach, in order to help readers understand the process involved in selecting participants.

Furthermore, the current study highlighted that 55.56% of the included abstracts did not report the type of analysis performed (whether descriptive or inferential statistics or a qualitative analysis approach). Similarly, some background characteristics, namely age distribution and the gender of participants, were not reported in the abstracts. This finding demonstrates that there is incomplete reporting of results in the abstracts. The results section of the conference abstract appears to be the most significant section that addresses the background characteristics of participants and the primary and secondary outcomes [2]. However, the poor reporting of findings indicates that conference participants will not be adequately informed about the research question and therefore will be unable to explore outcomes, associations, or risk factors. This finding demonstrates that conference abstracts should ensure that the results section includes all relevant information, including age and gender of participants. The poor reporting of results in conference abstracts confirms the findings of earlier studies in disability research [7, 8, 10]. The poor reporting in disability research has largely pertained to incomplete reporting of findings. In some instances, incomplete reporting is largely recorded in full papers, rather than in abstracts.

Conclusion

The study aimed to assess the reporting in the abstracts presented at the 5th African Network for Evidence-to-Action in Disability (AfriNEAD) Conference in Ghana. Our findings confirm that there is poor reporting of methods and findings in conference abstracts. Poor reporting is associated with lack of information about the study design, the methods used, the sampling, the sample size, and the type of analysis performed. Our findings indicate that conference abstracts should adequately address all relevant issues when reporting evidence. In particular, abstracts submitted to future conferences on disability research should address the study design, the type of data included, the sampling, the sample size, and the type of analysis employed.

Conference organizers should critically examine abstracts to ensure that these methodological issues are adequately addressed, so that findings are effectively communicated to the participants. The call for abstracts should clearly elaborate the reporting standards, particularly the required content in terms of objectives, methods, results, and conclusions, as well as practical implications for policy and practice. This can help to avoid any incomplete reporting of information in conference abstracts.

Abbreviations

- AfriNEAD: African Network for Evidence-to-Action in Disability
- ENTREQ: Enhancing Transparency in Reporting the Synthesis of Qualitative Research
- GRIPP2: Guidance for Reporting Involvement of Patients and the Public
- SD: Standard deviation
- STROBE: Strengthening the Reporting of Observational studies in Epidemiology

References

Pierson DJ. How to write an abstract that will be accepted for presentation at a national meeting. Respir Care. 2004;49(10):1206–12.

Andrade C. How to write a good abstract for a scientific paper or conference presentation. Indian J Psychiatry. 2011;53(2):172–5.

Song SY, Kim B, Kim I, Kim S, Kwon M, Han C, et al. Assessing reporting quality of randomized controlled trial abstracts in psychiatry: adherence to CONSORT for abstracts: a systematic review. PLoS One. 2017;12(11):e0187807.

Higgins M, Eogan M, O’Donoghue K, Russell N. How to write an abstract that will be accepted. BMJ. 2013;346:f2974.

Danieli A, Woodhams C. Emancipatory research methodology and disability: a critique. Int J Soc Res Methodol. 2005;8(4):281–96.

Topp J. Narrative analysis as a methodology for disability research: experiences of pregnancy. Disability Studies Conference; 26–28 July, 2004. Lancaster: The Open University; 2004.

Kiger G. Disability simulations: logical, methodological and ethical issues. Disabil Handicap Soc. 1992;7(1):71–8.

Chowdhury M, Benson BA. Use of differential reinforcement to reduce behavior problems in adults with intellectual disabilities: a methodological review. Res Dev Disabil. 2011;32(2):383–94.

Melbøe L, Hansen KL, Johnsen B-E, Fedreheim GE, Dinesen T, Minde G-T, et al. Ethical and methodological issues in research with Sami experiencing disability. Int J Circumpolar Health. 2016;75(1):31656.

Heyvaert M, Maes B, Onghena P. Mixed methods research synthesis: definition, framework, and potential. Qual Quant. 2013;47(2):659–76.

EQUATOR Network. STROBE checklist of items to be included when reporting observational studies in conference abstracts. UK: Centre for Statistics in Medicine, NDORMS, University of Oxford; 2017. http://www.equator-network.org/reporting-guidelines/strobe-abstracts/. Accessed 5 Dec 2018.

Von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP, et al. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) Statement: guidelines for reporting observational studies. Int J Surg. 2014;12(12):1495–9.

O’Brien BC, Harris IB, Beckman TJ, Reed DA, Cook DA. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med. 2014;89(9):1245–51.

Korevaar DA, Cohen JF, de Ronde MW, Virgili G, Dickersin K, Bossuyt PM. Reporting weaknesses in conference abstracts of diagnostic accuracy studies in ophthalmology. JAMA Ophthalmol. 2015;133(12):1464–7.

Malterud K. Qualitative research: standards, challenges, and guidelines. Lancet. 2001;358(9280):483–8.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097.

Malonje P, editor. Practicing inclusive early childhood development: an assessment of effectiveness of early childhood development and social intervention for young children with disabilities in Malawi, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Efua EMA-T, editor. Parental involvement: rethinking the right to education for children with disabilities, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Aboagye AS, Edusei KA, Acheampong E, Mprah KW, editors. Caring for children with cerebral palsy: experiences of caregivers at Komfo Anokye Teaching Hospital in Kumasi, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Taylor E, Dogbe J, Twum F, Kotei J, Dadzie E, editors. Disability and leadership: assessing the perceptions of KNUST students towards having disabled persons as leaders, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Kyeremateng J, Edusei KA, Dogbe JA, editors. Experiences of caregivers of children with cerebral palsy attending a teaching hospital in Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Owusu AS, Adjei OR, Dogbe JA, Owusu I, editors. Assessment of level of participation of children with disabilities in extracurricular activities at basic schools in Kumasi Metropolis, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Maria K, Tim C, Ngufuan R, Beato L, Boaki N, Ellie C, et al., editors. A comparative analysis of objective and subjective inequality between households with and without disabilities in Liberia, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Sumaila A, Owusu I, Mprah KW, Acheampong E, editors. An assessment of government support to special schools in the Kumasi Metropolis, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Awini A, editor. Social interaction patterns between pupils with and without visual impairments in classroom activities in inclusive schools in Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Ammaru Y, Edusei AK, Acheampong E, Mprah K, editors. Experiences, challenges and coping strategies of teachers in some selected special schools in Ashanti Region, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Bannieh S, Acheampong E, Vampere H, Okyere P, editors. Challenges faced by teachers in teaching deaf learners in selected special schools in Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Owusu-Ansah EF, Vuuro E, Edusei KA, editors. Barriers to inclusive education: the case of Wenchi Senior High School, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Baah AI, Edusei KA, Owusu-Ansah EF, editors. Support services for pupils with low vision in pilot inclusive schools at Ejisu-Juaben Municipality, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Mariama M, Mprah WK, Acheampong E, Edusei KA, Dogbe JA, editors. Inclusion of disability studies as a course in the senior high school curricular: perspectives of students at an Islamic and a secular school in the Kumasi Metropolis, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Wundow M, Mprah KW, Acheampong E, Owusu I, Edusei KA, Dogbe JA, editors. Perception of teachers on the inclusion of disabled children in inclusive classrooms: a case of some selected public basic schools at Sakogu in the Northern Region of Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Nseibo JK, editor. Transition to a senior high school, experiences of the physically impaired students of Krachi-Nchumbru District of Volta Region of Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Nseibo JK, editor. Exploring the experiences of people with mobility impairments in four educational settings in Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Mbibeh L, Awa JC, Lynn C, Fobuzie B, Tangem J, editors. Using assistive technology to enhance inclusive education in the North West Region of Cameroon, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Chataika T, Mutekwa T, editors. Computer skills for every blind child campaign: unlocking educational potential through assistive technology in Zimbabwe, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Appiah DG, Okyere P, Owusu I, Enuameh Y, editors. Challenges associated with the use of public library services by visually impaired persons in the Kumasi Metropolis, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Maria VZ, editor. Integration of rehabilitation and disability concepts/principles into the MBChB undergraduate clinical training, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Mosha M, Moshana S, editors. Opportunities and barriers of Moodle, the University of Namibia disability community, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Yekple EY, Majisi J, editors. Access to assistive technology for students with visual impairments: the case of University of Education, Winneba in the Central Region of Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Oteng M, Anomah JE, Dogbe J, Mensah I, editors. Employment of disabled persons in the informal sector: perspectives of physically disabled persons and employers in the Kumasi Metropolis of Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Mile A, Chirac JA, Ngong JK, editors. Wheelchairs and disability inclusion: the underexploited assistive technology in the North-West of Cameroon, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Kangkoyiri F, Owusu I, Dogbe J, Edusei KA, editors. The experiences of disabled persons in the Kumasi Metropolis in participating in national elections, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Owusu G, Mprah KW, Acheampong EE, Kwaku A, Dogbe JA, editors. Increasing access to the criminal justice system for disabled persons in Ghana. The role of assistive technology, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Acquah-Gyan E, Appiah-Brempong E, Dogbe JA, Owusu I, editors. Challenges of persons with disabilities within the Kumasi Metropolis in accessing information on their human rights, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Peprah V, Enuameh Y, Dogbe J, Owusu I, editors. Challenges of persons with physical disabilities in accessing judicial services in the Kumasi Metropolis, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Ohajunwa C, Visagie S, Scheffler E, Mji G, editors. An Africa centered perspective on assistive technology: informing sustainable outcomes, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Devlieger P, editor. Urine incontinence, the catheter, and the challenges of African advocacy, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Nartey KA, Osei AF, Owusu KA, Sarpong OP, Ansong D, editors. Barriers to health care for people living with disability in a teaching hospital, Ghana: the case of the deaf, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Dadzie E, Owusu I, Mprah KW, Acheampong E, editors. Knowledge and usage of assistive devices among persons with disabilities in the Kumasi Metropolis, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Kaundjua MB, editor. Health information and health care services among the deaf community in Namibia, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Nadutey A, Acheampong E, Akodwaa-Boadi K, Edusei KA, Mprah KW, editors. Menstrual hygiene management: knowledge and practices among female adolescents with disability in Kumasi, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Bakari R, Acheampong E, Mprah KW, Owusu I, Edusei AK, editors. Knowledge on and barriers to family planning services by the deaf in the Kumasi Metro, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Oppong F, Ansong D, Yiadom PK, Ofori E, Adjei OF, Amuzu ER, et al., editors. Mental health registry in Kumasi: epidemiology of cases reporting to the hospital, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Strachan L, editor. 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Forkuor BJ, Ofori-Duah K, Amarteifio J, Odongo AD, Kwao MD, Antwi AB, editors. Caring for the intellectually disabled: motivations, challenges and coping strategies, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Ned L, Ndzwayiba N, editors. The complexity of disability inclusion in the workplace: a South African study, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Oderud T, editor. I hear you – a new hearing concept for low income settings, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Boot FH, Dinsmore J, Maclachlan M, editors. Improve access to assistive technology for people with intellectual disabilities globally, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Omoniyi MM, Appiah JE, Osei F, Akwa LG, editors. Exercise for individuals living with disability: the unwelcome reality, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Bukhala P, editor. Sports equipment and technology in developing nations: grassroots initiatives to enhance parasports in Kenya, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Ampratum E, Owusu I, Mprah K, Acheampong E, editors. Views of Christian religious leaders on the involvement of persons with disabilities in church activities, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Acheampong E, Nakoja C, Mprah KW, Vampere H, Edusei KA, Isaac O, editors. Maltreatment in marriage; the silent killer, experiences of disabled persons in Yendi Municipality of Ghana, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Braathen HS, Rohleder P, Swartz L, Hunt X, Carew M, Chiwaula M, editors. Disability, sexuality and gender: stories from South Africa, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Carew M, Rohleder P, Braathen SH, Swartz L, Hunt X, Mussa C, editors. Understanding negative attitudes toward the sexual rights and sexual health care access of people with physical disabilities in South Africa, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Haruna OAM, editor. Assistive technology on female gender in Nigeria: issues and challenges, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Matter R, Kayange G, Harniss M, editors. AT-INFO-MAP, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Kelley M, Harniss M, editors. Assistive technology act programs in the United States, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Lynn C, Louis M, Nganji J, Awa JC, Nonki K, Han S, editors. Using assistive technology to improve communication, knowledge, and skills in communities of practice and disability inclusive development, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Osabutey EK, Osabutey CK, editors. The dermatoglyphic patterns of students in special schools compared to those in normal public schools, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Mduzana LL, Visagie S, Gubela M, editors. Suitability of the tool; guidelines for screening of prosthetic candidates: lower limb; for use in Eastern Cape Province, 5th AfriNEAD & 7th College of Health Sciences Scientific Conference 2017. Kumasi: University of Stellenbosch/Kwame Nkrumah University of Science and Technology; 2017.

Acknowledgements

The authors wish to thank the Centre for Disability and Rehabilitation Studies, Kwame Nkrumah University of Science and Technology, AfriNEAD, and the University of Stellenbosch, South Africa.

Funding

The authors declare no funding source for the study.

Availability of data and materials

All data supporting these findings are either contained in the manuscript or available on request. There are no restrictions on access to the anonymized data sources. All data collection tools, including the data extraction form and the book of abstracts, are available on request.

Author information

Contributions

EB, PO, and DB conceptualized the study. EB conducted the data extraction, and PO and DB conducted the second review of the extracted data. EB conducted the thematic analysis and drafted the manuscript. EB, PO, DB, NG, MPO, PA-B, and AKE reviewed and made inputs into the intellectual content and agreed on its submission for publication. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Badu, E., Okyere, P., Bell, D. et al. Reporting in the abstracts presented at the 5th AfriNEAD (African Network for Evidence-to-Action in Disability) Conference in Ghana. Res Integr Peer Rev 4, 1 (2019). https://doi.org/10.1186/s41073-018-0061-3

DOI: https://doi.org/10.1186/s41073-018-0061-3