Abstract

Background

Animal-attached sensors are increasingly used to provide insights on behaviour and physiology. However, such tags usually lack information on the structure of the surrounding environment from the perspective of a study animal and thus may be unable to identify potentially important drivers of behaviour. Recent advances in robotics and computer vision have led to the availability of integrated depth-sensing and motion-tracking mobile devices. These enable the construction of detailed 3D models of an environment within which motion can be tracked without reliance on GPS. The potential of such techniques has yet to be explored in the field of animal biotelemetry. This report trials an animal-attached structured light depth-sensing and visual–inertial odometry motion-tracking device in an outdoor environment (coniferous forest) using the domestic dog (Canis familiaris) as a compliant test species.

Results

A 3D model of the forest environment surrounding the subject animal was successfully constructed using point clouds. The forest floor was labelled using a progressive morphological filter. Tree trunks were modelled as cylinders and identified by random sample consensus. The predicted and actual presence of trees matched closely, with an object-level accuracy of 93.3%. Individual points were labelled as belonging to tree trunks with a precision, recall, and F\(_{\beta }\) score of 1.00, 0.88, and 0.93, respectively. In addition, ground-truth tree trunk radius measurements were not significantly different from random sample consensus model coefficient-derived values. A first-person view of the 3D model was created, illustrating the coupling of both animal movement and environment reconstruction.

Conclusions

Using data collected from an animal-borne device, the present study demonstrates how terrain and objects (in this case, tree trunks) surrounding a subject can be identified by model segmentation. The device pose (position and orientation) also enabled recreation of the animal’s movement path within the 3D model. Although some challenges such as device form factor, validation in a wider range of environments, and direct sunlight interference remain before routine field deployment can take place, animal-borne depth sensing and visual–inertial odometry have great potential as visual biologging techniques to provide new insights on how terrestrial animals interact with their environments.

Background

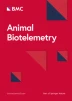

Animal-attached sensors are increasingly used to study the movement, behaviour, and physiology of both wild and domestic species. Such devices have provided valuable insights into areas such as bioenergetics, animal welfare, and conservation [1,2,3,4]. Data from animal-attached sensors are often overlaid on satellite imagery and maps to provide spatial and environmental context [5,6,7]. Current attempts to obtain more detailed visual information from a study animal’s perspective have typically involved attaching standard RGB cameras [8,9,10] (but see [11, 12]). Information is then often extracted from images or footage by manual inspection. Few attempts have been made to apply computer vision techniques to non-human biologging studies. Two notable examples include the use of object tracking on the prey of falcons and template matching to determine the head position of sea turtles, though in both cases using 2D images [13, 14].

In other fields, light-based depth sensors have been used for the reconstruction of 3D scenes, with numerous applications in areas such as robotics, mapping, navigation, and interactive media [15,16,17,18]. Active depth sensing encompasses a range of techniques based on examining the properties of projected light and enables the construction of 3D point clouds from points within a coordinate system. In a detection system known as LiDAR (light detection and ranging), this may involve measuring either the length of time or phase shifts that occur when light is projected from a source to an environment of interest and travels back to a receiver [19]. Alternatively, a defined pattern (e.g. stripes or dots) can be projected, providing information on depth and the surface of objects, in a technique known as structured light (SL) [20, 21].

Airborne LiDAR sensors have been used in a number of ecological studies, generating 3D models from point clouds that are typically used to investigate relationships between animal diversity and quantifiable attributes of vegetation and topography [22]. Although not obtained directly from animal-attached sensors, previous studies have integrated airborne LiDAR data with information from GPS-equipped collars to examine factors underlying animal movement patterns including habitat structure, social interactions, and thermoregulation [23,24,25]. In addition, aerial-derived point cloud models have been used to quantify the visible area or ‘viewshed’ of lions at kill sites, leading to insights on predator–prey relationships [11, 12]. Various other biological applications of active depth sensing include terrestrial and aerial vegetation surveys in forestry research and the automated identification of plant species [26,27,28,29]. Structured light sensors have also successfully been used to scan animals (e.g. cattle) from a fixed position and in milking robots [30, 31]. Perhaps similar to how demand in consumer electronics helped drive the availability of low-cost portable sensors such as accelerometers [32], active depth sensors are now beginning to appear in relatively small mobile devices which may further aid their adoption in research.

A separate and potentially complementary technique known as visual odometry (VO) can be used to estimate motion by tracking visual features over time [33]. The fusion of inertial data from accelerometers and gyroscopes (visual–inertial odometry, VIO) to further improve estimates of position and orientation (pose) has gained popularity in the field of robotics as a method to perform localisation in areas where GPS is intermittent or not available [34,35,36]. In addition to indoor environments, VIO could be of use in areas with dense vegetation or challenging terrain [37, 38].

This report trials the application of an animal-attached active depth-sensing (SL-based) and motion-tracking (VIO) device to record fine-scale movement within a reconstructed 3D model of the surrounding environment, without reliance on GPS. A segmentation pipeline is also demonstrated for the identification of neighbouring objects, testing the feasibility of such technology to investigate factors that influence animal behaviour and movement in an outdoor environment.

Methods

A one-year-old female Labrador–golden retriever (Canis familiaris, body mass 32 kg) was used as the subject during the trial. The study took place in Northern Ireland during late August, under the canopy in a section of mature coniferous forest (primarily Sitka spruce, Picea sitchensis). Trees had few remaining lower branches, and the forest floor was relatively flat with a blanket of dry pine needle litter. This environment was selected as the segmentation and identification of trees from point clouds had previously proven successful in forestry research. In addition, potential interference from direct sunlight could be avoided. The initial trial area measured 30 m in length and approximately 4.5 m in width, the outer edges of which were marked by placing large (\(0.76\times 1.02\,\hbox {m}\)) sheets of cardboard. To ensure clearance of root flare, the circumference (girth) of each tree was measured with a fibreglass tape measure at a consistent height of 1.0 m (or at the narrowest point below a split trunk).

The recording device was a Project Tango Development Kit tablet (‘Yellowstone’, NX-74751; Google Inc., CA, USA) running Android version 4.4.2 and Project Tango Core version 1.34 [39]. The Tango device projects SL onto surrounding objects and surfaces using an infrared (IR) laser (see Fig. 1a). This light is then detected using an RGB-IR camera (Fig. 1b) to measure depth which can be represented in point cloud form (Fig. 1c). Visual information is obtained from a fisheye camera (Fig. 1d) in which image features are tracked between frames during motion (Fig. 1e). This is fused with data from inertial sensors (tri-axial accelerometer and gyroscope, Fig. 1f) to track pose with reference to an initial starting point or origin by VIO (e.g. [34, 36]; Fig. 1g). Combining depth sensing with motion tracking allows point clouds to be accumulated over time and enables the reconstruction of an environment in three dimensions. Data were recorded on the device using ParaView Tango Recorder [40] (with a minor modification to record every frame of the point cloud data, rather than every third) in ‘Auto Mode’, which records both point cloud and pose data to internal storage. Depth was recorded at a frame rate of approximately 5 Hz (mostly between 0.5 and 4.0 m), and pose estimates were returned at 100 Hz.
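The combination of pose and depth described above can be illustrated with a minimal sketch. It is not the Tango or ParaView implementation: it assumes each depth frame has already been matched to one pose, that the pose is given as a translation plus a unit quaternion in (x, y, z, w) order, and it ignores any fixed offset between the depth camera and the device frame. The function names `rotate`, `apply_pose`, and `accumulate` are hypothetical.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (x, y, z, w); assumed unit length


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q: v' = v + w*t + qv x t, with t = 2*(qv x v)."""
    qv, w = (q[0], q[1], q[2]), q[3]
    c = cross(qv, v)
    t = (2.0 * c[0], 2.0 * c[1], 2.0 * c[2])
    u = cross(qv, t)
    return (v[0] + w * t[0] + u[0],
            v[1] + w * t[1] + u[1],
            v[2] + w * t[2] + u[2])


def apply_pose(translation: Vec3, q: Quat, points: Sequence[Vec3]) -> List[Vec3]:
    """Move one depth frame from the device frame into the frame of the origin."""
    out = []
    for p in points:
        r = rotate(q, p)
        out.append((r[0] + translation[0],
                    r[1] + translation[1],
                    r[2] + translation[2]))
    return out


def accumulate(frames: Iterable[Tuple[Vec3, Quat, Sequence[Vec3]]]) -> List[Vec3]:
    """Merge posed frames, given as (translation, quaternion, points), into one cloud."""
    cloud: List[Vec3] = []
    for translation, q, points in frames:
        cloud.extend(apply_pose(translation, q, points))
    return cloud


# A 90 degree turn about the z axis followed by a shift of (1, 2, 3).
quarter_turn = (0.0, 0.0, math.sin(math.pi / 4), math.cos(math.pi / 4))
world = apply_pose((1.0, 2.0, 3.0), quarter_turn, [(1.0, 0.0, 0.0)])
# world[0] is approximately (1.0, 3.0, 3.0)
```

Because every frame is expressed relative to the same origin, drift in the pose estimate translates directly into misaligned frames in the accumulated cloud.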

The device was mounted on to the dorsal area of the dog using a harness (Readyaction™ Dog Harness plus a ‘Sport 2’ attachment) with the depth-sensing and motion-tracking cameras facing forward. The combined weight of the recording device and harness was 652 g, corresponding to 2.0% of body mass. The animal was first held motionless for several seconds while the device initialised. The dog was then guided in a straight line across the initial study area at a steady walking pace on a lead (Fig. 2). Following this, a second stage of the trial was performed by guiding the animal through the forested area in a non-predetermined path over more challenging terrain for an extended period of time. Both stages of the trial were carried out between late afternoon and early evening.

The compressed files containing the recorded data were then downloaded from the device. Data were visualised and analysed in ParaView/PvBatch version 4.1.0 [41], using point cloud library (PCL) plugin version 1.1 [42] (with modifications, including additional orientation constraints on model fitting and options for the segmentation of ground points) built with PCL version 1.7.2 [43]. Filters distributed with ParaView Tango Recorder were used to prepare the imported data as follows: 1) depth points were transformed to align with the pose data (‘Apply Pose To PointCloud’ filter); 2) point clouds were accumulated over time to produce an overall model of the study site (‘Accumulate Point Clouds over time’ filter, see Fig. 1h); 3) orientation of the device was obtained by applying the ‘Convert Quaternion to Orientation Frame’ filter to the pose data. The bounds of the study area were manually identified by visual inspection for the cardboard markers in the 3D model, and points that fell outside were removed using ParaView Clip filters. The PCL Radius Outlier Removal filter was then used to label points with less than 10 neighbours within a search radius of 0.3 m (see Fig. 1i). Outliers were subsequently removed using the Threshold Points passthrough filter. In order to reduce processing time and obtain a more homogeneous point density, the point cloud was then downsampled using the PCL Voxel Grid filter with a leaf size of 0.02 m (Fig. 1j). To aid in viewing the structure of the point cloud, the Elevation filter was applied to colour points by height. To provide a ground truth, each point was assigned an identification number and those corresponding to tree trunks were interactively isolated and annotated by frustum selection.
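The outlier labelling and downsampling steps above can be sketched in plain Python. This is an illustration of the two ideas, not the PCL code used in the study: the function names are hypothetical, the query point is assumed not to count as its own neighbour, and each occupied voxel is represented by the centroid of its points. The default parameter values mirror those reported in the text.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Cell = Tuple[int, int, int]


def _cell(p: Point, size: float) -> Cell:
    """Index of the cubic grid cell (edge length `size`) containing p."""
    return (math.floor(p[0] / size), math.floor(p[1] / size), math.floor(p[2] / size))


def radius_outlier_mask(points: Sequence[Point], radius: float = 0.3,
                        min_neighbours: int = 10) -> List[bool]:
    """True for points with at least `min_neighbours` other points within `radius`."""
    grid: Dict[Cell, List[int]] = defaultdict(list)
    for i, p in enumerate(points):
        grid[_cell(p, radius)].append(i)
    r2 = radius * radius
    keep = []
    for i, p in enumerate(points):
        cx, cy, cz = _cell(p, radius)
        count = 0
        # Any neighbour within `radius` lies in one of the 27 surrounding cells.
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for dz in (-1, 0, 1):
                    for j in grid.get((cx + dx, cy + dy, cz + dz), ()):
                        q = points[j]
                        d2 = (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 + (p[2] - q[2]) ** 2
                        if j != i and d2 <= r2:
                            count += 1
        keep.append(count >= min_neighbours)
    return keep


def voxel_downsample(points: Sequence[Point], leaf: float = 0.02) -> List[Point]:
    """Replace all points falling in the same voxel by their centroid."""
    sums: Dict[Cell, List[float]] = defaultdict(lambda: [0.0, 0.0, 0.0, 0.0])
    for p in points:
        s = sums[_cell(p, leaf)]
        s[0] += p[0]
        s[1] += p[1]
        s[2] += p[2]
        s[3] += 1.0
    return [(s[0] / s[3], s[1] / s[3], s[2] / s[3]) for s in sums.values()]
```

A typical use would be `kept = [p for p, ok in zip(cloud, radius_outlier_mask(cloud)) if ok]` followed by `voxel_downsample(kept)`, where `cloud` is a hypothetical list of (x, y, z) tuples in metres.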

Following this, a progressive morphological filter [44] was applied using PCL for the identification and segmentation of ground points (cell size 0.2 m, maximum window size 20, slope 1.0, initial distance 0.25 m, maximum distance 3.0 m; Fig. 1k). The points labelled as ground were removed using a Threshold Points filter. The PCL Euclidean Cluster filter was then applied to extract clusters of points representing potential objects (cluster tolerance 0.1 m, minimum cluster size 300, maximum cluster size 50,000; Fig. 1l).
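The clustering step can be illustrated with a short region-growing sketch: a point joins a cluster if it lies within the cluster tolerance of any point already in it, and clusters outside the size limits are discarded. This is a simplified stand-in for the PCL filter, with a hypothetical function name and a hash grid in place of a k-d tree. The progressive morphological ground filter is not reproduced here.

```python
import math
from collections import defaultdict, deque
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Cell = Tuple[int, int, int]


def euclidean_clusters(points: Sequence[Point], tolerance: float = 0.1,
                       min_size: int = 300, max_size: int = 50000) -> List[List[int]]:
    """Return clusters as lists of point indices, grown by nearest-neighbour linkage."""
    def cell_of(p: Point) -> Cell:
        return (math.floor(p[0] / tolerance),
                math.floor(p[1] / tolerance),
                math.floor(p[2] / tolerance))

    grid: Dict[Cell, List[int]] = defaultdict(list)
    for i, p in enumerate(points):
        grid[cell_of(p)].append(i)

    tol2 = tolerance * tolerance
    visited = [False] * len(points)
    clusters: List[List[int]] = []
    for seed in range(len(points)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue = deque([seed])
        members: List[int] = []
        while queue:
            i = queue.popleft()
            members.append(i)
            p = points[i]
            cx, cy, cz = cell_of(p)
            # Candidates within `tolerance` lie in the 27 cells around the point.
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        for j in grid.get((cx + dx, cy + dy, cz + dz), ()):
                            if visited[j]:
                                continue
                            q = points[j]
                            d2 = (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 + (p[2] - q[2]) ** 2
                            if d2 <= tol2:
                                visited[j] = True
                                queue.append(j)
        # Keep only clusters within the size limits.
        if min_size <= len(members) <= max_size:
            clusters.append(members)
    return clusters
```

With the ground points removed beforehand, well-separated trunks fall into separate clusters, which is why the ground filter precedes this step.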

Random sample consensus (RANSAC) is an iterative method used to estimate parameters of a model from data in the presence of outliers [45]. In the case of point clouds, this allows for the fitting of primitive shapes and derivation of their dimensions. For each Euclidean cluster, an attempt was made to fit a cylindrical model (corresponding to a tree trunk) using the PCL SAC Segmentation Cylinder filter (normal estimation search radius 0.1 m, normal distance weight 0.1, radius limit 0.3 m, distance threshold 0.25 m, maximum iterations 200). This filter was modified to search for cylinders only in the vertical axis, allowing for slight deviations (angle epsilon threshold 15.0\(^\circ\); Fig. 1m). The precision [true positives / (true positives + false positives)], recall [true positives / (true positives + false negatives)], and F\(_{\beta }\) score of point-level tree trunk segmentation were calculated using scikit-learn version 0.17.1 [46] in Python. Differences between ground-truth measurements of tree trunk girth and the RANSAC model-derived values were analysed using a Wilcoxon signed-rank test in R version 3.3.1 [47]. The device position obtained from the pose data was used to plot the trajectory of a first-person view of the study animal moving through the accumulated point cloud. In this animation, the camera focal point was fixed on the final pose measurement and the up direction set to the vertical axis (Additional file 1).
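The RANSAC idea can be illustrated with a deliberately simplified sketch. It assumes a perfectly vertical cylinder, so that fitting reduces to finding a circle in the horizontal (x, y) plane from random three-point samples. The PCL filter used in the study also uses surface normals and tolerates a small tilt, neither of which is modelled here. The function names are hypothetical, and `distance_threshold` has no default because the value reported above applies to the PCL model rather than to this one.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
XY = Tuple[float, float]


def circle_from_three(p1: XY, p2: XY, p3: XY) -> Optional[Tuple[float, float, float]]:
    """Circumcircle (cx, cy, r) of three 2D points, or None if they are collinear."""
    ax, ay = p1
    bx, by = p2
    cx, cy = p3
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return ux, uy, math.hypot(ax - ux, ay - uy)


def ransac_vertical_cylinder(points: Sequence[Point], distance_threshold: float,
                             radius_limit: float = 0.3, max_iterations: int = 200,
                             seed: int = 0
                             ) -> Optional[Tuple[float, float, float, List[int]]]:
    """Return (centre_x, centre_y, radius, inlier_indices) of the best model, or None."""
    xy = [(p[0], p[1]) for p in points]  # drop height: the axis is assumed vertical
    if len(xy) < 3:
        return None
    rng = random.Random(seed)
    best = None
    best_inliers: List[int] = []
    for _ in range(max_iterations):
        model = circle_from_three(*rng.sample(xy, 3))
        if model is None or model[2] > radius_limit:
            continue  # degenerate sample, or wider than any plausible trunk
        cx, cy, r = model
        inliers = [i for i, (x, y) in enumerate(xy)
                   if abs(math.hypot(x - cx, y - cy) - r) <= distance_threshold]
        if len(inliers) > len(best_inliers):
            best, best_inliers = model, inliers
    if best is None:
        return None
    return best[0], best[1], best[2], best_inliers
```

The fitted radius plays the role of the coefficient-derived value that is compared with tape-measured girth, and a cluster for which no model is found would simply not be classed as a trunk.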

Results

During the first stage of the trial, a total of 1.1 million depth points and 5264 pose estimates were recorded over a period of 53 s with a file size of 14.8 MB. After applying the Clip filter to the bounds of the study area, the number of points was reduced to 869,353. The radius outlier filter removed a total of 559 points (0.1% of the clipped point cloud). After application of the voxel grid filter, the point cloud was further reduced to a total of 324,074 points. The progressive morphological filter labelled 158,574 points (48.9%) as belonging to the forest floor. A total of 30 Euclidean clusters were identified with a median of 4931 (interquartile range 3153–6880) points (1342 points were unassigned to a cluster). Overall, 28 of these clusters had a RANSAC vertical cylinder model fit and were therefore, at an object level, classed as tree trunks. On visual inspection, there were no occurrences of object-level false positives. With 30 trees present in the study area, this corresponded to an accuracy of 93.3%. Individual points were labelled with a precision, recall, and F\(_{\beta }\) score of 1.00, 0.88, and 0.93, respectively (see Fig. 3). The Wilcoxon signed-rank test revealed that there was no significant difference (\(V = 225\), p = 0.63; median difference 0.01 m, interquartile range −0.02 to 0.02 m) between the actual tree trunk radii (assuming circularity, mean 0.11 ± 0.04 m) and the RANSAC coefficient-derived estimates (median 0.11 m, interquartile range 0.10–0.13 m). Segmented clusters representing tree trunks were found to have a mean height (minimum to maximum vertical distance between inlier points) of 1.95 ± 0.52 m.
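The arithmetic behind these summary figures can be sketched as follows. The object-level accuracy uses the counts reported above (28 of 30 trees). The point-level counts passed to `precision_recall_fbeta` are purely illustrative and are not the study's data. The value of \(\beta\) is not stated in the text, so \(\beta = 1\) is an assumption, and the function names are hypothetical.

```python
import math
from typing import Tuple


def precision_recall_fbeta(tp: int, fp: int, fn: int,
                           beta: float = 1.0) -> Tuple[float, float, float]:
    """Precision, recall and F-beta from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision == 0.0 and recall == 0.0:
        return precision, recall, 0.0
    b2 = beta * beta
    f_beta = (1.0 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f_beta


def radius_from_girth(girth_m: float) -> float:
    """Radius of a trunk from its tape-measured circumference, assuming a circular section."""
    return girth_m / (2.0 * math.pi)


# Object-level accuracy from the reported counts: 28 of 30 trees detected.
object_accuracy = 28 / 30  # about 0.933

# Illustrative point-level counts only (assumed, not taken from the study).
precision, recall, f1 = precision_recall_fbeta(tp=7, fp=0, fn=1)
# precision == 1.0, recall == 0.875, f1 is about 0.933
```

With no false positives, precision is exactly 1 and the F score is determined by recall alone, which is why the reported F score sits between the precision and recall values.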

During the extended stage of the trial, a total of 4.17 million depth points and 12,761 pose estimates were recorded over a 2-min period (file size 55.3 MB). After accumulation into a single point cloud, removal of outliers, and downsampling, a total of 83 tree trunks were identified by RANSAC with a median coefficient-derived radius of 0.11 m (interquartile range 0.09–0.13 m). The device pose indicated a travel distance of 81.92 m, with a Euclidean distance of 62.48 m, and a vertical descent of 8.70 m. See Additional files 2 and 3 for interactive views of the segmented point clouds from both stages of the trial.
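The three path measures reported above can be derived from a sequence of device positions as in the sketch below. It assumes positions are (x, y, z) tuples in metres with z as the vertical axis. It also takes vertical change as the difference between the last and first positions, which may differ from how the reported descent was computed. The function name is hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple

Position = Tuple[float, float, float]


def path_summary(positions: Sequence[Position]) -> Dict[str, float]:
    """Travel distance, straight-line distance and net vertical change of a track."""
    if len(positions) < 2:
        raise ValueError("at least two positions are required")
    # Travel distance: sum of the lengths of consecutive segments.
    travelled = sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))
    # Euclidean distance: straight line from the first to the last position.
    straight = math.dist(positions[0], positions[-1])
    # Net vertical change: negative values indicate a descent.
    vertical = positions[-1][2] - positions[0][2]
    return {"travelled_m": travelled, "euclidean_m": straight, "vertical_m": vertical}


track = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, -2.0)]
summary = path_summary(track)
# summary["travelled_m"] == 7.0 and summary["vertical_m"] == -2.0
```

At a pose rate of 100 Hz, small jitter in each estimate accumulates in the summed segment lengths, so smoothing or subsampling the track before summing may be worth considering.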

Discussion

Movement is of fundamental importance to life, impacting key ecological and evolutionary processes [48]. From an energetics perspective, the concept of an ‘energy landscape’ describes the variation that an animal experiences in energy requirements while moving through an environment [49, 50]. For terrestrial species, heterogeneity in the energy landscape depends on the properties of terrain, with animals predicted to select movement paths that allow them to minimise costs and maximise energy gain. At an individual level, an animal may also show deviations from landscape model predictions as it undergoes fitness-related trade-offs seeking short-term optimality (e.g. for predator avoidance) [51]. In a previous accelerometer and GPS biologging study on the energy landscapes of a small forest-dwelling mammal (Pekania pennanti) [52], energy expenditure was found to be related to habitat suitability. However, it was not possible to identify the environmental characteristics that influenced individual energy expenditure, thus highlighting a need for methods that can record environmental information from the perspective of a study animal at both higher temporal and spatial resolutions.

In the present study, an animal-attached depth-sensing and motion-tracking device was used to construct 3D models, segment, and identify specific objects within an animal’s surroundings. Animal-scale 3D environmental models collected from free-ranging individuals have great potential to be used in the measurement of ground inclination, obstacle detection, and derivation of various surface roughness or traversability indexes (e.g. [53,54,55]). Such variables could then be examined in relation to accelerometer-derived proxies of energy expenditure to further our understanding of the ‘energetic envelope’ [51] within which an animal may optimise its behavioural patterns. Furthermore, VIO-based motion-tracking could be used to test widely debated random walk models of animal foraging and search processes [56,57,58,59].

The segmentation of point clouds and fitting of cylindrical models by RANSAC enabled the labelling and characterisation of tree trunks surrounding the study animal. While such an approach proved suitable for the environment in which the current study took place, more varied scenes would require the testing of alternative features and classification algorithms in order to distinguish between a wider range of objects (e.g. [60,61,62,63,64]). The ability to accurately model and identify specific objects from the perspective of an animal while simultaneously tracking motion could have wide-ranging biotelemetry applications, such as studying the movement ecology of elusive or endangered species and investigating potential routes of disease transmission.

Susceptibility to interference from direct sunlight presents a significant challenge to the SL depth-sensing method used in the current study. While this did not greatly influence the results of the present trial, as it was conducted under the canopy in a coniferous forest, future outdoor applications of animal-attached depth sensing may need to explore the use of passive solutions. For example, previous work has produced promising results on outdoor model reconstruction using mobile devices to perform motion stereo, which is insensitive to sunlight and also notably improved the range of depth perception [65, 66]. A hybrid approach, using both SL and stereo reconstruction, may provide advantages, particularly when measuring inclined surfaces [67]. In addition, the motion-tracking camera of the device used in the current study requires sufficient levels of visible light for pose estimation. This could impede deployments of a similar device on nocturnal species or those that inhabit areas with poor lighting conditions. One solution may be to utilise IR (or multi-spectral) imaging, which has previously been demonstrated for both VO [68,69,70] and stereo reconstruction [71].

Over time, pose estimates obtained by VIO alone can be prone to drift, potentially leading to misalignment of point clouds. Therefore, future work may also attempt to use visual feature tracking algorithms in order to recognise areas that have been revisited (i.e. within an animal’s home range) to perform drift correction or loop closure [72,73,74]. Such a feature, known as ‘Area Learning’ on the Tango platform, could allow researchers to visit and ‘learn’ an area in advance to produce area description files that correct errors in trajectory data. The application of such techniques in outdoor environments, across seasons, under a range of weather conditions, and at different times of day is challenging and remains the subject of active research [75, 76]. For other forest-based evaluations of the platform’s accuracy, see [77, 78]. Physical factors that could impact the performance of motion tracking or SL depth sensing include a lack of visual features and the reflective properties of surfaces [79,80,81]. The raw point clouds used in the present study disregard non-surface information and can be susceptible to sensor noise. Future work may therefore seek to improve the quality of 3D reconstruction by experimenting with alternative techniques such as optimised variants of occupancy grid mapping and truncated signed distance fields (TSDF) which use the passthrough data of emanating rays to provide more detailed volumetric information [82, 83]. Ideally, the performance of motion tracking and depth sensing would also be tested with a wider range of environments, vegetation types, and movement speeds to more closely emulate conditions found in more challenging field deployments. It may be possible to reconstruct occluded and unobserved regions of models using hole-filling techniques (e.g. [84]). In relatively dynamic scenes, depth sensors have been used to track the trajectory of objects in motion (e.g. humans [85, 86]). The removal of dynamic objects from 3D models generated by mobile devices has also been demonstrated [87].

The development kit used in the current study was primarily intended for indoor use with a touch screen to allow human interaction. Therefore, careful consideration would be needed before routine deployments of a device with similar capabilities on other terrestrial animals. When preparing such a device, particular attention should be focused on the mass, form factor, and attachment method in order to reduce potential impact on the welfare and behaviour of a wild study animal [88, 89]. Whenever possible, applications should attempt to gracefully handle adverse situations such as temporary loss of motion tracking due to objects obstructing cameras at close range, or sudden movements overloading the sensors. Additionally, onboard downsampling of point cloud data could reduce storage requirements over longer deployments. Official support for the device used in the present study has now ended (partially succeeded by ARCore [90]); however, the general concepts of combined depth sensing and VIO motion tracking are not vendor-specific. Active depth-sensing capabilities can be added to standard mobile devices using products such as the Occipital Structure Sensor [91]. Various open-source implementations of VO motion-tracking algorithms may also be suitable for deployment with further development (e.g. [92]). Future work may seek to compare the performance of (or augment) animal-attached VIO motion tracking with previously described magnetometer, accelerometer, and GPS-based dead-reckoning methods (e.g. [93]) in a range of environments. For example, under dense vegetation where the performance of GPS can deteriorate, VIO could offer significant advantages [38, 94]. The simultaneous collection of tri-axial accelerometer data would also allow the classification of animal behaviour along the pose trajectory within reconstructed models. This could enable further research into links between specific behaviours and various structural environmental attributes. In comparison with point clouds obtained from airborne laser scanning, and similar to terrestrial laser scanning, animal-attached depth sensing could enable a higher point density at viewing angles more appropriate for the resolution of small or vertical objects [95].

Conclusions

The application of an animal-attached active depth-sensing and motion-tracking device enabled environmental reconstruction with 3D point clouds. Model segmentation allowed the semantic labelling of objects (tree trunks) surrounding the subject animal in a forested environment with high precision and recall, resulting in reliable estimates of their physical properties (i.e. radius/circumference). The simultaneous collection of depth information and device pose allowed for reconstruction of the animal’s movement path within the study site. Whilst this case report discussed the technical challenges that remain prior to routine field deployment of such a device in biotelemetry studies, it demonstrates that animal-attached depth sensing and VIO motion tracking have great potential as visual biologging techniques to provide detailed environmental context to animal behaviour.

Accumulated point cloud and pose from an animal-attached device in a forest environment. Trimmed point cloud from the first stage of the trial. Ground points were labelled using a progressive morphological filter and coloured by height using an elevation filter. Tree trunks labelled by RANSAC are highlighted in green (28 of 30). The position of the device over time, and orientation are represented by the white line and superimposed arrows, respectively. The corner axes represent the orientation of the point cloud. Panels (b) and (c) represent side and top-down views

Abbreviations

- IR: infrared
- LiDAR: light detection and ranging
- PCL: point cloud library
- RANSAC: random sample consensus
- SL: structured light
- TSDF: truncated signed distance field
- VO: visual odometry
- VIO: visual–inertial odometry

References

Halsey LG, Shepard ELC, Quintana F, Gomez Laich A, Green JA, Wilson RP. The relationship between oxygen consumption and body acceleration in a range of species. Comp Biochem Physiol Part A Mol Integr Physiol. 2009;152(2):197–202. https://doi.org/10.1016/j.cbpa.2008.09.021.

Jones S, Dowling-Guyer S, Patronek GJ, Marder AR, Segurson D’Arpino S, McCobb E. Use of accelerometers to measure stress levels in shelter dogs. J Appl Anim Welf Sci. 2014;17(1):18–28. https://doi.org/10.1080/10888705.2014.856241.

Kays R, Crofoot MC, Jetz W, Wikelski M. Terrestrial animal tracking as an eye on life and planet. Science. 2015;348(6240):2478. https://doi.org/10.1126/science.aaa2478.

Wilson ADM, Wikelski M, Wilson RP, Cooke SJ. Utility of biological sensor tags in animal conservation. Conserv Biol. 2015;29(4):1065–75. https://doi.org/10.1111/cobi.12486.

Shamoun-Baranes J, Bom R, van Loon EE, Ens BJ, Oosterbeek K, Bouten W. From sensor data to animal behaviour: an oystercatcher example. PLoS ONE. 2012;7(5):37997. https://doi.org/10.1371/journal.pone.0037997.

Wang Y, Nickel B, Rutishauser M, Bryce CM, Williams TM, Elkaim G, Wilmers CC. Movement, resting, and attack behaviors of wild pumas are revealed by tri-axial accelerometer measurements. Mov Ecol. 2015;3:2. https://doi.org/10.1186/s40462-015-0030-0.

McClune DW, Marks NJ, Delahay RJ, Montgomery WI, Scantlebury DM. Behaviour-time budget and functional habitat use of a free-ranging European badger (Meles meles). Anim Biotelem. 2015;3:7. https://doi.org/10.1186/s40317-015-0025-z.

Marshall GJ. CRITTERCAM: an animal-borne imaging and data logging system. Mar Technol Soc J. 1998;32(1):11–7.

Hooker SK, Barychka T, Jessopp MJ, Staniland IJ. Images as proximity sensors: the incidence of conspecific foraging in Antarctic fur seals. Anim Biotelem. 2015;3:37. https://doi.org/10.1186/s40317-015-0083-2.

Gómez-Laich A, Yoda K, Zavalaga C, Quintana F. Selfies of imperial cormorants (Phalacrocorax atriceps): what is happening underwater? PLoS ONE. 2015;10(9):0136980. https://doi.org/10.1371/journal.pone.0136980.

Loarie SR, Tambling CJ, Asner GP. Lion hunting behaviour and vegetation structure in an African savanna. Anim Behav. 2013;85(5):899–906. https://doi.org/10.1016/j.anbehav.2013.01.018.

Davies AB, Tambling CJ, Kerley GIH, Asner GP. Effects of vegetation structure on the location of lion kill sites in African thicket. PLoS ONE. 2016;11(2):1–20. https://doi.org/10.1371/journal.pone.0149098.

Kane SA, Zamani M. Falcons pursue prey using visual motion cues: new perspectives from animal-borne cameras. J Exp Biol. 2014;217(2):225–34. https://doi.org/10.1242/jeb.092403.

Okuyama J, Nakajima K, Matsui K, Nakamura Y, Kondo K, Koizumi T, Arai N. Application of a computer vision technique to animal-borne video data: extraction of head movement to understand sea turtles’ visual assessment of surroundings. Anim Biotelem. 2015;3:35. https://doi.org/10.1186/s40317-015-0079-y.

Krabill WB, Wright CW, Swift RN, Frederick EB, Manizade SS, Yungel JK, Martin CF, Sonntag JG, Duffy M, Hulslander W, Brock JC. Airborne laser mapping of Assateague National Seashore beach. Photogramm Eng Remote Sens. 2000;66(1):65–71.

Maurelli F, Droeschel D, Wisspeintner T, May S, Surmann H. A 3D laser scanner system for autonomous vehicle navigation. In: International conference on advanced robotics; 2009. pp. 1–6.

Weiss U, Biber P. Plant detection and mapping for agricultural robots using a 3D LIDAR sensor. Robot Auton Syst. 2011;59(5):265–73. https://doi.org/10.1016/j.robot.2011.02.011.

Han J, Shao L, Xu D, Shotton J. Enhanced computer vision with Microsoft Kinect sensor: a review. IEEE Trans Cybern. 2013;43(5):1318–34. https://doi.org/10.1109/TCYB.2013.2265378.

Carter J, Schmid K, Waters K, Betzhold L, Hadley B, Mataosky R, Halleran J. Lidar 101: an introduction to lidar technology, data, and applications. National Oceanic and Atmospheric Administration (NOAA) Coastal Services Center: Charleston; 2012.

Blais F. Review of 20 years of range sensor development. J Electron Imaging. 2004;13(1):231–40. https://doi.org/10.1117/1.1631921.

Geng J. Structured-light 3D surface imaging: a tutorial. Adv Opt Photonics. 2011;3(2):128–60. https://doi.org/10.1364/AOP.3.000128.

Davies AB, Asner GP. Advances in animal ecology from 3D-LiDAR ecosystem mapping. Trends Ecol Evol. 2014;29(12):681–91. https://doi.org/10.1016/j.tree.2014.10.005.

Melin M, Matala J, Mehtätalo L, Tiilikainen R, Tikkanen OP, Maltamo M, Pusenius J, Packalen P. Moose (Alces alces) reacts to high summer temperatures by utilizing thermal shelters in boreal forests—an analysis based on airborne laser scanning of the canopy structure at moose locations. Glob Change Biol. 2014;20(4):1115–25.

McLean KA, Trainor AM, Asner GP, Crofoot MC, Hopkins ME, Campbell CJ, Martin RE, Knapp DE, Jansen PA. Movement patterns of three arboreal primates in a Neotropical moist forest explained by LiDAR-estimated canopy structure. Landsc Ecol. 2016;31(8):1849–62. https://doi.org/10.1007/s10980-016-0367-9.

Strandburg-Peshkin A, Farine DR, Crofoot MC, Couzin ID. Habitat structure shapes individual decisions and emergent group structure in collectively moving wild baboons. eLife. 2017;6:e19505. https://doi.org/10.7554/eLife.19505.

Koch B, Heyder U, Weinacker H. Detection of individual tree crowns in airborne lidar data. Photogramm Eng Remote Sens. 2006;72(4):357–63. https://doi.org/10.1007/s10584-004-3566-3.

Brandtberg T. Classifying individual tree species under leaf-off and leaf-on conditions using airborne lidar. ISPRS J Photogramm Remote Sens. 2007;61(5):325–40. https://doi.org/10.1016/j.isprsjprs.2006.10.006.

Höfle B. Radiometric correction of terrestrial LiDAR point cloud data for individual maize plant detection. IEEE Geosci Remote Sens Lett. 2014;11(1):94–8. https://doi.org/10.1109/LGRS.2013.2247022.

Olofsson K, Holmgren J, Olsson H. Tree stem and height measurements using terrestrial laser scanning and the RANSAC algorithm. Remote Sens. 2014;6(5):4323–44. https://doi.org/10.3390/rs6054323.

Kawasue K, Ikeda T, Tokunaga T, Harada H. Three-dimensional shape measurement system for black cattle using KINECT sensor. Int J Circuits Syst Signal Process. 2013;7(4):222–30.

Akhloufi MA. 3D vision system for intelligent milking robot automation. In: SPIE intelligent robots and computer vision XXXI: algorithms and techniques, vol. 9025; 2014. pp. 90250–19025010. https://doi.org/10.1117/12.2046072.

Vigna B. MEMS epiphany. In: IEEE 22nd international conference on micro electro mechanical systems; 2009. pp. 1–6. https://doi.org/10.1109/MEMSYS.2009.4805304.

Nistér D, Naroditsky O, Bergen J. Visual odometry. In: Proceedings of the 2004 IEEE computer society conference on computer vision and pattern recognition, vol 1; 2004. pp. 652–659. https://doi.org/10.1109/CVPR.2004.1315094.

Mourikis AI, Roumeliotis SI. A multi-state constraint Kalman filter for vision-aided inertial navigation. In: IEEE international conference on robotics and automation; 2007. pp. 3565–3572. https://doi.org/10.1109/ROBOT.2007.364024.

Konolige K, Agrawal M, Solà J. Large-scale visual odometry for rough terrain. In: Kaneko M, Nakamura Y, editors. Robotics research: the 13th international symposium ISRR. Berlin: Springer; 2011. pp. 201–12.

Hesch JA, Kottas DG, Bowman SL, Roumeliotis SI. Camera-IMU-based localization: observability analysis and consistency improvement. Int J Robot Res. 2014;33(1):182–201. https://doi.org/10.1177/0278364913509675.

D’Eon RG, Serrouya R, Smith G, Kochanny CO. GPS radiotelemetry error and bias in mountainous terrain. Wildl Soc Bull. 2002;30(2):430–9.

Zheng J, Wang Y, Nihan NL. Quantitative evaluation of GPS performance under forest canopies. In: IEEE networking, sensing and control; 2005. pp. 777–782. https://doi.org/10.1109/ICNSC.2005.1461289.

Tango. Google developers. https://web.archive.org/web/20170716155537/https://developers.google.com/tango/developer-overview (2017). Accessed 16 July 2017.

Kitware: ParaView Tango Recorder. GitHub. https://github.com/Kitware/ParaViewTangoRecorder (2015). Accessed 02 Apr 2016.

Ayachit U. The ParaView guide: a parallel visualization application. Clifton Park: Kitware Inc; 2015.

Marion P, Kwitt R, Davis B, Gschwandtner M. PCL and ParaView—connecting the dots. In: Computer society conference on computer vision and pattern recognition workshops; 2012. pp. 80–85. https://doi.org/10.1109/CVPRW.2012.6238918.

Rusu RB, Cousins S. 3D is here: point cloud library (PCL). In: IEEE international conference on robotics and automation; 2011. pp. 1–4. https://doi.org/10.1109/ICRA.2011.5980567. http://pointclouds.org/.

Zhang K, Chen S-C, Whitman D, Shyu M-L, Yan J, Zhang C. A progressive morphological filter for removing nonground measurements from airborne LIDAR data. IEEE Trans Geosci Remote Sens. 2003;41(4):872–82. https://doi.org/10.1109/TGRS.2003.810682.

Fischler MA, Bolles RC. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun ACM. 1981;24(6):381–95. https://doi.org/10.1145/358669.358692.

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay É. Scikit-learn: machine learning in Python. J Mach Learn Res. 2011;12:2825–30. arXiv:1201.0490.

R Core Team. R: a language and environment for statistical computing. Vienna: R Foundation for Statistical Computing; 2016. https://www.R-project.org/.

Nathan R, Getz WM, Revilla E, Holyoak M, Kadmon R, Saltz D, Smouse PE. A movement ecology paradigm for unifying organismal movement research. Proc Natl Acad Sci USA. 2008;105(49):19052–9. https://doi.org/10.1073/pnas.0800375105.

Wilson RP, Quintana F, Hobson VJ. Construction of energy landscapes can clarify the movement and distribution of foraging animals. Proc R Soc Lond B Biol Sci. 2012;279(1730):975–80. https://doi.org/10.1098/rspb.2011.1544.

Shepard ELC, Wilson RP, Rees WG, Grundy E, Lambertucci SA, Vosper SB. Energy landscapes shape animal movement ecology. Am Nat. 2013;182(3):298–312. https://doi.org/10.1086/671257.

Halsey LG. Terrestrial movement energetics: current knowledge and its application to the optimising animal. J Exp Biol. 2016;219(10):1424–31. https://doi.org/10.1242/jeb.133256.

Scharf AK, LaPoint S, Wikelski M, Safi K. Structured energetic landscapes in free-ranging fishers (Pekania pennanti). PLoS ONE. 2016;11(2):e0145732. https://doi.org/10.6084/m9.figshare.2062650.

Larson J, Trivedi M, Bruch M. Off-road terrain traversability analysis and hazard avoidance for UGVs. In: IEEE intelligent vehicles symposium; 2011. pp. 1–7.

Lai P, Samson C, Bose P. Surface roughness of rock faces through the curvature of triangulated meshes. Comput Geosci. 2014;70:229–37. https://doi.org/10.1016/j.cageo.2014.05.010.

Liu C, Chao J, Gu W, Li L, Xu Y. On the surface roughness characteristics of the land fast sea-ice in the Bohai Sea. Acta Oceanol Sin. 2014;33(7):97–106. https://doi.org/10.1007/s13131-014-0509-3.

Edwards AM, Phillips RA, Watkins NW, Freeman MP, Murphy EJ, Afanasyev V, Buldyrev SV, da Luz MGE, Raposo EP, Stanley HE, Viswanathan GM. Revisiting Lévy flight search patterns of wandering albatrosses, bumblebees and deer. Nature. 2007;449(7165):1044–8. https://doi.org/10.1038/nature06199.

Humphries NE, Weimerskirch H, Queiroz N, Southall EJ, Sims DW. Foraging success of biological Lévy flights recorded in situ. Proc Natl Acad Sci USA. 2012;109(19):7169–74. https://doi.org/10.1073/pnas.1121201109.

Raichlen DA, Wood BM, Gordon AD, Mabulla AZP, Marlowe FW, Pontzer H. Evidence of Lévy walk foraging patterns in human hunter-gatherers. Proc Natl Acad Sci USA. 2013;111(2):728–33. https://doi.org/10.1073/pnas.1318616111.

Wilson RP, Griffiths IW, Legg PA, Friswell MI, Bidder OR, Halsey LG, Lambertucci SA, Shepard ELC. Turn costs change the value of animal search paths. Ecol Lett. 2013;16(9):1145–50. https://doi.org/10.1111/ele.12149.

Golovinskiy A, Kim VG, Funkhouser T. Shape-based recognition of 3D point clouds in urban environments. In: IEEE 12th international conference on computer vision; 2009. pp. 2154–2161. https://doi.org/10.1109/ICCV.2009.5459471.

Himmelsbach M, Luettel T, Wuensche H-J. Real-time object classification in 3D point clouds using point feature histograms. In: IEEE/RSJ international conference on intelligent robots and systems; 2009. pp. 994–1000. https://doi.org/10.1109/IROS.2009.5354493.

Maturana D, Scherer S. VoxNet: A 3D convolutional neural network for real-time object recognition. In: IEEE/RSJ international conference on intelligent robots and systems; 2015. pp. 922–928. https://doi.org/10.1109/IROS.2015.7353481.

Rodríguez-Cuenca B, García-Cortés S, Ordóñez C, Alonso MC. Automatic detection and classification of pole-like objects in urban point cloud data using an anomaly detection algorithm. Remote Sens. 2015;7(10):12680–703. https://doi.org/10.3390/rs71012680.

Lehtomäki M, Jaakkola A, Hyyppä J, Lampinen J, Kaartinen H, Kukko A, Puttonen E, Hyyppä H. Object classification and recognition from mobile laser scanning point clouds in a road environment. IEEE Trans Geosci Remote Sens. 2016;54(2):1226–39.

Schöps T, Sattler T, Häne C, Pollefeys M. 3D modeling on the go: interactive 3D reconstruction of large-scale scenes on mobile devices. In: International conference on 3D vision; 2015. pp. 291–299. https://doi.org/10.1109/3DV.2015.40.

Schöps T, Sattler T, Häne C, Pollefeys M. Large-scale outdoor 3D reconstruction on a mobile device. Comput Vis Image Underst. 2016. https://doi.org/10.1016/j.cviu.2016.09.007.

Wittmann A, Al-Nuaimi A, Steinbach E, Schroth G. Enhanced depth estimation using a combination of structured light sensing and stereo reconstruction. In: International conference on computer vision theory and applications; 2016.

Nilsson E, Lundquist C, Schön TB, Forslund D, Roll J. Vehicle motion estimation using an infrared camera. IFAC Proc Vol. 2011;44(1):12952–7.

Mouats T, Aouf N, Sappa AD, Aguilera C, Toledo R. Multispectral stereo odometry. IEEE Trans Intell Transp Syst. 2015;16(3):1210–24. https://doi.org/10.1109/TITS.2014.2354731.

Borges PVK, Vidas S. Practical infrared visual odometry. IEEE Trans Intell Transp Syst. 2016;17(8):2205–13. https://doi.org/10.1109/TITS.2016.2515625.

Mouats T, Aouf N, Chermak L, Richardson MA. Thermal stereo odometry for UAVs. IEEE Sens J. 2015;15(11):6335–47. https://doi.org/10.1109/JSEN.2015.2456337.

Lynen S, Bosse M, Furgale P, Siegwart R. Placeless place-recognition. In: 2nd International conference on 3D vision; 2014. pp. 303–310. https://doi.org/10.1109/3DV.2014.36.

Lynen S, Sattler T, Bosse M, Hesch J, Pollefeys M, Siegwart R. Get out of my lab: large-scale, real-time visual-inertial localization. Robot Sci Syst. 2015. https://doi.org/10.15607/RSS.2015.XI.037.

Laskar Z, Huttunen S, Herrera C, H, Rahtu E, Kannala J. Robust loop closures for scene reconstruction by combining odometry and visual correspondences. In: International conference on image processing; 2016. pp. 2603–2607.

Milford MJ, Wyeth GF. SeqSLAM: visual route-based navigation for sunny summer days and stormy winter nights. In: IEEE international conference on robotics and automation; 2012. pp. 1643–1649. https://doi.org/10.1109/ICRA.2012.6224623.

Naseer T, Ruhnke M, Stachniss C, Spinello L, Burgard W. Robust visual SLAM across seasons. In: IEEE/RSJ international conference on intelligent robots and systems; 2015. pp. 2529–2535.

Tomaštík J, Saloň Š, Tunák D, Chudý F, Kardoš M. Tango in forests—an initial experience of the use of the new Google technology in connection with forest inventory tasks. Comput Electron Agric. 2017;141:109–17. https://doi.org/10.1016/j.compag.2017.07.015.

Hyyppä J, Virtanen JP, Jaakkola A, Yu X, Hyyppä H, Liang X. Feasibility of Google Tango and kinect for crowdsourcing forestry information. Forests. 2017;9(1):1–14. https://doi.org/10.3390/f9010006.

Alhwarin F, Ferrein A, Scholl I. IR stereo kinect: improving depth images by combining structured light with IR stereo. In: Pham D, Park S, editors. PRICAI 2014: Trends in Artificial Intelligence. PRICAI 2014, vol. 8862. Cham: Springer; 2014. pp. 409–421.

Otsu K, Otsuki M, Kubota T. Experiments on stereo visual odometry in feature-less volcanic fields. In: Mejias L, Corke P, Roberts J, editors. Field and service robotics: results of the 9th international conference, vol. 105. Cham: Springer; 2015. pp. 365–378. Chap. 7.

Diakité AA, Zlatanova S. First experiments with the Tango tablet for indoor scanning. ISPRS Ann Photogramm Remote Sens Spat Inf Sci. 2016;III(4):67–72. https://doi.org/10.5194/isprsannals-III-4-67-2016.

Klingensmith M, Dryanovski I, Srinivasa SS, Xiao J. Chisel: real time large scale 3D reconstruction onboard a mobile device using spatially-hashed signed distance fields. In: Robotics: science and systems; 2015.

Klingensmith M, Herrmann M, Srinivasa SS. Object modeling and recognition from sparse, noisy data via voxel depth carving. In: Hsieh AM, Khatib O, Kumar V, editors. Experimental robotics: the 14th international symposium on experimental robotics. Cham: Springer; 2016. p. 697–713.

Dzitsiuk M, Sturm J, Maier R, Ma L, Cremers D. De-noising, stabilizing and completing 3D reconstructions on-the-go using plane priors; 2016. arXiv:1609.08267.

Spinello L, Luber M, Arras KO. Tracking people in 3D using a bottom-up top-down detector. In: IEEE international conference on robotics and automation; 2011. pp. 1304–1310. https://doi.org/10.1109/ICRA.2011.5980085.

Munaro M, Basso F, Menegatti E. Tracking people within groups with RGB-D data. In: IEEE/RSJ international conference on intelligent robots and systems; 2012. pp. 2101–2107. https://doi.org/10.1109/IROS.2012.6385772.

Fehr M, Dymczyk M, Lynen S, Siegwart R. Reshaping our model of the world over time. In: IEEE international conference on robotics and automation; 2016. pp. 2449–2455.

Wilson RP, McMahon CR. Measuring devices on wild animals: what constitutes acceptable practice? Front Ecol Environ. 2006;4(3):147–54. https://doi.org/10.1890/1540-9295(2006)004.

Casper RM. Guidelines for the instrumentation of wild birds and mammals. Anim Behav. 2009;78(6):1477–83. https://doi.org/10.1016/j.anbehav.2009.09.023.

ARCore. Google developers. https://developers.google.com/ar/discover/ (2018). Accessed 10 Mar 2018.

Structure Sensor. Occipital, Inc. https://structure.io (2017). Accessed 27 May 2017.

Angladon V, Gasparini S, Charvillat V, Pribanić T, Petković T, Donlić M, Ahsan B, Bruel F. An evaluation of real-time RGB-D visual odometry algorithms on mobile devices. J Real Time Image Process. 2017. https://doi.org/10.1007/s11554-017-0670-y.

Bidder OR, Walker JS, Jones MW, Holton MD, Urge P, Scantlebury DM, Marks NJ, Magowan EA, Maguire IE, Wilson RP. Step by step: reconstruction of terrestrial animal movement paths by dead-reckoning. Mov Ecol. 2015;3:23. https://doi.org/10.1186/s40462-015-0055-4.

Di Orio AP, Callas R, Schaefer RJ. Performance of two GPS telemetry collars under different habitat conditions. Wildl Soc Bull. 2003;31(2):372–9.

Favorskaya MN, Jain LC. Realistic tree modelling. Cham: Springer; 2017. p. 181–202.

Acknowledgements

I thank Mike Scantlebury and Julia Andrade Rocha for their helpful comments, and Desmond with his well-behaved dog for taking part. I also thank the editors and three reviewers for their useful and constructive comments.

Competing interests

The author declares that there are no competing interests.

Ethics approval and consent to participate

The study animal was not subjected to pain or distress for this project. Informed consent was obtained from the owner.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1.

A first-person animation of a camera following the position of the animal-attached recording device, demonstrating the coupling of motion tracking and area reconstruction. The camera has a focal point fixed to the position of the final pose measurement, with an up direction set to the vertical axis. The RANSAC-labelled tree trunks are displayed in green. Note that the point cloud was downsampled using a voxel grid filter with a leaf size of 0.02 m.

Additional file 2.

The RANSAC-labelled tree trunks are displayed in green (28 of 30). An elevation filter was applied to the ground returns. Extract the .ZIP archive and open ‘index.html’ in a WebGL-supporting web browser (e.g. Firefox 4.0+ or Chrome 9.0+) to view in 3D with rotation and zoom options.

Additional file 3.

The RANSAC-labelled tree trunks are again displayed in green (83 in total). An elevation filter was applied to the ground returns, highlighting the vertical decline (8.70 m). Note that an additional voxel grid filter (leaf size 0.045 m) was applied to the ground segment in order to improve rendering performance. Extract the .ZIP archive and open ‘index.html’ in a WebGL-supporting web browser (e.g. Firefox 4.0+ or Chrome 9.0+) to view in 3D with rotation and zoom options.
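
The voxel grid downsampling mentioned for Additional files 1 and 3 can be illustrated with a minimal sketch. This is not the author's code: it is a NumPy stand-in that assumes the common centroid-per-voxel definition of a voxel grid filter, and the function name `voxel_grid_downsample` and the example coordinates are hypothetical. Only the leaf sizes (0.02 m and 0.045 m) come from the file descriptions above.

```python
import numpy as np


def voxel_grid_downsample(points, leaf_size):
    """Replace all points falling in the same cubic voxel with their centroid.

    points    : (N, 3) array-like of x, y, z coordinates in metres
    leaf_size : voxel edge length in metres (e.g. 0.02 or 0.045)
    """
    points = np.asarray(points, dtype=float)
    # Integer voxel index of each point along x, y and z.
    voxel_idx = np.floor(points / leaf_size).astype(np.int64)
    # Group points by voxel; `inverse` maps each point to its voxel row.
    _, inverse, counts = np.unique(
        voxel_idx, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # guard against shape differences across NumPy versions
    # Sum the coordinates per voxel, then divide by the point count.
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)
    return sums / counts[:, None]


# Hypothetical example: two points share a 0.02 m voxel, one lies elsewhere.
cloud = [[0.001, 0.001, 0.001], [0.019, 0.019, 0.019], [0.51, 0.51, 0.51]]
reduced = voxel_grid_downsample(cloud, leaf_size=0.02)
```

A larger leaf size merges more points per voxel, which is consistent with the coarser 0.045 m setting being used on the ground segment to improve rendering performance.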

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

McClune, D.W. Joining the dots: reconstructing 3D environments and movement paths using animal-borne devices. Anim Biotelemetry 6, 5 (2018). https://doi.org/10.1186/s40317-018-0150-6
