Abstract

Wireless sensor networks (WSNs) demand low-power, energy-efficient hardware and software. Dynamic power management (DPM) keeps a wireless sensor node out of its active states as far as possible by switching its power-manageable components into power-down or off states. While doing so, DPM must not compromise task execution deadlines or performance. Changes at the operational level have been seen to improve the energy efficiency of a system drastically (by up to 90%), so DPM policies have drawn considerable attention. This review paper classifies dynamic power management techniques and focuses on stochastic modelling schemes, which dynamically manage wireless sensor node operations in order to minimize power consumption. The survey is expected to trigger ideas for future research on power-aware wireless sensor networks.


Introduction

A wireless sensor network (WSN) consists mostly of tiny, resource-constrained, self-organized, low-power, low-cost and simple sensor nodes that are organized in a cooperative manner. The sensor nodes can sense, communicate with and control the surrounding environment. They provide interaction between users (human beings), the physical environment and embedded computers in order to perform a specific operation. They follow IEEE 802.15.4 as the base standard for the lower layers (physical and medium access control) and other standards such as ZigBee and ISA100.11a for the upper-layer (application, routing) protocols. WSNs have a wide range of applications in emerging areas such as agriculture, medicine, the military, environmental monitoring, industrial control and monitoring, and civil and mechanical engineering. Target tracking and continuous monitoring are important energy-hungry problems in WSNs with a large spectrum of applications, such as surveillance [1], natural disaster relief [2], traffic monitoring [3] and pursuit-evasion games. This wide range of applications helps to transform human lives in various aspects of intelligent living technology and has generated great interest among industrialists and researchers [4].

Several multifunctional sensor nodes in a specified area work continuously on their assigned tasks without any internal interruption. Collectively, the sensor nodes connect to the PAN coordinator or sink nodes through the gateway and help to forward information to users through the network, as depicted in Figure 1. Usually, a sensor node receives data, processes it and routes it to the next-level nodes. The power manager, as part of the micro-operating system (μ-OS), controls and manages the on/off and power-down states of the sensor node components (e.g. embedded processor, memory, RF transceiver) to enable power management.

DPM refers to an operating-system-level algorithm or technique that controls the power and performance parameters of a low-power system by increasing the idle time slots of its devices and switching those devices to a low-power mode. Broadly, the ultra-low-power components of a sensor node make the node energy efficient by implementing DPM policies to achieve a longer lifetime [5]. The total power dissipation of a sensor node can be modelled as the sum of static and dynamic power dissipation. Static power consumption results from leakage current in the ultra-low-power components of the node and can be reduced at design time with static techniques such as synthesis and compilation. The dominant part, dynamic power, results from switching activity and can be reduced by selectively switching off or shutting down hardware components on the node. Dynamic power scales quadratically with the supply voltage and linearly with the working frequency, which is what voltage and frequency scaling exploit; power gating and clock gating go further by cutting the supply or the clock of an unused block altogether. Furthermore, the more power modes a component offers, the more energy can be saved in the sensor node. To maximize battery lifetime, the power consumption of the sensor node components must be reduced. Hence, the power management problem is to minimize the switching power and the power consumption of the components. Here, switching power is the power required for a component to change over from a low-power mode to a high-power mode and vice versa. Stochastic modelling is used to study DPM in wireless sensor networks [6,7]. The system model for power management, described next, identifies the basic requirements that power management places on sensor node components.
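The dependence of dynamic power on supply voltage and frequency is commonly summarized by the first-order CMOS relation P = αCV²f. The following minimal sketch only illustrates that scaling; the activity factor, load capacitance, voltage and frequency values are hypothetical and are not taken from the source or from any sensor node datasheet.

```python
def dynamic_power(alpha, c_load, v_dd, freq):
    """First-order CMOS switching power: P = alpha * C * V^2 * f (watts)."""
    return alpha * c_load * v_dd ** 2 * freq

# Hypothetical operating point: activity 0.2, 1 nF switched capacitance, 3 V, 8 MHz.
base = dynamic_power(alpha=0.2, c_load=1e-9, v_dd=3.0, freq=8e6)

# Halving the supply voltage cuts dynamic power to a quarter ...
half_v = dynamic_power(alpha=0.2, c_load=1e-9, v_dd=1.5, freq=8e6)

# ... while halving the clock frequency only cuts it to a half.
half_f = dynamic_power(alpha=0.2, c_load=1e-9, v_dd=3.0, freq=4e6)

print(f"base={base:.4f} W, half voltage={half_v:.4f} W, half frequency={half_f:.4f} W")
```

The quadratic term is why lowering the voltage is usually the more rewarding knob, provided the component still meets its timing at the reduced voltage.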

The rest of this paper is organized as follows. The next section presents the abstract system model for dynamic power management and identifies the requirements on system components. Section 3 classifies the major policies, namely greedy, time-out, predictive, stochastic, dynamic scaling, and switching and scheduling schemes, with their pros and cons. A brief discussion of other state-of-the-art discrete-time and continuous-time techniques is also presented. In section 4, the power consumption issues in the continuous-time Markov model and the semi-Markov model are analysed in detailed case studies 1 and 2, respectively. The section also provides background on existing stochastic models for dynamic power management as described in the literature, pointing out their strengths and weaknesses in wireless sensor network applications [8]. In addition, it highlights the key areas, challenges and gaps in managing power dynamically with power-aware stochastic modelling techniques, which gives directions for future research in the WSN area. Finally, section 5 concludes the paper and states the authors' future interests and investigations.

Basic power management system model

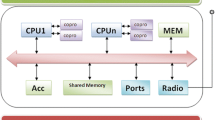

Operating-system-level dynamic power management schemes use knowledge of the application requirements and the present states of the power-manageable devices to switch the devices into different power modes for the next state. Unused components are turned off if the idle period is long. Current DPM work tends to treat the system as a Markov process, so that stochastic models can be used to analyse its power and performance. The system model consists of a power manager (PM), a service provider (processor), a service requester (input from sensors) and a service request queue (memory), as shown in Figure 2. The activity of the different devices in a WSN may be discrete-time, continuous-time or event-driven, and it affects battery lifetime. The temporal behaviour of events over the entire sensing region, R, can be assumed to be a Poisson process with an average event rate. The power manager tracks the states of the service provider, service requester and service queue, and issues command signals that control the power modes of the system and of the power-manageable components that are idle and waiting for the next command. The power manager can be a software or a hardware module. A suitable stochastic modelling approach can be implemented at the operating system (OS) level to achieve better energy efficiency at lower cost. A stochastic scheme, or Markov decision policy (MDP), models a system and its workload [9,10]. Here, the workload is the arrival of a request and the information associated with it. Existing analyses and models of DPM schemes [11,12] use the length of the queue of waiting tasks as the measure of latency. However, the deadline of task execution is also an important latency or performance parameter in Markov models. Dynamic power management determines the correct power switching time by observing the workload and the states of the system components, at some cost in performance [13]. Therefore, the basic requirements of DPM are an embedded operating system (a micro-operating system in the case of a sensor node) and power-manageable components that support different power states, e.g. active, sleep and idle.
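The Poisson assumption for event arrivals can be illustrated with a short simulation of the service requester. This is only a sketch under stated assumptions: the function names, the event rate of 0.5 events per second and the 1000 s horizon are hypothetical, and the gaps between arrivals are treated as the idle periods the power manager would observe.

```python
import random

def poisson_arrivals(rate, horizon, seed=0):
    """Return event times of a Poisson process with the given rate on (0, horizon)."""
    rng = random.Random(seed)            # seeded so the trace is reproducible
    t, times = 0.0, []
    while True:
        t += rng.expovariate(rate)       # exponential inter-arrival gap, mean 1/rate
        if t >= horizon:
            return times
        times.append(t)

def idle_gaps(times):
    """Gaps between consecutive events, i.e. candidate idle periods."""
    return [later - earlier for earlier, later in zip(times, times[1:])]

arrivals = poisson_arrivals(rate=0.5, horizon=1000.0)
gaps = idle_gaps(arrivals)
mean_gap = sum(gaps) / len(gaps)
print(f"{len(arrivals)} events, mean idle gap {mean_gap:.2f} s (expected about 2 s)")
```

A power manager that knows only the average event rate can use the mean gap as a first estimate of how long components are likely to stay idle.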

Switching between power modes increases latency and degrades performance, which results in a trade-off between power and latency. The authors presented the basic idea behind dynamic power management and the resulting power-performance trade-off space in wireless sensor networks.

Ideally, a power-manageable component with more than two power-down states can save more power by switching to a deep sleep state. However, switching from power-down to power-up states and vice versa requires a finite transition time and incurs the overhead of storing the processor state before the device is put into a low-power mode or turned off. A deeper sleep state requires a longer wake-up time, which in turn increases latency. Hence, there is a great need for an optimized DPM scheme that can reduce power consumption under performance constraints, and improve performance under power constraints, for power-source-limited applications in wireless sensor networks.

Dynamic power management

Important factors that affect sensor node lifetime are the power consumption in the different modes of operation, the cost of switching to power-down modes and the time the processor spends in each mode [14]. The major classes of dynamic power management policies, namely greedy, time-out, predictive, stochastic, dynamic scaling, and switching and scheduling schemes, are presented in Figure 3. The greedy (always ON) and timeout policies are heuristic policies. The timeout scheme shuts down a power-manageable component after a fixed period of inactivity (T). A timeout policy performs well only when idle periods are very long, and it still pays for the power dissipated while waiting for the timeout to expire [15]. The predictive scheme is another type of heuristic scheme. It first predicts the arrival time and nature of the next request, and then switches the system to a low-power state if the predicted idle duration exceeds the break-even time [16,17]. Thus, the predicted time determines when components are switched to the low-power state. The authors have introduced adaptive filters for workload prediction, so that the state changes of the power-manageable devices are based on the predicted time. They used workload traces taken from different processors in different time slots of the day, and defined a performance metric for the efficiency of the observations. Workload prediction schemes do not deal with a generalized system model and are not suitable for providing an accurate trade-off between power saving and performance reduction. Stochastic techniques are therefore required to mitigate the limitations of predictive techniques. Stochastic policies offer a better power-delay trade-off than heuristic policies [18].
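The break-even time and the cost of waiting under a timeout policy can be made concrete with a small energy calculation. The sketch below assumes a single idle state and a single sleep state, with one combined shutdown-plus-wake-up transition of energy `e_trans` and duration `t_trans`; the function names and all numeric values are hypothetical.

```python
def break_even_time(p_idle, p_sleep, e_trans, t_trans):
    """Shortest idle period for which sleeping costs no more energy than staying idle."""
    t_energy = (e_trans - p_sleep * t_trans) / (p_idle - p_sleep)
    return max(t_trans, t_energy)        # the transition itself must also fit

def timeout_energy(idle, timeout, p_idle, p_sleep, e_trans, t_trans):
    """Energy spent over one idle period under a fixed-timeout policy."""
    if idle <= timeout:
        return p_idle * idle             # timeout never expires, component stays idle
    asleep = max(idle - timeout - t_trans, 0.0)   # time left in sleep after the transition
    return p_idle * timeout + e_trans + p_sleep * asleep

# Hypothetical component: 1.0 W idle, 0.1 W asleep, 2.0 J and 0.5 s per sleep/wake cycle.
params = dict(p_idle=1.0, p_sleep=0.1, e_trans=2.0, t_trans=0.5)

t_be = break_even_time(**params)
long_idle = timeout_energy(idle=10.0, timeout=2.0, **params)
short_idle = timeout_energy(idle=1.0, timeout=2.0, **params)
print(f"break-even {t_be:.2f} s, long idle {long_idle:.2f} J, short idle {short_idle:.2f} J")
```

With these numbers, a 10 s idle period costs 4.75 J under a 2 s timeout instead of 10 J when the component stays idle, but 2 J of that is spent waiting for the timeout to expire, which is the waiting-period dissipation noted above.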

A static approach has a priori knowledge of the states of the power-manageable components and assumes a stationary workload. An adaptive approach accounts for the non-stationary nature of workloads and uses policy pre-characterization, parameter learning and policy interpolation to decide the power-down states of components [19]. Adaptive Markov control processes are online-adaptation DPM schemes for non-stationary workloads, i.e. real-life systems [20].

In a discrete-time Markov decision policy (DTMDP), the power manager decides on the next state at discrete time intervals, regardless of the nature of the input. A discrete-time, finite-state Markov decision model for power-managed systems has been proposed that gives the exact solution in polynomial time by solving a linear optimization problem [21]. Because this model takes a decision for the next state at every defined time interval, it is not suitable for continuous monitoring or event-driven processes. In a discrete finite-horizon Markov decision process (MDP), the sensor's battery discharge process has been modelled stochastically as an MDP and the optimal transmission strategy characterized [22]. The scheme can be generalized to the decentralized case using stochastic game theory. Network lifetime analysis models in the literature generally assume an average node power consumption. A discrete-time Markov model for a node with a Bernoulli arrival process and a phase-type service time distribution has been validated for single-hop and multi-hop networks [23]. These schemes have been observed to have limitations in terms of architectural modification.
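To show what a discrete-time Markov model of a power-managed node looks like once a policy has been fixed, the following sketch computes the long-run state occupancy and average power of a three-state chain. It does not reproduce the linear-programming policy optimization of [21]; it only evaluates one fixed policy, and the transition probabilities and per-state power values are hypothetical.

```python
def stationary_distribution(P, iterations=1000):
    """Long-run state probabilities of a discrete-time Markov chain (power iteration)."""
    n = len(P)
    pi = [1.0 / n] * n                   # start from a uniform guess
    for _ in range(iterations):
        # One time slot: pi_next[j] = sum_i pi[i] * P[i][j]
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi

# States: 0 = active, 1 = idle, 2 = sleep. Each row holds the per-slot
# transition probabilities out of one state under a fixed (hypothetical) policy.
P = [
    [0.6, 0.4, 0.0],
    [0.3, 0.5, 0.2],
    [0.1, 0.0, 0.9],
]
POWER_W = [1.0, 0.4, 0.01]               # hypothetical power drawn in each state

pi = stationary_distribution(P)
average_power = sum(p * w for p, w in zip(pi, POWER_W))
print([round(p, 4) for p in pi], round(average_power, 4))
```

A policy optimizer would compare such averages, together with a latency measure, across candidate policies; here the point is only that the decision epochs are fixed time slots, independent of when events actually occur.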

A continuous-time Markov decision policy (CTMDP) is event driven, and the decision taken can change only when an event occurs. A wrong decision can cost more energy in overhead than it saves. Continuous-time Markov models [11] require all the stochastic processes to be exponential. These models overcome the problems of discrete-time methods, but continuous-time modelling is complex and does not give good results for real-time systems. A semi-Markov model relaxes the requirement for strictly exponential distributions. The time-indexed semi-Markov decision process (TISMDP) combines the advantages of the event-driven semi-Markov decision process model with those of the discrete-time Markov decision process (DTMDP) model, but it is limited to a non-exponential arrival distribution coupled with a non-uniform transition distribution [24]. Multiple non-exponential processes require a more flexible model, and time-indexed semi-Markov models can be one solution, although a model with multiple non-exponential processes increases both model and system complexity. Nevertheless, schemes that adapt to the non-stationary nature of workloads can save more power [9]. The N-policy accumulates N events before processing them, which makes the system more energy efficient.

Other techniques, such as dynamic scaling [13], decrease the power consumption of the system components by operating them at different, lower voltage and frequency levels during the active phase. Switching and scheduling schemes save power by controlling the idle periods of the system [25]. A sleep-state policy also improves energy efficiency considerably [26]. The anticipated workload of the different subsystems and an estimate of the task arrival rate at the scheduler are crucial preconditions for a DPM technique. Various filters have been investigated as estimation techniques [27,28]. These filters are predictive: they trace the past N tasks at the scheduler and estimate the workload of the microcontroller for the next observation point. In a more precise estimation process, the workload of every hardware component is observed first, and the future load on the sensor node is then determined. This is particularly useful for selective switching. The scheduler can provide the appropriate workload information about input events, and an event counter counts the frequency and duration of hardware use by each task [29]. A stochastic sleep scheduling (SSS) scheme, with and without adaptive listening, has been proposed and shown to reduce energy consumption and delay at the network level for S-MAC. This scheme is applicable to networks with high node scalability and stochastic sensor sleep periods [30]. Energy efficiency can also be improved in the routing layer to extend network lifetime. Several energy-efficient routing algorithms for wireless sensor networks that assume the input arrivals form a stochastic process have been discussed [31]. Improvements in wireless sensor network lifetime have been shown by introducing dynamic power management policies in broadband routing [32], target tracking [33] and other applications [34-37]. Besides improving the energy efficiency of routing protocols, power management itself can improve energy efficiency. An OS-directed, event-based, predictive power management technique [38] has been proposed to improve the energy efficiency of a single node. PowerTOSSIM [39], mTOSSIM [40] and eSENSE [41] are readily available platforms for estimating sensor node lifetime.
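A filter that traces the past N observations can be as simple as a sliding-window mean. The sketch below is one such predictor, chosen for brevity; it is not claimed to be any of the filters studied in [27,28], and the class name, window size and break-even thresholds are hypothetical.

```python
from collections import deque

class WindowPredictor:
    """Predict the next idle period as the mean of the last n observed ones."""

    def __init__(self, n):
        self.history = deque(maxlen=n)   # older samples drop out automatically

    def observe(self, idle_period):
        self.history.append(idle_period)

    def predict(self):
        if not self.history:
            return 0.0                   # no data yet: assume no idle time
        return sum(self.history) / len(self.history)

def should_sleep(predicted_idle, break_even):
    """Selective switching rule: sleep only if the predicted idle time pays off."""
    return predicted_idle > break_even

predictor = WindowPredictor(n=3)
for idle in (1.0, 2.0, 3.0, 4.0):        # only the last three samples are kept
    predictor.observe(idle)
prediction = predictor.predict()
print(prediction, should_sleep(prediction, break_even=2.5))
```

Running one predictor per hardware component, rather than one for the whole node, corresponds to the more precise per-component estimation described above.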

Stochastic optimal control approach

Stochastic schemes are based on Markov decision policies, which a power manager uses to direct the power-manageable devices to change state. Because of the memoryless property of a Markov decision policy, the power manager considers only the present state of the devices when deciding the next state. Here, the term “state” means the power mode of the device. The main function of stochastic approaches is to develop and analyse a system model that directs the system components to a suitable operating mode in order to achieve maximum power savings. Wireless sensor nodes observe events with random inter-arrival times at their inputs. For modelling, the input pattern is therefore assumed to follow one of the exponential, Pareto, uniform, normal, Bernoulli or Poisson distributions [42]. A paper on a controllable Markov decision model provides heuristic and stochastic policies with linear programming optimization [43]. This work emphasizes the importance of workload statistics for two-, three- and four-state system models. It establishes that the delay in attending to an event decreases as the timeout duration increases, but the power consumption increases.
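The memoryless property, and the reason the choice of inter-arrival distribution matters to a power manager, can be checked numerically. The sketch below compares the probability that an idle period lasts a further 2 s, given how long it has already lasted, for an exponential and a Pareto model. The rate, scale and shape values are hypothetical.

```python
import math

def exp_survival(t, rate=0.5):
    """P(idle period > t) for exponentially distributed idle periods."""
    return math.exp(-rate * t)

def pareto_survival(t, scale=1.0, shape=1.5):
    """P(idle period > t) for Pareto distributed idle periods."""
    return 1.0 if t <= scale else (scale / t) ** shape

def conditional_survival(survival, waited, extra):
    """P(idle lasts another `extra` seconds | it has already lasted `waited`)."""
    return survival(waited + extra) / survival(waited)

# Exponential: the answer does not depend on the time already spent idle.
exp_short = conditional_survival(exp_survival, waited=1.0, extra=2.0)
exp_long = conditional_survival(exp_survival, waited=5.0, extra=2.0)

# Pareto: the longer the node has been idle, the likelier it stays idle.
par_short = conditional_survival(pareto_survival, waited=1.0, extra=2.0)
par_long = conditional_survival(pareto_survival, waited=5.0, extra=2.0)

print(f"exponential: {exp_short:.3f} vs {exp_long:.3f}")
print(f"pareto:      {par_short:.3f} vs {par_long:.3f}")
```

Under the exponential model, elapsed idle time carries no information, so a decision based only on the present state loses nothing. Under a heavy-tailed model the elapsed time is informative, which is the motivation for time-aware models such as semi-Markov processes.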

The advanced partially observable Markov decision process (POMDP) approach combines a hidden Markov model with the event arrival distribution by modifying and optimizing the likelihood of the observed input sequence [44]. That paper focuses on hidden Markov model (HMM) modelling of the service requester, while the rest of the system (including the service provider and service queue) is modelled as a DTMDP. The authors compared the results achieved with the HMM against other models and reported results 65.4% higher than those of earlier models. In the accumulation and fire (A&F) policy-based model, the power manager can be shut down entirely when the service provider (SP) is activated. However, the A&F policy does not involve updating tasks [45,46]. This reduces the average power dissipation, and the PM latency is extremely small. A finite-state Markov model for servers that minimizes the workload demand during energy minimization, at the cost of reliability in hosting clusters, has also been presented [47]. Thus, hierarchical approaches are required to scale down large systems consisting of a number of servers.

The literature review shows that the power manager (PM) is usually treated as a component that dissipates negligible power. There is therefore a need for a policy that accounts for the power consumption of the power manager along with that of the other components of the system. Markov-model-based policies are not proven to be globally optimal for stationary Markovian workloads. The accuracy of Markov models for non-stationary workloads increases when more power states are incorporated into the Markov chain. Different dynamic power management techniques have different implementation approaches, depending on the application requirements. The power consumption depends on the workload pattern and on the states of the different components of the sensor node. Non-geometric state transition times and complex cost functions can also be handled better by adding more states to the Markov chain of the power-managed system, although this increases the complexity of the system. Finally, within the constraints of a coarse-grained power management policy, one can search dynamically for a refined policy for the states inside each sensor node component.

Case study 1

A new continuous-time Markov decision process, shown in Figure 4, can overcome the shortcomings of the reviewed models and form a new fine-grained Markov model for DPM in wireless sensor networks. Power is saved by decreasing the number of shutdown and wake-up operations. Here, batch processing accumulates k events and the system fires at activation time. In this scheme, all event requests arrive and are serviced in first-in, first-out (FIFO) order. Event arrivals are modelled as a stochastic process with exponentially distributed inter-arrival times of mean 1/λ. An event occurrence is taken to be a signal received by the sensor node whose value exceeds a predetermined threshold (Vth). Usually, the incoming events or requests have a non-stationary distribution in space and time, represented by a probability distribution function pxy(x, y). Assume that an event arrives at the input of a sensor node in the region R. Equation (1) then gives the probability (pek) that sensor node k detects an event above the threshold level. Adaptive event detection with time-varying Poisson processes has also been reported [48].

Figure 4. Markov model for the event accumulation and fire policy [45].

The authors of this paper have assumed that individual task execution follows a Poisson distribution and that the execution of n + 1 tasks follows an Erlang distribution. The system can process a newly arrived task only when its processor leaves the current state (for example, moving from the wake-up state Wu(k) to the active state). Accumulating tasks delays their execution, but batch processing then reduces the power consumption. The average power consumption under this policy is the sum of the power consumed by the service provider (processor) and by the control unit (power manager, task manager and memory unit). The stochastic model analysis uses the following parameters:

- Inactive-mode power consumption: 0 W
- Inactive-mode start-up energy: 4.75 J
- Transition time from inactive to active: 5 s
- Active-mode power consumption: 1.9 W
- Active-mode start-up energy: 0 J
- Transition time from active to inactive: 0 s
- Accumulation limit (k): 4
- Task execution rate (greedy policy): 100, 1000
- Task execution rate (A&F policy): 100

With k = 1 (no batch processing), the control unit of the accumulation and fire policy consumes more power than that of the greedy policy because of processing overhead (Figure 5). The average power consumption of the A&F policy is nevertheless lower than that of the greedy policy (Figure 6). Therefore, the average power use of a sensor node under the A&F policy is lower than under the greedy policy, but the performance cost increases.

Figure 5. Power comparison of the greedy and A&F policies (including PM cost) [45].

Figure 6. Average power comparison of the greedy and A&F policies [45].
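As a rough illustration of why accumulating events saves power, the following sketch estimates the average power over one accumulate-and-fire cycle using the start-up energy, transition time and active power listed in the case study. It is a simplified renewal-cycle estimate and not the continuous-time Markov model of [45]. It assumes, hypothetically, that rates are in events per second, that no events arrive during wake-up or service, that the control unit consumes no power, and that events arrive at 0.1 per second (a value not given in the source).

```python
P_ACTIVE_W = 1.9      # active-mode power consumption (case study parameter)
E_STARTUP_J = 4.75    # inactive-mode start-up energy (case study parameter)
T_WAKEUP_S = 5.0      # inactive-to-active transition time (case study parameter)

def af_average_power(k, arrival_rate, service_rate):
    """Average power over one cycle: accumulate k events, wake up, serve the batch."""
    t_accumulate = k / arrival_rate      # mean wait for k events, node inactive at 0 W
    t_service = k / service_rate         # mean time to serve the batch at P_ACTIVE_W
    energy = E_STARTUP_J + P_ACTIVE_W * t_service
    return energy / (t_accumulate + T_WAKEUP_S + t_service)

ARRIVAL_RATE = 0.1    # hypothetical events per second, not from the source
always_on = P_ACTIVE_W                   # greedy (always ON) baseline
batched = {k: af_average_power(k, ARRIVAL_RATE, service_rate=100.0) for k in (1, 2, 4)}

for k, power in batched.items():
    print(f"k={k}: {power:.3f} W (always on: {always_on} W)")
```

Under these assumptions a larger accumulation limit amortizes each start-up over more events and lowers the average power, while the first event in each batch waits longer for service, which is the performance cost noted above.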

Case study 2

A Markov model represents a memoryless system: the present states and the inputs at that time determine the next power states of the system. In the embedded Markov model, however, a semi-Markov process represents the next state of the system components. It takes into account not only the present states but also the time the system has spent in each state to determine the probability of the system being in a low-power mode. A semi-Markov model for power consumption and lifetime analysis has been developed and implemented [49]. The model was implemented on schedule-driven Mica2 and Telos motes and analysed using the following parameters for both motes:

- Event arrival rate range: 150/hr to 12,960,000/hr
- Average number of jobs per event: 1.666667
- Event duration: 1 s
- Duty cycle range: 0.010 to 0.900
- Duty period: 5 s

The simulations were run in MATLAB, and the results for the Telos and Mica2 motes are presented in Tables 1 and 2. Table 1 gives the power consumption and lifetime for a system model with five power states (S0 to S4). State S0 is the lowest power state and saves the most power, whereas state S2 is found to be the most power-consuming state. This reflects the need for power management and for switching system components from sleep to communication and back whenever required. At the same time, the duration of the listening power state should be minimized. However, operating the system and its components in various power states increases latency as well as switching and energy overheads, so the energy saved should always exceed the energy overhead. The table clearly indicates that Telos motes are more energy efficient and live longer than Mica2 motes. It also relates the lifetime of a sensor node to event detection. A lower detection probability means that the node is in a low-power or sleep state most of the time and that the communication unit is OFF more often. The lifetime of a sensor node increases greatly as the detection probability decreases, but so does the rate of missed events. Thus, in wireless sensor networks the choice of power mode and the duration of each power state depend on the application. Table 2 shows the power breakdown for the two motes at different duty cycles. At the minimum duty cycle, a node remains in sleep mode most of the time and sleep power dominates the breakdown; its share decreases as the duty cycle increases. Telos motes take more power than Mica2 motes for transmission and reception, but consume less power in the processing states. The active, idle, transmission and reception shares of the power breakdown increase with the duty cycle, as presented in Table 2, with the idle share increasing the most.
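The relation between duty cycle, average power and lifetime discussed above can be sketched with a two-state approximation. This is not the five-state semi-Markov model of [49] and does not reproduce the values in Tables 1 and 2; the battery capacity and the active and sleep power figures are hypothetical and do not describe the Mica2 or Telos motes.

```python
def average_power(duty_cycle, p_active_w, p_sleep_w):
    """Time-weighted mean power of a node that is active for a fraction duty_cycle."""
    return duty_cycle * p_active_w + (1.0 - duty_cycle) * p_sleep_w

def lifetime_days(battery_j, avg_power_w):
    """Lifetime until the battery energy is exhausted, in days."""
    return battery_j / avg_power_w / 86400.0

BATTERY_J = 3.0 * 2.5 * 3600.0   # hypothetical 3 V, 2.5 Ah supply (27 000 J)
P_ACTIVE_W = 0.060               # hypothetical active power
P_SLEEP_W = 0.00003              # hypothetical sleep power

lifetimes = {
    d: lifetime_days(BATTERY_J, average_power(d, P_ACTIVE_W, P_SLEEP_W))
    for d in (0.01, 0.1, 0.9)    # end points match the duty cycle range studied
}
for duty, days in lifetimes.items():
    print(f"duty cycle {duty}: about {days:.0f} days")
```

Even in this crude form the lifetime falls steeply as the duty cycle rises, and at low duty cycles the sleep power becomes a visible share of the total, in line with the trend described for Table 2.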

Apart from this, it is observed that stochastic models become more complex to solve as the number of power states in the model increases. Table 3 lists the modelling requirements of stochastic modelling techniques for dynamic power management. In the state of the art, the event arrival, service time and transition time distributions are taken to be normal (Gaussian), uniform, exponential, Poisson, Pareto or Erlang distributions. The table also gives the number of states in the model and the processor used in many earlier stochastic models, which can help in quickly selecting a model for a particular application.

Observations and challenges

The state-of-the-art survey in this paper identifies the key points, challenges and gaps in stochastic modelling for dynamic power management. Based on this in-depth survey, the following observations and challenges indicate directions for future research.

- The continuous-time (event-driven) model does not need a synchronization pulse at each time interval, unlike the discrete-time system model.
- Mission-critical applications require both an immediate-service (high-priority) queue and a regular-service (lower-priority) queue.
- Stochastic modelling outperforms other dynamic power management techniques, but the model becomes more complex to solve as the number of parameters increases.
- The Pareto distribution is better suited to modelling event arrivals when idle periods are long [10].
- Stochastic modelling for dynamic power management, combined with dynamic voltage and frequency scaling, is expected to be an effective way of reducing power consumption in wireless sensor networks.
- Parameters such as the delay in servicing an event, the number of waiting tasks, clock frequency, power manager delay, state transition time, detection probability and task execution deadline should be considered as performance measures.
- Stochastic modelling for non-stationary service requests needs improvement and is a direction for future research.
- The knowledge gained from the literature review is useful in developing dynamic power management techniques and algorithms for power-hungry applications, e.g. continuous monitoring and the detection of critical and emergency events.
- The cost of the power manager needs to be evaluated in order to measure the actual power consumption of the complete system.
- Components other than the processor should also support voltage and frequency scaling, which would give more flexibility in modelling.

Conclusion

After an in-depth survey of the power management techniques of the last two decades, this paper gives a brief review of papers that can help in selecting a power management policy for wireless sensor networks. Stochastic schemes based on the Markov decision model help to reduce power consumption in wireless sensor networks and thus increase sensor node lifetime. Dynamic power management policies can be implemented and executed at the operating system or software level, so the energy efficiency of a sensor node can be increased without any dedicated power management hardware. This paper provides a brief study of Markov models. Work on specific stochastic modelling techniques for power and performance trade-offs in wireless sensor networks will be investigated and reported in our future work. The need for power management points towards a quest for simpler, energy-efficient, optimized and flexible modelling techniques that themselves consume less power and need less memory, in order to meet the requirements of the wireless sensor network environment.

References

Valera M, Velastin SA (2005) Intelligent distributed surveillance systems: a review. In: IEE Proceedings of Vision, Image, and Signal Processing, Institution of Engineering and Technology (IET). 152(2):192–204, doi:10.1049/ip-vis:20041147

Wang Z, Li H, Shen X, Sun X, Wang Z (2008) Tracking and Predicting Moving Targets in Hierarchical Sensor Networks. In: IEEE International Conference on Networking, Sensing and Control. Sanya, IEEE, 1169-1173. Available: http://dx.doi.org/10.1109/icnsc.2008.4525393

Li X, Shu W, Li M, Huang HY, Luo PE, Wu MY (2009) Performance evaluation of vehicle-based mobile sensor networks for traffic monitoring. IEEE Trans Vehicle Tech 58:1647–1653

Song AJ, Lv JT (2014) Remote Medical Monitoring Based on Wireless Sensor Network. Applied Mechanics and Materials. Trans Tech Publ 556–562:3327–30

Otis B, Rabaey J (2007) Wireless Sensor Networks. Ultra-Low Power Wireless Technologies for Sensor Networks. Springer, US, 10.1007/978-0-387-49313-8_1

Chandrakasan A, Sinha A (2004) Dynamic Power Management in Sensor Networks. Handbook of Sensor Networks. CRC Press LLC, 2000 N.W. Corporate Blvd., Boca Raton, Florida 28 Jul 2004. doi:10.1201/9780203489635.sec7

Fallahi A, Hossain E (2007) QoS provisioning in wireless video sensor networks: a dynamic power management framework. IEEE Wirel Commun 14(6):40–9

Power-Aware Wireless Sensor Networks (2003) Wireless Sensor Network Designs. John Wiley & Sons, Ltd, pp 63–100. doi:10.1002/0470867388.ch3

Simunic T, Benini L, De Micheli G (2001) Energy-efficient design of battery-powered embedded systems. IEEE Transactions on Very Large Scale Integration (VLSI) Systems 9(1):15–28

Wang X (2007) Dynamic power optimization with target prediction for measurement in wireless sensor networks. Chinese J Mechanical Engineer 43(08):26

Qiu Q, Pedram M (1999) Dynamic power management based on continuous-time Markov decision processes. In: Proceedings of the 36th ACM/IEEE conference on Design automation conference–DAC, ACM Press, New Orleans, Louisiana, United States

Ren Z, Krogh BH, Marculescu R (2005) Hierarchical Adaptive Dynamic Power Management. IEEE Trans Comput 54(4):409–20

Simunic T, Benini L, Acquaviva A, Glynn P, De Micheli G (2001) Dynamic voltage scaling and power management for portable systems. In: Proceedings of the 38th annual Design Automation Conference. ACM, New York, NY, USA, pp 524–529

Estrin D, Heidemann J, Xu Y (2001) Geography-informed energy conservation for Ad-hoc routing. In: Proceedings of the ACM/IEEE International Conference on Mobile Computing and Networking, ACM, New York, USA, pp 70–84, Jul 2001

Zamora NH, Kao J-C, Marculescu R (2007) Distributed Power-Management Techniques for Wireless Network Video Systems. In: Design, Automation & Test in Europe Conference & Exhibition, Apr 2007. Nice Acropolis, France, IEEE. Available from: http://dx.doi.org/10.1109/date.2007.364653

Srivastava MB, Chandrakasan AP, Brodersen RW (1996) Predictive system shutdown and other architectural techniques for energy efficient programmable computation. IEEE Transactions on Very Large Scale Integration (VLSI) Systems 4:42–55

Hwang C-H, Wu AC-H (1997) A predictive system shutdown method for energy saving of event-driven computation. In: Proceedings of IEEE/ACM international conference on Computer-aided design, Digest of Technical Papers (ICCAD ‘97). IEEE Computer Society Press, Washington, Brussels, Tokyo, pp 28–32, Nov 1997

Shen Y, Li X (2008) Wavelet Neural Network Approach for Dynamic Power Management in Wireless Sensor Networks. In: International Conference on Embedded Software and Systems. 29-31 Jul 2008, Sichuan, IEEE, 376-381. doi:10.1109/icess.2008.36

Wu K, Liu Y, Zhang H, Qian D (2010) Adaptive power management with fine-grained delay constraints. In: 3rd International Conference on Computer Science and Information Technology 9-11 Jul 2010, Chengdu, IEEE, 2:633-637. doi:10.1109/iccsit.2010.5565140

Chung EY, Benini L, Bogliolo A, Lu Y-H, Micheli GD (2002) Dynamic power management for nonstationary service requests. IEEE Trans Comput 51(11):1345–1361

Benini L, Bogliolo A, Paleologo GA, Micheli GD (1999) Policy optimization for dynamic power management. IEEE Transactions Computer-Aided Design Integrated Circuits and Systems 18(6):813–833

Kobbane A, Koulali MA, Tembine H, Koutbi ME, Benothman J (2012) Dynamic power control with energy constraint for multimedia wireless sensor networks, IEEE ICC 2012 - Ad-hoc and Sensor networking Symposium. 518-522, 10-15 Jun 2012, Ottawa, Canada, IEEE, doi:10.1109/ICC.2012.6363971

Wang Y, Vuran MC, Goddard S (2010) Stochastic Analysis of Energy Consumption in Wireless Sensor Networks. In: Proceedings of 7th Annual IEEE Communications Society Conference on Sensor Mesh and Ad Hoc Communications and Networks (SECON), pp 1–9 Boston, MA, 21-25 Jun 2010, IEEE, doi:10.1109/SECON.2010.5508259

Simunic T, Benini L, Glynn P, Micheli GD (2000) Dynamic power management for portable systems. In: Proceedings of 6th International Conference on Mobile Computing and Networking, pp 11-19, Boston, MA, ACM, 1 Aug 2000.

Mei J, Li K, Hu J, Yin S, Sha EH-M (2013) Energy-aware pre-emptive scheduling algorithm for sporadic tasks on DVS platform. Microprocessors and Microsystems. Elsevier BV 37(1):99–112

Lin C, He Y, Xiong N (2006) An Energy-Efficient Dynamic Power Management in Wireless Sensor Networks. In: Fifth International Symposium on Parallel and Distributed Computing, Jul 2006. pp. 148–154, Timisoara, Romania, IEEE doi:10.1109/ispdc.2006.8

Sinha A, Chandrakasan A (2001) Dynamic power management in wireless sensor networks. IEEE Des Test 18(2):62–74

Zhang D, Miao Z, Wang X (2013) Saturated Power Control Scheme for Kalman Filtering via Wireless Sensor Networks. WSN Sci Res Publ Inc 05(10):203–207

Isci C, Martonosi M (2003) Runtime power monitoring in high-end processors: Methodology and empirical data. In: Proceedings of the 36th annual IEEE/ACM International Symposium on Microarchitecture MICRO 36. IEEE Computer Society Pub, Washington, DC, USA, p 93

Zhao Y, Wu J (2010) Stochastic sleep scheduling for large scale wireless sensor networks. In: IEEE International Conference on Communications, ICC 2010, Cape Town, 23–27 May 2010, pp 1-5, IEEE, doi:10.1109/ICC.2010.5502306

Haq RU, Sulaiman N, Alam M (2013) A Survey of Stochastic processes in Wireless Sensor Network: a Power Management Prospective. In: Proceedings of 3rd International Conference on Software Engineering & Computer Systems (ICSECS - 2013), Universiti Malaysia Pahang. Available from: http://umpir.ump.edu.my/5011/

Sausen PS, Spohn MA, Perkusich A (2008) Broadcast routing in Wireless Sensor Networks with Dynamic Power Management. IEEE Symposium on Computers and Communications, 6-9 July 2008, pp 1090-1095, Marrakech, IEEE doi:10.1109/iscc.2008.4625620

Li G-J, Zhou X-N, Li J, Zhu J-H (2013) Distributed Targets Tracking with Dynamic Power Optimization for Wireless Sensor Networks. Sensor Letters. Am Sci Publ 11(5):907–910

Passos RM, Coelho CJN, Loureiro AAF, Mini RAF (2005) Dynamic Power Management in Wireless Sensor Networks: An Application-Driven Approach. Second Annual Conference on Wireless On-demand Network Systems and Services. pp 109-118, 19-21 Jan 2005, IEEE doi:10.1109/wons.2005.13

Halawani Y, Mohammad B, Al-Qutayri M, Saleh H (2013) Efficient power management in wireless sensor networks. IEEE 20th International Conference on Electronics, Circuits, and Systems (ICECS), Dec 2013. 8-11 Dec. 2013, pp 72-73, Abu Dhabi, IEEE, doi:10.1109/icecs.2013.6815350

Lee DH, Yang WS (2013) The N-policy of a discrete time Geo/G/1 queue with disasters and its application to wireless sensor networks. Appl Mathematical Modelling Elsevier BV 37(23):9722–9731

Zhang Y (2011) An Energy-Based Stochastic Model for Wireless Sensor Networks. WSN Sci Res Publ Inc 03(09):322–328

Benini L, Bogliolo A, Micheli GD (2000) A survey of design techniques for system-level dynamic power management. IEEE Trans Very Large Scale Integr Syst 8(3):299–316

Perl E, Catháin AÓ, Carbajo RS, Huggard M, Mc Goldrick C (2008) PowerTOSSIM z. In: Proceedings of the 3rd ACM workshop on Performance monitoring and measurement of heterogeneous wireless and wired networks - PM2HW2N, ACM Press, pp 35-42, ACM New York, NY, USA. doi:10.1145/1454630.1454636

Mora-Merchan JM, Larios DF, Barbancho J, Molina FJ, Sevillano JL, León C (2013) mTOSSIM: A simulator that estimates battery lifetime in wireless sensor networks, Simulation Modelling Practice and Theory. Elsevier BV 31:39–51

Liu H, Chandra A, Srivastava J (2006) eSENSE: energy efficient stochastic sensing framework for wireless sensor platforms. In: 5th International Conference on Information Processing in Sensor Networks. pp 235-242, ACM New York, NY, USA, doi:10.1109/ipsn.2006.243752

Heyman DP (1975) Queueing Systems, Volume 1: Theory by Leonard Kleinrock John Wiley & Sons, Inc. New York Wiley-Blackwell 6(2):189–190

Qiu Q, Qu Q, Pedram M (2001) Stochastic modeling of a power-managed system-construction and optimization. IEEE Transactions Comput-Aided Design Integrated Circuits Syst 20(10):1200–1217

Tan Y, Qiu Q (2008) A framework of stochastic power management using hidden Markov model. In: Proceedings of the conference on Design, automation and test in Europe-DATE’08, ACM Press, pp 92-97, ACM New York, NY, USA, doi:10.1145/1403375.1403402

Chen Y, Xia F, Shang D, Yakovlev A (2008) Fine grain stochastic modelling and analysis of low power portable devices with dynamic power management. UK Performance Evaluation Workshop (UKPEW) Aug 2008, Imperial College London, DTR08-09, pp 226-236, http://ukpew.org/2008/papers/dynamic-power-management

Chen Y, Xia F, Shang D, Yakovlev A (2009) Fine-grain stochastic modelling of dynamic power management policies and analysis of their power–latency trade-offs. IET Software. Inst Engineer Technol (IET) 3(6):458–469

Guenter B, Jain N, Williams C (2011) Managing cost, performance, and reliability tradeoffs for energy-aware server provisioning. In: Proceedings of IEEE INFOCOM. 10-15 Apr 2011, pp 1332-1340, Shanghai, IEEE. doi:10.1109/infcom.2011.5934917

Ihler A, Hutchins J, Smyth P (2006) Adaptive event detection with time-varying Poisson processes. In: Proceedings of the 12th ACM SIGKDD International conference on knowledge discovery and data mining, ACM New York, NY, USA, pp 207–216

Jung D, Teixeira T, Savvides A (2009) Sensor node lifetime analysis. TOSN. Assoc Comput Machinery (ACM) 5(1):1–33. doi:10.1145/1464420.1464423

Munir A, Gordon-Ross A (2012) An MDP-Based Dynamic Optimization Methodology for Wireless Sensor Networks. IEEE Transac Parallel Distributed Systems 23(4):616–625

Kang H, Li X, Moran PJ (2007) Power-Aware Markov Chain Based Tracking Approach for Wireless Sensor Networks. In: Proceedings of the IEEE Wireless Communications and Networking Conference. Hong Kong, 11–15 Mar 2007, pp 4209–4214. doi:10.1109/wcnc.2007.769

Xu D, Wang K (2014) Stochastic Modeling and Analysis with Energy Optimization for Wireless Sensor Networks. International Journal of Distributed Sensor Networks. Hindawi Publishing Corporation 2014:1–5

Norman G, Parker D, Kwiatkowska M, Shukla S, Gupta R (2005) Using probabilistic model checking for dynamic power management. Formal Aspects of Computing. Springer Sci Business Media 17(2):160–176. doi:10.1007/s00165-005-0062-0

Jung D, Teixeira T, Barton-Sweeney A, Savvides A (2007) Model-Based Design Exploration of Wireless Sensor Node Lifetimes. In: Proceedings of the European Conference on Wireless Sensor Networks (EWSN), Springer-Verlag, Berlin Heidelberg, Aug 2006. pp 277–292. doi:10.1007/978-3-540-69830-2_18

Simunic T, Benini L, Glynn P, De Micheli G (2001) Event-driven power management. IEEE Transactions Comput-Aided Design Integrated Circ Systems 20(7):840–857

Acknowledgement

The authors would like to thank Gautam Buddha University for providing workplace, support and resources.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

Both authors, AP and VS, contributed equally to designing and drafting the manuscript. Both authors have read and approved the final manuscript.

Anuradha Pughat and Vidushi Sharma contributed equally.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0), which permits use, duplication, adaptation, distribution, and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Pughat, A., Sharma, V. A review on stochastic approach for dynamic power management in wireless sensor networks. Hum. Cent. Comput. Inf. Sci. 5, 4 (2015). https://doi.org/10.1186/s13673-015-0021-6
