Abstract

In this paper, we consider an inverse source problem for the time-space-fractional diffusion equation. We prove that the problem is severely ill-posed in the sense of Hadamard. We then apply the quasi-reversibility regularization method to solve the problem (1.1). After that, we give error estimates between the sought solution and the regularized solution under an a priori parameter choice rule and an a posteriori parameter choice rule, respectively. Finally, we present a numerical example showing that the proposed method works well.

1 Introduction

Let T be a given positive number, Ω a bounded domain in \(\mathbb{R}^{n}\) (\(n \ge1\)) with a smooth boundary ∂Ω. In this work, we consider the inverse source problem of the time-fractional diffusion equation as follows:

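where \(D_{t}^{\beta}u(x,t)\) is the Caputo fractional derivative of order β (\(0 < \beta< 1\)), defined as in [1] in the following form:

$$\begin{aligned} D_{t}^{\beta}u(x,t) = \frac{1}{\Gamma(1-\beta)} \int_{0}^{t} (t-s)^{-\beta} \frac{\partial u}{\partial s}(x,s) \,ds, \end{aligned}$$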

where \(\Gamma(\cdot)\) is the Gamma function. In practice, the exact data \((g,\ell,\varphi)\) are replaced by noisy observation data \((g^{\varepsilon}, \ell^{\varepsilon}, \varphi^{\varepsilon} )\), where ε denotes the noise level. We have

Here the functions \(g(x)\), \(\ell(x)\), and \(\varphi(t)\) are given data. It is well known that, even if ε is small, the sought solution \(f(x)\) may have a large error; that is, the inverse source problem mentioned above is ill-posed. The general definition of an ill-posed problem was introduced in [2]. Therefore, regularization is needed.

As is well known, over the last few decades fractional calculus has had a great influence on mathematical theory and on its application to modeling real problems. It has many applications in mechanics, physics, engineering science, etc. We refer the reader to the published work on these issues, such as [3–21] and the references cited therein. This makes the model attractive to study. The space source term problem for time-fractional partial differential equations has attracted a lot of attention, and many aspects of this problem have been studied, specifically as follows.

In 2009, Cheng, Yamamoto et al. considered the problem (1.1) with \(\varphi(t)f(x) = 0\), the operator \(\mathcal{L}= \frac{\partial }{\partial x} (p(x) \frac{\partial u}{\partial x} )\) and the homogeneous Neumann boundary condition; see [22].

In 2016, the homogeneous problem, i.e., \(\varphi(t)f(x) = 0\) in Eq. (1.1), was considered by Dou and Hon; see [23]. They used the Tikhonov regularization method, based on a kernel-based approximation technique, to solve the problem (1.1).

In 2019, the authors Yan, Xiong and Wei proposed a conjugate gradient algorithm to solve the Tikhonov regularization problem for the case \(\gamma=1\).

In the case \(f(x) =1\), in 2014, Fan Yang and his group considered the Fourier transform and the quasi-reversibility regularization method; see [24]. Recently, the simple source problem, i.e., \(\varphi(t) = 1\) and \(\gamma=1\) in Eq. (1.1), has been considered by Fan Yang, Zhang and Li; see [20, 21, 25–27]. The authors used the Landweber iterative regularization, truncation regularization, and Tikhonov regularization methods to solve this problem and obtained convergence rates of order \(\epsilon^{\frac {p}{p+1}}\) for \(0< p<2\) and \(\epsilon^{\frac{1}{2}}\) for \(p>2\), respectively.

The problem (1.1) with discrete random noise has been studied by Tuan et al., who used filter regularization and trigonometric methods to solve it; see [28–30]. To the best of our knowledge, results on applying the quasi-reversibility regularization method to the inverse source problem for the time-space-fractional diffusion equation are still limited, and this is one of the first results for this type of problem. In particular, we address the case where \(\mathcal{L}^{\gamma}\) and the right-hand side \(\varphi(t)f(x)\) are represented in a general form. Motivated by all the above reasons, we use the quasi-reversibility regularization method (QR method) to solve the problem (1.1).

The outline of the paper is as follows. In Sect. 2, we present some basic concepts, the function setting, the definitions, and the ill-posedness of the problem. In Sect. 3, we construct the regularized problem (in Sect. 3.1) and establish the convergence rate between the sought solution and the regularized solution under an a priori parameter choice rule (in Sect. 3.2) and an a posteriori parameter choice rule (in Sect. 3.3). A numerical example is presented in Sect. 4.

2 Preliminary results

The eigenvalues of the operator \(\mathcal{L}^{\gamma}\) are introduced in [31]. Let us recall that the spectral problem

admits a family of eigenvalues

Defining

where \(\langle\cdot,\cdot\rangle\) is the inner product in \(L^{2}(\Omega)\), then \(\mathcal{D}^{\zeta}(\Omega)\) is a Hilbert space equipped with the norm

Definition 2.1

(see [1])

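The Mittag-Leffler function is

$$\begin{aligned} E_{\beta,\gamma}(z) = \sum_{k=0}^{\infty} \frac{z^{k}}{\Gamma(\beta k + \gamma)}, \quad z \in\mathbb{C}, \end{aligned}$$

where \(\beta> 0\) and \(\gamma\in\mathbb{R}\) are arbitrary constants.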
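For readers who want to experiment with the lemmas below, the following is a minimal sketch of how \(E_{\beta,\gamma}(z)\) can be evaluated from its standard power series \(\sum_{k} z^{k}/\Gamma(\beta k+\gamma)\). The function name `mittag_leffler` and the truncation parameters are illustrative assumptions, not part of the paper; the sketch assumes \(\beta>0\), \(\gamma>0\) and moderate \(|z|\), since the plain series loses accuracy through cancellation for large negative arguments.

```python
import math


def mittag_leffler(z, beta, gamma=1.0, max_terms=200):
    """Truncated power series for E_{beta,gamma}(z) = sum_k z^k / Gamma(beta*k + gamma).

    Assumes beta > 0 and gamma > 0. Reliable only for moderate |z|
    (roughly |z| <= 5); the sum is cut after max_terms terms.
    """
    total = 0.0
    for k in range(max_terms):
        arg = beta * k + gamma
        if arg > 170.0:  # math.gamma overflows a double beyond about 171
            break
        total += z ** k / math.gamma(arg)
    return total


if __name__ == "__main__":
    # Sanity checks against closed forms: E_{1,1}(z) = exp(z), E_{2,1}(-z^2) = cos(z).
    print(mittag_leffler(1.0, 1.0), math.e)
    print(mittag_leffler(-1.0, 2.0), math.cos(1.0))
```

For \(\beta=\frac{1}{2}\) the identity \(E_{1/2,1}(-z) = e^{z^{2}}\operatorname{erfc}(z)\) gives an independent check and is a more stable way to evaluate the function at larger arguments.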

Lemma 2.2

(see [1])

Let \(0 < \beta_{0} < \beta_{1} < 1\). Then there exist positive constants \(\mathcal{A}\), \(\mathcal{B}\), \(\mathcal{C}\) depending only on \(\beta_{0}\), \(\beta_{1}\) such that, for all \(\beta\in[\beta _{0}, \beta_{1}] \),

Lemma 2.3

([32])

The following equality holds for \(\lambda> 0\), \(\alpha> 0\) and \(m \in\mathbb{N}\):

Lemma 2.4

([33])

For \(0 < \beta< 1\), \(\omega> 0\), we get \(0 \leq E_{\beta,1}(-\omega) < 1\). Moreover, \(E_{\beta,1}(-\omega)\) is completely monotonic, that is,

Lemma 2.5

Let \(\beta> 0\), \(\gamma\in \mathbb {R}\), then we get

Lemma 2.6

For \(\lambda_{k} > 0\), \(\beta> 0\), and positive integer \(k \in \mathbb {N}\), we have

Lemma 2.7

For any \(\lambda_{k}^{\gamma}\) satisfying \(\lambda_{k}^{\gamma} \ge {\lambda_{1}^{\gamma}} > 0\), there exist positive constants \(\mathcal{A}\), \(\mathcal{B}\) such that

where \(\mathcal{A}_{**} =1 - E_{\beta,1}(-\lambda_{1}^{\gamma }T^{\beta }) \).

Proof

By [33], it is easy to get the above conclusion. □

Lemma 2.8

([32])

Let \(E_{\beta,\beta} (-\eta) \ge0\), \(0 < \beta< 1 \), we have

Lemma 2.9

Assume that \(\varphi_{0} \le|\varphi_{\varepsilon}(t)| \le\varphi _{1}\) for all \(t \in[0,T]\). Then, by choosing \(\varepsilon\in (0, \frac{\varphi_{0}}{4} )\), we have

Proof

First of all, we have

From (2.11), we know that

Denoting \(\mathcal{P}(\varphi_{0},\varphi_{1}) = \varphi_{1} + \frac {\varphi _{0}}{4}\), combining with (2.12) leads to (2.10). □

Lemma 2.10

Let \(\varphi: [0,T] \to\mathbb{R}^{+}\) be a continuous function such that \(\frac{\varphi_{0}}{4} \le |\varphi(t) | \le\mathcal {P}(\varphi_{0},\varphi_{1})\). Then we have

and using Lemmas 2.5 and 2.6 we get

Theorem 2.11

Let \(g, f \in L^{2}(\Omega)\) and \(\varphi\in L^{\infty}(0,T)\), then there exists a unique weak solution \(u \in C([0,T];L^{2}(\Omega)) \cap C([0,T];\mathcal{D}^{\zeta}(\Omega))\) for (1.1) given by

From (2.15), letting \(t = T\), we obtain

By a simple transformation we can see that

This implies that

2.1 The ill-posedness of inverse source problem (1.1)

Theorem 2.12

The inverse source problem is not well-posed.

Proof

Denote \(\|\varphi\|_{L^{\infty}(0,T)} = \mathcal{P}(\varphi _{0},\varphi _{1})\). We define a linear operator \(\mathcal{R}:L^{2}(\Omega) \to L^{2}(\Omega) \) as follows:

the integral kernel is

Because \(k(x,\xi) = k(\xi,x)\), we can see that \(\mathcal{R}\) is a self-adjoint operator. Next, we are going to prove its compactness. Let us define \(\mathcal{R}_{M}\) as follows:

We check that \(\mathcal{R}_{M}\) is a finite rank operator. From (2.19) and (2.21) we have

This implies that

Therefore, \(\|\mathcal{R}_{M} - \mathcal{R}\|_{L^{2}(\Omega)} \to0\) in the sense of operator norm in \(L(L^{2}(\Omega);L^{2}(\Omega))\) as \(M \to\infty\). Hence \(\mathcal{R}\) is a compact operator. Next, the eigenvalues of the linear self-adjoint compact operator \(\mathcal{R}\) are

and the corresponding eigenvectors are \({\mathrm {e}}_{k}\), which form an orthonormal basis in \(L^{2}(\Omega)\). From (2.19), the inverse source problem can be formulated as an operator equation,

and by Kirsch ([2]), we conclude that the problem (1.1) is ill-posed. We present an example. Fix β and choose

Because of (2.18) and combining (2.26), the source term \(f_{m}\) is

If the input data are \(\ell= g = 0\), then the source term is \(f=0\). The error in the \(L^{2}(\Omega)\) norm between \((\ell,g)\) and \((\ell _{m},g_{m})\) is

Therefore,

The error \(\|f_{m}-f\|_{L^{2}({\Omega})}\) is

From (2.30), we obtain

From (2.31), by choosing \(\gamma> \frac{1}{2}\),

Combining (2.29) and (2.32), we conclude that the inverse source problem is not well-posed. □

2.2 Conditional stability of source term f

Theorem 2.13

Suppose that \(\|f\|_{H^{\gamma j}(\Omega)} \le\mathcal{M}_{1}\) for \(\mathcal{M}_{1}>0\), then

in which \(\overline{C} = (\frac{4}{\varphi_{0}\mathcal{A}_{**}} )^{\frac{j}{j+1}}\).

Proof

From (2.18) and the Hölder inequality, we get

Step 1: For an estimate of \((\mathbb{L}_{1})\), applying Lemma 2.7, it gives

Step 2: For an estimate of \((\mathbb{L}_{2})\), it gives

Combining (2.35) and (2.36), we conclude that

□

3 Quasi-reversibility method

In this section, the quasi-reversibility method is used to investigate problem (1.1), and we give convergence estimates under an a priori parameter choice rule and an a posteriori parameter choice rule, respectively.

3.1 Construction of a regularization method

We employ the QR method to establish a regularized problem, namely

where \(g_{\varepsilon}, \ell_{\varepsilon}\) are perturbed initial data and final data satisfying

and \(\alpha(\varepsilon)\) is a regularization parameter. We can assert that

From now on, for brevity, we denote

and

3.2 An a priori parameter choice

Next, the error \(\|f(\cdot)-f_{\varepsilon,\alpha(\varepsilon )}(\cdot ) \|_{L^{2}(\Omega)}\) is estimated under a suitable choice of the regularization parameter. To do this, we need the following lemma.

Lemma 3.1

Let α, γ, \(\lambda_{1}\) be positive constants, and let the function \(\mathcal{G}\) be given by

then

Proof

(1) If \(j \geq1 \) then from \(s \ge\lambda_{1}\), we get

(2) If \(0 < j < 1 \) then it can be seen that \(\lim_{s \to0} \mathcal{G}(s) = \lim_{s \to+ \infty}\mathcal {G}(s) = 0\). We have \(\mathcal{G}^{\prime}(s) = \frac{\alpha\gamma s^{\gamma-\gamma j-1} [ 1 - j - \alpha s^{\gamma} j ]}{ (1+ \alpha s^{\gamma } )^{2}} \). Solving \(\mathcal{G}^{\prime}(s) = 0\), we can see that \(s_{0} = (\frac{1-j}{j} )^{\frac{1}{\gamma}} \alpha^{-\frac{1}{\gamma }}\). Therefore,

This is precisely the assertion of the lemma. □
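The bound in Lemma 3.1 can be checked numerically. The sketch below assumes \(\mathcal{G}(s) = \frac{\alpha s^{\gamma(1-j)}}{1+\alpha s^{\gamma}}\) for \(s \ge\lambda_{1}\); this explicit form is not displayed in this copy of the text and is inferred from the derivative \(\mathcal{G}^{\prime}(s)\) used in the proof. Under that assumption the maximum is \(j^{j}(1-j)^{1-j}\alpha^{j}\) for \(0<j<1\) (attained at \(s_{0}\)) and at most \(\alpha\lambda_{1}^{\gamma(1-j)}\) for \(j\ge1\). All function names are hypothetical.

```python
def G(s, alpha, gamma, j):
    """Assumed form of the function in Lemma 3.1 (inferred from G'(s) in the proof)."""
    return alpha * s ** (gamma * (1.0 - j)) / (1.0 + alpha * s ** gamma)


def lemma_bound(alpha, gamma, j, lam1):
    """Upper bound on G over s >= lam1 suggested by the two cases of the proof."""
    if j >= 1.0:
        # s^(gamma*(1-j)) is non-increasing, so it is largest at s = lam1
        return alpha * lam1 ** (gamma * (1.0 - j))
    # value of G at the interior maximiser s0 = ((1-j)/j)^(1/gamma) * alpha^(-1/gamma)
    return j ** j * (1.0 - j) ** (1.0 - j) * alpha ** j


def max_on_grid(alpha, gamma, j, lam1, n=20000, s_max=1e6):
    """Largest value of G on a geometric grid of [lam1, s_max]."""
    ratio = (s_max / lam1) ** (1.0 / (n - 1))
    return max(G(lam1 * ratio ** i, alpha, gamma, j) for i in range(n))


if __name__ == "__main__":
    for j in (0.5, 2.0):
        print(j, max_on_grid(0.01, 0.5, j, 1.0), lemma_bound(0.01, 0.5, j, 1.0))
```

With \(\alpha=0.01\), \(\gamma=0.5\), \(j=0.5\) the maximiser is \(s_{0}=10^{4}\), which lies inside the sampled interval, so the grid maximum should come close to the bound without exceeding it.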

Theorem 3.2

Let f be as in (2.18) and let the noise assumption (2.13) hold. Then we obtain the following two cases.

- If \(0 < j < 1\), by choosing \(\alpha(\varepsilon) = (\frac {\varepsilon}{\mathcal{M}_{1}} )^{\frac{1}{j+1}}\), we have

$$\begin{aligned} \bigl\Vert f(\cdot) - f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \leq\varepsilon^{\frac{j}{j+1}} \bigl(\mathcal {H} ( \mathcal{B},\gamma, \lambda_{1},\varphi_{0},f ) + j^{j} (1-j)^{1-j} \mathcal{M}_{1}^{\frac{j}{j+1}} \bigr). \end{aligned}$$ (3.8)

- If \(j \geq1\), by choosing \(\alpha(\varepsilon) = (\frac {\varepsilon}{\mathcal{M}_{1}} )^{\frac{1}{2}}\), we have

$$\begin{aligned} \bigl\Vert f(\cdot) - f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \leq\varepsilon^{\frac{1}{2}} \biggl(\mathcal {H} ( \mathcal{B},\gamma, \lambda_{1},\varphi_{0},f ) + \frac {1}{\lambda _{1}^{\gamma(j-1)}} \mathcal{M}_{1}^{\frac{1}{2}} \biggr). \end{aligned}$$ (3.9)

Proof

By the triangle inequality, we know

The proof falls naturally into two steps.

Step 1: For the estimation of \(\|f_{\alpha(\varepsilon)}(\cdot) -f_{\varepsilon, \alpha(\varepsilon)}(\cdot) \|_{L^{2}(\Omega)} \), we have

From (3.11), using Lemma 2.10, we have an estimate of \(\|\widetilde{E}_{1}\|_{L^{2}(\Omega)}\):

Next, using the condition of f in Theorem 2.13, we have the estimate for \(\|\widetilde{E}_{2}\|_{L^{2}(\Omega)}\) as follows:

Combining (3.12) and (3.13), one has

Step 2: For the estimation of \(\|f(\cdot) -f_{ \alpha (\varepsilon)}(\cdot) \|_{L^{2}(\Omega)} \), we have

From (3.15), we get

Combining with Lemma 3.1, it gives

Therefore, combining (3.16) and (3.17), we can find that

Choose the regularization parameter as follows:

Finally, from (3.14), (3.18) and (3.19), we conclude the following.

- If \(0 < j < 1\) then

$$\begin{aligned} \bigl\Vert f(\cdot) - f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \leq\varepsilon^{\frac{j}{j+1}} \bigl(\mathcal {H} ( \mathcal{B},\gamma, \lambda_{1},\varphi_{0},f ){ \mathcal {M}_{1}^{\frac {1}{1+j}}} + j^{j} (1-j)^{1-j} \mathcal{M}_{1}^{\frac{j}{j+1}} \bigr). \end{aligned}$$ (3.20)

- If \(j \geq1\) then

$$\begin{aligned} \bigl\Vert f(\cdot) - f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \leq\varepsilon^{\frac{1}{2}} \biggl(\mathcal {H} ( \mathcal{B},\gamma, \lambda_{1},\varphi_{0},f ) + \frac {1}{\lambda _{1}^{\gamma(j-1)}} \mathcal{M}_{1}^{\frac{1}{2}} \biggr), \end{aligned}$$ (3.21)

where \(\mathcal{H} (\mathcal{B},\gamma, \lambda_{1},\varphi _{0},f ) = ( \frac{2 |\max \{1,\frac{\mathcal{B}}{\lambda _{1}^{\gamma}T^{\beta} } \} | T^{\beta} }{\varphi_{0} [1 - E_{\beta,1}(-\lambda_{1}^{\gamma}T^{\beta}) ]} + \frac{\|f\| _{L^{2}(\Omega)}}{\varphi_{0}\lambda_{1}^{\gamma}} )\). □

3.3 An a posteriori parameter choice

In this subsection, an a posteriori regularization parameter choice rule is considered. By the Morozov discrepancy principle, we find \(\alpha(\varepsilon)\) such that

see [2], where \(\zeta> 1\) is a constant. There exists a unique solution of (3.22) if \([ \langle\ell_{\varepsilon}(x),\mathrm{e}_{k}(x) \rangle - \langle g_{\varepsilon }(x),\mathrm{e}_{k}(x) \rangle E_{\beta,1}(-\lambda_{k}^{\gamma }T^{\beta }) ] > \zeta\varepsilon\).

Lemma 3.3

The following auxiliary properties hold.

- (a) \(\mathcal{R}(\alpha(\varepsilon))\) is a continuous function.

- (b) \(\lim_{\alpha(\varepsilon) \to0^{+}}\mathcal{R}(\alpha (\varepsilon)) = 0\).

- (c) \(\lim_{\alpha(\varepsilon) \to+\infty}\mathcal {R}(\alpha (\varepsilon)) = \| \langle\ell_{\varepsilon}(x),\mathrm {e}_{k}(x) \rangle - \langle g_{\varepsilon}(x),\mathrm{e}_{k}(x) \rangle E_{\beta ,1}(-\lambda _{k}^{\gamma}T^{\beta}) \|_{L^{2}(\Omega)}\).

- (d) \(\mathcal{R}(\alpha(\varepsilon))\) is a strictly increasing function.

Proof

Our proof starts with the observation that

For brevity, we put

- From (3.23), we have

$$\begin{aligned} \bigl[\mathcal{R}\bigl(\alpha(\varepsilon)\bigr) \bigr]^{2} = \sum_{k=1}^{\infty} \biggl(\frac{\alpha(\varepsilon) \lambda_{k}^{\gamma}}{1 + \alpha (\varepsilon) \lambda_{k}^{\gamma} } \biggr)^{4} \bigl\vert \langle\chi_{\varepsilon} ,\mathrm {e}_{k} \rangle \bigr\vert ^{2} . \end{aligned}$$ (3.24)

This directly yields the continuity of \(\mathcal{R}(\alpha(\varepsilon))\) for all \(\alpha(\varepsilon) > 0\).

- Let θ be a positive number. From

$$\Vert \chi_{\varepsilon} \Vert _{L^{2}(\Omega)}^{2} = \sum _{k=1}^{\infty} \bigl\vert \langle \ell_{\varepsilon},\mathrm {e}_{k} \rangle - \langle g_{\varepsilon},\mathrm {e}_{k} \rangle E_{\beta,1}\bigl(-\lambda_{k}^{\gamma}T^{\beta} \bigr) \bigr\vert ^{2}, $$

there exists a positive integer \(m_{\theta}\) such that \(\sum_{k=m_{\theta}+1}^{\infty} | \langle\chi_{\varepsilon},\mathrm {e}_{k} \rangle|^{2} < \frac{\theta^{2}}{2}\). For \(0 < \alpha (\varepsilon) < \frac{\theta^{\frac{1}{2}}}{\sqrt[4]{2}} [\lambda _{m_{\theta}}^{\gamma}\|\chi_{\varepsilon} \| _{L^{2}(\Omega)}^{\frac{1}{2}} ]^{-1}\), we have

$$\begin{aligned} \bigl[\mathcal{R}\bigl(\alpha(\varepsilon)\bigr) \bigr]^{2} &\leq \sum_{k=1}^{m_{\theta}} \biggl( \frac{\alpha(\varepsilon) \lambda _{k}^{\gamma }}{1 + \alpha(\varepsilon) \lambda_{k}^{\gamma} } \biggr)^{4} \bigl\vert \langle \chi_{\varepsilon}, \mathrm{e}_{k} \rangle \bigr\vert ^{2} +\sum _{k=m_{\theta}+1}^{\infty} \bigl\vert \langle\chi_{\varepsilon},\mathrm {e}_{k} \rangle \bigr\vert ^{2} \\ &\leq\sum_{k=1}^{m_{\theta}} \bigl[\alpha( \varepsilon)\bigr]^{4} \lambda _{k}^{4\gamma} \bigl\vert \langle\chi_{\varepsilon},\mathrm{e}_{k} \rangle \bigr\vert ^{2} + \frac{\theta^{2}}{2} \leq \bigl[\alpha(\varepsilon) \bigr]^{4} \lambda ^{4\gamma}_{m_{\theta}} \Vert \chi_{\varepsilon} \Vert _{L^{2}(\Omega)}^{2} + \frac {\theta ^{2}}{2} \le \theta^{2}. \end{aligned}$$

- From (3.24), we can see that \(\mathcal{R}(\alpha(\varepsilon)) \leq \|\chi_{\varepsilon}\|_{L^{2}(\Omega)}\). Moreover, one has

$$\begin{aligned} \bigl[\mathcal{R}\bigl(\alpha(\varepsilon)\bigr) \bigr]^{2} &= \sum _{k=1}^{\infty} \frac{ \vert \langle\chi_{\varepsilon},\mathrm{e}_{k} \rangle \vert ^{2}}{ (1 + \frac{1}{\alpha(\varepsilon)\lambda_{k}^{\gamma}} )^{4}} \geq \frac{ \Vert \chi_{\varepsilon} \Vert _{L^{2}(\Omega)}^{2}}{ (1 + \frac{1}{\alpha(\varepsilon )\lambda_{1}^{\gamma}} )^{4}}. \end{aligned}$$

Hence, \(\|\chi_{\varepsilon}\|_{L^{2}(\Omega)} \geq\mathcal {R}(\alpha (\varepsilon)) \geq\frac{\|\chi_{\varepsilon}\|_{L^{2}(\Omega)}}{ (1 + \frac{1}{\alpha (\varepsilon)\lambda_{1}^{\gamma}} )^{2}}\), which implies that \(\lim_{\alpha (\varepsilon) \to+\infty} \mathcal{R}(\alpha(\varepsilon)) = \| \chi _{\varepsilon}\|_{L^{2}(\Omega)}\).

- For \(0 < \alpha_{1}(\varepsilon) < \alpha_{2}(\varepsilon)\), we get \(\frac{\alpha_{1}(\varepsilon) \lambda_{k}^{\gamma}}{1 + \alpha _{1}(\varepsilon) \lambda_{k}^{\gamma} } < \frac{\alpha _{2}(\varepsilon ) \lambda_{k}^{\gamma}}{1 + \alpha_{2}(\varepsilon) \lambda _{k}^{\gamma } }\). Since \(\|\chi_{\varepsilon}\|_{L^{2}(\Omega)}>0\), there exists a positive integer \(k_{0}\) such that \(| \langle\chi _{\varepsilon},\mathrm{e}_{k_{0}} \rangle |^{2} > 0\). Then \(\mathcal {R}(\alpha_{1}(\varepsilon)) < \mathcal{R}(\alpha_{2}(\varepsilon))\), so \(\mathcal{R}\) is a strictly increasing function. The lemma is proved.

□
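Because Lemma 3.3 states that \(\mathcal{R}\) is continuous and strictly increasing in \(\alpha(\varepsilon)\), the discrepancy equation \(\mathcal{R}(\alpha(\varepsilon)) = \zeta\varepsilon\) can be solved by any bracketing root finder. The following is a minimal sketch using bisection on a logarithmic scale; the choice of bisection, the function names, and the truncation to finitely many modes are assumptions made for illustration and are not taken from the paper. The inputs `lam` and `chi` stand for the values \(\lambda_{k}^{\gamma}\) and the coefficients \(\langle\chi_{\varepsilon},\mathrm{e}_{k}\rangle\), and the residual follows the form of (3.24).

```python
import math


def residual(alpha, lam, chi):
    """R(alpha) as in (3.24): sqrt(sum_k (alpha*lam_k / (1 + alpha*lam_k))^4 * chi_k^2)."""
    return math.sqrt(sum((alpha * l / (1.0 + alpha * l)) ** 4 * c * c
                         for l, c in zip(lam, chi)))


def discrepancy_alpha(lam, chi, target, lo=1e-12, hi=1e12, iters=200):
    """Solve residual(alpha) = target by bisection on log(alpha).

    Relies on residual being continuous and strictly increasing (Lemma 3.3)
    and needs 0 < target < ||chi|| so that a root exists in [lo, hi].
    """
    if not residual(lo, lam, chi) < target < residual(hi, lam, chi):
        raise ValueError("target is not bracketed by [lo, hi]")
    for _ in range(iters):
        mid = math.sqrt(lo * hi)  # geometric midpoint of the bracket
        if residual(mid, lam, chi) < target:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)


if __name__ == "__main__":
    # Hypothetical data: lam_k = k^(2*gamma) with gamma = 0.5, decaying coefficients.
    lam = [float(k) for k in range(1, 51)]
    chi = [1.0 / k ** 2 for k in range(1, 51)]
    print(discrepancy_alpha(lam, chi, target=1.95 * 0.02))
```

In use, `target` would be \(\zeta\varepsilon\); the existence condition in the check above mirrors the requirement that the data norm exceed \(\zeta\varepsilon\).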

Lemma 3.4

Let α, γ, \(\lambda_{1}\) be positive constants, and let the function \(\widetilde{\mathcal{H}}\) be given by

Then

Proof

(1) If \(j \geq1 \), then from \(s \ge\lambda_{1}\), we get

(2) If \(0 < j < 1 \), then it can be seen that \(\lim_{s \to0} \widetilde{\mathcal{H}}(s) = \lim_{s \to+ \infty }\widetilde{\mathcal{H}}(s) = 0\). Afterward, we have \(\widetilde{\mathcal{H}}^{\prime}(s) = \frac{ \frac{\alpha\gamma}{2} s^{\frac{\gamma}{2}-\frac{\gamma j}{2}-1} [(1-j)-\alpha (1+j)s^{\gamma} ]}{ (1+ \alpha s^{\gamma} )^{2}}\). Solving \(\widetilde{\mathcal{H}}^{\prime}(s) = 0\), we know that \(s_{0} = (\frac{1-j}{1+j} )^{\frac{1}{\gamma}} \alpha ^{-\frac {1}{\gamma}}\). Hence, we can assert that

This completes the proof. □
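As a worked check of case (2), the value at the critical point can be computed explicitly. The computation below assumes \(\widetilde{\mathcal{H}}(s) = \frac{\alpha s^{\frac{\gamma(1-j)}{2}}}{1+\alpha s^{\gamma}}\), a form that is not displayed in this copy of the text but is consistent with the derivative \(\widetilde{\mathcal{H}}^{\prime}(s)\) given in the proof. Under that assumption, with \(s_{0} = (\frac{1-j}{1+j} )^{\frac{1}{\gamma}} \alpha^{-\frac{1}{\gamma}}\),

$$\begin{aligned} \alpha s_{0}^{\gamma} &= \frac{1-j}{1+j}, \qquad 1+\alpha s_{0}^{\gamma} = \frac{2}{1+j}, \\ \widetilde{\mathcal{H}}(s_{0}) &= \frac{\alpha s_{0}^{\frac{\gamma(1-j)}{2}}}{1+\alpha s_{0}^{\gamma}} = \frac{1+j}{2} \alpha \biggl(\frac{1-j}{1+j} \biggr)^{\frac{1-j}{2}} \alpha^{-\frac{1-j}{2}} = \frac{1}{2} (1-j)^{\frac{1-j}{2}} (1+j)^{\frac{1+j}{2}} \alpha^{\frac{1+j}{2}} . \end{aligned}$$

So, for \(0 < j < 1\), the maximum scales like \(\alpha^{\frac{1+j}{2}}\), which is the power of α one would expect to enter the a posteriori estimate.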

Lemma 3.5

Let \(\alpha(\varepsilon)\) be the solution of (3.23). Then

which gives the required results.

Proof

Step 1: Using the inequality \((a+b)^{2} \leq2a^{2} + 2b^{2}\), we estimate \([ \langle\ell_{\varepsilon}(\cdot),\mathrm {e}_{k}(\cdot) \rangle - \langle g_{\varepsilon}(\cdot),\mathrm {e}_{k}(\cdot ) \rangle E_{\beta,1}(-\lambda_{k}^{\gamma}T^{\beta}) ]^{2}\) as follows:

Next, from (3.30), applying Eqs. (2.18) and (2.14), we know that

Combining (3.30) and (3.31), it gives

Step 2:

From (3.33), using Lemma 3.4, we have

Here, put

Therefore, combining (3.33) to (3.34), we know that

From (3.35), it is very easy to see that

Therefore, we conclude that

which gives the required results. □

Theorem 3.6

Assume that \(f(x)\) is defined in (2.18) and the quasi-reversibility solution \(f_{\varepsilon, \alpha(\varepsilon)}\) is given by (3.5). Suppose that \(f(x)\) satisfies the a priori bound condition (2.33) and that the condition (3.2) holds. Suppose further that there exists \(\zeta> 1\) such that \([ \langle\ell_{\varepsilon}(\cdot),\mathrm {e}_{k}(\cdot ) \rangle - \langle g_{\varepsilon}(\cdot),\mathrm{e}_{k}(\cdot) \rangle E_{\beta ,1}(-\lambda_{k}^{\gamma}T^{\beta}) ] > \zeta\varepsilon> 0\). Then we have the following.

- If \(0 < j < 1\), it gives

$$\begin{aligned} \bigl\Vert f(\cdot)-f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \textit{ is of order } \varepsilon^{\frac{j}{j+1}}. \end{aligned}$$ (3.38)

- If \(j \ge1\), it gives

$$\begin{aligned} \bigl\Vert f(\cdot)-f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \textit{ is of order } \varepsilon^{\frac{1}{2}}. \end{aligned}$$ (3.39)

Proof

Applying the triangle inequality, we get

Lemma 3.7

Assume that \(\|f\|_{H^{\gamma j}(\Omega)} \leq\mathcal{M}_{1}\). Then \(\|f(\cdot)-f_{\alpha(\varepsilon)}(\cdot)\|_{L^{2}( \Omega )}\) is estimated as follows:

Using the Hölder inequality with \(0 < j < 1\), we get

From (3.41), we have estimates through two steps.

Claim 1

From (3.22) and (3.30), we obtain the following estimate for \(\mathcal{N}_{1}\):

Claim 2

Using Lemma 2.7, we get an estimate of \(\mathcal{N}_{2}\) as follows:

Combining (3.41) to (3.43), we can conclude that

Now, we give the bound for the first term. Similar to (3.14), we recall that

- If \(0 < j < 1\) then

$$\begin{aligned} \bigl\Vert f_{\alpha(\varepsilon)}(\cdot)- f_{\varepsilon,\alpha (\varepsilon )}( \cdot) \bigr\Vert _{L^{2}(\Omega)} \leq\varepsilon^{\frac{j}{j+1}} \mathcal{M}_{1}^{\frac{1}{j+1}} \mathcal{Z}_{\gamma,\beta }( \mathcal {V}_{1},\zeta,f,\lambda_{1},j). \end{aligned}$$ (3.46)

- If \(j \geq1\) then

$$\begin{aligned} \bigl\Vert f_{\alpha(\varepsilon)}(\cdot)- f_{\varepsilon,\alpha (\varepsilon )}( \cdot) \bigr\Vert _{L^{2}(\Omega)} \leq\varepsilon^{\frac{1}{2}} \mathcal {M}_{1}^{\frac{1}{2}} \mathcal{Z}_{\gamma,\beta}(\mathcal {V}_{2},\zeta ,f,\lambda_{1},1), \end{aligned}$$ (3.47)

in which

- If \(0 < j < 1\), combining (3.44) and (3.46), then we have the following estimate:

$$\begin{aligned} \bigl\Vert f(\cdot)-f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \text{ is of order } \varepsilon^{\frac{j}{j+1}}. \end{aligned}$$ (3.49)

- If \(j \geq1\), combining (3.44) and (3.47), then we have the following estimate:

$$\begin{aligned} \bigl\Vert f(\cdot)-f_{\varepsilon,\alpha(\varepsilon)}(\cdot) \bigr\Vert _{L^{2}(\Omega)} \text{ is of order } \varepsilon^{\frac{1}{2}}. \end{aligned}$$ (3.50)

□

4 Simulation

In this example, we take \(T = 1\), \(\lambda _{k}^{\gamma} = k^{2\gamma}\), \(\mathrm{e}_{k}(x) = \sqrt{\frac {2}{\pi}} \sin(kx)\) and choose

Here, we choose the function φ as follows:

We use the fact that we have

From (4.1) and (4.2), we know that

Here, the a priori parameter choice rule is \(\alpha_{\mathrm{pri}} = (\frac {\varepsilon}{M_{1}} )^{\frac{1}{2}}\), and \(\alpha_{\mathrm{pos}}\) is chosen by the formula in Lemma 3.5 with \(j=1\) and \(\zeta=1.95\). The constant \(M_{1}\) plays the role of the a priori bound and is computed as \(\|f\| _{\mathcal {H}^{\gamma j }(0,\pi)}\). From (2.18), one has

From (3.3), the regularized solution obtained by the quasi-reversibility method is, by definition,

- Suppose that the interval \([a,b]\) is split up into n sub-intervals, with n being an even number. Then the Simpson rule is given by

$$\begin{aligned} \int_{a}^{b}\varphi(z)\, dz&\approx{ \frac{h}{3}}\sum_{j=1}^{n/2}{ \bigl[}\varphi(z_{2j-2})+4\varphi(z_{2j-1})+\varphi (z_{2j}){ \bigr]} \\ &={\frac{h}{3}} { \Biggl[} \varphi(z_{0})+2\sum _{j=1}^{n/2-1}\varphi (z_{2j})+4 \sum_{j=1}^{n/2}\varphi(z_{2j-1})+ \varphi(z_{n}){ \Biggr]}, \end{aligned}$$ (4.6)

where \(z_{j}=a+jh\) for \(j=0,1,\ldots,n-1,n \) with \(h=\frac{b-a}{n}\); in particular, \(z_{0}=a\) and \(z_{n}=b\).

- Use a finite difference method to discretize the time and spatial variables for \((x,t) \in(0,\pi) \times(0,1)\) as follows:

$$\begin{aligned} &x_{i} = (i-1) \Delta x,\qquad t_{j} = (j-1) \Delta t, \\ &1\le i \le N+1,\qquad 1\le j \le M+1,\qquad \Delta x = \frac{\pi}{N+1} , \qquad\Delta t = \frac{1}{M+1}. \end{aligned}$$

- Instead of the exact data \((\ell,g,\varphi)\), we only have approximate data \((\ell_{\varepsilon},g_{\varepsilon},\varphi_{\varepsilon})\), i.e., the input data \((\ell,g,\varphi)\) are perturbed into observation data \((\ell_{\varepsilon}, g_{\varepsilon}, \varphi _{\varepsilon} )\) with noise of order ε, which satisfy

$$\begin{aligned} \begin{gathered} \ell_{\varepsilon} = \ell+ \varepsilon\bigl(2\operatorname{rand}(\cdot)-1\bigr),\qquad g_{\varepsilon} = g + \varepsilon\bigl(2\operatorname{rand}(\cdot)-1\bigr),\\ \varphi _{\varepsilon} = \varphi+ \varepsilon\bigl(2\operatorname{rand}(\cdot)-1\bigr).\end{gathered} \end{aligned}$$

- Next, the relative error estimation is defined by

$$\begin{aligned} \mathit{Error} = \frac{ \sqrt{ \sum_{k=1}^{N+1} \vert f_{\varepsilon, \alpha (\varepsilon)}(x_{k}) - f(x_{k}) \vert _{L^{2}(\Omega)}^{2} } }{ \sqrt{ \sum_{k=1}^{N+1} \vert f(x_{k}) \vert _{L^{2}(\Omega)}^{2} } } . \end{aligned}$$ (4.7)
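The four numerical ingredients listed above can be sketched as follows. This is only an illustration under stated assumptions: the helper names are hypothetical, `rand` is taken to be a uniform random number on \([0,1)\), and the relative error is computed from plain pointwise differences on the grid as suggested by (4.7). It does not reproduce the authors' implementation or their test functions.

```python
import math
import random


def simpson(func, a, b, n):
    """Composite Simpson rule (4.6) on [a, b] with an even number n of sub-intervals."""
    if n % 2 != 0:
        raise ValueError("n must be even")
    h = (b - a) / n
    z = [a + j * h for j in range(n + 1)]
    interior_even = sum(func(z[2 * j]) for j in range(1, n // 2))
    odd = sum(func(z[2 * j - 1]) for j in range(1, n // 2 + 1))
    return h / 3.0 * (func(z[0]) + 2.0 * interior_even + 4.0 * odd + func(z[n]))


def space_grid(N):
    """Nodes x_i = (i - 1) * dx for 1 <= i <= N + 1, with dx = pi / (N + 1)."""
    dx = math.pi / (N + 1)
    return [(i - 1) * dx for i in range(1, N + 2)]


def add_noise(values, eps, rng):
    """Perturb each sample by eps * (2 * rand - 1), rand uniform on [0, 1) (assumed)."""
    return [v + eps * (2.0 * rng.random() - 1.0) for v in values]


def relative_error(approx, exact):
    """Discrete relative error in the spirit of (4.7)."""
    num = math.sqrt(sum((a - e) ** 2 for a, e in zip(approx, exact)))
    den = math.sqrt(sum(e ** 2 for e in exact))
    return num / den


if __name__ == "__main__":
    rng = random.Random(0)
    x = space_grid(100)
    exact = [math.sin(xi) + 1.0 for xi in x]  # hypothetical stand-in for f
    noisy = add_noise(exact, 0.02, rng)
    print(simpson(math.sin, 0.0, math.pi, 100), relative_error(noisy, exact))
```

Note that with \(\Delta x = \frac{\pi}{N+1}\) and \(N+1\) nodes, the last node is \(x_{N+1} = \frac{N\pi}{N+1}\), so the grid as stated stops one step short of π.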

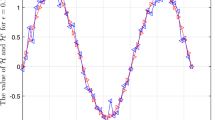

In Fig. 1, we compare the exact solution with its approximations by the quasi-reversibility method under the a priori parameter choice rule and under the a posteriori parameter choice rule. In Fig. 2, we show the sought solution, its approximation by the QR method, and the corresponding errors for \(\varepsilon= 0.2\). Similarly, Fig. 3 and Fig. 4 show the comparison in the cases \(\varepsilon = 0.02\) and \(\varepsilon= 0.0125\). In these figures, we choose the values \(\beta= 0.5\), \(\gamma=0.5\) and \(j=1\). In the tables of errors calculated in this numerical example, we present the error estimates for both the a priori and the a posteriori parameter choice rules. In Table 1, we compare the convergence rates between the sought solution and the regularized solutions. Next, in Table 2, we fix \(\varepsilon=0.034\). The first column lists \(\beta_{p+1} = \beta_{p} + 0.11\), \(p=\overline{1,8}\), with \(\beta_{1}=0.11\). Using Eq. (4.7), we show the error between the sought solution and its approximation with \(\beta=0.3\) in the second and third columns, and similarly for \(\beta=0.5\) and \(\beta=0.7\). According to the tables, we can conclude that the convergence results are appropriate. Tables 1 and 2 also show that the convergence levels of these two methods are equivalent.

5 Conclusions

In this work, we use the QR method to regularize the inverse problem of determining an unknown source term of a space-time-fractional diffusion equation. We showed that the problem (1.1) is ill-posed in the sense of Hadamard. Next, we gave convergence estimates between the regularized solution and the sought solution under an a priori and an a posteriori parameter choice rule. We illustrated our theoretical results by a numerical example. In future work, we will be interested in the case where the source function has the general form \(f(x, t) \); this is still an open problem and is expected to be more difficult.

References

Podlubny, I.: Fractional Differential Equations. Mathematics in Science and Engineering, vol. 198. Academic Press, San Diego (1999)

Kirsch, A.: An Introduction to the Mathematical Theory of Inverse Problems. Springer, Berlin (1996)

Sweilam, N.H., Al-Mekhlafi, S.M., Assiri, T., Atangana, A.: Optimal control for cancer treatment mathematical model using Atangana–Baleanu–Caputo fractional derivative. Adv. Differ. Equ. 2020(1), Article ID 334 (2020)

Atangana, A., Goufo, E.F.D.: Cauchy problems with fractal-fractional operators and applications to groundwater dynamics. Fractals (2020). https://doi.org/10.1142/s0218348x20400435

Kumar, S., Atangana, A.: A numerical study of the nonlinear fractional mathematical model of tumor cells in presence of chemotherapeutic treatment. Int. J. Biomath. 13(3), Article ID 2050021 (2020)

Abro, K.A., Atangana, A.: Mathematical analysis of memristor through fractal-fractional differential operators: a numerical study. Math. Methods Appl. Sci. 43(10), 6378–6395 (2020)

Atangana, A., Akgül, A., Owolabi, K.M.: Analysis of fractal fractional differential equations. Alex. Eng. J. 59(3), 1117–1134 (2020)

Atangana, A., Hammouch, Z.: Fractional calculus with power law: the cradle of our ancestors. Eur. Phys. J. Plus 134(9), Article ID 429 (2019)

Atangana, A., Bonyah, E.: Fractional stochastic modeling: new approach to capture more heterogeneity. Chaos 29(1), Article ID 013118 (2019)

Adigüzel, R.S., Aksoy, Ü., Karapinar, E., Erhan, İ.M.: On the solution of a boundary value problem associated with a fractional differential equation. Math. Methods Appl. Sci. (2020). https://doi.org/10.1002/mma.6652

Karapinar, E., Abdeljawad, T., Jarad, F.: Applying new fixed point theorems on fractional and ordinary differential equations. Adv. Differ. Equ. 2019, Article ID 421 (2019)

Alqahtani, B., Aydi, H., Karapınar, E., Rakocevic, V.: A solution for Volterra fractional integral equations by hybrid contractions. Mathematics 7, Article ID 694 (2019)

Abdeljawad, T., Agarwal, R.P., Karapinar, E., Kumari, P.S.: Solutions of the nonlinear integral equation and fractional differential equation using the technique of a fixed point with a numerical experiment in extended b-metric space. Symmetry 11, Article ID 686 (2019)

Karapınar, E., Fulga, A., Rashid, M., Shahid, L., Aydi, H.: Large contractions on quasi-metric spaces with an application to nonlinear fractional differential-equations. Mathematics 7, Article ID 444 (2019)

Afshari, H., Kalantari, S., Karapinar, E.: Solution of fractional differential equations via coupled fixed point. Electron. J. Differ. Equ. 2015, Article ID 286 (2015)

Tatar, S., Ulusoy, S.: An inverse source problem for a one-dimensional space-time fractional diffusion equation. Appl. Anal. 94(11), 2233–2244 (2015)

Tatar, S., Tinaztepe, R., Ulusoy, S.: Determination of an unknown source term in a space-time fractional diffusion equation. J. Fract. Calc. Appl. 6(1), 83–90 (2015)

Tatar, S., Tinaztepe, R., Ulusoy, S.: Simultaneous inversion for the exponents of the fractional time and space derivatives in the space-time fractional diffusion equation. Appl. Anal. 95(1), 1–23 (2016)

Tuan, N.H., Ngoc, T.B., Baleanu, D., O’Regan, D.: On well-posedness of the sub-diffusion equation with conformable derivative model. Commun. Nonlinear Sci. Numer. Simul. 89, Article ID 105332 (2020)

Tuan, N.H., Huynh, L.N., Baleanu, D., Can, N.H.: On a terminal value problem for a generalization of the fractional diffusion equation with hyper-Bessel operator. Math. Methods Appl. Sci. 43(6), 2858–2882 (2020)

Yang, F., Zhang, P., Li, X., et al.: Tikhonov regularization method for identifying the space-dependent source for time-fractional diffusion equation on a columnar symmetric domain. Adv. Differ. Equ. 2020, Article ID 128 (2020)

Cheng, J., Nakagawa, J., Yamamoto, M., Yamazaki, T.: Uniqueness in an inverse problem for a one-dimensional fractional diffusion equation. Inverse Probl. 25(11), Article ID 115002 (2009)

Dou, F.F., Hon, Y.C.: Fundamental kernel-based method for backward space-time fractional diffusion problem. Comput. Math. Appl. 71(1), 356–367 (2016)

Yang, F., Fu, C.L.: The quasi-reversibility regularization method for identifying the unknown source for time fractional diffusion equation. Appl. Math. Model. 39(5–6), 1500–1512 (2015)

Yang, F., Sun, Y., Li, X., et al.: The quasi-boundary value method for identifying the initial value of heat equation on a columnar symmetric domain. Numer. Algorithms 82, 623–639 (2019)

Yang, F., Ren, Y.-P., Li, X.-X.: The quasi-reversibility method for a final value problem of the time-fractional diffusion equation with inhomogeneous source. Math. Methods Appl. Sci. 41(5), 1774–1795 (2018)

Yang, F., Fan, P., Li, X.-X., Ma, X.-Y.: Fourier truncation regularization method for a time-fractional backward diffusion problem with a nonlinear source. Mathematics 7, Article ID 865 (2019)

Thach Ngoc, T., Nguyen Huy, T., Pham Thi Minh, T., Mach Nguyet, M., Nguyen Huu, C.: Identification of an inverse source problem for time-fractional diffusion equation with random noise. Math. Methods Appl. Sci. 42(1), 204–218 (2019)

Tuan, N.H., Nane, E.: Inverse source problem for time-fractional diffusion with discrete random noise. Stat. Probab. Lett. 120, 126–134 (2017)

Nguyen, H.T., Le, D.L., Nguyen, V.T.: Regularized solution of an inverse source problem for a time fractional diffusion equation. Appl. Math. Model. 40(19–20), 8244–8264 (2016)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Application of Fractional Differential Equations. North-Holland Mathematics Studies, vol. 204. Elsevier, Amsterdam (2006)

Sakamoto, K., Yamamoto, M.: Initial value/boundary value problems for fractional diffusion–wave equations and applications to some inverse problems. J. Math. Anal. Appl. 382(1), 426–447 (2011)

Wang, J.-G., Wei, T.: Quasi-reversibility method to identify a space-dependent source for the time-fractional diffusion equation. Appl. Math. Model. 39, 6139–6149 (2015)

Acknowledgements

The authors wish to express their sincere appreciation to the editor and the anonymous referees for their valuable comments and suggestions.

Availability of data and materials

Not applicable.

Funding

Not applicable.

Contributions

All authors contributed equally. All the authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare to have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Karapinar, E., Kumar, D., Sakthivel, R. et al. Identifying the space source term problem for time-space-fractional diffusion equation. Adv Differ Equ 2020, 557 (2020). https://doi.org/10.1186/s13662-020-02998-y

DOI: https://doi.org/10.1186/s13662-020-02998-y