Abstract

Focusing on the delay differential neoclassical growth model in random environments, we introduce a stochastic model that describes the long-run behavior of an economy in which one parameter is perturbed by white noise. We prove that a unique global positive solution exists and estimate its ultimate bound in mean and its sample Lyapunov exponent. Finally, numerical tests illustrate the theoretical results.

1 Introduction

The classical neoclassical growth model with time delay can be described as follows:

\[ y'(t)=-\alpha y(t)+\beta y^{\gamma }(t-\tau )e^{-\delta y(t-\tau )}, \tag{1.1} \]

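with the initial conditions

\[ y(s)=\varphi (s), \quad s\in [-\tau ,0], \qquad \varphi \in C([-\tau ,0],R^{+}), \quad \varphi (0)>0. \tag{1.2} \]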

Here, \(R^{+}=[0,+\infty )\), y is the capital per labor, τ is the delay in the production process, \(\alpha =n+s\mu \) with μ being the depreciation ratio of capital, n is the growth rate of labor, and \(s\in (0,1)\) is the average propensity to save. Moreover, the other positive parameters β, γ, and δ possess obvious economic meanings. For more details on the background of model (1.1), one can refer to the literature [1, 2].

Model (1.1), a deterministic delay differential equation, was first presented by Matsumoto and Szidarovszky [1, 3], who built the economic model on the work of Day [4,5,6], Solow [7], Swan [8], Puu [9], and Bischi et al. [10]. Furthermore, delay differential neoclassical growth models with variable coefficients and delays are examined in [11,12,13,14,15,16].

Nevertheless, environmental noise often interferes with the delay differential neoclassical growth model. Indeed, May [17] pointed out that, in population models, environmental noise causes many system parameters, such as growth rates, environmental capacity, and competition coefficients, to fluctuate randomly to some degree. Since the neoclassical growth model is always affected by environmental noise, a stochastic model is better suited to real-world settings. However, to the best of our knowledge, almost no one has considered the stochastic delay differential neoclassical growth model except Shaikhet [18], who studied the stability of equilibria of a stochastically perturbed delay differential neoclassical growth model.

Suppose that environmental noise perturbs the parameter α. The stochastically perturbed model is then described by the stochastic delay differential equation

\[ dy(t)=\bigl[-\alpha y(t)+\beta y^{\gamma }(t-\tau )e^{-\delta y(t-\tau )}\bigr]\,dt+\sigma y(t)\,dB(t), \tag{1.3} \]

where \(B(t)\) is a one-dimensional Brownian motion with \(B(0)=0\) defined on a complete probability space \((\varOmega ,\{\mathcal{F}_{t}\}_{t \geq 0},\mathcal{P})\), and \(\sigma ^{2}\) denotes the intensity of the noise.

This paper has two purposes. One is to find the criteria to guarantee the unique global positive solution, and the other is to estimate the ultimate boundedness and the sample Lyapunov exponent of (1.3).

Let us briefly sketch the structure of the paper. In Sect. 2, we obtain a simple condition ensuring that the global positive solution of (1.3) exists uniquely almost surely. In Sect. 3, we estimate its ultimate bound in mean and its sample Lyapunov exponent. In Sect. 4, we present a test example with numerical simulations to support the main results. Finally, we draw conclusions and discuss future work in the last section.

2 Preliminary results

In this section, some basic definitions and lemmas are provided in order to prove the main result in the next section.

Definition 2.1

(See [19])

If there exists a constant \(L>0\), independent of the initial conditions (1.2), such that

\[ \limsup_{t\rightarrow \infty }E \vert y(t) \vert \leq L, \]

then equation (1.3) is said to be ultimately bounded in mean.

Lemma 2.1

If \(\alpha >\frac{\sigma ^{2}}{2}\), then for any \(y\in R\),

where \(K=\min \{\frac{\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})\delta ^{2\gamma }e^{2\gamma }},\frac{\beta \gamma ^{\gamma }}{ \delta ^{\gamma }e^{\gamma }}\}\).

Proof

The inequality follows from elementary properties of quadratic functions, so we omit the proof. □
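For completeness, the omitted argument can be sketched as follows; this is one possible route, not necessarily the authors' own. The shorthand \(a=2\alpha -\sigma ^{2}>0\) and \(b=\frac{\beta \gamma ^{\gamma }}{\delta ^{\gamma }e^{\gamma }}\) is introduced here for brevity and does not appear in the original. Completing the square gives, for any \(y\in R\),

\[ -ay^{2}+2b \vert y \vert =-a\Bigl( \vert y \vert -\frac{b}{a}\Bigr)^{2}+\frac{b^{2}}{a}\leq \frac{b^{2}}{a}, \]

which is the first entry of the minimum defining \(K\). Moreover, since \(2 \vert y \vert \leq 1+y^{2}\) and since dividing by \(1+y^{2}\geq 1\) cannot increase a positive numerator,

\[ \frac{-ay^{2}+2b \vert y \vert }{1+y^{2}}\leq \frac{2b \vert y \vert }{1+y^{2}}\leq b \quad \text{and}\quad \frac{-ay^{2}+2b \vert y \vert }{1+y^{2}}\leq \frac{b^{2}}{a}, \]

so the quotient is bounded by \(\min \{\frac{b^{2}}{a},b\}=K\). Both estimates require \(a>0\), that is, \(\alpha >\frac{\sigma ^{2}}{2}\).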

Lemma 2.2

If \(\alpha >\frac{\sigma ^{2}}{2}\), then for any given initial condition (1.2), (1.3) has a unique solution \(y(t)\) on \([0,+\infty )\) and \(y(t)\) is positive almost surely for \(t\geq 0\).

Proof

Because the coefficients of equation (1.3) are locally Lipschitz continuous, there is a unique maximal local solution \(y(t)\) on \([-\tau , \tau _{e})\) for initial condition (1.2), where \(\tau _{e}\) is the explosion time. First, we prove that \(y(t)>0\) on \([0, \tau _{e})\) almost surely, proceeding interval by interval. For \(t\in [0, \tau ]\), model (1.3) with initial condition (1.2) becomes the following linear stochastic differential equation:

\[ dy(t)=\bigl[-\alpha y(t)+b_{1}(t)\bigr]\,dt+\sigma y(t)\,dB(t), \tag{2.3} \]

where \(b_{1}(t)=\beta \varphi ^{\gamma }(t-\tau )e^{-\delta \varphi (t- \tau )}\geq 0\) a.s., \(t\in [0,\tau ]\). It is easy to see that (2.3) has the explicit solution \(y(t)=e^{-(\alpha +\frac{\sigma ^{2}}{2})t+ \sigma B(t)}[y(0)+\int _{0}^{t}e^{(\alpha +\frac{\sigma ^{2}}{2})s- \sigma B(s)}b_{1}(s)\,ds]>0\) a.s. for \(t\in [0,\tau ]\). Next, for \(t\in [\tau ,2\tau ]\), (1.3) becomes the following linear stochastic differential equation:

\[ dy(t)=\bigl[-\alpha y(t)+b_{2}(t)\bigr]\,dt+\sigma y(t)\,dB(t), \tag{2.4} \]

where \(b_{2}(t)=\beta y^{\gamma }(t-\tau )e^{-\delta y(t-\tau )}>0\) a.s., \(t\in [\tau ,2\tau ]\). Also, (2.4) has the explicit solution \(y(t)=e^{-(\alpha +\frac{\sigma ^{2}}{2})(t-\tau )+\sigma (B(t)-B( \tau ))}[y(\tau )+\int _{\tau }^{t}e^{(\alpha +\frac{\sigma ^{2}}{2})(s-\tau )- \sigma (B(s)-B(\tau ))}b_{2}(s)\,ds]>0\) a.s. for \(t\in [\tau ,2\tau ]\). This process can be repeated to demonstrate that for any integer \(m\geq 1\), \(y(t)>0\) on \([m\tau , (m+1)\tau ]\) a.s. Hence, model (1.3) with initial condition (1.2) has the unique solution \(y(t)>0\) almost surely for \(t\in [0, \tau _{e})\).

To prove that this solution is global, it suffices to show that \(\tau _{e}=\infty \) a.s. Let \(k_{0}>0\) be sufficiently large such that \(\max_{-\tau \leq t\leq 0}|y(t)|< k_{0}\). For every integer \(k\geq k_{0}\), define the stopping time

\[ \tau _{k}=\inf \bigl\{ t\in [0,\tau _{e}): \vert y(t) \vert \geq k\bigr\} , \]

where \(\inf \emptyset =\infty \) (\(\emptyset \) is the empty set). Clearly, \(\tau _{k}\) is increasing as \(k\rightarrow \infty \). Set \(\tau _{\infty }=\lim_{k\rightarrow \infty }\tau _{k}\), where \(\tau _{\infty }\leq \tau _{e}\) a.s. If we can show that \(\tau _{\infty }=\infty \) a.s., then \(\tau _{e}=\infty \) a.s.

Define a \(C^{2}\)-function \(V(y)=y^{2}\). Let \(k\geq k_{0}\) and \(T>0\) be arbitrary. It follows from the Itô formula that, for \(0\leq t\leq \tau _{k}\wedge T\),

\[ dV(y(t))=LV\bigl(y(t),y(t-\tau )\bigr)\,dt+2\sigma y^{2}(t)\,dB(t), \tag{2.5} \]

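where \(LV:R\times R\rightarrow R\) is defined by \(LV(x_{1},x_{2})=-(2 \alpha -\sigma ^{2})x_{1}^{2}+2\beta x_{1}x_{2}^{\gamma }e^{-\delta x _{2}}\). Using (2.2) and noting the fact that \(\sup_{x\in R^{+}}x ^{\gamma }e^{-x}=\frac{\gamma ^{\gamma }}{e^{\gamma }}\) (applied with \(x=\delta y(t-\tau )\)), we can show that

\[ LV\bigl(y(t),y(t-\tau )\bigr)\leq -\bigl(2\alpha -\sigma ^{2}\bigr)y^{2}(t)+\frac{2\beta \gamma ^{\gamma }}{\delta ^{\gamma }e^{\gamma }} \vert y(t) \vert \leq \frac{\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})\delta ^{2\gamma }e^{2\gamma }}. \tag{2.6} \]

In view of (2.6), we obtain from (2.5) that

\[ dV(y(t))\leq \frac{\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})\delta ^{2\gamma }e^{2\gamma }}\,dt+2\sigma y^{2}(t)\,dB(t). \tag{2.7} \]

For any \(t_{1}\in [0,T]\), integrating both sides of (2.7) from 0 to \(\tau _{k}\wedge t_{1}\) yields

\[ \int _{0}^{\tau _{k}\wedge t_{1}}dV(y(t))\leq \int _{0}^{\tau _{k}\wedge t_{1}}\frac{\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})\delta ^{2\gamma }e^{2\gamma }}\,dt+\int _{0}^{\tau _{k}\wedge t_{1}}2\sigma y^{2}(t)\,dB(t). \]

Taking expectations, this implies

\[ EV\bigl(y(\tau _{k}\wedge t_{1})\bigr)\leq V(y(0))+\frac{\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})\delta ^{2\gamma }e^{2\gamma }}E(\tau _{k}\wedge t_{1})\leq \widetilde{K}, \tag{2.8} \]

where \(\widetilde{K}= V(y(0))+ \frac{T\beta ^{2}\gamma ^{2\gamma }}{(2 \alpha -\sigma ^{2})\delta ^{2\gamma }e^{2\gamma }}\). In particular, \(EV(y(\tau _{k}\wedge T))\leq \widetilde{K}\) for all \(k\geq k_{0}\).

It is clear that \(V(y(\tau _{k},\omega ))\geq k^{2}\) for every \(\omega \in \{\tau _{k}< T\}\). Then we obtain from (2.8) that

\[ k^{2}P\{\tau _{k}< T\}\leq E\bigl[V(y(\tau _{k}))I_{\{\tau _{k}< T\}}\bigr]\leq EV\bigl(y(\tau _{k}\wedge T)\bigr)\leq \widetilde{K}, \]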

where \(I_{\{\tau _{k}< T\}}\) is the indicator function of \(\{\tau _{k}< T \}\). Letting \(k\rightarrow \infty \) gives \(\lim_{k\rightarrow \infty }P\{\tau _{k}\leq T\}=0\), so \(P\{ \tau _{\infty }\leq T\}=0\). Because \(T>0\) is arbitrary, we obtain \(P\{\tau _{\infty }< \infty \}=0\). Hence \(P\{\tau _{\infty }= \infty \}=1\) is proved and the proof of Lemma 2.2 is completed. □
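The proof above relies on the identity \(\sup_{x\in R^{+}}x^{\gamma }e^{-x}=\frac{\gamma ^{\gamma }}{e^{\gamma }}\), which follows because \(x^{\gamma }e^{-x}\) has its only critical point on \((0,\infty )\) at \(x=\gamma \). The following minimal Python sketch checks the identity numerically on a grid and confirms the resulting bound \(\beta x^{\gamma }e^{-\delta x}\leq \frac{\beta \gamma ^{\gamma }}{\delta ^{\gamma }e^{\gamma }}\) on the delayed production term. The function names, grid size, and sample parameter values are illustrative assumptions, not part of the original paper.

```python
import math


def sup_power_exp(gamma: float) -> float:
    """Closed form of sup_{x >= 0} x**gamma * exp(-x), attained at x = gamma."""
    return (gamma / math.e) ** gamma


def grid_max(gamma: float, upper: float = 50.0, n: int = 200_000) -> float:
    """Brute-force maximum of x**gamma * exp(-x) on a uniform grid over [0, upper]."""
    step = upper / n
    return max((i * step) ** gamma * math.exp(-i * step) for i in range(n + 1))


def production(x: float, beta: float, gamma: float, delta: float) -> float:
    """Delayed production term beta * x**gamma * exp(-delta * x) for x >= 0."""
    return beta * x ** gamma * math.exp(-delta * x)


def production_bound(beta: float, gamma: float, delta: float) -> float:
    """Upper bound beta * gamma**gamma / (delta**gamma * e**gamma)."""
    return beta * (gamma / (delta * math.e)) ** gamma


if __name__ == "__main__":
    # Compare the grid maximum with the closed form for a few sample exponents.
    for g in (0.5, 1.0, 2.0, 3.0):
        print(g, grid_max(g), sup_power_exp(g))
```

The substitution \(x\mapsto \delta x\) is what introduces the factor \(\delta ^{\gamma }\) in the denominator of the bound.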

Remark 2.1

It is interesting to note from Lemma 2.2 that the local existence of the positive solution of (1.3) with (1.2) is independent of the noise intensity, whereas the global existence of the positive solution is not, as can be seen from (2.2).

3 Main results

In this section we present a criterion for the ultimate boundedness in mean of model (1.3), which is an important property in the stochastic population model.

Theorem 3.1

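Let \(\alpha >\frac{\sigma ^{2}}{2}\) hold and let \(y(t)\) be the global solution of (1.3) for any given initial value (1.2). Then \(y(t)\) is positive almost surely for \(t\geq 0\) and satisfies

\[ \limsup_{t\rightarrow \infty }Ey(t)\leq \frac{\beta \gamma ^{\gamma }}{\alpha \delta ^{\gamma }e^{\gamma }} \tag{3.1} \]

and

\[ \limsup_{t\rightarrow \infty }\frac{1}{t}\int _{0}^{t}Ey^{2}(s)\,ds\leq \frac{4\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})^{2}\delta ^{2\gamma }e^{2\gamma }}. \tag{3.2} \]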

In particular, (1.3) is ultimately bounded in mean.

Proof

In view of Lemma 2.2, \(y(t)>0\) for \(t\geq 0\) almost surely. Again using (1.3) and the formula \(\sup_{x\in R^{+}}x^{\gamma }e^{-x}=\frac{\gamma ^{\gamma }}{e ^{\gamma }}\), we have

This, with the help of the Itô formula, implies that

So

This yields \(\limsup_{t\rightarrow \infty }Ey(t)\leq \frac{ \beta \gamma ^{\gamma }}{\alpha \delta ^{\gamma }e^{\gamma }}\). To show the other assertion (3.2), we derive from (2.5) and (2.6) that

This implies

Noting \(-(\alpha -\frac{\sigma ^{2}}{2}) y^{2}(s)+2\frac{\beta \gamma ^{\gamma }}{\delta ^{\gamma }e^{\gamma }}|y(s)|\leq \frac{2\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})\delta ^{2\gamma } e^{2 \gamma }}\), we obtain from (3.5) that

which suggests that

So the proof is now completed. □
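The passage from the differential inequality to the bound on \(\limsup_{t\rightarrow \infty }Ey(t)\) in the proof above can be made explicit. The following is a formal sketch that assumes the stochastic integral has zero mean; the shorthand \(b=\frac{\beta \gamma ^{\gamma }}{\delta ^{\gamma }e^{\gamma }}\) is introduced here and is not the authors' notation. Bounding the delayed term by \(b\) and taking expectations gives

\[ \frac{d}{dt}Ey(t)\leq -\alpha Ey(t)+b, \qquad \text{so that}\qquad \frac{d}{dt}\bigl[e^{\alpha t}Ey(t)\bigr]\leq be^{\alpha t}. \]

Integrating from 0 to \(t\) yields

\[ Ey(t)\leq e^{-\alpha t}y(0)+\frac{b}{\alpha }\bigl(1-e^{-\alpha t}\bigr)\longrightarrow \frac{b}{\alpha }=\frac{\beta \gamma ^{\gamma }}{\alpha \delta ^{\gamma }e^{\gamma }}\quad \text{as } t\rightarrow \infty . \]

Note that this step only uses \(\alpha >0\); the noise intensity \(\sigma \) does not enter the resulting bound.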

Theorem 3.2

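Let \(\alpha >\frac{\sigma ^{2}}{2}\) hold. Then the sample Lyapunov exponent of the solution of (1.3) with (1.2) is at most \(\frac{K}{2}\), where \(K\) is the constant defined in Lemma 2.1; that is,

\[ \limsup_{t\rightarrow \infty }\frac{1}{t}\ln y(t)\leq \frac{K}{2} \quad \text{a.s.} \tag{3.6} \]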

Proof

Using the Itô formula and the fact \(\sup_{x\in R^{+}}x^{\gamma }e^{-x}=\frac{\gamma ^{\gamma }}{e ^{\gamma }}\) once more, we obtain from (1.3) and (2.1) that

where \(M(t)=2\int _{0}^{t}\frac{\sigma y^{2}(s)}{1+y^{2}(s)}\,dB(s)\). For every \(n\geq 0\), application of the known exponential martingale inequality (Theorem 1.7.4 of [20]) yields

Using the Borel–Cantelli lemma, one sees that for almost all \(\omega \in \varOmega \) there are random integers \(n_{0}=n_{0}(\omega ) \geq 1\) such that

That is,

for all \(0\leq t\leq n\), \(n\geq n_{0}\) almost surely. Then (3.7), together with (3.8), implies that

for all \(0\leq t\leq n\), \(n\geq n_{0}\) almost surely. Hence, for almost all \(\omega \in \varOmega \), if \(n\geq n_{0}\), \(n-1\leq t\leq n\), we get

This implies

The proof is over. □

Remark 3.1

Notably, the condition \(\alpha >\frac{\sigma ^{2}}{2}\) depends on the noise intensity, but the bound in (3.1) does not. In other words, the ultimate bound in mean of (1.3) stays fixed under small noise; that is, this boundedness property is robust when the environmental noise is small.

4 An example and its numerical simulations

In this section, we provide a test example with numerical simulations to illustrate the main results.

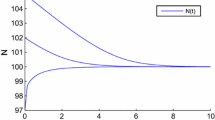

Example 4.1

Consider the following stochastic delay differential neoclassical growth model:

\[ dy(t)=\bigl[-0.0011y(t)+0.02y^{2}(t-1)e^{-y(t-1)}\bigr]\,dt+0.0447y(t)\,dB(t). \tag{4.1} \]

Here \(\alpha =0.0011\), \(\gamma =2\), \(\beta =0.02\), \(\delta = \tau =1\), and \(\sigma =0.0447\), so the condition \(\alpha >\frac{\sigma ^{2}}{2}\) holds (\(\frac{\sigma ^{2}}{2}\approx 0.000999\)). In view of Theorems 3.1 and 3.2, we conclude that the solution of system (4.1) satisfies \(\limsup_{t\rightarrow \infty }Ey(t)\leq \frac{800}{11e ^{2}}\), \(\limsup_{t\rightarrow \infty }\frac{1}{t}\int _{0}^{t}Ey ^{2}(s)\,ds\leq \frac{640{,}000}{e^{4}}\), and \(\limsup_{t\rightarrow \infty }\frac{1}{t}\ln y(t)\leq \frac{1}{25e ^{2}}\), a.s. Numerical simulations based on Milstein's method [21], shown in Fig. 1, are consistent with these bounds.
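The arithmetic behind these three bounds can be reproduced with a short Python sketch. It assumes the bound \(\frac{\beta \gamma ^{\gamma }}{\alpha \delta ^{\gamma }e^{\gamma }}\) for the mean, the bound \(\frac{4\beta ^{2}\gamma ^{2\gamma }}{(2\alpha -\sigma ^{2})^{2}\delta ^{2\gamma }e^{2\gamma }}\) for the time-averaged second moment (as implied by the estimates in the proof of Theorem 3.1), and \(\frac{K}{2}\) with \(K\) from Lemma 2.1 for the sample Lyapunov exponent. The function name and the shorthand `a`, `b` are hypothetical and chosen for illustration only.

```python
import math


def theoretical_bounds(alpha: float, beta: float, gamma: float,
                       delta: float, sigma: float) -> tuple[float, float, float]:
    """Return (mean bound, time-averaged second-moment bound, Lyapunov bound K/2).

    The shorthand a = 2*alpha - sigma**2 and b = beta*gamma**gamma/(delta*e)**gamma
    is an assumption of this sketch, not notation from the paper.
    """
    a = 2.0 * alpha - sigma ** 2
    if a <= 0.0:
        raise ValueError("the condition alpha > sigma**2 / 2 is violated")
    b = beta * (gamma / (delta * math.e)) ** gamma
    mean_bound = b / alpha                  # bound on limsup E y(t)
    second_moment_bound = 4.0 * b ** 2 / a ** 2
    K = min(b ** 2 / a, b)                  # constant K of Lemma 2.1
    return mean_bound, second_moment_bound, K / 2.0


if __name__ == "__main__":
    # Parameters of Example 4.1.
    print(theoretical_bounds(0.0011, 0.02, 2.0, 1.0, 0.0447))
```

With \(\sigma ^{2}\) rounded to 0.002, the second value equals \(\frac{640{,}000}{e^{4}}\); using \(\sigma =0.0447\) exactly gives a slightly smaller number, so the rounded figure remains a valid upper bound.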

Figure 1. Numerical solutions of (4.1) for the initial values 0.1, 0.2, and 0.3
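A simulation of this kind can be sketched in a few lines of standard-library Python. This is a minimal illustration, not the authors' code: it assumes a constant initial history \(\varphi \equiv y_{0}\) on \([-\tau ,0]\), a fixed step size, and a fixed random seed, none of which are specified in the paper. Because the diffusion term \(\sigma y(t)\) does not involve the delayed state, the sketch uses the usual Milstein correction \(\frac{1}{2}\sigma ^{2}y(\Delta B^{2}-\Delta t)\) for multiplicative noise; the function name `simulate_milstein` is hypothetical.

```python
import math
import random


def simulate_milstein(y0: float, alpha: float = 0.0011, beta: float = 0.02,
                      gamma: float = 2.0, delta: float = 1.0,
                      sigma: float = 0.0447, tau: float = 1.0,
                      t_end: float = 50.0, dt: float = 0.01,
                      seed: int = 0) -> list[float]:
    """Simulate one path of the stochastic delay model on [0, t_end].

    Assumption of this sketch: the history is constant, y(s) = y0 on [-tau, 0].
    """
    rng = random.Random(seed)
    lag = round(tau / dt)        # number of grid steps spanning one delay
    n_steps = round(t_end / dt)
    # path[i] approximates y(-tau + i * dt); the first lag + 1 entries are history.
    path = [y0] * (lag + 1)
    for _ in range(n_steps):
        y = path[-1]
        y_delayed = path[-1 - lag]
        drift = -alpha * y + beta * y_delayed ** gamma * math.exp(-delta * y_delayed)
        dB = rng.gauss(0.0, math.sqrt(dt))
        # Euler-Maruyama step plus the Milstein correction for the term sigma * y.
        y_next = (y + drift * dt + sigma * y * dB
                  + 0.5 * sigma ** 2 * y * (dB * dB - dt))
        path.append(y_next)
    return path[lag:]            # values at t = 0, dt, ..., t_end


if __name__ == "__main__":
    for y0 in (0.1, 0.2, 0.3):
        trajectory = simulate_milstein(y0)
        print(y0, min(trajectory), trajectory[-1])
```

Averaging many such paths over different seeds gives a Monte Carlo estimate of \(Ey(t)\) that can be compared with the bound of Theorem 3.1.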

5 Conclusions

In this paper, we consider the delay differential neoclassical growth model under a stochastic perturbation. This perturbation is of the white noise type and is directly proportional to the model state. We derive the simple sufficient condition \(\alpha > \frac{\sigma ^{2}}{2}\), which guarantees that the global positive solution of (1.3) exists uniquely, and we estimate its ultimate bound in mean and its sample Lyapunov exponent. In particular, all results of [22] are special cases of this paper with \(\gamma =1\). If the environmental noise is so large that the condition \(\alpha >\frac{\sigma ^{2}}{2}\) does not hold, then Lemma 2.2 and Theorems 3.1 and 3.2 no longer apply. Future work consists of two parts. One is to find conditions weaker than \(\alpha >\frac{\sigma ^{2}}{2}\) under which all the results of this paper still hold. The other is to study further dynamic behaviors of the model, such as persistence and extinction.

References

[1] Matsumoto, A., Szidarovszky, F.: Asymptotic behavior of a delay differential neoclassical growth model. Sustain. 5, 440–455 (2013)

[2] Chen, W., Wang, W.: Global exponential stability for a delay differential neoclassical growth model. Adv. Differ. Equ. 2014, 325 (2014)

[3] Matsumoto, A., Szidarovszky, F.: Delay differential neoclassical growth model. J. Econ. Behav. Organ. 78, 272–289 (2011)

[4] Day, R.: Irregular growth cycles. Am. Econ. Rev. 72, 406–414 (1982)

[5] Day, R.: The emergence of chaos from classical economic growth. Q. J. Econ. 98, 203–213 (1983)

[6] Day, R.: Complex Economic Dynamics: An Introduction to Dynamical Systems and Market Mechanism. MIT Press, Cambridge (1994)

[7] Solow, R.: A contribution to the theory of economic growth. Q. J. Econ. 70, 65–94 (1956)

[8] Swan, T.: Economic growth and capital accumulation. Econ. Rec. 32, 334–361 (1956)

[9] Puu, T.: Attractions, Bifurcations and Chaos: Nonlinear Phenomena in Economics, 2nd edn. Springer, Berlin (2003)

[10] Bischi, G.I., Chiarella, C., Kopel, M., Szidarovszky, F.: Nonlinear Oligopolies: Stability and Bifurcation. Springer, Berlin (2010)

[11] Vadasz, P., Vadasz, A.S.: On the distinction between lag and delay in population growth. Microb. Ecol. 59, 233–245 (2010)

[12] Long, Z., Wang, W.: Positive pseudo almost periodic solutions for a delayed differential neoclassical growth model. J. Differ. Equ. Appl. 22, 1893–1905 (2016)

[13] Ning, Z., Wang, W.: The existence of two positive periodic solutions for the delay differential neoclassical growth model. Adv. Differ. Equ. 2016, 266 (2016)

[14] Wang, W.: The exponential convergence for a delay differential neoclassical growth model with variable delay. Nonlinear Dyn. 86, 1875–1883 (2016)

[15] Duan, L., Huang, C.: Existence and global attractivity of almost periodic solutions for a delayed differential neoclassical growth model. Math. Methods Appl. Sci. 40, 814–822 (2017)

[16] Xu, Y.: New result on the global attractivity of a delay differential neoclassical growth model. Nonlinear Dyn. 89, 281–288 (2017)

[17] May, R.M.: Stability and Complexity in Model Ecosystems. Princeton University Press, Princeton (1973)

[18] Shaikhet, L.: Stability of equilibriums of stochastically perturbed delay differential neoclassical growth model. Discrete Contin. Dyn. Syst., Ser. B 22(4), 1565–1573 (2017)

[19] Bahar, A., Mao, X.: Stochastic delay population dynamics. Int. J. Pure Appl. Math. 11, 377–399 (2004)

[20] Mao, X.: Stochastic Differential Equations and Applications. Horwood, Chichester (1997)

[21] Kloeden, P.E., Shardlow, T.: The Milstein scheme for stochastic delay differential equations without using anticipative calculus. Stoch. Anal. Appl. 30, 181–202 (2012)

[22] Wang, W., Wang, L., Chen, W.: Stochastic Nicholson’s blowflies delayed differential equations. Appl. Math. Lett. 87, 20–26 (2019)

Funding

This work was supported by the Natural Scientific Research Fund of Zhejiang Province of China (Grant No. LY18A010019), Shanghai Talent Development Fund (Grant No. 2017128), and ‘Xulun’ Scholar Plan of Shanghai Lixin University of Accounting and Finance.

Author information

Contributions

The authors declare that the study was realized in collaboration with the same responsibility. Both authors read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, W., Chen, W. Stochastic delay differential neoclassical growth model. Adv Differ Equ 2019, 355 (2019). https://doi.org/10.1186/s13662-019-2292-0
