Abstract

This paper addresses the problem of mixed \(H_{\infty }\)/passive exponential function projective synchronization of delayed neural networks with constant, discrete and distributed delay couplings under a pinning sampled-data control scheme. The aim of this work is to design a pinning sampled-data controller with an explicit expression by which the synchronization error system is stabilized and a mixed \(H_{\infty }\)/passive performance level is also reached. In particular, the control method is designed to determine a set of pinned nodes with fixed coupling matrices and strength values, and to select pinning nodes randomly. To handle the Lyapunov functional, we apply some new techniques and then derive sufficient conditions for the existence of the desired controller. Furthermore, numerical examples are given to illustrate the effectiveness of the proposed theoretical results.

1 Introduction

In recent decades, neural networks (NNs) have been extensively investigated and widely applied in various research fields, for instance, optimization, pattern recognition, static image processing, associative memory, and signal processing [1,2,3,4]. In many engineering applications, time delay is a typical characteristic of neuron processing and plays an important role in causing poor performance or in producing dynamic behaviors such as chaos, instability, and divergence [5,6,7,8,9]. Therefore, time-delay NNs have received considerable attention in many fields of application.

In the research on stability of neural networks, exponential stability is a more desirable property than asymptotic stability because it provides a faster convergence rate to the equilibrium point and gives information about the decay rates of the networks. Hence, it is especially important when the exponential stability property guarantees that, whatever transformation happens, the ability of the network to store the activity pattern rapidly is left invariant by self-organization [10, 11].

Among all kinds of NN behaviors, synchronization is a significant and attractive phenomenon, and it has been studied in various fields of science and engineering [12,13,14]. Synchronization in networks is categorized into two types, namely inner and outer synchronization. Inner synchronization is a collective behavior within a network, and most researchers have focused on this type [15, 16]. Outer synchronization is a collective behavior between two or more networks [17,18,19].

Furthermore, function projective synchronization (FPS), a generalization of projective synchronization (PS), is one of the synchronization techniques in which two identical (or different) chaotic systems can synchronize up to a scaling function matrix with different initial values. The technique has been widely studied to get a faster communication rate with its proportional property. Apparently, the unpredictability of the scaling function in FPS can additionally improve the security of communication. Recently, many researchers have focused on the exponential stability of function projective synchronization of neural networks [20,21,22].

Passivity theory is an effective way to determine the stability of a dynamical system. It uses only the general characteristics of the input–output dynamics to present solutions for the proof of absolute stability. Passivity theory forms a fundamental aspect of control systems and electrical networks; in fact, its roots can be traced to circuit theory. Recently, a lot of research has been conducted on designing passive filters for different kinds of systems, for example time-varying uncertain systems, nonlinear systems and switched systems [10, 11, 23]. On the other hand, the problem of \(H_{\infty }\) control has been widely discussed for neural networks with time delay because \(H_{\infty }\) controller design seeks to reduce the effects of external inputs and to minimize the frequency response peak of the system; a recent example is the finite-time \(H_{\infty }\) asynchronous state estimation for discrete-time fuzzy Markov jump neural networks in [24]. For these reasons, the passive control problem and the \(H_{\infty }\) control problem have lately come to be solved in a unified framework. The mixed \(H_{\infty }\) and passive filtering problem for continuous-time singular systems has been investigated [25,26,27]. The deterministic input is presented with bounded energy through the \(H_{\infty }\) setting together with the passivity theory [27, 28]. As stated above, a lot of research has been conducted in this area. However, relatively little research has been conducted into the problem of mixed \(H_{\infty }\) and passive filtering design in the discrete-time domain. Consequently, this paper attempts to highlight the benefits of the mixed \(H_{\infty }\) and passive filters for discrete-time impulse NCS with the plant being a Markovian jump system.

Nowadays, continuous-time control, for instance feedback control and adaptive control, has been mainly used for synchronization analysis. The main point in implementing such continuous-time controllers is that the control input must be continuous, which cannot always be ensured in real-time situations. Moreover, owing to advanced digital technology in measurement, continuous-time controllers can be replaced by discrete-time controllers to achieve better stability, performance, and precision. So, plentiful research in sampled-data control theory has been conducted. By using a sampled-data controller, the amount of transferred information is dramatically decreased and bandwidth usage is consistent. This renders the control more reliable and handy in real-world problems. In [29], dissipative sampled-data control of uncertain nonlinear systems with time-varying delays was studied; see also [30,31,32,33,34]. Meanwhile, pinning control has been introduced to deal with the problem of a large number of controllers being added to a large neural network structure [35,36,37,38,39]. In [40], pinning stochastic sampled-data control for exponential synchronization of directed complex dynamical networks with sampled-data communications has been addressed. The problem of exponential \(H_{\infty }\) synchronization of Lur’e complex dynamical networks using pinning sampled-data control has been investigated in [41]. However, a pinning sampled-data control technique has not yet been implemented for NNs with inertia and reaction–diffusion terms. These motivate us to further study this in the present work.

As discussed above, this is the first time that mixed \(H_{\infty }\)/passive exponential function projective synchronization (EFPS) of delayed NNs with hybrid coupling based on pinning sampled-data control has been studied. Therefore, as a first attempt, this paper addresses this problem, and the main contributions are summarized as follows:

- To solve the synchronization control problem for NNs, we introduce a simple mixed \(H_{\infty }\)/passive performance index and compare it with a single \(H_{\infty }\) design.

- We deal with the EFPS problem for NNs in which both discrete and distributed time-varying delays are considered in the hybrid asymmetric coupling, which is different from the time-delay case in [25, 28].

- For our control method, the EFPS is carefully studied via mixed nonlinear and pinning sampled-data controls, which is different from previous work [34, 40, 41].

Based on the construction of a Lyapunov–Krasovskii functional, the parameter update law and the use of Jensen’s and Cauchy inequalities, some novel sufficient conditions for the EFPS of NNs with mixed time-varying delays are obtained. Finally, numerical examples are given to present the benefit of using pinning sampled-data controls.

The rest of the paper is organized as follows. Section 2 provides some mathematical preliminaries and a network model. Section 3 presents the EFPS of NNs with hybrid coupling based on pinning sampled-data control. Some numerical examples with theoretical results and conclusions are given in Sects. 4 and 5, respectively.

2 Problem formulation and preliminaries

Notations: The notations used throughout this work are as follows: \(\mathcal{R}^{n}\) denotes the n-dimensional space; A matrix A is symmetric if \(A=A^{T}\) where the superscript T stands for transpose matrix; \(\lambda _{\max }(A)\) and \(\lambda _{\min }(A)\) stand for the maximum and the minimum eigenvalues of matrix A, respectively. \(z_{i}\) denotes the unit column vector having one element on its ith row and zeros elsewhere; \(\mathcal{C}([a,b],\mathcal{R}^{n})\) denotes the set of continuous functions mapping the interval \([a,b]\) to \(\mathcal{R}^{n}\); \(\mathcal{L}_{2}[0,\infty )\) denotes the space of functions \(\phi : \mathcal{R}^{+} \rightarrow \mathcal{R}^{n}\) with the norm \(\|\phi \|_{\mathcal{L}_{2}} = [\int _{0}^{\infty }|\phi ( \theta )|^{2} \,d\theta ]^{\frac{1}{2}}\); For \(z\in \mathcal{R} ^{n}\), the norm of z is defined by \(\|z\| = [\sum_{i=1}^{n} |z _{i}|^{2} ]^{1/2}\); \(\Vert z(t+\epsilon ) \Vert _{\mathrm{cl}}=\max \{ \sup_{ - \max \{ {\tau _{1}},{\tau _{2}},h\} \le \epsilon \le 0} { \Vert {z(t + \epsilon )} \Vert ^{2}}, \sup_{ - \max \{ {\tau _{1}},{\tau _{2}},h\} \le \epsilon \le 0} \Vert \dot{z}(t + \epsilon ) \Vert ^{2}\}\); \(I_{N}\) denotes an N-dimensional identity matrix; the symbol ∗ denotes the symmetric block in a symmetric matrix. The symbol ⊗ denotes the Kronecker product.
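To make the role of the Kronecker product concrete, the following minimal sketch checks that a node-wise coupling sum of the form \(\sum_{j} g_{ij} L x_{j}\) equals \((G\otimes L)\) applied to the stacked state vector. The matrices `G`, `L` and the states `x` are illustrative values chosen here, not data from the paper, and the helper names `kron` and `matvec` are hypothetical.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [
        [a_ij * b_kl for a_ij in a_row for b_kl in b_row]
        for a_row in a
        for b_row in b
    ]


def matvec(m, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


# Illustrative 2-node network with 2-dimensional node states (assumed values).
G = [[-1.0, 1.0], [2.0, -2.0]]  # outer-coupling matrix
L = [[0.5, 0.0], [0.0, 0.3]]    # inner-coupling matrix
x = [[1.0, 2.0], [3.0, 4.0]]    # x[j] is the state of node j

# Node-wise form: for each node i, accumulate sum_j g_ij * L * x_j.
node_wise = []
for i in range(2):
    acc = [0.0, 0.0]
    for j in range(2):
        Lx = matvec(L, x[j])
        acc = [a + G[i][j] * v for a, v in zip(acc, Lx)]
    node_wise.extend(acc)

# Stacked form: (G kron L) applied to the stacked vector [x_1; x_2].
stacked = matvec(kron(G, L), [v for x_j in x for v in x_j])
```

Both lists hold the same four numbers, which is why coupled networks of this kind are usually rewritten in compact form with \(\otimes\).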

Delayed NNs containing N identical nodes with hybrid couplings are given as follows:

where \(x_{i}(t)\in \mathcal{R}^{n}\) and \(u_{i}(t)\in \mathcal{R}^{n}\) are the state variable and the control input of node i, respectively; \(y_{i}(t)\in \mathcal{R}^{l}\) are the outputs; \(D=\operatorname{diag}(d_{1}, d_{2}, \ldots , d_{n})>0\) denotes the rates with which the cells reset their potential to the resting state when isolated from other cells and inputs; A, B and C are connection weight matrices; \(\tau _{1}(t)\) and \(\tau _{2}(t)\) are the time-varying delays; \(f(x_{i} (\cdot )) = [f_{1}(x_{i1}(\cdot )),f_{2}(x_{i2}( \cdot )),\ldots ,f_{n}(x_{in}(\cdot ))]^{T}\) denotes the neuron activation function vector; the positive constants \(c_{1}\), \(c_{2}\) and \(c_{3}\) are the strengths of the constant coupling and the delayed couplings, respectively; \(\omega _{i}(t)\) is the external disturbance of the system, which belongs to \(\mathcal{L}_{2}[0, \infty )\); J is a known matrix with appropriate dimension; \(L_{1}, L_{2}, L_{3} \in \mathcal{R}^{n\times n}\) are inner-coupling matrices with constant elements, assumed to be positive diagonal matrices; and \(G^{(q)}=(g^{(q)}_{ij})_{N\times N}\) (\(q=1,2,3\)) are the outer-coupling matrices, which satisfy the following conditions:

The following assumptions are made throughout this paper.

Assumption 1

The discrete delay \(\tau _{1}(t)\) and distributed delay \(\tau _{2}(t)\) satisfy the conditions \(0\leq \tau _{1}(t)\leq \tau _{1}\), \(\dot{\tau } _{1}(t)<\bar{\tau }_{1}\), and \(0\leq \tau _{2}(t)\leq \tau _{2}\).
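As a quick numerical illustration of Assumption 1, the sketch below evaluates the delay functions used later in Example 4.1, \(\tau _{1}(t)=1/(1+e^{-t})\) and \(\tau _{2}(t)=0.25\sin ^{2}(t)\), on a grid and reads off admissible bounds. The grid, the time horizon and the function names are choices made here for illustration only.

```python
import math


def tau1(t):
    """Discrete delay of Example 4.1."""
    return 1.0 / (1.0 + math.exp(-t))


def tau1_dot(t):
    """Analytic derivative of tau1, i.e. e^{-t} / (1 + e^{-t})^2."""
    e = math.exp(-t)
    return e / (1.0 + e) ** 2


def tau2(t):
    """Distributed delay of Example 4.1."""
    return 0.25 * math.sin(t) ** 2


grid = [0.01 * k for k in range(5001)]  # t in [0, 50], an arbitrary horizon

tau1_max = max(tau1(t) for t in grid)          # approaches 1 from below
tau1_dot_max = max(tau1_dot(t) for t in grid)  # attained at t = 0
tau2_max = max(tau2(t) for t in grid)          # bounded by 0.25
```

On this grid the values suggest \(\tau _{1}=1\), \(\tau _{2}=0.25\) and any \(\bar{\tau }_{1}>0.25\) as admissible constants, which is consistent with the initial interval \([-1,0]\) used in that example.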

Assumption 2

The activation functions \(f_{i}(\cdot )\), \(i=1,2,\ldots ,n\), satisfy the Lipschitzian condition with the Lipschitz constants \(\lambda _{i}>0\):

where Λ is a positive constant matrix with \(\varLambda = \operatorname{diag}\{\lambda _{i}, i=1,2,\ldots ,n\}\).
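For the activation \(f_{i}=\tanh \) used in the numerical examples, a Lipschitz condition of the usual form \(|f_{i}(a)-f_{i}(b)|\leq \lambda _{i}|a-b|\) holds with \(\lambda _{i}=1\). The sketch below probes this on random pairs; the sampling range, the seed and the function name are assumptions of this illustration, and the test is a numerical check rather than a proof.

```python
import math
import random


def max_lipschitz_ratio(f, count=1000, seed=0, lo=-5.0, hi=5.0):
    """Largest observed |f(a) - f(b)| / |a - b| over random pairs (a, b)."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(count):
        a = rng.uniform(lo, hi)
        b = rng.uniform(lo, hi)
        if a == b:
            continue  # the ratio is undefined for identical points
        worst = max(worst, abs(f(a) - f(b)) / abs(a - b))
    return worst


ratio = max_lipschitz_ratio(math.tanh)  # stays below 1, so Lambda = I is admissible
```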

The isolated node of network (1) is given by the following delayed neural network:

where \(s(t)=(s_{1}(t),s_{2}(t),\ldots ,s_{n}(t))^{T}\in \mathcal{R} ^{n}\) and the parameters D, A, B and C and the nonlinear functions \(f (\cdot )\) have the same definitions as in (1).

The network (1) is said to achieve FPS if there exists a continuously differentiable positive function \(\alpha (t)>0\) such that

where \(\|\cdot\|\) stands for the Euclidean vector norm and \(s(t)\in \mathcal{R}^{n}\) can be an equilibrium point. Let \(z_{i}(t)=x_{i}(t)- \alpha (t)s(t)\), be the synchronization error. Then, by substituting it into (1), it is easy to get the following:

where \(\hat{y}_{i}(t)=y_{i}(t)-y_{s}(t)\).

Remark 1

If the scaling function \(\alpha (t)\) is a function of the time t, then the NNs will realize FPS. The FPS includes many kinds of synchronization. If \(\alpha (t)=\alpha \) (a constant) or \(\alpha (t)=1\), then the synchronization reduces to projective synchronization [17, 18, 26] or common synchronization [36, 37], respectively. Therefore, the FPS is more general.
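The special cases in this remark can be illustrated with the error \(z_{i}(t)=x_{i}(t)-\alpha (t)s(t)\) and the scaling function \(\alpha (t)=0.6+0.25\sin (\frac{0.5\pi }{15}t)\) chosen in Example 4.1. The sketch below is only an illustration: the helper names and the sample vectors are hypothetical.

```python
import math


def alpha(t):
    """Time-varying scaling function chosen in Example 4.1."""
    return 0.6 + 0.25 * math.sin(0.5 * math.pi / 15.0 * t)


def fps_error(x, s, scale):
    """Synchronization error z = x - scale * s for one node (lists of floats)."""
    return [x_k - scale * s_k for x_k, s_k in zip(x, s)]


# alpha(t) stays positive: it oscillates between 0.35 and 0.85.
values = [alpha(0.1 * k) for k in range(1201)]  # t in [0, 120], two periods

s = [1.0, -2.0]                       # illustrative isolated-node state
x_fps = [alpha(3.0) * v for v in s]   # node exactly on the scaled trajectory
z_fps = fps_error(x_fps, s, alpha(3.0))  # FPS: time-varying scale
z_ps = fps_error([0.5, -1.0], s, 0.5)    # PS: constant scale alpha = 0.5
z_cs = fps_error(s, s, 1.0)              # common synchronization: alpha = 1
```

In all three cases the error vanishes, which shows how the constant and unit scalings are recovered from the same error definition.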

Regarding the pinning sampled-data control scheme, without loss of generality, the first l nodes are chosen and pinned with sampled-data control \(u_{i}(t)\), expressed in the following form:

where

where \(K_{i}\) are the sampled-data feedback controller gain matrices to be designed for every \(i = 1, 2,\ldots ,N\), and \(z_{i}(t_{k})\) is the discrete measurement of \(z_{i}(t)\) at the sampling instant \(t_{k}\). Denote the updating instants of the zero-order hold (ZOH) by \(t_{k}\), satisfying

where \(h>0\) represents the largest sampling interval.
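A zero-order hold with aperiodic sampling can be sketched as follows. The sketch assumes that consecutive sampling instants satisfy \(0<t_{k+1}-t_{k}\leq h\), which is the usual reading of "largest sampling interval", and it shows that the elapsed time \(t-t_{k}\) since the last update then stays in \([0,h]\). The random interval generator and all function names are hypothetical.

```python
import bisect
import random


def sampling_instants(t_end, h, seed=0):
    """Aperiodic instants t_0 = 0 < t_1 < ... covering [0, t_end], gaps <= h."""
    rng = random.Random(seed)
    instants = [0.0]
    while instants[-1] < t_end:
        instants.append(instants[-1] + rng.uniform(0.2 * h, h))
    return instants


def last_sample_time(instants, t):
    """Most recent sampling instant t_k with t_k <= t."""
    return instants[bisect.bisect_right(instants, t) - 1]


def zoh(signal, instants, t):
    """Zero-order hold: the value of signal at the last sampling instant."""
    return signal(last_sample_time(instants, t))


h = 0.5
instants = sampling_instants(10.0, h)
# Elapsed time since the last update, evaluated on a fine grid of [0, 10].
elapsed = [0.01 * k - last_sample_time(instants, 0.01 * k) for k in range(1001)]
```

The list `elapsed` traces a sawtooth bounded by `h`, which is why a sampled-data loop can be analysed as a system with a bounded time-varying input delay.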

By substituting (5) into (4), it can be derived that

where \(h(t)=t-t_{k}\) satisfies \(0\leq h(t)\leq h\), and

The initial condition of (6) is defined by

where \(\bar{\theta }={\mathrm{max}}\{\tau _{1}, \tau _{2}, h\}\) and \(\phi _{i}(\theta )\in \mathcal{C}([-\bar{\theta }, 0],\mathcal{R}^{n})\), \(i=1,2, \ldots ,N\).

Let us define

Then, with the Kronecker product, we can reformulate the system (6) as follows:

The following definitions and lemmas are introduced to serve for the proof of the main results.

Definition 2.1

([33])

The network (1) with \(\omega (t)=0\) is said to achieve exponential function projective synchronization (EFPS) if there exist two constants \(\mu >0\) and \(\varpi >0\) such that

Definition 2.2

([34])

For given scalar \(\sigma \in [0, 1]\), the error system (8) is EFPS and meets a predefined \(H_{\infty }\)/passive performance index γ, if the following two conditions can be guaranteed simultaneously:

- (i)

- (ii) under the zero initial condition, there exists a scalar \(\gamma >0\) such that the following inequality is satisfied:

$$\begin{aligned} \int _{0}^{\mathcal{T}_{p}} \bigl[-\sigma \widetilde{y}^{T}(t) \widetilde{y}(t) +2(1-\sigma )\gamma \widetilde{y}^{T}(t)\omega (t) \bigr] \,dt \geq -\gamma ^{2} \int _{0}^{\mathcal{T}_{p}} \bigl[\omega ^{T}(t) \omega (t) \bigr] \,dt, \end{aligned}$$ (9)

for any \(\mathcal{T}_{p}\geq 0\) and any non-zero \(\omega (t)\in \mathcal{L}_{2}[0, \infty )\).
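Inequality (9) can be evaluated on sampled signals by moving all terms to one side and approximating the integral with a Riemann sum. The sketch below does this for scalar signals; the function name, the test signals and the step size are assumptions made for illustration, and a non-negative return value corresponds to (9) being satisfied on the chosen horizon.

```python
import math


def mixed_performance_gap(y, w, dt, sigma, gamma):
    """Riemann sum of -sigma*y^2 + 2*(1-sigma)*gamma*y*w + gamma^2*w^2.

    y and w are equally long lists of scalar samples taken every dt seconds.
    The result is >= 0 exactly when the discretized form of (9) holds.
    """
    return sum(
        (-sigma * y_k * y_k
         + 2.0 * (1.0 - sigma) * gamma * y_k * w_k
         + gamma ** 2 * w_k * w_k) * dt
        for y_k, w_k in zip(y, w)
    )


dt = 0.01
w = [math.sin(dt * k) for k in range(1000)]  # illustrative disturbance
y_small = [0.5 * w_k for w_k in w]           # output with gain 0.5
y_large = [3.0 * w_k for w_k in w]           # output with gain 3

gap_ok = mixed_performance_gap(y_small, w, dt, sigma=0.5, gamma=1.0)
gap_bad = mixed_performance_gap(y_large, w, dt, sigma=1.0, gamma=1.0)
```

With these signals the first gap is positive (the index is met for \(\gamma =1\)), while the second is negative because an output gain of 3 exceeds the level \(\gamma =1\) in the pure \(H_{\infty }\) case.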

Lemma 2.3

([6], Cauchy inequality)

For any symmetric positive definite matrix \(N\in M^{n\times n}\) and \(x,y\in \mathcal{R}^{n}\) we have

$$\begin{aligned} \pm 2x^{T}y\leq x^{T}Nx+y^{T}N^{-1}y. \end{aligned}$$

Lemma 2.4

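([6]). For any constant symmetric matrix \(M\in \mathcal{R}^{m\times m}\), \(M=M^{T}>0\), \(b>0\), vector function \(z : [0,b]\rightarrow \mathcal{R}^{m}\) such that the integrations concerned are well defined, one has

$$\begin{aligned} b \int _{0}^{b} z^{T}(s)Mz(s) \,ds \geq \biggl( \int _{0}^{b} z(s) \,ds \biggr)^{T} M \biggl( \int _{0}^{b} z(s) \,ds \biggr). \end{aligned}$$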
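The standard Jensen integral inequality, \(b\int _{0}^{b} z^{T}(s)Mz(s)\,ds \geq (\int _{0}^{b} z(s)\,ds)^{T} M (\int _{0}^{b} z(s)\,ds)\), which is assumed here to be the form this lemma refers to, can be checked numerically in the scalar case. The sketch uses midpoint sums; the function name, the test functions and the number of steps are illustrative choices.

```python
import math


def jensen_gap(z, b, m, steps=1000):
    """b * int_0^b m*z(s)^2 ds - m * (int_0^b z(s) ds)^2 via midpoint sums.

    z is a scalar function on [0, b] and m > 0 plays the role of the matrix M.
    A non-negative value is what Jensen's inequality predicts.
    """
    ds = b / steps
    mids = [(k + 0.5) * ds for k in range(steps)]
    quadratic = sum(m * z(s) ** 2 for s in mids) * ds
    linear = sum(z(s) for s in mids) * ds
    return b * quadratic - m * linear ** 2


gap_sin = jensen_gap(math.sin, b=2.0, m=3.0)           # strictly positive
gap_const = jensen_gap(lambda s: 1.0, b=2.0, m=3.0)    # equality case
```

The constant function gives a gap of zero up to rounding, which reflects that the inequality is tight only when \(z\) does not vary over the interval; this is the source of conservatism that sharper integral inequalities aim to reduce.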

Lemma 2.5

([9])

For a positive definite matrix \(S>0\) and any continuously differentiable function \(x:[a,b]\to {\mathcal{R}^{n}}\) the following inequalities hold:

where

Lemma 2.6

([6], Schur complement lemma)

Given constant matrices X, Y, Z with appropriate dimensions satisfying \(X=X^{T}\), \(Y=Y ^{T}>0\), one has \(X+Z^{T}Y^{-1}Z<0\) if and only if

$$\begin{aligned} \begin{pmatrix} X & Z^{T} \\ Z & -Y \end{pmatrix} < 0. \end{aligned}$$
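The Schur complement equivalence can be verified by hand in the scalar case, where \(x+z^{2}/y<0\) with \(y>0\) should hold exactly when the \(2\times 2\) matrix with rows \((x, z)\) and \((z, -y)\) is negative definite. The sketch below compares both tests on random samples; the sampling ranges, the seed and the function names are assumptions of this illustration.

```python
import math
import random


def max_eig_sym2(a, b, d):
    """Largest eigenvalue of the symmetric matrix [[a, b], [b, d]]."""
    return 0.5 * (a + d) + math.sqrt((0.5 * (a - d)) ** 2 + b * b)


def schur_condition(x, y, z):
    """Scalar version of X + Z^T Y^{-1} Z < 0 (requires y > 0)."""
    return x + z * z / y < 0.0


def block_condition(x, y, z):
    """Negative definiteness of [[x, z], [z, -y]]."""
    return max_eig_sym2(x, z, -y) < 0.0


def agree_on_random_samples(count=1000, seed=1):
    """True if both tests give the same verdict on every random sample."""
    rng = random.Random(seed)
    for _ in range(count):
        x = rng.uniform(-3.0, 3.0)
        z = rng.uniform(-3.0, 3.0)
        y = rng.uniform(0.1, 3.0)
        if schur_condition(x, y, z) != block_condition(x, y, z):
            return False
    return True
```

The practical point is that the block form is linear in the unknowns, which is what allows a nonlinear matrix condition to be passed to an LMI solver.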

Remark 2

The condition in Definition 2.2 includes \(H_{\infty }\) performance index γ and passivity performance index γ. If \(\sigma =1\), then the condition will reduce to the \(H_{\infty }\) performance index γ and if \(\sigma =0\), then the condition will reduce to the passivity performance index γ. The condition corresponds to mixed \(H_{\infty }\) and passivity performance index γ for σ in \((0,1)\).
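As a worked reduction, and using \(\gamma >0\) as in Definition 2.2, substituting the two extreme values of σ into (9) gives

$$\begin{aligned} \sigma =1:\quad & \int _{0}^{\mathcal{T}_{p}} \widetilde{y}^{T}(t)\widetilde{y}(t) \,dt \leq \gamma ^{2} \int _{0}^{\mathcal{T}_{p}} \omega ^{T}(t)\omega (t) \,dt, \\ \sigma =0:\quad & 2 \int _{0}^{\mathcal{T}_{p}} \widetilde{y}^{T}(t)\omega (t) \,dt \geq -\gamma \int _{0}^{\mathcal{T}_{p}} \omega ^{T}(t)\omega (t) \,dt. \end{aligned}$$

The first line is obtained by multiplying (9) by \(-1\), and the second by dividing (9) by γ. They are the familiar bounded-gain and passivity inequalities, respectively.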

3 Main results

In this section, we present a control scheme to synchronize the NNs (1) to the homogeneous trajectory (3). Then we will give some sufficient conditions in the EFPS of NNs with mixed time-varying delays and hybrid coupling. To simplify the representation, we introduce some notations as follows:

where \(z_{i}\in \mathcal{R}^{n\times 14n}\) is defined as \(z_{i}=[0_{n \times (i-1)n}, I_{n}, 0_{n\times (14-i)n}]\) for \(i=1,2,\ldots,14\).
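The block selector \(z_{i}=[0_{n \times (i-1)n}, I_{n}, 0_{n\times (14-i)n}]\) simply picks the ith block of length n out of a stacked vector with 14 blocks. A minimal sketch, with hypothetical helper names and an arbitrary stacked vector chosen for illustration:

```python
def selector(i, n, blocks=14):
    """Rows of z_i = [0_{n x (i-1)n}, I_n, 0_{n x (blocks-i)n}], with 1 <= i <= blocks."""
    if not 1 <= i <= blocks:
        raise ValueError("block index out of range")
    return [
        [1.0 if col == (i - 1) * n + row else 0.0 for col in range(blocks * n)]
        for row in range(n)
    ]


def matvec(m, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


n = 2
xi = [float(k) for k in range(14 * n)]    # stacked vector with 14 blocks of size 2
third_block = matvec(selector(3, n), xi)  # entries 4 and 5 of xi
```

Writing each term of the Lyapunov derivative as a quadratic form in the stacked vector, with these selectors on either side, is what lets all terms be collected into a single matrix inequality.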

Theorem 3.1

Given constants \(\tau _{1}\), \(\tau _{2}\), \(\bar{\tau }_{1}\), h, γ and \(\sigma \in [0, 1]\), if there exist positive definite matrices \(P\in \mathcal{R}^{4n \times 4n}\), \(Q_{0}\), \(Q_{i}\), \(S_{0}\), \(S_{i},R_{i}\in \mathcal{R}^{n \times n}\) (\(i = 1,2,3\)), positive constants \(\varepsilon _{i}\) \((i = 1,2,\ldots,6)\), and real matrices \({T_{1}}\), \({T_{2}}\) with appropriate dimensions such that

where

with

then the error system (8) is EFPS and meets a predefined \(\mathcal{H}_{\infty }\)/passive performance index γ.

Proof

We consider a candidate Lyapunov–Krasovskii functional:

where

The time derivatives of \(V(t)\) along the trajectories of the error system (8) can be calculated as

where \(\varPi _{2}\) is defined in (11). Applying Lemma 2.4 and Lemma 2.5, it can be shown that

where \(\varPi _{i}\), \(i = 1,3,4,5\), are defined in (11).

Based on the error system (8), given any matrices \(T_{1}\) and \(T_{2}\) with appropriate dimensions, it is true that

Applying Lemma 2.3 and Lemma 2.4, we have

Then, from (14), (24) and (25)–(31), we obtain

where \(\varPi _{6}\) and \(\varPi _{7}\) are defined in (11). Applying the Schur complement of Lemma 2.6, and defining \(\varOmega (t) = \sigma \widetilde{y}^{T}(t)\widetilde{y}(t) -2(1-\sigma )\gamma \widetilde{y}^{T}(t)\omega (t) -\gamma ^{2} \omega ^{T}(t)\omega (t)\), we have

where ϒ is defined in (10). If we have \(\varUpsilon <0\), then

Thus, under the zero initial condition, it can be inferred that for any \(\mathcal{T}_{p}\)

which indicates that

In this case, the condition (9) is ensured for any non-zero \(\omega (t)\in \mathcal{L}_{2}[0,\infty )\). If \(\omega (t)=0\), in view of (33), there exists a scalar δ such that

We are now ready to deal with the EFPS of error system (8). Consider the Lyapunov–Krasovskii functional \(e^{2\alpha t}V(t)\), where α is a constant. By (34), we have

where

From now on, we take α to be a constant satisfying \(\alpha \leq \frac{\delta }{2\mathcal{M}}\), and then obtain from (35)

which, together with (12) and (36), implies that

and therefore

Noticing \(\lambda _{\min } (P) \Vert z(t) \Vert ^{2} \leq V(t)\), we obtain

Letting \(\mu =\frac{\mathcal{M}}{\lambda _{\min } (P)}\) and \(\varpi =2\alpha \), we can rewrite (38) as

Hence, the error system (8) is EFPS. Thus, according to Definition 2.2, the error system (8) is an EFPS with a mixed \(H_{\infty }\) and passivity performance index γ. The proof is completed. □

Based on Theorem 3.1, the pinning sampled-data controller design, ensuring the EFPS of delayed NNs (1), is explained.

Theorem 3.2

Given constants \(\tau _{1}\), \(\tau _{2}\), \(\bar{\tau }_{1}\), h, γ and \(\sigma \in [0, 1]\), if there exist positive definite matrices \(P\in \mathcal{R}^{4n \times 4n}\), \(Q_{0}\), \(Q_{i}\), \(S_{0}\), \(S_{i},R_{i}\in \mathcal{R}^{n \times n}\) (\(i = 1,2,3\)), positive constants \(\varepsilon _{i}\), \(i = 1,2,\ldots,6\), and real matrices Y, Z with appropriate dimensions such that

where

with

then the synchronization error system (8) is exponentially stable and meets a predefined \(\mathcal{H}_{\infty }\)/passive performance index γ. Meanwhile, the designed controller gains are given as follows:

Proof

Denote

then the LMIs (39) can be achieved. This completes the proof. □

Remark 3

In Theorem 3.2, we investigate the EFPS of NNs via mixed control. \(u_{i1}(t)\) is a nonlinear control (not a pinning sampled-data control). Based on the principle of EFPS, \(u_{i1}(t)\) needs to be applied to every node. Based on the principle of pinning sampled-data control, \(u_{i2}(t)\) is a pinning sampled-data control applied only to the first l nodes, \(1 \leq i \leq l\).
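The split between the two control parts can be sketched structurally as follows. This is not the controller (5) itself: the nonlinear part is passed in as a placeholder callable, the sampled-data part is assumed to have the generic feedback form "gain times sampled error", node states are taken as scalars, and all names are hypothetical.

```python
def pinned_control(z_now, z_sampled, gains, num_pinned, nonlinear_term):
    """Return u_i = u_i1 + u_i2 for every node (scalar node errors).

    u_i1 = nonlinear_term(i, z_now[i]) is applied to every node (placeholder).
    u_i2 = gains[i] * z_sampled[i] is applied to the first num_pinned nodes
           only and is zero elsewhere (assumed feedback form).
    """
    inputs = []
    for i, z_i in enumerate(z_now):
        u1 = nonlinear_term(i, z_i)
        u2 = gains[i] * z_sampled[i] if i < num_pinned else 0.0
        inputs.append(u1 + u2)
    return inputs


# Three nodes, the first two pinned; the nonlinear part is switched off here
# so that only the pinning pattern is visible.
u = pinned_control(
    z_now=[1.0, 1.0, 1.0],
    z_sampled=[0.5, 0.5, 0.5],   # errors held since the last sampling instant
    gains=[-2.0, -2.0, -2.0],
    num_pinned=2,
    nonlinear_term=lambda i, z: 0.0,
)
```

Only the pinned nodes receive the sampled feedback, which is the saving in controllers that pinning is meant to provide.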

Remark 4

The advantage of this paper is that, for the first time, hybrid couplings containing constant, discrete and distributed delay couplings are considered in the problem of exponential function projective synchronization of delayed neural networks with mixed \(H_{\infty }\) and passivity performance. So, our conditions are more general than those in [33, 34], where these couplings are not considered. Hence, their conditions cannot be applied to our examples.

Remark 5

A challenging aspect of this work is that, for the first time, the \(H_{\infty }\) control problem and the passive control problem of exponential function projective synchronization for neural networks with hybrid coupling based on appropriate pinning sampled-data control are studied. The Lyapunov–Krasovskii functional \(V(t)\) in (12) makes effective use of the entire information on three kinds of time-varying delays. Moreover, some novel double and triple integral functional terms are constructed, for which Wirtinger-based integral inequalities have been employed to give a much tighter upper bound on the derivative of the Lyapunov–Krasovskii functional and to reduce the conservatism effectively.

4 Numerical examples

Several numerical examples are given to present the feasibility of the proposed method and the effectiveness of the above theoretical results.

Example 4.1

Consider the isolated node with both discrete and distributed delays:

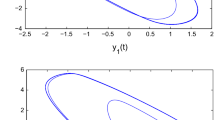

where \(f(s_{i})=\tanh (s_{i}(t))\), \((i=1,2)\), \(\tau _{1}(t)=\frac{{{1}}}{ {1+e^{-t}}}\) and \(\tau _{2}(t)=0.25\sin ^{2}(t)\). Then the trajectory of the isolated node (42) with initial conditions \(s_{1}(r)=0.4 \cos (t)\), \(s_{2}(r)=0.6\cos (t)\), \(\forall r\in [-1, 0]\) is shown in Fig. 1. For mixed \(H_{\infty }/\)passive EFPS of delayed NNs (1), choosing the time-varying scaling function \(\alpha (t)=0.6+0.25\sin (\frac{0.5 \pi }{15}t)\), the coupling strength \(c_{1}=0.5\), \(c_{2}=0.5\), \(c_{3}=0.5\), and the inner-coupling matrices are given by

We consider the directed NNs as shown in Fig. 2. From Fig. 2, the outer-coupling matrices are described by

As presented in Fig. 2, according to the pinned-node selection, nodes 1, 3, 4, 5, and 6 are chosen as the controlled (pinned) nodes. By applying Theorem 3.2, the relation among the parameters h, σ, and γ is shown in Table 1. Moreover, the histogram of the obtained relation is plotted in Fig. 3. Table 2 gives the maximum allowable sampling period h for different values of ϖ. Thus, if we set \(\varpi =0.3\) and \(h=0.5\), then the gain matrices of the designed controllers are obtained as follows:

Furthermore, the EFPS of chaotic behaviour for the isolated node \(\alpha (t)s(t)\) (42) and network \(x_{i}(t)\) (1) with the time-varying scaling function \(\alpha (t)\) is given in Fig. 4. Figure 5 shows the state trajectories of the isolated node \(\alpha (t)s(t)\) (42) and network \(x_{i}(t)\) (1). Figure 6 shows the EFPS errors between the states of the isolated node \(\alpha (t)s(t)\) (42) and network \(x_{i}(t)\) (1) where \(z_{ij}(t)=x_{ij}(t)-\alpha _{j}(t)s _{j}(t)\) for \(i = 1,2,\ldots ,7, j = 1,2\) without pinning sampled-data control (5). Figure 7 shows the EFPS errors between the states of the isolated node \(\alpha (t)s(t)\) (42) and network \(x_{i}(t)\) (1) where \(z_{ij}(t)=x_{ij}(t)- \alpha _{j}(t)s_{j}(t)\) for \(i = 1,2,\ldots ,7, j = 1,2\) with pinning sampled-data control (5).

Figure 1: The trajectory of the isolated node (42)

Example 4.2

Consider the isolated node with both discrete and distributed delays:

where \(f(s_{i})=\tanh (s_{i}(t))\), \((i=1,2)\), \(\tau _{1}(t)=\frac{{{1}}}{ {1+e^{-t}}}\) and \(\tau _{2}(t)=1.2\sin ^{2}(t)\). Then the trajectory of the isolated node (43) with initial conditions \(s_{1}(r)=0.5 \cos (t)\), \(s_{2}(r)=0.1\cos (t)\), \(\forall r\in [-1.2, 0]\) is shown in Fig. 8. Choosing the time-varying scaling function \(\alpha (t)=0.65+0.2\sin (\frac{ \pi }{15}t)\), the coupling strength \(c_{1}=0.1\), \(c_{2}=0.1\), \(c_{3}=0.1\), and the inner-coupling matrices are given by

We consider the undirected NNs as shown in Fig. 9, and the outer-coupling matrices are described by

As presented in Fig. 9, according to the pinned-node selection, nodes 3, 4, and 6 are chosen as the controlled (pinned) nodes. Table 3 gives the maximum allowable sampling period h for different values of ϖ. Thus, if we set \(\varpi =0.3\) and \(h=0.5\), then the gain matrices of the designed controllers can be obtained. Likewise, if we set \(\varpi =0.5\) and \(h=0.7\), then the gain matrices of the designed controllers are obtained as follows:

Furthermore, the EFPS of chaotic behaviour for the isolated node \(\alpha (t)s(t)\) (43) and network \(x_{i}(t)\) (1) with \(\alpha (t)\) is given in Fig. 10. Figure 11 shows the state trajectories of the isolated node \(\alpha (t)s(t)\) (43) and network \(x_{i}(t)\) (1). Figure 12 shows the EFPS errors between the states of the isolated node \(\alpha (t)s(t)\) (43) and network \(x_{i}(t)\) (1) where \(z_{ij}(t)=x_{ij}(t)-\alpha _{j}(t)s_{j}(t)\) for \(i = 1,2,\ldots ,6, j = 1,2\) without pinning sampled-data control (5). Figure 13 shows the EFPS errors between the states of the isolated node \(\alpha (t)s(t)\) (43) and network \(x_{i}(t)\) (1) where \(z_{ij}(t)=x_{ij}(t)-\alpha _{j}(t)s _{j}(t)\) for \(i = 1,2,\ldots ,6, j = 1,2\) with pinning sampled-data control (5).

Figure 8: The trajectory of the isolated node (43)

Remark 6

The networks in both examples of our study differ from those in the literature [21, 32, 39]. In [21], the FPS of the network is achieved under a pinning feedback controller design, but the network concerned is undirected. In [39], the conditions for pinning synchronization are suitable for directed networks. In this paper, the pinning synchronization is suitable for both directed and undirected networks. So, the considered networks are more general.

Remark 7

Sampled-data control is worth focusing on and has attracted much attention recently [30,31,32,33,34]. In a sampled-data implementation, an important issue is to reduce the data transmission load when using a sampled-data controller to achieve stability, since the computation and communication resources are often limited. It is therefore interesting to extend this method to NN systems with event-triggered sampling control, in which the control packet can be lost due to several factors, for instance communication interference or congestion, or in which the transmission event is not triggered and the controller is not updated except when its magnitude reaches the prescribed threshold. Hence, it is necessary to design an event-triggered sampling control for NN systems, which can effectively save communication bandwidth by sending only the necessary sampling signals through the network; see [42, 43]. Nevertheless, a sampled-data controller in digital form, which uses only the sampled information of the system at its sampling instants, offers important benefits: low cost, reliability, easy installation and handiness in real-world problems.
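The bandwidth saving of event-triggered sampling can be illustrated with a very simple rule: a sample is transmitted only when it deviates from the last transmitted value by more than a threshold. This rule, the function name and the sample values below are assumptions of this sketch and are not taken from [42, 43].

```python
def event_triggered_updates(samples, threshold):
    """Indices of the samples that are actually transmitted.

    The first sample is always sent. A later sample is sent only if it
    differs from the last transmitted value by more than the threshold.
    """
    if not samples:
        return []
    sent = [0]
    last_sent = samples[0]
    for k in range(1, len(samples)):
        if abs(samples[k] - last_sent) > threshold:
            sent.append(k)
            last_sent = samples[k]
    return sent


samples = [0.0, 0.05, 0.2, 0.22, 0.5, 0.49]  # illustrative sampled errors
sent = event_triggered_updates(samples, threshold=0.1)
```

Here three of the six samples are transmitted, whereas a periodic sampled-data scheme would send all six.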

5 Conclusions

In this paper, mixed \(H_{\infty }\)/passive EFPS of NNs with time-varying delays and hybrid coupling is investigated. We have used nonlinear and pinning sampled-data controls. Some sufficient conditions were derived to guarantee the EFPS by using the Lyapunov–Krasovskii functional method. By manipulating the scaling functions, the drive system and response systems can be synchronized up to the desired scaling functions based on the pinning sampled-data control technique. Furthermore, numerical examples are given to illustrate the effectiveness of the proposed theoretical results.

References

Cichocki, A., Unbehauen, R.: Neural Networks for Optimization and Signal Processing. Wiley, Hoboken (1993)

Cao, J., Wang, J.: Global asymptotic stability of a general class of recurrent neural networks with time-varying delays. IEEE Trans. Circuits Syst. I 50, 34–44 (2003)

Wang, J., Xu, Z.: New study on neural networks: the essential order of approximation. Neural Netw. 23, 618–624 (2010)

Shen, H., Huo, S., Cao, J., Huang, T.: Generalized state estimation for Markovian coupled networks under round-robin protocol and redundant channels. IEEE Trans. Cybern. 49, 1292–1301 (2019)

Alimi, A.M., Aouiti, C., Cherif, F., Dridi, F., M’hamdi, M.S.: Dynamics and oscillations of generalized high-order Hopfield neural networks with mixed delays. Neurocomputing 321, 274–295 (2018)

Gu, K., Kharitonov, V.L., Chen, J.: Stability of Time-Delay Systems. Birkhäuser, Boston (2003)

Seuret, A., Gouaisbaut, F.: Wirtinger-based integral inequality: application to time-delay systems. Automatica 49, 2860–2866 (2013)

Maharajan, C., Raja, R., Cao, J., Rajchakit, G.: Fractional delay segments method on time-delayed recurrent neural networks with impulsive and stochastic effects: an exponential stability approach. Neurocomputing 323, 277–298 (2019)

Zhao, N., Lin, C., Chen, B., Wang, Q.G.: A new double integral inequality and application to stability test for time-delay systems. Appl. Math. Lett. 65, 26–31 (2017)

Maharajan, C., Raja, R., Cao, J., Rajchakit, G., Alsaedi, A.: Novel results on passivity and exponential passivity for multiple discrete delayed neutral-type neural networks with leakage and distributed time-delays. Chaos Solitons Fractals 115, 268–282 (2018)

Zhang, X., Fan, X.F., Xue, Y., Wang, Y.T., Cai, W.: Robust exponential passive filtering for uncertain neutral-type neural networks with time-varying mixed delays via Wirtinger-based integral inequality. Int. J. Control. Autom. Syst. 15, 585–594 (2017)

Cao, J., Chen, G., Li, P.: Global synchronization in an array of delayed neural networks with hybrid coupling. IEEE Trans. Syst. Man Cybern., Part B, Cybern. 38, 488–498 (2008)

Huang, B., Zhang, H., Gong, D., Wang, J.: Synchronization analysis for static neural networks with hybrid couplings and time delays. Neurocomputing 148, 288–293 (2015)

Selvaraj, P., Sakthivel, R., Kwon, O.M.: Finite-time synchronization of stochastic coupled neural networks subject to Markovian switching and input saturation. Neural Netw. 105, 154–165 (2018)

Zhang, J., Gao, Y.: Synchronization of coupled neural networks with time-varying delay. Neurocomputing 219, 154–162 (2017)

Yotha, N., Botmart, T., Mukdasai, K., Weera, W.: Improved delay-dependent approach to passivity analysis for uncertain neural networks with discrete interval and distributed time-varying delays. Vietnam J. Math. 45, 721–736 (2017)

Fan, Y.Q., Xing, K.Y., Wang, Y.H., Wang, L.Y.: Projective synchronization adaptive control for different chaotic neural networks with mixed time delays. Optik 127, 2551–2557 (2016)

Yu, J., Hu, C., Jiang, H., Fan, X.: Projective synchronization for fractional neural networks. Neural Netw. 49, 87–95 (2014)

Zhang, W., Cao, J., Wu, R., Alsaedi, A., Alsaadi, F.E.: Projective synchronization of fractional-order delayed neural networks based on the comparison principle. Adv. Differ. Equ. 2018(73), 1 (2018)

Chen, Y., Li, X.: Function projective synchronization between two identical chaotic systems. Int. J. Mod. Phys. C 18, 883–888 (2007)

Shi, L., Zhu, H., Zhong, S., Shi, K., Cheng, J.: Function projective synchronization of complex networks with asymmetric coupling via adaptive and pinning feedback control. ISA Trans. 65, 81–87 (2016)

Niamsup, P., Botmart, T., Weera, W.: Modified function projective synchronization of complex dynamical networks with mixed time-varying and asymmetric coupling delays via new hybrid pinning adaptive control. Adv. Differ. Equ. 2018(435), 1 (2018)

Samidurai, R., Rajavel, S., Zhu, Q., Raja, R., Zhou, H.: Robust passivity analysis for neutral-type neural networks with mixed and leakage delays. Neurocomputing 175, 635–643 (2016)

Shen, H., Xing, M., Huo, S., Wu, Z.G., Park, J.H.: Finite-time \(H_{\infty }\) asynchronous state estimation for discrete-time fuzzy Markov jump neural networks with uncertain measurements. Fuzzy Sets Syst. 356, 113–128 (2019)

Raja, R., Zhu, Q., Samidurai, R., Senthilraj, S., Hu, W.: Improved results on delay-dependent \(H_{\infty }\) control for uncertain systems with time-varying delays. Circuits Syst. Signal Process. 36, 1836–1859 (2017)

Song, S., Song, X., Balsera, I.T.: Mixed \(H_{\infty }\)/passive projective synchronization for nonidentical uncertain fractional-order neural networks based on adaptive sliding mode control. Neural Process. Lett. 47, 443–462 (2018)

Mathiyalagan, K., Park, J.H., Sakthivel, R., Anthoni, S.M.: Robust mixed \(H_{\infty }\) and passive filtering for networked Markov jump systems with impulses. Signal Process. 101, 162–173 (2014)

Wu, Z.G., Park, J.H., Su, H., Song, B., Chu, J.: Mixed \(H_{\infty }\) and passive filtering for singular systems with time delays. Signal Process. 93, 1705–1711 (2013)

Selvaraj, P., Sakthivel, R., Marshal Anthoni, S., Mo, Y.C.: Dissipative sampled-data control of uncertain nonlinear systems with time-varying delays. Complexity 21, 142–154 (2015)

Kumar, S.V., Anthoni, S.M., Raja, R.: Dissipative analysis for aircraft flight control systems with randomly occurring uncertainties via non-fragile sampled-data control. Math. Comput. Simul. 155, 217–226 (2019)

Ma, C., Qiao, H., Kang, E.: Mixed \(H_{\infty }\) and passive depth control for autonomous underwater vehicles with fuzzy memorized sampled-data controller. Int. J. Fuzzy Syst. 20, 621–629 (2018)

Rakkiyappan, R., Sakthivel, N.: Pinning sampled-data control for synchronization of complex networks with probabilistic time-varying delays using quadratic convex approach. Neurocomputing 162, 26–40 (2015)

Wang, J., Su, L., Shen, H., Wu, Z.G., Park, J.H.: Mixed \(H_{\infty }\)/passive sampled-data synchronization control of complex dynamical networks with distributed coupling delay. J. Franklin Inst. 354, 1302–1320 (2017)

Su, L., Shen, H.: Mixed \(H_{\infty }\)/passive synchronization for complex dynamical networks with sampled-data control. Appl. Math. Comput. 259, 931–942 (2015)

Song, Q., Cao, J.: On pinning synchronization of directed and undirected complex dynamical networks. IEEE Trans. Circuits Syst. I, Regul. Pap. 57, 672–680 (2010)

Song, Q., Cao, J., Liu, F.: Pinning synchronization of linearly coupled delayed neural networks. Math. Comput. Simul. 86, 39–51 (2012)

Zhen, C., Cao, J.: Robust synchronization of coupled neural networks with mixed delays and uncertain parameters by intermittent pinning control. Neurocomputing 141, 153–159 (2014)

Gong, D., Lewis, F.L., Wang, L., Xu, K.: Synchronization for an array of neural networks with hybrid coupling by a novel pinning control strategy. Neural Netw. 77, 41–51 (2016)

Wen, G., Yu, W., Hu, G., Cao, J., Yu, X.: Pinning synchronization of directed networks with switching topologies: a multiple Lyapunov functions approach. IEEE Trans. Neural Netw. Learn. Syst. 26, 3239–3250 (2015)

Zeng, D., Zhang, R., Liu, X., Zhong, S., Shi, K.: Pinning stochastic sampled-data control for exponential synchronization of directed complex dynamical networks with sampled-data communications. Neurocomputing 337, 102–118 (2018)

Rakkiyappan, R., Latha, V.P., Sivaranjani, K.: Exponential \(H_{\infty }\) synchronization of Lur’e complex dynamical networks using pinning sampled-data control. Circuits Syst. Signal Process. 36, 3958–3982 (2017)

Wang, J., Chen, M., Shen, H., Park, J.H., Wu, Z.G.: A Markov jump model approach to reliable event-triggered retarded dynamic output feedback control for networked systems. Nonlinear Anal. Hybrid Syst. 26, 137–150 (2017)

Shen, M., Park, J.H., Fei, S.: Event-triggered nonfragile filtering of Markov jump systems with imperfect transmissions. Signal Process. 149, 204–213 (2018)

Funding

The first author was financially supported by the National Research Council of Thailand and Khon Kaen University 2019. The second author was supported by Rajamangala University of Technology Isan and the Thailand Research Fund (TRF), the Office of the Higher Education Commission (OHEC) (grant number MRG6180255). The third author was financially supported by Chiang Mai University. The fourth author was financially supported by University of Phayao. The fifth author was financially supported by Khon Kaen University.

Author information

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Botmart, T., Yotha, N., Niamsup, P. et al. Mixed \(H_{\infty }\)/passive exponential function projective synchronization of delayed neural networks with hybrid coupling based on pinning sampled-data control. Adv Differ Equ 2019, 383 (2019). https://doi.org/10.1186/s13662-019-2286-y

DOI: https://doi.org/10.1186/s13662-019-2286-y