Abstract

A class of stochastic Runge–Kutta–Nyström (SRKN) methods for the strong approximation of second-order stochastic differential equations (SDEs) is proposed. Conditions for strong global convergence of order 1.0 are given. Symplectic conditions for SRKN methods applied to second-order stochastic Hamiltonian systems with multiplicative noise are derived. It is also proved that stochastic symplectic Runge–Kutta–Nyström (SSRKN) methods conserve the quadratic invariants of the underlying SDEs. Some low-stage SSRKN methods with strong global order 1.0 are constructed from the order and symplectic conditions. The methods are then applied in three numerical experiments, which verify the theoretical analysis and show the efficiency of the SSRKN methods in long-time simulations.

1 Introduction

Stochastic differential equations (SDEs) are widely used to model dynamical phenomena subject to random perturbations in fields such as biology, economics, physics and finance (see, e.g., [1,2,3]). Since explicit solutions of SDEs are rarely available, the construction of efficient numerical methods is of great significance. Many effective and reliable numerical methods for SDEs have been designed in recent years; see, for example, [4,5,6,7,8,9,10,11,12,13,14].

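For SDEs with special structure, general-purpose numerical methods may be inappropriate, especially when geometric structure must be preserved over long times, as for the stochastic Hamiltonian systems

\[
\begin{aligned}
dp &= -\frac{\partial H(p,q)}{\partial q}\,dt-\frac{\partial \widetilde{H}(p,q)}{\partial q}\circ dB(t), && p(0)=p_{0},\\
dq &= \frac{\partial H(p,q)}{\partial p}\,dt+\frac{\partial \widetilde{H}(p,q)}{\partial p}\circ dB(t), && q(0)=q_{0},
\end{aligned}
\tag{1.1}
\]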

which are given in the Stratonovich sense, where \(H(p,q)\) and \(\widetilde{H}(p,q)\) are two sufficiently smooth functions and \(B(t)\) is a standard one-dimensional Brownian motion defined on a complete probability space \((\varOmega,\mathcal{F},\mathbb{P})\) with a filtration \(\{\mathcal{F}_{t}\}_{t\in [0,T]}\) satisfying the usual conditions. The solution of (1.1) is almost surely a phase flow; its properties are studied in detail in [15]. Consider the differential two-form

\[
dp\wedge dq=\sum_{i=1}^{d}dp^{i}\wedge dq^{i}. \tag{1.2}
\]

The phase flow of (1.1) preserves the symplectic structure [16], i.e.,

\[
dp(t)\wedge dq(t)=dp_{0}\wedge dq_{0}\quad \text{for all } t\in[0,T], \text{ almost surely}. \tag{1.3}
\]

Motivated by this, it is natural to seek numerical methods that inherit this property. A numerical method with approximations \((P_{n},Q_{n})\) is called symplectic if

\[
dP_{n+1}\wedge dQ_{n+1}=dP_{n}\wedge dQ_{n}. \tag{1.4}
\]

Second-order stochastic Hamiltonian systems driven by Gaussian noise are significant in scientific applications [17]; they describe classical Hamiltonian systems perturbed by random forces. In [18], the authors construct stochastic symplectic Runge–Kutta methods of mean-square order 2.0 for second-order stochastic Hamiltonian systems with additive noise by means of colored rooted tree theory. In recent years, considerable work has been devoted to stochastic symplectic Runge–Kutta methods and stochastic symplectic partitioned Runge–Kutta methods for stochastic Hamiltonian systems [19, 20]; these can be viewed as stochastic generalizations of deterministic Runge–Kutta methods. Given the importance of deterministic Runge–Kutta–Nyström (RKN) methods for second-order ordinary differential equations, this paper extends RKN methods to d-dimensional second-order SDEs with multiplicative noise

\[
\ddot{y}(t)=f(y(t))+g(y(t))\circ\xi(t),\qquad y(0)=y_{0},\quad \dot{y}(0)=z_{0}, \tag{1.5}
\]

where \(\xi (t)\) is a scalar white noise process and \(f, g: \mathbb{R}^{d}\to \mathbb{R}^{d}\) are Borel measurable. Throughout this work, the notation “∘” indicates that (1.5) is interpreted in the Stratonovich sense.
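As a brief worked check (our addition, assuming g is differentiable), write (1.5) as the first-order system for \(X=(y,z)\) with \(z=\dot{y}\), as is done in Sect. 2, with drift \((z,f(y))\) and noise coefficient \(b(y,z)=(0,g(y))\). The Stratonovich-to-Itô drift correction is \(\frac{1}{2}(\partial b/\partial X)\,b\), and here it vanishes because b does not depend on z and has no component in the y-equation:

\[
\frac{\partial b}{\partial X}\,b=\begin{pmatrix}0&0\\ \partial g/\partial y&0\end{pmatrix}\begin{pmatrix}0\\ g(y)\end{pmatrix}=\begin{pmatrix}0\\ 0\end{pmatrix},
\]

where the blocks are \(d\times d\). Hence the Itô and Stratonovich forms of (1.5) have the same drift, consistent with the remark on additive noise in Sect. 6.1.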

The remainder of this paper is organized as follows. Section 2 derives the order conditions of stochastic Runge–Kutta–Nyström (SRKN) methods. In Sect. 3, the SRKN methods are applied to second-order stochastic Hamiltonian systems with multiplicative noise, and their symplectic conditions are derived. Section 4 studies the conservation of quadratic invariants of the underlying SDEs under SRKN discretization. In Sect. 5, as an application of the main results, some low-stage stochastic symplectic Runge–Kutta–Nyström (SSRKN) methods with strong global order 1.0 are constructed. Finally, numerical experiments are presented in Sect. 6.

2 The SRKN methods and their order conditions

The second-order SDE (1.5) describes the position of a particle subject to deterministic forcing \(f(y)\) and random forcing \(\xi (t)\) [21]. Introducing the velocity \(z(t)=\dot{y}(t)\), we can write (1.5) as a pair of first-order Stratonovich SDEs for the position \(y(t)\) and the velocity \(z(t)\):

\[
\begin{aligned}
dy(t) &= z(t)\,dt, && y(0)=y_{0},\\
dz(t) &= f(y(t))\,dt+g(y(t))\circ dB(t), && z(0)=z_{0}.
\end{aligned}
\tag{2.1}
\]

Given a step size \(h>0\), a stochastic partitioned Runge–Kutta method [20] is applied to compute the approximation \((Y_{n},Z_{n}) \approx (y(t_{n}),z(t_{n}))\) of (2.1), where \(t_{n}=nh\), by setting \((Y_{0},Z_{0})=(y_{0},z_{0})\) and forming

where \(J_{n}=B(t_{n+1})-B(t_{n})\) are independent \(N(0,h)\)-distributed Gaussian random variables. Inserting the formula for \(z_{i}\) into the others, we get

Then we obtain the following definition:

Definition 2.1

Let \(\gamma _{i}\), \(a_{ij}\), \(b_{ij}\), \(\alpha _{i}\), \(\beta _{i}\), \(\tilde{\alpha }_{i}\) and \(\tilde{\beta }_{i}\) be real coefficients, \(i, j=1, 2, \ldots, s\). An SRKN method for solving (2.1) is given by

It is convenient to display the coefficients occurring in (2.2)–(2.4) in the following form, known as a Butcher tableau:

where

To derive the order conditions of the SRKN methods (2.2)–(2.4), we compare the expansion of the one-step approximation generated by the SRKN methods with the Stratonovich–Taylor expansion of the exact solution of (2.1). For simplicity of notation, we focus on \(d=1\); the extension to the multi-dimensional case (\(d>1\)) is analogous.

By Taylor expansion, one shows

and

Substituting (2.6) and (2.7) into (2.3) and (2.4) yields

and

where \(R_{1}\) and \(R_{2}\) are the remainder terms in (2.8) and (2.9), respectively, with \(|\mathbb{E}R_{1}|=O(h^{2})\), \(\mathbb{E}|R_{1}|^{2}=O(h^{3})\), \(|\mathbb{E}R_{2}|=O(h^{2})\), \(\mathbb{E}|R_{2}|^{2}=O(h^{3})\).

Assuming \((y(t_{n}),z(t_{n}))=(Y_{n},Z_{n})\) and using the Stratonovich–Taylor expansion, we derive

where

with \(|\mathbb{E}R_{3}|=O(h^{2})\) and \(\mathbb{E}|R_{3}|^{2}=O(h^{3})\).

Proceeding as for (2.10), we obtain

with \(|\mathbb{E}R_{4}|=O(h^{2})\) and \(\mathbb{E}|R_{4}|^{2}=O(h^{3})\).

Combining (2.8)–(2.11) with Theorem 1.1 of [22], we obtain the following theorem.

Theorem 2.2

Assume that the coefficients required in the Stratonovich–Taylor expansion of (2.1) are globally Lipschitz and satisfy the linear growth condition, and that

Then the SRKN methods (2.2)–(2.4) converge to the exact solution of (2.1) with strong global order 1.0.
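For orientation, the local-to-global step behind Theorem 2.2 can be summarized as follows. This is our paraphrase of the mean-square convergence theorem of [22], specialized to order 1.0, with the constants and the growth factors in the initial value suppressed. If the one-step error \(\rho\) of a method, started from the exact value at \(t_{n}\), satisfies

\[
|\mathbb{E}\rho|=O(h^{2}),\qquad \bigl(\mathbb{E}|\rho|^{2}\bigr)^{1/2}=O(h^{3/2}),
\]

then, for \(Nh=T\),

\[
\max_{0\le n\le N}\Bigl(\mathbb{E}\bigl|(y(t_{n}),z(t_{n}))-(Y_{n},Z_{n})\bigr|^{2}\Bigr)^{1/2}=O(h).
\]

These exponents correspond to the remainder bounds \(|\mathbb{E}R_{k}|=O(h^{2})\) and \(\mathbb{E}|R_{k}|^{2}=O(h^{3})\) stated for \(R_{1},\ldots,R_{4}\) in (2.8)–(2.11): one order is lost in passing from local to global error.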

3 Symplectic conditions of the SRKN methods

In this section, we assume that there exist two sufficiently smooth real-valued functions \(V(y)\) and \(\widetilde{H}(y)\) such that \(\partial V(y)/\partial y=f(y)\) and \(\partial \widetilde{H}(y)/\partial y=-g(y)\). Then (2.1) is a stochastic Hamiltonian system determined by the two sufficiently smooth real-valued functions \(H(y,z)=z^{\mathrm{T}}z/2-V(y)\) and \(\widetilde{H}(y)\) on the phase space \(\mathbb{R}^{2d}\). Here, \(y_{0}\), \(z_{0}\), y, z, \(( \partial H(y,z)/\partial y )^{\mathrm{T}}\), \((\partial H(y,z)/ \partial z )^{\mathrm{T}}\), \((\partial \widetilde{H}(y)/\partial y )^{\mathrm{T}}\) are d-dimensional column vectors with components \(y^{i}_{0}\), \(z^{i}_{0}\), \(y^{i}\), \(z^{i}\), \(\partial H(y,z)/\partial y^{i}\), \(\partial H(y,z)/\partial z^{i}\), \(\partial \widetilde{H}(y)/ \partial y^{i}\), \(i=1, 2, \ldots, d\). Let \(T=+\infty \). As for (1.1), the phase flow of (2.1) preserves the symplectic structure, i.e.,

\[
\sum_{i=1}^{d}dy^{i}(t)\wedge dz^{i}(t)=\sum_{i=1}^{d}dy^{i}_{0}\wedge dz^{i}_{0}.
\]

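It is therefore natural to require that the SRKN method (2.2)–(2.4) inherit this property:

\[
\sum_{i=1}^{d}dY^{i}_{n+1}\wedge dZ^{i}_{n+1}=\sum_{i=1}^{d}dY^{i}_{n}\wedge dZ^{i}_{n}.
\]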

Now we discuss the symplectic property of the SRKN method (2.2)–(2.4).

Theorem 3.1

Assume that the coefficients \(\gamma _{i}\), \(a_{ij}\), \(b_{ij}\), \(\alpha _{i}\), \(\beta _{i}\), \(\tilde{\alpha }_{i}\) and \(\tilde{\beta } _{i}\) of (2.2)–(2.4) satisfy the conditions

Then the SRKN method (2.2)–(2.4) is symplectic when applied to solve stochastic Hamiltonian systems (2.1) with \(H(y,z)\) and \(\widetilde{H}(y)\).

Proof

We use the shorthand \(f_{i}=f(y_{i})\), \(g_{i}=g(y_{i})\). Differentiating (2.3) and (2.4), we obtain

Then, by a straightforward computation, we have

For \(i=1, 2, \ldots, s\), differentiating (2.2) gives

Note that for any \(i=1, 2, \ldots, s\)

Substituting (3.5) into (3.3) yields

Since \(H(y,z)\) is sufficiently smooth, the Jacobian \(\partial f/\partial y=\partial^{2}V/\partial y^{2}\) is symmetric, and we have

It is easy to check that

Arguing as for (3.7), we deduce that

Inserting (3.1), (3.7) and (3.8) into (3.6), we see that

The proof is complete. □
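For \(d=1\) the two-form \(dY\wedge dZ\) is an oriented area, so a one-step map is symplectic exactly when the determinant of its Jacobian with respect to \((Y_{n},Z_{n})\) equals 1 for every fixed increment \(J_{n}\). The sketch below checks this numerically with central finite differences. Because the coefficients of (2.2)–(2.4) are not reproduced in this text, it does not use an SRKN method from this paper; it uses two hypothetical stand-in maps for (2.1): the Euler–Maruyama step and an explicit "stochastic symplectic Euler" step (update Z first, then Y with the new Z). The test problem \(f(y)=-\sin y\), \(g(y)=-\sigma\cos y\) (the synchrotron system of Sect. 6.2, with y playing the role of q), \(\sigma=0.2\), and the values of h and \(J_{n}\) are illustrative assumptions; all function names are ours.

```python
import numpy as np

SIGMA = 0.2  # assumed noise intensity


def f(y):
    # deterministic force f = dV/dy with V(y) = cos(y)
    return -np.sin(y)


def g(y):
    # noise coefficient g = -dH~/dy with H~(y) = SIGMA * sin(y)
    return -SIGMA * np.cos(y)


def em_step(y, z, h, J):
    # Euler–Maruyama: both updates use the old values (not symplectic)
    return y + h * z, z + h * f(y) + J * g(y)


def symplectic_euler_step(y, z, h, J):
    # hypothetical stand-in: velocity first, then position with the new velocity
    z_new = z + h * f(y) + J * g(y)
    return y + h * z_new, z_new


def jacobian_det(step, y, z, h, J, eps=1e-6):
    # central finite differences of (y, z) -> (Y, Z) for a fixed increment J
    cols = []
    for dy, dz in ((eps, 0.0), (0.0, eps)):
        plus = np.array(step(y + dy, z + dz, h, J))
        minus = np.array(step(y - dy, z - dz, h, J))
        cols.append((plus - minus) / (2 * eps))
    return np.linalg.det(np.column_stack(cols))


h, J = 0.1, -0.3  # illustrative step size and Brownian increment
print(jacobian_det(symplectic_euler_step, 1.0, 1.0, h, J))  # close to 1
print(jacobian_det(em_step, 1.0, 1.0, h, J))  # 1 - h*(h f'(1) + J g'(1)), about 1.0105
```

For the stand-in step the Jacobian is \(\begin{pmatrix}1+ha&h\\ a&1\end{pmatrix}\) with \(a=hf'(Y_{n})+J_{n}g'(Y_{n})\), whose determinant is exactly 1; for Euler–Maruyama it is \(1-ha\).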

In Sect. 5, we will give some concrete SRKN methods satisfying condition (3.1) for \(s=1, 2\).

Remark 3.2

If the coefficients of (2.2)–(2.4) satisfy

then the SRKN methods reduce to a deterministic RKN method, and the symplectic conditions (3.1) reduce to the symplectic conditions for deterministic RKN methods [23],

4 The preservation of the quadratic invariants

Quadratic invariants often appear in applications, for example, the conservation of angular momentum in N-body systems. It is therefore natural to seek numerical methods that preserve quadratic invariants. Many numerical experiments show that such methods not only produce improved qualitative behavior but also allow more accurate long-time numerical simulation than general-purpose methods. Let \(T=+\infty \).

Theorem 4.1

Let D be a \(d\times d\) skew-symmetric matrix such that

\[
y^{\mathrm{T}}Df(y)=0,\qquad y^{\mathrm{T}}Dg(y)=0
\]

holds for any \(y\in \mathbb{R}^{d}\). Then system (2.1) possesses the quadratic invariant \(Q(y,z)=y^{\mathrm{T}}Dz\), i.e., \(Q(y(t),z(t))=Q(y_{0},z_{0})\).

Proof

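By the Stratonovich chain rule, the skew-symmetry of D (which gives \(z^{\mathrm{T}}Dz=0\)) and the assumption on f and g, we have

\[
dQ(y(t),z(t))=dy^{\mathrm{T}}Dz+y^{\mathrm{T}}D\,dz
=z^{\mathrm{T}}Dz\,dt+y^{\mathrm{T}}Df(y)\,dt+y^{\mathrm{T}}Dg(y)\circ dB(t)=0.
\]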

The proof is complete. □
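As a worked example of Theorem 4.1 (our illustration; the force and noise below are chosen for concreteness), take \(d=2\) and

\[
D=\begin{pmatrix}0&1\\-1&0\end{pmatrix},\qquad Q(y,z)=y^{\mathrm{T}}Dz=y^{1}z^{2}-y^{2}z^{1},
\]

which is the planar angular momentum. For a central force \(f(y)=\varphi(|y|)\,y\) and a noise coefficient of the same type \(g(y)=\psi(|y|)\,y\), where \(\varphi\) and \(\psi\) are hypothetical scalar functions,

\[
y^{\mathrm{T}}Df(y)=\varphi(|y|)\,y^{\mathrm{T}}Dy=0,\qquad y^{\mathrm{T}}Dg(y)=\psi(|y|)\,y^{\mathrm{T}}Dy=0,
\]

because \(y^{\mathrm{T}}Dy=0\) for every skew-symmetric D. Thus the hypotheses of Theorem 4.1 hold and Q is conserved. The Kepler force \(f(y)=-y/|y|^{3}\) is of this form with \(\varphi(r)=-r^{-3}\).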

Theorem 4.2

Let \((Y_{n},Z_{n})\) \((n=1, 2, \ldots )\) be the numerical solution of (2.1) produced by the SRKN method (2.2)–(2.4). Assume that the conditions of Theorem 4.1 are satisfied. If the symplectic conditions (3.1) hold, then the SRKN method preserves the quadratic invariant \(Q(y,z)\) of (2.1), i.e., \(Q(Y_{n+1},Z_{n+1})=Q(Y_{n},Z_{n})\).

Proof

By straightforward calculation, we obtain

Inserting (2.2) into the above equation yields

By the symplectic conditions (3.1),

is derived, which completes the proof. □
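The mechanism behind Theorem 4.2 can be seen in the simplest explicit case. As an illustration only (this one-stage scheme is our stand-in, not one of the methods constructed in Sect. 5), consider the stochastic symplectic Euler step \(Z_{n+1}=Z_{n}+hf(Y_{n})+J_{n}g(Y_{n})\), \(Y_{n+1}=Y_{n}+hZ_{n+1}\). Under the hypotheses of Theorem 4.1,

\[
\begin{aligned}
Q(Y_{n+1},Z_{n+1})&=(Y_{n}+hZ_{n+1})^{\mathrm{T}}DZ_{n+1}=Y_{n}^{\mathrm{T}}DZ_{n+1}+h\,Z_{n+1}^{\mathrm{T}}DZ_{n+1}\\
&=Y_{n}^{\mathrm{T}}DZ_{n}+h\,Y_{n}^{\mathrm{T}}Df(Y_{n})+J_{n}\,Y_{n}^{\mathrm{T}}Dg(Y_{n})=Q(Y_{n},Z_{n}),
\end{aligned}
\]

since \(Z_{n+1}^{\mathrm{T}}DZ_{n+1}=0\) by skew-symmetry and the last two terms vanish by assumption. For the Euler–Maruyama step \(Y_{n+1}=Y_{n}+hZ_{n}\), the same computation leaves the residual \(h^{2}Z_{n}^{\mathrm{T}}Df(Y_{n})+hJ_{n}Z_{n}^{\mathrm{T}}Dg(Y_{n})\), which is nonzero in general.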

5 Some low-stage SSRKN methods

As an application of our main results, both the order conditions (2.12) and symplectic conditions (3.1) are used to construct low-stage SSRKN methods in this section.
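The conditions (2.12) and (3.1) are not reproduced in this text, so as a hedged illustration of how coefficient conditions can be checked mechanically during such a construction, the sketch below tests the deterministic symplectic RKN conditions to which (3.1) reduces (Remark 3.2). It uses the standard deterministic RKN notation, an assumption of ours: \(Y_{i}=y_{n}+c_{i}hz_{n}+h^{2}\sum_{j}a_{ij}f(Y_{j})\), \(y_{n+1}=y_{n}+hz_{n}+h^{2}\sum_{i}\bar{b}_{i}f(Y_{i})\), \(z_{n+1}=z_{n}+h\sum_{i}b_{i}f(Y_{i})\), with symplectic conditions \(\bar{b}_{i}=b_{i}(1-c_{i})\) and \(b_{i}(\bar{b}_{j}-a_{ij})=b_{j}(\bar{b}_{i}-a_{ji})\) [23]. Its correspondence with the coefficients \(\gamma_{i}\), \(a_{ij}\), \(b_{ij}\), \(\alpha_{i}\), \(\beta_{i}\), \(\tilde{\alpha}_{i}\), \(\tilde{\beta}_{i}\) of (2.2)–(2.4) is not spelled out here, and the function name is hypothetical.

```python
import numpy as np


def is_symplectic_rkn(c, A, b, bbar, tol=1e-12):
    """Check the deterministic symplectic RKN conditions
    bbar_i = b_i (1 - c_i) and b_i (bbar_j - a_ij) = b_j (bbar_i - a_ji)."""
    c, A, b, bbar = map(np.asarray, (c, A, b, bbar))
    cond1 = np.allclose(bbar, b * (1.0 - c), atol=tol)
    # M[i, j] = b_i (bbar_j - a_ij); the second condition says M is symmetric
    M = b[:, None] * (bbar[None, :] - A)
    cond2 = np.allclose(M, M.T, atol=tol)
    return bool(cond1 and cond2)


# Stormer–Verlet (velocity Verlet) written as an explicit two-stage RKN method
print(is_symplectic_rkn([0.0, 1.0], [[0.0, 0.0], [0.5, 0.0]], [0.5, 0.5], [0.5, 0.0]))  # True
# same stages with a different position weight: violates bbar_i = b_i (1 - c_i)
print(is_symplectic_rkn([0.0, 1.0], [[0.0, 0.0], [0.5, 0.0]], [0.5, 0.5], [0.5, 0.5]))  # False
```

In a construction like the one in this section, such a check would be applied to a parametrized family of tableaux to identify the free parameters, in the same way that \(a_{11}\) and \(\gamma_{1}\) remain free below.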

5.1 One-stage SSRKN methods

Consider one-stage SRKN methods

Substituting the coefficients of (5.1) into (2.12) and (3.1), we obtain a family of one-stage SSRKN methods with strong global order 1.0, given by

where \(a_{11}\) and \(\gamma _{1}\) are free parameters. If we choose \(a_{11}=0.5\) and \(\gamma _{1}=0.5\), then the above SSRKN method reduces to

5.2 Two-stage explicit SSRKN methods

For solving (2.1), a class of two-stage explicit SRKN methods is given by

According to the order conditions (2.12) and Theorem 3.1, a family of two-stage explicit SSRKN methods with strong global order 1.0 is given by

where \(\gamma _{1}\), \(\gamma _{2}\), \(\tilde{\alpha }_{1}\) and \(\tilde{\beta }_{1}\) are free parameters. If we choose \(\gamma _{1}=1/4\), \(\gamma _{2}=3/4\), \(\tilde{\alpha }_{1}=1/2\) and \(\tilde{\beta }_{1}=1/2\), then method (5.4) reduces to

6 Numerical experiments

In this section, numerical examples illustrate the advantages of our symplectic integrators. For comparison with non-symplectic integrators, we use two non-symplectic SRKN methods with strong global order 1.0, given by

and

6.1 Linear stochastic oscillator with additive noise

Here we perform computations for the linear stochastic oscillator with additive noise [19], defined by \(H(y,z)=(y^{2}+z^{2})/2\) and \(\widetilde{H}(y,z)=\sigma y\), where σ is the noise intensity. The corresponding system is

Since (6.3) is an SDE with additive noise, its Itô and Stratonovich forms coincide; we treat it as a Stratonovich SDE. In addition, the second moment of the solution of (6.3) grows linearly in time, i.e.,

\[
\mathbb{E}\bigl(y(t)^{2}+z(t)^{2}\bigr)=y_{0}^{2}+z_{0}^{2}+\sigma^{2}t.
\]

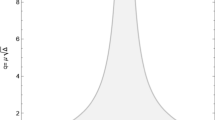

First, we test the convergence of the proposed methods. We take terminal time \(T=1\), \(\sigma =1\), \(z_{0}=0\) and \(y_{0}=1\) in system (6.3), and compute 1000 discretized Brownian paths over [0,1] with step size \(2^{-14}\). For each path, the Euler–Maruyama method, method (6.1), method (5.2) and method (5.5) are applied with five step sizes \(h=2^{-2}, 2^{-3}, 2^{-4}, 2^{-5}, 2^{-6}\). As the reference solution approximating the exact solution of (6.3), we use the Euler–Maruyama method with \(h=2^{-14}\). We present the sample average errors, i.e.,

at the terminal time \(T=1\) in Table 1. Figure 1 shows the results of Table 1 in a log–log plot.
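A scaled-down sketch of this convergence test follows, under several assumptions of ours. It takes (6.3) in the form \(dy=z\,dt\), \(dz=-y\,dt+\sigma\,dB(t)\) (the sign of the noise term does not affect the errors); it measures the error by the sample mean of the Euclidean distance between reference and numerical \((y,z)\) at T, since the error formula is not reproduced here; and it uses 500 paths with reference step \(2^{-12}\) instead of 1000 paths with step \(2^{-14}\) to keep the run short. Because methods (5.2), (5.5) and (6.1) are not reproduced, it compares the Euler–Maruyama method with a hypothetical stochastic symplectic Euler step (velocity first, then position). For this additive-noise problem both should show an estimated strong order close to 1.

```python
import numpy as np


def em_step(y, z, h, dB, sigma):
    # Euler–Maruyama for dy = z dt, dz = -y dt + sigma dB (non-symplectic)
    return y + h * z, z - h * y + sigma * dB


def se_step(y, z, h, dB, sigma):
    # hypothetical stochastic symplectic Euler: velocity first, then position
    z_new = z - h * y + sigma * dB
    return y + h * z_new, z_new


def strong_errors(step, M=500, fine_exp=12, coarse_exps=(2, 3, 4, 5, 6),
                  T=1.0, sigma=1.0, y0=1.0, z0=0.0, seed=0):
    rng = np.random.default_rng(seed)
    n_fine = 2 ** fine_exp
    h_fine = T / n_fine
    dB = rng.normal(0.0, np.sqrt(h_fine), size=(M, n_fine))  # M fine Brownian paths
    # reference solution: Euler–Maruyama on the fine grid, as in the text
    y_ref, z_ref = np.full(M, y0), np.full(M, z0)
    for k in range(n_fine):
        y_ref, z_ref = em_step(y_ref, z_ref, h_fine, dB[:, k], sigma)
    hs, errs = [], []
    for e in coarse_exps:
        n = 2 ** e
        # coarse increments are sums of fine ones, so both grids share each path
        dB_c = dB.reshape(M, n, n_fine // n).sum(axis=2)
        y, z = np.full(M, y0), np.full(M, z0)
        for k in range(n):
            y, z = step(y, z, T / n, dB_c[:, k], sigma)
        hs.append(T / n)
        # assumed error measure: sample mean of the Euclidean error in (y, z)
        errs.append(np.mean(np.hypot(y - y_ref, z - z_ref)))
    return np.array(hs), np.array(errs)


for name, step in (("Euler-Maruyama", em_step), ("symplectic Euler", se_step)):
    hs, errs = strong_errors(step)
    order = np.polyfit(np.log(hs), np.log(errs), 1)[0]  # slope of the log-log fit
    print(f"{name}: estimated strong order {order:.2f}")
```

Summing fine increments to obtain coarse ones is what makes the comparison pathwise, which is required for a strong (rather than weak) error estimate.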

Next, we test numerically whether the methods preserve the linear growth of the second moment. The second moment \(\mathbb{E}(Y^{2}_{n}+Z^{2}_{n})\) of the numerical solution is approximated by the sample average over 1000 trajectories, i.e.,

Figure 2 shows the second moment of the numerical solutions produced by the Euler–Maruyama method, method (6.1), method (5.2) and method (5.5) with fixed step size \(h=0.1\), \(\sigma =1\) and \(T=500\); the reference line has slope 1.
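The second-moment test can be sketched in the same setting, again assuming (6.3) has the form \(dy=z\,dt\), \(dz=-y\,dt+\sigma\,dB(t)\) and using the Euler–Maruyama step and a hypothetical stochastic symplectic Euler step as stand-ins for the methods of the paper. For the exact solution, Itô's formula gives \(\mathbb{E}(y^{2}+z^{2})=y_{0}^{2}+z_{0}^{2}+\sigma^{2}t\). For the Euler–Maruyama step, \(\mathbb{E}(Y^{2}_{n+1}+Z^{2}_{n+1})=(1+h^{2})\,\mathbb{E}(Y^{2}_{n}+Z^{2}_{n})+\sigma^{2}h\), which grows exponentially, whereas the symplectic stand-in stays close to the linear law. The function name and seed are ours.

```python
import numpy as np


def second_moment(method, M=1000, h=0.1, T=500.0, sigma=1.0, y0=1.0, z0=0.0, seed=1):
    rng = np.random.default_rng(seed)
    y = np.full(M, y0)
    z = np.full(M, z0)
    for _ in range(int(round(T / h))):
        dB = rng.normal(0.0, np.sqrt(h), size=M)
        if method == "em":
            # Euler–Maruyama: the right-hand side uses the old y and z
            y, z = y + h * z, z - h * y + sigma * dB
        else:
            # hypothetical stochastic symplectic Euler: z first, then y with new z
            z = z - h * y + sigma * dB
            y = y + h * z
    return np.mean(y**2 + z**2)  # sample average over M paths at time T


exact = 1.0 + 1.0**2 * 500.0  # y0^2 + z0^2 + sigma^2 T
print(second_moment("symplectic") / exact)  # close to 1
print(second_moment("em"))  # grows roughly like (1 + h^2)^(T/h)
```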

Figure 2. Linear growth property of the second moment for Eq. (6.3)

Geometrically, since (6.3) has one degree of freedom, its symplecticity is equivalent to preservation of oriented area in the phase plane. Because the flow of the linear system (6.3) maps triangles to triangles, this means \(S_{n}=S_{0}\), where \(S_{n}\) denotes the area of a triangle in phase space at time \(t_{n}\); that is, \(S_{n}/S_{0}\) remains equal to 1 along the exact flow of (6.3). Let \(\sigma =1\) and let the initial triangle have vertices \((0,0)\), \((1,0)\), \((1,1)\); the Euler–Maruyama method, method (6.1), method (5.2) and method (5.5) are applied to (6.3) with \(h=0.1\) and \(T=100\). The evolution of \(S_{n}/S_{0}\) along the numerical solutions is shown in Fig. 3, and the corresponding triangles are displayed in Fig. 4. Figures 3 and 4 show that methods (5.2) and (5.5) preserve the symplecticity of (6.3) exactly, while the Euler–Maruyama method and method (6.1) do not.
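Because (6.3) is linear with additive noise, one step of either stand-in method below is an affine map \(X_{n+1}=AX_{n}+c_{n}\) with the same random shift \(c_{n}\) for all three vertices, so the triangle area is multiplied by \(|\det A|\) at each step: \(\det A=1+h^{2}\) for Euler–Maruyama and \(\det A=1\) for a hypothetical stochastic symplectic Euler step (velocity first, then position). The sketch assumes (6.3) has the form \(dy=z\,dt\), \(dz=-y\,dt+\sigma\,dB(t)\) and uses the vertices, \(h=0.1\), \(T=100\) and \(\sigma=1\) from the text; the function names are ours, and methods (5.2), (5.5) and (6.1) themselves are not reproduced.

```python
import numpy as np


def area(P):
    # P has shape (3, 2): rows are triangle vertices (y, z)
    (y1, z1), (y2, z2), (y3, z3) = P
    return 0.5 * abs((y2 - y1) * (z3 - z1) - (y3 - y1) * (z2 - z1))


def area_ratio(method, h=0.1, T=100.0, sigma=1.0, seed=2):
    rng = np.random.default_rng(seed)
    P = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]])
    S0 = area(P)
    for _ in range(int(round(T / h))):
        dB = rng.normal(0.0, np.sqrt(h))  # one increment shared by all vertices
        y, z = P[:, 0], P[:, 1]
        if method == "em":
            y_new, z_new = y + h * z, z - h * y + sigma * dB
        else:  # hypothetical stochastic symplectic Euler stand-in
            z_new = z - h * y + sigma * dB
            y_new = y + h * z_new
        P = np.column_stack([y_new, z_new])
    return area(P) / S0  # S_n / S_0 at t = T


print(area_ratio("symplectic"))  # stays at 1 up to rounding
print(area_ratio("em"))  # equals (1 + h^2)^(T/h) up to rounding
```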

6.2 Synchrotron oscillations of particles in storage rings

The second-order stochastic Hamiltonian system defined by \(H(p,q)=- \cos (q)+p^{2}/2\) and \(\widetilde{H}(p,q)=\sigma \sin (q)\), where σ is the noise intensity, can be written in the following form [20]:

System (6.4) models synchrotron oscillations of a particle in a storage ring. We treat it as an SDE of Stratonovich type; its phase flow preserves the symplectic structure (1.3). We choose \(\sigma =0.2\), \(p_{0}=1\), \(q_{0}=1\) and \(T=200\). Figure 5 shows a sample phase trajectory of (6.4) computed by the Euler–Maruyama method, method (6.1), method (5.2) and method (5.5), each with fixed step size \(h=0.02\).

Figure 5. Simulation of a sample trajectory of Eq. (6.4)

The plots show that the SSRKN methods (5.2) and (5.5) reproduce the oscillatory behavior of the original stochastic system, whereas the two non-symplectic methods do not.
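A minimal sketch of this experiment follows, with the same caveats as above: it uses the Euler–Maruyama step and a hypothetical stochastic symplectic Euler stand-in (not methods (5.2), (5.5) or (6.1)). With q as position and p as velocity, H and \(\widetilde{H}\) above give, assuming the sign convention of (1.1), \(dq=p\,dt\) and \(dp=-\sin q\,dt-\sigma\cos q\circ dB(t)\). Using the same seed drives both methods with the same Brownian path. Setting \(\sigma=0\) gives a deterministic sanity check: the energy \(p^{2}/2-\cos q\) stays within \(O(h)\) of its initial value for the symplectic step but drifts upward for Euler–Maruyama. The function names and seed are ours.

```python
import numpy as np


def simulate(method, sigma=0.2, p0=1.0, q0=1.0, h=0.02, T=200.0, seed=3):
    rng = np.random.default_rng(seed)
    n = int(round(T / h))
    dB = rng.normal(0.0, np.sqrt(h), size=n)  # same seed -> same path for both methods
    p, q = np.empty(n + 1), np.empty(n + 1)
    p[0], q[0] = p0, q0
    for k in range(n):
        kick = -np.sin(q[k]) * h - sigma * np.cos(q[k]) * dB[k]
        p[k + 1] = p[k] + kick
        if method == "em":
            q[k + 1] = q[k] + h * p[k]  # Euler–Maruyama: old velocity
        else:
            q[k + 1] = q[k] + h * p[k + 1]  # symplectic Euler stand-in: new velocity
    return p, q


def energy(p, q):
    # deterministic Hamiltonian H(p, q) = p^2/2 - cos(q)
    return 0.5 * p**2 - np.cos(q)


for method in ("symplectic", "em"):
    p, q = simulate(method)  # sigma = 0.2, h = 0.02, T = 200 as in the text
    print(method, "final (q, p):", q[-1], p[-1])
```

Plotting q against p for both outputs gives a picture comparable in spirit to Fig. 5.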

6.3 Stochastic Kepler problem

To test whether the SSRKN methods preserve quadratic invariants of the original SDEs, we consider the stochastic Kepler problem defined by

which can be written in the following form:

According to Theorem 4.1, (6.5) possesses a quadratic invariant

By Theorem 4.2, this quadratic invariant is conserved by the SSRKN methods. We therefore use the two-stage explicit symplectic method (5.5) and the non-symplectic method (6.2) to test the preservation of the quadratic invariant. As the initial condition we choose

where we set \(e=0.6\), so that \(L_{0}=L(q_{10},q_{20},p_{10},p_{20})=0.8\). With the fixed step size \(h=0.05\), Figure 6 shows that the numerical solution produced by method (5.5) preserves the quadratic invariant L of (6.5) exactly, while the non-symplectic method (6.2) does not.
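A minimal sketch of this test follows, with several labeled assumptions. Since (6.5) is not reproduced here, it takes the Kepler force \(f(y)=-y/|y|^{3}\) with a hypothetical radial noise coefficient \(g(y)=\sigma y\), \(\sigma=0.1\), so that \(y^{\mathrm{T}}Dg(y)=0\) and Theorem 4.1 applies with \(D=\begin{pmatrix}0&1\\-1&0\end{pmatrix}\), i.e. \(L=q_{1}p_{2}-q_{2}p_{1}\). It assumes the standard deterministic Kepler initial condition \(q=(1-e,0)\), \(p=\bigl(0,\sqrt{(1+e)/(1-e)}\bigr)\), which for \(e=0.6\) reproduces \(L_{0}=0.8\). It compares a hypothetical stochastic symplectic Euler stand-in with Euler–Maruyama, not methods (5.5) and (6.2), over \(T=5\) with \(h=0.05\).

```python
import numpy as np


def angular_momentum(q, p):
    # Q(y, z) = y^T D z with D = [[0, 1], [-1, 0]], i.e. L = q1 p2 - q2 p1
    return q[0] * p[1] - q[1] * p[0]


def kepler_L_drift(method, e=0.6, sigma=0.1, h=0.05, T=5.0, seed=4):
    rng = np.random.default_rng(seed)
    q = np.array([1.0 - e, 0.0])                         # position y (assumed initial value)
    p = np.array([0.0, np.sqrt((1.0 + e) / (1.0 - e))])  # velocity z (assumed initial value)
    L0 = angular_momentum(q, p)
    drift = 0.0
    for _ in range(int(round(T / h))):
        J = rng.normal(0.0, np.sqrt(h))
        f = -q / np.linalg.norm(q) ** 3  # Kepler force f(y) = -y/|y|^3
        g = sigma * q                    # hypothetical radial noise coefficient g(y) = sigma*y
        if method == "em":
            q, p = q + h * p, p + h * f + J * g
        else:  # hypothetical stochastic symplectic Euler stand-in
            p = p + h * f + J * g
            q = q + h * p
        drift = max(drift, abs(angular_momentum(q, p) - L0))
    return L0, drift  # initial invariant and max |L_n - L_0| over the run


for method in ("symplectic", "em"):
    L0, drift = kepler_L_drift(method)
    print(f"{method}: L0 = {L0:.3f}, max |L_n - L0| = {drift:.2e}")
```

For the symplectic stand-in the drift is at the level of rounding error, as the discrete computation for Theorem 4.2 predicts; for Euler–Maruyama the first step alone changes L by about \(h^{2}Z_{0}^{\mathrm{T}}Df(Y_{0})\).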

Figure 6. Preservation of the quadratic invariant L of (6.5) along a sample trajectory

All of these numerical experiments demonstrate the superior long-time behavior of our SSRKN methods compared with the non-symplectic methods tested.

7 Conclusions

This paper extends deterministic RKN methods [23] to the stochastic setting. For general autonomous d-dimensional second-order SDEs in the Stratonovich sense, a class of SRKN methods is proposed and the conditions for strong global order 1.0 are obtained. The symplectic conditions of the SRKN methods for second-order stochastic Hamiltonian systems with multiplicative noise are given, and it is proved that SSRKN methods preserve the quadratic invariants of the underlying stochastic systems. Based on these results, families of one-stage SSRKN methods and two-stage explicit SSRKN methods with strong global order 1.0 are constructed. Numerical experiments verify the theoretical analysis and show that the methods perform well in long-time numerical simulation of SDEs.

References

[1] Mao, X.: Stochastic Differential Equations and Applications. Horwood, New York (1997)

[2] Liu, M., Zhu, Y.: Stability of a budworm growth model with random perturbations. Appl. Math. Lett. 79, 13–19 (2018)

[3] Liu, M., Yu, L.: Stability of a stochastic logistic model under regime switching. Adv. Differ. Equ. (2015). https://doi.org/10.1186/s13662-015-0666-5

[4] Ding, X., Ma, Q., Zhang, L.: Convergence and stability of the split-step θ-method for stochastic differential equations. Comput. Math. Appl. 60(5), 1310–1321 (2010)

[5] Huang, C.: Exponential mean square stability of numerical methods for systems of stochastic differential equations. J. Comput. Appl. Math. 236(16), 4016–4026 (2012)

[6] Zong, X., Wu, F., Huang, C.: Preserving exponential mean square stability and decay rates in two classes of theta approximations of stochastic differential equations. J. Differ. Equ. Appl. 20(7), 1091–1111 (2014)

[7] Mao, W., Hu, L., Mao, X.: Approximate solutions for a class of doubly perturbed stochastic differential equations. Adv. Differ. Equ. (2018). https://doi.org/10.1186/s13662-018-1490-5

[8] Hu, L., Li, X., Mao, X.: Convergence rate and stability of the truncated Euler–Maruyama method for stochastic differential equations. J. Comput. Appl. Math. 337, 274–289 (2018)

[9] Li, X., Ma, Q., Yang, H., Yuan, C.: The numerical invariant measure of stochastic differential equations with Markovian switching. SIAM J. Numer. Anal. 56(3), 1435–1455 (2018)

[10] Yin, Z., Gan, S.: An improved Milstein method for stiff stochastic differential equations. Adv. Differ. Equ. (2015). https://doi.org/10.1186/s13662-015-0699-9

[11] Yin, Z., Gan, S.: Chebyshev spectral collocation method for stochastic delay differential equations. Adv. Differ. Equ. (2015). https://doi.org/10.1186/s13662-015-0447-1

[12] Wang, X., Gan, S., Wang, D.: A family of fully implicit Milstein methods for stiff stochastic differential equations with multiplicative noise. BIT Numer. Math. 52(3), 741–772 (2012)

[13] Tan, J., Mu, Z., Guo, Y.: Convergence and stability of the compensated split-step θ-method for stochastic differential equations with jumps. Adv. Differ. Equ. (2014). https://doi.org/10.1186/1687-1847-2014-209

[14] Tan, J., Yang, H., Men, W., Guo, Y.: Construction of positivity preserving numerical method for jump-diffusion option pricing models. J. Comput. Appl. Math. 320, 96–100 (2017)

[15] Kunita, H.: Stochastic Flows and Stochastic Differential Equations. Cambridge University Press, Cambridge (1992)

[16] Milstein, G.N., Repin, Y.M., Tretyakov, M.V.: Symplectic integration of Hamiltonian systems with additive noise. SIAM J. Numer. Anal. 39(6), 2066–2088 (2002)

[17] Milstein, G.N., Tretyakov, M.V.: Stochastic Numerics for Mathematical Physics. Springer, Berlin (2004)

[18] Zhou, W., Zhang, J., Hong, J., Song, S.: Stochastic symplectic Runge–Kutta methods for the strong approximation of Hamiltonian systems with additive noise. J. Comput. Appl. Math. 325, 134–148 (2017)

[19] Ma, Q., Ding, D., Ding, X.: Symplectic conditions and stochastic generating functions of stochastic Runge–Kutta methods for stochastic Hamiltonian systems with multiplicative noise. Appl. Math. Comput. 219(2), 635–643 (2012)

[20] Ma, Q., Ding, X.: Stochastic symplectic partitioned Runge–Kutta methods for stochastic Hamiltonian systems with multiplicative noise. Appl. Math. Comput. 252, 520–534 (2015)

[21] Gardiner, C.W.: Handbook of Stochastic Methods for Physics, Chemistry, and the Natural Sciences. Springer, Berlin (2004)

[22] Milstein, G.N.: Numerical Integration of Stochastic Differential Equations. Kluwer Academic Publishers, Dordrecht (1995)

[23] Sanz-Serna, J.M., Calvo, M.P.: Numerical Hamiltonian Problems. Chapman & Hall, London (1994)

Acknowledgements

The authors would like to express their appreciation to the editors and the anonymous referees for their many valuable suggestions and for carefully correcting the preliminary version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (Nos. 11501150 and 11701124) and the Natural Science Foundation of Shandong Province of China (No. ZR2017PA006).

Author information

Contributions

All authors contributed equally to this work. They all read and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ma, Q., Song, Y., Xiao, W. et al. Structure-preserving stochastic Runge–Kutta–Nyström methods for nonlinear second-order stochastic differential equations with multiplicative noise. Adv Differ Equ 2019, 192 (2019). https://doi.org/10.1186/s13662-019-2133-1

DOI: https://doi.org/10.1186/s13662-019-2133-1