Abstract

In this paper, we propose the weighted cumulative past (residual) inaccuracy for record values. For this concept, we obtain some properties and characterization results such as relationships with other reliability functions, bounds, stochastic ordering and effect of linear transformation. Dynamic versions of this weighted measure are considered. We also study a problem of estimating the weighted cumulative past inaccuracy by means of the empirical cumulative inaccuracy for lower record values.

1 Introduction

Let X and Y be two non-negative random variables with distribution functions \(F(x)\), \(G(x)\) and survival functions \(\bar{F}(x)\), \(\bar{G}(x)\), respectively. If \(f (x)\) is the actual probability density function (pdf) corresponding to the observations and \(g(x)\) is the density assigned by the experimenter, then the inaccuracy measure of X and Y is defined by Kerridge [9] as

$$ I(F,G)=-\int _{0}^{\infty }f(x)\log g(x)\,dx. $$ (1.1)

Recently, Kundu [10] considered a weighted measure of inaccuracy as

$$ I^{w}(F,G)=-\int _{0}^{\infty }xf(x)\log g(x)\,dx. $$ (1.2)

Analogous to the Kerridge measure of inaccuracy (1.1), Thapliyal and Taneja [17] proposed a cumulative past inaccuracy (CPI) measure as

$$ I(F,G)=-\int _{0}^{\infty }F(x)\log G(x)\,dx. $$ (1.3)

When \(G(x)= F(x)\), Eq. (1.3) becomes the cumulative entropy studied by Di Crescenzo and Longobardi [4]. Kundu et al. [11] studied some properties of CPI for truncated random variables. In analogy with (1.2), we define the weighted cumulative past inaccuracy (WCPI) as

$$ I^{w}(F,G)=-\int _{0}^{\infty }xF(x)\log G(x)\,dx. $$ (1.4)

Similarly, Kundu et al. [11] introduced the concept of cumulative residual inaccuracy (CRI) which is defined as

$$ \bar{I}(\bar{F},\bar{G})=-\int _{0}^{\infty }\bar{F}(x)\log \bar{G}(x)\,dx. $$

In analogy with (1.4), we define the weighted cumulative residual inaccuracy (WCRI) as

$$ \bar{I}^{w}(\bar{F},\bar{G})=-\int _{0}^{\infty }x\bar{F}(x)\log \bar{G}(x)\,dx. $$

Let \(X_{1},X_{2},\ldots \) be a sequence of iid random variables having an absolutely continuous cdf \(F(x)\) and pdf \(f(x)\). An observation \(X_{j}\) is called a lower (upper) record value if it is smaller (greater) than all previous observations, that is, if \(X_{j}<(>)X_{i}\) for every \(i< j\). Let \(T_{1}=1\) and \(T_{n}=\min \{j: j>T_{n-1}, X_{j}< X _{T_{n-1}}\}\) for \(n\geq 2\); the sequence \(\{T_{n}\}\) is known as the lower record time sequence. The lower record value sequence is then defined by \(L_{n}=X_{T_{n}}\), \(n\geq 1\). The density function and cumulative distribution function (cdf) of \(L_{n}\), denoted by \(f_{L_{n}}\) and \(F_{L_{n}}\), respectively, are given by

$$ f_{L_{n}}(x)=\frac{[-\log F(x)]^{n-1}}{(n-1)!}f(x) $$

and

$$ F_{L_{n}}(x)=F(x)\sum _{j=0}^{n-1}\frac{[-\log F(x)]^{j}}{j!}. $$

Similarly, let \(Z_{1}=1\) and \(Z_{n}=\min \{j^{*}: j^{*}>Z_{n-1}, X_{j^{*}}>X_{Z_{n-1}}\}\) for \(n\geq 2\); the sequence \(\{Z_{n}\}\) is known as the upper record time sequence. Then, \(R_{n}=X_{Z_{n}}\), \(n\geq 1\), are said to be upper record values. The pdf of \(R_{n}\) is given by

$$ f_{R_{n}}(x)=\frac{[-\log \bar{F}(x)]^{n-1}}{(n-1)!}f(x). $$

Also, the survival function of \(R_{n}\) can be obtained as

$$ \bar{F}_{R_{n}}(x)=\bar{F}(x)\sum _{j=0}^{n-1}\frac{[-\log \bar{F}(x)]^{j}}{j!}. $$

Record values are applied in problems such as industrial stress testing, meteorological analysis, hydrology, sports and economics. In reliability theory, record values are used to study, for example, technical systems that are subject to shocks such as voltage peaks. For more details about records and their applications, one may refer to Arnold et al. [1]. Several authors have worked on measures of inaccuracy for ordered random variables. Thapliyal and Taneja [16] proposed a measure of inaccuracy between the ith order statistic and the parent random variable. Thapliyal and Taneja [17] developed measures of dynamic cumulative residual and past inaccuracy and studied characterization results for these dynamic measures under the proportional hazard model and the proportional reversed hazard model. Thapliyal and Taneja [18] introduced the measure of residual inaccuracy of order statistics and proved a characterization result for it. Tahmasebi and Daneshi [14] and Tahmasebi et al. [15] obtained some results for inaccuracy measures of record values. In this paper, we propose a weighted cumulative past (residual) inaccuracy of record values and study its characterization results.

The paper is organized as follows. In Sect. 2, we consider a weighted measure of inaccuracy associated with \(F_{L_{n}}\) and F and obtain some of its properties. In Sect. 3, we study a dynamic version of WCPI between \(F_{L_{n}}\) and F. In Sect. 4, we propose the empirical WCPI of lower record values. In Sect. 5, we study WCRI and its dynamic version between \(\bar{F}_{R_{n}}\) and F̄, and obtain some results about their properties. Throughout the paper, the terms increasing and decreasing are used in the non-strict sense.
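The definition of lower and upper record values given in this introduction can be illustrated with a short sketch. The function name `record_values` and the sample data are hypothetical and serve only as an illustration; record times are reported with 1-based indices, matching \(T_{1}=1\) and \(Z_{1}=1\).

```python
def record_values(xs, kind="lower"):
    """Return (record_time, record_value) pairs with 1-based record times.

    kind="lower": X_j is a record if it is smaller than all previous values.
    kind="upper": X_j is a record if it is greater than all previous values.
    """
    if kind not in ("lower", "upper"):
        raise ValueError("kind must be 'lower' or 'upper'")
    records = []
    for j, x in enumerate(xs, start=1):
        if not records:
            records.append((j, x))  # the first observation is always a record
            continue
        last = records[-1][1]  # current record value
        if (kind == "lower" and x < last) or (kind == "upper" and x > last):
            records.append((j, x))
    return records


# Hypothetical observations, used only to illustrate the definition.
sample = [5.0, 3.0, 4.0, 1.0, 2.0, 6.0]
lower = record_values(sample, "lower")
upper = record_values(sample, "upper")
print(lower)
print(upper)
```

Comparing only against the current record value is enough, because the current record is already the minimum (maximum) of all earlier observations.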

2 Weighted cumulative past inaccuracy for \(L_{n}\)

In this section, we propose a weighted measure of CPI between \(F_{L_{n}}\) and F. For this concept, we study some properties and characterization results under some assumptions.

Definition 2.1

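Let X be a non-negative absolutely continuous random variable with cdf F. Then, we define the WCPI between \(F_{L_{n}}\) (distribution function of the nth lower record value \(L_{n}\)) and F as

$$ I^{w}(F_{L_{n}},F)=-\int _{0}^{\infty }xF_{L_{n}}(x)\log F(x)\,dx =\sum _{j=0}^{n-1}\frac{1}{j!}\int _{0}^{\infty }xF(x) [-\log F(x)]^{j+1}\,dx =\sum _{j=0}^{n-1}(j+1)\mathbb{E}_{L_{j+2}} \biggl[\frac{X}{\tilde{\lambda }(X)} \biggr], $$ (2.1)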

where \(\tilde{\lambda }(x)=\frac{f(x)}{F(x)}\) is the reversed hazard rate function and \(L_{j+2}\) is a random variable with density function \(f_{L_{j+2}}(x)=\frac{[-\log F(x)]^{j+1}f(x)}{(j+1)!}\).

In the following, we present some examples and properties of \(I^{w}(F_{L_{n}},F)\).

Example 2.1

(i) If X has an inverse Weibull distribution with the cdf \(F(x)=\exp (-(\frac{\alpha }{x})^{\beta })\), \(x>0\), then we have

$$ I^{w}(F_{L_{n}},F)=\frac{\alpha ^{2}}{\beta }\sum _{j=0}^{n-1}\frac{ \varGamma (\frac{(j+1)\beta -2}{\beta } )}{j!}. $$

(ii) If X is uniformly distributed on \([0,\theta ]\), then we obtain

$$ I^{w}(F_{L_{n}},F)=\theta ^{2}\sum _{j=0}^{n-1}(j+1) \biggl(\frac{1}{3} \biggr) ^{j+2}. $$

(iii) If X has a power distribution with cdf \(F(x)=[\frac{x}{\alpha }]^{\beta }\), \(0< x<\alpha \), \(\beta >0\), then we obtain

$$ I^{w}(F_{L_{n}},F)=\alpha ^{2}\sum _{j=0}^{n-1}(j+1)\frac{\beta ^{j+1}}{(2+ \beta )^{j+2}}. $$
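The closed forms in parts (ii) and (iii) of Example 2.1 can be checked numerically. The sketch below assumes that the WCPI has the integral form \(-\int _{0}^{\infty }xF_{L_{n}}(x)\log F(x)\,dx\) with \(F_{L_{n}}(x)=F(x)\sum _{j=0}^{n-1}[-\log F(x)]^{j}/j!\); the function names, the midpoint rule and the parameter values are illustrative choices, not part of the paper.

```python
import math


def wcpi_lower_record(cdf, upper, n, steps=20000):
    """Midpoint-rule approximation of -int_0^upper x F_{L_n}(x) log F(x) dx.

    Assumes the support of X is contained in [0, upper].
    """
    h = upper / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        F = cdf(x)
        if F <= 0.0 or F >= 1.0:
            continue  # the integrand vanishes where F is 0 or 1
        u = -math.log(F)
        # cdf of the nth lower record: F(x) * sum_{j<n} u^j / j!
        F_Ln = F * sum(u ** j / math.factorial(j) for j in range(n))
        total += x * F_Ln * u * h
    return total


def uniform_closed_form(theta, n):
    # Example 2.1(ii)
    return theta ** 2 * sum((j + 1) * (1 / 3) ** (j + 2) for j in range(n))


def power_closed_form(alpha, beta, n):
    # Example 2.1(iii)
    return alpha ** 2 * sum(
        (j + 1) * beta ** (j + 1) / (2 + beta) ** (j + 2) for j in range(n)
    )


# Illustrative parameter values (assumptions, not taken from the paper).
theta, alpha, beta, n = 2.0, 1.5, 3.0, 3
num_uniform = wcpi_lower_record(lambda x: x / theta, theta, n)
num_power = wcpi_lower_record(lambda x: (x / alpha) ** beta, alpha, n)
print(num_uniform, uniform_closed_form(theta, n))
print(num_power, power_closed_form(alpha, beta, n))
```

The midpoint rule never evaluates the integrand at the endpoints of the support, where \(\log F(x)\) is unbounded or zero, so no special handling of the boundary is needed.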

Proposition 2.2

Let X be a non-negative random variable with cdf F, then we have

Proof

By (2.1) and using the relation \(-\log F(x)=\int _{x}^{\infty } \tilde{\lambda }(z)\,dz\), we have

So, the proof is completed. □

The weighted mean inactivity time (WMIT) function of a non-negative random variable X is given by

Now, the WMIT of \(L_{n}\) is given by

Note that \(\mu ^{w}_{n}(t)\) is analogous to the mean residual waiting time used in reliability and survival analysis (for more details, see Bdair and Raqab [2]).

Proposition 2.3

Let X be a non-negative random variable with cdf F. Then, we have

Proof

From (2.2) and (2.3), we obtain

yielding the claim. □

Proposition 2.4

Let X be an absolutely continuous non-negative random variable with \(I^{w}(F_{L_{n}},F)<\infty \), for all \(n\geq 1\). Then we have

where \(\tilde{h}^{w}_{j+1}(t)=\int _{t}^{\infty }x [-\log F(x) ] ^{j+1}\,dx\).

Proof

By using (2.1) and Fubini’s theorem, we obtain

□

Remark 2.1

Let X be a symmetric random variable with respect to the finite mean \(\mu =E(X)\), i.e., \(F(x+\mu )=1-F(\mu -x)\) for all \(x\in \mathbb{R}\). Then

where \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})\) is the weighted cumulative residual measure of inaccuracy between \(\bar{F}_{R_{n}}\) (survival function of the nth upper record value \(R_{n}\)) and F̄.

Now we can prove an important property of the inaccuracy measure using some properties of stochastic ordering. For that we present the following definitions:

1. A random variable X is said to be smaller than Y in the usual stochastic order (denoted by \(X\leq ^{st}Y\)) if \(P(X\geq x)\leq P(Y \geq x)\) for all x. It is known that \(X \leq ^{st}Y \Leftrightarrow \mathbb{E}(\phi (X))\leq \mathbb{E}(\phi (Y))\) for all increasing functions ϕ for which the expectations exist (equivalency (1.A.7) in Shaked and Shanthikumar [13]).

2. A random variable X is said to be smaller than Y in the likelihood ratio order (denoted by \(X\leq ^{lr}Y\)) if \(\frac{g(x)}{f(x)}\) is increasing in x, where f and g denote the pdfs of X and Y, respectively.

3. A random variable X is said to be smaller than a random variable Y in the increasing convex order, denoted by \(X \leq ^{icx}Y\), if \(\mathbb{E}(\phi (X))\leq \mathbb{E}(\phi (Y))\) for all increasing convex functions ϕ such that the expectations exist.

4. A non-negative random variable X is said to have a decreasing reversed hazard rate on average (DRHRA) if \(\frac{\tilde{\lambda }(x)}{x}\) is decreasing in x.

5. A non-negative random variable X is said to have a decreasing hazard rate on average (DHRA) if \(\frac{\lambda (x)}{x}\) is decreasing in x.

Theorem 2.5

Suppose that a non-negative random variable X is DRHRA, then

Proof

Let \(f_{L_{n}}(x)\) be the pdf of the nth lower record value \(L_{n}\). Then, the ratio \(\frac{f_{L_{n}}(x)}{f _{L_{n+1}}(x)}=\frac{-n}{\log F(x)}\) is increasing in x. Therefore, \(L_{n+1}\leq ^{lr}L_{n}\), and this implies that \(L_{n+1}\leq ^{st}L_{n}\), i.e., \(\bar{F}_{L_{n+1}}(x)\leq \bar{F}_{L_{n}}(x)\) (for more details, see Shaked and Shanthikumar ([13], Chap. 1)). This is equivalent (see Shaked and Shanthikumar ([13], p. 4)) to having

for all increasing functions ϕ such that these expectations exist. Thus, if X is DRHRA and \(\tilde{\lambda }(x)\) is its reversed hazard rate, then \(\frac{x}{\tilde{\lambda }(x)}\) is increasing in x. From (2.1), we have that

Thus the proof is completed. □

Proposition 2.6

Let X be a non-negative random variable with absolutely continuous cumulative distribution function \(F(x)\). Then for \(n=1,2,\dots \), we have

Proof

Since \(-\log F(x)\geq 1-F(x)\), the proof follows by recalling (2.1). □

Proposition 2.7

Let X be a non-negative random variable with absolutely continuous cumulative distribution function \(F(x)\). Then for \(n=1,2,\dots \), we have

Assume that \(\tilde{X}_{\theta }\) denotes a non-negative absolutely continuous random variable with the distribution function \(H_{\theta }(x)=[F(x)]^{\theta }\), \(x\geq 0\). We now obtain the cumulative measure of inaccuracy between \(H_{L_{n}}\) and H as follows:

Proposition 2.8

If \(\theta \geq 1\), then for any \(n=1,2,\dots \), we have

Proof

Suppose that \(\theta \geq 1\), then it is clear that \([F(x)]^{\theta }\leq F(x)\), and hence we have

□

Proposition 2.9

Let X be a non-negative random variable with cdf F, then an analytical expression for \(I^{w}(F_{L_{n}},F)\) is given by

where

is a weighted generalized cumulative entropy (WGCE) which was introduced by Kayal and Moharana [7].

Proposition 2.10

Let \(a,b>0\). Then for \(n=1,2,\ldots \) , it holds that

Proof

From (2.8), we have

The proof is completed. □

Recently, Cali et al. [3] introduced a generalized CPI of order m defined as

In analogy with the measure defined in Eq. (2.11), we now introduce a weighted generalized CPI (WGCPI) of order m defined as

Remark 2.2

Let X be a non-negative absolutely continuous random variable with cdf F. Then, the WGCPI of order m between \(F_{L_{n}}\) and F is

3 Dynamic weighted cumulative past inaccuracy

In this section, we study a dynamic version of \(I^{w}( F_{L_{n}},F )\). If a system that begins to work at time 0 is observed only at deterministic inspection times, and is found to be ‘down’ at time t, then we consider a dynamic cumulative measure of inaccuracy as

Note that \(\lim_{t\rightarrow \infty }I^{w}(F_{L_{n}},F;t)=I^{w}(F _{L_{n}},F)\). Since \(\log F(t)\leq 0\) for \(t\geq 0\), we have

In the following theorem, we prove that \(I^{w}(F_{L_{n}},F;t)\) uniquely determines the distribution function.

Theorem 3.1

Let X be a non-negative continuous random variable with distribution function \(F(\cdot )\). Let the weighted dynamic cumulative inaccuracy of the nth lower record value be finite, that is, \(I^{w}(F_{L_{n}},F;t)< \infty \), \(t\geq 0\). Then \(I^{w}(F_{L_{n}},F;t)\) characterizes the distribution function.

Proof

From (3.1) we have

Differentiating both sides of (3.2) with respect to t, we obtain

Taking derivative with respect to t again, we get

Suppose that there are two functions F and \(F^{*}\) such that

Then for all t, from (3.3) we get

where

and \(s(t)=\mu _{n}^{w}(t)\). By using Theorem 2.1 and Lemma 2.2 of Gupta and Kirmani [5], we have \(\tilde{\lambda }_{F}(t)=\tilde{\lambda } _{F^{*}}(t)\), for all t. Since the reversed hazard rate function characterizes the distribution function uniquely, we complete the proof. □

4 Empirical weighted cumulative past inaccuracy

In this section we address the problem of estimating the weighted cumulative measure of inaccuracy by means of the empirical weighted cumulative inaccuracy of lower record values. Let \(X_{1},X_{2}, \dots ,X_{m}\) be a random sample of size m from an absolutely continuous cumulative distribution function \(F(x)\). Then according to (2.8), the empirical cumulative measure of inaccuracy is

where

is the empirical distribution of the sample and I is the indicator function. If we denote \(X_{(1)} \leq X_{(2)}\leq \cdots \leq X_{(m)}\) as the order statistics of the sample, then (4.1) can be written as

Moreover,

Hence, (4.2) can be written as

where \(U_{k}=\frac{X^{2}_{(k+1)}-X^{2}_{(k)}}{2}\), \(k=1,2,\dots ,m-1\) are the sample spacings.
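The spacing representation above can be turned into a small computation. The sketch below assumes that the empirical measure is the plug-in form of the WCPI, so that \(\hat{F}_{m}(x)=k/m\) on \([X_{(k)},X_{(k+1)})\) and the estimator reduces to \(\sum _{j=0}^{n-1}\frac{1}{j!}\sum _{k=1}^{m-1}U_{k}\frac{k}{m} [-\log \frac{k}{m} ]^{j+1}\); this form is an assumption reconstructed from the definition of \(U_{k}\), and the function name `empirical_wcpi` is hypothetical.

```python
import math


def empirical_wcpi(sample, n):
    """Plug-in estimate of the WCPI of the nth lower record value.

    Assumed form: sum_{j<n} (1/j!) * sum_{k=1}^{m-1} U_k (k/m) (-log(k/m))^(j+1),
    where U_k = (X_(k+1)^2 - X_(k)^2) / 2.
    """
    xs = sorted(sample)  # order statistics X_(1) <= ... <= X_(m)
    m = len(xs)
    total = 0.0
    for k in range(1, m):  # k = 1, ..., m-1
        u_k = (xs[k] ** 2 - xs[k - 1] ** 2) / 2.0  # xs[k-1] is X_(k)
        p = k / m  # value of the empirical cdf on [X_(k), X_(k+1))
        log_term = -math.log(p)
        weight = sum(log_term ** (j + 1) / math.factorial(j) for j in range(n))
        total += u_k * p * weight
    return total


# Tiny hand-checkable sample (hypothetical data).
small = empirical_wcpi([3.0, 1.0, 2.0], 1)

# Deterministic "sample" placed at the uniform(0, 1) quantiles i/m.
m = 2000
grid = [i / m for i in range(1, m + 1)]
grid_estimate = empirical_wcpi(grid, 1)
print(small, grid_estimate)
```

For the quantile grid, the estimate should be close to the value \(\theta ^{2}/9\) with \(\theta =1\) given in Example 2.1(ii) for \(n=1\), which offers a quick sanity check of the formula.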

Example 4.1

Consider a random sample \(X_{1},X_{2}, \dots ,X_{m}\) from the Weibull distribution with density function

Then \(Y_{k}=X_{k}^{2}\) has an exponential distribution with mean \(\frac{1}{\lambda }\). In this case, the sample spacings \(2U_{k}=X^{2} _{(k+1)}-X^{2}_{(k)}\) are independent and exponentially distributed with mean \(\frac{1}{\lambda (m-k)}\) (for more details, see Pyke [12]). Now from (4.3) we obtain

and

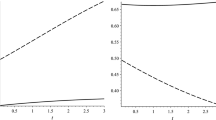

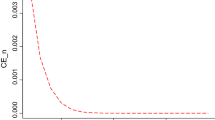

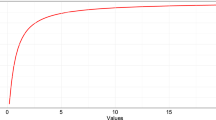

We have computed the values of \(\mathbb{E}[\hat{I}^{w}(F_{L_{n}},F)]\) and \(\operatorname{Var}[\hat{I}^{w}(F_{L_{n}},F)]\) for sample sizes \(m=10, 15,20\), \(\lambda =0.5,1,2\) and \(n=2,3,4,5\) in Table 1. We can easily see that \(\mathbb{E}[\hat{I}^{w}(F_{L_{n}},F)]\) and \(\operatorname{Var}[\hat{I}^{w}(F_{L_{n}},F)]\) are decreasing in m. Moreover, \(\lim_{m\rightarrow \infty }\operatorname{Var}[\hat{I}^{w}(F_{L_{n}},F)]=0\).
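The moments discussed in Example 4.1 can be sketched in code. The sketch relies on the statement that the spacings \(2U_{k}\) are independent and exponentially distributed with mean \(\frac{1}{\lambda (m-k)}\), and it assumes that the estimator is the linear combination \(\sum _{k=1}^{m-1}c_{k}U_{k}\) with \(c_{k}=\frac{k}{m}\sum _{j=0}^{n-1}\frac{1}{j!} [-\log \frac{k}{m} ]^{j+1}\). The function names are hypothetical, and the output is not claimed to reproduce Table 1.

```python
import math


def coefficient(k, m, n):
    """c_k = (k/m) * sum_{j<n} (-log(k/m))^(j+1) / j!  (assumed form)."""
    p = k / m
    u = -math.log(p)
    return p * sum(u ** (j + 1) / math.factorial(j) for j in range(n))


def mean_and_variance(m, n, lam):
    """Mean and variance of sum_k c_k U_k for independent exponential spacings."""
    mean = 0.0
    var = 0.0
    for k in range(1, m):
        # U_k is exponential with mean 1 / (2 * lam * (m - k));
        # the standard deviation of an exponential variable equals its mean.
        scale = 1.0 / (2.0 * lam * (m - k))
        c = coefficient(k, m, n)
        mean += c * scale
        var += (c * scale) ** 2  # independence: variances add
    return mean, var


# Same grid of sample sizes, rates and record indices as in the text.
for m in (10, 15, 20):
    for lam in (0.5, 1.0, 2.0):
        for n in (2, 3, 4, 5):
            mean, var = mean_and_variance(m, n, lam)
            print(m, lam, n, round(mean, 4), round(var, 6))
```

Under these assumptions the mean scales as \(1/\lambda \) and the variance as \(1/\lambda ^{2}\), so only the dependence on m and n needs to be tabulated for a single value of λ.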

Example 4.2

Let \(X_{1},X_{2}, \dots ,X_{m}\) be a random sample from a population with pdf \(f(x)=2x\), \(0< x<1\). Then the sample spacings \(2U_{k}\) are independent and beta distributed with parameters 1 and m (for more details, see Pyke [12]). Now from (4.3) we obtain

and

We have computed the values of \(\mathbb{E}[\hat{I}^{w}(F_{L_{n}},F)]\) and \(\operatorname{Var}[\hat{I}^{w}(F_{L_{n}},F)]\) for sample sizes \(m=10, 15,20\) and \(n=2,3,4,5\) in Table 2. We can easily see that \(\operatorname{Var}[\hat{I}^{w}(F_{L_{n}},F)]\) is decreasing in m and \(\lim_{m\rightarrow \infty }\operatorname{Var}[\hat{I}^{w}(F _{L_{n}},F)]=0\).

Theorem 4.1

Let X be an absolutely continuous non-negative random variable such that \(I^{w}(F_{L_{n}},F)<\infty \), for all \(n\geq 1\). Then we have

Proof

From (2.8) we have

where

Now we can obtain

where

Using dominated convergence (DCT) and Glivenko–Cantelli theorems, we have

It follows that

Moreover, by using SLLN, \(\frac{1}{m}\sum_{i=1}^{m} X_{i}^{p} \longrightarrow \mathbb{E}(X^{p})\) and \(\sup_{m}(\frac{1}{m}\sum_{i=1}^{m} X_{i}^{p})< \infty \), so \(\hat{F}_{m} (x)\leq x^{-p} (\sup_{m}(\frac{1}{m} \sum_{i=1}^{m} X_{i}^{p}) )=Cx^{-p}\). Now applying DCT, we have

5 Weighted cumulative residual inaccuracy for \(R_{n}\)

In this section, we propose WCRI between \(\bar{F}_{R_{n}}\) and F̄. We discuss some properties of WCRI such as the effect of a linear transformation, relationships with other reliability functions, bounds and stochastic ordering.

Definition 5.1

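Let X be a non-negative absolutely continuous random variable with survival function F̄. Then, we define the WCRI between \(\bar{F}_{R_{n}}\) and F̄ as follows:

$$ \bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})=-\int _{0}^{\infty }x\bar{F}_{R_{n}}(x)\log \bar{F}(x)\,dx =\sum _{j=0}^{n-1}\frac{1}{j!}\int _{0}^{\infty }x\bar{F}(x) [-\log \bar{F}(x)]^{j+1}\,dx =\sum _{j=0}^{n-1}(j+1)\mathbb{E}_{R_{j+2}} \biggl[\frac{X}{\lambda (X)} \biggr], $$ (5.1)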

where \(\lambda (x)=\frac{f(x)}{\bar{F}(x)}\) is the hazard rate function and \(R_{j+2}\) is a random variable with reliability \(\bar{F}_{R_{j+2}}\).

In the following example, we calculate \(\bar{I}^{w}(\bar{F}_{R_{n}}, \bar{F})\) for some specific lifetime distributions which are widely used in reliability theory and life testing.

Example 5.1

(a) If X is uniformly distributed on \([0,\theta ]\), then it is easy to see that \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})=\theta ^{2}\sum_{j=0} ^{n-1}\frac{3^{j+2}-2^{j+2}}{6^{j+2}}(j+1)\), for all integers \(n\geq 1\).

(b) If X has a Weibull distribution with survival function \(\bar{F}(x)=e^{-\alpha x^{\beta }}\), \(x\geq 0\), \(\alpha ,\beta >0\), then for all integers \(n\geq 1\), we obtain \(\bar{I}^{w}(\bar{F}_{R _{n}},\bar{F})=\frac{\alpha ^{-\frac{2}{\beta }}}{\beta }\sum_{j=0}^{n-1}\frac{ \varGamma (j+1+\frac{2}{\beta } )}{j!}\).

(c) If X has an exponential distribution with mean \(\frac{1}{ \lambda }\), then \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})=\frac{n(n+1)(n+2)}{3 \lambda ^{2}}\).
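The closed forms in Example 5.1 can be checked numerically. The sketch below assumes that the WCRI has the integral form \(-\int _{0}^{\infty }x\bar{F}_{R_{n}}(x)\log \bar{F}(x)\,dx\) with \(\bar{F}_{R_{n}}(x)=\bar{F}(x)\sum _{j=0}^{n-1}[-\log \bar{F}(x)]^{j}/j!\). The function name, the truncation point of the integral and the parameter values are illustrative assumptions.

```python
import math


def wcri_upper_record(survival, upper, n, steps=100000):
    """Midpoint-rule approximation of -int_0^upper x S_{R_n}(x) log S(x) dx.

    `upper` truncates the integral; it should be the right end of the support
    or a point beyond which the integrand is negligible.
    """
    h = upper / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        s = survival(x)
        if s <= 0.0 or s >= 1.0:
            continue  # the integrand vanishes where the survival function is 0 or 1
        u = -math.log(s)
        # survival function of the nth upper record: S(x) * sum_{j<n} u^j / j!
        s_Rn = s * sum(u ** j / math.factorial(j) for j in range(n))
        total += x * s_Rn * u * h
    return total


# Exponential case, Example 5.1(c); lam and n are illustrative values.
lam, n = 2.0, 3
numeric_exp = wcri_upper_record(lambda x: math.exp(-lam * x), 30.0, n)
closed_exp = n * (n + 1) * (n + 2) / (3 * lam ** 2)

# Uniform case on [0, 1], Example 5.1(a), with n = 2.
numeric_uni = wcri_upper_record(lambda x: 1.0 - x, 1.0, 2)
closed_uni = sum(
    (j + 1) * (3 ** (j + 2) - 2 ** (j + 2)) / 6 ** (j + 2) for j in range(2)
)
print(numeric_exp, closed_exp)
print(numeric_uni, closed_uni)
```

For the exponential case the sum reduces to \(\sum _{j=0}^{n-1}(j+1)(j+2)/\lambda ^{2}\), which is where the factor \(n(n+1)(n+2)/3\) comes from.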

Proposition 5.2

Let X be an absolutely continuous non-negative random variable with survival function F̄. Then, we have

where \(\mu _{n}=\int _{0}^{+\infty }\bar{F}_{R_{n}}(x)\,dx\).

Proof

□

Proposition 5.3

Let \(a,b > 0\). For \(n =1,2,\dots \), it holds that

Proof

From (5.1) and noting that \(\bar{F}_{aX+b}(x)= \bar{F}(\frac{x-b}{a})\), we have

□

Kayid et al. [8] proposed the combination mean residual life (CMRL) function of X as the reciprocal hazard rate of the length-biased equilibrium distribution given by

Now, the CMRL of \(R_{n}\) is given by

Proposition 5.4

Let X be an absolutely continuous non-negative random variable with survival function F̄. Then, we have

Proof

By (5.1) and the fact that \(-\log \bar{F}(x)=\int _{0}^{x}\lambda (z)\,dz\), we have

□

Proposition 5.5

Let X be a non-negative random variable with survival function F̄. Then, we have

Proof

From (5.3) and using Proposition 5.4, we obtain

This completes the proof. □

Proposition 5.6

Let X be an absolutely continuous non-negative random variable such that \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})<\infty \), for \(n\geq 1\). Then, we have

where

Proof

From (5.1) and using Fubini’s theorem, we obtain

□

Proposition 5.7

Let X be a non-negative and absolutely continuous random variable with cdf F. Then

where \(\mathcal{E}^{w_{j+1}}(X)=-\int _{0}^{\infty }x^{ (\frac{1}{j+1} )} \bar{F}(x)\log {\bar{F}(x)}\,dx\).

Proof

From (5.1), we have

This completes the proof. □

The next propositions give some lower and upper bounds for \(\bar{I} ^{w}(\bar{F}_{R_{n}},\bar{F})\).

Proposition 5.8

Let X be a non-negative random variable with absolutely continuous cumulative distribution function \(F(x)\). Then for \(n=1,2,\dots \), we have

Proof

By using (5.1), the proof is easy. □

Proposition 5.9

Let X be a non-negative random variable with survival function \(\bar{F}(x)\). Then for \(n=1,2,\dots \), we have

Proof

Since \(-\log \bar{F}(x)\geq 1-\bar{F}(x)\), the proof follows by recalling (5.1). □

In the following, we obtain some results on \(\bar{I}^{w}(\bar{F}_{R_{n}}, \bar{F})\) and its connection with notions of reliability theory.

Proposition 5.10

If X is DFRA, then for \(n=1,2,\dots \), we have

Proof

Suppose that \(f_{R_{n}}\) is the pdf of the nth upper record value \(R_{n}\). Then, the ratio \(\frac{f_{R_{n+1}}(t)}{f _{R_{n}}(t)}=\frac{-\log \bar{F}(t)}{n}\) is increasing in t. Therefore, \(R_{n}\leq ^{lr}R_{n+1}\), and this implies that \(R_{n}\leq ^{st}R_{n+1}\), i.e., \(\bar{F}_{R_{n}}\leq \bar{F}_{R_{n+1}}\) (for more details, see Shaked and Shanthikumar ([13], Chap. 1)). Hence, if X is DFRA and \(\lambda (x)\) is its hazard rate, then \(\frac{x}{\lambda (x)}\) is an increasing function of x. So, from (5.1) we have

The proof is completed. □

Proposition 5.11

If X has the exponential distribution with mean \(\mu =\frac{1}{ \theta }\), then as the hazard rate is constant, we obtain that \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})=\frac{n(n+1)(n+2)}{3}\mu ^{2}\), which is an increasing function of n.

Proposition 5.12

Let X and Y be two non-negative random variables with reliability functions \(\bar{F}(x)\) and \(\bar{G}(x)\), respectively. If \(X\leq ^{hr}Y\) and X is DFRA, then

for \(n=1,2,\dots \).

Proof

It is well known that \(X\leq ^{hr} Y\) implies \(X\leq ^{st} Y\) (see Shaked and Shanthikumar [13]). Hence, we have

where \(\bar{G}_{\tilde{R}_{j+2}}\) is the survival function of \(\tilde{R}_{j+2}\). That is, \(R_{j+2}\leq ^{st}\tilde{R}_{j+2}\) holds. This is equivalent (see Shaked and Shanthikumar [13], p. 4) to having

for all increasing functions ϕ such that these expectations exist. Thus, if we assume that X is DFRA and \(\lambda (x)\) is its failure rate, then \(\frac{x}{\lambda (x)}\) is increasing and we have

On the other hand, \(X\leq ^{hr} Y\) implies that the respective failure rate functions satisfy \(\lambda _{F}(x)\geq \lambda _{G}(x)\) for all x. Hence, we have

Therefore, using both expressions, we obtain \(\bar{I}^{w}(\bar{F}_{R _{n}},\bar{F})\leq \bar{I}^{w}(\bar{G}_{\tilde{R}_{n}},\bar{G})\). □

Proposition 5.13

Let X and Y be two non-negative random variables with reliability functions \(\bar{F}(x)\) and \(\bar{G}(x)\), respectively. If \(X\leq ^{icx}Y\), then

Proof

Since \(h_{j+1}^{w}(\cdot )\) is an increasing convex function for \(j\geq 0\), it follows by Shaked and Shanthikumar [13] that \(X\leq ^{icx}Y\) implies \(h_{j+1}^{w}(X)\leq ^{icx}h_{j+1} ^{w}(Y)\). By recalling the definition of increasing convex order and Proposition 5.6, the proof is complete. □

Proposition 5.14

If X is IFRA (DFRA), then for \(n=1,2,\dots \), we have

Proof

From (5.1), we have

Now, since X is IFRA (DFRA), \(\frac{-\log \bar{F}(x)}{x}\) is increasing (decreasing) with respect to \(x>0\), which implies that

Hence, the proof is completed by noting (5.9) and (5.10). □

Proposition 5.15

Let X and Y be two non-negative random variables with survival functions \(\bar{F}(x)\) and \(\bar{G}(x)\), respectively. If \(X\leq ^{hr}Y\), then for \(n=1,2,\dots \), it holds that

Proof

By noting that the function \(h_{j+1}^{w}(x)= \int _{0}^{x}z[-\log \bar{F}(z)]^{j+1}\,dz\) is an increasing convex function, under the assumption \(X\leq ^{hr}Y\), it follows, by Shaked and Shanthikumar [13], that

Hence, the proof is completed by recalling (5.1). □

Proposition 5.16

(i) Let X be a continuous random variable with survival function \(\bar{F}(\cdot )\) that takes values in \([0, b]\), with finite b. Then,

$$ \bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})\leq b\bar{I}( \bar{F}_{R_{n}}, \bar{F}). $$

(ii) Let X be a non-negative continuous random variable with survival function \(\bar{F}(\cdot )\) that takes values in \([a, \infty )\), with finite \(a>0\). Then,

$$ \bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})\geq a\bar{I}( \bar{F}_{R_{n}}, \bar{F}). $$
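The two bounds of Proposition 5.16 can be illustrated numerically. The sketch assumes that the weighted and unweighted measures are \(-\int w(x)\bar{F}_{R_{n}}(x)\log \bar{F}(x)\,dx\) with \(w(x)=x\) and \(w(x)=1\), respectively. The uniform and shifted exponential distributions, the truncation point and the function name `inaccuracy` are illustrative choices.

```python
import math


def inaccuracy(survival, lower, upper, n, weighted, steps=50000):
    """Midpoint rule for -int_lower^upper w(x) S_{R_n}(x) log S(x) dx.

    w(x) = x if `weighted` is True, otherwise w(x) = 1.
    """
    h = (upper - lower) / steps
    total = 0.0
    for i in range(steps):
        x = lower + (i + 0.5) * h
        s = survival(x)
        if s <= 0.0 or s >= 1.0:
            continue  # no contribution where the survival function is 0 or 1
        u = -math.log(s)
        s_Rn = s * sum(u ** j / math.factorial(j) for j in range(n))
        total += (x if weighted else 1.0) * s_Rn * u * h
    return total


b, a, n = 2.0, 1.0, 3


def uniform_survival(x):
    # uniform distribution on [0, b]
    return 1.0 - x / b


def shifted_exp_survival(x):
    # unit-rate exponential shifted to start at a (support [a, infinity))
    return math.exp(-(x - a)) if x >= a else 1.0


# Part (i): support [0, b].
w_uni = inaccuracy(uniform_survival, 0.0, b, n, True)
u_uni = inaccuracy(uniform_survival, 0.0, b, n, False)

# Part (ii): support [a, infinity), integral truncated at a + 50.
w_exp = inaccuracy(shifted_exp_survival, a, a + 50.0, n, True)
u_exp = inaccuracy(shifted_exp_survival, a, a + 50.0, n, False)

print(w_uni, b * u_uni)
print(w_exp, a * u_exp)
```

Both bounds hold because the weight x lies between a and b on the support, so the weighted integrand is bounded pointwise by a multiple of the unweighted one.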

Assume that \(X^{*}_{\theta }\) denotes a non-negative absolutely continuous random variable with the survival function \(\bar{H}_{ \theta }(x)=[\bar{F}(x)]^{\theta }\), \(x\geq 0\). This model is known as a proportional hazards rate model. We now obtain the weighted cumulative residual measure of inaccuracy between \(\bar{H}_{R_{n}}\) and H̄ as follows:

Proposition 5.17

If \(\theta \geq (\leq )1\), then for any \(n\geq 1\), we have

where \({\mathcal{E}}^{w}_{j+1}(X)\) is the weighted generalized cumulative residual entropy of X, defined by Kayal [6] as

Proof

Suppose that \(\theta \geq (\leq )1\), then it is clear that \([\bar{F}(x)]^{\theta }\leq (\geq )\bar{F}(x)\), and hence (5.11) yields

□

Proposition 5.18

Let X be a non-negative random variable with survival function \(\bar{F}(\cdot )\), then an analytical expression for \(\bar{I}^{w}(\bar{F} _{R_{n}},\bar{F})\) is given by

Theorem 5.19

\(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})=0\) if and only if X is degenerate.

Proof

Suppose X is degenerate at a point a. Then, by the definition of a degenerate distribution and the definition of \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})\), we have \(\bar{I}^{w}(\bar{F} _{R_{n}},\bar{F})=0\).

Now, suppose that \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})=0\), i.e.,

Then, by noting that the integrand of (5.13) is non-negative, we conclude that

for almost all \(x\in \mathbb{R}^{+}\). Thus, \(\bar{F}(x)=0 \text{ or } 1\), for almost all \(x\in \mathbb{R}^{+}\), which means that X is degenerate. □

Remark 5.1

Let X be a non-negative absolutely continuous random variable with survival function \(\bar{F}(\cdot )\). Then in analogy with the measure defined in (2.13), the WGCRI of order m between \(\bar{F} _{R_{n}}\) and F̄ is given by

In the remainder of this section, we study a dynamic version of \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})\). Let X be the lifetime of a system under the condition that the system has survived up to age t. Analogously, we can also consider a dynamic version of \(\bar{I} ^{w}(\bar{F}_{R_{n}},\bar{F})\) as

Note that \(\lim_{t\rightarrow 0}\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F};t)= \bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})\). Since \(\log \bar{F}(t)\leq 0\) for \(t\geq 0\), we have

Theorem 5.20

Let X be a non-negative continuous random variable with distribution function \(F(\cdot )\). Let the weighted dynamic cumulative inaccuracy of the nth record value satisfy \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F};t)< \infty \), \(t\geq 0\). Then \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F};t)\) characterizes the distribution function.

Proof

From (5.14) we have

Differentiating both sides of (5.15) with respect to t, we obtain

Taking derivative with respect to t again, we get

Suppose that there are two functions F and \(F^{*}\) such that

Then for all t, from (5.16) we get

where

and \(\tilde{s}(t)=m_{n}^{c}(t)\). By using Theorem 3.2 and Lemma 3.3 of Gupta and Kirmani [5], we have \(\lambda _{F}(t)=\lambda _{F^{*}}(t)\), for all t. Since the hazard rate function characterizes the distribution function uniquely, we complete the proof. □

6 Conclusions

In this paper, we discussed the concept of a weighted past inaccuracy measure between \(F_{L_{n}}\) and F. We proposed a dynamic version of WCPI and studied its characterization results. We have also proved that \(I^{w}(F_{L_{n}},F;t)\) uniquely determines the parent distribution F. Moreover, we studied some new basic properties of \(I^{w}(F_{L _{n}},F)\) such as the effect of a linear transformation, relationships with other reliability functions, bounds and stochastic order properties. We estimated the WCPI by means of the empirical cumulative inaccuracy of lower record values. Finally, we proposed the WCRI measure between the survival function \(\bar{F}_{R_{n}}\) and F̄. We also studied some properties of \(\bar{I}^{w}(\bar{F}_{R_{n}},\bar{F})\) such as the connections with other reliability functions, several useful bounds and stochastic orderings. These concepts can be applied in measuring the weighted inaccuracy contained in the associated past (residual) lifetime.

References

1. Arnold, B.C., Balakrishnan, N., Nagaraja, H.N.: A First Course in Order Statistics. Wiley, New York (1992)

2. Bdair, O.M., Raqab, M.Z.: Sharp upper bounds for the mean residual waiting time of records. Stat 46(1), 69–84 (2012)

3. Cali, C., Longobardi, M., Navarro, J.: Properties for generalized cumulative past measures of information. Probab. Eng. Inf. Sci. (2018). https://doi.org/10.1017/S0269964818000360

4. Di Crescenzo, A., Longobardi, M.: On cumulative entropies. J. Stat. Plan. Inference 139, 4072–4087 (2009)

5. Gupta, R.C., Kirmani, S.N.U.A.: Characterizations based on conditional mean function. J. Stat. Plan. Inference 138, 964–970 (2008)

6. Kayal, S.: On weighted generalized cumulative residual entropy of order n. Methodol. Comput. Appl. Probab. 20(2), 487–503 (2018)

7. Kayal, S., Moharana, R.: A shift-dependent generalized cumulative entropy of order n. Commun. Stat., Simul. Comput. (2018). https://doi.org/10.1080/03610918.2018.1423692

8. Kayid, M., Izadkhah, S., Alhalees, H.: Combination of mean residual life order with reliability applications. Stat. Methodol. 29, 51–69 (2016)

9. Kerridge, D.F.: Inaccuracy and inference. J. R. Stat. Soc., Ser. B, Methodol. 23, 184–194 (1961)

10. Kundu, C.: On weighted measure of inaccuracy for doubly truncated random variables. Commun. Stat., Theory Methods 46(7), 3135–3147 (2017)

11. Kundu, C., Di Crescenzo, A., Longobardi, M.: On cumulative residual (past) inaccuracy for truncated random variables. Metrika 79, 335–356 (2016)

12. Pyke, R.: Spacings. J. R. Stat. Soc. B 27, 395–449 (1965)

13. Shaked, M., Shanthikumar, J.G.: Stochastic Orders. Springer, New York (2007)

14. Tahmasebi, S., Daneshi, S.: Measures of inaccuracy in record values. Commun. Stat., Theory Methods 47(24), 6002–6018 (2018)

15. Tahmasebi, S., Nezakati, A., Daneshi, S.: Results on cumulative measure of inaccuracy in record values. J. Stat. Theory Appl. 17(1), 15–28 (2018)

16. Thapliyal, R., Taneja, H.C.: A measure of inaccuracy in order statistics. J. Stat. Theory Appl. 12(2), 200–207 (2013)

17. Thapliyal, R., Taneja, H.C.: Dynamic cumulative residual and past inaccuracy measures. J. Stat. Theory Appl. 14(4), 399–412 (2015)

18. Thapliyal, R., Taneja, H.C.: On residual inaccuracy of order statistics. Stat. Probab. Lett. 97, 125–131 (2015)

Acknowledgements

The authors would like to thank the editors and reviewers for their valuable contributions, which greatly improved the readability of this paper.

Availability of data and materials

Not applicable.

Funding

The authors state that they have received no funding for this paper.

Author information

Contributions

The authors have made equal contributions. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Daneshi, S., Nezakati, A. & Tahmasebi, S. On weighted cumulative past (residual) inaccuracy for record values. J Inequal Appl 2019, 134 (2019). https://doi.org/10.1186/s13660-019-2082-y

DOI: https://doi.org/10.1186/s13660-019-2082-y