Abstract

Tensor expansion models are known to approximate the objective function more accurately than models based on the usual second-order Taylor expansion. This paper studies the tensor model for nonlinear equations and includes the following: (i) a three-dimensional symmetric tensor trust-region subproblem model of the nonlinear equations is presented; (ii) the three-dimensional symmetric tensor is replaced by interpolating function and gradient values from the most recent past iterate, which avoids storing the tensor and reduces the computational workload; (iii) the limited-memory BFGS quasi-Newton update is used instead of the Jacobian matrix, which keeps the computation inexpensive for complex systems; (iv) global convergence is proved under suitable conditions. Numerical experiments show that the proposed algorithm is competitive with the standard algorithm.

1 Introduction

This paper focuses on the nonlinear system of equations

$$ S(x)=0,\quad x\in \Re^{n}, $$(1.1)

where \(S:\Re^{n} \rightarrow \Re^{n}\) is continuously differentiable. The nonlinear system (1.1) arises in a wide range of applications, such as parameter estimation, function approximation, and nonlinear fitting. Many effective algorithms exist for it, such as the traditional Gauss–Newton method [1, 9–11, 14, 16], the BFGS method [8, 23, 27, 29, 39, 43], the Levenberg–Marquardt method [6, 24, 42], the trust-region method [4, 26, 35, 41], the conjugate gradient algorithm [12, 25, 30, 38, 40], and the limited-memory BFGS method [13, 28]. Throughout, we suppose that (1.1) has a solution \(x^{*}\). Setting \(\beta (x):=\frac{1}{2}\Vert S(x) \Vert ^{2}\) as a norm function, the problem (1.1) is equivalent to the following global optimization problem:

$$ \min \beta (x),\quad x\in \Re^{n}. $$(1.2)
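To make the norm function concrete, the following minimal sketch evaluates \(\beta (x)=\frac{1}{2}\Vert S(x) \Vert ^{2}\) for a small system. The system `S` below is a hypothetical two-dimensional example chosen for illustration; it is not one of the test problems of this paper.

```python
def S(x):
    """Hypothetical 2-D nonlinear system with a solution at x* = (1, 2)."""
    return [x[0] ** 2 - 1.0, x[0] * x[1] - 2.0]

def beta(x):
    """Norm function beta(x) = 0.5 * ||S(x)||^2."""
    return 0.5 * sum(si * si for si in S(x))

x_star = [1.0, 2.0]    # solves S(x) = 0
x_other = [0.5, 1.0]   # does not solve S(x) = 0

print(beta(x_star))    # zero exactly at a solution
print(beta(x_other))   # strictly positive elsewhere
```

Since \(\beta \geq 0\) everywhere and \(\beta (x)=0\) exactly when \(S(x)=0\), every solution of the system is a global minimizer of \(\beta \).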

Trust-region (TR) methods obtain the trial step \(d_{k}\) by solving the so-called trust-region subproblem model,

where \(x_{k}\) is the kth iterate, \(\triangle \) is the so-called TR radius, and \(\Vert \cdot \Vert \) is the usual Euclidean norm of a vector or matrix. Many researchers have sought to improve this model. An adaptive TR model was designed by Zhang and Wang [42]:

where \(p>0\) is an integer, and \(0< c<1\) and \(0.5<\gamma <1\) are constants. Its superlinear convergence is obtained under the local error bound assumption, which has been proved to be weaker than nondegeneracy [24]; this was a theoretical advance. However, its global convergence still requires nondegeneracy. Another adaptive TR subproblem is defined by Yuan et al. [35]:

where \(B_{k}\) is generated by the BFGS quasi-Newton formula

where \(y_{k}=S(x_{k+1})-S(x_{k})\), \(s_{k}=x_{k+1}-x_{k}\), \(x_{k+1}\) is the next iterate, and \(B_{0}\) is an initial symmetric positive definite matrix. This TR method is globally convergent without nondegeneracy, which is a further theoretical advance, and it also converges quadratically. The BFGS quasi-Newton update has been shown to be very effective for optimization problems (see, e.g., [32, 33, 36]). TR methods have also been applied to nonsmooth optimization and other problems (see, e.g., [19–21, 31]).
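As an illustration of the quasi-Newton update, the sketch below implements the standard textbook BFGS formula \(B_{k+1}=B_{k}-\frac{B_{k}s_{k}s_{k}^{T}B_{k}}{s_{k}^{T}B_{k}s_{k}}+\frac{y_{k}y_{k}^{T}}{y_{k}^{T}s_{k}}\), which we assume is the formula meant here. The helper names (`dot`, `matvec`, `bfgs_update`) and the sample vectors are illustrative, and skipping the update when \(y_{k}^{T}s_{k}\leq 0\) is a common safeguard rather than something stated at this point of the text.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def bfgs_update(B, s, y):
    """Standard BFGS update of a symmetric matrix B from the pair (s, y).

    Returns a copy of B unchanged when y^T s <= 0 (curvature safeguard).
    """
    ys = dot(y, s)
    if ys <= 0.0:
        return [row[:] for row in B]
    Bs = matvec(B, s)          # B s (equals (s^T B)^T because B is symmetric)
    sBs = dot(s, Bs)
    n = len(s)
    return [[B[i][j] - Bs[i] * Bs[j] / sBs + y[i] * y[j] / ys
             for j in range(n)] for i in range(n)]

B0 = [[1.0, 0.0], [0.0, 1.0]]   # symmetric positive definite start
s = [1.0, 0.5]                  # s_k = x_{k+1} - x_k
y = [2.0, 1.5]                  # y_k = S(x_{k+1}) - S(x_k)
B1 = bfgs_update(B0, s, y)
print(matvec(B1, s))            # agrees with y up to rounding (secant equation)
```

The updated matrix satisfies the secant equation \(B_{k+1}s_{k}=y_{k}\) and stays symmetric, which is what the check at the end of the sketch illustrates.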

The above models use only a second-order Taylor expansion. Can the approximation be taken one level further, to the third-order expansion, or even the fourth? The answer is affirmative: in this paper a third-order Taylor expansion is used and a three-dimensional symmetric tensor model is stated. In the next section, the motivation and the tensor TR model are stated. The algorithm and its global convergence are presented in Sect. 3. In Sect. 4, numerical experiments are reported. Conclusions are given in the last section.

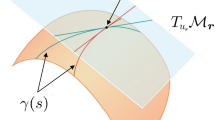

2 Motivation and the tensor trust-region model

Consider the tensor model for the nonlinear system \(S(x)\) at \(x_{k}\),

where \(\nabla S(x_{k})\) is the Jacobian matrix of \(S(x)\) at \(x_{k}\) and \(T_{k}\) is a three-dimensional symmetric tensor. Because it retains a higher-order term, the tensor model (2.1) approximates \(S\) more closely than the usual quadratic trust-region model. It has been shown that the tensor is significantly simpler when only information from one past iterate is used (see [3] for details), which clearly reduces the cost of computing the three-dimensional symmetric tensor \(T_{k}\). The model (2.1) can then be written in the following extended form:

To avoid computing the exact Jacobian matrix \(\nabla S(x_{k})\), we replace it by the quasi-Newton update matrix \(B_{k}\). Thus, our trust-region subproblem model is defined by

where \(B_{k}=H_{k}^{-1}\) and \(H_{k}\) is generated by the following limited-memory BFGS (L-BFGS) update formula:

where \(\rho_{k}=\frac{1}{s_{k}^{T}y_{k}}\), \(V_{k}=I-\rho_{k}y_{k}s_{k}^{T}\), \(I\) is the identity matrix, and \(m\) is a positive integer. The L-BFGS method is known to have a fast linear convergence rate and minimal storage requirements, and it is effective for large-scale problems (see, e.g., [2, 13, 28, 34, 37]). Let \(d_{k}^{p}\) be the solution of (2.3) corresponding to the constant p. Define the actual reduction by

and the predicted reduction by

Based on the definitions of the actual reduction \(Ad_{k}(d_{k}^{p})\) and the predicted reduction \(Pd_{k}(d_{k}^{p})\), their ratio is defined by

$$ r_{k}^{p}=\frac{Ad_{k}(d_{k}^{p})}{Pd_{k}(d_{k}^{p})}. $$(2.7)

Therefore, the tensor trust-region model algorithm for solving (1.1) is stated as follows.

Algorithm 1

- Initial: Constants \(\rho , c\in (0,1)\), \(p=0\), \(\epsilon >0\), \(x_{0}\in \Re^{n}\), \(m>0\), and a symmetric positive definite matrix \(B_{0}=H_{0}^{-1}\in \Re^{n\times n}\). Let \(k:=0\);

- Step 1: Stop if \(\Vert S(x_{k}) \Vert <\epsilon \) holds;

- Step 2: Solve (2.3) with \(\triangle =\triangle_{k}\) to obtain \(d_{k}^{p}\);

- Step 3: Compute \(Ad_{k}(d_{k}^{p})\), \(Pd_{k}(d_{k}^{p})\), and the ratio \(r_{k}^{p}\). If \(r_{k}^{p}<\rho \), let \(p=p+1\) and go to Step 2. If \(r_{k}^{p}\geq \rho \), go to the next step;

- Step 4: Set \(x_{k+1}=x_{k}+d_{k}^{p}\) and \(y_{k}=S(x_{k+1})-S(x_{k})\), and update \(B_{k+1}=H_{k+1}^{-1}\) by (2.4) if \(y_{k}^{T}d_{k}^{p}>0\); otherwise set \(B_{k+1}=B_{k}\);

- Step 5: Let \(k:=k+1\) and \(p=0\). Go to Step 1.
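Step 4 relies on the L-BFGS formula (2.4), whose point is that \(H_{k}\) never has to be stored explicitly. A minimal sketch of how such a matrix is commonly applied to a vector is the standard two-loop recursion below, assuming \(H_{0}=I\) and that only the last m pairs \((s_{i},y_{i})\) with \(s_{i}^{T}y_{i}>0\) are kept. The paper does not state that it uses this recursion; the function name `lbfgs_apply` and the sample pairs are illustrative.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def lbfgs_apply(pairs, g, m):
    """Return H*g, where H is the L-BFGS matrix built from the last m
    pairs (s, y) with H_0 = I (standard two-loop recursion).

    pairs is ordered from oldest to newest; each pair needs s^T y > 0.
    """
    recent = pairs[-m:]
    q = list(g)
    alphas = []
    for s, y in reversed(recent):            # first loop: newest to oldest
        rho = 1.0 / dot(s, y)
        a = rho * dot(s, q)
        alphas.append(a)
        q = [qi - a * yi for qi, yi in zip(q, y)]
    r = q                                     # multiply by H_0 = I
    for (s, y), a in zip(recent, reversed(alphas)):   # second loop: oldest to newest
        rho = 1.0 / dot(s, y)
        b = rho * dot(y, r)
        r = [ri + (a - b) * si for ri, si in zip(r, s)]
    return r

pairs = [([1.0, 0.0], [2.0, 0.5]), ([0.5, 1.0], [1.0, 3.0])]
s_last, y_last = pairs[-1]
print(lbfgs_apply(pairs, y_last, m=6))   # agrees with s_last up to rounding
```

Only the stored vectors are touched, so the cost per application is proportional to \(mn\) rather than \(n^{2}\). The final check uses the secant property \(H_{k+1}y_{k}=s_{k}\) of the most recent pair.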

Remark

The procedure “Step 2–Step 3–Step 2” is called the inner cycle of the above algorithm. We must prove that the inner cycle is finite, so that Algorithm 1 is well defined.
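The inner cycle can be sketched as follows. This is a deliberately simplified illustration and not the authors' implementation: it drops the tensor term, uses the exact Jacobian in place of the L-BFGS matrix \(B_{k}\), takes a Cauchy-type steepest-descent step of the model \(\frac{1}{2}\Vert S(x_{k})+B_{k}d \Vert ^{2}\) instead of a dogleg solution of (2.3), and assumes a radius of the form \(c^{p}\Vert S(x_{k}) \Vert \) and a nonsingular Jacobian. The names `tr_sketch`, `S5`, `J5`, and `max_p` are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    return math.sqrt(dot(v, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def tr_sketch(S, J, x, rho=0.05, c=0.5, eps=1e-4, max_iter=1000, max_p=60):
    """Simplified trust-region loop; returns (x, outer iterations used)."""
    for k in range(max_iter):
        Sx = S(x)
        if norm(Sx) < eps:                       # Step 1: stopping test
            return x, k
        B = J(x)                                 # assumption: exact Jacobian as B_k
        n = len(x)
        # gradient of the model 0.5*||Sx + B d||^2 at d = 0 is B^T Sx
        g = [sum(B[i][j] * Sx[i] for i in range(n)) for j in range(n)]
        Bg = matvec(B, g)
        p = 0
        while True:                              # inner cycle: Step 2 - Step 3 - Step 2
            radius = (c ** p) * norm(Sx)         # assumed adaptive radius
            t = min(dot(g, g) / dot(Bg, Bg), radius / norm(g))
            d = [-t * gi for gi in g]            # Cauchy-type step inside the region
            x_trial = [xi + di for xi, di in zip(x, d)]
            St = S(x_trial)
            model = [si - t * bi for si, bi in zip(Sx, Bg)]   # Sx + B d
            actual = 0.5 * dot(Sx, Sx) - 0.5 * dot(St, St)
            predicted = 0.5 * dot(Sx, Sx) - 0.5 * dot(model, model)
            # Step 3: ratio test (max_p is only a safeguard for this sketch)
            if actual / predicted >= rho or p >= max_p:
                break
            p += 1                               # shrink the radius and retry
        x = x_trial                              # Step 4 (matrix update omitted here)
    return x, max_iter

def S5(x):
    """S_i(x) = exp(x_i) - 1, the gradient of sum(exp(x_i) - x_i)."""
    return [math.exp(xi) - 1.0 for xi in x]

def J5(x):
    n = len(x)
    return [[math.exp(x[i]) if i == j else 0.0 for j in range(n)] for i in range(n)]

x, iters = tr_sketch(S5, J5, [1.0 / 3.0, 2.0 / 3.0, 1.0])
print(iters, norm(S5(x)))
```

Each pass through the `while` loop increases p, which shrinks the radius by the factor c, until the ratio of actual to predicted reduction reaches \(\rho \); this is the loop whose finiteness is at stake in the remark.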

3 Convergence results

This section focuses on convergence results of Algorithm 1 under the following assumptions.

Assumption i

- (A): The level set Ω defined by

$$ \varOmega =\bigl\{ x\mid\beta (x)\leq \beta (x_{0})\bigr\} $$(3.1)

is bounded.

- (B): On an open convex set \(\varOmega_{1}\) containing Ω, the nonlinear system \(S(x)\) is twice continuously differentiable.

- (C): The approximation relation

$$ \bigl\Vert \bigl[\nabla S(x_{k})-B_{k} \bigr]S(x_{k}) \bigr\Vert =O\bigl(\bigl\Vert d_{k}^{p} \bigr\Vert \bigr) $$(3.2)

is true, where \(d_{k}^{p}\) is the solution of the model (2.3).

- (D): On \(\varOmega_{1}\), the matrices \(\{B_{k}\}\) are uniformly bounded, namely there exist constants \(0< M_{s}\leq M_{l}\) satisfying

$$ M_{s}\leq \Vert B_{k} \Vert \le M_{l} \quad \forall k. $$(3.3)

Assumption i (B) implies that there exists a constant \(M_{L}>0\) satisfying

Based on the above assumptions and the definition of the model (2.3), we have the following lemma.

Lemma 3.1

Let \(d_{k}^{p}\) be the solution of (2.3), then the inequality

holds.

Proof

Since \(d_{k}^{p}\) is the solution of (2.3), for any \(\alpha \in [0,1]\) we get

Therefore, we have

The proof is complete. □

Lemma 3.2

Let \(d_{k}^{p}\) be the solution of (2.3). Suppose that Assumption i holds and \(\{x_{k}\}\) is generated by Algorithm 1. Then we have

Proof

Using Assumption i, the definition of (2.5) and (2.6), we obtain

This completes the proof. □

Lemma 3.3

Let the conditions of Lemma 3.2 hold. Then Algorithm 1 does not cycle infinitely in the inner cycle (“Step 2–Step 3–Step 2”).

Proof

This lemma will be proved by contradiction. Suppose that, at \(x_{k}\), Algorithm 1 cycles infinitely in the inner cycle, namely, \(r_{k}^{p}<\rho \) for all p and \(c^{p}\rightarrow 0\) as \(p\rightarrow \infty \). Note that \(\Vert g_{k} \Vert \geq \epsilon \), since otherwise the algorithm would have stopped. Thus we conclude that \(\Vert d_{k}^{p} \Vert \leq \triangle_{k}=c^{p}\Vert g_{k} \Vert \rightarrow 0\) is true.

By Lemma 3.1 and Lemma 3.2, we get

Therefore, for p sufficiently large, we have

which contradicts the fact \(r_{k}^{p}<\rho \). The proof is complete. □

Lemma 3.4

Suppose that the conditions of Lemma 3.3 hold. Then \(\{x_{k}\}\subset \varOmega \) is true and \(\{\beta (x_{k})\}\) converges.

Proof

By the results of the above lemma, we get

Combining this with Lemma 3.1 gives

Then \(\{x_{k}\}\subset \varOmega \) holds. Since the sequence \(\{\beta (x_{k})\}\) is nonincreasing and bounded below by 0, we deduce that it converges. This completes the proof. □

Theorem 3.5

Suppose that the conditions of Lemma 3.3 hold and \(\{x_{k}\}\) is generated by Algorithm 1. Then Algorithm 1 either finitely stops or generates an infinite sequence \(\{x_{k}\}\) satisfying

Proof

Suppose that Algorithm 1 does not finitely stop. We need to obtain (3.8). Assume that

holds. Using (3.3) one gets (3.8), so it suffices to prove (3.9). We argue by contradiction. Namely, we suppose that there exist a subsequence \(\{k_{j}\}\) and a positive constant ε such that

Let \(K=\{k\mid \Vert B_{k}S(x_{k}) \Vert \geq \varepsilon \}\) be an index set. Using Assumption i and \(\Vert B_{k}S(x_{k}) \Vert \geq \varepsilon\) (\(k\in K\)), we see that \(\Vert S(x_{k}) \Vert \) (\(k\in K\)) is bounded away from 0, so we may assume that

holds. By Lemma 3.1 and the definition of Algorithm 1, we obtain

where \(p_{k}\) is the largest value of p obtained in the inner cycle. Lemma 3.4 tells us that the sequence \(\{\beta (x_{k})\}\) is convergent, thus

Then \(p_{k} \rightarrow +\infty \) as \(k\rightarrow +\infty \) with \(k\in K\). Therefore, we may assume \(p_{k}\geq 1\) for all \(k\in K\). In the inner cycle, by the determination of \(p_{k}\) (\(k\in K\)), let the step \(d_{k}'\) corresponding to the subproblem

be unacceptable. Setting \(x_{k+1}'=x_{k}+d_{k}'\) one has

Using Lemma 3.1 and the definition \(\triangle_{k}\) one has

Using Lemma 3.2 one gets

Thus, we obtain

Using \(p_{k}\rightarrow +\infty \) when \(k\rightarrow +\infty \) and \(k\in K\), we get

which contradicts (3.12). This completes the proof. □

4 Numerical results

This section reports some numerical results of Algorithm 1 and the algorithm of [35] (Algorithm YL).

4.1 Problems

The test problems are the following nonlinear systems:

Problem 1

Trigonometric function

Initial guess: \(x_{0}=(\frac{101}{100n},\frac{101}{100n},\ldots , \frac{101}{100n})^{T}\).

Problem 2

Logarithmic function

Initial point: \(x_{0}=(1,1,\ldots ,1)^{T}\).

Problem 3

Broyden tridiagonal function ([7], pp. 471–472)

Initial point: \(x_{0}=(-1,-1,\ldots ,-1)^{T}\).

Problem 4

Trigexp function ([7], p. 473)

Initial guess: \(x_{0}=(0,0,\ldots ,0)^{T}\).

Problem 5

Strictly convex function 1 ([18], p. 29). \(S(x)\) is the gradient of \(h(x)=\sum_{i=1}^{n}(e^{x_{i}}-x_{i})\). We have

$$ S_{i}(x)=e^{x_{i}}-1,\quad i=1,2,\ldots ,n. $$

Initial point: \(x_{0}=(\frac{1}{n},\frac{2}{n},\ldots ,1)^{T}\).

Problem 6

Strictly convex function 2 ([18], p. 30). \(S(x)\) is the gradient of \(h(x)=\sum_{i=1}^{n}\frac{i}{10} (e^{x_{i}}-x_{i})\). We have

$$ S_{i}(x)=\frac{i}{10}\bigl(e^{x_{i}}-1\bigr),\quad i=1,2,\ldots ,n. $$

Initial point: \(x_{0}=(1,1,\ldots ,1)^{T}\).
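For Problems 5 and 6 the system follows directly from differentiating the stated \(h(x)\) componentwise. A minimal sketch of the two systems and their initial points is given below; the function names `problem5` and `problem6` and the dimension `n = 4` are illustrative choices.

```python
import math

def problem5(x):
    """Gradient of h(x) = sum(exp(x_i) - x_i): S_i(x) = exp(x_i) - 1."""
    return [math.exp(xi) - 1.0 for xi in x]

def problem6(x):
    """Gradient of h(x) = sum((i/10) * (exp(x_i) - x_i)), with i = 1, ..., n."""
    return [(i / 10.0) * (math.exp(xi) - 1.0) for i, xi in enumerate(x, start=1)]

n = 4
x0_p5 = [i / n for i in range(1, n + 1)]   # (1/n, 2/n, ..., 1)
x0_p6 = [1.0] * n                          # (1, 1, ..., 1)

print(problem5(x0_p5))
print(problem6(x0_p6))
```

Both systems vanish only at \(x=0\), which is the unique minimizer of the corresponding strictly convex \(h\).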

Problem 7

Penalty function

Initial guess: \(x_{0}=(\frac{1}{3},\frac{1}{3},\ldots ,\frac{1}{3})^{T}\).

Problem 8

Variable dimensioned function

Initial guess: \(x_{0}=(1-\frac{1}{n},1-\frac{2}{n},\ldots ,0)^{T}\).

Problem 9

Discrete boundary value problem [15]

Initial point: \(x_{0}=(h(h-1),h(2h-1),\ldots ,h(nh-1))\).

Problem 10

The discretized two-point boundary value problem similar to the problem in [17]

where A is the \(n\times n\) tridiagonal matrix given by

and \(F(x)=(F_{1}(x),F_{2}(x),\ldots,F_{n}(x))^{T}\) with \(F_{i}(x)=\sin x _{i} -1\), \(i=1,2,\ldots,n\), and \(x=(50,0,50,0, \ldots )\).

Parameters: \(\rho =0.05\), \(\epsilon =10^{-4}\), \(c=0.5\), \(p=3\), \(m=6\), and \(H_{0}\) is the identity matrix.

Subproblem solver: (1.3) and (2.3) are solved by the dogleg method [22].

Test environment: a PC with an Intel Pentium(R) Xeon(R) E5507 CPU @2.27 GHz, 6.00 GB of RAM, and the Windows 7 operating system.

Software: MATLAB R2017a.

Stopping rule: the program stops if \(\Vert S(x) \Vert \leq 10^{-4}\) holds.

Iteration limit: the program also stops if the number of iterations exceeds 1000.

4.2 Results and discussion

The columns of the tables have the following meanings.

Dim: the dimension of the problem.

NI: the number of iterations.

NG: the number of norm function evaluations.

Time: the CPU time in seconds.

The numerical results in Table 1 show the performance of the two algorithms in terms of NI, NG, and Time. We observe the following:

- (i) both algorithms successfully solve all ten nonlinear problems;

- (ii) the NI and the NG of the two algorithms do not increase as the dimension becomes large;

- (iii) the NI and the NG of Algorithm 1 are competitive with those of Algorithm YL, while the Time of Algorithm YL is better than that of Algorithm 1.

To show their efficiency directly, the performance profile tool of [5] is used, and three figures for NI, NG, and Time are given.

Figures 1–3 show the performance of the two algorithms in terms of NI, NG, and Time. Algorithm 1 wins on NI and NG, since its performance profile curves lie at the top right of the plots, while Algorithm YL is superior on Time. Both algorithms are robust. Overall, the three figures suggest that both algorithms merit attention, and we hope they will be studied further in the future.
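The performance profiles of [5] can be computed along the following lines. For each problem, the cost of a solver is divided by the best cost achieved by any solver on that problem, and the profile value at \(\tau \) is the fraction of problems whose ratio is at most \(\tau \). The sketch below follows this definition; the function name `performance_profile` and the cost data are invented purely for illustration and are not results from Table 1.

```python
def performance_profile(costs, tau):
    """Fraction of problems each solver handles within a factor tau of the best.

    costs maps a solver name to a list of per-problem costs (e.g. NI, NG,
    or Time); None marks a failure on that problem.
    """
    solvers = list(costs)
    n_prob = len(costs[solvers[0]])
    profile = {}
    for s in solvers:
        count = 0
        for p in range(n_prob):
            finite = [costs[t][p] for t in solvers if costs[t][p] is not None]
            if finite and costs[s][p] is not None and costs[s][p] <= tau * min(finite):
                count += 1
        profile[s] = count / n_prob
    return profile

# Hypothetical costs for two solvers on four problems (not the paper's data).
costs = {"A": [10, 20, 30, None], "B": [12, 10, 30, 40]}
print(performance_profile(costs, 1.0))
print(performance_profile(costs, 2.0))
```

The value at \(\tau =1\) is the fraction of problems on which a solver is the best (ties included), and the limit for large \(\tau \) is the fraction of problems it solves at all, which is why the profile is read as a measure of both efficiency and robustness.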

5 Conclusions

This paper considers the tensor trust-region model for nonlinear systems. Global convergence is obtained under suitable conditions and numerical experiments are reported. The main contributions are the following:

- (1) a tensor trust-region model is established and discussed;

- (2) a low-workload update is used in this tensor trust-region model.

We expect this tensor trust-region model to become more significant in future work.

References

1. Bertsekas, D.P.: Nonlinear Programming. Athena Scientific, Belmont (1995)

2. Byrd, R.H., Nocedal, J., Schnabel, R.B.: Representations of quasi-Newton matrices and their use in limited memory methods. Math. Program. 63, 129–156 (1994)

3. Chow, T., Eskow, E., Schnabel, R.: Algorithm 783: a software package for unconstrained optimization using tensor methods. ACM Trans. Math. Softw. 20, 518–530 (1994)

4. Conn, A.R., Gould, N.I.M., Toint, P.L.: Trust-Region Methods. SIAM, Philadelphia (2000)

5. Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

6. Fan, J.Y.: A modified Levenberg–Marquardt algorithm for singular system of nonlinear equations. J. Comput. Math. 21, 625–636 (2003)

7. Gomez-Ruggiero, M., Martinez, J.M., Moretti, A.: Comparing algorithms for solving sparse nonlinear systems of equations. SIAM J. Sci. Stat. Comput. 23, 459–483 (1992)

8. Griewank, A.: The 'global' convergence of Broyden-like methods with a suitable line search. J. Aust. Math. Soc. Ser. B 28, 75–92 (1986)

9. Levenberg, K.: A method for the solution of certain nonlinear problems in least squares. Q. Appl. Math. 2, 164–166 (1944)

10. Li, D., Fukushima, M.: A global and superlinear convergent Gauss–Newton-based BFGS method for symmetric nonlinear equations. SIAM J. Numer. Anal. 37, 152–172 (1999)

11. Li, D., Qi, L., Zhou, S.: Descent directions of quasi-Newton methods for symmetric nonlinear equations. SIAM J. Numer. Anal. 40(5), 1763–1774 (2002)

12. Li, X., Wang, S., Jin, Z., Pham, H.: A conjugate gradient algorithm under Yuan–Wei–Lu line search technique for large-scale minimization optimization models. Math. Probl. Eng. 2018, Article ID 4729318 (2018)

13. Li, Y., Yuan, G., Wei, Z.: A limited-memory BFGS algorithm based on a trust-region quadratic model for large-scale nonlinear equations. PLoS ONE 10(5), Article ID e0120993 (2015)

14. Marquardt, D.W.: An algorithm for least-squares estimation of nonlinear parameters. SIAM J. Appl. Math. 11, 431–441 (1963)

15. Moré, J.J., Garbow, B.S., Hillström, K.E.: Testing unconstrained optimization software. ACM Trans. Math. Softw. 7, 17–41 (1981)

16. Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (1999)

17. Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

18. Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained minimization problem. SIAM J. Optim. 7, 26–33 (1997)

19. Sheng, Z., Yuan, G.: An effective adaptive trust region algorithm for nonsmooth minimization. Comput. Optim. Appl. 71, 251–271 (2018)

20. Sheng, Z., Yuan, G., et al.: An adaptive trust region algorithm for large-residual nonsmooth least squares problems. J. Ind. Manag. Optim. 14, 707–718 (2018)

21. Sheng, Z., Yuan, G., et al.: A new adaptive trust region algorithm for optimization problems. Acta Math. Sci. 38B(2), 479–496 (2018)

22. Wang, Y.J., Xiu, N.H.: Theory and Algorithm for Nonlinear Programming. Shanxi Science and Technology Press, Xian (2004)

23. Wei, Z., Yuan, G., Lian, Z.: An approximate Gauss–Newton-based BFGS method for solving symmetric nonlinear equations. Guangxi Sciences 11(2), 91–99 (2004)

24. Yamashita, N., Fukushima, M.: On the rate of convergence of the Levenberg–Marquardt method. Computing 15, 239–249 (2001)

25. Yuan, G., Duan, X., Liu, W., Wang, X., Cui, Z., Sheng, Z.: Two new PRP conjugate gradient algorithms for minimization optimization models. PLoS ONE 10(10), Article ID e0140071 (2015). https://doi.org/10.1371/journal.pone.0140071

26. Yuan, G., Lu, S., Wei, Z.: A new trust-region method with line search for solving symmetric nonlinear equations. Int. J. Comput. Math. 88, 2109–2123 (2011)

27. Yuan, G., Lu, X.: A new backtracking inexact BFGS method for symmetric nonlinear equations. Comput. Math. Appl. 55, 116–129 (2008)

28. Yuan, G., Lu, X.: An active set limited memory BFGS algorithm for bound constrained optimization. Appl. Math. Model. 35, 3561–3573 (2011)

29. Yuan, G., Lu, X., Wei, Z.: BFGS trust-region method for symmetric nonlinear equations. J. Comput. Appl. Math. 230(1), 44–58 (2009)

30. Yuan, G., Meng, Z., Li, Y.: A modified Hestenes and Stiefel conjugate gradient algorithm for large-scale nonsmooth minimizations and nonlinear equations. J. Optim. Theory Appl. 168, 129–152 (2016)

31. Yuan, G., Sheng, Z.: Nonsmooth Optimization Algorithms. Press of Science, Beijing (2017)

32. Yuan, G., Sheng, Z., Wang, P., Hu, W., Li, C.: The global convergence of a modified BFGS method for nonconvex functions. J. Comput. Appl. Math. 327, 274–294 (2018)

33. Yuan, G., Wei, Z.: Convergence analysis of a modified BFGS method on convex minimizations. Comput. Optim. Appl. 47, 237–255 (2010)

34. Yuan, G., Wei, Z., Lu, S.: Limited memory BFGS method with backtracking for symmetric nonlinear equations. Math. Comput. Model. 54, 367–377 (2011)

35. Yuan, G., Wei, Z., Lu, X.: A BFGS trust-region method for nonlinear equations. Computing 92, 317–333 (2011)

36. Yuan, G., Wei, Z., Lu, X.: Global convergence of the BFGS method and the PRP method for general functions under a modified weak Wolfe–Powell line search. Appl. Math. Model. 47, 811–825 (2017)

37. Yuan, G., Wei, Z., Wu, Y.: Modified limited memory BFGS method with nonmonotone line search for unconstrained optimization. J. Korean Math. Soc. 47, 767–788 (2010)

38. Yuan, G., Wei, Z., Yang, Y.: The global convergence of the Polak–Ribiere–Polyak conjugate gradient algorithm under inexact line search for nonconvex functions. J. Comput. Appl. Math. (2018). https://doi.org/10.1016/j.cam.2018.10.057

39. Yuan, G., Yao, S.: A BFGS algorithm for solving symmetric nonlinear equations. Optimization 62, 45–64 (2013)

40. Yuan, G., Zhang, M.: A three-terms Polak–Ribière–Polyak conjugate gradient algorithm for large-scale nonlinear equations. J. Comput. Appl. Math. 286, 186–195 (2015)

41. Yuan, Y.: Trust region algorithm for nonlinear equations. Information 1, 7–21 (1998)

42. Zhang, J., Wang, Y.: A new trust region method for nonlinear equations. Math. Methods Oper. Res. 58, 283–298 (2003)

43. Zhu, D.: Nonmonotone backtracking inexact quasi-Newton algorithms for solving smooth nonlinear equations. Appl. Math. Comput. 161, 875–895 (2005)

Acknowledgements

The authors are grateful for the funding support listed below. The authors also thank the referees and the editor for their valuable suggestions, which greatly improved the paper.

Authors’ information

Songhua Wang and Shulun Liu are co-first authors.

Funding

This work was supported by the National Natural Science Fund of China (Grant No. 11661009).

Author information

Contributions

Dr. Songhua Wang mainly analyzed the theory results and Shulun Liu has done the numerical experiments. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, S., Liu, S. A tensor trust-region model for nonlinear system. J Inequal Appl 2018, 343 (2018). https://doi.org/10.1186/s13660-018-1935-0

DOI: https://doi.org/10.1186/s13660-018-1935-0