Abstract

The main aim of this paper is to improve a recent result on the sharpness of the Jensen inequality. The results are obtained using several Green functions and by considering a real Stieltjes measure that is not necessarily positive. Finally, some applications involving various types of f-divergences and the Zipf–Mandelbrot law are presented.

1 Introduction

The Jensen inequality is one of the most famous and most important inequalities in mathematical analysis.

In [2], some estimates about the sharpness of the Jensen inequality are given. In particular, the difference

is estimated, where φ is a convex function of class \(C^{2}\).

The authors in [2] expanded \(\varphi ( f(x) ) \) around any given value of \(f(x)\), say around \(c=f(x_{0})\), which can be arbitrarily chosen in the domain I of φ, such that \(c=f(x_{0})\) is in the interior of I, and as their first result, they get the following inequalities:

where \(I_{2}\) denotes the domain of \(\varphi ''\).

The main aim of our paper is to give an improvement of that result using various Green functions and considering the case of the real Stieltjes measure, not necessarily positive.

2 Preliminary results

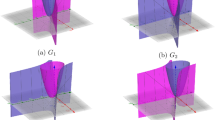

Consider the Green functions \(G_{k}:[\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) defined by

All these functions are convex and continuous with respect to both s and t.

We have the following lemma (see also [16] and [17]).

Lemma 1

For every function \(\varphi \in C^{2}[\alpha , \beta ]\), we have the following identities:

where the functions \(G_{k}\) (\(k=0,1,2,3,4\)) are defined as before in (1)–(5).

Proof

By integrating by parts we get

The other identities are proved analogously. □

Remark 1

The result (7) given in the previous lemma represents a special case of the representation of the function φ using the so-called “two-point right focal” interpolating polynomial in the case where \(n = 2\) and \(p = 0\) (see [1]).
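To make the integration-by-parts argument concrete, the following sketch verifies a representation of the right focal type mentioned in Remark 1. The kernel \(G\) used here is an assumption introduced only for illustration, since the definitions (1)–(5) are not reproduced in this text: \(G(t,s)=\alpha -s\) for \(\alpha \leq s\leq t\) and \(G(t,s)=\alpha -t\) for \(t\leq s\leq \beta \). For \(\varphi \in C^{2}[\alpha , \beta ]\) and \(x\in [\alpha , \beta ]\),

$$ \begin{aligned} \int_{\alpha }^{\beta } G(x,s)\varphi ''(s) \,ds &= \int_{\alpha }^{x} (\alpha -s)\varphi ''(s) \,ds + (\alpha -x) \int_{x}^{\beta } \varphi ''(s) \,ds \\ &= (\alpha -x)\varphi '(x) + \varphi (x) - \varphi (\alpha ) + (\alpha -x) \bigl( \varphi '(\beta ) - \varphi '(x) \bigr) \\ &= \varphi (x) - \varphi (\alpha ) - (x-\alpha )\varphi '(\beta ). \end{aligned} $$

Under this assumption, \(\varphi (x) = \varphi (\alpha ) + (x-\alpha )\varphi '(\beta ) + \int_{\alpha }^{\beta } G(x,s)\varphi ''(s) \,ds\), that is, the affine part interpolates the value of φ at α and the derivative of φ at β.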

Using the results from the previous lemma, the authors in [16] and [17] gave a uniform treatment of the Jensen-type inequalities, allowing the measure also to be negative. In this paper, we give some further results in this direction.

3 Main results

To simplify the notation, for functions g and λ, we denote

$$ \overline{g}=\frac{\int_{a}^{b} g(x) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)}. $$

Theorem 1

Let \(g:[a,b]\rightarrow \mathbb{R}\) be a continuous function, and let \(\varphi \in C^{2}[\alpha , \beta ]\), where the image of g is a subset of \([\alpha , \beta ]\). Let \(\lambda :[a,b]\rightarrow \mathbb{R}\) be a continuous function or a function of bounded variation such that \(\lambda (a)\neq \lambda (b)\) and \(\overline{g}\in [\alpha , \beta ]\). Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Let \(p,q\in \mathbb{R}\), \(1\leq p,q \leq \infty \), be such that \(\frac{1}{p}+\frac{1}{q}=1\).

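Then

$$ \biggl\vert \frac{\int_{a}^{b} \varphi ( g(x) ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)} - \varphi ( \overline{g} ) \biggr\vert \leq Q \cdot \bigl\Vert \varphi '' \bigr\Vert _{p}, \tag{11} $$

where

$$ Q= \begin{cases} [ \int_{\alpha }^{\beta } \vert \frac{\int_{a}^{b} G_{k} ( g(x),s ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)} - G_{k} ( \overline{g},s ) \vert ^{q} \,ds ] ^{\frac{1}{q}} & \text{for } q \neq \infty , \\ \sup_{s\in [\alpha ,\beta ]} \{ \vert \frac{\int_{a}^{b} G_{k} ( g(x),s ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)} - G_{k} ( \overline{g},s ) \vert \} & \text{for } q = \infty . \end{cases} \tag{12} $$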

Proof

From Lemma 1 we know that we can represent every function \(\varphi \in C^{2}[\alpha , \beta ]\) in adequate form using the previously defined functions \(G_{k}\) (\(k=0,1,2,3,4\)). By some calculation we can easily get that, for every function \(\varphi \in C^{2}[\alpha , \beta ]\) and for any \(k\in \{0,1,2,3,4\}\), we have

$$ \frac{\int_{a}^{b} \varphi ( g(x) ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)} - \varphi ( \overline{g} ) = \int_{\alpha }^{\beta } \biggl( \frac{\int_{a}^{b} G_{k} ( g(x),s ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)} - G_{k} ( \overline{g},s ) \biggr) \varphi ''(s) \,ds. \tag{13} $$

Taking the absolute value of (13), using the triangle inequality for integrals, and then applying the Hölder inequality, we get:

and the statement of the theorem follows. □
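The chain of estimates used in this proof can be sketched as follows. Here \(h_{k}(s)\) is shorthand introduced only for this illustration (it is not the paper's notation) for the term \(\frac{\int_{a}^{b} G_{k} ( g(x),s ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)}- G_{k} ( \overline{g},s ) \) that multiplies \(\varphi ''(s)\) in (13):

$$ \biggl\vert \int_{\alpha }^{\beta } h_{k}(s) \varphi ''(s) \,ds \biggr\vert \leq \int_{\alpha }^{\beta } \bigl\vert h_{k}(s) \bigr\vert \cdot \bigl\vert \varphi ''(s) \bigr\vert \,ds \leq \Vert h_{k} \Vert _{q} \cdot \bigl\Vert \varphi '' \bigr\Vert _{p}, \qquad \frac{1}{p}+\frac{1}{q}=1. $$

The first step is the triangle inequality for integrals and the second is the Hölder inequality on \([\alpha , \beta ]\); with this shorthand, the constant Q of the theorem is \(\Vert h_{k} \Vert _{q}\).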

Now consider the case \(q=1\), that is, \(p=\infty \). If the term \(\frac{\int_{a}^{b} G_{k} ( g(x),s ) \,d\lambda (x)}{\int_{a} ^{b} \,d\lambda (x)}- G_{k} ( \overline{g},s ) \) does not change sign on \([\alpha , \beta ]\), then we can calculate Q. We have the following result.

Corollary 1

Let \(g:[a,b]\rightarrow \mathbb{R}\) be a continuous function, and let \(\varphi \in C^{2}([\alpha , \beta ])\), where the image of g is a subset of \([\alpha , \beta ]\). Let \(\lambda :[a,b]\rightarrow \mathbb{R}\) be a continuous function or a function of bounded variation such that \(\lambda (a)\neq \lambda (b)\) and \(\overline{g}\in [\alpha , \beta ]\). Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Suppose that, for any \(k\in \{0,1,2,3,4 \}\),

for all \(s\in [\alpha , \beta ]\) or that, for any \(k\in \{0,1,2,3,4\}\), the reverse inequality in (14) holds for all \(s\in [\alpha , \beta ]\). Then

Proof

Let us start from the previous theorem and set \(q=1\), \(p=\infty \). Consider any \(k\in \{0,1,2,3,4\}\). Then (11) transforms into

When the term \(\frac{\int_{a}^{b} G_{k} ( g(x),s ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)}- G_{k} ( \overline{g},s ) \) does not change sign on \([\alpha , \beta ]\), we can calculate the integral on the right side of (16):

(For the proof, see [16] and [17].)

If inequality (14) holds for all \(s\in [\alpha , \beta ]\), then (16) becomes

Also, if the reverse inequality in (14) holds for all \(s\in [\alpha , \beta ]\), then (16) becomes

This means that if inequality (14) or the reverse inequality in (14) holds for all \(s\in [\alpha , \beta ]\), then we have (15). □
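One way to see where a closed form for Q can come from in the case \(q=1\) is the following sketch. It assumes that the identity relating the Jensen difference of φ to the integral of the kernel difference against \(\varphi ''\) holds for every \(\varphi \in C^{2}[\alpha , \beta ]\), and applies it to the test function \(\varphi _{0}(x)=\frac{x^{2}}{2}\), a choice made here for illustration (the paper defers this computation to [16] and [17]). Since \(\varphi _{0}''=1\),

$$ \int_{\alpha }^{\beta } \biggl( \frac{\int_{a}^{b} G_{k} ( g(x),s ) \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)} - G_{k} ( \overline{g},s ) \biggr) \,ds = \frac{1}{2} \biggl( \frac{\int_{a}^{b} g(x)^{2} \,d\lambda (x)}{\int_{a}^{b} \,d\lambda (x)} - \overline{g}^{2} \biggr) . $$

When the integrand on the left keeps one sign on \([\alpha , \beta ]\), the integral of its absolute value is the absolute value of the right-hand side, which does not depend on k.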

Remark 2

We can get the same result using the Lagrange mean-value theorems from [16] and [17], as they state that, for the functions g, φ, λ, \(G_{k}\) (\(k=0,1,2,3,4\)) defined as in the previous theorem, if inequality (14) or the reverse inequality in (14) holds for all \(s\in [\alpha , \beta ]\), then there exists \(\xi \in [\alpha , \beta ]\) such that

The next result represents an improvement of the aforementioned result from [2].

Corollary 2

Let \(g:[a,b]\rightarrow \mathbb{R}\) be a continuous function, and let \(\varphi \in C^{2}([\alpha , \beta ])\), where the image of g is a subset of \([\alpha , \beta ]\). Let \(\lambda :[a,b]\rightarrow \mathbb{R}\) be a continuous function or a function of bounded variation such that \(\lambda (a)\neq \lambda (b)\) and \(\overline{g}\in [\alpha , \beta ]\). Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Let \(x_{0} \in [a,b]\) be arbitrarily chosen, and let \(g(x_{0})=c\).

If for any \(k\in \{0,1,2,3,4\}\), inequality (14) or the reverse inequality in (14) holds for all \(s\in [\alpha , \beta ]\), then

Proof

Let \(x_{0} \in [a,b]\) be arbitrarily chosen, and let \(g(x_{0})=c\). We have:

Under the assumptions of the previous corollary, applying (22) to (15) and then using the triangle inequality, we get:

□

4 Discrete case

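The discrete Jensen inequality states that

$$ \varphi \biggl( \frac{1}{\sum_{i=1}^{n} r_{i}} \sum_{i=1}^{n} r_{i} x_{i} \biggr) \leq \frac{1}{\sum_{i=1}^{n} r_{i}} \sum_{i=1}^{n} r_{i} \varphi (x_{i}) $$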

for a convex function \(\varphi : I \to \mathbf{R}\), \(I \subseteq \mathbf{R}\), an n-tuple \(\mathbf{x}= (x_{1},\ldots,x_{n})\) (\(n\geq 2\)), and a nonnegative n-tuple \(\mathbf{r}= (r_{1},\ldots,r_{n})\) such that \(\sum_{i=1}^{n} r_{i}>0\).
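As a small numerical illustration of the discrete Jensen inequality, the following Python sketch computes the difference between the weighted mean of \(\varphi (x_{i})\) and φ at the weighted mean for nonnegative weights. The function name `jensen_gap` and the sample data are hypothetical choices made for this example only.

```python
import math


def jensen_gap(phi, xs, rs):
    """Weighted mean of phi(x_i) minus phi of the weighted mean of the x_i."""
    total = sum(rs)
    if total == 0:
        raise ValueError("the weights must not sum to zero")
    xbar = sum(r * x for r, x in zip(rs, xs)) / total
    return sum(r * phi(x) for r, x in zip(rs, xs)) / total - phi(xbar)


# Illustrative data: a nonnegative weight vector with positive sum.
xs = [0.5, 1.0, 2.0, 4.0]
rs = [1.0, 2.0, 0.0, 3.0]

gap_exp = jensen_gap(math.exp, xs, rs)               # convex phi: gap >= 0
gap_square = jensen_gap(lambda x: x * x, xs, rs)     # convex phi: gap >= 0
gap_affine = jensen_gap(lambda x: 3 * x + 1, xs, rs) # affine phi: gap is zero
```

For convex φ the gap is nonnegative, and for affine φ it vanishes up to rounding, which is the equality case of the inequality.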

In [16] and [17], a generalization of that result is given. There \(r_{i}\) may also be negative, provided that their sum is different from 0, but an additional condition on \(r_{i}\), \(x_{i}\) is imposed in terms of the Green functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) defined in (1)–(5).

To simplify the notation, we denote \(R_{n}=\sum_{i=1}^{n} r_{i}\) and \(\overline{x}=\frac{1}{R_{n}}\sum_{i=1}^{n} r_{i} x_{i}\).

As we already know (from Lemma 1) how to represent every function \(\varphi \in C^{2}[\alpha , \beta ]\) in an adequate form using the previously defined functions \(G_{k}\) (\(k=0,1,2,3,4\)), by some calculation it is easy to show that

$$ \frac{1}{R_{n}} \sum_{i=1}^{n} r_{i} \varphi (x_{i}) - \varphi ( \overline{x} ) = \int_{\alpha }^{\beta } \Biggl( \frac{1}{R_{n}} \sum_{i=1}^{n} r_{i} G_{k}(x_{i},s) - G_{k}(\overline{x},s) \Biggr) \varphi ''(s) \,ds. \tag{24} $$

Similarly to the integral case, applying the Hölder inequality to (24), we get the following result.

Theorem 2

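Let \(x_{i}\in [a,b]\subseteq [\alpha ,\beta ]\) and \(r_{i} \in \mathbb{R}\) (\(i=1,\ldots,n\)) be such that \(R_{n}\neq 0\) and \(\overline{x}\in [\alpha ,\beta ]\), and let \(\varphi \in C^{2}[\alpha , \beta ]\). Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Furthermore, let \(p,q\in \mathbb{R}\), \(1\leq p,q\leq \infty \), be such that \(\frac{1}{p}+\frac{1}{q}=1\). Then

$$ \Biggl\vert \frac{1}{R_{n}} \sum_{i=1}^{n} r_{i} \varphi (x_{i}) - \varphi ( \overline{x} ) \Biggr\vert \leq Q \cdot \bigl\Vert \varphi '' \bigr\Vert _{p}, \tag{25} $$

where

$$ Q= \begin{cases} [ \int_{\alpha }^{\beta } \vert \frac{1}{R_{n}} \sum_{i=1}^{n} r_{i} G_{k}(x_{i},s) - G_{k}(\overline{x},s) \vert ^{q} \,ds ] ^{\frac{1}{q}} & \text{for } q \neq \infty , \\ \sup_{s\in [\alpha ,\beta ]} \{ \vert \frac{1}{R_{n}} \sum_{i=1}^{n} r_{i} G_{k}(x_{i},s) - G_{k}(\overline{x},s) \vert \} & \text{for } q = \infty . \end{cases} \tag{26} $$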
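The bound of Theorem 2 has the form \(\vert \frac{1}{R_{n}}\sum r_{i}\varphi (x_{i})-\varphi (\overline{x}) \vert \leq Q\cdot \Vert \varphi '' \Vert _{p}\), with Q the \(L^{q}\) norm of \(s\mapsto \frac{1}{R_{n}}\sum r_{i} G_{k}(x_{i},s)-G_{k}(\overline{x},s)\). The Python sketch below checks this numerically for \(q=1\), \(p=\infty \), with one negative weight. Everything concrete in it is an assumption made for illustration: the kernel \(G(t,s)=\alpha -\min (s,t)\) is not taken from (1)–(5), the data are arbitrary, and the integrals are approximated with a simple midpoint rule.

```python
import math

ALPHA, BETA = 0.0, 2.0  # illustrative interval [alpha, beta]


def green(t, s):
    # Assumed kernel (not the paper's (1)-(5)): G(t, s) = alpha - min(s, t).
    return ALPHA - min(s, t)


def weighted_mean(values, rs):
    """(1/R_n) * sum r_i * v_i; the weights may be negative if R_n != 0."""
    return sum(r * v for r, v in zip(rs, values)) / sum(rs)


def kernel_gap(s, xs, rs):
    """(1/R_n) * sum r_i G(x_i, s) - G(xbar, s)."""
    xbar = weighted_mean(xs, rs)
    return weighted_mean([green(x, s) for x in xs], rs) - green(xbar, s)


def midpoint(fn, a, b, n=20000):
    """Composite midpoint rule for the integral of fn over [a, b]."""
    h = (b - a) / n
    return h * sum(fn(a + (i + 0.5) * h) for i in range(n))


xs = [0.2, 1.0, 1.7]
rs = [0.7, -0.2, 0.5]   # R_n = 1.0, xbar = 0.79 lies in [ALPHA, BETA]
phi = math.exp          # phi'' = exp, so sup |phi''| on [0, 2] is e^2

gap = weighted_mean([phi(x) for x in xs], rs) - phi(weighted_mean(xs, rs))
via_kernel = midpoint(lambda s: kernel_gap(s, xs, rs) * math.exp(s), ALPHA, BETA)
Q = midpoint(lambda s: abs(kernel_gap(s, xs, rs)), ALPHA, BETA)
bound = Q * math.exp(BETA)  # Q * ||phi''||_infinity
```

In this example `gap` and `via_kernel` agree up to quadrature error, which reflects the integral representation of the Jensen difference, and `abs(gap)` stays below `bound`.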

Set \(q=1\), \(p=\infty \). If the term \(\frac{1}{R_{n}} \sum_{i=1} ^{n} r_{i} G_{k}(x_{i},s)- G_{k}(\overline{x},s)\) does not change sign on \([\alpha ,\beta ]\), then we can calculate Q, and we get the following result.

Corollary 3

Let \(x_{i}\in [a,b]\subseteq [\alpha ,\beta ]\) and \(r_{i} \in \mathbb{R}\) (\(i=1,\ldots,n\)) be such that \(R_{n}\neq 0\) and \(\overline{x}\in [\alpha ,\beta ]\), and let \(\varphi \in C^{2}[\alpha , \beta ]\). Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). If for any \(k\in \{0,1,2,3,4\}\), the inequality

or the reverse inequality in (27) holds for all \(s\in [\alpha , \beta ]\), then

Let \(c \in [a,b]\subseteq [\alpha ,\beta ]\) be arbitrarily chosen. Then

and we have the following result.

Corollary 4

Let \(x_{i}\in [a,b]\subseteq [\alpha ,\beta ]\) and \(r_{i} \in \mathbb{R}\) (\(i=1,\ldots,n\)) be such that \(R_{n}\neq 0\) and \(\overline{x}\in [\alpha ,\beta ]\), and let \(\varphi \in C^{2}[\alpha , \beta ]\). Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ]\rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Let \(c \in [a,b]\subseteq [\alpha , \beta ]\) be arbitrarily chosen.

If for any \(k\in \{0,1,2,3,4\}\), inequality (27) or the reverse inequality in (27) holds for all \(s\in [ \alpha , \beta ]\), then

5 Some applications

5.1 Applications to Csiszár f-divergence

Divergences between probability distributions have been introduced to measure the difference between them. Many different types of divergences exist, for example, the f-divergence, Rényi divergence, Jensen–Shannon divergence, and so on (see, e.g., [8] and [18]). Divergences have numerous applications in many fields: anthropology and genetics, economics, ecological studies, music, signal processing, and pattern recognition.

The Jensen inequality plays an important role in obtaining inequalities for divergences between probability distributions, and there are many papers dealing with inequalities for divergences and entropies (see, e.g., [7, 9, 12]).

In this section, we give some applications of our results, and we first introduce the basic notions.

Csiszár [3, 4] defined the f-divergence functional as follows.

Definition 1

Let \(f:[ 0,\infty \rangle \rightarrow [ 0,\infty \rangle \) be a convex function, and let \(\mathbf{p}:= ( p_{1},\ldots,p_{n} ) \) and \(\mathbf{q}:= ( q_{1},\ldots,q_{n} ) \) be positive probability distributions. The f-divergence functional is

$$ D_{f}(\mathbf{p},\mathbf{q}) := \sum_{i=1}^{n} q_{i} f \biggl( \frac{p_{i}}{q_{i}} \biggr) . $$

The undefined expressions can be interpreted by

$$ f(0):=\lim_{t\rightarrow 0+} f(t), \qquad 0 f \biggl( \frac{0}{0} \biggr) :=0, \qquad 0 f \biggl( \frac{a}{0} \biggr) :=\lim_{t\rightarrow 0+} t f \biggl( \frac{a}{t} \biggr) , \quad a>0. $$

This definition of the f-divergence functional can be generalized for a function \(f:I\rightarrow \mathbb{R}\), \(I\subset \mathbb{R}\), where \(\frac{p_{i}}{q_{i}}\in I\) for \(i=1,\ldots,n\), as follows (see also [7]).

Definition 2

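Let \(I\subset \mathbb{R}\) be an interval, and let \(f:I\rightarrow \mathbb{R}\) be a function. Let \(\mathbf{p}:= ( p_{1},\ldots,p _{n} ) \in \mathbb{R}^{n}\) and \(\mathbf{q}:= ( q_{1},\ldots,q_{n} ) \in \langle 0,\infty \rangle^{n}\) be such that

$$ \frac{p_{i}}{q_{i}}\in I, \quad i=1,\ldots,n. $$

Then let

$$ \hat{D}_{f}(\mathbf{p},\mathbf{q}) := \sum_{i=1}^{n} q_{i} f \biggl( \frac{p_{i}}{q_{i}} \biggr) . $$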
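A minimal Python sketch of the generalized functional, assuming the form \(\hat{D}_{f}(\mathbf{p},\mathbf{q})=\sum_{i=1}^{n} q_{i} f ( \frac{p_{i}}{q_{i}} ) \), which is consistent with the expression for \(\hat{D}_{\mathit{id} \cdot f}\) given in Theorem 3(b). The function name `f_divergence` and the sample vectors are hypothetical. The choice \(f(t)=(t-1)^{2}\) is used only as a test case; it gives \(\sum (p_{i}-q_{i})^{2}/q_{i}\).

```python
def f_divergence(f, p, q):
    """Sum of q_i * f(p_i / q_i); p may be any real vector, q must be positive."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    if any(qi <= 0 for qi in q):
        raise ValueError("all q_i must be positive")
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))


p = [0.5, 0.5]
q = [0.25, 0.75]

# f(t) = (t - 1)^2 yields sum (p_i - q_i)^2 / q_i.
chi_square = f_divergence(lambda t: (t - 1.0) ** 2, p, q)
direct = sum((pi - qi) ** 2 / qi for pi, qi in zip(p, q))
```

The function f is only evaluated at the ratios \(p_{i}/q_{i}\), which is why the definition requires these ratios to lie in the domain I.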

Now we apply Theorem 2 to \(\hat{D}_{f}(\mathbf{p},\mathbf{q})\) and get the following result.

Theorem 3

Let \(\mathbf{p}:= ( p_{1},\ldots,p_{n} ) \in \mathbb{R}^{n}\), and \(\mathbf{q}:= ( q_{1},\ldots,q_{n} ) \in \langle 0, \infty \rangle^{n}\) be such that

$$ \frac{p_{i}}{q_{i}}\in [\alpha , \beta ] \quad \text{for } i=1,\ldots,n. $$

Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ] \rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Furthermore, let \(p,q\in \mathbb{R}\), \(1\leq p,q\leq \infty \), be such that \(\frac{1}{p}+\frac{1}{q}=1\).

(a) If \(f: [\alpha , \beta ]\rightarrow \mathbb{R}\), \(f \in C^{2}[\alpha , \beta ]\), then

$$ \biggl\vert \frac{1}{\sum_{i=1}^{n} q_{i}} \hat{D}_{f}( \mathbf{p}, \mathbf{q}) - f \biggl( \frac{\sum_{i=1}^{n} p_{i}}{\sum_{i=1}^{n} q_{i}} \biggr) \biggr\vert \leq Q \cdot \bigl\Vert f'' \bigr\Vert _{p}, \tag{31} $$

where

$$ Q= \begin{cases} [ \int_{\alpha }^{\beta } \vert \frac{1}{\sum_{i=1}^{n} q_{i}} \hat{D}_{G_{k} (\cdot ,s)}(\mathbf{p},\mathbf{q})- G_{k} ( \frac{ \sum_{i=1}^{n} p_{i}}{\sum_{i=1}^{n} q_{i}},s ) \vert ^{q} \,ds ] ^{\frac{1}{q}} & \text{for } q \neq \infty , \\ \sup_{s\in [\alpha ,\beta ]} \{ \vert \frac{1}{\sum_{i=1}^{n} q_{i}} \hat{D}_{G_{k} (\cdot ,s)}(\mathbf{p},\mathbf{q})- G_{k} ( \frac{ \sum_{i=1}^{n} p_{i}}{\sum_{i=1}^{n} q_{i}},s ) \vert \} & \text{for } q = \infty . \end{cases} $$

(b) If \(\mathit{id} \cdot f \in C^{2}[\alpha , \beta ]\), then

$$ \biggl\vert \frac{1}{\sum_{i=1}^{n} q_{i}} \hat{D}_{\mathit{id} \cdot f}( \mathbf{p}, \mathbf{q}) - \frac{\sum_{i=1}^{n} p_{i}}{\sum_{i=1}^{n} q_{i}} f \biggl( \frac{\sum_{i=1}^{n} p_{i}}{\sum_{i=1}^{n} q_{i}} \biggr) \biggr\vert \leq Q \cdot \bigl\Vert (\mathit{id}\cdot f)'' \bigr\Vert _{p}, \tag{32} $$

where id is the identity function, \(\hat{D}_{\mathit{id} \cdot f}(\mathbf{p}, \mathbf{q}) = \sum_{i=1}^{n} p_{i}f ( \frac{p_{i}}{q_{i}} ) \), and

$$ Q= \begin{cases} [ \int_{\alpha }^{\beta } \vert \frac{1}{\sum_{i=1}^{n} q_{i}} \hat{D}_{G_{k} (\cdot ,s)}(\mathbf{p},\mathbf{q})- G_{k} ( \frac{ \sum_{i=1}^{n} p_{i}}{\sum_{i=1}^{n} q_{i}},s ) \vert ^{q} \,ds ] ^{\frac{1}{q}} & \text{for } q \neq \infty , \\ \sup_{s\in [\alpha ,\beta ]} \{ \vert \frac{1}{\sum_{i=1}^{n} q_{i}} \hat{D}_{G_{k} (\cdot ,s)}(\mathbf{p},\mathbf{q})- G_{k} ( \frac{ \sum_{i=1}^{n} p_{i}}{\sum_{i=1}^{n} q_{i}},s ) \vert \} & \text{for } q = \infty . \end{cases} $$

Proof

(a) The result follows directly from Theorem 2 by the substitutions \(\varphi :=f\), \(x_{i}:=\frac{p_{i}}{q_{i}}\), and \(r_{i}:=q_{i}\) (\(i=1,\ldots,n\)).

(b) The result follows from (a) by substitution \(f:=\mathit{id} \cdot f\). □

Let us mention two particular cases of the previous result.

The first one corresponds to the entropy of a positive probability distribution.

Definition 3

The Shannon entropy of a positive probability distribution \(\mathbf{p}:= ( p_{1},\ldots,p_{n} ) \) is defined by

$$ H(\mathbf{p}) := -\sum_{i=1}^{n} p_{i} \log p_{i}. $$

Corollary 5

Let \([\alpha , \beta ]\subseteq \langle 0,\infty \rangle \), and let \(\mathbf{q}:= ( q_{1},\ldots,q_{n} ) \in \langle 0,\infty \rangle^{n}\) be such that

Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ] \rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Furthermore, let \(p,q\in \mathbb{R}\), \(1\leq p,q\leq \infty \), be such that \(\frac{1}{p}+\frac{1}{q}=1\). Then

where

Proof

The result follows directly from Theorem 3(a) by substitution \(f:=\log \) and \(\mathbf{p}:= ( 1,\ldots,1 ) \). □
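The substitution used in this proof can be checked numerically. The sketch below assumes the forms \(\hat{D}_{f}(\mathbf{p},\mathbf{q})=\sum q_{i} f(p_{i}/q_{i})\) and \(H(\mathbf{q})=-\sum q_{i}\log q_{i}\) (natural logarithm); the function names and the sample distribution are hypothetical. With \(f=\log \) and \(\mathbf{p}=(1,\ldots,1)\), the functional reduces to the Shannon entropy of \(\mathbf{q}\).

```python
import math


def f_divergence(f, p, q):
    """Sum of q_i * f(p_i / q_i) for a positive vector q."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))


def shannon_entropy(q):
    """Shannon entropy -sum q_i * log(q_i) of a positive distribution."""
    return -sum(qi * math.log(qi) for qi in q)


q = [0.5, 0.25, 0.25]            # illustrative positive probability distribution
ones = [1.0] * len(q)            # the substitution p := (1, ..., 1)

lhs = f_divergence(math.log, ones, q)   # sum q_i * log(1 / q_i)
rhs = shannon_entropy(q)
```

Both values coincide because \(q_{i}\log (1/q_{i})=-q_{i}\log q_{i}\) for every i.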

The second one corresponds to the relative entropy or Kullback–Leibler divergence between two probability distributions.

Definition 4

The Kullback–Leibler divergence between two positive probability distributions \(\mathbf{p}:= ( p_{1},\ldots,p_{n} ) \) and \(\mathbf{q}:= ( q_{1},\ldots,q_{n} ) \) is defined by

$$ D(\mathbf{p}\,\Vert \,\mathbf{q}) := \sum_{i=1}^{n} p_{i} \log \frac{p_{i}}{q_{i}}. $$

Corollary 6

Let \([\alpha , \beta ]\subseteq \langle 0,\infty \rangle \), and let \(\mathbf{p}:= ( p_{1},\ldots,p_{n} ) \in \mathbb{R}^{n}\) and \(\mathbf{q}:= ( q_{1},\ldots,q_{n} ) \in \langle 0,\infty \rangle^{n}\) be such that

Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ] \rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Furthermore, let \(p,q\in \mathbb{R}\), \(1\leq p,q\leq \infty \), be such that \(\frac{1}{p}+\frac{1}{q}=1\).

Then

where id is the identity function, and

Proof

The result follows from Theorem 3(b) by substitution \(f:=\log \). □
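The link between Theorem 3(b) and the Kullback–Leibler divergence can be illustrated with a short Python sketch. It assumes \(\hat{D}_{f}(\mathbf{p},\mathbf{q})=\sum q_{i} f(p_{i}/q_{i})\) and the usual form \(\sum p_{i}\log (p_{i}/q_{i})\) of the divergence; the function names and the sample distributions are hypothetical.

```python
import math


def f_divergence(f, p, q):
    """Sum of q_i * f(p_i / q_i) for a positive vector q."""
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))


def kl_divergence(p, q):
    """Kullback-Leibler divergence sum p_i * log(p_i / q_i)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def id_times_log(t):
    """The function id * log, i.e. t -> t * log(t), for t > 0."""
    return t * math.log(t)


p = [0.5, 0.5]
q = [0.25, 0.75]

via_f_divergence = f_divergence(id_times_log, p, q)
direct = kl_divergence(p, q)
```

The two values agree because \(q_{i}\cdot \frac{p_{i}}{q_{i}}\log \frac{p_{i}}{q_{i}}=p_{i}\log \frac{p_{i}}{q_{i}}\).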

5.2 Applications to Zipf–Mandelbrot law

The forthcoming results deal with the so-called Zipf–Mandelbrot law.

George Kingsley Zipf (1902–1950) was a linguist who investigated the frequencies of different words in a text. The Zipf law is one of the basic laws in information science, bibliometrics, and linguistics (see [5]). In certain fields, such as economics and econometrics, this distribution is known as Pareto’s law, where it describes the distribution of the wealthiest members of the community (see [5], p. 125). In the mathematical sense, these two laws are the same; the only difference is that they are applied in different contexts (see [6], p. 294). The same kind of distribution can also be found in other scientific disciplines, such as physics, biology, earth and planetary sciences, computer science, demography, and the social sciences. For more information, we refer to [15].

The mathematician Benoit Mandelbrot (1924–2010) introduced a more general model of this law (see [11]). The new Zipf–Mandelbrot law has many different applications, for example, in information sciences [6], linguistics [13], ecology [14], music [10], and so on.

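Definition 5 ([7])

The Zipf–Mandelbrot law is a discrete probability distribution, depending on three parameters \(N\in \{ 1,2,\ldots \} \), \(t\in [0,\infty \rangle \), and \(v>0\) and defined by

$$ f(i;N,t,v) := \frac{1}{(i+t)^{v} H_{N,t,v}}, \quad i=1,\ldots,N, $$

where

$$ H_{N,t,v} := \sum_{k=1}^{N} \frac{1}{(k+t)^{v}}. $$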

When \(t=0\), then the Zipf–Mandelbrot law becomes the Zipf law.
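A minimal Python sketch of this distribution, assuming the standard form \(f(i;N,t,v)=\frac{1}{(i+t)^{v}H_{N,t,v}}\) with \(H_{N,t,v}=\sum_{k=1}^{N}(k+t)^{-v}\). The function name `zipf_mandelbrot` and the parameter values are hypothetical choices for illustration.

```python
def zipf_mandelbrot(N, t, v):
    """Probability mass function of the Zipf-Mandelbrot law as a list of length N."""
    if N < 1 or t < 0 or v <= 0:
        raise ValueError("require N >= 1, t >= 0 and v > 0")
    weights = [1.0 / (i + t) ** v for i in range(1, N + 1)]
    normalizer = sum(weights)  # plays the role of H_{N,t,v}
    return [w / normalizer for w in weights]


zipf = zipf_mandelbrot(3, 0.0, 1.0)      # t = 0: the Zipf law
shifted = zipf_mandelbrot(5, 2.5, 1.2)   # a general Zipf-Mandelbrot law
```

For \(N=3\), \(t=0\), \(v=1\) the weights are \(1, \frac{1}{2}, \frac{1}{3}\), so the probabilities are \(\frac{6}{11}, \frac{3}{11}, \frac{2}{11}\); in general the probabilities decrease with the rank i.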

We now apply the results of Theorem 3 to the Zipf–Mandelbrot law, which is a particular discrete probability distribution.

Corollary 7

Let \(\mathbf{p_{1}}\), \(\mathbf{p_{2}}\) be two Zipf–Mandelbrot laws with parameters \(N\in \{ 1,2,\ldots \} \), \(t_{1}, t_{2}\in [0, \infty \rangle \), and \(v_{1}\), \(v_{2}>0\), respectively, such that

Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ] \rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Furthermore, let \(p,q\in \mathbb{R}\), \(1\leq p,q\leq \infty \), be such that \(\frac{1}{p}+\frac{1}{q}=1\).

(a) If \(f: [\alpha , \beta ]\rightarrow \mathbb{R}\), \(f \in C^{2} ( [\alpha , \beta ] ) \), then

$$ \biggl\vert \frac{1}{A_{2}} \hat{D}_{f}(\mathbf{p_{1}}, \mathbf{p_{2}}) - f \biggl( \frac{A_{1}}{A_{2}} \biggr) \biggr\vert \leq Q \cdot \bigl\Vert f'' \bigr\Vert _{p}, $$

and

(b) if \(\mathit{id} \cdot f \in C^{2}[\alpha , \beta ]\), then

$$ \biggl\vert \frac{1}{A_{2}} \hat{D}_{\mathit{id} \cdot f}(\mathbf{p_{1}}, \mathbf{p_{2}}) - \frac{A_{1}}{A_{2}} f \biggl( \frac{A_{1}}{A_{2}} \biggr) \biggr\vert \leq Q \cdot \bigl\Vert (\mathit{id} \cdot f)'' \bigr\Vert _{p}, $$

where id is the identity function, and

$$ Q= \begin{cases} [ \int_{\alpha }^{\beta } \vert \frac{1}{A_{2}} \hat{D}_{G_{k} ( \cdot ,s)}(\mathbf{p_{1}},\mathbf{p_{2}})- G_{k} ( \frac{A_{1}}{A_{2}},s ) \vert ^{q} \,ds ] ^{\frac{1}{q}} & \text{for } q \neq \infty , \\ \sup_{s\in [\alpha ,\beta ]} \{ \vert \frac{1}{A_{2}} \hat{D}_{G_{k} (\cdot ,s)}(\mathbf{p_{1}},\mathbf{p_{2}})- G_{k} ( \frac{A_{1}}{A_{2}},s ) \vert \} & \text{for } q = \infty . \end{cases} $$

Although it is a particular case of the result just given, here we also present a result for the Shannon entropy.

Corollary 8

Let \([\alpha , \beta ]\subseteq \langle 0,\infty \rangle \), and let \(\mathbf{q}\) be the Zipf–Mandelbrot law as defined in Definition 5 such that

Let the functions \(G_{k}: [\alpha , \beta ]\times [\alpha , \beta ] \rightarrow \mathbb{R}\) (\(k=0,1,2,3,4\)) be as defined in (1)–(5). Furthermore, let \(p,q\in \mathbb{R}\), \(1\leq p,q\leq \infty \), be such that \(\frac{1}{p}+\frac{1}{q}=1\).

Then

where

References

Agarwal, R.P., Wong, P.J.Y.: Error Inequalities in Polynomial Interpolation and Their Applications. Kluwer Academic, Dordrecht (1993)

Costarelli, D., Spigler, R.: How sharp is the Jensen inequality? J. Inequal. Appl. 2015, 69 (2015). https://doi.org/10.1186/s13660-015-0591-x

Csiszár, I.: Information-type measures of difference of probability distributions and indirect observations. Studia Sci. Math. Hung. 2, 299–318 (1967)

Csiszár, I.: Information measures: a critical survey. In: Trans. 7th Prague Conf. on Info. Th., Statist. Decis. Funct., Random Processes and 8th European Meeting of Statist., vol. B, pp. 73–86. Academia, Prague (1978)

Diodato, V.: Dictionary of Bibliometrics. Haworth Press, New York (1994)

Egghe, L., Rousseau, R.: Introduction to Informetrics. Quantitative Methods in Library, Documentation and Information Science. Elsevier, New York (1990)

Horváth, L., Pečarić, Ð., Pečarić, J.: Estimations of f- and Rényi divergences by using a cyclic refinement of the Jensen’s inequality. Bull. Malays. Math. Sci. Soc. (2017). https://doi.org/10.1007/s40840-017-0526-4

Liese, F., Vajda, I.: Convex Statistical Distances. Teubner-Texte Zur Mathematik, vol. 95. Teubner, Leipzig (1987)

Lovričević, N., Pečarić, Ð., Pečarić, J.: Zipf–Mandelbrot law, f-divergences and the Jensen-type interpolating inequalities. J. Inequal. Appl. 2018, 36 (2018). https://doi.org/10.1186/s13660-018-1625-y

Manaris, B., Vaughan, D., Wagner, C.S., Romero, J., Davis, R.B.: Evolutionary music and the Zipf–Mandelbrot law: developing fitness functions for pleasant music. In: Proceedings of 1st European Workshop on Evolutionary Music and Art (EvoMUSART2003), pp. 522–534 (2003)

Mandelbrot, B.: An informational theory of the statistical structure of language. In: Jackson, W. (ed.) Communication Theory, pp. 486–502. Academic Press, New York (1953)

Mikić, R., Pečarić, Ð., Pečarić, J.: Inequalities of the Jensen and Edmundson–Lah–Ribarič type for 3-convex functions with applications. J. Math. Inequal. 12(3), 677–692 (2018). https://doi.org/10.7153/jmi-2018-12-52

Montemurro, M.A.: Beyond the Zipf–Mandelbrot law in quantitative linguistics. arXiv:cond-mat/0104066v2 (2001)

Mouillot, D., Lepretre, A.: Introduction of relative abundance distribution (RAD) indices, estimated from the rank-frequency diagrams (RFD), to assess changes in community diversity. In: Environmental Monitoring and Assessment, vol. 63, pp. 279–295. Springer, Berlin (2000)

Newman, M.E.J.: Power laws, Pareto distributions and Zipf’s law. http://arxiv.org/pdf/cond-mat/0412004.pdf?origin=publication_detail (30.9.2018)

Pečarić, J., Perić, I., Rodić Lipanović, M.: Uniform treatment of Jensen type inequalities. Math. Rep. 16(66), 2, 183–205 (2014)

Pečarić, J., Rodić, M.: Uniform treatment of Jensen type inequalities II. Math. Rep. Accepted

Vajda, I.: Theory of Statistical Inference and Information. Kluwer Academic, Dordrecht (1989)

Acknowledgements

Not applicable.

Availability of data and materials

Not applicable.

Funding

The funding for this research and the costs of publication are covered by a lump sum granted by University of Zagreb Research Funding (PP2/18).

Contributions

All authors contributed equally and significantly in writing this article. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Pečarić, Ð., Pečarić, J. & Rodić, M. About the sharpness of the Jensen inequality. J Inequal Appl 2018, 337 (2018). https://doi.org/10.1186/s13660-018-1923-4

DOI: https://doi.org/10.1186/s13660-018-1923-4