Abstract

We present a novel finite-time average consensus protocol for multiagent systems based on an event-triggered control strategy. The stability of the system is proved. A lower bound on the interevent time is obtained, which guarantees that there is no Zeno behavior. Moreover, an upper bound on the convergence time is obtained, and the dependence of the convergence time on the protocol parameter and the initial state is analyzed. Lastly, simulations are conducted to verify the effectiveness of the results.

1 Introduction

In recent years, many applications have required large numbers of vehicles or robots to work cooperatively to accomplish a complicated task. Consequently, many researchers have devoted themselves to the coordination control of multiagent systems [1, 2]. The main research topics in this field include consensus [3], flocking [4], formation control [5, 6], and the collective behavior of swarms [7, 8]. Of these, consensus is the basis for studying the other problems. Multiagent consensus refers to the design of a proper consensus protocol, based on the local information of each agent, such that all agents reach an agreement with regard to certain quantities of interest [4].

In practical multiagent systems, each agent is usually equipped with a small embedded microprocessor and has limited energy, so its computing power and working time are limited. These constraints have driven researchers to develop event-triggered control schemes, and some important achievements have been made recently [9–11]. For example, in [12] the authors introduced a deterministic event-triggered strategy to develop consensus control algorithms, and a lower bound on the interevent time was obtained to guarantee that there is no Zeno behavior. In [13] the problems of event-triggered integrated fault detection, isolation, and control for discrete-time linear systems were considered. It was shown that the amount of data sent through the sensor-to-filter and filter-to-actuator channels is dramatically decreased by applying an event-triggered technique to both the sensor and filter nodes. In [14] the authors proposed a new event-based control approach to multiagent consensus. The measurement broadcasts were scheduled in an event-based fashion, so that continuous monitoring of each neighbor's state is no longer required. In [15] the authors proposed a combinational measurement strategy for event design and developed a new event-triggered control algorithm. In this strategy, each agent is triggered only at its own event times, which lowers the frequency of controller updates and reduces the amount of communication. In [16] the authors proposed a self-triggered consensus algorithm for multiagent systems. The algorithm is simpler in formulation and computation, so more energy can be saved in practical multiagent systems.

Moreover, the convergence time is a significant performance indicator for a consensus protocol. In most works the protocols achieve state consensus only over an infinite time interval, that is, consensus is achieved only asymptotically. However, in several cases the stability or performance of a multiagent system over a finite time interval needs to be considered. Finite-time stability focuses on the behavior of system responses over a finite time interval [17, 18], so studying the finite-time stability of multiagent systems is valuable. The finite-time stability analysis of multiagent systems has also attracted the attention of many researchers [19–22].

So far, few results have been reported in the literature on finite-time event-triggered consensus protocols for multiagent systems. To the best of our knowledge, the authors of [23] presented two novel nonlinear consensus protocols based on an event-driven strategy to investigate the finite-time consensus problem of leaderless and leader-following multiagent systems. However, the proposed protocols contain many parameters, which makes them complex and restrictive, and the relationship between the convergence time and the parameters is unclear. Motivated by these observations, a new consensus protocol based on an event-triggered control strategy is proposed in this paper.

The main contributions of this paper can be summarized as follows: (1) A new finite-time consensus protocol based on an event-triggered control strategy for multiagent systems is presented, and the system stability is proved. The protocol is simple in formulation and computation. (2) A lower bound on the interevent time is obtained, which guarantees that there is no Zeno behavior. (3) An upper bound on the convergence time is obtained, and the dependence of the convergence time on the protocol parameter and the initial state is analyzed.

The rest of this paper is organized as follows. In Section 2, we introduce some essential background and present the problem statement. The main results and the proof are provided in Section 3. The simulation results are shown in Section 4, and the conclusions and the future works are provided in Section 5.

Notations

\(\mathbf{1}= [1,1,\ldots,1]^{\mathrm{T}}\) with compatible dimensions, \(\mathcal{I}_{n} = \{ 1,2,\ldots,n\}\). \(|x|\) is the absolute value of a real number x, \(\|\cdot\|\) denotes the 2-norm in \(R^{n}\), \(\operatorname{span}\{ \mathbf{1}\} = \{\boldsymbol{\varepsilon} \in R^{n}:\boldsymbol{\varepsilon} = r\mathbf{1},r \in R\}\), and E is the Euler number (approximately 2.71828).

2 Background and problem statement

2.1 Preliminaries

In this subsection, we introduce some basic definitions and results of algebraic graph theory. A comprehensive treatment of algebraic graph theory can be found in [24]. Moreover, we present two essential lemmas.

Consider an undirected graph \(\mathcal{G} = ( \mathcal {V},\mathcal{E},\mathcal{A} )\) with n vertices, where \(\mathcal{V} = \{ v_{1},v_{2},\ldots,v_{n}\}\) is the vertex set and \(\mathcal{E}\) is the edge set. The adjacency matrix \(\mathcal{A} = [a_{ij}]\) is the \(n \times n\) matrix defined by \(a _{ij}=1\) for \((i,j) \in\mathcal{E}\) and \(a _{ij}=0\) otherwise. The neighbors of a vertex \(v _{i}\) are denoted by \(\mathcal {N}_{i} = \{ v_{j} \in\mathcal{V}:(v_{i},v_{j}) \in \mathcal{E}\}\); if \(v_{j} \in \mathcal{N}_{i}\), then the vertices \(v _{i}\) and \(v _{j}\) are called adjacent. A path from \(v _{i}\) to \(v _{j}\) is a sequence of distinct vertices starting with \(v _{i}\) and ending with \(v _{j}\) such that consecutive vertices are adjacent. A graph is connected if there is a path between any two distinct vertices. The Laplacian of a graph, \(L= [l _{ij}] \in R^{n \times n}\), is defined by \(l_{ii} = \sum_{k = 1,k \ne i}^{n} a_{ik}\) and \(l _{ij}=- a _{ij}\) for \(i \ne j\). L always has a zero eigenvalue, and \(\mathbf{1}\) is an associated eigenvector. We denote the eigenvalues of L by \(0= \lambda _{1}(L)\leq\lambda_{2}(L)\leq\cdots\leq\lambda _{n}(L)\). For an undirected and connected graph, \(\lambda_{2}(L) = \min_{\boldsymbol{\varepsilon} \ne0,\mathbf{1}^{\mathrm{T}}\boldsymbol{\varepsilon} = 0}\frac{\boldsymbol{\varepsilon}^{\mathrm{T}}L\boldsymbol{\varepsilon}}{ \boldsymbol{\varepsilon}^{\mathrm{T}}\boldsymbol{\varepsilon}} > 0\). Therefore, if \(\mathbf{1}^{\mathrm{T}} \boldsymbol{\varepsilon} = 0\), then \(\boldsymbol{\varepsilon} ^{\mathrm{T}} L \boldsymbol{\varepsilon} \geq \lambda_{2}(L) \boldsymbol{\varepsilon} ^{\mathrm{T}} \boldsymbol{\varepsilon} \).
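The Laplacian construction and the bound \(\boldsymbol{\varepsilon}^{\mathrm{T}} L \boldsymbol{\varepsilon} \geq \lambda_{2}(L) \boldsymbol{\varepsilon}^{\mathrm{T}} \boldsymbol{\varepsilon}\) can be checked on a small example. The sketch below is only an illustration: the three-vertex path graph, the helper names `laplacian` and `quad_form`, and the test vectors are assumptions made here, and the value \(\lambda_{2}(L) = 1\) for this path is the known eigenvalue rather than something the code computes.

```python
def laplacian(adj):
    """Laplacian L = diag(degrees) - A of a symmetric 0/1 adjacency matrix
    with zero diagonal (no self-loops)."""
    n = len(adj)
    return [[sum(adj[i]) if i == j else -adj[i][j] for j in range(n)]
            for i in range(n)]


def quad_form(L, eps):
    """Return the quadratic form eps^T L eps."""
    n = len(L)
    return sum(eps[i] * L[i][j] * eps[j] for i in range(n) for j in range(n))


# Hypothetical example: path graph v1 - v2 - v3 (Laplacian eigenvalues 0, 1, 3).
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
L = laplacian(A)
lambda2 = 1.0  # known second-smallest eigenvalue of this path graph

row_sums = [sum(row) for row in L]  # L * 1 = 0, so every row sums to zero

# Two vectors orthogonal to the all-ones vector.
eps_a = [1.0, 0.0, -1.0]   # eigenvector for lambda2: the bound is tight
eps_b = [1.0, -2.0, 1.0]   # eigenvector for the largest eigenvalue

for eps in (eps_a, eps_b):
    lhs = quad_form(L, eps)
    rhs = lambda2 * sum(e * e for e in eps)
    print(eps, lhs, rhs, lhs >= rhs)
```

For `eps_a` the two sides coincide, which shows that the inequality cannot be improved without further assumptions on \(\boldsymbol{\varepsilon}\).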

Lemma 1

([25])

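Suppose that a function \(V(t): [0, \infty) \rightarrow[0, \infty)\) is differentiable and satisfies the condition

\[\frac{dV(t)}{dt} \le - K V(t)^{\alpha}, \tag{1}\]

where \(K >0\) and \(0 < \alpha <1\). Then \(V(t)\) reaches zero at some time \(t ^{*}\), and \(V(t) =0\) for all \(t \geq t ^{*}\), where

\[t^{*} \le \frac{V(0)^{1 - \alpha}}{K ( 1 - \alpha )}. \tag{2}\]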

Lemma 2

([26])

If \(\xi_{1},\xi_{2}, \ldots,\xi_{n} \ge 0\) and \(0< p \leq1\), then

\[\Biggl( \sum_{i = 1}^{n} \xi_{i} \Biggr)^{p} \le \sum_{i = 1}^{n} \xi_{i}^{p}. \tag{3}\]

2.2 Problem formulation

The multiagent system investigated in this study consists of n agents, and the state of agent i is denoted by \(x _{i}\), \(i \in\mathcal{I}_{n}\). Denote the vector \(\mathbf{x} =[x _{1}, x _{2}, \ldots, x _{n}]^{\mathrm{T}}\). The dimension of \(x _{i}\) can be arbitrary as long as it is the same for all agents. For simplicity, we only analyze the one-dimensional case. The results remain valid for multidimensional cases through the introduction of the Kronecker product and inequality (3). We suppose that each agent satisfies the dynamics

\[\dot{x}_{i}(t) = u_{i}(t),\quad i \in\mathcal{I}_{n}, \tag{4}\]

where \(u _{i}\) is the protocol, which is designed based on the state information received by the corresponding agent from its neighbors.

With the given protocol \(u _{i}\), if for any initial state there exist a stable equilibrium \(x ^{*}\) and a time \(t ^{*}\) such that \(x_{i}(t)= x ^{*}\) for all \(i \in\mathcal{I}_{n}\) and all \(t \geq t ^{*}\), then the finite-time consensus problem is solved [25]. In addition, the average consensus problem is solved if the final consensus state is the average of the initial states, namely, \(x_{i}(t) \to \sum_{j = 1}^{n} x_{j} (0) / n\) for all \(i \in\mathcal{I}_{n}\) as \(t \to\infty\).

2.3 Event-triggered control consensus protocol

In the event design, we suppose that each agent can measure its own state \(x _{i}(t)\) and reliably obtain its neighbors' states. For each vertex \(v_{i}\) and \(t \geq 0\), introduce a measurement error \(e _{i}(t)\) and denote the vector \(\mathbf{e} (t)=[e _{1}(t), e _{2}(t),\ldots,e _{n}(t)]^{\mathrm{T}}\). The time instants at which the events are triggered are defined by the condition \(f(e(t _{i}))=0\). Suppose the triggering time sequence of vertex \(v _{i}\) is \(t^{i} = 0,\tau_{1}, \ldots,\tau_{s}^{i}, \ldots\).

Between two consecutive events, the value of the input u is held constant, which can be formalized as

The control input is therefore a piecewise constant function of time, and its value is equal to the last control update.
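The hold behavior between events can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the helper `held_value`, the event instants, and the stored inputs are all hypothetical and serve only to show that the input at time t equals the value computed at the latest event time not exceeding t.

```python
from bisect import bisect_right


def held_value(t, event_times, values):
    """Return the value set at the latest event time <= t (zero-order hold).

    event_times must be sorted in increasing order and aligned with values.
    """
    k = bisect_right(event_times, t) - 1
    if k < 0:
        raise ValueError("t precedes the first event")
    return values[k]


# Hypothetical event instants and the inputs computed at those instants.
event_times = [0.0, 0.4, 1.1]
inputs = [1.2, 0.5, 0.1]

print(held_value(0.39, event_times, inputs))  # still the input from t = 0.0
print(held_value(0.4, event_times, inputs))   # updated exactly at the event
print(held_value(5.0, event_times, inputs))   # last update is held thereafter
```

Using a binary search keeps the lookup cheap even when the event sequence is long, which matters when the same record is queried at every integration step.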

The protocol based on event-triggered control utilized to solve the finite-time average consensus problem is

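where \(0< \alpha<1\), and \(\operatorname{sign}(\cdot)\) is the sign function defined as

\[\operatorname{sign}(z) = \begin{cases} 1, & z > 0, \\ 0, & z = 0, \\ -1, & z < 0. \end{cases} \tag{7}\]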

3 Main results

3.1 Stability analysis

In this subsection, we study protocol (6). Now, we are in a position to present our main results.

Theorem 1

Suppose that the communication topology of a multiagent system is undirected and connected and that the triggered function is given by

where \(D = [d_{ij}] \in R^{n \times n}\), \(d_{ij} = (a_{ij})^{2 / ( 1 + \alpha )}\), and \(0< \mu<1\).

Then, protocol (6) solves the finite-time average consensus problem for any initial state. Moreover, the settling time T satisfies

Proof

Given that the topology is undirected and connected, \(a _{ij} = a _{ji}\) for all \(i,j \in\mathcal{I}_{n}\). We obtain

Let

Therefore, κ is time invariant.

Consequently,

Define the measurement error as follows:

Then we get

Let \(\boldsymbol{\delta} (t) = [\delta_{1}(t),\delta_{2}(t), \ldots,\delta_{n}(t)]^{\mathrm{T}}\) and \(x_{i}(t) = \kappa+ \delta_{i}(t)\). Thus,

Here we have taken the Lyapunov function

By differentiating \(V(t)\) we obtain

Equation (17) results from \(\delta_{j} - \delta_{i} = x_{j} - x_{i}\). For \(0 < \alpha< 1\), we have \(0.5 < (1+ \alpha)/2 < 1\). Denote

Suppose that \(V(t) \neq 0\); then \(\boldsymbol{\delta} (t) \neq 0\). By Lemma 2 we have

Then we get

Then

Combining the above formulas results in

According to the triggered function of Theorem 1, we get

Then we get

Therefore, according to Lemma 1, the differential inequality (26) makes \(V(t)\) reach zero in finite time. Moreover, the settling time T satisfies

If \(V(t)=0\), then \(\boldsymbol{\delta} (t)=0\), which implies that \(u _{i} = 0\), \(i \in\mathcal{I}_{n}\). Thus, \({x}(t) \in \operatorname{span}(\mathbf{1})\). Therefore, the system stability is guaranteed, and the novel protocol can solve the finite-time average consensus problem. □
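The way Lemma 1 converts a differential inequality into a finite settling time can be illustrated numerically. The sketch below assumes the comparison equation \(dV/dt = -K V^{\beta}\) with \(\beta = (1+\alpha)/2\), for which the closed-form hitting time is \(V(0)^{1-\beta}/(K(1-\beta))\). The constant `K = 1.0` is a placeholder chosen here and is not the constant appearing in inequality (26); the function names are likewise hypothetical.

```python
def settling_time(V0, K, beta):
    """Closed-form time at which dV/dt = -K * V**beta reaches zero (0 < beta < 1)."""
    return V0 ** (1.0 - beta) / (K * (1.0 - beta))


def V_exact(t, V0, K, beta):
    """Closed-form solution of dV/dt = -K * V**beta, clamped at zero."""
    base = V0 ** (1.0 - beta) - K * (1.0 - beta) * t
    return max(base, 0.0) ** (1.0 / (1.0 - beta))


def euler_hit_time(V0, K, beta, dt=1e-4):
    """Forward-Euler estimate of the first time V becomes zero."""
    V, t = V0, 0.0
    while V > 0.0:
        V = max(V - dt * K * V ** beta, 0.0)
        t += dt
    return t


alpha = 0.3
beta = (1.0 + alpha) / 2.0   # exponent assumed for the Lyapunov inequality
V0, K = 17.5, 1.0            # K is a placeholder value for illustration

t_star = settling_time(V0, K, beta)
t_euler = euler_hit_time(V0, K, beta)
print(t_star, t_euler, V_exact(0.5 * t_star, V0, K, beta))
```

Unlike the exponential decay produced by a linear inequality \(dV/dt \le -KV\), the solution here hits zero exactly and stays there, which is the mechanism behind the finite settling time.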

Remark 1

For each agent, an event is triggered as long as the triggered function satisfies \(f_{i} ( t,e_{i}(t),\delta_{i}(t) ) = 0\).

Remark 2

The role of the parameter μ in the triggered function (8) is to adjust the rate of decrease of the Lyapunov function. From equation (8) we know that the larger the parameter μ, the larger the allowable error, and hence the lower the trigger frequency. From equation (27) we know that the larger μ is, the longer the convergence time.
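The effect of μ on the allowable error can be made concrete with the state-dependent threshold coefficient \(( \mu ( 4\lambda_{2}(D) )^{(1+\alpha)/2} / 2^{(1-\alpha)/2} )^{1/\alpha}\) quoted in Section 4. The sketch below simply evaluates that expression; the function name `threshold_coefficient` and the sample values of μ are assumptions made for illustration, while \(\alpha = 0.3\) and \(\lambda_{2}(D) = 2\) follow the simulation section (for unit weights, \(d_{ij} = a_{ij}\)).

```python
def threshold_coefficient(mu, alpha, lambda2_D):
    """Coefficient multiplying ||delta(t)|| in the error threshold of Section 4."""
    numerator = mu * (4.0 * lambda2_D) ** ((1.0 + alpha) / 2.0)
    denominator = 2.0 ** ((1.0 - alpha) / 2.0)
    return (numerator / denominator) ** (1.0 / alpha)


alpha, lambda2_D = 0.3, 2.0
mus = [0.1, 0.2, 0.4, 0.8]   # hypothetical sample values with 0 < mu < 1
coeffs = [threshold_coefficient(mu, alpha, lambda2_D) for mu in mus]

for mu, c in zip(mus, coeffs):
    print(f"mu = {mu:.1f}  ->  allowable error / disagreement = {c:.4f}")
```

Because the coefficient scales as \(\mu^{1/\alpha}\) with \(1/\alpha > 1\), it grows faster than linearly in μ, so modest increases in μ noticeably relax the trigger.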

Remark 3

Note that if we set \(\mu=0\), then the protocol becomes the typical finite-time consensus protocol studied in [19]. However, the finite-time consensus protocol in [19] does not adopt an event-triggered control strategy, so the energy consumption of the system is large.

3.2 Existence of a lower bound for interevent times

Under event-triggered control, the agents must not exhibit Zeno behavior. Namely, for any initial state, the interevent times \(\{ \tau _{i + 1} - \tau_{i} \}\) defined by equation (8) must be lower bounded by a strictly positive time τ. This is proven in the following theorem.

Theorem 2

Consider the multiagent system (4) with consensus protocol (6). Suppose that the communication topology of the multiagent system is undirected and connected. The trigger function is given by equation (8). Then the agent cannot exhibit Zeno behavior. Moreover, for any initial state, the interevent times \(\{ \tau_{i + 1} - \tau_{i} \}\) are lower bounded by \(\tau_{\mathrm{min}}\) given by

where \(\theta= ( \frac{ ( 4\lambda_{2} ( D ) )^{ ( 1 + \alpha ) / 2}}{2^{ ( 1 - \alpha ) / 2}} )^{1 / \alpha} \).

Proof

Similarly to the main result in [27], define

By differentiating \(f _{i}(t)\) we obtain

Then

It follows that the interevent times are lower bounded as follows:

From equation (25) we know

Combining the above formulas results in

and the proof is complete. □

Remark 4

From equation (34) it is easy to see that the minimum interevent time increases with μ.

Remark 5

Note that if we set \(\alpha=1\) in protocol (6), then the finite-time nonlinear event-triggered control strategy becomes the typical event-triggered linear consensus protocol studied in [9]. However, the event-triggered linear consensus protocol can only make agents achieve consensus asymptotically, whereas the proposed consensus protocol in this paper can solve the consensus problem in finite time.
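The difference between the two regimes can be seen from the nonlinearity alone. The sketch below assumes that protocol (6) is built from the term \(\operatorname{sign}(z)|z|^{\alpha}\), the usual form in finite-time protocols such as [19]; the helper name `sig` is hypothetical. For \(\alpha = 1\) the term reduces to the linear difference z, whereas for \(0 < \alpha < 1\) it is much larger than z when z is small.

```python
import math


def sig(z, alpha):
    """Return sign(z) * |z|**alpha (assumed nonlinearity of the protocol)."""
    if z == 0:
        return 0.0
    return math.copysign(abs(z) ** alpha, z)


# alpha = 1 recovers the linear coupling term z itself.
print(sig(-2.5, 1.0))

# For 0 < alpha < 1 the correction stays comparatively strong near agreement.
for z in (1.0, 0.1, 0.01):
    print(z, sig(z, 0.3), sig(z, 1.0))
```

The linear term vanishes in proportion to the disagreement, which yields only exponential (asymptotic) convergence; the fractional power decays more slowly, which is what allows agreement to be reached in finite time.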

3.3 Performance analysis

In this subsection, the relationship between the convergence time and other factors, including the initial state and the parameter α, is studied.

First, we study the relationship between the convergence time and the initial states. In the consensus problem, the disagreement between the states matters more than their magnitude. By definition, \(V(0)\) measures the disagreement between the initial states and the final state. From equation (27) we easily see that the convergence time increases as \(V(0)\) increases.

Next, we study the relationship between the convergence time and the parameter α. Let \(T _{u}\) denote the upper bound on the convergence time. By equation (27) we obtain

Then

Setting \(dT _{u}( \alpha)/d \alpha=0\), we get \(\alpha= 1 - 2 / \ln ( 4V(0)\lambda_{2}(D) )\). Therefore, given that \(0< \alpha<1\), if \(V(0)\lambda_{2}(D) < E^{2} / 4\), then the convergence time increases as α increases; if \(V(0)\lambda_{2}(D) > E^{2} / 4\), then the convergence time first decreases and then increases as α grows, and it attains its minimum at \(\alpha= 1 - 2 / \ln ( 4V(0)\lambda_{2}(D) )\). In typical cases the value of \(V(0)\lambda_{2}(D)\) is large, so to reduce the convergence time we can set \(\alpha= 1 - 2 / \ln ( 4V(0)\lambda_{2}(D) )\).
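The stationary point can be checked numerically. The sketch below evaluates \(\alpha = 1 - 2/\ln(4V(0)\lambda_{2}(D))\) for the values used in Section 4 and compares it with a grid search. Note the assumption: the α-dependence of the bound is taken to be proportional to \(c^{-\alpha/2}/(1-\alpha)\) with \(c = 4V(0)\lambda_{2}(D)\), a shape chosen here because it reproduces the stationary point and the threshold \(E^{2}/4\) stated above; it is not copied from equation (27). The names `alpha_star` and `bound_shape` are hypothetical.

```python
import math


def alpha_star(V0, lambda2_D):
    """Stationary point alpha = 1 - 2 / ln(4 * V(0) * lambda_2(D))."""
    return 1.0 - 2.0 / math.log(4.0 * V0 * lambda2_D)


def bound_shape(alpha, V0, lambda2_D):
    """Assumed alpha-dependent factor of the bound, up to a positive constant."""
    c = 4.0 * V0 * lambda2_D
    return c ** (-alpha / 2.0) / (1.0 - alpha)


grid = [k / 100.0 for k in range(1, 100)]   # alpha in 0.01, ..., 0.99

# Case V(0) * lambda_2(D) > E^2 / 4: interior minimum.
V0, lam2 = 17.5, 2.0
a_star = alpha_star(V0, lam2)
best_alpha = min(grid, key=lambda a: bound_shape(a, V0, lam2))
print(a_star, best_alpha)

# Case V(0) * lambda_2(D) < E^2 / 4: the factor increases with alpha.
small_case = [bound_shape(a, 0.5, 2.0) for a in grid]
print(all(small_case[k] < small_case[k + 1] for k in range(len(small_case) - 1)))
```

With \(V(0) = 17.5\) and \(\lambda_{2}(D) = 2\) the stationary point is close to 0.6, in agreement with the value used in the simulations.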

Remark 6

Convergence time is defined as the amount of time the system consumes to reach a consensus. The precise convergence time of the studied nonlinear protocol is difficult to obtain. The above conclusions were obtained based on the upper bound of convergence time in equation (27).

4 Simulations

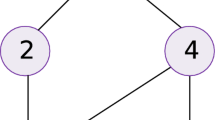

In this section, simulations are conducted to verify the validity of the conclusions. Consider a multiagent system with \(n=4\) agents. The communication topology is shown in Figure 1, and all communication weights are assumed to be 1. By calculation we obtain \(\lambda_{2}(L) = 2\) and \(\Vert L \Vert = 4\). We suppose that the initial state is \([-2, 0, 2, 6]^{\mathrm{T}}\).
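The quantities of this setup can be reproduced with a short calculation. The sketch below makes two assumptions that are not stated explicitly in the text: the Lyapunov function is taken as \(V = \frac{1}{2}\boldsymbol{\delta}^{\mathrm{T}}\boldsymbol{\delta}\), which matches the value \(V(0) = 17.5\) quoted later in this section, and the topology is taken to be a four-vertex ring, which is one graph consistent with \(\lambda_{2}(L) = 2\) and \(\Vert L \Vert = 4\) (Figure 1 is not reproduced here). The helper `mat_vec` is hypothetical.

```python
def mat_vec(M, v):
    """Matrix-vector product for small dense lists."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


x0 = [-2.0, 0.0, 2.0, 6.0]
n = len(x0)

kappa = sum(x0) / n                      # average of the initial states
delta = [xi - kappa for xi in x0]        # disagreement vector
V0 = 0.5 * sum(d * d for d in delta)     # assumed Lyapunov function value
print(kappa, delta, V0)

# Assumed ring topology 1-2-3-4-1 with unit weights.
L_ring = [[2, -1, 0, -1],
          [-1, 2, -1, 0],
          [0, -1, 2, -1],
          [-1, 0, -1, 2]]

# Eigenvector checks: L v = 4 v and L w = 2 w. Together with the zero
# eigenvalue and trace(L) = 8, the spectrum is {0, 2, 2, 4}.
v = [1, -1, 1, -1]
w = [1, 0, -1, 0]
print(mat_vec(L_ring, v), mat_vec(L_ring, w))
```

For a symmetric Laplacian the 2-norm equals the largest eigenvalue, so the ring gives \(\Vert L \Vert = 4\) and \(\lambda_{2}(L) = 2\), as reported.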

The trajectories of the agents under protocol (6) with \(\alpha=0.3\) and \(\mu =0.2\) are shown in Figure 2. We can see that the final consensus state is \(\sum_{i = 1}^{n} x_{i} (0) / 4 = 1.5\). Thus, protocol (6) solves the finite-time average consensus problem. Figure 3 shows the evolution of the error norm of agent 1 under protocol (6). The red solid line represents the evolution of \(\Vert e(t) \Vert \). It stays below the specified state-dependent threshold \(\Vert e(t) \Vert _{\max} = ( \frac {\mu ( 4\lambda_{2} ( D ) )^{ ( 1 + \alpha ) / 2}}{2^{ ( 1 - \alpha ) / 2}} )^{1 / \alpha} \Vert \delta (t) \Vert \), which is represented by the blue dotted line in the figure. Figure 4 shows the evolution of the control inputs of the agents under protocol (6). We can see that each control input is piecewise constant. Moreover, the control input is small when the system error norm is small, and the system reaches its equilibrium state as the control input tends to zero.
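An experiment of this kind can be sketched with a simple forward-Euler loop. The code below is a hedged illustration, not the authors' simulation: it assumes the protocol has the form \(u_{i} = \sum_{j} a_{ij}\operatorname{sign}(\hat{x}_{j} - \hat{x}_{i})|\hat{x}_{j} - \hat{x}_{i}|^{\alpha}\) with \(\hat{x}\) the states sampled at the last event, a four-vertex ring topology, and a single centralized trigger that resamples all agents when \(\Vert e \Vert\) reaches the threshold quoted above. The step size, the horizon, and every function name are choices made here.

```python
import math


def sig(z, alpha):
    """sign(z) * |z|**alpha, the nonlinearity assumed for the protocol."""
    return math.copysign(abs(z) ** alpha, z) if z != 0.0 else 0.0


def threshold_coefficient(mu, alpha, lambda2_D):
    """Coefficient of ||delta|| in the error threshold quoted in the text."""
    return (mu * (4.0 * lambda2_D) ** ((1.0 + alpha) / 2.0)
            / 2.0 ** ((1.0 - alpha) / 2.0)) ** (1.0 / alpha)


def simulate(x0, adj, alpha, sigma, dt=1e-3, t_end=10.0):
    """Event-triggered consensus with held inputs (centralized trigger sketch)."""
    n = len(x0)
    x = list(x0)
    kappa = sum(x0) / n          # average, used only to measure disagreement
    x_hat = list(x)              # states sampled at the last event

    def control(xh):
        return [sum(adj[i][j] * sig(xh[j] - xh[i], alpha) for j in range(n))
                for i in range(n)]

    u = control(x_hat)
    events = 0
    for _ in range(int(round(t_end / dt))):
        x = [x[i] + dt * u[i] for i in range(n)]          # input held constant
        err = math.sqrt(sum((x_hat[i] - x[i]) ** 2 for i in range(n)))
        dis = math.sqrt(sum((x[i] - kappa) ** 2 for i in range(n)))
        if err >= sigma * dis:                            # event: resample
            x_hat = list(x)
            u = control(x_hat)
            events += 1
    return x, events


# Assumed ring topology 1-2-3-4-1 with unit weights.
ring = [[0, 1, 0, 1],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [1, 0, 1, 0]]

alpha, mu = 0.3, 0.2
sigma = threshold_coefficient(mu, alpha, 2.0)
x_final, events = simulate([-2.0, 0.0, 2.0, 6.0], ring, alpha, sigma)
print(x_final, events)
```

Because the coupling term is odd and the graph is undirected, the held inputs sum to zero, so the average of the states is preserved between and across events. A distributed trigger, in which each agent checks only its own error, would require the per-agent triggered function of Theorem 1.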

With \(\mu=0.2\), the trajectories of the agents for \(\alpha=0.6\) and \(\alpha=0.9\) are shown in Figures 5 and 6, respectively. Comparing Figure 2 with Figures 5 and 6 shows that when \(V(0)\lambda_{2}(D) > E^{2} / 4\) and \(\alpha= 1 - 2 / \ln ( 4V(0)\lambda_{2}(D) )\) (approximately 0.6 in this example), the convergence time is shorter than for \(\alpha=0.3\) and \(\alpha=0.9\).

Moreover, the dependence of the convergence time on the parameter α and on \(V(0)\) is simulated; the result is shown in Figure 7. The relationship between the convergence time and α for \(V(0) = 17.5\) (the value of \(V(0)\) corresponding to the \(x(0)\) used in this paper) is shown in Figure 8. The two figures show that if \(V(0)\lambda_{2}(D) > E^{2} / 4\), then the convergence time first decreases and then increases as α grows, and it attains its minimum at \(\alpha= 1 - 2 / \ln ( 4V(0)\lambda_{2}(D) )\).

5 Conclusions

We presented a novel finite-time average consensus protocol for multiagent systems based on an event-triggered control strategy, which guarantees the system stability. An upper bound on the convergence time was obtained, and the dependence of the convergence time on the protocol parameter and the initial state was analyzed. The following conclusions were obtained from the analysis and simulations.

(1) The proposed protocol can solve the finite-time average consensus problem.

(2) The lower bound of the interevent time was obtained to guarantee that there is no Zeno behavior.

(3) The larger the difference in the initial state, the longer the convergence time. Moreover, if \(V(0)\lambda_{2}(D) > E^{2} / 4\), then the convergence time decreases initially, then increases as α becomes large, and when \(\alpha= 1 - 2 / \ln ( 4V(0)\lambda_{2}(D) )\), the convergence time gets the minimum value.

In this paper, we only considered first-order multiagent systems. Our future work will focus on extending the conclusions to second-order or higher-order multiagent systems with switching topologies, measurement noise, time delays, and so on.

References

[1] Olfati-Saber, R, Fax, JA, Murray, RM: Consensus and cooperation in networked multi-agent systems. Proc. IEEE 95(1), 215-233 (2007)

[2] Davoodi, M, Meskin, N, Khorasani, K: Simultaneous fault detection and consensus control design for a network of multi-agent systems. Automatica 66(5), 185-194 (2016)

[3] Li, H, Karray, F, Basir, O, Song, I: A framework for coordinated control of multi-agent systems and its applications. IEEE Trans. Syst. Man Cybern., Part A, Syst. Hum. 38(3), 534-548 (2008)

[4] Olfati-Saber, R: Flocking for multi-agent dynamic systems: algorithms and theory. IEEE Trans. Autom. Control 51(3), 401-420 (2006)

[5] Dong, W, Farrell, JA: Decentralized cooperative control of multiple nonholonomic dynamic systems with uncertainty. Automatica 45(3), 706-710 (2009)

[6] Fax, JA, Murray, RM: Information flow and cooperative control of vehicle formations. IEEE Trans. Autom. Control 49(9), 1465-1476 (2004)

[7] Freeman, RA, Yang, P, Lynch, KM: Distributed estimation and control of swarm formation statistics. In: American Control Conference, pp. 749-755 (2006)

[8] Olfati-Saber, R: Swarms on sphere: a programmable swarm with synchronous behaviors like oscillator networks. In: 45th IEEE Conference on Decision and Control, pp. 5060-5066 (2006)

[9] Dimarogonas, DV, Johansson, KH: Event-triggered cooperative control. In: European Control Conference, pp. 3015-3020 (2009)

[10] Heemels, WPMH, Johansson, KH, Tabuada, P: An introduction to event-triggered and self-triggered control. In: IEEE 51st Annual Conference on Decision and Control, pp. 3270-3285 (2012)

[11] Davoodi, M, Meskin, N, Khorasani, K: Event-triggered multi-objective control and fault diagnosis: a unified framework. IEEE Trans. Ind. Inform. 13(1), 298-311 (2017)

[12] Dimarogonas, DV, Frazzoli, E, Johansson, KH: Distributed event-triggered control for multi-agent systems. IEEE Trans. Autom. Control 57(5), 1291-1297 (2012)

[13] Davoodi, MR, Meskin, N, Khorasani, K: Event-triggered fault detection, isolation and control design of linear systems. In: International Conference on Event-Based Control, Communication, and Signal Processing, pp. 1-6 (2015)

[14] Seyboth, GS, Dimarogonas, DV, Johansson, KH: Event-based broadcasting for multi-agent average consensus. Automatica 49(1), 245-252 (2013)

[15] Fan, Y, Feng, G, Wang, Y, Song, C: Technical communique: distributed event-triggered control of multi-agent systems with combinational measurements. Automatica 49(2), 671-675 (2013)

[16] Fan, Y, Liu, L, Feng, G, Wang, Y: Self-triggered consensus for multi-agent systems with Zeno-free triggers. IEEE Trans. Autom. Control 60(10), 2779-2784 (2015)

[17] Wang, R, Xing, J, Wang, P, Yang, Q, Xiang, Z: Finite-time stabilization for discrete-time switched stochastic linear systems under asynchronous switching. Trans. Inst. Meas. Control 36(5), 588-599 (2014)

[18] Huang, T, Li, C, Duan, S, Starzyk, J: Robust exponential stability of uncertain delayed neural networks with stochastic perturbation and impulse effects. IEEE Trans. Neural Netw. Learn. Syst. 23(6), 866-875 (2012)

[19] Wang, L, Xiao, F: Finite-time consensus problems for networks of dynamic agents. IEEE Trans. Autom. Control 55(4), 950-955 (2010)

[20] Zuo, Z, Lin, T: A new class of finite-time nonlinear consensus protocols for multi-agent systems. Int. J. Control 87(2), 363-370 (2014)

[21] Feng, Y, Li, C, Huang, T: Periodically multiple state-jumps impulsive control systems with impulse time windows. Neurocomputing 193, 7-13 (2016)

[22] Wang, X, Li, J, Xing, J, Wang, R, Xie, L, Zhang, X: A novel finite-time average consensus protocol for multi-agent systems with switching topology. Trans. Inst. Meas. Control (2016). doi:10.1177/0142331216663617

[23] Zhu, Y, Guan, X, Luo, X, Li, S: Finite-time consensus of multi-agent system via nonlinear event-triggered control strategy. IET Control Theory Appl. 9(17), 2548-2552 (2015)

[24] Godsil, C, Royle, GF: Algebraic Graph Theory. Springer, Berlin (2013)

[25] Bhat, SP, Bernstein, DS: Finite-time stability of continuous autonomous systems. SIAM J. Control Optim. 38(3), 751-766 (2000)

[26] Lick, WJ: Difference Equations from Differential Equations. Springer, Berlin (1989)

[27] Tabuada, P: Event-triggered real-time scheduling of stabilizing control tasks. IEEE Trans. Autom. Control 52(9), 1680-1685 (2007)

Acknowledgements

This project was supported by the National Natural Science Foundation of China (Grant No. 61603414). We would like to express our appreciation to the anonymous referees and the Associate Editor for their valuable comments and suggestions.

Contributions

All authors contributed equally and significantly in writing this article. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, X., Li, J., Xing, J. et al. A novel finite-time average consensus protocol based on event-triggered nonlinear control strategy for multiagent systems. J Inequal Appl 2017, 258 (2017). https://doi.org/10.1186/s13660-017-1533-6
