Abstract

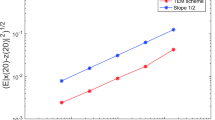

This paper investigates the strong convergence and the exponential stability in mean square of the exponential Euler method for semi-linear stochastic delay differential equations (SLSDDEs). It is proved that the exponential Euler approximation converges to the analytic solution of SLSDDEs with strong order \(\frac{1}{2}\). Classical stability theorems for SLSDDEs are stated in terms of Lyapunov functions; in this paper we instead study the exponential stability in mean square of the exact solution by using the definition of the logarithmic norm. Moreover, while the implicit Euler scheme for SLSDDEs is known to be exponentially stable in mean square for any step size, we show, by a property of the logarithmic norm, that the explicit exponential Euler method shares the same stability for any step size.

1 Introduction

Stochastic modeling has come to play an important role in many branches of science and industry. Such models have been used with great success in a variety of application areas, including biology, epidemiology, mechanics, economics and finance. Most stochastic differential equations (SDEs) are nonlinear and cannot be solved explicitly, so numerical solutions are required in practice. Numerical solutions to SDEs have been discussed under the Lipschitz condition and the linear growth condition by many authors (see [1–7]). Higham et al. [2] studied almost sure and moment exponential stability in the numerical simulation of SDEs. Many authors have also discussed numerical solutions to stochastic delay differential equations (SDDEs) (see [8–12]). Cao et al. [8] obtained MS-stability of the Euler-Maruyama method for SDDEs. Mao [12] discussed exponential stability of equidistant Euler-Maruyama approximations of SDDEs. The explicit Euler scheme is most commonly used for approximating SDEs under the global Lipschitz condition. Unfortunately, stability results for the explicit Euler method require a restriction on the step size, whereas the implicit Euler scheme for SDEs is known to be stable for any step size. In this article we show, by a property of the logarithmic norm, that the explicit exponential Euler method for SLSDDEs shares this stability for any step size.

The paper is organized as follows. In Section 2, we introduce the necessary notation and the exponential Euler method. In Section 3, we obtain the convergence of the exponential Euler method for SLSDDEs under the Lipschitz condition and the linear growth condition. In Section 4, we obtain the exponential stability in mean square of the exponential Euler method for SLSDDEs. Finally, in Section 5, two examples are provided to illustrate our theory.

2 Preliminary notation and the exponential Euler method

In this paper, unless otherwise specified, let \(\vert x \vert \) be the Euclidean norm of \(x\in R^{n}\). If A is a vector or matrix, its transpose is denoted by \(A^{T}\). If A is a matrix, its trace norm is denoted by \(\vert A \vert =\sqrt{\operatorname{trace}(A^{T}A)}\). For simplicity, we also denote \(a\wedge b=\min\{a,b\}\), \(a\vee b=\max\{a,b\}\).

Let \((\Omega,\mathbf{F},P)\) be a complete probability space with a filtration \(\{\mathbf{F}_{t}\}_{t\geq0}\) satisfying the usual conditions. Let \(\mathbf{L}^{1}([0,\infty),R^{n})\) and \(\mathbf{L}^{2}([0,\infty),R^{n})\) denote the families of all \(R^{n}\)-valued \(\mathbf{F}_{t}\)-adapted processes \(\{f(t)\}_{t\geq0}\) such that for every \(T>0\), \(\int_{0}^{T} \vert f(t) \vert \,dt<\infty\) a.s. and \(\int_{0}^{T} \vert f(t) \vert ^{2}\,dt<\infty\) a.s., respectively. For any \(a,b\in R\) with \(a< b\), denote by \(C([a,b];R^{n})\) the family of continuous functions ϕ from \([a,b]\) to \(R^{n}\) with the norm \(\Vert \phi \Vert =\sup_{a\leq\theta\leq b} \vert \phi (\theta) \vert \). Denote by \(C_{\mathbf{F}_{t}}^{b}([a,b];R^{n})\) the family of all bounded \(\mathbf{F}_{t}\)-measurable \(C([a,b];R^{n})\)-valued random variables. Let \(B(t)=(B_{1}(t),\ldots,B_{d}(t))^{T}\) be a d-dimensional Brownian motion defined on the probability space \((\Omega,\mathbf{F},P)\). Throughout this paper, we consider the following semi-linear stochastic delay differential equations:

where \(T>0\), \(\tau>0\), \(\{\xi(t), t\in[-\tau,0]\}=\xi\in C^{b}_{\mathbf {F}_{0}}([-\tau,0];R^{n})\), \(f: R^{+}\times R^{n}\times R^{n} \rightarrow R^{n}\), \(g: R^{+}\times R^{n}\times R^{n}\rightarrow R^{n\times d}\), and \(A\in R^{n\times n}\) is a constant matrix [13]. By the definition of the stochastic differential, this equation is equivalent to the following stochastic integral equation:

Moreover, we also require the coefficients f and g to be sufficiently smooth.

To be precise, let us state the following conditions.

- (H1) (The Lipschitz condition) There exists a positive constant \(L_{1}\) such that

$$\bigl\vert f(t,x,y)-f(t,\bar{x},\bar{y}) \bigr\vert ^{2}\vee \bigl\vert g(t,x,y)-g(t,\bar{x},\bar{y}) \bigr\vert ^{2}\leq L_{1} \bigl( \vert x-\bar{x} \vert ^{2}+ \vert y-\bar{y} \vert ^{2}\bigr) $$for all \(t\in R^{+}\) and \(x, \bar{x}, y, \bar{y}\in R^{n}\).

- (H2) (Linear growth condition) There exists a positive constant \(L_{2}\) such that

$$\bigl\vert f(t,x,y) \bigr\vert ^{2}\vee \bigl\vert g(t,x,y) \bigr\vert ^{2}\leq L_{2}\bigl(1+ \vert x \vert ^{2}+ \vert y \vert ^{2}\bigr) $$for all \((t,x,y)\in R^{+}\times R^{n}\times R^{n}\).

- (H3) f and g are supposed to satisfy the following property:

$$\bigl\vert f(s,x,y)-f(t,x,y) \bigr\vert ^{2}\vee \bigl\vert g(s,x,y)-g(t,x,y) \bigr\vert ^{2}\leq K_{1}\bigl(1+ \vert x \vert ^{2}+ \vert y \vert ^{2}\bigr) \vert s-t \vert , $$where \(K_{1}\) is a constant and \(s,t\in[0,T]\) with \(t>s\).

Let \(h=\frac{\tau}{m}\) be a given step size with integer \(m\geq1\), and let the gridpoints \(t_{n}\) be defined by \(t_{n}=nh\) (\(n=0,1,2,\ldots\)). We consider the exponential Euler method to (2.1)

where \(\Delta B_{n}=B(t_{n+1})-B(t_{n})\), \(n=0,1,2,\ldots\) , and \(y_{n}\) is an approximation to the exact solution \(x(t_{n})\). The continuous exponential Euler approximate solution is defined by

where \(\underline{s}=[\frac{s}{h}]h\), \([x]\) denotes the largest integer not exceeding x, and \(z(t)=\sum^{\infty}_{k=0}y_{k}1_{[kh,(k+1)h)}(t)\) with \(1_{\mathcal{A}}\) denoting the indicator function of the set \(\mathcal {A}\). It is not difficult to see that \(y(t_{n})=z(t_{n})=y_{n}\) for \(n=0,1,2,\ldots\) . That is, the step function \(z(t)\) and the continuous exponential Euler solution \(y(t)\) coincide with the discrete solution at the gridpoints. Let \(C^{2,1}(R^{n}\times R_{+};R)\) denote the family of all continuous nonnegative functions \(V(x,t)\) defined on \(R^{n}\times R_{+}\) that are twice continuously differentiable in x and once in t. Given \(V\in C^{2,1}(R^{n}\times R_{+};R)\), we define the operator \(\mathcal {L}V:R^{n}\times R^{n}\times R_{+}\rightarrow R\) by

where

Let us emphasize that \(\mathcal{L}V\) is defined on \(R^{n}\times R^{n}\times R_{+}\), while V is only defined on \(R^{n}\times R_{+}\).
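The discrete scheme of this section can be sketched in code for the scalar case. The sketch assumes that the exponential Euler step has the form \(y_{n+1}=e^{Ah}(y_{n}+f(t_{n},y_{n},y_{n-m})h+g(t_{n},y_{n},y_{n-m})\Delta B_{n})\) with \(h=\tau/m\), which is consistent with the constants \(A_{1}\) and \(A_{2}\) of Theorem 4.2. The function name `exp_euler_path` and its arguments are illustrative choices and are not notation from the paper.

```python
import numpy as np

def exp_euler_path(a, f, g, xi, tau, m, n_steps, rng):
    """Simulate one scalar path y_0, ..., y_{n_steps} with step h = tau / m.

    Assumed step (not quoted from the paper):
        y_{n+1} = exp(a h) * (y_n + f(t_n, y_n, y_{n-m}) h + g(t_n, y_n, y_{n-m}) dB_n)
    """
    h = tau / m
    # Storage index k corresponds to time t = (k - m) * h, so the first
    # m + 1 entries hold the initial function xi on [-tau, 0].
    y = np.empty(m + n_steps + 1)
    y[: m + 1] = [xi((k - m) * h) for k in range(m + 1)]
    decay = np.exp(a * h)  # e^{Ah} in the scalar case
    for n in range(n_steps):
        t_n = n * h
        y_n, y_delay = y[m + n], y[n]       # y_n and y_{n-m}
        dB = rng.normal(0.0, np.sqrt(h))    # increment B(t_{n+1}) - B(t_n)
        y[m + n + 1] = decay * (y_n + f(t_n, y_n, y_delay) * h
                                + g(t_n, y_n, y_delay) * dB)
    return y[m:]  # values at t_0, ..., t_{n_steps}
```

With \(f=g=0\) the sketch reduces to \(y_{n}=e^{At_{n}}\xi(0)\), that is, the linear part is integrated exactly; this is the feature that distinguishes the exponential Euler method from the classical Euler-Maruyama method.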

3 Convergence of the exponential Euler method

We will show the strong convergence of the exponential Euler method (2.4) on equations (2.1).

Theorem 3.1

Under conditions (H1), (H2) and (H3), the exponential Euler method approximate solution converges to the exact solution of equations (2.1) in the sense that

In order to prove this theorem, we first prepare two lemmas.

Lemma 3.1

Under the linear growth condition (H2), there exists a positive constant \(C_{1}\) such that the solution of equations (2.1) and the continuous exponential Euler method approximate solution (2.4) satisfy

where \(C_{1}=\max\{3e^{2 \vert A \vert T}e^{6e^{2 \vert A \vert T}T(T+4)L_{2}},e^{2 \vert A \vert T}T(T+4)L_{2}e^{6e^{2 \vert A \vert T}T(T+4)L_{2}}\}\) is a constant independent of h.

Proof

It follows from (2.4) that

By Hölder’s inequality, we obtain

This implies that for any \(0\leq t_{1}\leq T\),

By Doob’s martingale inequality, it is not difficult to show that

Making use of (H2) yields

Thus

By Gronwall’s inequality, we get

where \(C_{1}=(3e^{2 \vert A \vert T}E \Vert \xi \Vert ^{2}+3e^{2 \vert A \vert T}T(T+4e^{2 \vert A \vert T})L_{2})e^{6e^{2 \vert A \vert T}T(T+4e^{2 \vert A \vert T})L_{2}}\). In the same way, we obtain

where \(C_{1}=(3e^{2 \vert A \vert T}E \Vert \xi \Vert ^{2}+3e^{2 \vert A \vert T}T(T+4e^{2 \vert A \vert T})L_{2})e^{6e^{2 \vert A \vert T}T(T+4e^{2 \vert A \vert T})L_{2}}\). The proof is completed. □

The following lemma shows that \(y(t)\) and \(z(t)\) are close to each other.

Lemma 3.2

Under condition (H2), we have

where \(C_{2}(\xi)\) is a constant independent of h.

Proof

For \(t\in[0,T]\), there is an integer k such that \(t\in[t_{k},t_{k+1})\). We compute

where I is the identity matrix. Taking the expectation of both sides, we can see

Using the linear growth conditions, we have

Since \(\vert e^{A(t-t_{k})}-I \vert \leq e^{ \vert A \vert h}-1\leq \vert A \vert he^{ \vert A \vert h}\leq \vert A \vert he^{ \vert A \vert T}\), we have

where \(C_{2}(\xi)=3 \vert A \vert ^{2}Te^{2 \vert A \vert T}C_{1}+3(T+1)e^{2 \vert A \vert T}L_{2}(1+2C_{1})\) is a constant independent of h. The proof is completed. □
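The elementary chain of bounds on \(e^{Ah}-I\) used in this proof can be checked numerically. The following sketch uses the trace norm of Section 2; the helper `expm_taylor` (a plain scaling-and-squaring Taylor approximation written only for this illustration) and the function `check_increment_bound` are assumptions and do not appear in the paper.

```python
import numpy as np

def expm_taylor(M, terms=30):
    """Matrix exponential by scaling and squaring with a truncated Taylor series."""
    norm = np.linalg.norm(M)  # Frobenius norm, i.e. the trace norm |M|
    # Choose s so that the scaled matrix M / 2^s has norm at most 1/2.
    s = max(0, int(np.ceil(np.log2(norm))) + 1) if norm > 0 else 0
    X = M / 2.0**s
    result = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for k in range(1, terms):
        term = term @ X / k          # term now equals X^k / k!
        result = result + term
    for _ in range(s):
        result = result @ result     # undo the scaling: e^M = (e^{M/2^s})^{2^s}
    return result

def check_increment_bound(A, h):
    """Return (lhs, mid, rhs) for |e^{Ah} - I| <= e^{|A|h} - 1 <= |A| h e^{|A|h}."""
    a = np.linalg.norm(A)
    lhs = np.linalg.norm(expm_taylor(A * h) - np.eye(A.shape[0]))
    mid = np.exp(a * h) - 1.0
    rhs = a * h * np.exp(a * h)
    return lhs, mid, rhs
```

The first inequality follows from the power series of the exponential together with the submultiplicativity of the trace norm, and the second from \(e^{x}-1\leq xe^{x}\) for \(x\geq0\).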

Proof of Theorem 3.1

By Hölder’s inequality, we obtain

This implies that for any \(0\leq t_{1}\leq T\), by Doob’s martingale inequality, we have

We compute the first term in (3.18)

We compute the following two formulas in (3.18):

and

In the same way, we can obtain

We compute the following two formulas in (3.18):

and

Substituting (3.19) - (3.24) into (3.18), we have

By Gronwall’s inequality, since \(\vert e^{A(\underline{s}-s)}-I \vert \leq \vert A \vert he^{ \vert A \vert T}\), we can show

As a result,

The proof is completed. □

4 Exponential stability in mean square

In this section, we give the exponential stability in mean square of the exact solution and the exponential Euler method to semi-linear stochastic delay differential equations (2.1). For the purpose of stability study in this paper, assume that \(f(t,0,0)=g(t,0,0)=0\).

4.1 Stability of the exact solution

In this subsection, we show the exponential stability in mean square of the exact solution to the semi-linear stochastic delay differential equations (2.1) under the global Lipschitz condition.

Theorem 4.1

Under condition (H1), if \(1+2\mu[A]+4L_{1}<0\), then the solution of equations (2.1) with the initial data \(\xi\in C^{b}_{\mathbf{F}_{0}}([-\tau,0];R^{n})\) is exponentially stable in mean square, that is,

where \(\widetilde{B}(\tau)=e^{B_{1}\tau}-\frac{B_{2}}{B_{1}}(1-e^{B_{1}\tau })\), \(B_{1}=1+2\mu[A]+2L_{1}\), \(B_{2}=2L_{1}\).

The proof of Theorem 4.1 relies on Itô’s formula and on the delay term of the equation. Its key step is to establish the mean square boundedness of the solution by dividing the time axis into the intervals \([0,\tau],[\tau,2\tau],\ldots,[k\tau,(k+1)\tau]\). The conclusion is then obtained successively for \(t\geq0,t\geq2\tau,t\geq4\tau,\ldots,t\geq2n\tau\). To handle the linear term of the equation, we use the definition of the logarithmic norm.
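The condition of Theorem 4.1 is stated in terms of the logarithmic norm \(\mu[A]\). As a minimal sketch, for the Euclidean inner product \(\mu[A]\) equals the largest eigenvalue of \((A+A^{T})/2\), and this value can be compared with a finite-difference version of the limit \((\Vert I+\delta A \Vert -1)/\delta\) as \(\delta\rightarrow0^{+}\). The function names and the step \(\delta=10^{-7}\) are illustrative assumptions.

```python
import numpy as np

def log_norm_2(A):
    """Logarithmic norm for the Euclidean inner product.

    Computed as the largest eigenvalue of the symmetric part (A + A^T) / 2.
    """
    sym = (A + A.T) / 2.0
    return float(np.linalg.eigvalsh(sym)[-1])  # eigvalsh returns ascending order

def log_norm_limit(A, delta=1e-7):
    """Finite-difference approximation of (||I + delta A||_2 - 1) / delta."""
    n = A.shape[0]
    return (np.linalg.norm(np.eye(n) + delta * A, 2) - 1.0) / delta
```

Unlike a norm, the logarithmic norm may be negative; for a scalar \(a\) it is \(a\) itself, which is why a condition such as \(1+2\mu[A]+4L_{1}<0\) can hold.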

Definition 4.1

[12]

SDDEs (2.1) are said to be exponentially stable in mean square if there is a pair of positive constants λ and μ such that for any initial data \(\xi\in C^{b}_{\mathbf{F}_{0}}([-\tau,0];R^{n})\),

We refer to λ as the rate constant and to μ as the growth constant.

Definition 4.2

[14]

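The logarithmic norm \(\mu[A]\) of A is defined by

$$\mu[A]=\lim_{\delta\rightarrow0^{+}}\frac{ \Vert I+\delta A \Vert -1}{\delta}. $$

In particular, if \(\Vert\cdot\Vert\) is an inner product norm, \(\mu[A]\) can also be written as

$$\mu[A]=\max_{x\neq0}\frac{\langle Ax,x\rangle}{ \Vert x \Vert ^{2}}. $$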

Lemma 4.1

Let \(\widetilde{B}(t)=e^{B_{1}t}-\frac{B_{2}}{B_{1}}(1-e^{B_{1}t})\). If \(B_{1}<0\), \(B_{2}>0 \) and \(B_{1}+B_{2}<0\), then for all \(t\geq0\), \(0< \widetilde{B}(t)\leq1\) and \(\widetilde{B}(t)\) is decreasing.

Proof

It is known from \(B_{1}<0\), \(B_{2}>0 \) and \(B_{1}+B_{2}<0\) that for all \(t\geq0\)

and

For all \(t\geq0\), we compute

Thus \(\widetilde{B}(t)\) is decreasing. The proof is complete. □
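Lemma 4.1 can be illustrated numerically. The sketch below evaluates \(\widetilde{B}(t)\) with \(B_{1}\) and \(B_{2}\) taken from Theorem 4.1; the sample values \(\mu[A]=-3\) and \(L_{1}=1\) in the usage are illustrative assumptions chosen so that \(1+2\mu[A]+4L_{1}<0\).

```python
import numpy as np

def theorem_4_1_constants(mu_a, l1):
    """Return (B1, B2) with B1 = 1 + 2 mu[A] + 2 L1 and B2 = 2 L1."""
    return 1.0 + 2.0 * mu_a + 2.0 * l1, 2.0 * l1

def b_tilde(t, b1, b2):
    """B~(t) = e^{B1 t} - (B2 / B1) (1 - e^{B1 t}) from Lemma 4.1."""
    return np.exp(b1 * t) - (b2 / b1) * (1.0 - np.exp(b1 * t))

# Usage with illustrative values mu[A] = -3, L1 = 1.
b1, b2 = theorem_4_1_constants(-3.0, 1.0)
values = b_tilde(np.linspace(0.0, 5.0, 501), b1, b2)
```

Since \(B_{1}+B_{2}=1+2\mu[A]+4L_{1}\), the hypothesis \(B_{1}+B_{2}<0\) of Lemma 4.1 is exactly the condition of Theorem 4.1, and \(\widetilde{B}'(t)=(B_{1}+B_{2})e^{B_{1}t}<0\) gives the monotonicity directly.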

Proof of Theorem 4.1

By Itô’s formula and Definition 4.2, for all \(t\geq0\), we have

where \(B_{1}=1+2\mu[A]+2L_{1}\), \(B_{2}=2L_{1}\). Let \(V(x,t)=e^{-B_{1}t} \vert x(t) \vert ^{2}\), by Itô’s formula, we obtain

Integrating (4.6) from 0 to t yields

Taking expected values gives

For any \(t\in[0,\tau]\), we have

Hence

For any \(t\in[\tau,2\tau]\), we obtain

Thus

Repeating this procedure, for all \(t\in[k\tau,(k+1)\tau]\), we can show

Hence, for any \(t>0\), we have

On the other hand, for any \(t\geq0\), one can easily show that

Therefore,

Especially, we can see

For any \(t\geq2\tau\), we have

Therefore,

Obviously, we can obtain

For any \(t\geq4\tau\), we can see that

Therefore,

For any \(t\geq0\), there is an integer n such that \(t\geq2n\tau\); repeating this procedure, we can show

By (4.23) and Lemma 4.1, we obtain

which proves the theorem. □

4.2 Stability of the exponential Euler method

In this subsection, under the same conditions as those in Theorem 4.1, we will obtain the exponential stability in mean square of the exponential Euler method (2.4) to SLSDDEs (2.1) in Theorem 4.2. It is shown that the stability region of the numerical solution to the equation is the same as that of the analytical solution, which means that our method is effective.

Definition 4.3

[12]

Given a step size \(h=\tau/m\) for some positive integer m, the discrete exponential Euler method is said to be exponentially stable in mean square on SDDEs (2.1) if there is a pair of positive constants λ̄ and μ̄ such that for any initial data \(\xi\in C^{b}_{\mathbf{F}_{0}}([-\tau,0];R^{n})\),

Lemma 4.2

[14]

Let \(\mu[A]\) be the smallest possible one-sided Lipschitz constant of the matrix A for a given inner product. Then \(\mu[A]\) is the smallest element of the set

Theorem 4.2

Under condition (H1), if \(1+2\mu [A]+4L_{1}<0\), then for all \(h>0\) the numerical method to equations (2.1) is exponentially stable in mean square, that is,

where \(A_{1}=e^{2\mu[A]h}(1+L_{1}h^{2}+2L_{1}h+h)\), \(A_{2}=e^{2\mu[A]h}(L_{1}h^{2}+2L_{1}h)\).
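The role of the constants \(A_{1}\) and \(A_{2}\) can be illustrated numerically. Reading the stability estimate as a recursion of the form \(E \vert y_{n+1} \vert ^{2}\leq A_{1}E \vert y_{n} \vert ^{2}+A_{2}E \vert y_{n-m} \vert ^{2}\) (an assumption about the display, although it is consistent with the definitions of \(A_{1}\) and \(A_{2}\)), decay for every step size requires \(A_{1}+A_{2}<1\) for all \(h>0\). The sketch below evaluates this sum; the function name and the sample values \(\mu[A]=-3\), \(L_{1}=1\) are illustrative.

```python
import numpy as np

def stability_coefficients(mu_a, l1, h):
    """A1 and A2 as defined in Theorem 4.2."""
    decay = np.exp(2.0 * mu_a * h)
    a1 = decay * (1.0 + l1 * h**2 + 2.0 * l1 * h + h)
    a2 = decay * (l1 * h**2 + 2.0 * l1 * h)
    return a1, a2

# Usage with illustrative values satisfying 1 + 2 mu[A] + 4 L1 < 0.
step_sizes = [1e-3, 1e-2, 0.1, 0.5, 1.0, 2.0, 10.0]
sums = [sum(stability_coefficients(-3.0, 1.0, h)) for h in step_sizes]
```

The sum equals \(e^{2\mu[A]h}(1+(1+4L_{1})h+2L_{1}h^{2})\), so no upper bound on \(h\) is needed: the factor \(e^{2\mu[A]h}\) dominates the polynomial growth whenever \(1+2\mu[A]+4L_{1}<0\).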

Proof

Squaring and taking the conditional expectation on both sides of (2.3), noting that \(\Delta B_{n}\) is independent of \(\mathbf{F}_{nh}\), \(E(\Delta B_{n}\vert\mathbf{F}_{nh})=E(\Delta B_{n})=0\) and \(E((\Delta B_{n})^{2}\vert\mathbf{F}_{nh})=E((\Delta B_{n})^{2})=h\), we have

Taking expectations on both sides, we obtain that

By (H1) and the inequality \(2ab\leq a^{2}+b^{2}\), we have

Substituting (4.30) into (4.29), by (H1), we have

where \(A_{1}=e^{2\mu[A]h}(1+L_{1}h^{2}+2L_{1}h+h)\), \(A_{2}=e^{2\mu[A]h}(L_{1}h^{2}+2L_{1}h)\). In view of \(1+2\mu[A]+4L_{1}<0\), we have \(\mu[A]<0\) and \(-\mu[A]>\max\{1,L_{1}\}\). Consequently, \(L_{1}-\mu^{2}[A]<0\). Hence

for all \(h>0\), which implies

That is,

for all \(h>0\). From (4.31), we have

So we obtain

Thus, for all \(n=1,2\ldots\) ,

The proof is completed. □

5 Numerical experiments

In this section, we give several numerical experiments in order to demonstrate the results about the strong convergence and the exponential stability in mean square of the numerical solution for equations (2.1). We consider the test equation

Example 5.1

Let \(a_{1}=-4\), \(a_{2}=1.5\), \(b_{1}=1\), \(b_{2}=0.05\), \(\xi(t)=1+t\), \(\tau=1\). Table 1 describes the convergence of the exponential Euler method for Example 5.1. We focus on the error at the endpoints \(T=2\) and \(T=4\); the error is measured by \(E \vert y_{n}(\omega)-x(T,\omega) \vert ^{2}\), where \(y_{n}(\omega)\) denotes the value of (2.3) at the endpoint. The expectation is estimated by averaging over random sample paths (\(\omega_{i}\), \(1\leq i\leq1\text{,}000\)) over the interval \([0,10]\), that is,

In Table 1, we can see that the exponential Euler method to Example 5.1 is convergent, suggesting that (2.3) is valid.
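A convergence experiment of this kind can be sketched as follows. The sketch assumes that the test equation is the scalar linear equation \(dx(t)=(a_{1}x(t)+a_{2}x(t-\tau))\,dt+(b_{1}x(t)+b_{2}x(t-\tau))\,dB(t)\) and, since no closed-form solution is used, it replaces the exact solution by a fine-step exponential Euler solution driven by the same Brownian paths. The function names, the reference step \(h=1/256\) and the number of paths are illustrative assumptions; the numbers it produces are not those of Table 1.

```python
import numpy as np

def exp_euler_endpoint(a1, a2, b1, b2, tau, m, T, dB):
    """Endpoint values of the scalar exponential Euler scheme for all paths.

    dB has shape (n_paths, n_steps) and holds the Brownian increments
    for the step size h = tau / m.
    """
    h = tau / m
    n_steps = int(round(T / h))
    n_paths = dB.shape[0]
    y = np.empty((n_paths, m + n_steps + 1))
    grid = (np.arange(m + 1) - m) * h     # grid points in [-tau, 0]
    y[:, : m + 1] = 1.0 + grid            # initial function xi(t) = 1 + t
    decay = np.exp(a1 * h)
    for n in range(n_steps):
        y_n, y_d = y[:, m + n], y[:, n]   # y_n and y_{n-m}
        y[:, m + n + 1] = decay * (y_n + a2 * y_d * h
                                   + (b1 * y_n + b2 * y_d) * dB[:, n])
    return y[:, -1]

def strong_errors(ms, m_ref=256, T=2.0, tau=1.0, n_paths=500, seed=0,
                  a1=-4.0, a2=1.5, b1=1.0, b2=0.05):
    """Mean-square endpoint error against a fine-step reference solution.

    Every m in ms must divide m_ref so that coarse increments can be
    obtained by summing consecutive fine increments.
    """
    rng = np.random.default_rng(seed)
    h_ref = tau / m_ref
    n_ref = int(round(T / h_ref))
    dB_ref = rng.normal(0.0, np.sqrt(h_ref), size=(n_paths, n_ref))
    x_ref = exp_euler_endpoint(a1, a2, b1, b2, tau, m_ref, T, dB_ref)
    errors = {}
    for m in ms:
        ratio = m_ref // m                # fine steps per coarse step
        dB = dB_ref.reshape(n_paths, -1, ratio).sum(axis=2)
        y_end = exp_euler_endpoint(a1, a2, b1, b2, tau, m, T, dB)
        errors[tau / m] = float(np.mean((y_end - x_ref) ** 2))
    return errors
```

Calling `strong_errors([4, 16, 64])` returns a dictionary that maps each step size to the estimated mean-square error. Under strong order \(\frac{1}{2}\), this error is expected to decrease roughly in proportion to \(h\).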

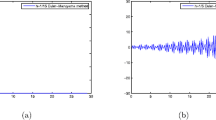

Example 5.2

Let \(a_{1}=-5\), \(a_{2}=1\), \(b_{1}=2\), \(b_{2}=0.5\), \(\xi(t)=1+t\), \(\tau=1\). This example illustrates the stability of the exponential Euler method (2.3). In Figure 1, all the curves decay toward zero for the step sizes \(h=1/2\), \(h=1/4\), \(h=1/8\), \(h=1/16\), \(h=1/32\), \(h=1/64\), \(h=1/128\), \(h=1/256\). Hence our experiments are consistent with the results proved in Section 4.
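A stability experiment of this kind can be sketched as a Monte Carlo estimate of \(E \vert y_{n} \vert ^{2}\) along the grid. As before, the scalar linear form \(dx(t)=(a_{1}x(t)+a_{2}x(t-\tau))\,dt+(b_{1}x(t)+b_{2}x(t-\tau))\,dB(t)\) of the test equation is an assumption, and the function name, the seed and the number of paths are illustrative; the sketch does not reproduce Figure 1.

```python
import numpy as np

def mean_square_path(m, T=10.0, tau=1.0, n_paths=1000, seed=0,
                     a1=-5.0, a2=1.0, b1=2.0, b2=0.5):
    """Monte Carlo estimate of E|y_n|^2 for n = 0, ..., T / h, with h = tau / m."""
    rng = np.random.default_rng(seed)
    h = tau / m
    n_steps = int(round(T / h))
    y = np.empty((n_paths, m + n_steps + 1))
    # Column k corresponds to t = (k - m) h; xi(t) = 1 + t on [-tau, 0].
    y[:, : m + 1] = 1.0 + (np.arange(m + 1) - m) * h
    decay = np.exp(a1 * h)
    for n in range(n_steps):
        dB = rng.normal(0.0, np.sqrt(h), size=n_paths)
        y_n, y_d = y[:, m + n], y[:, n]   # y_n and y_{n-m}
        y[:, m + n + 1] = decay * (y_n + a2 * y_d * h
                                   + (b1 * y_n + b2 * y_d) * dB)
    return np.mean(y[:, m:] ** 2, axis=0)
```

Plotting `mean_square_path(m)` against \(t_{n}=nh\) for \(m=2,4,\ldots,256\) gives one curve per step size. A sample average over finitely many paths is only an estimate of the second moment, so such curves illustrate the stability result but do not prove it.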

6 Conclusions

In this paper, we study the convergence and the exponential stability in mean square of the exponential Euler method for semi-linear stochastic delay differential equations under the global Lipschitz condition and the linear growth condition. Firstly, Theorem 3.1 shows that the exponential Euler approximation converges to the analytic solution of SLSDDEs with strong order \(\frac{1}{2}\). Secondly, we establish the exponential stability in mean square of the exact solution to SLSDDEs by using the definition of the logarithmic norm. Then we show that the explicit exponential Euler method shares the same stability for any step size. Finally, numerical examples are given to verify the theoretical results. Table 1 describes the convergence of the exponential Euler method for Example 5.1, with the error measured at the endpoints \(T=2\) and \(T=4\). In Figure 1, all the curves decay toward zero for \(h=1/2\), \(h=1/4\), \(h=1/8\), \(h=1/16\), \(h=1/32\), \(h=1/64\), \(h=1/128\), \(h=1/256\), which suggests the same conclusion for any step size. Hence our experiments are consistent with the results proved in Section 4.

References

[1] Friedman, A: Stochastic Differential Equations and Applications, Vol. 1 and 2. Academic Press, New York (1975)

[2] Higham, DJ, Mao, X, Yuan, C: Almost sure and moment exponential stability in the numerical simulation of stochastic differential equations. SIAM J. Numer. Anal. 41, 592-609 (2007)

[3] Mao, X: Stochastic Differential Equations and Applications. Horwood, Chichester (1997)

[4] Fuke, W, Xuerong, M: Convergence and stability of the semi-tamed Euler scheme for stochastic differential equations with non-Lipschitz continuous coefficients. Appl. Math. Comput. 228, 240-250 (2014)

[5] Mao, X: The truncated Euler Maruyama method for stochastic differential equations. J. Comput. Appl. Math. 290, 370-384 (2015)

[6] Mao, X: Convergence rates of the truncated Euler Maruyama method for stochastic differential equations. J. Comput. Appl. Math. 296, 362-375 (2016). doi:10.1016/j.cam.2015.09.035

[7] Mao, X: Almost sure exponential stability in the numerical simulation of stochastic differential equations. SIAM J. Numer. Anal. 53, 370-389 (2015)

[8] Cao, WR, Liu, MZ, Fan, ZC: MS-stability of the Euler-Maruyama method for stochastic differential delay equations. Appl. Math. Comput. 159, 127-135 (2004)

[9] Fan, ZC, Liu, MZ: The Pth moment exponential stability for the stochastic delay differential equation. J. Nat. Sci. Heilongjiang Univ. 22, 4 (2005)

[10] Mao, X: Numerical solutions of stochastic differential delay equations under the generalized Khasminskii-type conditions. Appl. Math. Comput. 217, 5512-5524 (2011)

[11] Wu, K, Ding, X: Convergence and stability of Euler method for impulsive stochastic delay differential equations. Appl. Math. Comput. 229, 151-158 (2014)

[12] Mao, X: Exponential stability of equidistant Euler-Maruyama approximations of stochastic differential delay equations. J. Comput. Appl. Math. 200, 297-316 (2007)

[13] Kunze, M, Neerven, J: Approximating the coefficients in semilinear stochastic partial differential equations. J. Evol. Equ. 11, 577-604 (2011)

[14] Dekker, K, Verwer, JG: Stability of Runge-Kutta Methods for Stiff Nonlinear Differential Equations. Centre for Mathematics and Computer Science, Amsterdam (1983)

Acknowledgements

I would like to thank the referees for their helpful comments and suggestions. The financial support from the Youth Science Foundations of Heilongjiang Province of P.R. China (No.QC2016001) is gratefully acknowledged.

Contributions

All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The author declares that no competing interests exist.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhang, L. Convergence and stability of the exponential Euler method for semi-linear stochastic delay differential equations. J Inequal Appl 2017, 249 (2017). https://doi.org/10.1186/s13660-017-1518-5

DOI: https://doi.org/10.1186/s13660-017-1518-5