Abstract

The nonlinear conjugate gradient (CG) algorithm is a very effective method for optimization, especially for large-scale problems, because of its low memory requirement and simplicity. Zhang et al. (IMA J. Numer. Anal. 26:629-649, 2006) first proposed a three-term CG algorithm based on the well-known Polak-Ribière-Polyak (PRP) formula for unconstrained optimization; their method has the sufficient descent property without any line search technique. They proved global convergence under the Armijo line search, but the proof fails for the Wolfe line search technique. Inspired by their method, we make a further study and give a modified three-term PRP CG algorithm. The presented method has the following features: (1) the sufficient descent property holds without any line search technique; (2) the trust region property of the search direction is automatically satisfied; (3) the steplength is bounded from below; (4) global convergence is established under the Wolfe line search. Numerical results show that the new algorithm is more effective than the normal method.

1 Introduction

We consider the optimization model defined by

$$ \min\bigl\{ f(x)\mid x\in\Re^{n}\bigr\} , $$(1.1)

where the function \(f:\Re^{n}\rightarrow\Re\) is continuously differentiable. Many problems in science and engineering can be reduced to the above optimization model (see, e.g., [2–21]). The CG method has the following iterative formula for (1.1):

$$ x_{k+1}=x_{k}+\alpha_{k}d_{k}, $$(1.2)

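where \(x_{k}\) is the kth iterate point, \(\alpha_{k} > 0\) is the steplength, and the search direction \(d_{k}\) is defined by

$$ d_{k+1}= \begin{cases} -g_{k+1}+\beta_{k}d_{k}, & \text{if } k\geq1, \\ -g_{k+1}, & \text{if } k=0, \end{cases} $$(1.3)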

where \(g_{k}=\nabla f(x_{k})\) is the gradient and \(\beta_{k} \in\Re\) is a scalar. At present, there are many well-known CG formulas (see [22–46]) and applications of them (see, e.g., [47–50]). One of the most efficient formulas is the PRP formula [34, 51], defined by

$$ \beta_{k}^{PRP}=\frac{g_{k+1}^{T}\delta_{k}}{ \Vert g_{k} \Vert ^{2}}, $$(1.4)

where \(g_{k+1}=\nabla f(x_{k+1})\) is the gradient, \(\delta _{k}=g_{k+1}-g_{k}\), and \(\Vert \cdot \Vert \) is the Euclidean norm. The PRP method performs very well numerically, but its global convergence for general functions under the Wolfe line search technique has not been established and remains an open problem. It is worth noting that a recent work of Yuan et al. [52] proved the global convergence of the PRP method under a modified Wolfe line search technique for general functions. Al-Baali [53], Gilbert and Nocedal [54], Touati-Ahmed and Storey [55], and Hu and Storey [56] hinted that the sufficient descent property may be crucial for the global convergence of conjugate gradient methods, including the PRP method. Following these suggestions, Zhang, Zhou, and Li [1] first gave a three-term PRP formula

$$ d_{k+1}= \begin{cases} -g_{k+1}+\beta_{k}^{PRP}d_{k}-\vartheta_{k}\delta_{k}, & \text{if } k\geq1, \\ -g_{k+1}, & \text{if } k=0, \end{cases} $$(1.5)

where \(\vartheta_{k}=\frac{g_{k+1}^{T}d_{k}}{ \Vert g_{k} \Vert ^{2}}\). It is not difficult to deduce that \(d_{k+1}^{T}g_{k+1}=- \Vert g_{k+1} \Vert ^{2}\) holds for all k, which implies that the sufficient descent property is satisfied. Zhang et al. proved that the three-term PRP method converges globally under the Armijo line search technique for general functions, but the proof fails for the Wolfe line search. A possible reason is that the search direction of this method need not satisfy the trust region feature. To overcome this drawback, we propose a modified three-term PRP formula that has not only the sufficient descent property but also the trust region feature.

In the next section, a modified three-term PRP formula is given and the new algorithm is stated. The sufficient descent property, the trust region feature, and the global convergence of the new method are established in Section 3. Numerical results are reported in Section 4, and conclusions are given in Section 5.

2 The modified PRP formula and algorithm

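Motivated by the above observation, the modified three-term PRP formula is

$$ d_{k+1}= \begin{cases} -g_{k+1}+\frac{g_{k+1}^{T}\delta_{k}d_{k}-d_{k}^{T}g_{k+1}\delta_{k}}{\gamma_{1} \Vert g_{k} \Vert ^{2}+\gamma_{2} \Vert d_{k} \Vert \Vert \delta_{k} \Vert +\gamma_{3} \Vert d_{k} \Vert \Vert g_{k} \Vert }, & \text{if } k\geq1, \\ -g_{k+1}, & \text{if } k=0, \end{cases} $$(2.1)

where \(\gamma_{1}>0\), \(\gamma_{2}>0\), and \(\gamma_{3}>0\) are constants. It is easy to see that the difference between (1.5) and (2.1) is the denominator of the second and the third terms. This small change gives (2.1) the trust region feature in addition to sufficient descent and makes it possible to prove global convergence under the Wolfe conditions.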
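The direction update can be illustrated with a minimal Python sketch. It assumes that (2.1) adds to \(-g_{k+1}\) the two terms \(g_{k+1}^{T}\delta_{k}d_{k}\) and \(-d_{k}^{T}g_{k+1}\delta_{k}\), both divided by the denominator \(\gamma_{1} \Vert g_{k} \Vert ^{2}+\gamma_{2} \Vert d_{k} \Vert \Vert \delta_{k} \Vert +\gamma_{3} \Vert d_{k} \Vert \Vert g_{k} \Vert \) quoted in the proof of Lemma 3.1. The function name, the zero-denominator fallback, and the sample vectors are hypothetical; the default \(\gamma\) values are those used in Section 4.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def ntt_prp_direction(g_new, g_old, d_old, gamma1=2.0, gamma2=5.0, gamma3=3.0):
    """Sketch of d_{k+1} from (2.1), given g_{k+1}, g_k and d_k."""
    delta = [a - b for a, b in zip(g_new, g_old)]      # delta_k = g_{k+1} - g_k
    denom = (gamma1 * norm(g_old) ** 2
             + gamma2 * norm(d_old) * norm(delta)
             + gamma3 * norm(d_old) * norm(g_old))
    if denom == 0.0:
        # Assumption of this sketch only: fall back to steepest descent.
        return [-a for a in g_new]
    a = dot(g_new, delta) / denom                      # coefficient of d_k
    b = dot(d_old, g_new) / denom                      # coefficient of delta_k
    return [-gn + a * dk - b * dl for gn, dk, dl in zip(g_new, d_old, delta)]

# Hypothetical sample vectors, chosen only for illustration.
g_old = [1.0, -2.0, 0.5]
d_old = [-1.0, 2.0, -0.5]
g_new = [0.3, 0.4, -1.2]
d_new = ntt_prp_direction(g_new, g_old, d_old)

# The two numbers should agree up to rounding (sufficient descent).
print(dot(g_new, d_new), -norm(g_new) ** 2)
```

The two extra terms cancel in the inner product with \(g_{k+1}\), which is why the printed values coincide whatever the positive constants \(\gamma_{1}\), \(\gamma_{2}\), \(\gamma_{3}\) are.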

Algorithm 1

New three-term PRP CG algorithm (NTT-PRP-CG-A)

- Step 0: Initial given parameters: \(x_{1} \in \Re^{n}\), \(\gamma_{1}>0\), \(\gamma_{2}>0\), \(\gamma_{3}>0\), \(0<\delta<\sigma<1\), \(\varepsilon\in(0,1)\). Let \(d_{1}=-g_{1}=-\nabla f(x_{1})\) and \(k:=1\).

- Step 1: If \(\Vert g_{k} \Vert \leq\varepsilon\), stop.

- Step 2: Get stepsize \(\alpha_{k}\) by the following Wolfe line search rules:

$$ f(x_{k}+\alpha_{k}d_{k}) \leq f(x_{k})+\delta\alpha_{k} g_{k}^{T}d_{k} $$(2.2)

and

$$ g(x_{k}+\alpha_{k}d_{k})^{T}d_{k} \geq\sigma g_{k}^{T}d_{k}. $$(2.3)

- Step 3: Let \(x_{k+1}=x_{k}+\alpha_{k}d_{k}\). If the condition \(\Vert g_{k+1} \Vert \leq\varepsilon\) holds, stop the program.

- Step 4: Calculate the search direction \(d_{k+1}\) by (2.1).

- Step 5: Set \(k:=k+1\) and go to Step 2.
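The steps of Algorithm 1 can be sketched in Python as follows. This is an illustration under stated assumptions, not the author's MATLAB code: the bisection-style search in `wolfe_step` is a standard substitute for the unspecified line search, the direction update assumes the form of (2.1) implied by the denominator quoted in the proof of Lemma 3.1, and the quadratic test function is hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def wolfe_step(f, grad, x, d, delta=0.01, sigma=0.86, max_trials=50):
    """Bisection-style search for a step satisfying (2.2) and (2.3)."""
    fx, gd = f(x), dot(grad(x), d)
    lo, hi, alpha = 0.0, math.inf, 1.0
    for _ in range(max_trials):
        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        if f(x_new) > fx + delta * alpha * gd:       # (2.2) violated: step too long
            hi = alpha
        elif dot(grad(x_new), d) < sigma * gd:       # (2.3) violated: step too short
            lo = alpha
        else:
            return alpha
        alpha = 0.5 * (lo + hi) if hi < math.inf else 2.0 * alpha
    return alpha  # trial budget exhausted: accept the current step

def next_direction(g_new, g_old, d_old, gammas):
    """Assumed form of (2.1); g_old is nonzero whenever this is called."""
    g1, g2, g3 = gammas
    delta = [a - b for a, b in zip(g_new, g_old)]
    denom = g1 * norm(g_old) ** 2 + norm(d_old) * (g2 * norm(delta) + g3 * norm(g_old))
    a, b = dot(g_new, delta) / denom, dot(d_old, g_new) / denom
    return [-gn + a * dk - b * dl for gn, dk, dl in zip(g_new, d_old, delta)]

def ntt_prp_cg(f, grad, x, eps=1e-6, gammas=(2.0, 5.0, 3.0), max_iter=10000):
    g = grad(x)                                      # Step 0: d_1 = -g_1
    d = [-gi for gi in g]
    steps = 0
    while norm(g) > eps and steps < max_iter:        # Steps 1 and 3: stopping test
        alpha = wolfe_step(f, grad, x, d)            # Step 2: Wolfe line search
        x = [xi + alpha * di for xi, di in zip(x, d)]
        g_new = grad(x)
        d = next_direction(g_new, g, d, gammas)      # Step 4: direction by (2.1)
        g = g_new                                    # Step 5: k := k + 1
        steps += 1
    return x, steps

# Hypothetical test problem: f(x) = 0.5 * (x_1^2 + 2 x_2^2 + 4 x_3^2).
weights = [1.0, 2.0, 4.0]

def f(x):
    return 0.5 * sum(w * xi * xi for w, xi in zip(weights, x))

def grad(x):
    return [w * xi for w, xi in zip(weights, x)]

x_star, steps = ntt_prp_cg(f, grad, [1.0, 1.0, 1.0])
print(steps, norm(grad(x_star)))
```

The `max_iter` cap is a safeguard that Algorithm 1 does not contain. The experiments in Section 4 cap the line search at 10 trials, whereas this sketch allows 50.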

3 The sufficient descent property, the trust region feature, and the global convergence

It has been proved that, even for the function \(f(x)=\lambda \Vert x \Vert ^{2}\) (\(\lambda>0\) is a constant) and the strong Wolfe conditions, the PRP conjugate gradient method may not yield a descent direction for an unsuitable choice (see [24] for details). An interesting feature of the new three-term CG method is that its search direction always satisfies the sufficient descent property.

Lemma 3.1

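Let the search direction \(d_{k+1}\) be defined by (2.1). Then it satisfies

$$ d_{k+1}^{T}g_{k+1}=- \Vert g_{k+1} \Vert ^{2} $$(3.1)

and

$$ \Vert d_{k+1} \Vert \leq\gamma \Vert g_{k+1} \Vert $$(3.2)

for all \(k\geq0\), where \(\gamma>0\) is a constant.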

Proof

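For \(k=0\), it is easy to get \(g_{1}^{T}d_{1}=-g_{1}^{T}g_{1}=- \Vert g_{1} \Vert ^{2}\) and \(\Vert d_{1} \Vert = \Vert -g_{1} \Vert = \Vert g_{1} \Vert \), so (3.1) is true and (3.2) holds with \(\gamma= 1\).

If \(k\geq1\), by (2.1), we have

$$ \begin{aligned} g_{k+1}^{T}d_{k+1} &= - \Vert g_{k+1} \Vert ^{2}+\frac{g_{k+1}^{T}\delta_{k}\, g_{k+1}^{T}d_{k}-d_{k}^{T}g_{k+1}\, g_{k+1}^{T}\delta_{k}}{\gamma_{1} \Vert g_{k} \Vert ^{2}+\gamma_{2} \Vert d_{k} \Vert \Vert \delta_{k} \Vert +\gamma_{3} \Vert d_{k} \Vert \Vert g_{k} \Vert } \\ &= - \Vert g_{k+1} \Vert ^{2}. \end{aligned} $$

Then (3.1) is satisfied. By (2.1) again, we obtain

$$ \begin{aligned} \Vert d_{k+1} \Vert &\leq \Vert g_{k+1} \Vert +\frac{2 \Vert g_{k+1} \Vert \Vert \delta_{k} \Vert \Vert d_{k} \Vert }{\gamma_{1} \Vert g_{k} \Vert ^{2}+\gamma_{2} \Vert d_{k} \Vert \Vert \delta_{k} \Vert +\gamma_{3} \Vert d_{k} \Vert \Vert g_{k} \Vert } \\ &\leq \Vert g_{k+1} \Vert +\frac{2 \Vert g_{k+1} \Vert \Vert \delta_{k} \Vert \Vert d_{k} \Vert }{\gamma_{2} \Vert d_{k} \Vert \Vert \delta_{k} \Vert } \\ &= (1+2/\gamma_{2}) \Vert g_{k+1} \Vert , \end{aligned} $$

where the last inequality follows from \(\frac{1}{\gamma_{1} \Vert g_{k} \Vert ^{2}+\gamma_{2} \Vert d_{k} \Vert \Vert \delta_{k} \Vert +\gamma_{3} \Vert d_{k} \Vert \Vert g_{k} \Vert }\leq\frac {1}{\gamma_{2} \Vert d_{k} \Vert \Vert \delta_{k}\Vert}\). Thus (3.2) holds for all \(k\geq0\) with \(\gamma=\max\{1,1+2/\gamma_{2}\}\). The proof is complete. □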
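The trust region bound can also be checked numerically. The sketch below draws random vectors (hypothetical data, not from the paper), builds \(d_{k+1}\) with the form of (2.1) implied by the denominator above, and records the largest observed ratio \(\Vert d_{k+1} \Vert / \Vert g_{k+1} \Vert \), which the lemma bounds by \(1+2/\gamma_{2}\).

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def direction(g_new, g_old, d_old, g1, g2, g3):
    # Assumed form of (2.1): -g_{k+1} plus two terms over a common denominator.
    delta = [a - b for a, b in zip(g_new, g_old)]
    denom = g1 * norm(g_old) ** 2 + norm(d_old) * (g2 * norm(delta) + g3 * norm(g_old))
    a, b = dot(g_new, delta) / denom, dot(d_old, g_new) / denom
    return [-gn + a * dk - b * dl for gn, dk, dl in zip(g_new, d_old, delta)]

def rand_vec(n):
    return [random.uniform(-1.0, 1.0) for _ in range(n)]

random.seed(0)                          # reproducible, purely illustrative samples
gamma1, gamma2, gamma3 = 2.0, 5.0, 3.0  # parameter values quoted in Section 4
worst = 0.0
for _ in range(1000):
    g_old, d_old, g_new = rand_vec(5), rand_vec(5), rand_vec(5)
    d_new = direction(g_new, g_old, d_old, gamma1, gamma2, gamma3)
    worst = max(worst, norm(d_new) / norm(g_new))

# Largest observed ratio versus the bound 1 + 2/gamma2 from the lemma.
print(worst, 1.0 + 2.0 / gamma2)
```

The samples do not need to come from an actual run of the algorithm, because the bound uses only the algebraic form of the direction.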

Remark

(1) Equation (3.1) is the sufficient descent property and inequality (3.2) is the trust region feature. Both relations hold without any other conditions; in particular, they do not depend on the line search.

(2) Equations (3.1) and (2.2) imply that the sequence \(\{ f(x_{k})\}\) generated by Algorithm 1 is nonincreasing, namely \(f(x_{k}+\alpha _{k}d_{k})\leq f(x_{k})\) holds for all k.

To establish the global convergence of Algorithm 1, the following standard conditions are needed.

Assumption A

(i) The level set \(\Omega=\{x\in\Re^{n}\mid f(x)\leq f(x_{1})\}\) is bounded for the given point \(x_{1}\).

(ii) The function f is bounded below and differentiable, and its gradient g is Lipschitz continuous, that is,

$$ \bigl\Vert g(x)-g(y) \bigr\Vert \leq L \Vert x-y \Vert , \quad \forall x,y\in\Re^{n}, $$(3.5)

where \(L>0\) is a constant.

Lemma 3.2

Suppose that Assumption A holds and NTT-PRP-CG-A generates the sequence \(\{x_{k},d_{k},\alpha_{k},g_{k}\}\). Then there exists a constant \(\beta >0\) such that

$$ \alpha_{k}\geq\beta. $$(3.6)

Proof

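Using (3.5) and (2.3), we obtain

$$ \alpha_{k}L \Vert d_{k} \Vert ^{2}\geq(g_{k+1}-g_{k})^{T}d_{k}\geq-(1-\sigma)g_{k}^{T}d_{k}=(1-\sigma) \Vert g_{k} \Vert ^{2}, $$

where the last equality follows from (3.1). By (3.2), we have \(\Vert d_{k} \Vert ^{2}\leq\gamma^{2} \Vert g_{k} \Vert ^{2}\), so we get

$$ \alpha_{k}\geq\frac{1-\sigma}{L}\frac{ \Vert g_{k} \Vert ^{2}}{ \Vert d_{k} \Vert ^{2}}\geq\frac{1-\sigma}{L\gamma^{2}}. $$

Setting \(\beta\in(0,\frac{1-\sigma}{L\gamma^{2}}]\) completes the proof. □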

Remark

The above lemma shows that the steplength \(\alpha_{k}\) has a lower bound, which is helpful for the global convergence of Algorithm 1.
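A small worked example may make the lower bound concrete. The sketch below assumes the intermediate estimate \(\alpha_{k}\geq(1-\sigma) \Vert g_{k} \Vert ^{2}/(L \Vert d_{k} \Vert ^{2})\), which follows from (2.3), (3.1), and (3.5). It uses a hypothetical diagonal quadratic, for which the smallest steplength satisfying (2.3) is known in closed form, and a hypothetical direction built to satisfy \(g_{k}^{T}d_{k}=- \Vert g_{k} \Vert ^{2}\).

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Hypothetical quadratic f(x) = 0.5 * sum(a_i * x_i^2); its gradient is
# Lipschitz continuous with constant L = max(a_i).
a = [1.0, 3.0, 10.0]
x = [1.0, -1.0, 0.5]
g = [ai * xi for ai, xi in zip(a, x)]        # gradient g_k = [1, -3, 5]
w = [g[1], -g[0], 0.0]                       # any vector orthogonal to g_k
d = [-gi + wi for gi, wi in zip(g, w)]       # hence g_k^T d_k = -||g_k||^2
sigma, L = 0.86, max(a)

# For this quadratic, g(x + alpha*d)^T d = g^T d + alpha * d^T A d, so the
# smallest alpha satisfying the curvature condition (2.3) is explicit.
curvature = sum(ai * di * di for ai, di in zip(a, d))
alpha_min = (1.0 - sigma) * (-dot(g, d)) / curvature
lower_bound = (1.0 - sigma) * dot(g, g) / (L * dot(d, d))

print(alpha_min, lower_bound, alpha_min >= lower_bound)
```

Every steplength accepted by (2.3) is at least `alpha_min`, so it also exceeds `lower_bound`, which depends only on \(\sigma\), \(L\), and the ratio \(\Vert g_{k} \Vert / \Vert d_{k} \Vert \).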

Theorem 3.1

Let the conditions of Lemma 3.2 hold and \(\{x_{k},d_{k},\alpha_{k},g_{k}\}\) be generated by NTT-PRP-CG-A. Then we get

$$ \lim_{k\rightarrow\infty} \Vert g_{k} \Vert =0. $$

Proof

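By (2.2), (3.1), and (3.6), we have

$$ f(x_{k}+\alpha_{k}d_{k})\leq f(x_{k})+\delta\alpha_{k}g_{k}^{T}d_{k}\leq f(x_{k})-\delta\beta \Vert g_{k} \Vert ^{2}. $$

Summing the above inequality from \(k=1\) to ∞ and using the fact that f is bounded below (Assumption A(ii)), we have

$$ \sum_{k=1}^{\infty}\delta\beta \Vert g_{k} \Vert ^{2}\leq f(x_{1})-f_{\infty}< \infty, $$

where \(f_{\infty}\) denotes the limit of the nonincreasing sequence \(\{f(x_{k})\}\), which means that

$$ \lim_{k\rightarrow\infty} \Vert g_{k} \Vert =0. $$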

The proof is complete. □

4 Numerical results and discussion

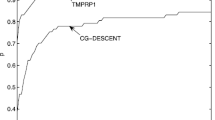

This section reports numerical experiments with NTT-PRP-CG-A and the algorithm of Zhang et al. [1] (called Norm-PRP-A), where Norm-PRP-A is Algorithm 1 with Step 4 replaced by: Calculate the search direction \(d_{k+1}\) by (1.5). Since the new method is based on the search direction (1.5), we only compare the new algorithm with Norm-PRP-A. The codes of the two algorithms are written in MATLAB and the problems are chosen from [57, 58] with given initial points. The tested problems are listed in Table 1. The parameters are \(\gamma_{1}=2\), \(\gamma_{2}=5\), \(\gamma_{3}=3\), \(\delta=0.01\), \(\sigma=0.86\). The program uses the Himmelblau rule: set \(St_{1}=\frac{ \vert f(x_{k})-f(x_{k+1}) \vert }{ \vert f(x_{k}) \vert }\) if \(\vert f(x_{k}) \vert > \tau_{1}\), otherwise set \(St_{1}= \vert f(x_{k})-f(x_{k+1}) \vert \). The program stops if \(\Vert g(x) \Vert <\epsilon\) or \(St_{1} < \tau_{2}\) holds, where we choose \(\epsilon=10^{-6}\) and \(\tau_{1}=\tau _{2}=10^{-5}\). In the line search for the stepsize \(\alpha_{k}\) in (2.2) and (2.3), at most 10 trial stepsizes are tested, after which the current stepsize is accepted. The test problems are run at large-scale dimensions of 3,000, 12,000, and 30,000 variables. The codes were run in MATLAB R2010b on a PC with an Intel Pentium(R) Dual-Core CPU at 3.20 GHz, 2.00 GB of RAM, and the Windows 7 operating system.
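The stopping test described above can be written as a short function. This is a Python sketch of the rule as stated in the text, not the author's MATLAB code; the function and argument names are hypothetical.

```python
def himmelblau_stop(f_old, f_new, grad_norm, eps=1e-6, tau1=1e-5, tau2=1e-5):
    """Return True if the run should stop after moving from x_k to x_{k+1}.

    f_old = f(x_k), f_new = f(x_{k+1}), grad_norm = ||g(x)||.
    """
    if abs(f_old) > tau1:
        st1 = abs(f_old - f_new) / abs(f_old)   # relative decrease
    else:
        st1 = abs(f_old - f_new)                # absolute decrease near zero
    return grad_norm < eps or st1 < tau2

print(himmelblau_stop(1.0, 0.5, 1.0))           # large decrease, large gradient
print(himmelblau_stop(1.0, 1.0 - 1e-7, 1.0))    # stagnating function values
```

Switching to the absolute decrease when \(\vert f(x_{k}) \vert \leq\tau_{1}\) avoids dividing by a value close to zero.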

Table 2 reports the numerical results of NTT-PRP-CG-A and Norm-PRP-A, with the following notation:

No.: the test problem number. Dimension: the number of variables.

Ni: the number of iterations. Nfg: the total number of function and gradient evaluations. CPU time: the CPU time in seconds.

The performance profiles of Dolan and Moré [59] are used to analyze the performance of the algorithms. Figures 1-3 show the profiles of NTT-PRP-CG-A and Norm-PRP-A with respect to Ni, Nfg, and CPU time, respectively. It is easy to see that both algorithms are effective for these problems and that the given three-term PRP conjugate gradient method is more effective than the normal three-term PRP conjugate gradient method. Moreover, NTT-PRP-CG-A has good robustness. Overall, the presented algorithm is promising both in theory and in numerical experiments.
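For readers unfamiliar with performance profiles, the following Python sketch shows how one point of a profile is computed: for each solver, the fraction of problems on which its cost is within a factor \(\tau\) of the best cost on that problem. The solver labels and cost values are made up for illustration and are not results from Table 2.

```python
def performance_profile(costs, tau):
    """costs maps a solver name to its per-problem costs (same problem order).

    Returns, for each solver, the fraction of problems whose cost is at most
    tau times the smallest cost any solver achieved on that problem.
    """
    solvers = list(costs)
    n_problems = len(costs[solvers[0]])
    best = [min(costs[s][p] for s in solvers) for p in range(n_problems)]
    return {
        s: sum(1 for p in range(n_problems) if costs[s][p] <= tau * best[p]) / n_problems
        for s in solvers
    }

# Hypothetical iteration counts on four problems (illustration only).
costs = {"solver_a": [10, 20, 30, 40], "solver_b": [12, 18, 60, 40]}
print(performance_profile(costs, 1))   # share of problems where each solver is best
print(performance_profile(costs, 2))   # share solved within twice the best cost
```

Plotting these fractions against \(\tau\) gives one curve per solver; a curve that lies higher indicates a solver that is within a given factor of the best on more problems.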

5 Conclusions

In this paper, based on the PRP formula for unconstrained optimization, a modified three-term PRP CG algorithm was presented. The proposed method has the sufficient descent property without any line search technique, and its search direction automatically satisfies the trust region property. Under the Wolfe line search, global convergence was proven. Numerical results showed that the new algorithm is more effective than the normal method.

References

Zhang, L, Zhou, W, Li, D: A descent modified Polak-Ribière-Polyak conjugate method and its global convergence. IMA J. Numer. Anal. 26, 629-649 (2006)

Fu, Z, Wu, X, Guan, C, Sun, X, Ren, K: Towards efficient multi-keyword fuzzy search over encrypted outsourced data with accuracy improvement. IEEE Trans. Inf. Forensics Secur. (2016). doi:10.1109/TIFS.2016.2596138

Gu, B, Sheng, VS, Tay, KY, Romano, W, Li, S: Incremental support vector learning for ordinal regression. IEEE Trans. Neural Netw. Learn. Syst. 26, 1403-1416 (2015)

Gu, B, Sun, X, Sheng, VS: Structural minimax probability machine. IEEE Trans. Neural Netw. Learn. Syst. (2016). doi:10.1109/TNNLS.2016.2544779

Li, J, Li, X, Yang, B, Sun, X: Segmentation-based image copy-move forgery detection scheme. IEEE Trans. Inf. Forensics Secur. 10, 507-518 (2015)

Pan, Z, Lei, J, Zhang, Y, Sun, X, Kwong, S: Fast motion estimation based on content property for low-complexity H.265/HEVC encoder. IEEE Trans. Broadcast. (2016). doi:10.1109/TBC.2016.2580920

Pan, Z, Zhang, Y, Kwong, S: Efficient motion and disparity estimation optimization for low complexity multiview video coding. IEEE Trans. Broadcast. 61, 166-176 (2015)

Xia, Z, Wang, X, Sun, X, Wang, Q: A secure and dynamic multi-keyword ranked search scheme over encrypted cloud data. IEEE Trans. Parallel Distrib. Syst. 27, 340-352 (2015)

Xia, Z, Wang, X, Sun, X, Liu, Q, Xiong, N: Steganalysis of LSB matching using differences between nonadjacent pixels. Multimed. Tools Appl. 75, 1947-1962 (2016)

Xia, Z, Wang, X, Zhang, L, Qin, Z, Sun, X, Ren, K: A privacy-preserving and copy-deterrence content-based image retrieval scheme in cloud computing. IEEE Trans. Inf. Forensics Secur. (2016). doi:10.1109/TIFS.2016.2590944

Yuan, GL: A new method with descent property for symmetric nonlinear equations. Numer. Funct. Anal. Optim. 31, 974-987 (2010)

Yuan, GL, Lu, S, Wei, ZX: A new trust-region method with line search for solving symmetric nonlinear equations. Int. J. Comput. Math. 88, 2109-2123 (2011)

Yuan, C, Sun, X, Lv, R: Fingerprint liveness detection based on multi-scale LPQ and PCA. China Commun. 13, 60-65 (2016)

Yuan, GL, Lu, XW, Wei, ZX: BFGS trust-region method for symmetric nonlinear equations. J. Comput. Appl. Math. 230, 44-58 (2009)

Yuan, G, Wei, Z: A trust region algorithm with conjugate gradient technique for optimization problems. Numer. Funct. Anal. Optim. 32, 212-232 (2011)

Yuan, GL, Wei, ZX, Lu, S: Limited memory BFGS method with backtracking for symmetric nonlinear equations. Math. Comput. Model. 54, 367-377 (2011)

Yuan, GL, Wei, ZX, Lu, XW: A BFGS trust-region method for nonlinear equations. Computing 92, 317-333 (2011)

Yuan, GL, Wei, ZX, Wang, ZX: Gradient trust region algorithm with limited memory BFGS update for nonsmooth convex minimization. Comput. Optim. Appl. 54, 45-64 (2013)

Yuan, GL, Wei, ZX, Wu, YL: Modified limited memory BFGS method with nonmonotone line search for unconstrained optimization. J. Korean Math. Soc. 47, 767-788 (2010)

Yuan, GL, Yao, SW: A BFGS algorithm for solving symmetric nonlinear equations. Optimization 62, 45-64 (2013)

Zhou, Z, Wang, Y, Wu, QMJ, Yang, C-N, Sun, X: Effective and efficient global context verification for image copy detection. IEEE Trans. Inf. Forensics Secur. (2016). doi:10.1109/TIFS.2016.2601065

Andrei, N: A hybrid conjugate gradient algorithm for unconstrained optimization as a convex combination of Hestenes-Stiefel and Dai-Yuan. Stud. Inform. Control 17, 55-70 (2008)

Dai, Y: A nonmonotone conjugate gradient algorithm for unconstrained optimization. J. Syst. Sci. Complex. 15, 139-145 (2002)

Dai, Y, Yuan, Y: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10, 177-182 (2000)

Dai, Y, Yuan, Y: Nonlinear Conjugate Gradient Methods. Shanghai Sci. Technol., Shanghai (1998)

Dai, Y, Yuan, Y: An efficient hybrid conjugate gradient method for unconstrained optimization. Ann. Oper. Res. 103, 33-47 (2001)

Fletcher, R: Practical Method of Optimization, Vol I: Unconstrained Optimization, 2nd edn. Wiley, New York (1997)

Fletcher, R, Reeves, C: Function minimization by conjugate gradients. Comput. J. 7, 149-154 (1964)

Grippo, L, Lucidi, S: A globally convergent version of the Polak-Ribière gradient method. Math. Program. 78, 375-391 (1997)

Hager, WW, Zhang, H: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16, 170-192 (2005)

Hager, WW, Zhang, H: Algorithm 851: CG-DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 32, 113-137 (2006)

Hager, WW, Zhang, H: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2, 35-58 (2006)

Hestenes, MR, Stiefel, E: Method of conjugate gradient for solving linear equations. J. Res. Natl. Bur. Stand. 49, 409-436 (1952)

Polak, E, Ribière, G: Note sur la convergence de directions conjugees. Rev. Fr. Autom. Inform. Rech. Opér. 3, 35-43 (1969)

Powell, MJD: Nonconvex minimization calculations and the conjugate gradient method. In: Numerical Analysis, Lecture Notes in Mathematics, vol. 1066, pp. 122-141. Springer, Berlin (1984)

Powell, MJD: Convergence properties of algorithm for nonlinear optimization. SIAM Rev. 28, 487-500 (1986)

Wei, Z, Li, G, Qi, L: New nonlinear conjugate gradient formulas for large-scale unconstrained optimization problems. Appl. Math. Comput. 179, 407-430 (2006)

Wei, Z, Li, G, Qi, L: Global convergence of the PRP conjugate gradient methods with inexact line search for nonconvex unconstrained optimization problems. Math. Comput. 77, 2173-2193 (2008)

Wei, Z, Yao, S, Liu, L: The convergence properties of some new conjugate gradient methods. Appl. Math. Comput. 183, 1341-1350 (2006)

Yuan, GL: Modified nonlinear conjugate gradient methods with sufficient descent property for large-scale optimization problems. Optim. Lett. 3, 11-21 (2009)

Yuan, GL, Duan, XB, Liu, WJ, Wang, XL, Cui, ZR, Sheng, Z: Two new PRP conjugate gradient algorithms for minimization optimization models. PLoS ONE 10(10), e0140071 (2015)

Yuan, GL, Lu, XW: A modified PRP conjugate gradient method. Ann. Oper. Res. 166, 73-90 (2009)

Yuan, GL, Lu, XW, Wei, ZX: A conjugate gradient method with descent direction for unconstrained optimization. J. Comput. Appl. Math. 233, 519-530 (2009)

Yuan, GL, Wei, ZX: New line search methods for unconstrained optimization. J. Korean Stat. Soc. 38, 29-39 (2009)

Yuan, GL, Wei, ZX, Zhao, QM: A modified Polak-Ribière-Polyak conjugate gradient algorithm for large-scale optimization problems. IIE Trans. 46, 397-413 (2014)

Yuan, GL, Zhang, MJ: A modified Hestenes-Stiefel conjugate gradient algorithm for large-scale optimization. Numer. Funct. Anal. Optim. 34, 914-937 (2013)

Yuan, GL, Meng, ZH, Li, Y: A modified Hestenes and Stiefel conjugate gradient algorithm for large-scale nonsmooth minimizations and nonlinear equations. J. Optim. Theory Appl. 168, 129-152 (2016)

Yuan, GL, Wei, ZX: The Barzilai and Borwein gradient method with nonmonotone line search for nonsmooth convex optimization problems. Math. Model. Anal. 17, 203-216 (2012)

Yuan, GL, Wei, ZX, Li, GY: A modified Polak-Ribière-Polyak conjugate gradient algorithm for nonsmooth convex programs. J. Comput. Appl. Math. 255, 86-96 (2014)

Yuan, GL, Zhang, MJ: A three-terms Polak-Ribière-Polyak conjugate gradient algorithm for large-scale nonlinear equations. J. Comput. Appl. Math. 286, 186-195 (2015)

Polyak, BT: The conjugate gradient method in extreme problems. Comput. Math. Math. Phys. 9, 94-112 (1969)

Yuan, GL, Wei, ZX, Lu, XW: Global convergence of BFGS and PRP methods under a modified weak Wolfe-Powell line search. Appl. Math. Model. (2017). doi:10.1016/j.apm.2017.02.008

Al-Baali, M: Descent property and global convergence of the Fletcher-Reeves method with inexact line search. IMA J. Numer. Anal. 5, 121-124 (1985)

Gilbert, JC, Nocedal, J: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2, 21-42 (1992)

Ahmed, T, Storey, D: Efficient hybrid conjugate gradient techniques. J. Optim. Theory Appl. 64, 379-394 (1990)

Hu, YF, Storey, C: Global convergence result for conjugate method. J. Optim. Theory Appl. 71, 399-405 (1991)

Bongartz, I, Conn, AR, Gould, NI, Toint, PL: CUTE: constrained and unconstrained testing environment. ACM Trans. Math. Softw. 21, 123-160 (1995)

Gould, NI, Orban, D, Toint, PL: CUTEr and SifDec: a constrained and unconstrained testing environment, revised. ACM Trans. Math. Softw. 29, 373-394 (2003)

Dolan, ED, Moré, JJ: Benchmarking optimization software with performance profiles. Math. Program. 91, 201-213 (2002)

Additional information

Competing interests

The author declares that they have no competing interests.

Author’s contributions

Only the author contributed in writing this paper.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wu, Y. A modified three-term PRP conjugate gradient algorithm for optimization models. J Inequal Appl 2017, 97 (2017). https://doi.org/10.1186/s13660-017-1373-4

DOI: https://doi.org/10.1186/s13660-017-1373-4