Abstract

Let H be a real Hilbert space and C be a nonempty closed convex subset of H. Assume that g is a real-valued convex function and the gradient ∇g is \(\frac{1}{L}\)-ism with \(L>0\). Let \(0<\lambda <\frac{2}{L+2}\), \(0<\beta_{n}<1\). We prove that the sequence \(\{x_{n}\} \) generated by the iterative algorithm \(x_{n+1}=P_{C}(I-\lambda(\nabla g+\beta_{n}I))x_{n}\), \(\forall n\geq0\) converges strongly to \(q\in U\), where \(q=P_{U}(0)\) is the minimum-norm solution of the constrained convex minimization problem, which also solves the variational inequality \(\langle-q, p-q\rangle\leq0\), \(\forall p\in U\). Under suitable conditions, we obtain some strong convergence theorems. As an application, we apply our algorithm to solving the split feasibility problem in Hilbert spaces.

Similar content being viewed by others

1 Introduction

Let H be a real Hilbert space with inner product \(\langle\cdot,\cdot \rangle\) and norm \(\Vert \cdot \Vert \). Let C be a nonempty closed convex subset of H. Let \(\mathbb{N}\) and \(\mathbb{R}\) denote the sets of positive integers and real numbers. Suppose that f is a contraction on H with coefficient \(0<\alpha<1\). A nonlinear operator \(T:H\rightarrow H\) is nonexpansive if \(\Vert Tx-Ty\Vert \leq \Vert x-y\Vert \) for all \(x,y\in H\). We use \(\operatorname {Fix}(T)\) to denote the fixed point of T.

Firstly, consider the constrained convex minimization problem:

where \(g:C\rightarrow\mathbb{R}\) is a real-valued convex function. Assume that the constrained convex minimization problem (1.1) is solvable, let U denote its solution set. The gradient-projection algorithm (GPA) is an effective method for solving the constrained convex minimization problem (1.1). A sequence \(\{x_{n}\}\) generated by the following recursive formula:

where the parameter λ is real positive number. In general, if the gradient ∇g is L-Lipschitz continuous and η-strongly monotone, \(0<\lambda<\frac{2\eta}{L^{2}}\), the sequence \(\{x_{n}\}\) generated by (1.2) converges strongly to a minimizer of (1.1). However, if the gradient ∇g is only to be \(\frac{1}{L}\)-ism with \(L>0\), \(0<\lambda<\frac{2}{L}\), the sequence \(\{x_{n}\}\) generated by (1.2) converges weakly to a minimizer of (1.1).

Recently, many authors combined the constrained convex minimization problem with a fixed point problem [1–3] and proposed composited iterative algorithms to find a solution of the constrained convex minimization problem [4–7].

In 2000, Moudafi [8] introduced the viscosity approximation method for nonexpansive mappings.

In 2001, Yamada [9] introduced the so-called hybrid steepest-descent algorithm:

where F is Lipschitzian and strongly monotone operator. In 2006, Marino and Xu [10] considered a generative algorithm:

where A is a strongly positive operator. In 2010, Tian [11] combined the iterative algorithm of (1.4), (1.5), and proposed a new iterative algorithm:

In 2010, Tian [12] generalized (1.6), obtained the following iterative algorithm:

where V is Lipschitzian operator. Based on these iterative algorithms, some authors combined GPA with averaged operator to solve the constrained convex minimization problem [13, 14].

In 2011, Ceng et al. [1] proposed a sequence \(\{x_{n}\}\) generated by the following iterative algorithm:

where \(h:C\rightarrow H\) is an l-Lipschitzian mapping with a constant \(l>0\), and \(F:C\rightarrow H\) is a k-Lipschitzian and η-strongly monotone operator with constants \(k, \eta>0\). \(\theta_{n}=\frac{2-\lambda_{n}L}{4}\), \(P_{C}(I-\lambda_{n}\nabla g)=\theta_{n}I+(1-\theta_{n})T_{n}\), \(\forall n\geq0\). Then a sequence \(\{ x_{n}\}\) generated by (1.8) converges strongly to a minimizer of (1.1).

On the other hand, Xu [15] proposed that regularization can be used to find the minimum-norm solution of the minimization problem.

Consider the following regularized minimization problem:

where the regularization parameter \(\beta>0\). g is a convex function and the gradient ∇g is \(\frac{1}{L}\)-ism with \(L>0\). Then the sequence \(\{x_{n}\}\) generated by the following formula:

where the regularization parameters \(0<\beta_{n}<1\), \(0<\lambda<\frac {2}{L}\) converges weakly. But, if a sequence \(\{x_{n}\}\) defined by

where the initial guess \(x_{0}\in C\), \(\{\lambda_{n}\}\), \(\{\beta_{n}\} \) satisfy the following conditions:

-

(i)

\(0<\lambda_{n}\leq\frac{\beta_{n}}{(L+\beta_{n})^{2}}\), \(\forall n\geq 0\),

-

(ii)

\(\beta_{n}\rightarrow0\) (and \(\lambda_{n}\rightarrow 0\)) as \(n\rightarrow\infty\),

-

(iii)

\(\sum_{n=1}^{\infty}\lambda_{n}\beta_{n} = \infty\),

-

(iv)

\(\frac{(\vert \lambda_{n}-\lambda_{n-1}\vert +\vert \lambda_{n}\beta_{n}-\lambda_{n-1}\beta_{n-1}\vert )}{(\lambda _{n}\beta_{n})^{2}}\rightarrow0\) as \(n\rightarrow\infty\).

Then the sequence \(\{x_{n}\}\) generated by (1.10) converges strongly to \(x^{*}\), which is the minimum-norm solution of (1.1) [15].

Secondly, Yu et al. [16] proposed a strong convergence theorem with a regularized-like method to find an element of the set of solutions for a monotone inclusion problem in a Hilbert space.

Theorem 1.1

[16]

Let H be a real Hilbert space and C be a nonempty closed and convex subset of H. Let \(L>0\), F is a \(\frac{1}{L}\)-ism mapping of C into H. Let B be a maximal monotone mapping on H and let G be a maximal monotone mapping on H such that the domains of B and G are included in C. Let \(J_{\rho}=(I+\rho B)^{-1}\) and \(T_{r}=(I+rG)^{-1}\) for each \(\rho>0\) and \(r>0\). Suppose that \((F+B)^{-1}(0)\cap G^{-1}(0)\neq\emptyset\). Let \(\{x_{n}\}\subset H\) defined by

where \(\rho\in(0,\infty)\), \(\beta_{n}\in(0,1)\), \(r\in(0,\infty)\). Assume that

-

(i)

\(0< a\leq\rho<\frac{2}{2+L}\),

-

(ii)

\(\lim_{n\rightarrow\infty}\beta_{n}=0\), \(\sum_{n=1}^{\infty}\beta _{n}=\infty\).

Then the sequence \(\{x_{n}\}\) generated by (1.11) converges strongly to x̅, where \(\overline{x}= P_{(F+B)^{-1}(0)\cap G^{-1}(0)}(0)\).

From the article of Yu et al. [16], we obtain a new condition of parameter ρ, \(0<\rho<\frac{2}{L+2}\), which is used widely in our article. Motivated and inspired by Lin, when \(0<\lambda<\frac{2}{L+2}\), \(\{\beta_{n}\}\) satisfy certain conditions, a sequence \(\{x_{n}\}\) generated by the iterative algorithm (1.9):

converges strongly to a point \(q\in U\), where \(q=P_{U}(0)\) is the minimum-norm solution of the constrained convex minimization problem.

Finally, we give concrete example and the numerical results to illustrate our algorithm is with fast convergence.

2 Preliminaries

In this part, we introduce some lemmas that will be used in the rest part. Let H be a real Hilbert space and C be a nonempty closed convex subset of H. We use ‘→’ to denote strong convergence of the sequence \(\{x_{n}\}\) and use ‘⇀’ to denote weak convergence.

Recall \(P_{C}\) is the metric projection from H into C, then to each point \(x\in H\), the unique point \(P_{C}\in C\) satisfy the property:

\(P_{C}\) has the following characteristics.

Lemma 2.1

[17]

For a given \(x\in H\):

-

(1)

\(z=P_{C}x \Longleftrightarrow\langle x-z,z-y\rangle\geq0\), \(\forall y\in C\);

-

(2)

\(z=P_{C}x \Longleftrightarrow \Vert x-z\Vert ^{2}\leq \Vert x-y\Vert ^{2}-\Vert y-z\Vert ^{2}\), \(\forall y\in C\);

-

(3)

\(\langle P_{C}x-P_{C}y, x-y\rangle\geq \Vert P_{C}x-P_{C}y\Vert ^{2}\), \(\forall x,y\in H\).

From (3), we can derive that \(P_{C}\) is nonexpansive and monotone.

Lemma 2.2

Demiclosed principle [18]

Let \(T : C\rightarrow C\) be a nonexpansive mapping with \(F(T)\neq\emptyset\). If \(\{x_{n}\}\) is a sequence in C weakly converging to x and if \(\{ (I-T)x_{n}\}\) converges strongly to y, then \((I-T)x = y\). In particular, if \(y = 0\), then \(x\in F(T)\).

Lemma 2.3

[19]

Let \(\{a_{n}\}\) is a sequence of nonnegative real numbers such that

where \(\{\alpha_{n}\}_{n=0}^{\infty}\) and \(\{\delta_{n}\}_{n=0}^{\infty }\) are sequences of real numbers in \((0,1)\) and such that

-

(i)

\(\sum_{n=0}^{\infty}\alpha_{n} = \infty\);

-

(ii)

\(\limsup_{n\rightarrow\infty}\delta_{n} \leq0\) or \(\sum_{n=0}^{\infty}\alpha_{n}\vert \delta_{n}\vert < \infty\).

Then \(\lim_{n\rightarrow\infty}a_{n} = 0\).

3 Main results

Let H be a real Hilbert space and C be a nonempty closed convex subset of H. Assume that \(g:C\rightarrow\mathbb{R}\) is real-valued convex function and the gradient ∇g is \(\frac{1}{L}\)-ism with \(L>0\). Suppose that the minimization problem (1.1) is consistent and let U denote its solution set. Let \(0<\lambda<\frac{2}{L+2}\), \(0<\beta _{n}<1\). Consider the following mapping \(G_{n}\) on C defined by

We have

That is,

Since \(0<1-\lambda\beta_{n}<1\), it follows that \(G_{n}\) is a contraction. Therefore, by the Banach contraction principle, \(G_{n}\) has a unique fixed point \(x_{n}\), such that

Next, we prove that the sequence \(\{x_{n}\}\) converges strongly to \(q\in U\), which also solves the variational inequality

Equivalently, \(q=P_{U}(0)\), that is, q is the minimum-norm solution of the constrained convex minimization problem.

Theorem 3.1

Let C be a nonempty closed convex subset of a real Hilbert space H. Let \(g:C\rightarrow\mathbb{R}\) is real-valued convex function and assume that the gradient ∇g is \(\frac{1}{L}\)-ism with \(L>0\). Assume that \(U \neq\emptyset\). Let \(\{x_{n}\}\) be a sequence generated by

Let λ, \(\{\beta_{n}\}\) satisfy the following conditions:

-

(i)

\(0<\lambda<\frac{2}{2+L}\),

-

(ii)

\(\{\beta_{n}\}\subset(0,1)\), \(\lim_{n\rightarrow\infty}\beta _{n}=0\), \(\sum_{n=1}^{\infty}\beta_{n} = \infty\).

Then \(\{x_{n}\}\) converges strongly to a point \(q\in U\), where \(q=P_{U}(0)\), which is the minimum-norm solution of the minimization problem (1.1) and also solves the variational inequality (3.1).

Proof

First, we claim that \(\{x_{n}\}\) is bounded. Indeed, pick any \(p\in U\), then we have

Then we derive that

and hence \(\{x_{n}\}\) is bounded.

Next, we claim that \(\Vert x_{n}-P_{C}(I-\lambda\nabla g )x_{n}\Vert \rightarrow0\). Indeed

Since \(\{x_{n}\}\) is bounded, \(\beta_{n}\rightarrow0\) (\(n\rightarrow \infty\)), we obtain

∇g is \(\frac{1}{L}\)-ism. Consequently, \(P_{C}(I-\lambda\nabla g)\) is a nonexpansive self-mapping on C. As a matter of fact, we have for each \(x,y\in C\)

\(\{x_{n}\}\) is bounded, consider a subsequence \(\{x_{n_{i}}\}\) of \(\{ x_{n}\}\). Since \(\{x_{n_{i}}\}\) is bounded, there exists a subsequence \(\{x_{n_{i_{j}}}\}\) of \(\{x_{n_{i}}\}\) which converges weakly to z. Without loss of generality, we can assume that \(x_{n_{i}}\rightharpoonup z\). Then by Lemma 2.2, we obtain \(z\in U\).

On the other hand

Thus

In particular

Since \(x_{n_{i}}\rightharpoonup z\). Then we derive that \(x_{n_{i}}\rightarrow z\) as \(i\rightarrow\infty\).

Let q be the minimum-norm solution of U, that is, \(q=P_{U}(0)\). Since \(\{x_{n}\}\) is bounded, there exists a subsequence \(\{x_{n_{i}}\} \) of \(\{x_{n}\}\) such that \(x_{n_{i}}\rightharpoonup z\). As the above proof, we know that \(x_{n_{i}}\rightarrow z\), \(z\in U\).

Then we derive that

Thus

In particular

Since \(x_{n_{i}}\rightarrow z\), \(z\in U\),

So, we have \(z=q\). From the arbitrariness of \(z\in U\), it follows that \(q\in U\) is a solution of the variational inequality (3.1). By the uniqueness of solution of the variational inequality (3.1), we conclude that \(x_{n}\rightarrow q\) as \(n\rightarrow\infty\), where \(q=P_{U}(0)\). □

Theorem 3.2

Let C be a nonempty closed convex subset of a real Hilbert space H and \(g:C\rightarrow\mathbb{R}\) is real-valued convex function and assume that the gradient ∇g is \(\frac{1}{L}\)-ism with \(L>0\). Assume that \(U\neq\emptyset\). Let \(\{x_{n}\}\) be a sequence generated by \(x_{1}\in C\) and

where λ and \(\{\beta_{n}\}\) satisfy the following conditions:

-

(i)

\(0<\lambda<\frac{2}{L+2}\);

-

(ii)

\(\{\beta_{n}\}\subset(0,1)\), \(\lim_{n\rightarrow\infty}\beta _{n}=0\), \(\sum_{n=1}^{\infty}\beta_{n} = \infty\), \(\sum_{n=1}^{\infty }\vert \beta_{n+1}-\beta_{n}\vert <\infty\).

Then \(\{x_{n}\}\) converges strongly to a point \(q\in U\), where \(q=P_{U}(0)\), which is the minimum-norm solution of the minimization problem (1.1) and also solves the variational inequality (3.1).

Proof

First, we claim that \(\{x_{n}\}\) is bounded. Indeed, pick any \(p\in U\), then we know that, for any \(n\in\mathbb{N}\),

By the introduction

and hence \(\{x_{n}\}\) is bounded.

Next, we show that \(\Vert x_{n+1}-x_{n}\Vert \rightarrow0\).

where \(M=\sup\{\Vert x_{n}\Vert :n\in\mathbb{N}\}\). Hence, by Lemma 2.3, we have

Then we claim that \(\Vert x_{n}-P_{C}(I-\lambda\nabla g)x_{n}\Vert \rightarrow0\).

since \(\beta_{n}\rightarrow0\) and \(\Vert x_{n+1}-x_{n}\Vert \rightarrow0\), we have

Next, we show that

Let q be the minimum-norm solution of U, that is, \(q=P_{U}(0)\). Since \(\{x_{n}\}\) is bounded, without loss of generality, we assume that \(x_{n_{j}}\rightharpoonup z\). By the same argument as in the proof of Theorem 3.1, we have \(z\in U\).

Then

It follows that

where \(\delta_{n}=\langle-q, x_{n+1}-q\rangle\).

It is easy to see that \(\lim_{n\rightarrow\infty}\lambda\beta_{n}=0\), \(\sum_{n=1}^{\infty}\lambda\beta_{n} = \infty\) and \(\limsup_{n\rightarrow \infty}\delta_{n}\leq0\). Hence, by Lemma 2.3, the sequence \(\{x_{n}\}\) converges strongly to q, where \(q=P_{U}(0)\). This completes the proof. □

4 Application

In this part, we will illustrate the practical value of our algorithm in the split feasibility problem. In 1994, Censor and Elfving [20] came up with the split feasibility problem. The SFP is formulated as finding a point x with the property:

where C and Q are nonempty closed and convex subset of real Hilbert spaces \(H_{1}\) and \(H_{2}\), \(A:H_{1}\rightarrow H_{2}\) is bounded linear operator.

Next, we consider the constrained convex minimization problem:

If \(x^{*}\) is a solution of SFP, then \(Ax^{*}\in Q\) and \(Ax^{*}-P_{Q}Ax^{*}=0\), \(x^{*}\) is the solution of the minimization problem (4.2). The gradient of g is ∇g, where \(\nabla g=A^{*}(I-P_{Q})A\). Applying Theorem 3.2, we obtain the following theorem.

Theorem 4.1

Assume that the SFP (4.1) is consistent. Let C be a nonempty closed convex subset of a real Hilbert space H. Assume that \(A:H_{1}\rightarrow H_{2}\) is bounded linear operator, \(W\neq\emptyset \), where W denotes the solution set of SFP (4.1). Let \(\{x_{n}\}\) be a sequence generated by \(x_{1}\in C\) and

Let λ and \(\{\beta_{n}\}\) satisfy the following conditions:

-

(i)

\(0<\lambda<\frac{2}{2+\Vert A\Vert ^{2}}\);

-

(ii)

\(\{\beta_{n}\}\subset(0,1)\), \(\lim_{n\rightarrow\infty}\beta _{n}=0\), \(\sum_{n=1}^{\infty}\beta_{n} = \infty\), \(\sum_{n=1}^{\infty }\vert \beta_{n+1}-\beta_{n}\vert <\infty\).

Then \(\{x_{n}\}\) converges strongly to a point \(q\in W\), where \(q=P_{W}(0)\).

Proof

We only need to show that ∇g is \(\frac{1}{\Vert A\Vert ^{2}}\)-ism, then Theorem 4.1 can be obtained by Theorem 3.2.

Since \(P_{Q}\) is firmly nonexpansive, so \(P_{Q}\) is \(\frac {1}{2}\)-averaged mapping, then \(I-P_{Q}\) is 1-ism, for any \(x,y\in C\), we derive that

So, ∇g is \(\frac{1}{\Vert A\Vert ^{2}}\)-ism. □

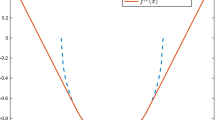

5 Numerical result

In this part, we use the algorithm in Theorem 4.1 to solve a system of linear equations. Then we calculate the \(4\times4\) system of linear equations.

Example 1

Let \(H_{1}=H_{2}=\mathbb{R}^{4}\). Take

Then the SFP can be formulated as the problem of finding a point \(x^{*}\) with the property

where \(C=\mathbb{R}^{4}\), \(Q=\{b\}\). That is, \(x^{*}\) is the solution of the system of linear equations \(Ax=b\), and

Take \(P_{C}=I\), where I denotes the \(4\times4\) identity matrix. Given the parameters \(\beta_{n}=\frac{1}{(n+2)^{2}}\) for \(n\geq0\), \(\lambda =\frac{3}{200}\). Then by Theorem 4.1, the sequence \(\{x_{n}\}\) is generated by

As \(n\rightarrow\infty\), we have \(\{x_{n}\}\rightarrow x^{*}=(1,3,2,4)^{T}\).

From Table 1, we can easily see that with iterative number increasing \(x_{n}\) approaches to the exact solution \(x^{*}\) and the errors gradually approach zero.

In Tian and Jiao [21], they use another iterative algorithm to calculate the same example.

Compare Table 1 with Table 2, we find that if the parameters \(\beta _{n}\) are the same, when \(\lambda\rightarrow\frac{2}{L+2}\), our algorithm is with fast convergence.

6 Conclusion

In a real Hilbert space, there are many methods to solve the constrained convex minimization problem. However, most of them cannot find the minimum-norm solution. In this article, we use the regularized gradient-projection algorithm to find the minimum-norm solution of the constrained convex minimization problem, where \(0<\lambda<\frac {2}{L+2}\). Then under some suitable conditions, new strong convergence theorems are obtained. Finally, we apply this algorithm to the split feasibility problem and use a concrete example and numerical results to illustrate that our algorithm has fast convergence.

References

Ceng, LC, Ansari, QH, Yao, JC: Some iterative methods for finding fixed points and for solving constrained convex minimization problems. Nonlinear Anal. 74, 5286-5302 (2011)

Ceng, LC, Ansari, QH, Yao, JC: Extragradient-projection method for solving constrained convex minimization problems. Numer. Algebra Control Optim. 1(3), 341-359 (2011)

Ceng, LC, Ansari, QH, Wen, CF: Multi-step implicit iterative methods with regularization for minimization problems and fixed point problems. J. Inequal. Appl. 2013, 240 (2013)

Deutsch, F, Yamada, I: Minimizing certain convex functions over the intersection of the fixed point sets of the nonexpansive mappings. Numer. Funct. Anal. Optim. 19, 33-56 (1998)

Xu, HK: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66, 240-256 (2002)

Xu, HK: An iterative approach to quadratic optimization. J. Optim. Theory Appl. 116, 659-678 (2003)

Yamada, I, Ogura, N, Yamashita, Y, Sakaniwa, K: Quadratic approximation of fixed points of nonexpansive mappings in Hilbert spaces. Numer. Funct. Anal. Optim. 19, 165-190 (1998)

Moudafi, A: Viscosity approximation methods for fixed-points problem. J. Math. Anal. Appl. 241, 46-55 (2000)

Yamada, I: The hybrid steepest descent method for the variational inequality problem over the intersection of fixed point sets of nonexpansive mappings. In: Inherently Parallel Algorithms in Feasibility and Optimization and Their Application, Haifa (2001)

Marino, G, Xu, HK: A general method for nonexpansive mappings in Hilbert space. J. Math. Anal. Appl. 318, 43-52 (2006)

Tian, M: A general iterative algorithm for nonexpansive mappings in Hilbert spaces. Nonlinear Anal. 73, 689-694 (2010)

Tian, M: A general iterative method based on the hybrid steepest descent scheme for nonexpansive mappings in Hilbert spaces. In: International Conference on Computational Intelligence and Software Engineering, CiSE 2010, art. 5677064. IEEE, Piscataway, NJ (2010)

Tian, M, Liu, L: General iterative methods for equilibrium and constrained convex minimization problem. Optimization 63, 1367-1385 (2014)

Tian, M, Liu, L: Iterative algorithms based on the viscosity approximation method for equilibrium and constrained convex minimization problem. Fixed Point Theory Appl. 2012, 201 (2012)

Xu, HK: Kim: averaged mappings and the gradient-projection algorithm. J. Optim. Theory Appl. 150, 360-378 (2011)

Yu, ZT, Lin, LJ, Chuang, CS: A unified study of the split feasible problems with applications. J. Nonlinear Convex Anal. 15(3), 605-622 (2014)

Takahashi, W: Nonlinear Functional Analysis. Yokohama Publishers, Yokohama (2000)

Hundal, H: An alternating projection that does not converge in norm. Nonlinear Anal. 57, 35-61 (2004)

Xu, HK: Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 298, 279-291 (2004)

Censor, Y, Elfving, T: A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 8, 221-239 (1994)

Tian, M, Jiao, SW: Regularized gradient-projection methods for the constrained convex minimization problem and the zero points of maximal monotone operator. Fixed Point Theory Appl. 2015, 11 (2015)

Acknowledgements

The authors thank the referees for their helping comments, which notably improved the presentation of this paper. This work was supported by the Foundation of Tianjin Key Laboratory for Advanced Signal Processing. First author was supported by the Foundation of Tianjin Key Laboratory for Advanced Signal Processing. Hui-Fang Zhang was supported in part by Technology Innovation Funds of Civil Aviation University of China for Graduate in 2017.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All the authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Tian, M., Zhang, HF. Regularized gradient-projection methods for finding the minimum-norm solution of the constrained convex minimization problem. J Inequal Appl 2017, 13 (2017). https://doi.org/10.1186/s13660-016-1289-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13660-016-1289-4