Abstract

It is well known that the second-order cone and the circular cone share many analogous properties. In particular, an important distance inequality relates the two cones: it shows that the distances from an arbitrary point to the second-order cone and to the circular cone are equivalent, which is crucial in analyzing the tangent cone and the normal cone of the circular cone. In this paper, we provide an alternative approach to deriving this inequality. Although the proof is somewhat longer than the existing one, the new approach clarifies when equality holds. Such a clarification is helpful for further study of the relationship between second-order cone programming problems and circular cone programming problems.

1 Introduction

The circular cone [1, 2] is a pointed closed convex cone having hyperspherical sections orthogonal to its axis of revolution, about which the cone is invariant under rotation. Let \({\mathcal {L}}_{\theta }\) denote the circular cone in \(\mathbb {R}^{n}\), which is defined by

$$ {\mathcal {L}}_{\theta } := \bigl\{ x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1} \mid \|x\|\cos \theta \leq x_{1} \bigr\} = \bigl\{ x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1} \mid \|x_{2}\|\leq x_{1}\tan \theta \bigr\}, \tag{1} $$

with \(\|\cdot\|\) denoting the Euclidean norm and \(\theta\in(0,\frac{\pi}{2})\). When \(\theta= \frac{\pi}{4}\), the circular cone \({\mathcal {L}}_{\theta }\) reduces to the well-known second-order cone (SOC) \(\mathcal {K}^{n}\) [3, 4] (also called the Lorentz cone), i.e.,

$$ {\mathcal {K}}^{n} := \bigl\{ x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1} \mid \|x_{2}\|\leq x_{1} \bigr\}. $$

In particular, \({\mathcal {K}}^{1}\) is the set of nonnegative reals \(\mathbb {R}_{+}\). It is well known that the second-order cone \(\mathcal{K}^{n}\) is a symmetric cone [5]. When \(\theta\neq\frac{\pi}{4}\), however, the circular cone \({\mathcal {L}}_{\theta }\) is a non-symmetric cone [1, 6, 7].

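In [2], Zhou and Chen showed that there is a special relationship between the SOC and the circular cone as follows:

$$ {\mathcal {L}}_{\theta }=A^{-1}{\mathcal {K}}^{n} \quad \text{and}\quad {\mathcal {K}}^{n}=A{\mathcal {L}}_{\theta } \quad \text{with } A= \begin{bmatrix} \tan \theta & 0 \\ 0 & I_{n-1} \end{bmatrix}, \tag{2} $$

where \(I_{n-1}\) is the \((n-1) \times(n-1)\) identity matrix. Based on the relationship (2) between the SOC and the circular cone, Miao et al. [8] showed that circular cone complementarity problems can be transformed into second-order cone complementarity problems. Furthermore, from the relationship (2), we have

$$ x\in {\mathcal {L}}_{\theta } \quad \Longleftrightarrow\quad Ax\in {\mathcal {K}}^{n}. $$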

Besides the relationship between second-order cone and circular cone, some topological structures play important roles in theoretical analysis for optimization problems. For example, the projection formula onto a cone facilitates designing algorithms for solving conic programming problems [9–13]; the distance formula from an element to a cone is an important factor in the approximation theory; the tangent cone and normal cone are crucial in analyzing the structure of the solution set for optimization problems [14–16]. From the above illustrations, an interesting question arises: What is the relationship between second-order cone and circular cone regarding the projection formula, the distance formula, the tangent cone and normal cone, and so on? The issue of the tangent cone and normal cone has been studied in [2], Theorem 2.3. In this paper, we focus on the other two issues.

More specifically, we provide an alternative approach to deriving an inequality which was obtained in [2], Theorem 2.2. Although the proof is somewhat longer than the existing one, the new approach clarifies when equality holds, which is helpful for further study of the relationship between second-order cone programming problems and circular cone programming problems.

In order to study the relationship between the second-order cone and the circular cone, we need to recall some background material. For any vector \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\), the spectral decomposition of x with respect to the second-order cone is given by

$$ x=\lambda_{1}(x)u_{x}^{(1)}+\lambda_{2}(x)u_{x}^{(2)}, \tag{3} $$

where \(\lambda_{1}(x)\), \(\lambda_{2}(x)\), \(u_{x}^{(1)}\), and \(u_{x}^{(2)}\) are expressed as

$$ \lambda_{i}(x)=x_{1}+(-1)^{i}\|x_{2}\|, \qquad u_{x}^{(i)}=\frac{1}{2} \begin{bmatrix} 1 \\ (-1)^{i}w \end{bmatrix}, \quad i=1,2, \tag{4} $$

with \(w=\frac{x_{2}}{\|x_{2}\|}\) if \(x_{2}\neq0\), or any vector in \(\mathbb {R}^{n-1}\) satisfying \(\|w\|=1\) if \(x_{2}=0\). In the setting of the circular cone \({\mathcal {L}}_{\theta }\), Zhou and Chen [2] gave the following spectral decomposition of \(x\in \mathbb {R}^{n}\) with respect to \({\mathcal {L}}_{\theta }\):

$$ x=\mu_{1}(x)v_{x}^{(1)}+\mu_{2}(x)v_{x}^{(2)}, \tag{5} $$

where \(\mu_{1}(x)\), \(\mu_{2}(x)\), \(v_{x}^{(1)}\), and \(v_{x}^{(2)}\) are expressed as

$$ \begin{aligned} \mu_{1}(x) &= x_{1}-\|x_{2}\|\cot \theta , & v_{x}^{(1)} &= \frac{1}{1+\cot^{2}\theta } \begin{bmatrix} 1 \\ -w\cot \theta \end{bmatrix}, \\ \mu_{2}(x) &= x_{1}+\|x_{2}\|\tan \theta , & v_{x}^{(2)} &= \frac{1}{1+\tan^{2}\theta } \begin{bmatrix} 1 \\ w\tan \theta \end{bmatrix}, \end{aligned} \tag{6} $$

with \(w=\frac{x_{2}}{\|x_{2}\|}\) if \(x_{2}\neq0\), or any vector in \(\mathbb {R}^{n-1}\) satisfying \(\|w\|=1\) if \(x_{2}=0\). Here \(\lambda_{1}(x)\), \(\lambda_{2}(x)\) and \(u_{x}^{(1)}\), \(u_{x}^{(2)}\) are called the spectral values and the spectral vectors of x associated with \({\mathcal {K}}^{n}\), whereas \(\mu_{1}(x)\), \(\mu_{2}(x)\) and \(v_{x}^{(1)}\), \(v_{x}^{(2)}\) are called the spectral values and the spectral vectors of x associated with \({\mathcal {L}}_{\theta }\).
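The decomposition with respect to \({\mathcal {L}}_{\theta }\) can be checked numerically. The following Python sketch is illustrative only: it assumes the standard formulas of [2] for the spectral values and vectors (restated in the docstring), takes \(n\geq2\), and uses the hypothetical helper names `circular_spectral` and `recompose`, which do not come from the paper.

```python
import math

def circular_spectral(x, theta):
    """Spectral values and vectors of x = (x1, x2) w.r.t. the circular cone.

    Assumed formulas (standard ones from Zhou and Chen [2]):
      mu1 = x1 - ||x2|| cot(theta),   v1 = (1, -cot(theta) w) / (1 + cot^2(theta)),
      mu2 = x1 + ||x2|| tan(theta),   v2 = (1,  tan(theta) w) / (1 + tan^2(theta)).
    """
    x1, x2 = x[0], x[1:]
    norm2 = math.sqrt(sum(t * t for t in x2))
    # w = x2 / ||x2||, or an arbitrary unit vector when x2 = 0
    w = [t / norm2 for t in x2] if norm2 > 0 else [1.0] + [0.0] * (len(x2) - 1)
    tan = math.tan(theta)
    cot = 1.0 / tan
    mu1 = x1 - norm2 * cot
    mu2 = x1 + norm2 * tan
    v1 = [1.0 / (1 + cot ** 2)] + [-cot * t / (1 + cot ** 2) for t in w]
    v2 = [1.0 / (1 + tan ** 2)] + [tan * t / (1 + tan ** 2) for t in w]
    return mu1, mu2, v1, v2

def recompose(mu1, mu2, v1, v2):
    """Return mu1 * v1 + mu2 * v2, which should reproduce x."""
    return [mu1 * a + mu2 * b for a, b in zip(v1, v2)]
```

Calling `recompose(*circular_spectral(x, theta))` should return `x` up to rounding, and the two spectral vectors should be orthogonal; for \(\theta=\frac{\pi}{4}\) the same formulas reduce to the SOC case.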

To proceed, we denote by \(x_{+}\) (resp. \(x^{\theta }_{+}\)) the projection of x onto \({\mathcal {K}}^{n}\) (resp. \({\mathcal {L}}_{\theta }\)); we also set \(a_{+} = \max\{ a, 0 \}\) for any real number \(a \in \mathbb {R}\). According to the spectral decompositions (3) and (5) of x, the expressions of \(x_{+}\) and \(x^{\theta }_{+}\) can be obtained explicitly, as stated in the following lemma.

Lemma 1.1

Let \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\) have the spectral decompositions given as (3) and (5) with respect to SOC and circular cone, respectively. Then the following hold:

(a)
$$\begin{aligned} x_{+} =&\bigl(x_{1}-\|x_{2}\|\bigr)_{+}u_{x}^{(1)} +\bigl(x_{1}+\|x_{2}\|\bigr)_{+}u_{x}^{(2)} \\ =& \left \{ \textstyle\begin{array}{l@{\quad}l} x, & \textit{if } x\in {\mathcal {K}}^{n}, \\ 0, & \textit{if } x\in-({\mathcal {K}}^{n})^{\ast}=-{\mathcal {K}}^{n}, \\ u, & \textit{otherwise}, \end{array}\displaystyle \quad \textit{where } u=\left [ \textstyle\begin{array}{c} \frac{x_{1}+\|x_{2}\|}{2} \\ \frac{x_{1}+\|x_{2}\|}{2} \frac{x_{2}}{\|x_{2}\|} \end{array}\displaystyle \right ]; \right . \end{aligned}$$

(b)
$$\begin{aligned} x^{\theta }_{+} =& \bigl(x_{1}-\|x_{2}\|\cot \theta \bigr)_{+}v_{x}^{(1)} +\bigl(x_{1}+\|x_{2}\|\tan \theta \bigr)_{+}v_{x}^{(2)} \\ =& \left \{ \textstyle\begin{array}{l@{\quad}l} x, & \textit{if } x\in {\mathcal {L}}_{\theta }, \\ 0, & \textit{if } x\in-({\mathcal {L}}_{\theta })^{\ast}=-\mathcal{L}_{\frac{\pi}{2}-\theta }, \\ v, & \textit{otherwise}, \end{array}\displaystyle \quad \textit{where } v=\left [ \textstyle\begin{array}{c} \frac{x_{1}+\|x_{2}\|\tan \theta }{1+\tan^{2} \theta } \\ (\frac{x_{1}+\|x_{2}\|\tan \theta }{1+\tan^{2} \theta } \tan \theta ) \frac{x_{2}}{\|x_{2}\|} \end{array}\displaystyle \right ]. \right . \end{aligned}$$
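The three-case formulas of Lemma 1.1 translate directly into code. The sketch below is a minimal illustration under stated assumptions: the helper name `project_cone` is hypothetical, and the cone is parameterized by \(t=\tan \theta \), so that \(t=1\) gives part (a) and a general \(t>0\) gives part (b).

```python
import math

def project_cone(x, t):
    """Project x = (x1, x2) onto {(y1, y2) : ||y2|| <= y1 * t}, with t = tan(theta).

    t = 1 corresponds to the second-order cone (Lemma 1.1(a));
    a general t > 0 corresponds to the circular cone L_theta (Lemma 1.1(b)).
    """
    x1, x2 = x[0], x[1:]
    norm2 = math.sqrt(sum(v * v for v in x2))
    if norm2 <= x1 * t:
        # x already lies in the cone
        return list(x)
    if norm2 * t <= -x1:
        # x lies in the negative dual cone, so the projection is the origin
        return [0.0] * len(x)
    # remaining case: here ||x2|| > 0, and the projection lies on the boundary
    s = (x1 + norm2 * t) / (1.0 + t * t)
    return [s] + [s * t * v / norm2 for v in x2]
```

For instance, `project_cone([1.0, 3.0, 4.0], 1.0)` evaluates the vector u of part (a), while `project_cone([1.0, 3.0, 4.0], 0.5)` evaluates the vector v of part (b) for \(\tan \theta =0.5\). In the latter case the residual \(x-x_{+}^{\theta }\) is orthogonal to the projection, as expected for a projection onto a closed convex cone.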

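Based on the expression of the projection \(x_{+}^{\theta }\) onto \({\mathcal {L}}_{\theta }\) in Lemma 1.1 and the identity \({\mathcal {L}}_{\theta }^{\ast}={\mathcal {L}}_{\frac{\pi}{2}-\theta }\), it is easy to obtain, for any \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\), the explicit formula for the projection of x onto the dual cone \({\mathcal {L}}_{\theta }^{\ast}\) (denoted by \((x^{\theta })_{+}^{\ast}\)):

$$ \bigl(x^{\theta }\bigr)_{+}^{\ast}= \left \{ \begin{array}{ll} x, & \text{if } x\in {\mathcal {L}}_{\theta }^{\ast}={\mathcal {L}}_{\frac{\pi}{2}-\theta }, \\ 0, & \text{if } x\in-({\mathcal {L}}_{\theta }^{\ast})^{\ast}=-{\mathcal {L}}_{\theta }, \\ \omega, & \text{otherwise}, \end{array} \right . \quad \text{where } \omega= \begin{bmatrix} \frac{x_{1}+\|x_{2}\|\cot \theta }{1+\cot^{2} \theta } \\ (\frac{x_{1}+\|x_{2}\|\cot \theta }{1+\cot^{2} \theta } \cot \theta ) \frac{x_{2}}{\|x_{2}\|} \end{bmatrix}. $$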

2 Main results

In this section, we give the main results of this paper.

Theorem 2.1

For any \(x\in \mathbb {R}^{n}\), let \(x_{+}^{\theta }\) and \((x^{\theta })_{+}^{\ast}\) be the projections of x onto the circular cone \({\mathcal {L}}_{\theta }\) and its dual cone \({\mathcal {L}}_{\theta }^{\ast}\), respectively. Let A be the matrix defined as in (2). Then the following hold:

(a) If \(Ax\in {\mathcal {K}}^{n}\), then \((Ax)_{+}=Ax_{+}^{\theta }\).

(b) If \(Ax\in-{\mathcal {K}}^{n}\), then \((Ax)_{+}=A(x^{\theta })_{+}^{\ast}=0\).

(c) If \(Ax \notin {\mathcal {K}}^{n}\cup(-{\mathcal {K}}^{n})\), then \((Ax)_{+} = (\frac{1 + \tan^{2} \theta}{2} ) A^{-1} (x^{\theta })_{+}^{\ast}\), where \((x^{\theta })_{+}^{\ast}\) retains its expression only in the case of \(x\notin {\mathcal {L}}_{\theta }^{\ast}\cup(-{\mathcal {L}}_{\theta })\).
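Part (c) can be spot-checked numerically. The following sketch is not the paper's argument; it simply evaluates both sides of the identity from the closed-form "otherwise" branches of the projections onto \({\mathcal {K}}^{n}\) and \({\mathcal {L}}_{\theta }^{\ast}={\mathcal {L}}_{\frac{\pi}{2}-\theta }\), using \(Ax=(x_{1}\tan \theta ,x_{2})\). The function name `theorem_2_1c_sides` is a hypothetical helper introduced for illustration.

```python
import math

def theorem_2_1c_sides(x, theta):
    """Return (lhs, rhs) of Theorem 2.1(c): (Ax)_+ and ((1+tan^2)/2) A^{-1} (x^theta)_+^*."""
    t = math.tan(theta)
    c = 1.0 / t  # cot(theta)
    x1, x2 = x[0], x[1:]
    n2 = math.sqrt(sum(v * v for v in x2))
    # The identity is claimed only when Ax is outside K^n and -K^n,
    # and x is outside L_theta^* and -L_theta.
    if not (n2 > abs(x1) * t and n2 > x1 * c):
        raise ValueError("x is outside the region covered by Theorem 2.1(c)")
    w = [v / n2 for v in x2]
    # (Ax)_+ from the "otherwise" branch of Lemma 1.1(a) applied to Ax
    s = (x1 * t + n2) / 2.0
    lhs = [s] + [s * v for v in w]
    # projection of x onto the dual cone: "otherwise" branch with tan replaced by cot
    r = (x1 + n2 * c) / (1.0 + c * c)
    dual = [r] + [r * c * v for v in w]
    # apply A^{-1} = diag(cot(theta), I) and the scalar factor (1 + tan^2(theta)) / 2
    scale = (1.0 + t * t) / 2.0
    rhs = [scale * dual[0] * c] + [scale * v for v in dual[1:]]
    return lhs, rhs
```

For a point such as `x = [1.0, 3.0, 4.0]` with `theta = math.atan(0.5)`, the two returned vectors should agree up to rounding; points in \({\mathcal {L}}_{\theta }^{\ast}\) are rejected, in line with the restriction stated in part (c).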

Theorem 2.2

Let \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\) have the spectral decompositions with respect to the SOC and the circular cone given as in (3) and (5), respectively. Then the following hold:

(a) \(\operatorname{dist}(Ax, {\mathcal {K}}^{n})= \sqrt{\frac{1}{2}(x_{1} \tan \theta -\|x_{2}\|)_{-}^{2}+\frac{1}{2}(x_{1} \tan \theta +\|x_{2}\|)_{-}^{2}},\)

(b) \(\operatorname{dist}(x,{\mathcal {L}}_{\theta })=\sqrt{\frac{\cot^{2}\theta }{1+\cot^{2}\theta }(x_{1}\tan \theta -\|x_{2}\|)_{-}^{2}+ \frac{\tan^{2}\theta }{1+\tan^{2}\theta }(x_{1}\cot \theta +\|x_{2}\|)_{-}^{2}},\)

where \((a)_{-}=\min\{a,0\}\).
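The two distance formulas are straightforward to evaluate. The sketch below implements them as stated in Theorem 2.2; the function names `dist_soc_image` and `dist_circular` and the helper `neg_part` are hypothetical and chosen only for this illustration.

```python
import math

def neg_part(a):
    """(a)_- = min{a, 0}."""
    return min(a, 0.0)

def dist_soc_image(x, theta):
    """dist(Ax, K^n) according to Theorem 2.2(a), for x = (x1, x2)."""
    t = math.tan(theta)
    x1 = x[0]
    n2 = math.sqrt(sum(v * v for v in x[1:]))
    return math.sqrt(0.5 * neg_part(x1 * t - n2) ** 2
                     + 0.5 * neg_part(x1 * t + n2) ** 2)

def dist_circular(x, theta):
    """dist(x, L_theta) according to Theorem 2.2(b), for x = (x1, x2)."""
    t = math.tan(theta)
    c = 1.0 / t  # cot(theta)
    x1 = x[0]
    n2 = math.sqrt(sum(v * v for v in x[1:]))
    return math.sqrt(c * c / (1.0 + c * c) * neg_part(x1 * t - n2) ** 2
                     + t * t / (1.0 + t * t) * neg_part(x1 * c + n2) ** 2)
```

As a consistency check, for a point in \(-{\mathcal {L}}_{\theta }^{\ast}\), whose projection onto \({\mathcal {L}}_{\theta }\) is the origin, `dist_circular` should return \(\|x\|\); for a point in \({\mathcal {L}}_{\theta }\), both functions should return zero.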

Now, applying Theorem 2.2, we can obtain the relation on the distance formulas associated with the second-order cone and the circular cone. Note that when \(\theta =\frac{\pi}{4}\), we know \({\mathcal {L}}_{\theta }={\mathcal {K}}^{n}\) and \(Ax=x\). Thus, it is obvious that \(\operatorname{dist}(Ax, {\mathcal {K}}^{n}) = \operatorname{dist}(x,{\mathcal {L}}_{\theta })\). In the following theorem, we only consider the case \(\theta \neq\frac{\pi}{4}\).

Theorem 2.3

For any \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\), according to the expressions of the distance formulas \(\operatorname{dist}(Ax, {\mathcal {K}}^{n})\) and \(\operatorname{dist}(x, {\mathcal {L}}_{\theta })\), the following hold.

(a) For \(\theta \in(0,\frac{\pi}{4})\), we have

$$\operatorname{dist}\bigl(Ax, {\mathcal {K}}^{n}\bigr) \leq \operatorname{dist}(x,{\mathcal {L}}_{\theta }) \leq\cot \theta \cdot \operatorname{dist}\bigl(Ax, {\mathcal {K}}^{n}\bigr). $$

(b) For \(\theta \in(\frac{\pi}{4},\frac{\pi}{2})\), we have

$$\operatorname{dist}(x,{\mathcal {L}}_{\theta }) \leq \operatorname{dist}\bigl(Ax, {\mathcal {K}}^{n}\bigr) \leq\tan \theta \cdot \operatorname{dist}(x, {\mathcal {L}}_{\theta }). $$
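A randomized test can lend some empirical support to both parts of the theorem. The sketch below is only a sanity check, not a proof: it evaluates the distance formulas of Theorem 2.2 at random points and tests the two-sided inequalities up to a small floating-point tolerance. The names `dists` and `check_theorem_2_3`, the sampling box, and the tolerance are all assumptions made for this illustration.

```python
import math
import random

def dists(x, t):
    """Return (dist(Ax, K^n), dist(x, L_theta)) from Theorem 2.2, with t = tan(theta)."""
    x1 = x[0]
    n2 = math.sqrt(sum(v * v for v in x[1:]))
    a = min(x1 * t - n2, 0.0)
    b = min(x1 * t + n2, 0.0)
    c = min(x1 / t + n2, 0.0)
    d_soc = math.sqrt(0.5 * a * a + 0.5 * b * b)
    # cot^2 / (1 + cot^2) = 1 / (1 + tan^2)
    d_circ = math.sqrt(a * a / (1.0 + t * t) + t * t * c * c / (1.0 + t * t))
    return d_soc, d_circ

def check_theorem_2_3(theta, trials=2000, dim=4, seed=0, tol=1e-12):
    """Test the inequalities of Theorem 2.3 at random points of [-5, 5]^dim."""
    rng = random.Random(seed)
    t = math.tan(theta)
    for _ in range(trials):
        x = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
        d_soc, d_circ = dists(x, t)
        if theta < math.pi / 4:
            # part (a): dist(Ax, K) <= dist(x, L) <= cot(theta) * dist(Ax, K)
            ok = d_soc <= d_circ + tol and d_circ <= d_soc / t + tol
        else:
            # part (b): dist(x, L) <= dist(Ax, K) <= tan(theta) * dist(x, L)
            ok = d_circ <= d_soc + tol and d_soc <= t * d_circ + tol
        if not ok:
            return False
    return True
```

The upper bound is attained on the axis: for \(x=(x_{1},0)\) with \(x_{1}<0\), `dists` returns \((|x_{1}|\tan \theta , |x_{1}|)\), so \(\operatorname{dist}(Ax,{\mathcal {K}}^{n})=\tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })\).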

3 Proofs of main results

3.1 Proof of Theorem 2.1

(a) If \(Ax\in {\mathcal {K}}^{n}\), by the relationship (2) between the SOC and the circular cone, we have \(x\in {\mathcal {L}}_{\theta }\). Thus, it is easy to see that \((Ax)_{+}=Ax=Ax_{+}^{\theta }\).

(b) If \(Ax\in-{\mathcal {K}}^{n}\), we know that \(-Ax\in {\mathcal {K}}^{n}\), which implies \((Ax)_{+}=0\). Besides, combining with (2), we have \(-x\in {\mathcal {L}}_{\theta }\), which leads to \((x^{\theta })_{+}^{\ast}=0\). Hence, we have \((Ax)_{+}=A(x^{\theta })_{+}^{\ast}=0\).

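(c) If \(Ax \notin {\mathcal {K}}^{n}\cup(-{\mathcal {K}}^{n})\), from Lemma 1.1(a), we have

$$ (Ax)_{+}= \begin{bmatrix} \frac{x_{1}\tan \theta +\|x_{2}\|}{2} \\ \frac{x_{1}\tan \theta +\|x_{2}\|}{2} \frac{x_{2}}{\|x_{2}\|} \end{bmatrix} = \biggl(\frac{1+\tan^{2}\theta }{2} \biggr) A^{-1} \begin{bmatrix} \frac{x_{1}+\|x_{2}\|\cot \theta }{1+\cot^{2}\theta } \\ (\frac{x_{1}+\|x_{2}\|\cot \theta }{1+\cot^{2}\theta }\cot \theta ) \frac{x_{2}}{\|x_{2}\|} \end{bmatrix} = \biggl(\frac{1+\tan^{2}\theta }{2} \biggr) A^{-1} \bigl(x^{\theta }\bigr)_{+}^{\ast}, $$

where the last equality uses the expression of \((x^{\theta })_{+}^{\ast}\) in the case of \(x\notin {\mathcal {L}}_{\theta }^{\ast}\cup(-{\mathcal {L}}_{\theta })\).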

The proof is complete. □

Remark 3.1

Here, we comment further on part (c) of Theorem 2.1. Indeed, if \(Ax \notin {\mathcal {K}}^{n}\cup(-{\mathcal {K}}^{n})\), there are two cases for the element \(x\in \mathbb {R}^{n}\), i.e., \(x\notin {\mathcal {L}}_{\theta }^{\ast}\cup(-{\mathcal {L}}_{\theta })\) or \(x\in {\mathcal {L}}_{\theta }^{\ast}\). When \(x\notin {\mathcal {L}}_{\theta }^{\ast}\cup(-{\mathcal {L}}_{\theta })\), the relationship between \((Ax)_{+}\) and \((x^{\theta })_{+}^{\ast}\) is just as stated in Theorem 2.1(c), that is, \((Ax)_{+} = (\frac{1+\tan^{2} \theta}{2} ) A^{-1} (x^{\theta })_{+}^{\ast}\). However, when \(x\in {\mathcal {L}}_{\theta }^{\ast}\), we have \((x^{\theta })_{+}^{\ast}=x\), and in this case no such simple relationship between \((Ax)_{+}\) and \((x^{\theta })_{+}^{\ast}\) is available. Hence, the relation between \((Ax)_{+}\) and \((x^{\theta })_{+}^{\ast}\) in Theorem 2.1(c) is somewhat limited.

3.2 Proof of Theorem 2.2

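(a) For any \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\), from the spectral decomposition (3) with respect to the SOC, we have \(Ax=(x_{1} \tan \theta -\|x_{2}\|)u_{x}^{(1)}+(x_{1} \tan \theta +\|x_{2}\|)u_{x}^{(2)}\), where \(u_{x}^{(1)}\) and \(u_{x}^{(2)}\) are given as in (4). It follows from Lemma 1.1(a) that \((Ax)_{+}=(x_{1} \tan \theta -\|x_{2}\|)_{+}u_{x}^{(1)}+(x_{1} \tan \theta +\|x_{2}\|)_{+}u_{x}^{(2)}\). Since \(u_{x}^{(1)}\) and \(u_{x}^{(2)}\) are orthogonal with \(\|u_{x}^{(1)}\|^{2}=\|u_{x}^{(2)}\|^{2}=\frac{1}{2}\), we obtain the distance \(\operatorname {dist}(Ax, {\mathcal {K}}^{n})\):

$$ \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)=\bigl\| Ax-(Ax)_{+}\bigr\| =\sqrt{\frac{1}{2}\bigl(x_{1}\tan \theta -\|x_{2}\|\bigr)_{-}^{2} +\frac{1}{2}\bigl(x_{1}\tan \theta +\|x_{2}\|\bigr)_{-}^{2}}. $$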

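(b) For any \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\), from the spectral decomposition (5) with respect to the circular cone and Lemma 1.1(b), the same argument shows that

$$ \operatorname{dist}(x,{\mathcal {L}}_{\theta })=\bigl\| x-x_{+}^{\theta }\bigr\| =\sqrt{\frac{\cot^{2}\theta }{1+\cot^{2}\theta }\bigl(x_{1}\tan \theta -\|x_{2}\|\bigr)_{-}^{2} +\frac{\tan^{2}\theta }{1+\tan^{2}\theta }\bigl(x_{1}\cot \theta +\|x_{2}\|\bigr)_{-}^{2}}. $$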

□

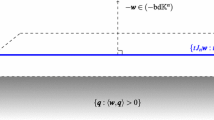

3.3 Proof of Theorem 2.3

(a) For \(\theta \in(0,\frac{\pi}{4})\), we have \(0<\tan \theta < 1 < \cot \theta \) and \({\mathcal {L}}_{\theta }\subset {\mathcal {K}}^{n} \subset {\mathcal {L}}_{\theta }^{\ast}\). We discuss three cases according to \(x\in {\mathcal {L}}_{\theta }\), \(x\in-{\mathcal {L}}_{\theta }^{\ast}\), and \(x\notin {\mathcal {L}}_{\theta }\cup(-{\mathcal {L}}_{\theta }^{\ast})\).

Case 1. If \(x\in {\mathcal {L}}_{\theta }\), then \(Ax\in {\mathcal {K}}^{n}\), which clearly yields \(\operatorname{dist}(Ax, {\mathcal {K}}^{n}) = \operatorname{dist}(x,{\mathcal {L}}_{\theta }) =0\).

Case 2. If \(x\in-{\mathcal {L}}_{\theta }^{\ast}\), then \(x_{1}\cot \theta \leq-\|x_{2}\|\) and

$$ \operatorname{dist}(x,{\mathcal {L}}_{\theta })=\|x\|=\sqrt{x_{1}^{2}+\|x_{2}\|^{2}}. $$

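In this case, there are two subcases for the element Ax. If \(x_{1}\cot \theta \leq x_{1}\tan \theta \leq-\|x_{2}\|\), i.e., \(Ax\in-{\mathcal {K}}^{n}\), it follows that

$$ \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)=\|Ax\|=\sqrt{x_{1}^{2}\tan^{2}\theta +\|x_{2}\|^{2}} \leq\sqrt{x_{1}^{2}+\|x_{2}\|^{2}} =\operatorname{dist}(x,{\mathcal {L}}_{\theta }) \leq\sqrt{x_{1}^{2}+\|x_{2}\|^{2}\cot^{2}\theta } =\cot \theta \cdot \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr), $$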

where the first inequality holds since \(\tan \theta < 1\) (it becomes an equality only in the case of \(x=0\)), and the second inequality holds since \(\cot \theta > 1\) (it becomes an equality only in the case of \(x_{2}=0\)). On the other hand, if \(x_{1}\cot \theta \leq-\|x_{2}\| < x_{1}\tan \theta \leq0\), we have

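where the third inequality holds because \(\|x_{2}\| \leq-x_{1}\cot \theta \), and the fourth inequality holds since \(\|x_{2}\| > -x_{1}\tan \theta \geq0\). Therefore, in both subcases of \(x \in-{\mathcal {L}}_{\theta }^{\ast}\), we can conclude that

$$ \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)\leq \operatorname{dist}(x,{\mathcal {L}}_{\theta })\leq\cot \theta \cdot \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr), $$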

and \(\operatorname{dist}(x,{\mathcal {L}}_{\theta }) = \cot \theta \cdot \operatorname{dist}(Ax,{\mathcal {K}}^{n})\) holds only in the case of \(x_{2}=0\).

Case 3. If \(x\notin {\mathcal {L}}_{\theta }\cup(-{\mathcal {L}}_{\theta }^{\ast})\), then \(-\|x_{2}\|\tan \theta < x_{1}<\|x_{2}\|\cot \theta \), which yields \(x_{1}\tan \theta <\|x_{2}\|\) and \(x_{1}\cot \theta >-\|x_{2}\|\). Thus, we have

On the other hand, it follows from \(-\|x_{2}\|\tan \theta < x_{1}<\|x_{2}\|\cot \theta \) and \(\theta \in(0,\frac{\pi}{4})\) that

This implies that

From this and \(\theta \in(0,\frac{\pi}{4})\), we see that

Therefore, in these cases of \(x\notin {\mathcal {L}}_{\theta }\cup(-{\mathcal {L}}_{\theta }^{\ast})\), we can conclude

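To sum up, from all the above and the fact that \(\max\{\cot \theta ,\sqrt{\frac{2}{1+\tan^{2}\theta }}\}=\cot \theta \) for \(\theta \in(0, \frac{\pi}{4})\), we obtain

$$ \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)\leq \operatorname{dist}(x,{\mathcal {L}}_{\theta })\leq\cot \theta \cdot \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr). $$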

(b) For \(\theta \in(\frac{\pi}{4},\frac{\pi}{2})\), we have \(0<\cot \theta < 1 < \tan \theta \) and \({\mathcal {L}}_{\theta }^{\ast}\subset {\mathcal {K}}^{n} \subset {\mathcal {L}}_{\theta }\). Again we discuss the following three cases.

Case 1. If \(x\in {\mathcal {L}}_{\theta }\), then \(Ax\in {\mathcal {K}}^{n}\), which implies that \(\operatorname{dist}(Ax,{\mathcal {K}}^{n})= \operatorname{dist}(x,{\mathcal {L}}_{\theta })=0\).

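Case 2. If \(x\in-{\mathcal {L}}_{\theta }^{\ast}\), then \(x_{1}\cot \theta \leq-\|x_{2}\|\) and

$$ \operatorname{dist}(x,{\mathcal {L}}_{\theta })=\|x\|=\sqrt{x_{1}^{2}+\|x_{2}\|^{2}}. $$

It follows from \(x_{1}\cot \theta \leq-\|x_{2}\|\) and \(\theta \in(\frac{\pi}{4},\frac{\pi}{2})\) that \(x_{1}\tan \theta \leq x_{1}\cot \theta \leq-\|x_{2}\|\), which leads to \(Ax\in-{\mathcal {K}}^{n}\). Hence, we have

$$ \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)=\|Ax\|=\sqrt{x_{1}^{2}\tan^{2}\theta +\|x_{2}\|^{2}}. $$

With this, and since \(\tan \theta >1\), it is easy to verify that \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) \geq \operatorname{dist}(x,{\mathcal {L}}_{\theta })\) for \(\theta \in(\frac{\pi }{4},\frac{\pi}{2})\). Moreover, we note that

$$ \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)=\sqrt{x_{1}^{2}\tan^{2}\theta +\|x_{2}\|^{2}} \leq\sqrt{x_{1}^{2}\tan^{2}\theta +\|x_{2}\|^{2}\tan^{2}\theta } =\tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta }). $$

Thus, it follows that

$$ \operatorname{dist}(x,{\mathcal {L}}_{\theta })\leq \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)\leq\tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta }), $$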

and \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) = \tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })\) holds only in the case of \(x_{2}=0\).

Case 3. If \(x\notin {\mathcal {L}}_{\theta }\cup(-{\mathcal {L}}_{\theta }^{\ast})\), then we have \(-\|x_{2}\|\tan \theta < x_{1}<\|x_{2}\|\cot \theta \) and

Since \(-\|x_{2}\|\tan \theta < x_{1}<\|x_{2}\|\cot \theta \), it follows immediately that \(-\|x_{2}\|\tan^{2} \theta < x_{1} \tan \theta <\|x_{2}\|\). Again, there are two subcases for the element Ax. If \(-\|x_{2}\|\tan^{2} \theta <-\|x_{2}\| <x_{1} \tan \theta <\|x_{2}\|\), then we have \(Ax \notin {\mathcal {K}}^{n} \cup(-{\mathcal {K}}^{n})\). Thus, it follows that

This together with \(\theta \in(\frac{\pi}{4},\frac{\pi}{2})\) yields

Moreover, by the expressions of \(\operatorname{dist}(Ax,{\mathcal {K}}^{n})\) and \(\operatorname{dist}(x,{\mathcal {L}}_{\theta })\), it is easy to verify

On the other hand, if \(-\|x_{2}\|\tan^{2} \theta < x_{1} \tan \theta \leq-\|x_{2}\|<\|x_{2}\|\), then we have \(Ax\in-{\mathcal {K}}^{n}\), which implies

Therefore, it follows that

Since

where the first inequality holds due to \(-\|x_{2}\|\tan^{2}\theta < x_{1}\tan \theta \), and the second inequality holds due to \(x_{1}\tan \theta <-\|x_{2}\|\) and \(\theta \in(\frac{\pi}{4},\frac{\pi}{2})\), we have

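From all the above analyses and the fact that \(\max\{\tan \theta , \sqrt{\frac{2}{1+\tan^{2}\theta }} \}=\tan \theta \) for \(\theta \in(\frac{\pi}{4},\frac{\pi}{2})\), we can conclude that

$$ \operatorname{dist}(x,{\mathcal {L}}_{\theta })\leq \operatorname{dist}\bigl(Ax,{\mathcal {K}}^{n}\bigr)\leq\tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta }). $$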

Thus, the proof is complete. □

Remark 3.2

We point out that Theorem 2.3 is equivalent to the results in [2], Theorem 2.2, that is, for any \(x, z \in \mathbb {R}^{n}\), we have

and

However, the above inequalities depend on the factors \(\|A\|\) and \(\| A^{-1}\|\). Here, we provide a more concrete and simpler expression for the inequality. The benefit of the new expression is that the new approach identifies when equality holds, which is helpful for further study of the relationship between second-order cone programming problems and circular cone programming problems. In particular, from the proof of Theorem 2.3, it is clear that \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) = \tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })\) holds only in the cases of \(x=(x_{1},x_{2})\in {\mathcal {L}}_{\theta }\) or \(x_{2}=0\); otherwise we have the strict inequality \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) < \tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })\). In contrast, it takes tedious algebraic manipulations to identify these cases by using (7) and (8).

The following example illustrates why \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) = \tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })\) holds only in the cases of \(x=(x_{1},x_{2})\in {\mathcal {L}}_{\theta }\) or \(x_{2}=0\).

Example 3.1

Let \(x=(x_{1},x_{2})\in \mathbb {R}\times \mathbb {R}^{n-1}\) and

When \(x\in {\mathcal {L}}_{\theta }\), we have \(Ax\in {\mathcal {K}}^{n}\), so clearly \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) = \tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })=0\). When \(x_{2}=0\), i.e., \(x=(x_{1},0)\in \mathbb {R}\times \mathbb {R}^{n-1}\), it follows that \(Ax=(x_{1} \tan \theta , 0)\). If \(x_{1} \geq0\), we have \(x\in {\mathcal {L}}_{\theta }\) and \(Ax\in {\mathcal {K}}^{n}\), which implies that \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) = \tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })=0\). If \(x_{1} <0\), we see that \(x\in-{\mathcal {L}}_{\theta }^{\ast}\) and \(Ax\in-{\mathcal {K}}^{n}\), so that \(\operatorname{dist}(x,{\mathcal {L}}_{\theta })=\|x\|=|x_{1}|\) and hence \(\operatorname{dist}(Ax,{\mathcal {K}}^{n}) = \|Ax\|=|x_{1}|\tan \theta =\tan \theta \cdot \operatorname{dist}(x,{\mathcal {L}}_{\theta })\).

References

1. Pinto Da Costa, A, Seeger, A: Numerical resolution of cone-constrained eigenvalue problems. Comput. Appl. Math. 28, 37-61 (2009)

2. Zhou, JC, Chen, JS: Properties of circular cone and spectral factorization associated with circular cone. J. Nonlinear Convex Anal. 14, 807-816 (2013)

3. Chen, JS, Chen, X, Tseng, P: Analysis of nonsmooth vector-valued functions associated with second-order cones. Math. Program. 101, 95-117 (2004)

4. Facchinei, F, Pang, J: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer, New York (2003)

5. Faraut, J, Korányi, A: Analysis on Symmetric Cones. Oxford Mathematical Monographs. Oxford University Press, New York (1994)

6. Nesterov, Y: Towards nonsymmetric conic optimization. Discussion paper 2006/28, Center for Operations Research and Econometrics (2006)

7. Zhou, JC, Chen, JS, Hung, HF: Circular cone convexity and some inequalities associated with circular cones. J. Inequal. Appl. 2013, Article ID 571 (2013)

8. Miao, XH, Guo, SJ, Qi, N, Chen, JS: Constructions of complementarity functions and merit functions for circular cone complementarity problem. Comput. Optim. Appl. 63, 495-522 (2016)

9. Chen, JS: A new merit function and its related properties for the second-order cone complementarity problem. Pac. J. Optim. 2, 167-179 (2006)

10. Fukushima, M, Luo, ZQ, Tseng, P: Smoothing functions for second-order cone complementarity problems. SIAM J. Optim. 12, 436-460 (2001)

11. Hayashi, S, Yamashita, N, Fukushima, M: A combined smoothing and regularization method for monotone second-order cone complementarity problems. SIAM J. Optim. 15, 593-615 (2005)

12. Kong, LC, Sun, J, Xiu, NH: A regularized smoothing Newton method for symmetric cone complementarity problems. SIAM J. Optim. 19, 1028-1047 (2008)

13. Zhang, Y, Huang, ZH: A nonmonotone smoothing-type algorithm for solving a system of equalities and inequalities. J. Comput. Appl. Math. 233, 2312-2321 (2010)

14. Jeyakumar, V, Yang, XQ: Characterizing the solution sets of pseudolinear programs. J. Optim. Theory Appl. 87, 747-755 (1995)

15. Mangasarian, OL: A simple characterization of solution sets of convex programs. Oper. Res. Lett. 7, 21-26 (1988)

16. Miao, XH, Chen, JS: Characterizations of solution sets of cone-constrained convex programming problems. Optim. Lett. 9, 1433-1445 (2015)

Acknowledgements

We would like to thank the editor and the anonymous referees for their careful reading and constructive comments which have helped us to significantly improve the presentation of the paper. The first author’s work is supported by National Natural Science Foundation of China (No. 11471241). The third author’s work is supported by Ministry of Science and Technology, Taiwan.

Additional information

Competing interests

The authors declare that none of the authors have any competing interests in the manuscript.

Authors’ contributions

All authors participated in its design and coordination and helped to draft the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Miao, XH., Lin, Yc.R. & Chen, JS. An alternative approach for a distance inequality associated with the second-order cone and the circular cone. J Inequal Appl 2016, 291 (2016). https://doi.org/10.1186/s13660-016-1243-5

DOI: https://doi.org/10.1186/s13660-016-1243-5