Abstract

We consider two nonlinear matrix equations \(X^{r} \pm \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} = I\), where \(- 1 < \delta_{i} < 0\), and r, m are positive integers. For the first equation (plus case), we prove the existence of positive definite solutions and of extremal solutions. We construct two algorithms and prove their convergence to the extremal positive definite solutions. For the second equation (minus case), we prove the existence and uniqueness of a positive definite solution. Moreover, we prove that the algorithm given in (Duan et al. in Linear Algebra Appl. 429:110-121, 2008) (originally given in (Shi et al. in Linear Multilinear Algebra 52:1-15, 2004)) for \(r = 1\) remains valid for any r. Numerical examples illustrate the performance and effectiveness of all the constructed algorithms. In the Appendix, we analyze the ordering on the positive cone \(\overline{P(n)}\).


1 Introduction

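Consider the two nonlinear matrix equations

\[ X^{r} + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} = I \tag{1.1} \]

and

\[ X^{r} - \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} = I, \tag{1.2} \]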

where \(A_{i}\) are \(n \times n\) nonsingular matrices, \(- 1 < \delta_{i} < 0\), I is the \(n \times n\) identity matrix, r and m are positive integers, and X is an \(n \times n\) unknown matrix to be determined (\(A_{i}^{*}\) stands for the conjugate transpose of \(A_{i}\)). The existence and uniqueness, the rate of convergence, and necessary and sufficient conditions for the existence of positive definite solutions of similar nonlinear matrix equations have been studied by several authors [1–15]. El-Sayed [16] considered the matrix equation \(X + A^{*}F(X)A = Q\) with a positive definite matrix Q and showed that, under some conditions, an iteration method converges to the positive definite solution. Dehghan and Hajarian [17] constructed an iterative algorithm to solve the generalized coupled Sylvester matrix equations \((AY - ZB,CY - ZD) = (E,F)\) over reflexive matrices Y, Z. They also obtained the optimal approximation reflexive solution pair to a given matrix pair \([Y, Z]\) in the reflexive solution pair set of these equations.

Dehghan and Hajarian [18] extended the idea of the conjugate gradient (CG) method to construct an iterative method for solving the general coupled matrix equations \(\sum_{j = 1}^{p} A_{ij}X_{j}B_{ij} = M_{i}\), \(i = 1,2, \ldots,p\) (which include the generalized (coupled) Lyapunov and Sylvester matrix equations as particular cases) over generalized bisymmetric matrix groups \((X_{1},X_{2}, \ldots,X_{p})\). Their method determines the solvability of these equations over generalized bisymmetric matrix groups in the absence of roundoff errors. In addition, they obtained the optimal approximation generalized bisymmetric solution group to a given matrix group in the Frobenius norm by finding the least Frobenius norm generalized bisymmetric solution group of new general coupled matrix equations.

Hajarian [19] derived a simple and efficient matrix algorithm to solve the general coupled matrix equations \(\sum_{j = 1}^{p} A_{ij}X_{j}B_{ij} = C_{i}\), \(i = 1,2, \ldots,p\) (including several linear matrix equations as particular cases) based on the conjugate gradients squared (CGS) method.

Hajarian [20] extended the conjugate gradient squared (CGS) and biconjugate gradient stabilized (Bi-CGSTAB) methods to obtain matrix iterative methods for solving the Sylvester-transpose matrix equation \(\sum_{i = 1}^{k} (A_{i}XB_{i} + C_{i}X^{T}D_{i}) = E\) and the periodic Sylvester matrix equation for \(j = 1,2, \ldots,\lambda\).

Shi [2] considered the matrix equation

and Duan [1] considered the matrix equation

and Duan [6] considered the matrix equation

The main results of [2] are the following:

-

(a1)

The proof of the uniqueness of a positive definite solution of Equation (1.3).

-

(a2)

An algorithm for obtaining that solution with an original proof of convergence.

The main results of [1] are the following:

-

(b1)

The uniqueness of a positive definite solution of Equation (1.4), depending on Lemma 2.3.

-

(b2)

The algorithm of [2] is used, with a slightly modified version of the same proof.

In this paper, we show that the proof given in [1] can be deduced directly from [2]. In [6], the authors proved that Equation (1.5) always has a unique Hermitian positive definite solution for every fixed r. We emphasize that Equations (1.1) and (1.2) are a nontrivial generalization of the corresponding problems with \(r = 1\). In fact, each of Equations (1.1) and (1.2) corresponds to infinitely many problems of the \(r = 1\) type, with nested intervals for the exponents, via the following one-to-one transformation: putting \(Y = X^{r}\), we have \(X^{\delta_{i}} = Y^{\delta_{i}/r}\), so Equations (1.1) and (1.2) become \(Y \pm \sum_{i = 1}^{m} A_{i}^{*}Y^{\delta_{i}/r}A_{i} = I\) with \(- \frac{1}{r} < \frac{\delta_{i}}{r} < 0\) for \(r = 1,2,\ldots\). Our results show that some properties are invariant for all the corresponding problems, such as the existence of positive definite and extremal solutions of Equation (1.1) and the uniqueness of the solution of Equation (1.2). On the other hand, the set of solutions of Equation (1.1) differs from one corresponding problem to another. Furthermore, in our paper [21], we obtained a sufficient condition for Equation (1.1) to have a unique solution. Since this condition depends on r, uniqueness may hold for some of the corresponding problems and fail for others.
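For readers who want the substitution spelled out: for a Hermitian positive definite X, real matrix powers act on the eigenvalues through the spectral decomposition, so the change of exponent is immediate. The symbols U and \(\lambda_{j}\) below are our own notation for the eigendecomposition.

```latex
\begin{aligned}
X &= U\,\operatorname{diag}(\lambda_{1},\ldots,\lambda_{n})\,U^{*}, \qquad \lambda_{j} > 0,\quad U^{*}U = I,\\
Y = X^{r} &= U\,\operatorname{diag}(\lambda_{1}^{r},\ldots,\lambda_{n}^{r})\,U^{*}, \qquad X = Y^{\frac{1}{r}},\\
X^{\delta_{i}} &= U\,\operatorname{diag}\bigl((\lambda_{1}^{r})^{\frac{\delta_{i}}{r}},\ldots,(\lambda_{n}^{r})^{\frac{\delta_{i}}{r}}\bigr)\,U^{*} = Y^{\frac{\delta_{i}}{r}},\\
-1 < \delta_{i} < 0 \ &\Longrightarrow\ -\tfrac{1}{r} < \tfrac{\delta_{i}}{r} < 0 .
\end{aligned}
```

Since the positive definite r-th root of a positive definite matrix is unique, the map \(X \mapsto X^{r}\) is one-to-one on \(P(n)\), with inverse \(Y \mapsto Y^{1/r}\).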

This paper is organized as follows. In Section 2, we introduce some notation, definitions, lemmas, and theorems that will be needed in this work. In Section 3, we prove the existence of positive definite solutions of Equation (1.1), which is of a more general form than other existing ones, together with the existence of extremal (maximal and minimal) solutions. In Section 4, we propose two algorithms for obtaining the extremal positive definite solutions of Equation (1.1); both are iterative and avoid computing matrix inverses. In Section 5, some numerical examples illustrate the performance and effectiveness of the algorithms. In Section 6, we prove the existence and uniqueness of a positive definite solution of Equation (1.2) and adapt the algorithm in [1] to solve this equation. In the Appendix, we analyze the ordering defined on the positive cone \(\overline{P(n)}\) and show that it is total. This result shows that problem (1.1) has one maximal and one minimal solution. We also explain the effect of the value of α on the number of iterations of the algorithms, shown in Tables 1 and 2.

2 Preliminaries

The following notation, definitions, lemmas, and theorems will be used herein:

-

1.

For \(A,B \in \mathbb{C}^{n \times n}\), we write \(A > 0\) (\(A \ge 0\)) if the matrix A is Hermitian positive definite (HPD) (Hermitian positive semidefinite). If \(A - B > 0\) (\(A - B \ge 0\)), then we write \(A > B\) (\(A \ge B\)).

-

2.

If a Hermitian positive definite matrix X satisfies \(A \le X \le B\), then we write \(X \in [A,B]\).

-

3.

By a solution we mean a Hermitian positive definite solution.

-

4.

\(X_{L}\) and \(X_{S}\) denote the maximal and minimal solutions of Equation (1.1), when they exist; that is, \(X_{S} \le X \le X_{L}\) for every solution X.

-

5.

\(P(n)\) denotes the set of all \(n \times n\) positive definite matrices.

-

6.

Let E be a real Banach space. A nonempty convex closed set \(P \subset E\) is called a cone if:

-

(i)

\(x \in P\), \(\lambda \ge 0\) implies \(\lambda x \in P\).

-

(ii)

\(x \in P\), \(- x \in P\) implies \(x = \theta\), where θ denotes the zero element.

We denote the set of interior points of P by \(P^{o}\). A cone is said to be solid if \(P^{o} \ne \emptyset\). Each cone P in E defines a partial ordering in E given by \(x \le y\) if and only if \(y - x \in P\).

In this paper, we consider P to be the cone of \(n \times n\) positive semidefinite matrices, denoted \(\overline{P(n)}\); its interior is the set of \(n \times n\) positive definite matrices \(P(n)\).

Definition 2.1

([1])

A cone \(P \subset E\) is said to be normal if there exists a constant \(M > 0\) such that \(\theta \le x \le y\) implies \(\Vert x \Vert \le M\Vert y \Vert \).

Definition 2.2

([1])

Let P be a solid cone of a real Banach space E, and \(\Gamma:P^{o} \to P^{o}\). Let \(0 \le a < 1\). Then Γ is said to be a-concave if \(\Gamma (tx) \ge t^{a}\Gamma (x)\) \(\forall x \in P^{o}\), \(0 < t < 1\).

Similarly, Γ is said to be \(( - a)\)-convex if \(\Gamma (tx) \le t^{ - a}\Gamma (x)\) \(\forall x \in P^{o}\), \(0 < t < 1\).

Lemma 2.3

([1])

Let P be a normal cone in a real Banach space E, and let \(\Gamma:P^{o} \to P^{o}\) be a-concave and increasing (or \(( - a)\)-convex and decreasing) for an \(a \in [0,1)\). Then Γ has exactly one fixed point x in \(P^{o}\).

Lemma 2.4

([4])

If \(A \ge B > 0\) (or \(A > B > 0\)), then \(A^{\gamma} \ge B^{\gamma} > 0\) (or \(A^{\gamma} > B^{\gamma} > 0\)) for all \(\gamma \in (0,1]\), and \(B^{\gamma} \ge A^{\gamma} > 0\) (or \(B^{\gamma} > A^{\gamma} > 0\)) for all \(\gamma \in [ - 1,0)\).
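A quick numerical illustration of Lemma 2.4 (not a proof). The helper `hpd_power` (a hypothetical name, not from the paper) computes real powers of an HPD matrix from its eigendecomposition, which is one way to evaluate the powers \(X^{\delta_{i}}\) used throughout; `loewner_min_eig` returns the smallest eigenvalue of \(A - B\), so a nonnegative value means \(A \ge B\). The random test matrices are arbitrary choices.

```python
import numpy as np

def hpd_power(X: np.ndarray, p: float) -> np.ndarray:
    """Real power X**p of a Hermitian positive definite matrix via eigh."""
    w, U = np.linalg.eigh((X + X.conj().T) / 2)
    if w.min() <= 0:
        raise ValueError("matrix is not positive definite")
    return (U * w**p) @ U.conj().T   # U diag(w^p) U^*

def loewner_min_eig(A: np.ndarray, B: np.ndarray) -> float:
    """Smallest eigenvalue of the Hermitian part of A - B; >= 0 means A >= B."""
    D = A - B
    return float(np.linalg.eigvalsh((D + D.conj().T) / 2).min())

rng = np.random.default_rng(0)
M = rng.standard_normal((4, 4))
B = M @ M.T + 0.5 * np.eye(4)     # B > 0
N = rng.standard_normal((4, 4))
A = B + N @ N.T                   # A >= B > 0

for gamma in (0.25, 0.5, 1.0):    # Lemma 2.4: A^gamma >= B^gamma for gamma in (0, 1]
    print(gamma, loewner_min_eig(hpd_power(A, gamma), hpd_power(B, gamma)))
for gamma in (-1.0, -0.5):        # Lemma 2.4: B^gamma >= A^gamma for gamma in [-1, 0)
    print(gamma, loewner_min_eig(hpd_power(B, gamma), hpd_power(A, gamma)))
```

The exponent range matters: \(t \mapsto t^{2}\) is not operator monotone, so the analogous check with \(\gamma = 2\) can fail for suitable \(A \ge B\).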

Definition 2.5

([22])

A function f is said to be matrix monotone of order n if it is monotone with respect to the ordering of item 1 on \(n \times n\) Hermitian matrices, that is, if \(A \le B\) implies \(f(A) \le f(B)\). If f is matrix monotone of order n for all n, then we say that f is matrix monotone or operator monotone.

Theorem 2.6

([22])

Every operator monotone function f on an interval I is continuously differentiable.

Definition 2.7

([1])

Let \(D \subset E\). An operator \(f:D \to E\) is said to be an increasing operator if \(y_{1} \ge y_{2}\) implies \(f(y_{1}) \ge f(y_{2})\), where \(y_{1},y_{2} \in D\). Similarly, f is said to be a decreasing operator if \(y_{1} \ge y_{2}\) implies \(f(y_{1}) \le f(y_{2})\), where \(y_{1},y_{2} \in D\).

Theorem 2.8

(Brouwer’s Fixed Point, [23])

Every continuous map of a closed bounded convex set in \(R^{n}\) into itself has a fixed point.

3 On the existence of positive definite solutions of \(X^{r}+\sum_{i=1}^{m}A_{i}^{*}X^{\delta_{i}}A_{i}=I\)

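The map F associated with Equation (1.1) is defined by

\[ F(X) = \Bigl(I - \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i}\Bigr)^{\frac{1}{r}}. \tag{3.1} \]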

Theorem 3.1

The mapping F defined by (3.1) is operator monotone.

Proof

Suppose \(X_{1} \ge X_{2} > 0\). Then, \(F(X_{1}) = (I - \sum_{i = 1}^{m} A_{i}^{*}X_{1}^{\delta_{i}}A_{i} )^{\frac{1}{r}}\) and \(F(X_{2}) = (I - \sum_{i = 1}^{m} A_{i}^{*} X_{2}^{\delta_{i}}A_{i} )^{\frac{1}{r}}\).

Since \(X_{1} \ge X_{2}\) and \(- 1 < \delta_{i} < 0\), Lemma 2.4 gives \(X_{1}^{\delta_{i}} \le X_{2}^{\delta_{i}}\) for all \(i = 1,2,\ldots,m\).

Then \(A_{i}^{*}X_{1}^{\delta_{i}}A_{i} \le A_{i}^{*}X_{2}^{\delta_{i}}A_{i}\) and \(\sum_{i = 1}^{m} A_{i}^{*}X_{1}^{\delta_{i}}A_{i} \le \sum_{i = 1}^{m} A_{i}^{*}X_{2}^{\delta_{i}}A_{i}\).

Therefore, \(I - \sum_{i = 1}^{m} A_{i}^{*}X_{1}^{\delta_{i}}A_{i} \ge I - \sum_{i = 1}^{m} A_{i}^{*}X_{2}^{\delta_{i}}A_{i}\), and since r is a positive integer, \(0 < \frac{1}{r} \le 1\).

Hence, by Lemma 2.4 with \(\gamma = \frac{1}{r}\), \((I - \sum_{i = 1}^{m} A_{i}^{*}X_{1}^{\delta_{i}}A_{i} )^{\frac{1}{r}} \ge (I - \sum_{i = 1}^{m} A_{i}^{*}X_{2}^{\delta_{i}}A_{i} )^{\frac{1}{r}}\), that is, \(F(X_{1}) \ge F(X_{2})\). Thus, F is operator monotone. □
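Theorem 3.1 can be checked numerically on a small instance. The sketch below evaluates the map (3.1) with NumPy; the names `hpd_power` and `F_map`, the dimensions, and the random matrices are our own illustrative assumptions. We scale each \(A_{i}\) to spectral norm 0.2 and keep the eigenvalues of X at least 0.6, which keeps \(I - \sum_{i} A_{i}^{*}X^{\delta_{i}}A_{i}\) positive definite so that the \(\frac{1}{r}\)-th power is defined.

```python
import numpy as np

def hpd_power(X, p):
    """Real power of a Hermitian positive definite matrix via eigh."""
    w, U = np.linalg.eigh((X + X.conj().T) / 2)
    if w.min() <= 0:
        raise ValueError("matrix is not positive definite")
    return (U * w**p) @ U.conj().T

def F_map(X, A_list, deltas, r):
    """Map (3.1): F(X) = (I - sum_i A_i^* X^{delta_i} A_i)^(1/r)."""
    S = sum(A.conj().T @ hpd_power(X, d) @ A for A, d in zip(A_list, deltas))
    return hpd_power(np.eye(X.shape[0]) - S, 1.0 / r)

rng = np.random.default_rng(1)
n, r, deltas = 3, 3, [-0.5, -1.0 / 3.0]
A_list = []
for _ in deltas:
    Z = rng.standard_normal((n, n))
    A_list.append(0.2 * Z / np.linalg.norm(Z, 2))        # spectral norm 0.2

M = rng.standard_normal((n, n))
P = M @ M.T
X2 = 0.6 * np.eye(n) + 0.2 * P / np.linalg.norm(P, 2)    # eigenvalues in [0.6, 0.8]
N = rng.standard_normal((n, n))
Q = N @ N.T
X1 = X2 + 0.3 * Q / np.linalg.norm(Q, 2)                 # X1 >= X2 > 0

D = F_map(X1, A_list, deltas, r) - F_map(X2, A_list, deltas, r)
print(np.linalg.eigvalsh(D).min())   # Theorem 3.1 predicts a value >= 0 (up to rounding)
```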

The following theorem proves the existence of positive definite solutions for Equation (1.1), based on the Brouwer fixed point theorem.

Theorem 3.2

If a real number \(\beta \in (0,1)\) satisfies \((1 - \beta^{r})I \ge \sum_{i = 1}^{m} \beta^{\delta_{i}}A_{i}^{*}A_{i}\), then Equation (1.1) has positive definite solutions.

Proof

The condition of the theorem implies \(\beta I \le (I - \sum_{i = 1}^{m} A_{i}^{*}A_{i} )^{\frac{1}{r}}\): since \(0 < \beta < 1\) and \(\delta_{i} < 0\), we have \(\beta^{\delta_{i}} > 1\), so \(\sum_{i = 1}^{m} A_{i}^{*}A_{i} \le \sum_{i = 1}^{m} \beta^{\delta_{i}}A_{i}^{*}A_{i} \le (1 - \beta^{r})I\), that is, \(\beta^{r}I \le I - \sum_{i = 1}^{m} A_{i}^{*}A_{i}\), and Lemma 2.4 with \(\gamma = \frac{1}{r}\) gives the claim. Let \(D_{1} = [\beta I,(I - \sum_{i = 1}^{m} A_{i}^{*}A_{i})^{\frac{1}{r}} ]\). It is clear that \(D_{1}\) is closed, bounded, and convex. To show that \(F:D_{1} \to D_{1}\), let \(X \in D_{1}\). Then \(\beta I \le X\), and thus \(\beta^{\delta_{i}}I \ge X^{\delta_{i}}\) since \(- 1 < \delta_{i} < 0\). Therefore, \((I - \sum_{i = 1}^{m} \beta^{\delta_{i}}A_{i}^{*}A_{i} )^{\frac{1}{r}} \le (I - \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} )^{\frac{1}{r}}\). By the choice of β, \((I - \sum_{i = 1}^{m} \beta^{\delta_{i}}A_{i}^{*}A_{i} )^{\frac{1}{r}} \ge \beta I\), and thus \(\beta I \le (I - \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} )^{\frac{1}{r}} = F(X)\), that is,

\[ \beta I \le F(X). \tag{3.2} \]

Moreover, \(X \le (I - \sum_{i = 1}^{m} A_{i}^{*}A_{i})^{\frac{1}{r}} \le I\). Then \(X^{\delta_{i}} \ge I\) and \((I - \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} )^{\frac{1}{r}} \le (I - \sum_{i = 1}^{m} A_{i}^{*}A_{i} )^{\frac{1}{r}}\), that is,

\[ F(X) \le \Bigl(I - \sum_{i = 1}^{m} A_{i}^{*}A_{i}\Bigr)^{\frac{1}{r}}. \tag{3.3} \]

From (3.2) and (3.3) we get \(F(X) \in D_{1}\); therefore, \(F:D_{1} \to D_{1}\). By Theorems 2.6 and 3.1, F is continuous. Therefore, by Brouwer's fixed point theorem (Theorem 2.8), F has a fixed point in \(D_{1}\), which is a solution of Equation (1.1). □

The following remark, together with Examples 5.1 and 5.2 in Section 5, illustrates this theorem.

Remark

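Let us consider the simple case \(r = m = n = 1\), \(\delta = - \frac{1}{2}\), so that Equation (1.1) reads \(x + a^{2}x^{-\frac{1}{2}} = 1\), where \(a^{2}\) stands for \(A^{*}A\). The condition of Theorem 3.2 becomes \(a^{2} \le \beta^{\frac{1}{2}}(1 - \beta)\), and the right-hand side attains its maximum \(\frac{2\sqrt{3}}{9}\) at \(\beta = \frac{1}{3}\). Hence β does not exist for \(a^{2} > \frac{2\sqrt{3}}{9}\), and no solution exists either, since every solution satisfies \(a^{2} = x^{\frac{1}{2}}(1 - x) \le \frac{2\sqrt{3}}{9}\). For \(a^{2} \le \frac{2\sqrt{3}}{9}\), β and solutions exist (i.e., the theorem holds). For instance, for \(a^{2} = \frac{2\sqrt{3}}{9}\), we may take \(\beta = \frac{1}{3}\), and \(x = \frac{1}{3}\) is a solution.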
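The hypothesis of Theorem 3.2 can be tested for a given β by checking the smallest eigenvalue of \((1 - \beta^{r})I - \sum_{i} \beta^{\delta_{i}}A_{i}^{*}A_{i}\). The sketch below does this (the function name `beta_condition_gap` is hypothetical) and reproduces the numbers of the remark: on a grid of β values the best gap is attained near \(\beta = \frac{1}{3}\) and is essentially zero when \(a^{2} = \frac{2\sqrt{3}}{9}\). The grid search is only an illustration, not a method from the paper.

```python
import numpy as np

def beta_condition_gap(A_list, deltas, r, beta):
    """Smallest eigenvalue of (1 - beta^r) I - sum_i beta^{delta_i} A_i^* A_i.

    The hypothesis of Theorem 3.2 holds for this beta exactly when the value is >= 0."""
    n = A_list[0].shape[0]
    M = (1.0 - beta**r) * np.eye(n)
    for A, d in zip(A_list, deltas):
        M = M - beta**d * (A.conj().T @ A)
    return float(np.linalg.eigvalsh(M).min())

# Data of the remark: r = m = n = 1, delta = -1/2 and a^2 = 2*sqrt(3)/9.
a2 = 2 * np.sqrt(3) / 9
A_list, deltas, r = [np.array([[np.sqrt(a2)]])], [-0.5], 1
grid = np.linspace(0.01, 0.99, 9801)          # step 1e-4
gaps = [beta_condition_gap(A_list, deltas, r, b) for b in grid]
b_best = float(grid[int(np.argmax(gaps))])
print(b_best, max(gaps))          # best beta close to 1/3, best gap close to 0
x = 1.0 / 3.0
print(x + a2 * x**-0.5 - 1.0)     # x = 1/3 solves x + a^2 x^{-1/2} = 1 up to rounding
```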

Theorem 3.3

The mapping F has the maximal and the minimal elements in \(D_{1} = [\beta I,(I - \sum_{i = 1}^{m} A_{i}^{*}A_{i})^{\frac{1}{r}} ]\), where β is given in Theorem 3.2.

Proof

By Theorems 2.6 and 3.1, the mapping F is continuous, and it is bounded above since \(F(X) < I\). Let \(\sup_{X \in D_{1}}F(X) = Y\). Then for every \(\varepsilon > 0\) there exists an \(\hat{X}\) in \(D_{1}\) satisfying \(Y - \varepsilon I < F(\hat{X}) \le Y\). Hence we can choose a sequence \(\{ X_{n} \}\) in \(D_{1}\) satisfying \(Y - (\frac{1}{n})I < F(X_{n}) \le Y\). Since \(D_{1}\) is compact, the sequence \(\{ X_{n} \}\) has a subsequence \(\{ X_{n_{k}} \}\) convergent to \(\overline{X} \in D_{1}\), and \(Y - (\frac{1}{n_{k}})I < F(X_{n_{k}}) \le Y\). Taking the limit as \(k \to \infty\), by the continuity of F we get \(\lim_{k \to \infty} F(X_{n_{k}}) = F(\overline{X}) = Y = \max \{ F(X):X \in D_{1} \}\). Hence, F has a maximal element \(\overline{X} \in D_{1}\).

Similarly, we can prove that F has a minimal element in \(D_{1}\), noting that F is bounded below by the zero matrix. □

4 Two algorithms for obtaining extremal positive definite solution of \(X^{r}+\sum_{i=1}^{m}A_{i}^{*}X^{\delta_{i}}A_{i}=I\)

In this section, we present two algorithms for obtaining the extremal positive definite solutions of Equation (1.1). The main idea of the algorithms is to avoid computing the inverses of matrices.
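The inverse-free ingredient is the update \(Y_{k+1} = Y_{k}[2I - X_{k}Y_{k}^{-\frac{1}{\delta}}]\) that appears in the proofs of Theorems 4.2 and 4.4. For a negative integer δ the exponent \(-\frac{1}{\delta}\) lies in \((0,1]\), so only a positive fractional power is needed, and a fixed point satisfies \(XY^{-\frac{1}{\delta}} = I\), that is, \(Y = X^{\delta}\). The sketch below runs this update alone for a fixed HPD matrix X; the function names and test matrix are our own assumptions, and the reference value is computed with an eigendecomposition purely for comparison.

```python
import numpy as np

def hpd_power(X, p):
    """Real power of a Hermitian positive definite matrix via eigh."""
    w, U = np.linalg.eigh((X + X.conj().T) / 2)
    if w.min() <= 0:
        raise ValueError("matrix is not positive definite")
    return (U * w**p) @ U.conj().T

def inverse_free_power(X, delta, alpha, iters=200):
    """Approximate X^delta (delta a negative integer) by Y <- Y [2I - X Y^{-1/delta}].

    Starts from Y0 = alpha^delta I. Only the positive power -1/delta of Y is used,
    never an inverse. A fixed point satisfies X Y^{-1/delta} = I, i.e. Y = X^delta."""
    n = X.shape[0]
    I = np.eye(n)
    Y = alpha**delta * I
    for _ in range(iters):
        Y = Y @ (2 * I - X @ hpd_power(Y, -1.0 / delta))
        Y = (Y + Y.conj().T) / 2   # iterates are functions of X, so this only removes rounding
    return Y

Q, _ = np.linalg.qr(np.random.default_rng(2).standard_normal((3, 3)))
X = Q @ np.diag([0.6, 0.7, 0.8]) @ Q.T
for delta in (-1, -2, -3):
    err = np.abs(inverse_free_power(X, delta, alpha=1.5) - hpd_power(X, float(delta))).max()
    print(delta, err)
```

In the scalar case one can check that the rate at the fixed point is \(1 + \frac{1}{\delta}\): quadratic for \(\delta = -1\) (the classical Newton–Schulz inverse iteration) and linear with factor \(\frac{1}{2}\) for \(\delta = -2\). Here α = 1.5 exceeds the eigenvalues of X, which keeps each eigenvalue iteration monotone.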

Algorithm 4.1

(INVERSE-FREE Algorithm)

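Consider the iterative algorithm

\[ \begin{cases} X_{0} = \alpha I, \quad Y_{0} = \alpha^{\delta} I, \quad \alpha > 1,\\ X_{k + 1} = \Bigl(I - \sum_{i = 1}^{m} A_{i}^{*}Y_{k}^{\frac{\delta_{i}}{\delta}}A_{i}\Bigr)^{\frac{1}{r}},\\ Y_{k + 1} = Y_{k}\bigl[2I - X_{k}Y_{k}^{ - \frac{1}{\delta}}\bigr], \quad k = 0,1,2,\ldots, \end{cases} \tag{4.1} \]

where δ is a negative integer such that \(\frac{\vert \delta \vert }{r} < 1\).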
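A minimal NumPy sketch of iteration (4.1), as we read it from the proof of Theorem 4.2: \(X_{0} = \alpha I\), \(Y_{0} = \alpha^{\delta}I\), \(X_{k+1} = (I - \sum_{i} A_{i}^{*}Y_{k}^{\delta_{i}/\delta}A_{i})^{1/r}\), \(Y_{k+1} = Y_{k}[2I - X_{k}Y_{k}^{-1/\delta}]\). The names `algorithm_4_1`, `hpd_power`, and `residual` are hypothetical, and the test matrices are our own (not those of Example 5.1). Two choices are assumptions of ours: we symmetrize \(Y_{k+1}\), because the product of two Hermitian matrices need not be Hermitian, and we stop on the residual of Equation (1.1) rather than on \(\Vert X_{k+1} - X_{k}\Vert\), because \(Y_{1} = Y_{0}\) forces \(X_{2} = X_{1}\), which would trip an increment-based test at the second step.

```python
import numpy as np

def hpd_power(X, p):
    """Real power of a Hermitian positive definite matrix via eigh."""
    w, U = np.linalg.eigh((X + X.conj().T) / 2)
    if w.min() <= 0:
        raise ValueError("matrix is not positive definite")
    return (U * w**p) @ U.conj().T

def residual(X, A_list, deltas, r):
    """|| X^r + sum_i A_i^* X^{delta_i} A_i - I ||_inf for Equation (1.1)."""
    R = np.linalg.matrix_power(X, r) - np.eye(X.shape[0])
    for A, d in zip(A_list, deltas):
        R = R + A.conj().T @ hpd_power(X, d) @ A
    return np.linalg.norm(R, np.inf)

def algorithm_4_1(A_list, deltas, r, delta, alpha, tol=1e-12, max_iter=1000):
    """Sketch of iteration (4.1): alpha > 1, delta a negative integer with |delta| < r."""
    n = A_list[0].shape[0]
    I = np.eye(n)
    X, Y = alpha * I, alpha**delta * I
    for k in range(max_iter):
        # X_{k+1} uses Y_k^{delta_i/delta}; the exponent delta_i/delta lies in (0, 1)
        S = sum(A.conj().T @ hpd_power(Y, d / delta) @ A for A, d in zip(A_list, deltas))
        X_next = hpd_power(I - S, 1.0 / r)
        # Y_{k+1} = Y_k [2I - X_k Y_k^{-1/delta}]; no matrix inverse is formed
        Y_next = Y @ (2 * I - X @ hpd_power(Y, -1.0 / delta))
        Y_next = (Y_next + Y_next.conj().T) / 2   # assumption: keep Y Hermitian
        X, Y = X_next, Y_next
        if residual(X, A_list, deltas, r) < tol:
            return X, k + 1
    return X, max_iter

A_list = [np.array([[0.2, 0.05], [0.0, 0.1]]), np.array([[0.1, 0.0], [0.05, 0.15]])]
X_L, iters = algorithm_4_1(A_list, [-0.5, -1.0 / 3.0], r=3, delta=-2, alpha=1.5)
print(iters, residual(X_L, A_list, [-0.5, -1.0 / 3.0], 3))
```

The sketch only checks that the limit solves Equation (1.1); it does not verify maximality, which is the content of Theorem 4.2.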

Theorem 4.2

Suppose that Equation (1.1) has a positive definite solution. Then the iterative Algorithm 4.1 generates subsequences \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) that are decreasing and converge to the maximal solution \(X_{L}\).

Proof

Suppose that Equation (1.1) has a solution. We first prove that the subsequences \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) are decreasing and the subsequences \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\) are increasing. Consider the sequence of matrices generated by (4.1).

For \(k = 0\), we have

Since \((\alpha^{r} - 1)I + \sum_{i = 1}^{m} \alpha^{\delta_{i}}A_{i}^{*}A_{i} > 0\), we have \(I - \sum_{i = 1}^{m} \alpha^{\delta_{i}}A_{i}^{*}A_{i} < \alpha^{r}I\). Then

So we get \(X_{1} < X_{0}\). Also, \(Y_{1} = Y_{0}[2I - X_{0}Y_{0}^{ - \frac{1}{\delta}} ] = \alpha^{\delta} [2I - \alpha \alpha^{ - 1}I] = \alpha^{\delta} I = Y_{0}\).

For \(k = 1\), we have

So we have \(X_{2} < X_{0}\). Also,

From (4.2) we get \(2\alpha^{\delta} I - \alpha^{\delta - 1}(I - \sum_{i = 1}^{m} \alpha^{\delta_{i}}A_{i}^{*}A_{i} )^{\frac{1}{r}} > \alpha^{\delta} I\). Thus, \(Y_{2} > Y_{0}\).

For \(k = 2\), we have

Since \(Y_{2} > Y_{0}\), we have \(Y_{2}^{\frac{\delta_{i}}{\delta}} > Y_{0}^{\frac{\delta_{i}}{\delta}}\), so we get \((I - \sum_{i = 1}^{m} A_{i}^{*} Y_{2}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}} < (I - \sum_{i = 1}^{m} A_{i}^{*}Y_{0}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}}\).

Thus, \(X_{3} < X_{1}\). Also, \(Y_{3} = Y_{2}[2I - X_{2}Y_{2}^{ - \frac{1}{\delta}} ]\). Since \(Y_{2} > Y_{0}\) and \(X_{2} < X_{0}\), we have \(Y_{2}^{ - \frac{1}{\delta}} > Y_{0}^{ - \frac{1}{\delta}}\) and \(- X_{2} > - X_{0}\), so that \(- X_{2}Y_{2}^{ - \frac{1}{\delta}} > - X_{0}Y_{0}^{ - \frac{1}{\delta}}\) and \(2I - X_{2}Y_{2}^{ - \frac{1}{\delta}} > 2I - X_{0}Y_{0}^{ - \frac{1}{\delta}}\), and we get \(Y_{2}[2I - X_{2}Y_{2}^{ - \frac{1}{\delta}} ] > Y_{0}[2I - X_{0}Y_{0}^{ - \frac{1}{\delta}} ]\). Thus, \(Y_{3} > Y_{1}\).

Similarly, we can prove:

Hence, \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\), \(k = 0,1,2,\ldots\) , are decreasing, whereas \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\), \(k = 0,1,2,\ldots\) , are increasing.

Now, we show that \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) are bounded from below by \(X_{L}\) (\(X_{k} > X_{L}\)) and that \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\) are bounded from above by \(X_{L}^{\delta}\).

By induction on k we get

Since \(X_{L}\) is a solution of (1.1), we have \(X_{L} = (I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}}A_{i} )^{\frac{1}{r}} < I\), and hence \(X_{0} - X_{L} = \alpha I - (I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}}A_{i} )^{\frac{1}{r}} > \alpha I - I = (\alpha - 1)I > 0\) because \(\alpha > 1\), that is, \(X_{0} > X_{L}\). Also, \(X_{1} - X_{L} = (I - \sum_{i = 1}^{m} A_{i}^{*}X_{0}^{\delta_{i}}A_{i} )^{\frac{1}{r}} - (I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}}A_{i})^{\frac{1}{r}}\).

Since \(X_{0} > X_{L}\), we have \(X_{0}^{\delta_{i}} < X_{L}^{\delta_{i}}\), and therefore \((I - \sum_{i = 1}^{m} A_{i}^{*}X_{0}^{\delta_{i}}A_{i} )^{\frac{1}{r}} > (I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}}A_{i})^{\frac{1}{r}}\). So we get \(X_{1} > X_{L}\). Also, \(X_{L}^{\delta} - Y_{0} = (I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}}A_{i} )^{\frac{\delta}{r}} - \alpha^{\delta} I\).

Since \(X_{0} > X_{L}\), we have \(\alpha I > X_{L}\) and \(\alpha^{\delta_{i}}I < X_{L}^{\delta_{i}}\) \(\forall i = 1,2,\ldots, m\), so we have

From (4.2) we get

From (4.3) and (4.4) we get \((I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}}A_{i} )^{\frac{\delta}{r}} > \alpha^{\delta} I\), so we have \(X_{L}^{\delta} > Y_{0}\) and \(X_{L}^{\delta} > Y_{1}\).

Assume that for \(k = t\) we have \(X_{2t} > X_{L}\), \(X_{2t + 1} > X_{L}\), \(Y_{2t} < X_{L}^{\delta}\), and \(Y_{2t + 1} < X_{L}^{\delta}\).

Now, for \(k = t + 1\),

Since \(Y_{2t + 1} < X_{L}^{\delta}\), we have \(Y_{2t + 1}^{\frac{\delta_{i}}{\delta}} < X_{L}^{\delta_{i}}\) and thus \(\sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 1}^{\frac{\delta_{i}}{\delta}} A_{i} < \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}} A_{i}\). Therefore, \((I - \sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 1}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}} > (I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}} A_{i})^{\frac{1}{r}}\).

Hence, \(X_{2t + 2} - X_{L} > 0\), that is, \(X_{2t + 2} > X_{L}\). Also, \(X_{L}^{\delta} - Y_{2t + 2} = X_{L}^{\delta} - Y_{2t + 1}[2I - X_{2t + 1}Y_{2t + 1}^{ - \frac{1}{\delta}} ]\).

Since \(Y_{2t + 1} < X_{L}^{\delta}\) and \(X_{2t + 1} > X_{L}\), we have \(Y_{2t + 1}^{ - \frac{1}{\delta}} < X_{L}^{ - 1}\) and \(- X_{2t + 1} < - X_{L}\),

Then \(Y_{2t + 1}[2I - X_{2t + 1}Y_{2t + 1}^{ - \frac{1}{\delta}} ] < X_{L}^{\delta}\). Hence, \(Y_{2t + 2} < X_{L}^{\delta}\), and thus

Since \(Y_{2t + 2} < X_{L}^{\delta}\), we have \(Y_{2t + 2}^{\frac{\delta_{i}}{\delta}} < X_{L}^{\delta_{i}}\) and \(\sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 2}^{\frac{\delta_{i}}{\delta}} A_{i} < \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}} A_{i}\). Therefore, \((I - \sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 2}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}} > (I - \sum_{i = 1}^{m} A_{i}^{*}X_{L}^{\delta_{i}} A_{i})^{\frac{1}{r}}\).

Hence, \(X_{2t + 3} - X_{L} > 0\), that is, \(X_{2t + 3} > X_{L}\), and thus \(X_{L}^{\delta} - Y_{2t + 3} = X_{L}^{\delta} - Y_{2t + 2}[2I - X_{2t + 2}Y_{2t + 2}^{ - \frac{1}{\delta}} ]\).

Since \(Y_{2t + 2} < X_{L}^{\delta}\) and \(X_{2t + 2} > X_{L}\), we have \(Y_{2t + 2}^{ - \frac{1}{\delta}} < X_{L}^{ - 1}\) and \(- X_{2t + 2} < - X_{L}\), and thus

Then \(Y_{2t + 2}[2I - X_{2t + 2}Y_{2t + 2}^{ - \frac{1}{\delta}} ] < X_{L}^{\delta}\). Hence, \(Y_{2t + 3} < X_{L}^{\delta}\).

Since \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) are decreasing and bounded from below by \(X_{L}\) and \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\) are increasing and bounded from above by \(X_{L}^{\delta}\), it follows that \(\lim_{k \to \infty} X_{k} = X\) and \(\lim_{k \to \infty} Y_{k} = Y\) exist.

Taking limits in (4.1) gives \(Y = X^{\delta}\) and \(X = (I - \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}} A_{i})^{\frac{1}{r}}\), that is, X is a solution. Hence, \(X = X_{L}\). □

Remark

We have proved that the maximal solution is unique; see the Appendix.

Now, we consider the case \(0 < \alpha < 1\).

Algorithm 4.3

(INVERSE-FREE Algorithm)

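Consider the iterative (simultaneous) algorithm

\[ \begin{cases} X_{0} = \alpha I, \quad Y_{0} = \alpha^{\delta} I, \quad 0 < \alpha < 1,\\ X_{k + 1} = \Bigl(I - \sum_{i = 1}^{m} A_{i}^{*}Y_{k}^{\frac{\delta_{i}}{\delta}}A_{i}\Bigr)^{\frac{1}{r}},\\ Y_{k + 1} = Y_{k}\bigl[2I - X_{k}Y_{k}^{ - \frac{1}{\delta}}\bigr], \quad k = 0,1,2,\ldots, \end{cases} \tag{4.5} \]

where δ is a negative integer such that \(\frac{\vert \delta \vert }{r} < 1\).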

Theorem 4.4

Suppose that Equation (1.1) has a positive definite solution and that \(0 < \alpha < 1\) satisfies \(\sum_{i = 1}^{m} \alpha^{\delta_{i}}A_{i}^{*}A_{i} < (1 - \alpha^{r})I\). Then the iterative Algorithm 4.3 generates subsequences \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) that are increasing and converge to the minimal solution \(X_{S}\).

Proof

Suppose that Equation (1.1) has a solution. We first prove that the subsequences \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) are increasing and the subsequences \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\) are decreasing. Consider the sequence of matrices generated by (4.5).

For \(k = 0\), we have

From the condition of the theorem we have

Then,

So we get \(X_{1} > X_{0}\). Also, \(Y_{1} = Y_{0}[2I - X_{0}Y_{0}^{ - \frac{1}{\delta}} ] = \alpha^{\delta} [2I - \alpha \alpha^{ - 1}I] = \alpha^{\delta} I = Y_{0}\).

For \(k = 1\), we have

So we have \(X_{2} > X_{0}\). Also,

From (4.7) we get \(2\alpha^{\delta} I - \alpha^{\delta - 1}(I - \sum_{i = 1}^{m} \alpha^{\delta_{i}}A_{i}^{*}A_{i} )^{\frac{1}{r}} < \alpha^{\delta} I\). Thus, \(Y_{2} < Y_{0}\).

For \(k = 2\), we have

Since \(Y_{2} < Y_{0}\), we have \(Y_{2}^{\frac{\delta_{i}}{\delta}} < Y_{0}^{\frac{\delta_{i}}{\delta}}\), so we get \((I - \sum_{i = 1}^{m} A_{i}^{*} Y_{2}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}} > (I - \sum_{i = 1}^{m} A_{i}^{*}Y_{0}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}}\). Thus, \(X_{3} > X_{1}\). Also, \(Y_{3} = Y_{2}[2I - X_{2}Y_{2}^{ - \frac{1}{\delta}} ]\).

Since \(Y_{2} < Y_{0}\) and \(X_{2} > X_{0}\), we have \(Y_{2}^{ - \frac{1}{\delta}} < Y_{0}^{ - \frac{1}{\delta}}\) and \(- X_{2} < - X_{0}\), so that \(- X_{2}Y_{2}^{ - \frac{1}{\delta}} < - X_{0}Y_{0}^{ - \frac{1}{\delta}}\) and \(2I - X_{2}Y_{2}^{ - \frac{1}{\delta}} < 2I - X_{0}Y_{0}^{ - \frac{1}{\delta}}\), and we get \(Y_{2}[2I - X_{2}Y_{2}^{ - \frac{1}{\delta}} ] < Y_{0}[2I - X_{0}Y_{0}^{ - \frac{1}{\delta}} ]\). Thus, \(Y_{3} < Y_{1}\).

Similarly, we can prove that

Hence \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\), \(k = 0,1,2,\ldots\) , are increasing, whereas \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\), \(k = 0,1,2,\ldots\) , are decreasing.

Now, we show that \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) are bounded from above by \(X_{S}\) (\(X_{S} > X_{k}\)), and \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\) are bounded from below by \(X_{S}^{\delta}\).

By induction on k we obtain

that is, \(X_{S} > X_{0}\). Also,

Since \(X_{S} > X_{0}\), we have \(X_{S}^{\delta_{i}} < X_{0}^{\delta_{i}}\), and therefore \((I - \sum_{i = 1}^{m} A_{i}^{*}X_{S}^{\delta_{i}}A_{i} )^{\frac{1}{r}} > (I - \sum_{i = 1}^{m} A_{i}^{*}X_{0}^{\delta_{i}}A_{i})^{\frac{1}{r}}\). So we get \(X_{S} > X_{1}\). Also, \(Y_{0} - X_{S}^{\delta} = \alpha^{\delta} I - (I - \sum_{i = 1}^{m} A_{i}^{*}X_{S}^{\delta_{i}}A_{i} )^{\frac{\delta}{r}}\).

Since \(X_{S} > X_{0}\), we have \(X_{S} > \alpha I\) and \(X_{S}^{\delta_{i}} < \alpha^{\delta_{i}}I\) \(\forall i = 1,2,\ldots, m\), and we get

From (4.6) we get

From (4.8) and (4.9) we get \(\alpha^{\delta} I > (I - \sum_{i = 1}^{m} A_{i}^{*}X_{S}^{\delta_{i}}A_{i} )^{\frac{\delta}{r}}\), and thus \(Y_{0} > X_{S}^{\delta}\) and \(Y_{1} > X_{S}^{\delta}\).

Assume that for \(k = t\) we have \(X_{2t} < X_{S}\), \(X_{2t + 1} < X_{S}\), \(Y_{2t} > X_{S}^{\delta}\), and \(Y_{2t + 1} > X_{S}^{\delta}\).

Now, for \(k = t + 1\), we have

Since \(Y_{2t + 1} > X_{S}^{\delta}\), we have \(Y_{2t + 1}^{\frac{\delta_{i}}{\delta}} > X_{S}^{\delta_{i}}\) and \(\sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 1}^{\frac{\delta_{i}}{\delta}} A_{i} > \sum_{i = 1}^{m} A_{i}^{*}X_{S}^{\delta_{i}} A_{i}\). Therefore, \((I - \sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 1}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}} < (I - \sum_{i = 1}^{m} A_{i}^{*}X_{S}^{\delta_{i}} A_{i})^{\frac{1}{r}}\).

Hence, \(X_{S} - X_{2t + 2} > 0\), that is, \(X_{S} > X_{2t + 2}\). Also,

Since \(Y_{2t + 1} > X_{S}^{\delta}\) and \(X_{2t + 1} < X_{S}\), we have \(Y_{2t + 1}^{ - \frac{1}{\delta}} > X_{S}^{ - 1}\) and \(- X_{2t + 1} > - X_{S}\), and then

Then \(Y_{2t + 1}[2I - X_{2t + 1}Y_{2t + 1}^{ - \frac{1}{\delta}} ] > X_{S}^{\delta}\). Hence, \(Y_{2t + 2} > X_{S}^{\delta}\). Consider

Since \(Y_{2t + 2} > X_{S}^{\delta}\), we have \(Y_{2t + 2}^{\frac{\delta_{i}}{\delta}} > X_{S}^{\delta_{i}}\) and \(\sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 2}^{\frac{\delta_{i}}{\delta}} A_{i} > \sum_{i = 1}^{m} A_{i}^{*}X_{S}^{\delta_{i}} A_{i}\). Therefore, \((I - \sum_{i = 1}^{m} A_{i}^{*} Y_{2t + 2}^{\frac{\delta_{i}}{\delta}} A_{i})^{\frac{1}{r}} < (I - \sum_{i = 1}^{m} A_{i}^{*}X_{S}^{\delta_{i}} A_{i})^{\frac{1}{r}}\).

Hence, \(X_{S} - X_{2t + 3} > 0\), that is, \(X_{S} > X_{2t + 3}\). Now consider

Since \(Y_{2t + 2} > X_{S}^{\delta}\) and \(X_{2t + 2} < X_{S}\), we have \(Y_{2t + 2}^{ - \frac{1}{\delta}} > X_{S}^{ - 1}\) and \(- X_{2t + 2} > - X_{S}\), and thus

Then \(Y_{2t + 2}[2I - X_{2t + 2}Y_{2t + 2}^{ - \frac{1}{\delta}} ] > X_{S}^{\delta}\), and hence \(Y_{2t + 3} > X_{S}^{\delta}\).

Since \(\{ X_{2k} \}\) and \(\{ X_{2k + 1} \}\) are increasing and bounded from above by \(X_{S}\) and \(\{ Y_{2k} \}\) and \(\{ Y_{2k + 1} \}\) are decreasing and bounded from below by \(X_{S}^{\delta}\), it follows that \(\lim_{k \to \infty} X_{k} = X\) and \(\lim_{k \to \infty} Y_{k} = Y\) exist. Taking the limits in (4.5) gives \(Y = X^{\delta}\) and \(X = (I - \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}} A_{i})^{\frac{1}{r}}\), that is, X is a solution of Equation (1.1). Hence, \(X = X_{S}\). □

Remark

We have proved that the minimal solution is unique; see the Appendix.

5 Numerical examples

In this section, we report numerical examples that illustrate the accuracy and efficiency of Algorithms 4.1 and 4.3 for obtaining the extremal positive definite solutions of Equation (1.1). The solutions are computed for different matrices \(A_{i}\), \(i = 1,2,\ldots,m\), and different values of α, r, δ, and \(\delta_{i}\), \(i = 1,2,\ldots,m\). All programs are written in MATLAB version 5.3. We measure convergence by the residual \(\varepsilon (X_{k}) = \Vert X_{k}^{r} + \sum_{i = 1}^{m} A_{i}^{*}X_{k}^{\delta_{i}}A_{i} - I \Vert _{\infty}\) and by the increment \(\Vert X_{k + 1} - X_{k} \Vert _{\infty}\), and we use \(\varepsilon (X_{k}) < \mathrm{tol}\) as the stopping criterion for different chosen tolerances.
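The stopping quantity \(\varepsilon(X_{k})\) is straightforward to compute. The sketch below is a NumPy version (the original experiments used MATLAB; the function name `eps` is our own). For a matrix argument, `np.linalg.norm(R, np.inf)` is the maximum absolute row sum, which is the matrix ∞-norm.

```python
import numpy as np

def hpd_power(X, p):
    """Real power of a Hermitian positive definite matrix via eigh."""
    w, U = np.linalg.eigh((X + X.conj().T) / 2)
    return (U * w**p) @ U.conj().T

def eps(X, A_list, deltas, r):
    """epsilon(X) = || X^r + sum_i A_i^* X^{delta_i} A_i - I ||_inf (max absolute row sum)."""
    R = np.linalg.matrix_power(X, r) - np.eye(X.shape[0])
    for A, d in zip(A_list, deltas):
        R = R + A.conj().T @ hpd_power(X, d) @ A
    return float(np.linalg.norm(R, np.inf))

# Scalar data of the remark after Theorem 3.2: x = 1/3 solves x + a^2 x^{-1/2} = 1.
a = np.sqrt(2 * np.sqrt(3) / 9)
print(eps(np.array([[1.0 / 3.0]]), [np.array([[a]])], [-0.5], 1))   # close to 0
```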

Example 5.1

Consider the matrix equation (1.1) with the following two matrices \(A_{1}\) and \(A_{2}\):

where \(r = 3\), \(\delta_{1} = - \frac{1}{2}\), \(\delta_{2} = - \frac{1}{3}\), \(\delta = - 2\).

We applied Algorithm 4.1 for different values of the parameter \(\alpha > 1\). The numbers of iterations needed to satisfy the stopping condition (required accuracy), that is, for the sequence of positive definite matrices to converge to the maximal solution of Equation (1.1), are listed in Table 1.

The eigenvalues of \(X_{L}\) are \((0.9556, 0.9984, 0.9695, 0.9969)\).

Note: the condition of Theorem 3.2 holds for \(\beta = \frac{1}{3}, \frac{1}{2},\ldots\).

Example 5.2

Consider the matrix equation (1.1) with the following two matrices \(A_{1}\) and \(A_{2}\):

which satisfy the conditions of Theorem 4.4, where \(r = 3\), \(\delta_{1} = - \frac{1}{4}\), \(\delta_{2} = - \frac{1}{5}\), \(\delta = - 2\). We applied Algorithm 4.3 for different values of the parameter α (\(0 < \alpha < 1\)). The results are summarized in Table 2, where k is the number of iterations needed to satisfy the stopping condition (required accuracy), that is, for the sequence of positive definite matrices to converge to the minimal solution of Equation (1.1).

The eigenvalues of \(X_{S}\) are \((0.9180, 0.9909, 0.9733, 0.9966)\).

Also, the condition of Theorem 3.2 holds for \(\beta = \frac{1}{3}, \frac{1}{2},\ldots\).

Remark

From Table 1 we see that the number of iterations k increases as the value of α (\(\alpha > 1\)) increases, and from Table 2 we see that the number of iterations k decreases as the value of α (\(0 < \alpha < 1\)) increases. For details, see the Appendix at the end of this paper.

Example 5.3

Consider the matrix equation (1.1) with the following two matrices \(A_{1}\) and \(A_{2}\):

where \(r = 4\), \(\delta_{1} = - \frac{1}{3}\), \(\delta_{2} = - \frac{1}{5}\), \(\delta = - 3\). We applied Algorithm 4.1 for different values of the parameter α (\(\alpha > 1\)). The numbers of iterations needed to satisfy the stopping condition (required accuracy), that is, for the sequence of positive definite matrices to converge to the maximal solution of Equation (1.1), are listed in Table 3.

The eigenvalues of \(X_{L}\) are \((0.99954, 0.99976, 0.99995, 0.99982, 0.99987, 0.99991)\).

Example 5.4

Consider the matrix equation (1.1) with the two matrices \(A_{1}\) and \(A_{2}\) as follows:

which satisfy the conditions of Theorem 4.4, where \(r = 3\), \(\delta_{1} = - \frac{1}{2}\), \(\delta_{2} = - \frac{1}{3}\), \(\delta = - 2\). We applied Algorithm 4.3 for different values of the parameter α (\(0 < \alpha < 1\)). The results are summarized in Table 4, where k is the number of iterations needed to satisfy the stopping condition (required accuracy), that is, for the sequence of positive definite matrices to converge to the minimal solution of Equation (1.1).

The eigenvalues of \(X_{S}\) are \((0.99181, 0.9944, 0.9992, 0.99882, 0.99596, 0.99676)\).

Remarks

-

1.

The obtained results for Examples 5.3 and 5.4 shown in Tables 3 and 4, respectively, indicate that increasing the dimension of the problem does not affect the efficiency of the proposed algorithms.

-

2.

Tables 1, 2, 3, and 4 show that high accuracy is obtained for different values of α after a small number of iterations, which indicates that our algorithms are efficient.

6 On the existence and the uniqueness of a positive definite solution of \(X^{r}-\sum_{i=1}^{m}A_{i}^{*}X^{\delta_{i}}A_{i}=I\)

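In this section, we prove that Equation (1.2) has a unique positive definite solution and construct an interval that contains this solution. Associated with Equation (1.2) is the operator G defined by

\[ G(X) = \Bigl(I + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i}\Bigr)^{\frac{1}{r}}. \tag{6.1} \]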

Theorem 6.1

If Equation (1.2) has a positive definite solution X, then \(X \in [G^{2}(I),G(I)]\).

Proof

Let X be a positive definite solution of Equation (1.2). Then \(X = (I + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}} A_{i} )^{\frac{1}{r}}\), and we have

which implies that \(X^{\delta_{i}} \le I\), \(- 1 < \delta_{i} < 0\), \(\forall i = 1,2,\ldots,m\).

Then

Hence, from (6.2) and (6.3) we get

Then \((I + \sum_{i = 1}^{m} A_{i}^{*}A_{i} )^{\frac{\delta_{i}}{r}} \le X^{\delta_{i}} \le I\), and thus \(\sum_{i = 1}^{m} A_{i}^{*}(I + \sum_{i = 1}^{m} A_{i}^{*}A_{i} )^{\frac{\delta_{i}}{r}}A_{i} \le \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} \le \sum_{i = 1}^{m} A_{i}^{*}A_{i}\).

Since \(\sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} = X^{r} - I\), it follows that \(\sum_{i = 1}^{m} A_{i}^{*}(I + \sum_{j = 1}^{m} A_{j}^{*}A_{j} )^{\frac{\delta_{i}}{r}}A_{i} \le X^{r} - I \le \sum_{i = 1}^{m} A_{i}^{*}A_{i}\).

Therefore, \((I + \sum_{i = 1}^{m} A_{i}^{*}(I + \sum_{j = 1}^{m} A_{j}^{*}A_{j} )^{\frac{\delta_{i}}{r}}A_{i})^{\frac{1}{r}} \le X \le (I + \sum_{i = 1}^{m} A_{i}^{*}A_{i} )^{\frac{1}{r}}\), that is, \(G^{2}(I) \le X \le G(I)\). Hence, \(X \in [G^{2}(I),G(I)]\). □

We use Brouwer's fixed point theorem to prove the existence of a positive definite solution of Equation (1.2). Since \(P(n)\) is not complete, we work on the subset \(D_{2} = [G^{2}(I),G(I)] \subset P(n)\), which is compact.
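The endpoints of \(D_{2}\) can be computed directly. The Python/NumPy sketch below is illustrative only: the helper names `herm_power`, `G`, and `loewner_leq` are hypothetical, the matrices \(A_{1}, A_{2}\) are made-up nonsingular test data (not the paper's), and matrix powers are taken through the spectral decomposition of a Hermitian positive definite matrix. It computes \(G(I)\) and \(G^{2}(I)\) and checks the orderings \(I \le G^{2}(I) \le G(I)\) used in the proof of Theorem 6.1.

```python
import numpy as np

def herm_power(X, p):
    """X**p for a Hermitian positive definite X, via X = V diag(w) V^*."""
    w, V = np.linalg.eigh(X)
    return (V * w**p) @ V.conj().T

def G(X, As, deltas, r):
    """Operator G(X) = (I + sum_i A_i^* X^{delta_i} A_i)^{1/r}."""
    S = np.eye(X.shape[0], dtype=complex)
    for A, d in zip(As, deltas):
        S = S + A.conj().T @ herm_power(X, d) @ A
    S = (S + S.conj().T) / 2  # remove rounding asymmetry before eigh
    return herm_power(S, 1.0 / r)

def loewner_leq(X, Y, tol=1e-10):
    """True if X <= Y, i.e. Y - X is positive semidefinite (up to tol)."""
    D = Y - X
    D = (D + D.conj().T) / 2
    return np.linalg.eigvalsh(D).min() >= -tol

# Hypothetical test data; r and the deltas mirror Example 7.1.
A1 = np.array([[0.2, 0.1], [0.0, 0.3]])
A2 = np.array([[0.1, 0.0], [0.05, 0.2]])
As, deltas, r = [A1, A2], [-1/2, -1/3], 2

I = np.eye(2)
upper = G(I, As, deltas, r)      # G(I)   = (I + sum_i A_i^* A_i)^{1/r}
lower = G(upper, As, deltas, r)  # G^2(I)
print(loewner_leq(I, lower), loewner_leq(lower, upper))  # True True
```

Because \(A_{1}\) and \(A_{2}\) are nonsingular, both inequalities are in fact strict here, so the tolerance only guards against rounding.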

Theorem 6.2

Equation (1.2) has a Hermitian positive definite solution.

Proof

It is obvious that \(D_{2}\) is closed, bounded, and convex. To show that \(G:D_{2} \to D_{2}\), let \(X \in D_{2}\). Then \(G^{2}(I) \le X \le G(I)\); in particular, \(X \le (I + \sum_{j = 1}^{m} A_{j}^{*}A_{j} )^{\frac{1}{r}}\), and thus \(X^{\delta_{i}} \ge (I + \sum_{j = 1}^{m} A_{j}^{*}A_{j} )^{\frac{\delta_{i}}{r}}\), \(- 1 < \delta_{i} < 0\). Therefore, \((I + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i})^{\frac{1}{r}} \ge (I + \sum_{i = 1}^{m} A_{i}^{*}(I + \sum_{j = 1}^{m} A_{j}^{*}A_{j} )^{\frac{\delta_{i}}{r}} A_{i} )^{\frac{1}{r}}\).

Hence,

$$ G(X) \ge G^{2}(I). $$(6.5)

Similarly, since \(X \ge G^{2}(I) \ge I\), we have \(X^{\delta_{i}} \le I\) and \((I + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i})^{\frac{1}{r}} \le (I + \sum_{i = 1}^{m} A_{i}^{*}A_{i} )^{\frac{1}{r}}\), that is,

$$ G(X) \le G(I). $$(6.6)

From (6.5) and (6.6) we get \(G(X) \in D_{2}\), that is, \(G:D_{2} \to D_{2}\).

According to Lemma 6.2.37 in [16], \(\sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i}\) is continuous on \(D_{2}\). Hence, \(G(X)\) is continuous on \(D_{2}\). By Brouwer’s fixed point theorem, G has a fixed point in \(D_{2}\), which is a solution of Equation (1.2). □
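The self-map property \(G:D_{2} \to D_{2}\) can be spot-checked numerically. The sketch below (Python/NumPy; hypothetical helpers and made-up matrices, with r and \(\delta_{i}\) taken from Example 7.2) walks along the segment between the endpoints of \(D_{2}\), which stays in \(D_{2}\) by convexity, and verifies (6.5) and (6.6) at each sample point. A finite check of this kind illustrates the theorem; it does not replace the proof.

```python
import numpy as np

def herm_power(X, p):
    """X**p for a Hermitian positive definite X, via X = V diag(w) V^*."""
    w, V = np.linalg.eigh(X)
    return (V * w**p) @ V.conj().T

def G(X, As, deltas, r):
    """Operator G(X) = (I + sum_i A_i^* X^{delta_i} A_i)^{1/r}."""
    S = np.eye(X.shape[0], dtype=complex)
    for A, d in zip(As, deltas):
        S = S + A.conj().T @ herm_power(X, d) @ A
    S = (S + S.conj().T) / 2  # remove rounding asymmetry before eigh
    return herm_power(S, 1.0 / r)

def loewner_leq(X, Y, tol=1e-10):
    """True if Y - X is positive semidefinite (up to tol)."""
    D = (Y - X + (Y - X).conj().T) / 2
    return np.linalg.eigvalsh(D).min() >= -tol

# Hypothetical matrices; r and the deltas mirror Example 7.2.
As = [np.array([[0.2, 0.1], [0.0, 0.3]]),
      np.array([[0.1, 0.0], [0.05, 0.2]]),
      np.array([[0.15, 0.05], [0.05, 0.1]])]
deltas, r = [-1/3, -1/4, -1/2], 3

upper = G(np.eye(2), As, deltas, r)  # G(I)
lower = G(upper, As, deltas, r)      # G^2(I)

checks = []
for s in np.linspace(0.0, 1.0, 11):
    X = (1 - s) * lower + s * upper  # convex combination, so X lies in D_2
    GX = G(X, As, deltas, r)
    # (6.5): G(X) >= G^2(I) and (6.6): G(X) <= G(I)
    checks.append(loewner_leq(lower, GX) and loewner_leq(GX, upper))
print(all(checks))  # True
```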

Remark

Applying Lemma 2.3, it is proved in [1] that Equation (1.2) has a unique positive definite solution for \(r = 1\). The following theorem shows that uniqueness holds for every positive integer r.

Theorem 6.3

Equation (1.2) has a unique positive definite solution.

Proof

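We show that G has a unique fixed point. For all \(X,Y \in P(n)\) such that \(X \ge Y\), by Definition 2.7 we have \(G:P(n) \to P(n)\), \(X^{\delta_{i}} \le Y^{\delta_{i}}\), and

$$ G(X) = \Biggl(I + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} \Biggr)^{\frac{1}{r}} \le \Biggl(I + \sum_{i = 1}^{m} A_{i}^{*}Y^{\delta_{i}}A_{i} \Biggr)^{\frac{1}{r}} = G(Y), $$

that is, the operator G is decreasing. Define \(\theta = \max \{ \vert \delta_{i} \vert ,i = 1,2,\ldots,m \}\). Then \(0 < \theta < 1\). For all \(t \in (0,1)\), we have \(t^{\delta_{i}} \le t^{-\theta}\) and \(1 \le t^{-\theta}\), so

$$ G(tX) = \Biggl(I + \sum_{i = 1}^{m} t^{\delta_{i}}A_{i}^{*}X^{\delta_{i}}A_{i} \Biggr)^{\frac{1}{r}} \le \Biggl(t^{-\theta}\Biggl(I + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} \Biggr)\Biggr)^{\frac{1}{r}} = t^{-\frac{\theta}{r}}G(X), $$

where \(0 < \frac{\theta}{r} < 1\). Let \(\gamma = \frac{\theta}{r}\). Then \(G(tX) \le t^{ - \gamma} G(X)\), which means that the operator G is \(( - \gamma )\)-convex. Consequently, G has a unique fixed point X in \(P(n)\), which is the unique positive definite solution of Equation (1.2). □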
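The two properties used in this proof, monotone decrease and \((-\gamma)\)-convexity with \(\gamma = \theta / r\), can be checked numerically for sample matrices. The Python/NumPy sketch below is a hypothetical illustration with made-up data, not part of the authors' argument; `herm_power`, `G`, and `loewner_leq` are illustrative helpers.

```python
import numpy as np

def herm_power(X, p):
    """X**p for a Hermitian positive definite X, via X = V diag(w) V^*."""
    w, V = np.linalg.eigh(X)
    return (V * w**p) @ V.conj().T

def G(X, As, deltas, r):
    """Operator G(X) = (I + sum_i A_i^* X^{delta_i} A_i)^{1/r}."""
    S = np.eye(X.shape[0], dtype=complex)
    for A, d in zip(As, deltas):
        S = S + A.conj().T @ herm_power(X, d) @ A
    S = (S + S.conj().T) / 2  # remove rounding asymmetry before eigh
    return herm_power(S, 1.0 / r)

def loewner_leq(X, Y, tol=1e-10):
    """True if Y - X is positive semidefinite (up to tol)."""
    D = (Y - X + (Y - X).conj().T) / 2
    return np.linalg.eigvalsh(D).min() >= -tol

# Hypothetical data.
As = [np.array([[0.2, 0.1], [0.0, 0.3]]), np.array([[0.1, 0.0], [0.05, 0.2]])]
deltas, r = [-1/2, -1/3], 2
theta = max(abs(d) for d in deltas)  # theta = max |delta_i|, here 1/2
gamma = theta / r                    # gamma = theta / r, here 1/4

Y = np.array([[1.5, 0.2], [0.2, 1.2]])
X = Y + np.array([[0.3, 0.1], [0.1, 0.4]])  # X - Y is positive definite, so X >= Y

# G is decreasing: X >= Y implies G(X) <= G(Y)
decreasing = loewner_leq(G(X, As, deltas, r), G(Y, As, deltas, r))
# (-gamma)-convexity: G(tX) <= t^{-gamma} G(X) for t in (0, 1)
convex = all(loewner_leq(G(t * X, As, deltas, r), t**(-gamma) * G(X, As, deltas, r))
             for t in (0.1, 0.5, 0.9))
print(decreasing, convex)  # True True
```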

Remarks

1. In [2], the authors considered the nonlinear matrix equation

$$ X = \sum_{i = 1}^{m} A_{i}^{T}X^{\delta_{i}}A_{i},\quad \vert \delta_{i} \vert < 1, i = 1,2,\ldots,m. $$(6.7)

They proved that the recursively defined matrix sequence

$$ X_{n + m + 1} = \sum_{i = 1}^{m} A_{i}^{T}X_{n + i}^{\delta_{i}}A_{i}, \quad n \ge 0, $$(6.8)

where \(X_{1},X_{2},\ldots,X_{m}\) are arbitrary initial positive definite matrices, converges to the unique positive definite solution of (6.7).

In [1], the authors considered the nonlinear matrix equation

$$ X = Q + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i}, \quad 0 < \vert \delta_{i} \vert < 1, i = 1,2,\ldots,m, $$(6.9)

where Q is a known positive definite matrix. They used the analogue of formula (6.8),

$$ X_{n + m + 1} = Q + \sum_{i = 1}^{m} A_{i}^{*}X_{n + i}^{\delta_{i}}A_{i}, \quad n \ge 0, $$(6.10)

adopted the algorithm given in [2], and followed the main steps of its convergence proof, with slight changes to suit their problem (6.9).

2. Since Q has no effect on the convergence of the algorithm, and since [2] proves that both \(X_{n + m + 1}\) and \(X_{n + i}\) converge to the unique solution X, the convergence proof given in [1] is redundant.

For our Equation (1.2), we use the recursive formula

$$ X_{n + m + 1} = \Biggl(I + \sum_{i = 1}^{m} A_{i}^{*}X_{n + i}^{\delta_{i}}A_{i} \Biggr)^{\frac{1}{r}}, $$(6.11)

whose convergence is established in the following proposition.
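A minimal sketch of the recursion (6.11) in Python/NumPy follows. Assumptions, all ours rather than the authors': the function name `iterate_611`, the stopping rule (stop when two consecutive iterates differ by less than `tol` in the Frobenius norm), and the test matrices, which are made up because the matrices of Section 7 are not reproduced here. A sliding window keeps the m most recent iterates, so \(X_{n+i}\) is paired with \(A_{i}\) and \(\delta_{i}\) exactly as in (6.11).

```python
import numpy as np
from collections import deque

def herm_power(X, p):
    """X**p for a Hermitian positive definite X, via X = V diag(w) V^*."""
    w, V = np.linalg.eigh(X)
    return (V * w**p) @ V.conj().T

def iterate_611(As, deltas, r, X_init, tol=1e-12, max_iter=500):
    """Hypothetical sketch of X_{n+m+1} = (I + sum_i A_i^* X_{n+i}^{delta_i} A_i)^{1/r}.

    X_init = [X_1, ..., X_m] are Hermitian positive definite starting matrices.
    Returns the last iterate and the number of new iterates computed.
    """
    m = len(As)
    window = deque(X_init, maxlen=m)  # holds X_{n+1}, ..., X_{n+m}, oldest first
    size = X_init[0].shape[0]
    for k in range(1, max_iter + 1):
        S = np.eye(size, dtype=complex)
        for A, d, Xi in zip(As, deltas, window):  # pairs A_i with X_{n+i}
            S = S + A.conj().T @ herm_power(Xi, d) @ A
        S = (S + S.conj().T) / 2  # remove rounding asymmetry before eigh
        X_new = herm_power(S, 1.0 / r)
        diff = np.linalg.norm(X_new - window[-1])
        window.append(X_new)  # the deque drops the oldest iterate X_{n+1}
        if diff < tol:
            break
    return window[-1], k

# Hypothetical matrices; r and the deltas mirror Example 7.1.
As = [np.array([[0.2, 0.1], [0.0, 0.3]]), np.array([[0.1, 0.0], [0.05, 0.2]])]
deltas, r = [-1/2, -1/3], 2

X, k = iterate_611(As, deltas, r, [np.eye(2), 2 * np.eye(2)])
residual = (np.linalg.matrix_power(X, r)
            - sum(A.conj().T @ herm_power(X, d) @ A for A, d in zip(As, deltas))
            - np.eye(2))
print(k, np.linalg.norm(residual))
```

Restarting from different positive definite matrices should return the same X up to the tolerance, in line with the uniqueness result of Theorem 6.3.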

Proposition 6.4

The matrix sequence defined by (6.11) converges to the unique positive definite matrix solution X of Equation (1.2) for arbitrary initial positive definite matrices \(X_{1},X_{2},\ldots,X_{m}\), provided that this convergence holds for \(r = 1\).

Proof

Since \(\sum_{i = 1}^{m} A_{i}^{*}X_{n + i}^{\delta_{i}}A_{i}\) is continuous, so is \(G_{n}(X) = I + \sum_{i = 1}^{m} A_{i}^{*}X_{n + i}^{\delta_{i}}A_{i}\). Define \(F(G_{n}(X)) = (G_{n}(X))^{\frac{1}{r}}\), where r is a positive integer; F is also continuous. Hence, \(\lim_{n \to \infty} F(G_{n}(X)) = F(\lim_{n \to \infty} G_{n}(X))\). Taking the limit of (6.11) as \(n \to \infty\) and using (6.9) and (6.10) with \(Q = I\), we obtain \(X = (I + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} )^{\frac{1}{r}}\). □

7 Numerical examples

Example 7.1

Consider the matrix equation (1.2) with the following two matrices \(A_{1}\) and \(A_{2}\):

where \(r = 2\), \(\delta_{1} = - \frac{1}{2}\), \(\delta_{2} = - \frac{1}{3}\). Let the initial positive definite matrices be

After nine iterations of the recursive formula (6.11), we obtain the unique positive definite solution of (1.2)

We see that \(X_{9} \in [G^{2}(I),G(I)]\), where G is defined by (6.1).

Example 7.2

Consider the matrix equation (1.2) with three matrices

where \(r = 3\), \(\delta_{1} = - \frac{1}{3}\), \(\delta_{2} = - \frac{1}{4}\), \(\delta_{3} = - \frac{1}{2}\). Let the initial positive definite matrices be

After 14 iterations of the recursive formula (6.11), we obtain the unique positive definite solution of (1.2)

We see that \(X_{14} \in [G^{2}(I),G(I)]\), where G is defined by (6.1).

Remarks

1. If we use \(X_{1}\) and \(X_{2}\) from Example 7.2 as the initial matrices, we obtain the same solution \(X = X_{9}\), which is consistent with the uniqueness of the positive definite solution of Equation (1.2) (Theorem 6.3).

2. If we put

$$\begin{aligned}& X_{1} = \left ( \textstyle\begin{array}{c@{\quad}c} 0.9355 & 0.4103 \\ 0.9169 & 0.8936 \end{array}\displaystyle \right ),\qquad X_{2} = \left ( \textstyle\begin{array}{c@{\quad}c} 0.3046 & 0.1934 \\ 0.1897 & 0.9822 \end{array}\displaystyle \right ),\quad \mbox{and} \\& X_{3} = \left ( \textstyle\begin{array}{c@{\quad}c} 0.3028 & 0.1509 \\ 0.5417 & 0.6979 \end{array}\displaystyle \right ) \end{aligned}$$

in Example 7.2, then we get the same solution

$$X = X_{10} = \left ( \textstyle\begin{array}{c@{\quad}c} 1.0793 & 0.0693 \\ 0.0693 & 1.3536 \end{array}\displaystyle \right ), $$

which is again consistent with the uniqueness of the solution of Equation (1.2).

The above examples show that the recursive formula (6.11) is feasible and effective for computing the unique positive definite solution of Equation (1.2).

8 Conclusion

In this paper, we considered two nonlinear matrix equations \(X^{r} \pm \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} = I\). For the first equation (plus case), we proved the existence of positive definite solutions and of extremal solutions, and we proposed two algorithms for computing the extremal solutions. For the second equation (negative case), we proved the existence and uniqueness of a positive definite solution and adapted the algorithm in [1] to solve it. Numerical examples illustrate the theoretical results.

References

Duan, X, Liao, A, Tang, B: On the nonlinear matrix equation \(X - \sum_{i = 1}^{m} A_{i}^{*} X^{\delta_{i}}A_{i} = Q\). Linear Algebra Appl. 429, 110-121 (2008)

Shi, X, Liu, F, Umoh, H, Gibson, F: Two kinds of nonlinear matrix equations and their corresponding matrix sequences. Linear Multilinear Algebra 52, 1-15 (2004)

Cai, J, Chen, G: Some investigation on Hermitian positive definite solutions of the matrix equation \(X^{s} + A^{*}X^{ - t}A = Q\). Linear Algebra Appl. 430, 2448-2456 (2009)

Duan, X, Liao, A: On the existence of Hermitian positive definite solutions of the matrix equation \(X^{s} + A^{*}X^{ - t}A = Q\). Linear Algebra Appl. 429, 673-687 (2008)

Duan, X, Liao, A: On the nonlinear matrix equation \(X + A^{*}X^{ - q}A = Q\) (\(q \ge 1\)). Math. Comput. Model. 49, 936-945 (2009)

Duan, X, Liao, A: On Hermitian positive definite solution of the matrix equation \(X - \sum_{i = 1}^{m} A_{i}^{*} X^{r}A_{i} = Q\). J. Comput. Appl. Math. 229, 27-36 (2009)

El-Sayed, SM, Ramadan, MA: On the existence of a positive definite solution of the matrix equation \(X - A^{*} \sqrt[2^{m}]{X^{ - 1}}A = I\). Int. J. Comput. Math. 76, 331-338 (2001)

Lim, Y: Solving the nonlinear matrix equation \(X = Q + \sum_{i = 1}^{m} M_{i}X^{\delta_{i}}M_{i}^{*}\) via a contraction principle. Linear Algebra Appl. 430, 1380-1383 (2009)

Liu, XG, Gao, H: On the positive definite solutions of the matrix equations \(X^{s} \pm A^{T}X^{ - t}A = I_{n}\). Linear Algebra Appl. 368, 83-97 (2003)

Peng, ZY, El-Sayed, SM, Zhang, XL: Iterative methods for the extremal positive definite solution of the matrix equation \(X + A^{ *} X^{ - \alpha} A = Q\). Appl. Math. Comput. 200, 520-527 (2007)

Ramadan, MA: On the existence of extremal positive definite solutions of a kind of matrix. Int. J. Nonlinear Sci. Numer. Simul. 6, 115-126 (2005)

Ramadan, MA: Necessary and sufficient conditions to the existence of positive definite solution of the matrix equation \(X + A^{T}X^{ - 2}A = I\). Int. J. Comput. Math. 82, 865-870 (2005)

Ramadan, MA, El-Shazly, NM: On the matrix equation \(X + A^{*}\sqrt[2^{m}]{X^{ - 1}}A = I\). Appl. Math. Comput. 173, 992-1013 (2006)

Yueting, Y: The iterative method for solving nonlinear matrix equation \(X^{s} + A^{*}X^{ - t}A = Q\). Appl. Math. Comput. 188, 46-53 (2007)

Zhan, X: Computing the extremal positive definite solutions of a matrix equation. SIAM J. Sci. Comput. 17, 1167-1174 (1996)

El-Sayed, SM, Ran, ACM: On an iteration method for solving a class of nonlinear matrix equation. SIAM J. Matrix Anal. Appl. 23, 632-645 (2001)

Dehghan, M, Hajarian, M: An iterative algorithm for the reflexive solutions of the generalized coupled Sylvester matrix equations and its optimal approximation. Appl. Math. Comput. 202, 571-588 (2008)

Dehghan, M, Hajarian, M: The general coupled matrix equations over generalized bisymmetric matrices. Linear Algebra Appl. 432, 1531-1552 (2010)

Hajarian, M: Matrix form of the CGS method for solving general coupled matrix equations. Appl. Math. Lett. 34, 37-42 (2014)

Hajarian, M: Matrix iterative methods for solving the Sylvester-transpose and periodic Sylvester matrix equations. J. Franklin Inst. 350, 3328-3341 (2013)

Sarhan, AM, El-Shazly, NM, Shehata, EM: On the existence of extremal positive definite solutions of the nonlinear matrix equation \(X^{r} + \sum_{i = 1}^{m} A_{i}^{*}X^{\delta_{i}}A_{i} = I\). Math. Comput. Model. 51, 1107-1117 (2010)

Bhatia, R: Matrix Analysis, vol. 169. Springer, Berlin (1997)

Choudhary, B, Nanda, S: Functional Analysis with Applications. Wiley, New Delhi (1989)

Acknowledgements

The authors acknowledge the reviewers for reviewing the manuscript.


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

Naglaa M. El-Shazly has made substantial contributions to conception and design, acquisition of data, and analysis and interpretation of data. Abdel-Shakoor M. Sarhan has been involved in drafting the manuscript and revising it critically for important intellectual content. Both authors read and approved the final manuscript.

Appendix


The paper is concerned with the positive definite solutions of our two problems (1.1) and (1.2), that is, the solutions that lie in the set \(P(n)\) of positive definite matrices, which is the interior of the cone \(\overline{P(n)}\) of positive (semidefinite) matrices.

Definition A.1

(Positive operator)

A bounded self-adjoint linear operator T from the Hilbert space H into itself, \(T:H \to H\), is said to be positive, written \(T \ge 0\), if

$$ \langle Tx,x \rangle \ge 0 \quad \forall x \in H. $$(A.1)

- If T is self-adjoint, then \(\langle Tx,x \rangle\) is real.

- The matrices are linear bounded operators defined on the Hilbert space \(R^{n}\).

- One of our goals is to prove the existence of the extremal (maximal and minimal) positive definite solutions of the matrix equation (1.1), so we need the definition of an ordered Hilbert space: if \(T_{1}\) and \(T_{2}\) are linear bounded self-adjoint operators, then

$$ 0 \le T_{1} \le T_{2}\quad \mbox{if } T_{2} - T_{1} \in \overline{P(n)}, $$(A.2)

where \(\overline{P(n)}\) is the cone of positive semidefinite matrices.

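The classical definition of an ordered Hilbert space in functional analysis is: \(0 \le T_{1} \le T_{2}\) if \(\langle T_{2}x,x \rangle \ge \langle T_{1}x,x \rangle \ge 0\) \(\forall x \in H\), which leads to

$$ \langle (T_{2} - T_{1})x,x \rangle \ge 0 \quad \forall x \in H, \quad \mbox{that is, } T_{2} - T_{1} \ge 0, $$(A.3)

that is, (A.2) is equivalent to (A.3).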
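The equivalence of (A.2) and (A.3) suggests two ways to test \(T_{1} \le T_{2}\) numerically: through the eigenvalues of \(T_{2} - T_{1}\), or through the quadratic form \(\langle (T_{2} - T_{1})x,x \rangle\). The Python/NumPy sketch below (hypothetical helper names and example matrices) compares the two. Sampling finitely many vectors x can only refute (A.3), whereas the eigenvalue test decides (A.2) up to rounding.

```python
import numpy as np

def leq_by_eigenvalues(T1, T2, tol=1e-12):
    """(A.2): T1 <= T2 iff T2 - T1 is positive semidefinite."""
    D = T2 - T1
    D = (D + D.conj().T) / 2
    return np.linalg.eigvalsh(D).min() >= -tol

def leq_by_quadratic_forms(T1, T2, xs, tol=1e-12):
    """(A.3) restricted to the sample vectors xs: <(T2 - T1)x, x> >= 0."""
    D = T2 - T1
    return all(np.vdot(x, D @ x).real >= -tol for x in xs)

rng = np.random.default_rng(0)
xs = np.vstack([np.eye(2), rng.standard_normal((20, 2))])  # basis vectors + random samples

T1 = np.array([[2.0, 1.0], [1.0, 2.0]])
T2 = T1 + np.diag([1.0, 3.0])  # T2 - T1 is positive definite
T3 = T1 - np.diag([1.0, 0.5])  # T3 - T1 is negative definite

print(leq_by_eigenvalues(T1, T2), leq_by_quadratic_forms(T1, T2, xs))  # True True
print(leq_by_eigenvalues(T1, T3), leq_by_quadratic_forms(T1, T3, xs))  # False False
```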

Definition (A.3) gives an important result: the ordering of bounded self-adjoint linear operators on \(\overline{P(n)}\) is in one-to-one correspondence with the ordering of the associated positive quadratic forms (that is, the order of nonnegative real numbers), which is total (since all its elements are comparable). So the elements of the cone of positive matrices \(\overline{P(n)}\) have at most one maximal and one minimal element. In Theorem 3.2, we determined the interval \(D_{1} = [\beta I,(I - \sum_{i = 1}^{m} A_{i}^{*}A_{i})^{\frac{1}{r}} ]\) that contains all positive definite solutions of Equation (1.1), and in Theorem 3.3, we proved the existence of maximal and minimal solutions in \(D_{1}\).

To compute the maximal solution, we constructed a decreasing sequence of positive definite matrices (which are not solutions of Equation (1.1)) and proved that it converges to a positive definite limit matrix, which is the maximal solution \(X_{L}\).

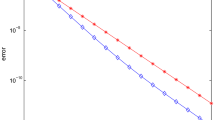

Hence, as \(\alpha > 1\) increases, the algorithm needs more iterations, as shown in Table 1. For example, since \(5I > 2I\), the algorithm needs more iterations at \(\alpha = 5\) than at \(\alpha = 3\). The same analysis holds for the minimal solution \(X_{S}\); see Figure 1.

Figure 1: The effect of increasing α on the number of iterations of Algorithm 4.1.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sarhan, AS.M., El-Shazly, N.M. Investigation of the existence and uniqueness of extremal and positive definite solutions of nonlinear matrix equations. J Inequal Appl 2016, 143 (2016). https://doi.org/10.1186/s13660-016-1083-3
