Abstract

Background

Effective communication among interdisciplinary healthcare teams is essential for quality healthcare, especially in nursing homes (NHs). Care aides provide most direct care in NHs, yet are rarely included in formal communications about resident care (e.g., change of shift reports, family conferences). Audit and feedback is a potentially effective improvement intervention. This study compares the effect of simple and two higher intensity levels of feedback based on goal-setting theory on improving formal staff communication in NHs.

Methods

This pragmatic three-arm parallel cluster-randomized controlled trial included NHs participating in TREC (translating research in elder care) across the Canadian provinces of Alberta and British Columbia. Facilities with at least one care unit with 10 or more care aide responses on the TREC baseline survey were eligible. At baseline, 4641 care aides and 1693 nurses cared for 8766 residents in 67 eligible NHs. NHs were randomly allocated to a simple (control) group (22 homes, 60 care units) or one of two higher intensity feedback intervention groups (based on goal-setting theory): basic assisted feedback (22 homes, 69 care units) and enhanced assisted feedback (23 homes, 72 care units). Our primary outcome was the amount of formal communication about resident care that involved care aides, measured by the Alberta Context Tool and presented as adjusted mean differences [95% confidence interval] between study arms at 12-month follow-up.

Results

Baseline and follow-up data were available for 20 homes (57 care units, 751 care aides, 2428 residents) in the control group, 19 homes (61 care units, 836 care aides, 2387 residents) in the basic group, and 14 homes (45 care units, 615 care aides, 1584 residents) in the enhanced group. Compared to simple feedback, care aide involvement in formal communications at follow-up was 0.17 points higher in both the basic ([0.03; 0.32], p = 0.021) and enhanced groups ([0.01; 0.33], p = 0.035). We found no difference in this outcome between the two higher intensity groups.

Conclusions

Theoretically informed feedback was superior to simple feedback in improving care aides’ involvement in formal communications about resident care. This underlines that prior estimates for efficacy of audit and feedback may be constrained by the type of feedback intervention tested.

Trial registration

ClinicalTrials.gov (NCT02695836), registered on March 1, 2016

Background

Effective communication in interdisciplinary healthcare teams is key to quality and safety in healthcare. In 2001, the US Institute of Medicine identified a lack of interdisciplinary communication and collaboration as a major reason for system-wide quality and safety issues [1]. Communication issues are the root cause of one in five sentinel events in healthcare [2], were responsible for a third of all malpractice claims in the USA in 2009–2013, and resulted in more than 1700 deaths and $1.7 billion in avoidable costs [3]. International studies demonstrate that improved communication in interdisciplinary healthcare teams improves patients’ depressive symptoms [4], reduces risks of postoperative complications and mortality [5], and improves assessment and patient management practices in oncology settings [6]. A recent study in acute care settings showed that enhancing communication between healthcare providers, patients, and their families using formal interactions significantly reduced 7-day readmission rates [7]. In contrast to informal communications (e.g., spontaneous discussions on the hallway), formal communications are planned and scheduled meetings to discuss and make decisions about care. In nursing homes, a systematic review found that better formal team communication improved resident outcomes including responsive behaviors, falls, use of antipsychotics, depressive symptoms, appropriateness of medications, restraint use, nutrition, and pain [8]. However, specific interventions, roles of team members, and implementation processes were often poorly described and evaluated [8]. A significant knowledge gap exists on how to effectively improve team communication, which is a prerequisite for improved patient outcomes [9] and team quality improvement success.

The quality of care in nursing homes is an international source of concern, but increasing regulation, inspection, and research to improve quality have had limited success [10, 11]. Nursing homes are a vital component of the health and social care system, providing 24-h care to people with complex care needs who are unable to live in their own homes. Residents are commonly frail, older, have substantial disability, and up to 70% have a diagnosis of dementia [12,13,14] (which may be underestimated by more than 10% [15]). With an aging population and policy shifts that support people living in their own homes as long as possible, the needs of the nursing home population have become increasingly complex [16]. In addition, 60–80% of the nursing home workforce are care aides (also called care assistants, personal support workers, or nursing assistants) [17]. This largely unregulated workforce has little formal training and low levels of education and wages, but provides most of the direct care to these complex residents [17]. Care aides have knowledge that no other care provider group has, because they are in protracted, close contact with residents and have an intimate awareness of their care needs and preferences. Their knowledge is key to improving the quality of care and life for frail, vulnerable older nursing home residents [18]. However, research in nursing homes suggests that information exchange between care aides and regulated staff is top-down and that care aides are rarely involved in decisions about resident care [19].

Audit and feedback has been identified as an effective intervention to improve care team performance [11, 20, 21]. It involves assessing recipients’ performance and providing them with a summary of their performance over a specified period of time [20, 22]. However, audit and feedback often achieves only marginal gains [20], because studies often (a) are not based on robust theory [23], (b) compare audit and feedback to no intervention, rather than comparing different approaches to audit and feedback [22], and (c) focus on education alone [24], rather than including concrete tools and strategies for achieving improvement [25]. Our study responds to calls to optimize audit and feedback and maximize its effects by addressing these issues [23]. Furthermore, limited evidence is available on audit and feedback in nursing homes, especially on its effectiveness in improving the inclusion of care aides in formal communications about resident care [26].

TREC (translating research in elder care) is a longitudinal program of applied health services research that since 2007 has collected comprehensive data on nursing home residents, care staff, care units, and facilities [27]. TREC’s mission is to improve the quality of care and quality of life for frail, older nursing home residents, and quality of worklife for their paid caregivers. Feeding back research data to care teams has always been one of TREC’s integral activities [28,29,30,31]. To improve formal communication in interdisciplinary care teams and integrate care aides into these formal communications, we further developed our feedback approach and included two higher intensity feedback interventions based on goal-setting theory and compared them to our simple feedback intervention [32], which in this case was our team’s usual feedback approach. Here, we report the effectiveness of a large definitive cluster-randomized controlled trial (INFORM, improving nursing home care through feedback on perfoRMance data) in increasing care aide involvement in formal team communications and decision-making about resident care. Secondary outcomes included care aides’ perception of (a) their work environment (evaluation, social capital, slack time), (b) use of research, (c) quality of worklife (psychological empowerment, job satisfaction), and two indicators of quality of resident care (percent of residents with worsening responsive behaviors and percent of residents with worsening pain). Consistent with MRC guidelines [33] and suggestions by other audit and feedback [23] and quality improvement experts [34], we simultaneously conducted a fidelity study to deepen understanding of the effectiveness results presented here [submitted to Impl Sci as a companion paper to this paper; add reference upon acceptance].

Methods

Study design

In a pragmatic three-arm parallel cluster-randomized controlled trial with assessment at baseline and at 12-month follow-up, we compared a simple feedback approach with two higher intensity feedback approaches: basic assisted feedback and enhanced assisted feedback. In contrast to explanatory trials, which aim to assess whether an intervention works under well-defined and highly controlled conditions, pragmatic trials aim to assess whether an intervention is effective under “real-life conditions” that are more complex and less controllable and predictable [35]. Although the primary study outcome focused on the involvement of care aides in formal team communications about resident care, the intervention was directed to care unit managerial teams: care managers, the director of care, and persons who assist them (e.g., clinical educators). We targeted managers because they have the power and ability to change the organizational structures and processes required to facilitate care aide involvement in these formal communications. Nursing homes are made up of one or more care units, the organizational subunits where residents receive care from care teams. Quality of care can vary substantially between care units within a nursing home. Norton et al. [36] demonstrated that improvement strategies targeted at the care unit level are more powerful than those at the facility level. We performed cluster randomization (i.e., all units within a nursing home randomized into the same study arm) to prevent contamination (i.e., spread of the intervention to non-intervention units). Our study intervention was designed to help care teams increase care aide involvement in formal team communications about resident care, to improve quality of communication, worklife for care staff, and resident care.

INFORM was registered (ClinicalTrials.gov Identifier: NCT02695836) and the trial protocol published [26]. We followed CONSORT reporting guidelines for cluster-randomized trials [37].

Hypotheses

- Basic and enhanced assisted feedback on care aide involvement in formal communications about resident care to managerial teams will increase (a) care aide involvement in formal team communications, (b) quality of resident care, and (c) care aide quality of worklife more strongly than simple feedback.

- Enhanced assisted feedback on care aide involvement in formal communications about resident care to managerial teams will increase (a) care aide involvement in formal team communications, (b) quality of resident care, and (c) care aide quality of worklife more strongly than basic assisted feedback.

Setting

Nursing homes participating in TREC (translating research in elder care, a longitudinal program of applied health and care research) took part in this study [27]. At INFORM inception, TREC included 75 urban nursing homes with 9613 residents across the Western Canadian provinces of Alberta and British Columbia, randomly selected from the overall urban nursing home population and stratified by health region, ownership type, and size. TREC facilities participate in a longitudinal observational study that generates a comprehensive dataset of residents, care staff, care unit, and facility outcomes. We used data from our September 2014–May 2015 wave of primary data collection to assess baseline outcomes, then carried out our INFORM intervention in the subset of TREC facilities participating in INFORM, and then used our January 2017–December 2017 wave of primary data collection to assess follow-up outcomes.

Participants

Table 1 lists the inclusion and exclusion criteria for facilities and care units. Only nursing homes that participated in the TREC observational study were included because our trial outcomes were available for these facilities. Only facilities with at least one care unit with 10 or more care aide responses on the TREC baseline survey were eligible, for stable, valid, and reliable aggregation of study outcomes at the unit level [38]. We excluded facilities if we were unable to assign surveys to their care units, which made unit-level analyses impossible in those sites. We only included care units with an identifiable care manager or leader. At baseline, 4641 care aides and 1693 nurses cared for a total of 8766 residents in 67 eligible nursing homes.

Randomization and masking

To avoid contamination, we randomized at the facility level. All care unit managerial teams within a facility received the same feedback intervention. Using stratified permuted block randomization, an independent person not involved in this study assigned eligible nursing homes to one of three study arms. Study arm allocation was determined by assigning computer-generated random numbers to facilities. Randomization was stratified by health region (Edmonton or Calgary Health Zones in Alberta, Fraser, or Interior Health Regions in British Columbia) to account for regional policy differences that might influence structures, processes, or outcomes in participating facilities.
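As an illustration only, the stratified permuted block procedure described above could be sketched as follows. This is not the study's actual randomization code: the block size (equal to the number of arms), the seed, and the facility identifiers are assumptions introduced for the example, since the text does not report them.

```python
import random

ARMS = ("simple", "basic", "enhanced")


def stratified_permuted_blocks(facilities_by_stratum, arms=ARMS, seed=0):
    """Assign facilities to arms using permuted blocks within each stratum.

    facilities_by_stratum maps a stratum (e.g., a health region) to a list
    of facility IDs. Block size equals the number of arms, which is an
    assumption made for this sketch.
    """
    rng = random.Random(seed)
    allocation = {}
    for stratum in sorted(facilities_by_stratum):
        facilities = list(facilities_by_stratum[stratum])
        rng.shuffle(facilities)  # random order of entry into the blocks
        for start in range(0, len(facilities), len(arms)):
            block = list(arms)
            rng.shuffle(block)  # permute the arm order within this block
            for facility, arm in zip(facilities[start:start + len(arms)], block):
                allocation[facility] = arm
    return allocation


# Hypothetical strata and facility IDs, for illustration only.
example = stratified_permuted_blocks(
    {"Region A": ["a1", "a2", "a3", "a4", "a5", "a6"], "Region B": ["b1", "b2", "b3"]},
    seed=1,
)
```

Because every complete block contains each arm exactly once, arm sizes stay balanced within each stratum whenever the stratum size is a multiple of the number of arms.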

A regional project coordinator in each health region obtained additional written informed consent from facilities randomized to the basic and enhanced assisted feedback arms. These facilities were offered additional feedback—coordinators explained to managers that they would receive specific extra feedback as part of the intervention—but we blinded managers to the fact that there were additional study arms. Facilities in different study arms received different recruitment materials (information sheets and informed consents) and attended different types of workshops. Coordinators organized intervention workshops, invited managerial teams to workshops, and disseminated workshop materials to teams. Coordinators could not be blinded to study arm allocation in their region, but we blinded each coordinator to how facilities were allocated in all other regions. Coordinators were requested not to reveal to anyone how their facilities were allocated. To inform a coordinator about study arm allocation of facilities in their region, we used a secure online platform (https://www.igloosoftware.com/) that could only be accessed by the coordinator, the person who carried out randomization, and the system administrator.

People who delivered the intervention and monitored intervention fidelity were (a) a facilitator and a study investigator who attended every workshop and (b) additional investigators, trainees, decision-makers, and study staff (varied by region and time of intervention). Workshop contents, activities, duration, materials, and format differed between basic and enhanced assisted feedback groups (described below). The simple feedback group (our control or usual care arm) received only tailored feedback reports, delivered 2-3 months after the baseline data collection. We consider simple feedback “usual care” because all TREC homes receive this kind of feedback after each wave of data collection [28,29,30,31], regardless of whether they participate in an intervention study or any other TREC activity. To deliver the correct intervention to each study group and to monitor the correct fidelity criteria, persons involved in these activities could not be blinded to study arm allocation. However, they were requested not to talk about facilities by name or share information that could identify a facility or its allocation status. None of these persons took part in data analyses. Before workshops, each facility agreed not to share workshop tools with other managers or facilities during the study.

Data analyses were carried out by analysts not involved in intervention delivery or data collection. These analysts worked with de-identified data sources (surveys, interview, and focus group transcripts) and data sets. A research assistant not involved in INFORM assigned a unique random ID to each participant, care unit, and facility in all data sources before processing, cleaning, and analysis. Random IDs were generated by the same person who randomized facilities. Only that person, the research assistant, and the TREC managing director had access to the de-identification list, and they were all required to keep this information confidential.

Interventions

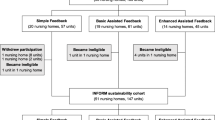

The study had three arms (Fig. 1). The audit component of this study is reflected by the baseline data collections for care units in all three study arms. We used baseline data on a care unit’s involvement of care aides in formal communications about resident care and fed these data back to care units. The control or usual care group received simple feedback only: a feedback report to each facility with information on resident and care staff outcomes. This feedback report was discussed in 4-h face-to-face dissemination workshops. In addition to simple feedback, the two higher intensity groups received a more focused feedback report on care aides’ involvement in formal communications about resident care. Results were discussed in a 3-h in-person goal-setting workshop. Basic assisted feedback participants received two additional 1.5-h web-based support workshops. Enhanced assisted feedback participants received two additional 3-h in-person support workshops and had access to on-demand email and phone support. For the enhanced assisted feedback arm, longer workshops and in-person contact of participants with peers and the study team were intended to provide a more intense learning experience and thereby improve intervention effectiveness.

Goal-setting theory

Our trial protocol contains a detailed description of the theoretical foundations of our intervention [26]. Briefly, audit and feedback interventions based on goal-setting theory are more effective. Participants who set goals and define strategies to achieve them will identify with the goals, perceive them as achievable, and work to achieve them [32]. Both short-term and long-term goals are required. Short-term goals break down the task and enhance self-efficacy and task persistence. Long-term goals keep people accountable. Both performance and learning goals are also required. Learning goals indicate how to make improvements. Performance goals indicate what to achieve [32]. Written action plans hold individuals accountable and are reminders of performance goals [20, 22]. Specifically, managers and their teams attending the basic and enhanced intervention workshops were supported to specify various performance goals related to the improvements targeted on their care units (such as involving care aides in formal team communications about resident care in 80% (vs 10% at baseline) of the formal team meetings on the care unit within the next 3 months). Further, managers specified learning goals related to their own learning (such as improving a manager’s ability to specify measurable performance goals and to routinely measure success in achieving performance goals) and to their care team’s learning (increase the ability and comfort of care aides to speak up in formal team communications about resident care, and increase the team’s acceptance of care aide opinions as valuable contribution).

Dissemination workshops

Sites in all three study arms received simple feedback in November 2015. Reports focused on a core set of actionable measures including care aides’ perception of their involvement in formal communications about resident care (formal interactions), data-based feedback on their care unit’s performance (evaluation), connections within their teams (social capital), and slack time. Managerial teams of each facility were then invited to a half-day face-to-face dissemination workshop where feedback reports were discussed. Workshops were led by an experienced professional facilitator, hired by the study team. After a senior researcher of the research team presented reports, managerial teams participated in small group discussions to interpret results, identify improvement areas, and think about improvement strategies. Workshops did not set specific goals but gave simple instructions on interpreting reports and planning improvement strategies.

Goal-setting workshops

In June 2016, care unit managers and their teams in the basic and enhanced assisted feedback groups participated in a face-to-face goal-setting workshop. We held separate workshops for basic and enhanced assisted feedback groups in each of the four health regions. Each unit received a package of goal-setting workshop materials 1 week before the workshop: a feedback report on the care unit’s data (formal interactions, evaluation, social capital, slack time) and a goal-setting workbook summarizing details of the INFORM study, defining key concepts, and outlining the goal-setting approach. Managers were encouraged to bring care staff (care aides, nurses, allied health providers) to the workshops. Workshops used small group activities such as reflecting on data, establishing a series of specific and measurable learning and performance goals, and identifying measures and tools to track goal achievement. Participants generated an action plan and received instructions on how to track goal progress and how to report back at the support workshops.

Support workshops

In November 2016, basic assisted feedback participants attended a 1.5-h virtual support workshop via a web-based conference platform. Enhanced assisted feedback participants attended a 3-h face-to-face support workshop. Managerial teams reported their progress in implementing goals and described their implementation strategies. They discussed challenges encountered and received support from the study team, regional decision-makers, and their peers in addressing these challenges. A second support workshop with the same content was held in April 2017.

On-demand email and phone support

Enhanced assisted feedback teams also had access to on-demand email and phone support from the facilitator throughout the intervention period. The facilitator addressed questions and helped resolve challenges as managerial teams worked toward goal achievement.

Process evaluation

We comprehensively evaluated intervention fidelity, implementation, and participant experiences using a mixed-methods approach. Methods and results are reported in a separate publication [submitted to Impl Sci as a companion paper to this paper; add reference upon acceptance].

Outcomes

Our study outcomes were assessed using data collected in two waves of TREC’s longitudinal observational study [27]—September 2014–May 2015 to assess baseline outcomes before the intervention and January 2017–December 2017 to assess follow-up outcomes after the intervention (Fig. 1). These included care staff use of best practices, quality of worklife (e.g., psychological empowerment, job satisfaction), organizational context, and characteristics of nursing homes and care units (Additional file 1)—all of which were measured using the validated TREC survey [27]. Resident data were obtained from the Resident Assessment Instrument—Minimum Data Set 2.0 (RAI-MDS 2.0) [39]. Nursing homes in health regions participating in TREC are required to assess residents on admission and at least quarterly thereafter and participating TREC homes submit their resident data to TREC on a quarterly basis. Data are used for national reporting.

Primary outcome

Our primary outcome was care aides’ self-reported involvement in formal team communications about resident care (formal interactions). Formal interactions is one of 10 concepts measured by the Alberta Context Tool, a comprehensively validated tool to assess modifiable features of care unit work environments (details in Additional file 1) [40]. The Alberta Context Tool is embedded within the TREC care aide survey [27], a suite of validated survey instruments completed by computer-assisted structured personal interview. The formal interactions rating consists of four items (rated from 1 = never to 5 = almost always) asking care aides how often, in the last typical month, they participated in the following: team meetings about residents, family conferences, change-of-shift reports, and continuing education (conferences, courses) outside their nursing home. In our psychometric studies [40], we found that the most valid way to generate an overall score is count-based: recoding each item (1 and 2 to 0; 3 to 0.5; 4 and 5 to 1) and summing recoded values (possible range, 0–4).
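The count-based scoring rule described above can be sketched in a few lines. The function name and the handling of invalid input are assumptions made for this example; in particular, the text does not say how missing items were treated, so the sketch simply requires all four ratings.

```python
# Recoding rule from the text: 1 and 2 -> 0; 3 -> 0.5; 4 and 5 -> 1.
RECODE = {1: 0.0, 2: 0.0, 3: 0.5, 4: 1.0, 5: 1.0}


def formal_interactions_score(ratings):
    """Return the count-based score (range 0-4) for four item ratings.

    ratings holds the four item responses on the 1-5 scale
    (1 = never, 5 = almost always). Requiring all four items is an
    assumption of this sketch, not a rule stated in the paper.
    """
    if len(ratings) != 4:
        raise ValueError("expected exactly four item ratings")
    for rating in ratings:
        if rating not in RECODE:
            raise ValueError(f"rating outside the 1-5 scale: {rating!r}")
    return sum(RECODE[rating] for rating in ratings)


# Ratings of 5, 3, 2, and 4 recode to 1 + 0.5 + 0 + 1.
score = formal_interactions_score([5, 3, 2, 4])  # 2.5
```

On this scale, the reported between-arm difference of 0.17 points corresponds to roughly one sixth of one item moving from "rarely or never" to "frequently or almost always".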

Secondary outcomes

Organizational

Using the Alberta Context Tool completed by care aides, we assessed evaluation (feedback of routine data to the unit), social capital, and slack time. Using the TREC unit survey completed by managers, we assessed care unit managerial teams’ responses to major near misses and managers’ organizational citizenship behavior. Using the TREC facility survey completed by Directors of Care, we assessed processes and practices in quality improvement activities. These instruments are described elsewhere [27] and in Additional file 1.

Staff

Using the TREC care aide survey, we assessed care aides’ use of best practice, psychological empowerment, job satisfaction, and individual staff attributes (Additional file 1).

Residents

Using the RAI-MDS 2.0 data, we assessed two quality indicators [41]: residents with worsening pain and residents with declining behavioral symptoms (i.e., worsening responsive behaviors) (Additional file 1). We chose these outcomes because they are two of the most practice-sensitive quality indicators (i.e., modifiable by care staff) [41] and because both responsive behaviors and pain are considered among the most burdensome resident outcomes [42].

Statistical analyses

Sample size calculation

Full details of the sample size calculation are in our trial protocol [26]. Assumed effect sizes of the formal interactions score were β1 = 0.2 in the simple feedback group, β2 = 0.4 in the basic assisted feedback group, and β3 = 0.6 in the enhanced assisted feedback group. An adapted simulation-based approach [43] suggested that 12 facilities per study arm (with on average three units per facility) were required to detect the assumed effects with a statistical power of 0.90. To allow for attrition and effects smaller than the assumed ones, we invited all eligible units in the 67 eligible facilities in Alberta and British Columbia to participate in this study.

Statistical approach

We used SAS® 9.4 for all statistical analyses. Using descriptive statistics, we compared baseline characteristics of nursing homes, care units, participants in our first intervention workshop, and care aides working on participating units between study arms. To assess the effects of interventions on our primary outcome (formal interactions) and secondary staff outcomes, we ran mixed effects regression models with random intercepts for care unit and facility levels, and a random effect for care aides responding to our survey at both baseline and follow-up. We adjusted the model for the three stratification variables of the TREC facility sample (region, owner-operator model, and facility size); baseline differences of the dependent variables; care aides’ sex, age, and first language (English yes/no); and care unit staffing (total care hours per resident day and percentage of total hours per resident day provided by care aides). Intra-cluster correlation (ICC) of formal interaction scores within facilities and care units was calculated by dividing the cluster-level variance by the total variance (the sum of the residual variance, the unit-level random intercept variance, and the facility-level random intercept variance). For secondary resident outcomes (percent of residents on a care unit whose behavior worsened and percent of residents on a care unit whose pain worsened), we ran mixed effects regression models with a facility-level random intercept. These models were adjusted for facility characteristics (region, owner-operator model, and facility size); baseline differences of the dependent variables; and care unit staffing. We carried out an intention-to-treat analysis. A care unit was considered adherent to the intervention if at least one representative of this unit attended the goal-setting workshop and at least one of the two support workshops.
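The ICC definition given above (cluster-level variance divided by total variance) can be illustrated with a short sketch. The analyses themselves were run in SAS; the Python function below and the variance values passed to it are hypothetical and serve only to show the arithmetic.

```python
def intracluster_correlations(unit_var, facility_var, residual_var):
    """Return unit- and facility-level ICCs from estimated variance components.

    Each ICC is the cluster-level variance divided by the total variance,
    where the total is the sum of the residual, unit-level, and
    facility-level variance components.
    """
    if min(unit_var, facility_var, residual_var) < 0:
        raise ValueError("variance components must be non-negative")
    total = unit_var + facility_var + residual_var
    if total == 0:
        raise ValueError("total variance must be positive")
    return {"unit": unit_var / total, "facility": facility_var / total}


# Hypothetical variance components, not estimates from the trial.
iccs = intracluster_correlations(unit_var=0.04, facility_var=0.05, residual_var=0.91)
```

A unit-level variance component estimated as zero yields a unit-level ICC of 0, which is the situation reported for the follow-up data.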

Public and patient involvement

TREC is a program of integrated knowledge translation research [44]. Throughout all projects, TREC partners with researchers, trainees, policymakers, owner-operators, care staff, people in need of care, and their family/friend caregivers in all phases of the research process. In INFORM, these stakeholders were co-applicants on the research grant that funded the study, were team members of working groups and committees that carried out the study, and were involved in discussions on study results and their interpretation.

Results

Between November 1 and 15, 2015, we assessed 75 nursing homes with 277 care units for eligibility. We randomized each of the 67 eligible nursing homes (201 eligible care units) into one of the three study arms (Fig. 2). Two nursing homes with 5 eligible care units declined participation in the basic assisted feedback arm and seven nursing homes with 21 eligible care units declined participation in the enhanced assisted feedback arm. Two nursing homes (three eligible units) in each of the simple and basic assisted feedback arms and two nursing homes (six eligible care units) in the enhanced assisted feedback arm were not included in final analyses because no follow-up data were available. One nursing home (six eligible care units) in the basic assisted feedback arm did not participate in any intervention workshops, but we included this facility in intention-to-treat analyses.

Table 2 presents baseline characteristics of included facilities, care units, and participants attending the first intervention workshop (goal-setting workshop), and of care aides by study arm. Facilities, care units, and care aides had similar characteristics at baseline in all study arms. Numbers of participants attending the first intervention workshop (predominantly managers) were also comparable. Care aides were predominantly female and older than 40 years. They had worked for 10–11 years on average as a care aide and had 5–6 years of experience on their current unit.

Table 3 presents the number of intervention workshops that each facility and care unit attended.

Table 4 presents the study outcome scores at baseline and follow-up.

Primary outcome

There was a statistically significant increase in care aides’ attendance at formal team communications about resident care in both the basic and enhanced assisted feedback arms compared with the control arm, as measured by the multiply adjusted model (Table 5). However, this outcome was not different between the basic and enhanced assisted feedback arms. Unit-level ICCs (95% CIs) of this outcome were 0.0437 (0.0263, 0.0860) at baseline and 0 at follow-up. Facility-level ICCs (95% CIs) were 0.0510 (0.0276, 0.1287) at baseline and 0.0453 (0.0274, 0.0920) at follow-up.

Secondary outcomes

Care aides’ social capital scores were higher in the basic assisted feedback arm than in the simple feedback (control) arm. Care aide job satisfaction in the enhanced assisted feedback arm was lower than in the basic assisted feedback arm. There were no differences between arms in other secondary staff outcomes. Enhanced assisted feedback care units had higher rates of residents whose responsive behavior worsened over the study than simple and basic assisted feedback care units. We found no differences between study arms at follow-up in the proportion of residents whose pain worsened.

Characteristics of included and excluded facilities did not differ, nor did characteristics of care units or care aides in included versus excluded facilities. Per-protocol analyses (including only care units that sent a representative to all workshops [analysis 1] or care units that attended at least 2 workshops [analysis 2]) did not alter the results and conclusions of our intention-to-treat analysis.
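The two per-protocol definitions can be illustrated as simple filters on workshop attendance. This is a minimal sketch under stated assumptions: the total of three workshops (one goal-setting workshop plus two follow-up support workshops) is inferred from the intervention description, and the unit records, identifiers, and field names are hypothetical.

```python
# Assumption: three workshops in total (one goal-setting, two support).
TOTAL_WORKSHOPS = 3

# Hypothetical care-unit attendance records, for illustration only.
units = [
    {"unit_id": "A1", "workshops_attended": 3},
    {"unit_id": "A2", "workshops_attended": 2},
    {"unit_id": "B1", "workshops_attended": 1},
    {"unit_id": "B2", "workshops_attended": 0},
]


def per_protocol(unit_records, min_workshops):
    """Return IDs of units that attended at least min_workshops workshops."""
    return [
        u["unit_id"]
        for u in unit_records
        if u["workshops_attended"] >= min_workshops
    ]


analysis_1 = per_protocol(units, TOTAL_WORKSHOPS)    # attended all workshops
analysis_2 = per_protocol(units, 2)                  # attended at least 2
intention_to_treat = [u["unit_id"] for u in units]   # everyone as randomized

print(analysis_1, analysis_2, intention_to_treat)
```

The intention-to-treat set keeps every randomized unit regardless of attendance, whereas each per-protocol set is a subset of it.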

Discussion

With the goal of improving communication among team members in nursing homes and working with care unit managerial teams in nursing homes, we compared simple (usual care) feedback with one workshop and no goal setting or action plans to two higher intensity feedback processes that included goal setting, generation of action plans, and two follow-up workshops. We found that the higher intensity feedback processes were more effective in increasing care aide involvement in formal communications about resident care (our primary outcome). However, we found no difference in our primary outcome between the two higher intensity intervention groups, even though one group received 3-h in-person support workshops and the other group received 1.5-h web-based support workshops.

Our study first and foremost demonstrates an effective and practical strategy for improving communication among team members in nursing homes, specifically, for enabling and supporting reciprocal communication between unregulated and regulated care staff. Such communication strategies are essential to improving quality and safety in nursing homes, and our study offers an achievable pathway to do so. Improved communication is not only an essential quality improvement strategy; it also offers benefits to the workforce, specifically improved quality of worklife, here assessed by the construct of social capital. The unregulated workforce in nursing homes is woefully understudied [45,46,47], and a rising inability of supply to meet demand, as well as high levels of concern about working conditions, are urgent international issues [48,49,50]. Information exchange between care aides and regulated staff is top-down, and care aides are rarely involved in decisions about resident care [19, 51, 52]. As essential but highly stressed components of the health and social care systems, nursing homes should be a major focus of implementation and improvement strategies designed to enhance not only quality of care but, importantly, quality of life for their highly vulnerable population of older adults, the majority with dementia.

In this study, we designed and tested feedback interventions from robust theory on goal setting and compared different interventions head to head. In line with systematic reviews [11, 20, 21], we found that audit and feedback are effective in changing the behaviors of care staff. The most recent Cochrane review on audit and feedback [20] found a weighted median absolute improvement in desired practices of 1.3% (interquartile range; IQR 1.3–28.9%). A systematic review on audit and feedback in dementia care settings reported a non-weighted median absolute improvement of 17% (IQR 0.5–50%) [21]. Our absolute improvement in the formal interactions score for our basic and enhanced assisted feedback groups was 6.4%, comparable to the two reviews and in keeping with the idea that incremental effects of audit and feedback modifications are likely to be small, but important [23].

Our findings contribute knowledge about how to optimize audit and feedback by demonstrating that combining audit and feedback with goal setting and an action plan is more effective than simply feeding back data. However, longer workshops (3-h in the enhanced vs 1.5-h in the basic assisted feedback group) and in-person versus web-based delivery did not further improve the effectiveness of our intervention. This means that significant improvements are possible with a less intense (and therefore less costly) intervention. The web-based workshops in the basic feedback intervention may have been more attractive to care teams (shorter and requiring no travel), offsetting the additional learning effect of the more intense enhanced feedback intervention. This may also explain why more facilities declined to participate in the enhanced than in the basic feedback group during recruitment: 3-h in-person workshops may have seemed too demanding. Finally, how well a facility enacted the intervention was more important for the success of the intervention than the intensity (i.e., basic versus enhanced arm) of the intervention (reported in a companion paper submitted to Implementation Science).

Our basic feedback intervention (compared to simple feedback) improved connections among care team members (social capital). However, job satisfaction was lower with enhanced than with basic feedback and our enhanced feedback intervention (compared to simple and basic feedback) was associated with a higher rate of residents with dementia whose behavior worsened. It is possible that our most intense intervention raised care team members’ awareness of behavioral changes, which may have increased their reporting of these outcomes. However, the baseline rates of worsening behavior were already higher in the enhanced group than in the other study groups, and in each group, rates remained relatively stable pre and post-intervention (Table 4). Other secondary study outcomes did not differ between study arms at follow-up. It is possible that, as Wensing and Grol [9] recently highlighted, for organizational and system change to affect resident outcomes, more time is required than is typically available in a research project, and there are many steps (and thus increased time required) from implementation to patient outcome. Studies typically do not assess intermediate steps from implementation to outcome nor are they typically funded for sufficiently long periods to capture changes in patient outcomes. Nonetheless, our study responds to their call to carefully select implementation strategies that fit the problems to be solved, base the intervention on robust theory and evidence, systematically involve stakeholders, and use rigorous study designs and outcome measures that will respond to the intervention.

Strengths and weaknesses of the study

A strength of our study is that it was systematically based on goal-setting theory [32] and robust evidence on the effect of audit and feedback [11, 20,21,22] on formal interdisciplinary team communication [4,5,6, 8]. Our study comprehensively reports all required details of the audit and feedback interventions tested [53] and follows recommendations to conduct concurrent process evaluations with complex behavioral trials [33] such as INFORM. A fidelity sub-study (currently under review as a companion paper to this manuscript) can aid interpretation of the effectiveness results presented here. We used well-validated measures and robust data collection methods in the INFORM trial [27]. Our cluster-randomized design minimized the risk of contamination between study arms. Robust statistical methods accounting for clustering, baseline differences, and repeated measures maximized the validity of our statistical conclusions.

However, cluster-randomization can introduce a risk of selection bias. Seven nursing homes with 26 care units declined participation, but those numbers are small compared with the whole, and characteristics of those non-participating homes did not differ from the characteristics of participating homes. Given the nature of our intervention, blinding was difficult. However, we blinded facility managerial teams and regional study coordinators to the extent possible to minimize this risk of bias. Another risk of bias could arise from including only facilities already participating in TREC. TREC facilities may be more engaged than other facilities, and interactions with TREC study teams during TREC data collections and feedback activities may make these facilities better equipped to improve. Whether we see this effect in nursing homes that have not been exposed to a research program like TREC needs to be examined in future studies. We also need to assess the long-term sustainability of these effects and whether these improvements in communication translate into improved resident quality of care and quality of life. Therefore, we will assess study outcomes again after our next wave of data collection (September 2019–March 2020).

Conclusions

Our study findings demonstrate that communications in care homes can be improved by providing feedback, guided by goal-setting theory. They also demonstrate that this theory-based intervention is more effective than simple feedback in improving formal care staff communications about resident care. Results highlight the importance of concrete strategies and support mechanisms for front-line managers and teams wishing to change practice. However, they also suggest that highly resource-intensive feedback interventions may be unnecessary. Instead, moderate-intensity interventions that take advantage of more economical web-based delivery and communication technologies may be optimal. Consistent with recent suggestions for health systems and researchers to collaborate in “implementation laboratories” to learn how to advance the science of feedback and optimize its use in practice using larger sequential trials [23], we are revising the INFORM intervention for use by health authorities as a routine quality improvement strategy. We plan to evaluate this revised INFORM package and its rollout across all health regions in a Canadian province. Lastly, in terms of generalizability, the feedback and goal-setting model that INFORM is based on has the potential to improve interprofessional communication and other practices designed to improve nursing home care inside and outside Canada.

Availability of data and materials

The data used for this article are housed in the secure and confidential Health Research Data Repository (HRDR) in the Faculty of Nursing at the University of Alberta (https://www.ualberta.ca/nursing/research/supports-and-services/hrdr), in accordance with the health privacy legislation of participating TREC jurisdictions. These health privacy legislations and the ethics approvals covering TREC data do not allow public sharing or removal of completely disaggregated data (resident-level records) from the HRDR, even if de-identified. The data were provided under specific data sharing agreements only for approved use by TREC within the HRDR. Where necessary, access to the HRDR to review the original source data may be granted to those who meet pre-specified criteria for confidential access, available at request from the TREC data unit manager (https://trecresearch.ca/about/people), with the consent of the original data providers and the required privacy and ethical review bodies. Statistical and anonymous aggregate data, the full dataset creation plan, and underlying analytic code associated with this paper are available from the authors upon request, understanding that the programs may rely on coding templates or macros that are unique to TREC.

Abbreviations

- INFORM: Improving Nursing Home Care Through Feedback On PerfoRMance Data

- TREC: Translating Research in Elder Care

References

Institute of Medicine (IOM). Crossing the quality chasm: a new health system for the 21st century. Washington, DC: The National Academies Press; 2001.

Sentinel event statistics released through second quarter 2015. https://www.jointcommission.org/issues/article.aspx?Article=Cn%2B7R3OBGIW5yk5DuJxM92q4pYTPG4F4i%2Bz4172eoIY%3D. Accessed 7 Sept 2020.

CRICO Strategies. Malpractice risks in communication failures: 2015 annual benchmarking report. Boston: CRICO Strategies; 2015.

Panagioti M, Bower P, Kontopantelis E, Lovell K, Gilbody S, Waheed W, Dickens C, Archer J, Simon G, Ell K, et al. Association between chronic physical conditions and the effectiveness of collaborative care for depression: an individual participant data meta-analysis. JAMA Psychiatry. 2016;73(9):978–89.

Sacks GD, Shannon EM, Dawes AJ, Rollo JC, Nguyen DK, Russell MM, Ko CY, Maggard-Gibbons MA. Teamwork, communication and safety climate: a systematic review of interventions to improve surgical culture. BMJ Qual Saf. 2015;24(7):458–67.

Pillay B, Wootten AC, Crowe H, Corcoran N, Tran B, Bowden P, Crowe J, Costello AJ. The impact of multidisciplinary team meetings on patient assessment, management and outcomes in oncology settings: a systematic review of the literature. Cancer Treat Rev. 2016;42:56–72.

Sunkara PR, Islam T, Bose A, Rosenthal GE, Chevli P, Jogu H, Tk LA, Huang CC, Chaudhary D, Beekman D, et al. Impact of structured interdisciplinary bedside rounding on patient outcomes at a large academic health centre. BMJ Qual Saf. 2020;29(7):569–75.

Nazir A, Unroe K, Tegeler M, Khan B, Azar J, Boustani M. Systematic review of interdisciplinary interventions in nursing homes. J Am Med Dir Assoc. 2013;14(7):471–8.

Wensing M, Grol R. Knowledge translation in health: how implementation science could contribute more. BMC Med. 2019;17(1):88.

Mills WL, Pimentel CB, Palmer JA, Snow AL, Wewiorski NJ, Allen RS, Hartmann CW. Applying a theory-driven framework to guide quality improvement efforts in nursing homes: the LOCK model. Gerontologist. 2018;58(3):598–605.

Low LF, Fletcher J, Goodenough B, Jeon YH, Etherton-Beer C, MacAndrew M, Beattie E. A systematic review of interventions to change staff care practices in order to improve resident outcomes in nursing homes. PLoS One. 2015;10(11):e0140711.

Onder G, Carpenter I, Finne-Soveri H, Gindin J, Frijters D, Henrard JC, Nikolaus T, Topinkova E, Tosato M, Liperoti R, et al. Assessment of nursing home residents in Europe: the Services and Health for Elderly in Long TERm care (SHELTER) study. BMC Health Serv Res. 2012;12:5.

Centers for Medicare & Medicaid Services (CMS). Nursing home data compendium 2015 edition. Baltimore: CMS; 2015.

Dementia in long-term care. https://www.cihi.ca/en/dementia-in-canada/dementia-across-the-health-system/dementia-in-long-term-care. Accessed 7 Sept 2020.

Bartfay E, Bartfay WJ, Gorey KM. Prevalence and correlates of potentially undetected dementia among residents of institutional care facilities in Ontario, Canada, 2009-2011. Int J Geriatr Psychiatry. 2013;28(10):1086–94.

Hoben M, Chamberlain SA, Gruneir A, Knopp-Sihota JA, Sutherland JM, Poss JW, Doupe MB, Bergstrom V, Norton PG, Schalm C, et al. Nursing home length of stay in three Canadian health regions: temporal trends, jurisdictional differences and associated factors. J Am Med Dir Assoc. 2019;20(9):1121–8.

Hewko SJ, Cooper SL, Huynh H, Spiwek TL, Carleton HL, Reid S, Cummings GG. Invisible no more: a scoping review of the health care aide workforce literature. BMC Nurs. 2015;14:38.

Morley JE. Certified nursing assistants: a key to resident quality of life. J Am Med Dir Assoc. 2014;15(9):610–2.

Kolanowski A, Van Haitsma K, Penrod J, Hill N, Yevchak A. “Wish we would have known that!” Communication breakdown impedes person-centered care. Gerontologist. 2015;55(Suppl_1):S50–60.

Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, O'Brien MA, Johansen M, Grimshaw J, Oxman AD. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;2012(6):Art. No.: CD000259.

Sykes MJ, McAnuff J, Kolehmainen N. When is audit and feedback effective in dementia care? A systematic review. Int J Nurs Stud. 2018;79:27–35.

Ivers NM, Sales A, Colquhoun H, Michie S, Foy R, Francis JJ, Grimshaw JM. No more ‘business as usual’ with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci. 2014;9(1):14.

Grimshaw JM, Ivers N, Linklater S, Foy R, Francis JJ, Gude WT, Hysong SJ, Audit and Feedback MetaLab. Reinvigorating stagnant science: implementation laboratories and a meta-laboratory to efficiently advance the science of audit and feedback. BMJ Qual Saf. 2019;28(5):416–23.

Soong C, Shojania KG. Education as a low-value improvement intervention: often necessary but rarely sufficient. BMJ Qual Saf. 2020;29:353–7.

Roos-Blom MJ, Gude WT, de Jonge E, Spijkstra JJ, van der Veer SN, Peek N, Dongelmans DA, de Keizer NF. Impact of audit and feedback with action implementation toolbox on improving ICU pain management: cluster-randomised controlled trial. BMJ Qual Saf. 2019;28(12):1007–15.

Hoben M, Norton PG, Ginsburg LR, Anderson RA, Cummings GG, Lanham HJ, Squires JE, Taylor D, Wagg AS, Estabrooks CA. Improving nursing home care through feedback on PerfoRMance data (INFORM): protocol for a cluster-randomized trial. Trials. 2017;18(1):9.

Estabrooks CA, Squires JE, Cummings GG, Teare GF, Norton PG. Study protocol for the translating research in elder care (TREC): building context – an organizational monitoring program in long-term care project (project one). Implement Sci. 2009;4(1):52.

Estabrooks CA, Teare GF, Norton PG. Should we feed back research results in the midst of a study? Implement Sci. 2012;7:87.

Bostrom AM, Cranley LA, Hutchinson AM, Cummings GG, Norton PG, Estabrooks CA. Nursing home administrators’ perspectives on a study feedback report: a cross sectional survey. Implement Sci. 2012;7:88.

Cranley LA, Birdsell JM, Norton PG, Morgan DG, Estabrooks CA. Insights into the impact and use of research results in a residential long-term care facility: a case study. Implement Sci. 2012;7:90.

Hutchinson AM, Batra-Garga N, Cranley L, Bostrom AM, Cummings G, Norton P, Estabrooks CA. Feedback reporting of survey data to healthcare aides. Implement Sci. 2012;7:89.

Latham GP, Locke EA. Self-regulation through goal-setting. Organ Behav Hum Decis Process. 1991;50(2):212–47.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, Moore L, O’Cathain A, Tinati T, Wight D, et al. Process evaluation of complex interventions: medical research council guidance. BMJ. 2015;350:h1258.

Dixon-Woods M, Bosk CL, Aveling EL, Goeschel CA, Pronovost PJ. Explaining Michigan: developing an ex post theory of a quality improvement program. Milbank Q. 2011;89(2):167–205.

Patsopoulos NA. A pragmatic view on pragmatic trials. Dialogues Clin Neurosci. 2011;13(2):217–24.

Norton PG, Murray M, Doupe MB, Cummings GG, Poss JW, Squires JE, Teare GF, Estabrooks CA. Facility versus unit level reporting of quality indicators in nursing homes when performance monitoring is the goal. BMJ Open. 2014;4(2):e004488.

Campbell MK, Piaggio G, Elbourne DR, Altman DG, CONSORT Group. Consort 2010 statement: extension to cluster randomised trials. BMJ. 2012;345:e5661.

Translating Research in Elder Care (TREC): Minimum number of care aide responses needed per care unit to obtain stable, valid and reliable unit-aggregated ACT scores, (Internal report, available upon request) edn. Edmonton, AB: TREC; 2010.

Canadian Institute for Health Information. Data quality documentation, continuing care reporting system, 2013-2014. Ottawa: CIHI; 2015.

Estabrooks CA, Squires JE, Hayduk LA, Cummings GG, Norton PG. Advancing the argument for validity of the Alberta Context Tool with healthcare aides in residential long-term care. BMC Med Res Methodol. 2011;11(1):107.

Estabrooks CA, Knopp-Sihota JA, Norton PG. Practice sensitive quality indicators in RAI-MDS 2.0 nursing home data. BMC Res Notes. 2013;6(1):460.

Hoben M, Chamberlain SA, Knopp-Sihota JA, Poss JW, Thompson GN, Estabrooks CA. Impact of symptoms and care practices on nursing home residents at the end of life: a rating by front-line care providers. J Am Med Dir Assoc. 2016;17(2):155–61.

Arnold BF, Hogan DR, Colford JM Jr, Hubbard AE. Simulation methods to estimate design power: an overview for applied research. BMC Med Res Methodol. 2011;11:94.

Bowen SJ, Graham ID. From knowledge translation to engaged scholarship: promoting research relevance and utilization. Arch Phys Med Rehabil. 2013;94(1 Suppl):S3–8.

Estabrooks CA, Squires JE, Carleton HL, Cummings GG, Norton PG. Who is looking after mom and dad? Unregulated workers in Canadian long-term care homes. Can J Aging. 2015;34(1):47–59.

Chamberlain SA, Hoben M, Squires JE, Cummings GG, Norton P, Estabrooks CA. Who is (still) looking after mom and dad? Few improvements in care aides’ quality-of-work life. Can J Aging. 2019;38(1):35–50.

Osterman P. Who will care for us? Long-term care and the long-term workforce. New York: Sage; 2017.

Muir T. Measuring social protection for long-term care (OECD Health Working Papers, No. 93). Paris: OECD Publishing; 2017.

MacDonald B-J, Wolfson M, Hirdes JP. The future co$t of long-term care in Canada. Toronto: National Institute on Aging; 2019.

Sinha S, Dunning J, Wong I, Nicin M, Nauth S. Enabling the future provision of long-term care in Canada. Toronto: National Institute on Aging; 2019.

Caspar S, Ratner PA, Phinney A, MacKinnon K. The influence of organizational systems on information exchange in long-term care facilities: an institutional ethnography. Qual Health Res. 2016;26(7):951–65.

Janes N, Sidani S, Cott C, Rappolt S. Figuring it out in the moment: a theory of unregulated care providers’ knowledge utilization in dementia care settings. Worldviews Evid Based Nurs. 2008;5(1):13–24.

Colquhoun H, Michie S, Sales A, Ivers N, Grimshaw JM, Carroll K, Chalifoux M, Eva K, Brehaut J. Reporting and design elements of audit and feedback interventions: a secondary review. BMJ Qual Saf. 2017;26(1):54–60.

Acknowledgements

Tara Penner edited the manuscript and was funded by Matthias Hoben. We would like to thank the facilities, administrators, and their care teams who participated in this study. We acknowledge the contributions of Sube Banerjee and William Ghali who gave valuable input on the manuscript. We would also like to thank Don McLeod for facilitating the intervention workshops and contributing to the development of the intervention materials; the TREC regional project coordinators (Fiona MacKenzie, Kirstie McDermott, Julie Mellville, Michelle Smith) for recruiting facilities and participants, and keeping them engaged; Charlotte Berendonk for administrative support; the TREC data unit manager Joseph Akinlawon and the TREC analyst Moses Kim for carrying out the statistical analyses; and Malcolm Doupe for carrying out the randomization.

Funding

This study was funded by a Canadian Institutes of Health Research (CIHR) Transitional Operating Grant (#341532). During the study, Matthias Hoben held a Postdoctoral Fellowship from Alberta Innovates (formerly Alberta Innovates–Health Solutions; 2014–2017; File No: 201300543, CA #: 3723). He also received top-up funding from TREC and Estabrooks’ Tier 1 Canada Research Chair, and his last year of postdoctoral training (2017–2018) was funded by a TREC Postdoctoral Fellowship. Matthias Hoben currently holds a University of Alberta Faculty of Nursing Professorship in Continuing Care Policy Research and a University of Alberta Faculty of Nursing Establishment Grant, both of which were partially used to fund work related to this study. Carole Estabrooks holds a Tier 1 Canada Research Chair in Knowledge Translation.

Author information

Contributions

MH co-led the study with CAE, LRG, and PGN; MH attended all study workshops, oversaw the analysts who carried out the statistical analyses, drafted all figures and tables, and wrote the first draft of the manuscript. MH in collaboration with LRG, AE, PGN, CAE, RAA, HJL, GGC, JMHL, JES, and ASW developed the statistical analysis plan and interpreted the analyses. LRG in collaboration with MH, AE, PGN, CAE, EAA, RAA, AMB, LAC, HJL, and LEW developed the workshop materials and evaluations and oversaw the intervention implementation and data collections. LRG, AE, MH, EAA, AMB, LAC, HJL, and LEW carried out the process evaluation data collections. All authors revised the paper critically for intellectual content and approved the final version.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the Research Ethics Boards of the University of Alberta (Pro00059741), Covenant Health (1758), University of British Columbia (H15-03344), Fraser Health Authority (2016-026), and Interior Health Authority (2015-16-082-H). Operational approval was obtained from all included facilities as required. All TREC facilities have agreed and signed written informed consent to participate in the TREC observational study and to receive simple feedback (our control group, details below). Facilities randomized to the two higher intensity study arms were asked for additional written informed consent. Managerial teams and care team members were asked for verbal informed consent before participating in any primary data collection (evaluation surveys, focus groups, interviews).

Consent for publication

Not applicable

Competing interests

JMHL reports personal fees received from the Canadian Medical Association Journal, outside the submitted work; no financial relationships with any organizations that might have an interest in the submitted work in the previous 3 years; and no other relationships or activities that could appear to have influenced the submitted work. All other authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1. Study measures.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Hoben, M., Ginsburg, L.R., Easterbrook, A. et al. Comparing effects of two higher intensity feedback interventions with simple feedback on improving staff communication in nursing homes—the INFORM cluster-randomized controlled trial. Implementation Sci 15, 75 (2020). https://doi.org/10.1186/s13012-020-01038-3

DOI: https://doi.org/10.1186/s13012-020-01038-3