Abstract

Background

Little work investigates the effect of behavioral health system efforts to increase use of evidence-based practices or how organizational characteristics moderate the effect of these efforts. The objective of this study was to investigate clinician practice change in a system encouraging implementation of evidence-based practices over 5 years and how organizational characteristics moderate this effect. We hypothesized that evidence-based techniques would increase over time, whereas use of non-evidence-based techniques would remain static.

Method

Using a repeated cross-sectional design, we surveyed clinicians from 20 behavioral health outpatient clinics serving youth in Philadelphia’s public behavioral health system three times between 2013 and 2017 (n = 340; overall response rate = 60%). All organizations and clinicians were exposed to system-level support provided by the Evidence-based Practice and Innovation Center from 2013 to 2017. Additionally, approximately half of the clinicians participated in city-funded evidence-based practice training initiatives. The main outcome was clinician self-reported use of cognitive-behavioral and psychodynamic techniques, measured by the Therapy Procedures Checklist-Family Revised.

Results

Clinicians were 80% female and averaged 37.52 years of age (SD = 11.40); there were no significant differences in clinician characteristics across waves (all ps > .05). Controlling for organizational and clinician covariates, average use of CBT techniques increased by 6% from wave 1 (M = 3.18) to wave 3 (M = 3.37, p = .021, d = .29), compared to no change in psychodynamic techniques (p = .570). Each evidence-based practice training initiative in which clinicians participated predicted a 3% increase in CBT use (p = .019) but no change in psychodynamic technique use (p = .709). In organizations with more proficient cultures at baseline, clinicians exhibited greater increases in CBT use compared to organizations with less proficient cultures (8% increase vs. 2% decrease, p = .048).

Conclusions

System implementation of evidence-based practices is associated with modest changes in clinician practice; these effects are moderated by organizational characteristics. Findings identify preliminary targets to improve implementation.

Background

The last two decades have shown increasing emphasis on the implementation of evidence-based practices (EBPs) in publicly funded behavioral health systems nationally [1, 2]. Policy-makers in public behavioral health systems (e.g., City of Philadelphia, Los Angeles County, Washington, Hawaii, New York) have committed to using EBPs [1, 3,4,5,6] to improve the quality of psychosocial services and client outcomes [7,8,9] using various approaches including tying reimbursement to EBP use (i.e., financial incentives), building EBPs into contracts, and policy initiatives [2, 10, 11]. Although many public behavioral health systems have invested in implementing EBPs, very few of these efforts have been systematically and rigorously evaluated, thus limiting the ability to understand the effect of these efforts on clinician and organizational behavior and subsequent client reach [2]. Thought leaders in implementation science have recommended learning from natural experiments enacted by systems via observational research designs in order to produce generalizable knowledge to advance implementation science [12]. Thus, rigorous evaluation of system-wide EBP implementation can produce valuable information to achieve this objective.

Most of what is known about system-wide EBP implementation is descriptive in nature (i.e., whether systems are implementing EBPs and how they support EBPs). One set of studies takes a broad perspective and reports on national trends across system EBP implementations. For example, a study reporting on a set of national surveys conducted with state mental health directors found increases in states offering EBPs for youth from 2001 to 2012 [2]. Another survey study found that the majority of state mental health directors endorsed using financial incentives to promote EBP use in their system [11]. Another set of studies takes a more granular perspective and reports on specific strategies used within one system such as the City of Philadelphia [10], New York [11], Hawaii, and Illinois [13]. For example, Powell and colleagues describe how the City of Philadelphia Department of Behavioral Health and Intellectual Disability Services (DBHIDS), which oversees behavioral health services for over 600,000 Medicaid-enrolled consumers, began implementing EBPs in 2007 via “EBP initiatives” [10, 14] and through the creation of the Evidence-based Practice and Innovation Center (EPIC), which included policy, fiscal, and operational changes to encourage EBP implementation [10].

Although these perspectives have enriched the field’s understanding of whether systems are implementing EBPs and how EBPs are supported, there is a gap in the literature with regard to how system-wide efforts to implement EBPs are related to clinician practice over time. Only a few studies have attempted to evaluate the effect of system-wide EBP implementation. One national study within the Veterans Health Administration, a large system supporting EBP implementation, found that medical record documentation suggested that only 20% of veterans with post-traumatic stress disorder (PTSD) (total n = 255,968) received at least one session of EBP for PTSD. Another study using administrative claims data found that there was an increased rate of EBP claims over time within the context of a fiscally mandated implementation effort in Los Angeles County [15]. By leveraging existing data sources, these studies provide preliminary insights into how system-wide efforts to implement EBPs may be related to patterns in clinician and organizational behavior, but additional work is needed to understand the effect of such efforts.

Another focus of research inquiry includes investigating how system implementation of EBPs interacts with characteristics of the organizations nested within the system, such as organizational leadership, culture, and climate. This line of research can both elucidate potential mutable targets of implementation strategies in future implementation efforts and advance the science of implementation by providing empirical evidence for implementation science frameworks that posit the criticality of these constructs [16]. Leading determinant frameworks [17] such as the Consolidated Framework for Implementation Research [18] and the Exploration, Preparation, Implementation, and Sustainment framework [16] suggest the importance of the relationship between implementation and organizational characteristics, such as leadership (i.e., extent to which leaders are capable of guiding, directing, and supporting implementation) [19], culture (i.e., shared norms, behavioral expectations, and values of an organization) [20, 21], and climate (i.e., shared perceptions regarding the impact of the work environment on clinician well-being). A growing body of literature explores the relationship between these constructs and implementation (e.g., [19, 22,23,24,25,26,27]). However, findings have been somewhat mixed [19] and few studies have prospectively investigated the relationship between these factors and implementation—which would provide the most compelling evidence for potential mutable targets of implementation strategies, as well as build causal theory, a key imperative in implementation science [19, 28, 29].

The current study builds on previous work by investigating how a centralized system effort to support implementation in the City of Philadelphia is related to clinicians’ EBP use and how organizational characteristics, specifically implementation leadership, implementation climate, and organizational culture, might moderate these effects [16, 23, 30]. We measured clinicians’ self-reported use of psychotherapy techniques for youth in outpatient clinics over 5 years within the context of a system-wide effort to implement EBPs. We measured cognitive-behavioral therapy (CBT) techniques, which have evidence for their effectiveness for youth psychiatric disorders [31] and comprised the majority of EBPs implemented by DBHIDS, and psychodynamic techniques, which are frequently used [32] but have less evidence for youth psychiatric disorders [31, 33,34,35,36,37]. We hypothesized that (a) clinician CBT use would increase over time, whereas psychodynamic technique use would remain static; (b) clinician participation in system-sponsored EBP initiatives would increase CBT use over time; and (c) baseline organizational variables would predict variability in clinician CBT use over time [14].

Methods

Design

We used a repeated cross-sectional design [38] across 5 years of system-wide EBP implementation in which we were interested in change in technique use at the population level and the moderating effect of organization-level variables on those estimates. In a repeated cross-sectional design, there may be zero overlap in the samples between periods and yet valid inferences of change in population values can be made on the basis of repeated cross-sections. Additionally, the effect of organizational moderators can be examined as long as the same organizations are in the sample. Overlap in the cross-sectional samples is beneficial because it reduces variance of the parameter estimates; however, high overlap between samples is not necessary for valid inferences about changes in population trends over time.

The design incorporated two sampling stages. First, we purposively selected organizations delivering youth outpatient services in Philadelphia’s public behavioral health system. Second, cross-sections of clinicians working within sampled organizations at each wave were recruited. At each wave (2013, 2015, 2017), we attempted to recruit all clinicians within enrolled organizations. This allowed us to examine changes in the population of interest over time without assuming that individuals were the same at each wave, given high clinician turnover rates and the real-world context.

Procedure

With the permission of organizational leaders, researchers scheduled group meetings with all clinicians working within the organizations that delivered youth outpatient services, during which the research team presented the study, obtained written informed consent, and collected measures onsite. The only inclusion criterion was that clinicians deliver behavioral health services to youth (clients under age 18) via the outpatient program. We did not exclude any clinicians meeting this criterion and included clinicians-in-training (e.g., interns); the majority of clinicians had their master’s degree. Clinicians received $50 each wave; clinicians participating in all three waves received an additional $50. Procedures were approved by the University of Pennsylvania and City of Philadelphia IRBs.

Setting

Prior to 2013, DBHIDS supported EBPs via separate “EBP initiatives” that included training and expert consultation for enrolled clinicians lasting approximately 1 year, as recommended by treatment developers [10]. Between 2007 and 2019, through these initiatives, DBHIDS supported the implementation of a variety of cognitive behavioral therapy-focused practices addressing a range of psychiatric disorders including cognitive therapy [39], prolonged exposure [40], trauma-focused CBT [10, 41], dialectical behavior therapy [42], and parent-child interaction therapy [43] (all currently ongoing; see Fig. 1). Initially, DBHIDS largely guided organization selection for initiative participation; more recently, organizations have applied for participation through a competitive process. Organizations decided which of their clinicians would participate [44].

As system leaders identified similar barriers across single EBP initiatives, the DBHIDS Commissioner (ACE) convened a task force of academics and policy-makers in 2012 to apply best practices from implementation science to support EBP implementation. This resulted in EPIC, an entity intended to provide a centralized infrastructure for EBP administration. EPIC was formally launched in 2013 and oversees all EBP implementation efforts in the Philadelphia public behavioral health system. EPIC is led by a Director and currently supported by two staff who provide technical assistance to organizations around EBP implementation through meetings, telephone calls, and regular events. In addition to supporting the EBP initiatives, which predated the creation of this centralized infrastructure, EPIC aligned policy, fiscal, and operational approaches by developing systematic processes to contract for EBP delivery, hosting events to publicize EBP delivery, designating providers as EBP agencies, and creating enhanced rates for the delivery of some EBPs. For more details on the approach taken by EPIC and DBHIDS, please see [10]. All organizations and clinicians were exposed to system-level support provided by EPIC from 2013 to 2017. Data collection in 2013 occurred prior to the official launch of EPIC.

Participants

Organizations

We used a purposive sampling approach for organizational recruitment. Philadelphia has a single payer system (Community Behavioral Health; CBH) for public behavioral health services; thus, we obtained a list from the payer of all organizations that had submitted a claim in 2011–2012. There were over 100 organizations delivering outpatient services to youth. Our intention was to use purposive sampling to generate a representative sample of the organizations that served the largest number of youth in the system. We selected the first 29 organizations as our population of interest because together they serve approximately 80% of youth receiving publicly funded behavioral health care. Over the course of the 5 years, we enrolled 21 out of the 29 organizations (73%; some organizations had more than one site, resulting in a total of 27 sites). Sixteen organizations and 20 sites were enrolled in the study at baseline and participated in at least one additional wave and were included in our analysis (k = 20). The organizations included in this study served approximately 42% of youth receiving outpatient services through the public mental health system (total) and represented approximately 52% of youth receiving outpatient services in the purposive sample of organizations that we targeted for recruitment. Organizations were geographically spread across Philadelphia and ranged in size with regard to youth served annually (M = 772.27, range = 337–2275 youth).

Clinicians

The three cross-sectional samples included 112 clinicians at wave 1 (46% organizational response rate), 164 clinicians at wave 2 (65% organizational response rate), and 151 clinicians at wave 3 (69% organizational response rate; total N = 340). Each cross-sectional sample included new clinicians and previously participating clinicians; 259 clinicians (76%) provided data once, 65 clinicians (19%) provided data twice, and 16 clinicians (5%) provided data three times [45, 46].

Measures

Dependent variable

Use of CBT and psychodynamic techniques was measured using the Therapy Procedures Checklist-Family Revised (TPC-FR) [47], a 62-item self-reported checklist of clinician practice. At each wave, clinicians reported on the specific psychotherapy techniques they used with a current, representative client. All items are rated from 1 (rarely) to 5 (most of the time). The factor structure has been confirmed, and the instrument is sensitive to within-therapist changes in strategy use [47, 48]. Only the CBT (33 items; α = .93) [49] and psychodynamic subscales (16 items; α = .85) were used.

Independent variable

Formal participation in system-sponsored EBP initiatives was reported by clinicians in response to a series of questions asking if they “formally participated as a trainee through DBHIDS” in the five EBP initiatives (yes/no). To ensure accuracy, we confirmed with each participant that they understood that the questions referred to the 1-year training and ongoing consultation provided by DBHIDS for each initiative. In analyses, this variable was included as a time-varying continuous value indexing the cumulative number of initiatives the clinician had participated in up to each wave (range = 0 to 5). As a control variable, we also created a dichotomous variable indexing whether the clinician had participated in a system-sponsored EBP initiative prior to study entry (yes/no).

Organizational moderators of interest

Organizational measures were constructed by aggregating (i.e., averaging) individual responses within the organization after confirming high levels of within-organization agreement using average within-group correlation (awg, rwg) statistics [50]. These statistics indicate the extent to which clinicians within an organization exhibit absolute agreement with each other on their ratings of organizational characteristics. Values range from 0 to 1, where higher values indicate greater reduction in error variance, and hence higher level of agreement. Typically, a cutoff of .6 or higher is recommended to provide validity evidence for aggregating individual scores to the organization level [51, 52].
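As a minimal sketch of the agreement check described above, the single-item rwg index compares the observed variance of ratings within an organization with the variance expected if raters had responded uniformly at random across the A response options, which is (A² − 1)/12. The function names and example ratings below are hypothetical and are not taken from the study; the scales used here are multi-item, for which a multi-item extension of the index exists and may be more appropriate.

```python
from statistics import mean, variance


def rwg_single_item(ratings, n_options):
    """Single-item within-group agreement index (r_wg).

    r_wg = 1 - (observed variance / variance of a uniform null distribution),
    where the uniform null variance for A response options is (A^2 - 1) / 12.
    """
    expected_var = (n_options ** 2 - 1) / 12
    observed_var = variance(ratings)  # sample variance (n - 1 denominator)
    # Values below 0 (more disagreement than random responding) are
    # commonly reset to 0 so that the index stays in the 0-1 range.
    return max(0.0, 1 - observed_var / expected_var)


def aggregate_if_agreement(ratings, n_options, cutoff=0.6):
    """Return the organization-level mean only when agreement meets the cutoff."""
    agreement = rwg_single_item(ratings, n_options)
    score = mean(ratings) if agreement >= cutoff else None
    return agreement, score


# Hypothetical ratings from five clinicians in one organization (1-5 scale).
example_ratings = [4, 4, 5, 4, 3]
agreement, org_score = aggregate_if_agreement(example_ratings, n_options=5)
print(f"r_wg = {agreement:.2f}, organization score = {org_score}")
```

In this made-up example the observed variance is 0.5 against a uniform null variance of 2.0, so the index is 0.75, which clears the .6 cutoff and would permit averaging to the organization level.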

Proficient organizational culture was measured using the 15-item proficiency scale (α = .92) of the Organizational Social Context measure [53]. Proficient organizational culture has been theoretically linked to EBP implementation [54]; items refer to shared norms and expectations that clinicians place client well-being first, are competent, and have up-to-date knowledge. Proficiency scale scores demonstrate excellent reliability, criterion-related validity, and predictive validity [23, 53,54,55,56]. Items are scored on a 1 (never) to 5 (always) scale.

Implementation leadership was measured using the Implementation Leadership Scale (ILS) [57], a 12-item scale that measures leader proactiveness (α = .92), knowledge (α = .97), supportiveness (α = .96), and perseverance (α = .95) in EBP implementation. Given high inter-correlations across ILS subscales and for parsimony, we used the total score (α = .98) only, which is supported by psychometric work [57]. Psychometrics suggest excellent internal consistency and convergent and discriminant validity [57]. Items are scored on a 0 (not at all) to 4 (very great extent) scale.

Implementation climate was measured using the Implementation Climate Scale (ICS) [58], an 18-item measure of strategic climate around EBP implementation. The six subscales on the ICS measure include organizational focus on EBP (α = .91); educational support for EBP (α = .86); recognition for using EBP (α = .86); rewards for using EBP (α = .87); selection of staff for EBP (α = .93); and selection of staff for openness (α = .95) [59]. The ICS produces subscale scores for each of these factors in addition to a total score. Given high inter-correlations across ICS subscales and for parsimony, we used the total score (α = .94) only, which is supported by psychometric work [58]. Psychometric data suggest good reliability and validity [58]. Items are scored on a 0 (not at all) to 4 (very great extent) scale.

Covariates

Covariates were included in the models on the basis of theory and prior research showing that client age [45], organizational size [60], clinician demographic characteristics (i.e., age, gender, educational level, years of clinical experience, experience in an EBP initiative prior to baseline [23]), and clinician attitudes toward EBPs (as measured by the Evidence-Based Practice Attitude Scale (EBPAS) [61]) are related to clinicians’ use of psychotherapy techniques [23, 62,63,64,65].

Data analysis plan

We used three-level mixed effects regression models with a Gaussian distribution to generate estimates of clinicians’ average technique use at baseline and over time [66]. Models were estimated via full information maximum likelihood [67] in HLM 6.08 [68] and incorporated random intercepts and a random effect for time at the clinician and organization levels to account for the nested data structure. Preliminary analyses indicated that the optimal functional form for time was a single linear trend based on model comparisons using Schwarz’s Bayesian information criterion (BIC) where lower values indicate superior fit. Differences in BIC values of 5.6 (for CBT use) and 3.4 (for psychodynamic technique use) provided positive evidence for the superiority of the linear trend model relative to quadratic and categorical parameterizations of time [69]. Table 1 presents the raw means of each dependent variable by wave. Preliminary analyses also confirmed there was significant variance in clinicians’ use of CBT techniques (ICC (1) = .17, p < .001) and psychodynamic techniques (ICC (1) = .09, p < .001) at the organization level. All analyses controlled for all covariates described above (i.e., client age, clinician age, gender, education, years of experience, attitudes toward EBP, participation in EBP initiative prior to study entry, and organization size) [23, 44, 45]. No data were missing at the organization level. Clinician-level covariates had < 4% missingness; results of Little’s MCAR test indicated that they were missing completely at random (χ2 = 26.10, df = 22, p = .247); we imputed these covariate values using the serial mean [66].
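One way to write out a model of the kind described above is sketched here. The notation, the subscripts, and the placement of covariates at particular levels are assumptions made for illustration and are not taken from the authors' HLM specification. Let Y_{tij} denote technique use reported at occasion t by clinician i in organization j, X_{qij} the clinician-level covariates, and Size_j organization size.

```latex
\begin{aligned}
\text{Level 1 (occasion):}\quad
  Y_{tij} &= \pi_{0ij} + \pi_{1ij}\,\mathrm{Wave}_{tij} + e_{tij},
  \qquad e_{tij} \sim N(0, \sigma^{2}) \\
\text{Level 2 (clinician):}\quad
  \pi_{0ij} &= \beta_{00j} + \sum_{q} \beta_{0q}\,X_{qij} + r_{0ij} \\
  \pi_{1ij} &= \beta_{10j} + r_{1ij} \\
\text{Level 3 (organization):}\quad
  \beta_{00j} &= \gamma_{000} + \gamma_{001}\,\mathrm{Size}_{j} + u_{00j} \\
  \beta_{10j} &= \gamma_{100} + u_{10j}
\end{aligned}
```

Under this reading, the fixed slope \gamma_{100} is the average change in technique use per wave, and the terms r_{0ij}, r_{1ij}, u_{00j}, and u_{10j} are the random intercepts and random time effects at the clinician and organization levels. A moderator test would add the organizational characteristic to both level-3 equations, with its coefficient in the equation for \beta_{10j} acting as the wave-by-moderator interaction.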

Average change in clinicians’ self-reported use of psychotherapy techniques (hypothesis 1) was tested in models with a linear main effect for wave and covariates. This estimated the overall change in clinicians’ use of psychotherapy techniques across waves. The influence of clinician participation in EBP initiatives on use of psychotherapy techniques (hypothesis 2) was tested by adding a time-varying variable which indexed the cumulative number of initiatives each clinician had participated in at each wave (0 to 5). Relationships between organizational variables of interest at baseline and subsequent trends in clinicians’ average use of therapy techniques over time (hypothesis 3) were tested by adding a main effect and interaction term for each organizational moderator. Because of high inter-correlations among organizational characteristics (mean r = .66), each organizational characteristic was tested separately. The issue of multiple comparisons is complex and contested [70, 71]. To avoid type II errors and the premature closing of important lines of inquiry, we used the Benjamini-Hochberg (B-H) procedure with a false discovery rate of .25 to evaluate the statistical significance of moderator tests for each outcome variable [72]. For each moderator test, we report the results of the B-H test of statistical significance, unadjusted and adjusted p values, and measures of effect size. Effect sizes were calculated using two metrics. First, we calculated percent change in technique use from wave 1 to wave 3. Second, we calculated a standardized mean difference in technique use from wave 1 to wave 3. After specifying each model, we examined residuals at levels 1, 2, and 3 and confirmed the tenability of underlying statistical assumptions including normality, homoscedasticity, and functional form [67].
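The B-H step-up procedure mentioned above can be sketched as follows. This is an illustrative implementation, not the authors' code: only the p value of .048 comes from this article (the proficient culture test reported in the Results), and the other two p values are hypothetical placeholders standing in for the remaining moderator tests.

```python
def benjamini_hochberg(p_values, fdr=0.25):
    """Benjamini-Hochberg step-up procedure.

    Returns (rejected, adjusted), both in the original order of p_values.
    A test is rejected if its rank is at or below the largest rank k for
    which p_(k) <= (k / m) * fdr.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p

    # Find the largest rank whose p value falls under its threshold.
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * fdr:
            max_rank = rank

    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        rejected[idx] = rank <= max_rank

    # Adjusted p values: p * m / rank, made monotone from the largest p down.
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min

    return rejected, adjusted


# .048 is reported in the Results; .30 and .60 are hypothetical placeholders.
moderator_p = [0.048, 0.30, 0.60]
rejected, adjusted = benjamini_hochberg(moderator_p, fdr=0.25)
for p, r, a in zip(moderator_p, rejected, adjusted):
    print(f"p = {p:.3f}  adjusted = {a:.3f}  significant at FDR .25: {r}")
```

With three tests and a false discovery rate of .25, the smallest p value is compared against .25/3 ≈ .083, so an unadjusted p of .048 would survive the correction in this hypothetical set.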

Results

Clinician demographics

Clinicians were 80% female (n = 271), averaged 37.52 years of age (SD = 11.40), and had 8.37 (SD = 7.19) years of experience. Sixteen percent of clinicians endorsed identifying as Hispanic/Latino. With regard to race, clinicians endorsed identifying as White (43.5%), Black (30.6%), Asian-American (5.9%), American Indian or Alaska Native (1.2%), and Other (16.5%). Racial information was missing or not reported for 2.4% of the sample. Most clinicians had a master’s degree (n = 288, 85%). The three cross-sections of clinicians did not differ on age, gender, education, years of experience, or attitudes toward EBP (all ps > .25). The sample demographics broadly match national demographics of mental health clinicians with regard to gender and ethnicity/race [73].

Clinician participation in EBP initiatives

By study conclusion, 171 (50%) clinicians had participated in one or more EBP initiatives. Of this group, 100 clinicians (29%) had participated in one, 39 (12%) participated in two, 19 (6%) participated in three, ten (3%) participated in four, and three (1%) participated in five initiatives. See Table 1 for the average number of initiatives clinicians had participated in by wave.

Trends in clinicians’ psychotherapy technique use over time

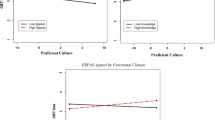

There was an increase in clinicians’ average use of CBT techniques across waves, controlling for covariates (Badj = .09, SE = .04, p = .021); specifically, average CBT use increased by .09 points per wave, resulting in a 6% increase in clinicians’ average adjusted use of CBT techniques from wave 1 (M = 3.18) to wave 3 (M = 3.37). This represents a small standardized mean increase in CBT use of d = .29. In contrast, there was no observed change in clinicians’ average adjusted use of psychodynamic techniques (Badj = .02, SE = .04, p = .570) from wave 1 (M = 3.37) to wave 3 (M = 3.41). Table 1 and Fig. 2 show the unadjusted means of CBT and psychodynamic use at each wave (Table 2).
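The two effect size metrics can be checked against the adjusted means reported above with a short calculation. This is a sketch under stated assumptions: the function names are hypothetical, and because the standard deviation used to compute d is not reported in this section, the value below is back-calculated from the reported d = .29 rather than taken from the study.

```python
def percent_change(baseline_mean, followup_mean):
    """Percent change from the baseline mean to the follow-up mean."""
    return 100.0 * (followup_mean - baseline_mean) / baseline_mean


def standardized_mean_difference(baseline_mean, followup_mean, sd):
    """Mean difference expressed in standard deviation units (d)."""
    return (followup_mean - baseline_mean) / sd


# Adjusted means reported in the text (wave 1 -> wave 3).
cbt_change = percent_change(3.18, 3.37)
psychodynamic_change = percent_change(3.37, 3.41)

# ASSUMPTION: the SD is not reported here, so it is implied from d = .29.
implied_sd = (3.37 - 3.18) / 0.29

print(f"CBT percent change: {cbt_change:.1f}%")
print(f"Psychodynamic percent change: {psychodynamic_change:.1f}%")
print(f"Implied SD for CBT use: {implied_sd:.2f}")
```

The CBT figure rounds to the reported 6% increase, while the psychodynamic means differ by roughly 1%, consistent with the non-significant trend.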

Relation of EBP initiative participation to clinicians’ psychotherapy technique use

Clinicians’ participation in EBP initiatives during the study was positively related to increased use of CBT techniques, controlling for wave and all covariates (Badj = .09, SE = .04, p = .019). For each additional EBP initiative, clinician CBT technique use increased by 3%. For clinicians who did not participate in any EBP initiatives, average adjusted CBT use increased from wave 1 (M = 3.14) to wave 3 (M = 3.28) by d = .21, a small effect. On average, clinicians in the study participated in .85 EBP initiatives by the study’s end which corresponds to an adjusted average increase in CBT use from wave 1 (M = 3.14) to wave 3 (M = 3.36) of 7% or d = .32, a small effect. For clinicians who participated in two EBP initiatives by the study’s end, average adjusted CBT use increased from wave 1 (M = 3.14) to wave 3 (M = 3.46) by 10% or d = .47, a medium effect. For the 9.6% of clinicians who participated in three or more EBP initiatives by the study’s end, average adjusted CBT use increased by d = .61 (13%) or more, a medium-to-large effect. See Fig. 3. Participation in EBP initiatives was not related to clinicians’ psychodynamic technique use (Badj = .01, SE = .04, p = .709).
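The dose-response pattern in this paragraph can be reproduced with simple arithmetic. The sketch below assumes that the adjusted wave 3 mean rises linearly by the reported coefficient (.09) for each initiative, starting from the wave 3 mean for clinicians with no initiatives (3.28). That linear reading and the names used are assumptions for illustration, although they recover the reported means and percentages to rounding.

```python
WAVE1_MEAN = 3.14                 # adjusted wave 1 mean reported in the text
WAVE3_MEAN_NO_INITIATIVES = 3.28  # adjusted wave 3 mean with zero initiatives
SLOPE_PER_INITIATIVE = 0.09       # reported coefficient for initiative count


def predicted_wave3_mean(n_initiatives):
    """Wave 3 CBT use under the assumed linear effect of initiative count."""
    return WAVE3_MEAN_NO_INITIATIVES + SLOPE_PER_INITIATIVE * n_initiatives


def percent_increase(n_initiatives):
    """Percent increase from the wave 1 mean to the predicted wave 3 mean."""
    gain = predicted_wave3_mean(n_initiatives) - WAVE1_MEAN
    return 100.0 * gain / WAVE1_MEAN


# 0.85 is the average number of initiatives reported for the sample.
for n in (0, 0.85, 2, 3):
    print(f"{n} initiatives: wave 3 mean = {predicted_wave3_mean(n):.2f}, "
          f"increase = {percent_increase(n):.0f}%")

# One additional initiative relative to the wave 1 mean.
per_initiative_percent = 100.0 * SLOPE_PER_INITIATIVE / WAVE1_MEAN
print(f"Per-initiative increase: {per_initiative_percent:.0f}%")
```

Under this assumption, .85, two, and three initiatives correspond to increases of about 7%, 10%, and 13%, and one additional initiative corresponds to about 3% of the wave 1 mean, matching the figures in the text.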

Associations among baseline organizational characteristics and trends in clinicians’ average use of psychotherapy techniques

Proficient organizational culture at baseline predicted variation in clinicians’ average CBT technique use over time (Table 3). Specifically, results from the mixed effects regression model indicated that organizations with more proficient cultures at baseline exhibited greater increases in clinicians’ average use of CBT techniques across waves (Badj = .007, SE = .003, p = .048; percent change = 8%, d = .41) compared to organizations with less proficient cultures (percent change = −2%, d = −.12). This effect remained significant after adjustment under the B-H false discovery rate procedure. None of the organizational characteristics moderated the effect of time on clinicians’ average adjusted psychodynamic technique use either before or after the B-H correction (see Table 3).

Discussion

This study represents an opportunity to learn from a system encouraging EBP implementation [10, 14] and can inform future policy and research. First, in a public system supporting EBP implementation, EBP use increased over time and clinicians who participated in system-sponsored training initiatives increased their EBP use even more. Second, proficient organizational culture modified the effect of system efforts to increase implementation, which elucidates a potential future target for implementation strategy trials [28, 74]. While prior work has identified correlational associations between determinants like proficient organizational culture and outcomes, this study advances the field by prospectively elucidating the relationship between proficient culture and change over time. Despite our enthusiasm about these findings, it is important to note that they are preliminary given study limitations (i.e., self-reported measure of practice use and lack of a comparison system).

As expected, clinician use of EBPs modestly increased over the 5 years in which the system created a centralized infrastructure to de-silo EBP implementation [10]. Although only half the clinicians in the study participated in system-sponsored EBP initiatives, there was a significant increase in clinicians’ use of EBPs system-wide during the study period. Potential explanations include that supervisors trained in these EBPs through EBP initiatives may have supported clinicians not formally trained in applying these techniques, that peer interactions may have increased clinician interest in these techniques, or that the changing system culture might reflect new organizational priorities. Clinicians participating in system-sponsored EBP training initiatives increased their use of EBPs twice as much as those not formally trained. Although these increases are promising, the effects were not large in magnitude and raise questions about clinical significance. In a large system serving over 30,000 children and families annually, an increase of 6% might have a population mental health impact, but further research is needed to understand the clinical impact of small, system-wide increases in use of EBPs. Future studies evaluating the impact of system-wide implementation must include client outcomes using hybrid effectiveness-implementation designs [75] to ensure that the end goal of implementation efforts (i.e., client reach and outcomes) is achieved and that questions related to clinical significance and cost-effectiveness can be answered.

Consistent with the literature [53, 56, 76,77,78], clinicians working in organizations with more proficient cultures at baseline exhibited greater increases in CBT use. This extends previous findings by prospectively linking proficiency culture to increased EBP use over time. Clinicians working in such organizations may be more motivated to improve their competence in up-to-date practices and have more opportunities to participate in EBP training because of leaders’ EBP prioritization. Although proficient culture is a more distal construct on the causal implementation pathway, these preliminary results suggest the importance of attending to general organizational factors in the implementation process. Future work should clarify if proficient culture is a more powerful target for implementation efforts versus training and ongoing support initiatives or if they result in a synergistic effect.

Clinicians’ self-reported non-evidence-based technique use remained stable. Given that there is little knowledge of the effect of delivering EBPs alongside potentially contraindicated approaches [23], these findings point to the importance of attending to deimplementation [79, 80]. Further, this finding provides evidence of discriminant validity and suggests that the relationships observed between initiative participation and proficient organizational culture are not due to spurious findings or common method error variance [81].

Study methodological limitations include that this study was only conducted in one system and that CBT increases observed may be a national trend; that results may be influenced by cohort effects; that we relied on self-reported clinician use of techniques [82,83,84] rather than actual clinician behavior or patient outcomes; that the response rate was only 60%; that implementation strategies were not experimentally manipulated; and that these results may not generalize to smaller organizations and/or single-clinician organizations given that we focused our sampling on organizations with larger programs. Study analytical limitations include that we conducted multiple tests of moderators given our exploratory aims, which increased the likelihood of a type I error; that the study may have been underpowered to detect effects of organizational moderators given the sample of 20 organizations at level 3; and that results included wide confidence intervals on all effects, with bounds that nearly include zero.

Conclusions

Despite substantial efforts to implement EBPs over the past 20 years, downstream effects have rarely been systematically measured [85]. This study provides insight into clinician self-reported change in use of EBPs for youth with psychiatric disorders and suggests that system efforts to implement EBPs can result in modest clinician behavior change. It also suggests the potential importance of attending to organizational factors when targeting implementation strategies. It is commendable that systems are prioritizing EBPs and applying principles from implementation science to engender clinician behavior change. If the findings from this study are replicated in other settings, future research should develop implementation strategies that move beyond training and consultation to target and align system characteristics, such as policies and funding, and organizational characteristics, such as proficient cultures, so that these strategies are optimally effective [86].

Change history

09 September 2019

Abbreviations

- CBT: Cognitive-behavioral therapy
- DBHIDS: Philadelphia Department of Behavioral Health and Intellectual Disability Services
- EBP: Evidence-based practice
- EBPAS: Evidence-Based Practice Attitude Scale
- EPIC: Philadelphia Evidence Based Practice and Innovation Center
- ICS: Implementation Climate Scale
- OSC: Organizational social context
- TPC-FR: Therapy Procedures Checklist-Family Revised

References

Hoagwood KE, Olin SS, Horwitz S, McKay M, Cleek A, Gleacher A, et al. Scaling up evidence-based practices for children and families in New York state: toward evidence-based policies on implementation for state mental health systems. J Clin Child Adolesc Psychol. 2014;43(2):145–57. https://doi.org/10.1080/15374416.2013.869749.

Bruns EJ, Kerns SE, Pullmann MD, Hensley SW, Lutterman T, Hoagwood KE. Research, data, and evidence-based treatment use in state behavioral health systems, 2001–2012. Psychiatr Serv. 2015;67(5):496–503. https://doi.org/10.1176/appi.ps.201500014.

Rubin RM, Hurford MO, Hadley T, Matlin SL, Weaver S, Evans AC. Synchronizing watches: the challenge of aligning implementation science and public systems. Admin Pol Ment Health. 2016;43(6):1023–8. https://doi.org/10.1007/s10488-016-0759-9.

Regan J, Lau AS, Barnett M, Stadnick N, Hamilton A, Pesanti K, et al. Agency responses to a system-driven implementation of multiple evidence-based practices in children’s mental health services. BMC Health Serv Res. 2017;17(1):671. https://doi.org/10.1186/s12913-017-2613-5.

Walker SC, Lyon AR, Aos S, Trupin EW. The consistencies and vagaries of the Washington state inventory of evidence-based practice: the definition of “evidence-based” in a policy context. Admin Pol Ment Health. 2017;44(1):42–54. https://doi.org/10.1007/s10488-015-0652-y.

Mark DD, Rene’e WL, White JP, Bransford D, Johnson KG, Song VL. Hawaii's statewide evidence-based practice program. Nurs Clin. 2014;49(3):275–90. https://doi.org/10.1016/j.cnur.2014.05.002.

Cristofalo MA. Implementation of health and mental health evidence-based practices in safety net settings. Soc Work Health Care. 2013;52(8):728–40. https://doi.org/10.1080/00981389.2013.813003.

Hemmelgarn AL, Glisson C, James LR. Organizational culture and climate: implications for services and interventions research. Clin Psychol. 2006;13(1):73–89. https://doi.org/10.1111/j.1468-2850.2006.00008.x.

Okamura KH, Wolk B, Courtney L, Kang-Yi CD, Stewart R, Rubin RM, et al. The price per prospective consumer of providing therapist training and consultation in seven evidence-based treatments within a large public behavioral health system: an example cost-analysis metric. Front Public Health. 2018;5:356. https://doi.org/10.3389/fpubh.2017.00356.

Powell BJ, Beidas RS, Rubin RM, Stewart RE, Wolk CB, Matlin SL, et al. Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Admin Pol Ment Health. 2016;43(6):909–26. https://doi.org/10.1007/s10488-016-0733-6.

Stewart RE, Marcus SC, Hadley TR, Hepburn BM, Mandell DS. State adoption of incentives to promote evidence-based practices in behavioral health systems. Psychiatr Serv. 2018;69(6):685–8. https://doi.org/10.1176/appi.ps.201700508.

Brown CH, Curran G, Palinkas LA, Aarons GA, Wells KB, Jones L, et al. An overview of research and evaluation designs for dissemination and implementation. Annu Rev Public Health. 2017;38:1–22. https://doi.org/10.1146/annurev-publhealth-031816-044215.

Starin AC, Atkins MS, Wehrmann KC, Mehta T, Hesson-McInnis MS, Marinez-Lora A, et al. Moving science into state child and adolescent mental health systems: Illinois' evidence-informed practice initiative. J Clin Child Adolesc Psychol. 2014;43(2):169–78. https://doi.org/10.1080/15374416.2013.848772.

Beidas RS, Aarons GA, Barg F, Evans AC, Hadley T, Hoagwood KE, et al. Policy to implementation: evidence-based practice in community mental health–study protocol. Implement Sci. 2013;8(1):38. https://doi.org/10.1186/1748-5908-8-38.

Brookman-Frazee L, Stadnick N, Roesch S, Regan J, Barnett M, Bando L, et al. Measuring sustainment of multiple practices fiscally mandated in children’s mental health services. Admin Pol Ment Health. 2016;43(6):1009–22. https://doi.org/10.1007/s10488-016-0731-8.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Admin Pol Ment Health. 2011;38(1):4–23. https://doi.org/10.1007/s10488-010-0327-7.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53. https://doi.org/10.1186/s13012-015-0242-0.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50. https://doi.org/10.1186/1748-5908-4-50.

Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–74. https://doi.org/10.1146/annurev-publhealth-032013-182447.

Cameron KS, Quinn RE. Diagnosing and changing organizational culture: based on the competing values framework. San Francisco: Wiley; 2011.

Hartnell CA, Ou AY, Kinicki A. Organizational culture and organizational effectiveness: a meta-analytic investigation of the competing values framework's theoretical suppositions. J Appl Psychol. 2011;96(4):677. https://doi.org/10.1037/a0021987.

Brownson RC, Royer C, Ewing R, McBride TD. Researchers and policymakers: travelers in parallel universes. Am J Prev Med. 2006;30(2):164–72. https://doi.org/10.1016/j.amepre.2005.10.004.

Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, et al. Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatr. 2015;169(4):374–82. https://doi.org/10.1001/jamapediatrics.2014.3736.

Locke J, Lawson GM, Beidas RS, Aarons GA, Xie M, Lyon AR, et al. Individual and organizational factors that affect implementation of evidence-based practices for children with autism in public schools: a cross-sectional observational study. Implement Sci. 2019;14(1):29. https://doi.org/10.1186/s13012-019-0877-3.

Guerrero EG, Fenwick K, Kong Y. Advancing theory development: exploring the leadership–climate relationship as a mechanism of the implementation of cultural competence. Implement Sci. 2017;12(1):133. https://doi.org/10.1186/s13012-017-0666-9.

Williams NJ, Glisson C, Hemmelgarn A, Green P. Mechanisms of change in the ARC organizational strategy: increasing mental health clinicians’ EBP adoption through improved organizational culture and capacity. Admin Pol Ment Health. 2017;44(2):269–83. https://doi.org/10.1007/s10488-016-0742-5.

Saldana L, Chamberlain P, Wang W, Brown CH. Predicting program start-up using the stages of implementation measure. Admin Pol Ment Health. 2012;39(6):419–25. https://doi.org/10.1007/s10488-011-0363-y.

Lewis CC, Klasnja P, Powell BJ, Tuzzio L, Jones S, Walsh-Bailey C, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136. https://doi.org/10.3389/fpubh.2018.00136.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. 2019;7:3. https://doi.org/10.1097/HCO.0000000000000067.

Williams NJ, Beidas RS. Annual research review: the state of implementation science in child psychology and psychiatry: a review and suggestions to advance the field. J Child Psychol Psychiatry. 2018; https://doi.org/10.1111/jcpp.12960.

Chambless DL, Ollendick TH. Empirically supported psychological interventions: controversies and evidence. Annu Rev Psychol. 2001;52(1):685–716. https://doi.org/10.1146/annurev.psych.52.1.685.

Garland AF, Brookman-Frazee L, Hurlburt MS, Accurso EC, Zoffness RJ, Haine-Schlagel R, et al. Mental health care for children with disruptive behavior problems: a view inside therapists' offices. Psychiatr Serv. 2010;61(8):788–95. https://doi.org/10.1176/ps.2010.61.8.788.

Hofmann SG, Asnaani A, Vonk IJ, Sawyer AT, Fang A. The efficacy of cognitive behavioral therapy: a review of meta-analyses. Cognit Ther Res. 2012;36(5):427–40. https://doi.org/10.1007/s10608-012-9476-1.

Weisz JR, Kuppens S, Ng MY, Eckshtain D, Ugueto AM, Vaughn-Coaxum R, et al. What five decades of research tells us about the effects of youth psychological therapy: a multilevel meta-analysis and implications for science and practice. Am Psychol. 2017;72(2) https://doi.org/10.1037/a0040360.

Zhou X, Hetrick SE, Cuijpers P, Qin B, Barth J, Whittington CJ, et al. Comparative efficacy and acceptability of psychotherapies for depression in children and adolescents: a systematic review and network meta-analysis. World Psychiatry. 2015;14(2):207–22. https://doi.org/10.1002/wps.20217.

Abbass AA, Rabung S, Leichsenring F, Refseth JS, Midgley N. Psychodynamic psychotherapy for children and adolescents: a meta-analysis of short-term psychodynamic models. J Am Acad Child Adolesc Psychiatry. 2013;52(8):863–75. https://doi.org/10.1016/j.jaac.2013.05.014.

De Nadai AS, Storch EA. Design considerations related to short-term psychodynamic psychotherapy. J Am Acad Child Adolesc Psychiatry. 2013;52(11):1213–4. https://doi.org/10.1016/j.jaac.2013.08.012.

Steel D. Repeated cross-sectional design. In: Lavrakas PJ, editor. Encyclopedia of survey research methods, vol. 2. Thousand Oaks, CA: SAGE Publications; 2008. p. 714–6.

Stirman SW, Buchhofer R, McLaulin JB, Evans AC, Beck AT. Public-academic partnerships: the Beck initiative: a partnership to implement cognitive therapy in a community behavioral health system. Psychiatr Serv. 2009;60(10):1302–4. https://doi.org/10.1176/ps.2009.60.10.1302.

Foa EB, Hembree EA, Cahill SP, Rauch SA, Riggs DS, Feeny NC, et al. Randomized trial of prolonged exposure for posttraumatic stress disorder with and without cognitive restructuring: outcome at academic and community clinics. J Consult Clin Psychol. 2005;73(5):953. https://doi.org/10.1037/0022-006X.73.5.953.

Beidas RS, Adams DR, Kratz HE, Jackson K, Berkowitz S, Zinny A, et al. Lessons learned while building a trauma-informed public behavioral health system in the city of Philadelphia. Eval Program Plann. 2016;59:21–32. https://doi.org/10.1016/j.evalprogplan.2016.07.004.

Linehan M. DBT skills training manual. New York: Guilford Publications; 2014.

Eyberg S. Parent-child interaction therapy: integration of traditional and behavioral concerns. Child Fam Behav Ther. 1988;10(1):33–46. https://doi.org/10.1300/J019v10n01_04.

Skriner LC, Wolk CB, Stewart RE, Adams DR, Rubin RM, Evans AC, et al. Therapist and organizational factors associated with participation in evidence-based practice initiatives in a large urban publicly-funded mental health system. J Behav Health Serv Res. 2017;45(2):174–86. https://doi.org/10.1007/s11414-017-9552-0.

Benjamin Wolk C, Marcus SC, Weersing VR, Hawley KM, Evans AC, Hurford MO, et al. Therapist-and client-level predictors of use of therapy techniques during implementation in a large public mental health system. Psychiatr Serv. 2016;67(5):551–7. https://doi.org/10.1176/appi.ps.201500022.

Babbar S, Adams DR, Becker-Haimes EM, Skriner LC, Kratz HE, Cliggitt L, et al. Therapist turnover and client non-attendance. Child Youth Serv Rev. 2018;93:12–6. https://doi.org/10.1016/j.childyouth.2018.06.026.

Weersing VR, Weisz JR, Donenberg GR. Development of the therapy procedures checklist: a therapist-report measure of technique use in child and adolescent treatment. J Clin Child Adolesc Psychol. 2002;31(2):168–80. https://doi.org/10.1207/S15374424JCCP3102_03.

Kolko DJ, Cohen JA, Mannarino AP, Baumann BL, Knudsen K. Community treatment of child sexual abuse: a survey of practitioners in the National Child Traumatic Stress Network. Admin Pol Ment Health. 2009;36(1):37–49. https://doi.org/10.1007/s10488-008-0180-0.

Do M, Warnick E, Weersing V. Examination of the psychometric properties of the Therapy Procedures Checklist-Family Revised. In: Poster presented at the annual meeting of the Association for Behavioral and Cognitive Therapies (ABCT). National Harbor, MD; 2012.

James LR, Demaree RG, Wolf G. Estimating within-group interrater reliability with and without response bias. J Appl Psychol. 1984;69(1):85. https://doi.org/10.1037/0021-9010.69.1.85.

Brown RD, Hauenstein NM. Interrater agreement reconsidered: an alternative to the rwg indices. Organ Res Methods. 2005;8(2):165–84. https://doi.org/10.1177/1094428105275376.

Bliese PD. Within-group agreement, non-independence, and reliability: implications for data aggregation and analysis. In: Klein KJ, Kozlowski SWJ, editors. Multilevel theory, research, and methods in organizations: foundations, extensions, and new directions. San Francisco: Jossey-Bass; 2000. p. 349–81.

Glisson C, Landsverk J, Schoenwald SK, Kelleher K, Hoagwood KE, Mayberg S, et al. Assessing the organizational social context (OSC) of mental health services: implications for research and practice. Admin Pol Ment Health. 2008;35:98–113. https://doi.org/10.1007/s10488-007-0148-5.

Williams NJ, Glisson C. Testing a theory of organizational culture, climate and youth outcomes in child welfare systems: a United States national study. Child Abuse Negl. 2014;38(4):757–67. https://doi.org/10.1016/j.chiabu.2013.09.003.

Aarons GA, Glisson C, Green PD, Hoagwood KE, Kelleher KJ, Landsverk JA. The organizational social context of mental health services and clinician attitudes toward evidence-based practice: a United States national study. Implement Sci. 2012;7(1):56. https://doi.org/10.1186/1748-5908-7-56.

Olin SS, Williams NJ, Pollock M, Armusewicz K, Kutash K, Glisson C, et al. Quality indicators for family support services and their relationship to organizational social context. Admin Pol Ment Health. 2014;41(1):43–54. https://doi.org/10.1007/s10488-013-0499-z.

Aarons GA, Ehrhart MG, Farahnak LR. The implementation leadership scale (ILS): development of a brief measure of unit level implementation leadership. Implement Sci. 2014;9(1):45. https://doi.org/10.1186/1748-5908-9-45.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the implementation climate scale (ICS). Implement Sci. 2014;9(1):157. https://doi.org/10.1186/s13012-014-0157-1.

Beidas RS, Stewart RE, Adams DR, Fernandez T, Lustbader S, Powell BJ, et al. A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Admin Pol Ment Health. 2015; https://doi.org/10.1007/s10488-015-0705-2.

Beidas RS, Skriner L, Adams D, Wolk CB, Stewart RE, Becker-Haimes E, et al. The relationship between consumer, clinician, and organizational characteristics and use of evidence-based and non-evidence-based therapy strategies in a public mental health system. Behav Res Ther. 2017;99:1–10. https://doi.org/10.1016/j.brat.2017.08.011.

Aarons GA, Glisson C, Hoagwood KE, Kelleher K, Landsverk J, Cafri G. Psychometric properties and US national norms of the evidence-based practice attitude scale (EBPAS). Psychol Assess. 2010;22(2):356. https://doi.org/10.1037/a0019188.

Garner BR, Godley SH, Bair CM. The impact of pay-for-performance on therapists' intentions to deliver high-quality treatment. J Subst Abus Treat. 2011;41(1):97–103. https://doi.org/10.1016/j.jsat.2011.01.012.

Henggeler SW, Chapman JE, Rowland MD, Halliday-Boykins CA, Randall J, Shackelford J, et al. Statewide adoption and initial implementation of contingency management for substance-abusing adolescents. J Consult Clin Psychol. 2008;76(4):556–67. https://doi.org/10.1037/0022-006X.76.4.556.

Jensen-Doss A, Hawley KM, Lopez M, Osterberg LD. Using evidence-based treatments: the experiences of youth providers working under a mandate. Prof Psychol Res Pr. 2009;40(4):417. https://doi.org/10.1037/a0014690.

Williams JR, Williams WO, Dusablon T, Blais MP, Tregear SJ, Banks D. Evaluation of a randomized intervention to increase adoption of comparative effectiveness research by community health organizations. J Behav Health Serv Res. 2014;41(3):308–23. https://doi.org/10.1007/s11414-013-9369-4.

Enders CK. Applied missing data analysis. New York: Guilford Press; 2010.

Raudenbush SW, Bryk AS. Hierarchical linear models: applications and data analysis methods, vol. 1. Thousand Oaks, CA: Sage Publications; 2002.

Raudenbush SW, Bryk AS, Congdon R. HLM 6 for windows. 6.08 ed. Lincolnwood: Scientific Software International, Inc.; 2004.

Raftery AE. Bayesian model selection in social research. Sociol Methodol. 1995;25:111–64. https://doi.org/10.2307/271063.

Rothman K. No adjustments are needed for multiple comparisons. Epidemiology. 1990;1(1):43–6.

Michels K, Rosner B. Data trawling: to fish or not to fish. Lancet. 1996;348:1152–3. https://doi.org/10.1016/S0140-6736(96)05418-9.

Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B Methodol. 1995;57(1):289–300. https://doi.org/10.1111/j.2517-6161.1995.tb02031.x.

Salsberg E, Quigley L, Mehfoud N, Acquaviva KD, Wyche K, Silwa S. Profile of the social work workforce. The George Washington University; 2017. https://www.cswe.org/Centers-Initiatives/Initiatives/National-Workforce-Initiative/SW-Workforce-Book-FINAL-11-08-2017.aspx. Accessed April 23, 2019.

Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Admin Pol Ment Health. 2016;43(5):783–98. https://doi.org/10.1007/s10488-015-0693-2.

Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217–26. https://doi.org/10.1097/MLR.0b013e3182408812.

Glisson C, Schoenwald SK, Kelleher K, Landsverk J, Hoagwood KE, Mayberg S, et al. Therapist turnover and new program sustainability in mental health clinics as a function of organizational culture, climate, and service structure. Admin Pol Ment Health. 2008;35(1–2):124–33. https://doi.org/10.1007/s10488-007-0152-9.

Glisson C, Hemmelgarn A, Green P, Williams NJ. Randomized trial of the availability, responsiveness and continuity (ARC) organizational intervention for improving youth outcomes in community mental health programs. J Am Acad Child Adolesc Psychiatry. 2013;52(5):493–500. https://doi.org/10.1016/j.jaac.2012.05.010.

Glisson C. Assessing and changing organizational culture and climate for effective services. Res Soc Work Pract. 2007;17(6):736–47. https://doi.org/10.1177/1049731507301659.

Wang V, Maciejewski ML, Helfrich CD, Weiner BJ. Working smarter not harder: coupling implementation to de-implementation. Healthcare. 2018;6:104–7. https://doi.org/10.1016/j.hjdsi.2017.12.004.

McKay VR, Morshed AB, Brownson RC, Proctor EK, Prusaczyk B. Letting go: conceptualizing intervention de-implementation in public health and social service settings. Am J Community Psychol. 2018;62:189–202. https://doi.org/10.1002/ajcp.12258.

Podsakoff N. Common method biases in behavioral research: a critical review of the literature and recommended remedies. J Appl Psychol. 2003;88(5):879–903. https://doi.org/10.1037/0021-9010.88.5.879.

Bellg AJ, Borrelli B, Resnick B, Hecht J, Minicucci DS, Ory M, et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH behavior change consortium. Health Psychol. 2004;23(5):443–51. https://doi.org/10.1037/0278-6133.23.5.443.

Hogue A, Dauber S, Lichvar E, Bobek M, Henderson CE. Validity of therapist self-report ratings of fidelity to evidence-based practices for adolescent behavior problems: correspondence between therapists and observers. Admin Pol Ment Health. 2015;42(2):229–43. https://doi.org/10.1007/s10488-014-0548-2.

Hurlburt MS, Garland AF, Nguyen K, Brookman-Frazee L. Child and family therapy process: concordance of therapist and observational perspectives. Admin Pol Ment Health. 2010;37(3):230–44. https://doi.org/10.1007/s10488-009-0251-x.

McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: a review of current efforts. Am Psychol. 2010;65(2):73–84. https://doi.org/10.1037/a0018121.

Aarons GA, Ehrhart MG, Farahnak LR, Hurlburt MS. Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implement Sci. 2015;10(1):11. https://doi.org/10.1186/s13012-014-0192-y.

Acknowledgements

We are grateful for the support that the Department of Behavioral Health and Intellectual Disability Services has provided us to conduct this work within their system, for the Evidence Based Practice and Innovation (EPIC) group, and for the partnership provided to us by participating agencies, therapists, youth, and families.

Availability of data and material

RB and NW had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. Requests for access to deidentified data can be sent to the Penn ALACRITY Data Sharing Committee by contacting the research coordinator, Kelly Zentgraf, at zentgraf@upenn.edu, 3535 Market Street, 3rd Floor, Philadelphia, PA 19107, 215-746-6038.

Funding

This research project was supported by a grant from NIMH (K23 MH099179; Beidas). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

RB is the principal investigator and thus takes responsibility for study design, data collection, and the integrity of the data. She designed the study, was the primary writer of the manuscript, and approved all changes. NW conceptualized and conducted the analyses. EBH and SM provided support around data analysis and interpretation. The following authors (GA, FB, AE, KJ, DJ, TH, KH, GN, RR, SS, DM) were involved in the design and execution of the study. LW, DA, and KZ have coordinated the study and have contributed to the design of the study. All authors reviewed and provided feedback for this manuscript. The final version of this manuscript was vetted and approved by all authors.

Ethics declarations

Ethics approval and consent to participate

The institutional review board (IRB) at the City of Philadelphia approved this study on October 2nd, 2012 (Protocol Number 2012-41). The City of Philadelphia IRB serves as the IRB of record. The University of Pennsylvania also approved all study procedures on September 14th, 2012 (Protocol Number 816619). Written informed consent and/or assent was obtained for all study participants.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Beidas, R.S., Williams, N.J., Becker-Haimes, E.M. et al. A repeated cross-sectional study of clinicians’ use of psychotherapy techniques during 5 years of a system-wide effort to implement evidence-based practices in Philadelphia. Implementation Sci 14, 67 (2019). https://doi.org/10.1186/s13012-019-0912-4

DOI: https://doi.org/10.1186/s13012-019-0912-4