Abstract

Background

Epidemics of infectious disease occur frequently in low-income and humanitarian settings and pose a serious threat to populations. However, relatively little is known about responses to these epidemics. Robust evaluations can generate evidence on response efforts and inform future improvements. This systematic review aimed to (i) identify epidemics reported in low-income and crisis settings, (ii) determine the frequency with which evaluations of responses to these epidemics were conducted, (iii) describe the main typologies of evaluations undertaken and (iv) identify key gaps and strengths of recent evaluation practice.

Methods

Reported epidemics were extracted from the following sources: World Health Organization Disease Outbreak News (WHO DON), UNICEF Cholera platform, Reliefweb, PROMED and Global Incident Map. A systematic review of evaluation reports was conducted using the MEDLINE, EMBASE, Global Health, Web of Science, WPRIM, Reliefweb, PDQ Evidence and CINAHL Plus databases, complemented by grey literature searches using Google and Google Scholar. Evaluation records were quality-scored and linked to epidemics based on time and place. The time period for the review was 2010–2019.

Results

A total of 429 epidemics were identified, primarily in sub-Saharan Africa, the Middle East and Central Asia. A total of 15,424 potential evaluation records were screened, 699 assessed for eligibility and 132 included for narrative synthesis. Only one tenth of epidemics had a corresponding response evaluation. Overall, there was wide variability in the quality, content and disease coverage of evaluation reports.

Conclusion

The current state of evaluations of responses to these epidemics reveals large gaps in coverage and quality and bears important implications for health equity and accountability to affected populations. The limited availability of epidemic response evaluations prevents improvements to future public health response. The diversity of emphasis and methods of available evaluations limits comparison across responses and time. In order to improve future response and save lives, there is a pressing need to develop a standardized and practical approach as well as governance arrangements to ensure the systematic conduct of epidemic response evaluations in low-income and crisis settings.

Background

Infectious disease epidemics continue to pose a substantial risk globally [1]. Epidemics routinely occur in low-income and humanitarian settings [2]. Populations in these settings often do not have the resources to effectively respond to epidemics [3] and as a result are at higher risk of increased morbidity and mortality [4]. Globally, more than 700 million people live in low-income countries [5], while 2 billion live in fragile or conflict-affected settings [6]. Responses to large-scale epidemics or epidemics of newly emergent pathogens tend to generate global attention and corresponding responses incur scrutiny [7,8,9]. However, evidence on responses to smaller-scale epidemics or epidemics involving well-known pathogens (e.g. measles, cholera) for which effective control measures exist is thought to be limited [10]. Evidence from some limited contexts points to weaknesses in responses ranging from detection, investigation to effective and timely response [11, 12]. However, the practice of epidemic response evaluation has not been systematically assessed in low-income and humanitarian settings. Within public health programming, effective evaluations generate critical evidence and allow for systematic understanding, improvement and accountability of health action [13]. We sought to review the extent to which evaluations of epidemic responses are actually conducted in low-income and crisis settings and describe key patterns in evaluation practice. Specifically, we aimed to (i) identify epidemics reported in low-income and crisis settings, by aetiologic agent, over a recent period; (ii) determine the frequency with which evaluations of responses to these epidemics were conducted; (iii) describe the main typologies of evaluations undertaken; and (iv) identify key gaps and strengths of recent evaluation practice, so as to formulate recommendations.

Methods

Scope of the review

This review (PROSPERO registration CRD42019150693) focuses on recent epidemics in low-income settings, defined using the 2018 World Bank criteria [14], as well as epidemics occurring in settings with ongoing humanitarian responses, as reported in the United Nations Office for the Coordination of Humanitarian Affairs’ annual Global Humanitarian Overview. Our search focused on epidemic-prone pathogens commonly occurring in low resource or humanitarian settings and which presented an immediate threat to life. For this reason, our search excluded HIV [15], tuberculosis [16] and Zika [17]. Epidemics occurring within healthcare settings only or within animal populations were considered outside the scope of this review. In order to capture recent trends and assess contemporary reports, we focused on the period 2010–2019.

Epidemics

Search strategy

The following sources were reviewed in order to compile a list of reported epidemics: World Health Organization Disease Outbreak News (WHO DON) [18], UNICEF Cholera platform [19], Reliefweb [20], PROMED [21] and Global Incident Map [22]. In line with WHO guidance on infectious disease control in emergencies [23], one suspected case of the following was considered to be an epidemic: acute haemorrhagic fevers (Ebola, Lassa fever, Rift Valley fever, Crimean-Congo haemorrhagic fever), anthrax, cholera, measles, typhus, plague and polio. For the remainder of the pathogens, we defined an epidemic as an unusual increase in incidence relative to a previously established baseline in a given setting.
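The two-part epidemic definition above can be sketched as a small decision function. This is only an illustration: the function name, the lower-cased pathogen labels and, in particular, the `excess_ratio` multiplier are assumptions made here, since the text does not quantify what counts as an "unusual increase" over baseline.

```python
# Pathogens for which a single suspected case counts as an epidemic,
# as listed in the text (labels lower-cased here for matching).
SINGLE_CASE_PATHOGENS = {
    "ebola", "lassa fever", "rift valley fever",
    "crimean-congo haemorrhagic fever", "anthrax", "cholera",
    "measles", "typhus", "plague", "polio",
}


def is_epidemic(pathogen, suspected_cases, baseline_cases=None, excess_ratio=2.0):
    """Return True if the report meets the epidemic definition.

    excess_ratio is a hypothetical stand-in for "unusual increase";
    the review does not state a numeric threshold.
    """
    if pathogen.lower() in SINGLE_CASE_PATHOGENS:
        return suspected_cases >= 1
    if baseline_cases is None:
        raise ValueError("a baseline is required for this pathogen")
    return suspected_cases > excess_ratio * baseline_cases
```

For example, `is_epidemic("Cholera", 1)` is true under the single-case rule, whereas a pathogen outside the list is only flagged when its case count exceeds the assumed multiple of the baseline.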

We reviewed WHO DON narrative reports to extract metadata on location (country), year, month and pathogen. Reliefweb was searched for reported epidemics using the search engine and the disaster type filter. For the PROMED database, only epidemics rated as 3 or higher in the 5-point rating system (which reflected a higher degree of certainty in the scale of the epidemic and its potential severity) and in which incident cases and deaths were reported were considered for inclusion. The Global Incident Map database was searched utilizing the inbuilt search function filtering results that were out of scope (wrong location, pathogen, etc.) at the source.

We collated all epidemic records into a single database and removed duplicate reports of the same epidemic based on first date and location of occurrence; duplicates included multiple reports within any given database (e.g. an update on an earlier reported epidemic) and reports of the same epidemic in multiple databases. As phylogenetic or spatio-temporal reconstructions of epidemics were mostly unavailable, we assumed that reports of the same pathogen from within the same country and 4-month period referred to the same single epidemic. We decided to split cross-border epidemics (e.g. the West Africa 2013–2016 Ebola epidemic) into one separate epidemic for each country affected, recognizing that responses would have differed considerably across these countries.
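The de-duplication rule described above can be sketched as follows. The `Report` structure and function names are hypothetical, the example rows are illustrative only, and the sketch assumes the 4-month window is anchored on the first report of each epidemic (the text does not say whether the window is fixed or rolling). Keying on country as well as pathogen means a cross-border epidemic is automatically counted once per affected country.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    pathogen: str
    country: str
    year: int
    month: int  # 1-12


def month_index(report):
    """Months elapsed since year 0, so reports can be compared by date."""
    return report.year * 12 + (report.month - 1)


def collapse_reports(reports, window_months=4):
    """Keep the first report of each epidemic and drop later duplicates.

    Reports of the same pathogen in the same country that fall within
    window_months of the first report are treated as the same epidemic.
    """
    first_month = {}  # (pathogen, country) -> month index of current epidemic
    epidemics = []
    for report in sorted(reports, key=month_index):
        key = (report.pathogen, report.country)
        start = first_month.get(key)
        if start is None or month_index(report) - start >= window_months:
            first_month[key] = month_index(report)
            epidemics.append(report)
    return epidemics


# Illustrative rows only, not data from the review.
reports = [
    Report("cholera", "Haiti", 2010, 10),
    Report("cholera", "Haiti", 2010, 12),   # update on the same epidemic
    Report("cholera", "Haiti", 2011, 6),    # outside the window: new epidemic
    Report("Ebola", "Guinea", 2014, 3),
    Report("Ebola", "Liberia", 2014, 3),    # cross-border: counted separately
]
epidemics = collapse_reports(reports)
```

With these rows, the five reports collapse into four epidemics.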

Screening and data extraction

We compiled epidemic reports from various sources into one database. For each epidemic, information on location (country), year, month and pathogen was extracted using a standardized form (see Additional file 1). For each evaluation record, information was extracted on a number of variables including type of evaluation, location (country), year, month and pathogen using a standardized form (see Additional file 2).

Evaluations

Search strategy

To determine the availability and quality of epidemic response evaluations within recent epidemics, we undertook a systematic review using PRISMA criteria including peer-reviewed and grey literature. We identified peer-reviewed reports by consulting the MEDLINE, EMBASE, Global Health, Web of Science, Western Pacific Region Index Medicus, PDQ Evidence and Cumulative Index to Nursing and Allied Health Literature (CINAHL) Plus databases. We utilized Google, Google Scholar and Reliefweb to undertake a comprehensive search of the grey literature. Given previously described challenges in using such search engines [24], we reviewed results from the first 150 hits only. We searched the webpages of major humanitarian and health organizations including the World Health Organization (WHO), United Nations Children’s Fund (UNICEF), Save the Children, International Federation of Red Cross and Red Crescent Societies (IFRC) and Médecins Sans Frontières (MSF) for evaluation records and contacted these organizations to source non-public evaluations identified through this webpage search. Overarching conceptual search terms synonymous with outbreaks, evaluations and humanitarian crises were utilized. The full search strategy can be found in Additional file 3.

We cross-referenced the reported epidemics with the evaluation reports, matching on date (month and year) and location.
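As a rough sketch of this cross-referencing step, the snippet below links evaluations to epidemics on country and on month and year, and then derives coverage as the share of epidemics with at least one linked evaluation. The record structures, the `tolerance_months` parameter and the example rows are assumptions for illustration; the text states only that matching used date and location.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Epidemic:
    country: str
    year: int
    month: int


@dataclass(frozen=True)
class Evaluation:
    title: str
    countries: tuple  # an evaluation may cover responses in several countries
    year: int         # year and month of the epidemic whose response was evaluated
    month: int


def month_index(year, month):
    return year * 12 + (month - 1)


def link(epidemics, evaluations, tolerance_months=0):
    """Map each epidemic to the titles of evaluations matching on place and date."""
    linked = {}
    for ep in epidemics:
        titles = [
            ev.title
            for ev in evaluations
            if ep.country in ev.countries
            and abs(month_index(ev.year, ev.month) - month_index(ep.year, ep.month))
            <= tolerance_months
        ]
        if titles:
            linked[ep] = titles
    return linked


def coverage(epidemics, evaluations, tolerance_months=0):
    """Proportion of epidemics with one or more linked evaluations."""
    return len(link(epidemics, evaluations, tolerance_months)) / len(epidemics)


# Illustrative rows only, not data from the review.
epidemics = [
    Epidemic("Guinea", 2014, 3),
    Epidemic("Liberia", 2014, 3),
    Epidemic("Haiti", 2010, 10),
    Epidemic("Nigeria", 2018, 1),
]
evaluations = [
    Evaluation("Regional review", ("Guinea", "Liberia"), 2014, 3),
    Evaluation("Guinea case study", ("Guinea",), 2014, 3),
]
linked = link(epidemics, evaluations)
share = coverage(epidemics, evaluations)
```

Here two of the four example epidemics are linked, so `share` is 0.5; several evaluations attached to one epidemic still count that epidemic only once.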

Inclusion criteria

We limited our search to any record that met the following criteria: any document published in the period 2010–2019 in the English and French languages that examined epidemics within low-income countries and humanitarian settings, as defined above. There were no restrictions on study design.

We excluded records relying exclusively on mathematical models of potential responses as the review was focused on responses that were operationally implemented. We also excluded evaluations of a novel diagnostic or treatment; evaluations that focussed on preparedness, resilience or recovery from an epidemic, as opposed to the epidemic period itself; records addressing other health issues (e.g. reproductive health) in the context of an epidemic; epidemiological studies of the epidemic (e.g. transmission patterns, risk factors) that did not explore the response; records classified as clinical research, opinion or news pieces; and abstracts for which full records could not be accessed.

In assessing the eligibility of records for narrative synthesis, we used a broad definition of epidemic evaluation as one in which:

I. An epidemic was reported to have occurred

II. The intervention(s) being evaluated began after the start of the epidemic and were specifically implemented in response to the epidemic

III. The intervention(s) were assessed on at least one specified criterion (i.e. the report was not merely a description of activities).

Screening and data extraction

After removing duplicates, two reviewers independently assessed the relevance of all titles and abstracts based on the inclusion criteria. We retrieved the full text of each article initially meeting the criteria. Two researchers then independently confirmed that full-text records met inclusion criteria. Any disagreements were resolved through discussion and consensus with a third reviewer.

We used the following definitions to classify the type of evaluations retrieved in this search:

- Formative evaluation: Evaluation which assesses whether a program or program activity is feasible, appropriate and acceptable before it is fully implemented

- Process evaluation: Evaluation which determines whether program activities have been implemented as intended

- Output evaluation: Evaluation which assesses progress in short-term outputs resulting from program implementation

- Outcome/performance evaluation: Evaluation which assesses program effects in the target population by measuring the progress in the outcomes or outcome objectives that the program is meant to achieve

- Impact evaluation: An evaluation that considers ‘positive and negative, primary and secondary long-term effects produced by a development intervention, directly or indirectly, intended or unintended.’ [25]

Analysis

We undertook a narrative synthesis of the findings and tabulated key characteristics of evaluations. We created an evaluation quality checklist derived from existing standards to grade the quality of the evaluation records. Reference standards included the United Nations Evaluation Group (UNEG) Quality Checklist [26], the European Commission Quality Assessment for Final Evaluation Reports [27] and the UNICEF-Adapted UNEG Quality Checklist [28]. We derived 13 evaluation criteria grouped into 4 equally weighted categories: scope, methodology, findings and recommendations. The checklist can be found in Additional file 3.
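One way such a checklist could be turned into a single score is sketched below. It assumes each criterion is scored between 0 and 1, each category score is the mean of its criteria, and the overall score is the mean of the four category scores rescaled to 100. The allocation of the 13 criteria across categories (4, 4, 3 and 2) and the example scores are hypothetical; the actual checklist is in the additional file.

```python
from statistics import mean

CATEGORIES = ("scope", "methodology", "findings", "recommendations")


def quality_score(scores_by_category):
    """Return a 0-100 score with the four categories weighted equally.

    scores_by_category maps each category name to a list of criterion
    scores in [0, 1]. Averaging within a category first means a category
    with more criteria does not carry more weight overall.
    """
    if set(scores_by_category) != set(CATEGORIES):
        raise ValueError("expected exactly the four checklist categories")
    for scores in scores_by_category.values():
        if not scores or any(not 0 <= s <= 1 for s in scores):
            raise ValueError("criterion scores must lie between 0 and 1")
    category_means = [mean(scores_by_category[c]) for c in CATEGORIES]
    return 100 * mean(category_means)


# Hypothetical record: 13 criteria split 4/4/3/2 across the categories.
example = {
    "scope": [1, 1, 0.5, 0.5],
    "methodology": [1, 0.5, 0, 0.5],
    "findings": [1, 1, 1],
    "recommendations": [0.5, 1],
}
score = quality_score(example)
```

In this example the category means are 0.75, 0.5, 1.0 and 0.75, giving an overall score of 75 out of 100.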

Role of the funding source

The funder of the study had no role in study design, data collection, data analysis, data interpretation or writing of the report. The corresponding author had full access to all the data in the study and had final responsibility for the decision to submit for publication.

Results

Epidemics

A total of 429 epidemics were identified across 40 low-income and crisis-affected countries during the study period (Table 1). The most common pathogens reported were Vibrio cholerae, measles virus, poliovirus and Lassa virus. Epidemics were reported primarily in sub-Saharan Africa, the Middle East and Central Asia. Generally, the more populous countries in each region experienced the highest number of epidemics, including Nigeria and the Democratic Republic of Congo in the AFRO region and Pakistan and Sudan in the EMRO region.

Evaluations

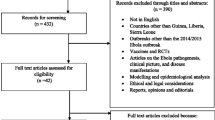

A total of 15,124 records were identified and screened based on title and abstract (Fig. 1). The full text of 699 records was assessed for eligibility. A final tally of 132 records was carried forward for cross-referencing against reported epidemics and for narrative synthesis [29–160]. See Additional file 2 for the full list of included evaluations.

Evaluation characteristics

More than half of the evaluation records assessed the outcome of the response, with a substantial number of process and output evaluations (Table 2). Very few evaluations could be classified as impact or formative evaluations, while 4 studies were considered to be of mixed typology. Half of the evaluations (n = 66) reported utilizing a mix of primary and secondary data, while approximately a quarter each utilized mainly primary (n = 36) or mainly secondary data (n = 30). Additionally, more than half of evaluations (n = 78) collected a mixture of quantitative and qualitative data, while smaller proportions relied on either qualitative data (n = 18) or quantitative data (n = 37). Few records (n = 9) had no explicit evaluation framework or criteria, while the majority (n = 123) did refer to some evaluation criteria, including the OECD evaluation criteria. However, only a few (n = 10) presented an explicitly named framework which anchored the evaluation approach. Effectiveness was the most widely used evaluation criterion, while the most widely evaluated activities included coordination, vaccination, contact tracing, case management and community sensitization. See Additional file 2 for full results. There was an improvement in the availability of evaluation reports over time, with fewer evaluations in the first 3 years of the decade (n = 10) compared to the last 3 years (n = 43) (Fig. 2). Lastly, where evaluations were published, there was an average of 2 years between the onset of an epidemic and publication of the response evaluation.

Quality findings

Quality scores of evaluation reports ranged from 31 to 96 on a 100-point scale. The average quality score of evaluations in low-income settings was 76 compared to 68 in middle-income countries. The average quality scores did not differ substantially between evaluations undertaken in humanitarian and non-humanitarian settings (76.7 vs 75.2), nor between mid-epidemic and post-epidemic evaluations (74.4 vs 76.9). Quality scores varied across pathogens, with the highest average quality score for evaluations of measles epidemics (88.4) and the lowest for evaluations of leishmaniasis epidemics (57.6). Additionally, there appeared to be an improvement in the quality of evaluation reports over time, with reports in the first 3 years of the decade averaging a score of 64 compared to a score of 80 in the last 3 years. Although the majority of evaluations (n = 104) did identify and utilize existing information and documentation, few (n = 28) provided an appraisal of the quality or reliability of these data sources. For the most part, evaluation studies scored well in presenting the rationale of the evaluation (average score 0.88), providing contextual information (average score 0.92) and clarifying the evaluation timeline (average score 0.82). They scored less well in providing sufficient detail on a methodological approach suitable to the scope (average score 0.77) and in detailing the limitations of the evaluation (average score 0.61).

Evaluation coverage

We were able to link approximately 9% (n = 39) of epidemics with one or more response evaluations (Table 3). Some evaluation reports (n = 18) covered responses in multiple countries. A large number of evaluations focused on the same epidemic; for example, 47 evaluations were undertaken to assess the West Africa Ebola epidemic (2013–2016). There were approximately equal numbers of post-epidemic (56%) and mid-epidemic evaluations (44%). The majority of epidemic response evaluations (87%) were undertaken in countries which had experienced humanitarian emergencies during the study period. Furthermore, 83% of response evaluations were undertaken in the WHO Africa region, 8% in the Eastern Mediterranean region and the remainder in the Americas region. Two evaluations could not be linked to an epidemic as the epidemic occurred outside of the study period (prior to 2010).

Coverage of response evaluations varied by disease (Fig. 3). Ebola epidemics had the highest coverage, with 64% of reported epidemics having a response evaluation, and Lassa fever epidemics had the lowest non-zero coverage (6%). No response evaluations were found for epidemics of anthrax, brucellosis, diphtheria, hepatitis E, Japanese encephalitis, malaria, Marburg haemorrhagic fever, meningococcal disease or Rift Valley fever.

Discussion

To our knowledge, this is the first study to systematically explore the coverage and characteristics of epidemic evaluations in low-resource and humanitarian settings. The low proportion of epidemics with evidence of evaluations in settings with low resources and high needs suggests an inequity [3] that urgently needs to be addressed. The lack of evaluations also points to a deficit in accountability to affected populations, a key principle in humanitarian response [161]. Without rigorous, high-quality and standardized response evaluations, affected populations are unable to hold responders to account and have no recourse to redress [29].

The 2-year delay between the onset of an epidemic and the publication of an evaluation report is a barrier to efficient dissemination of response findings. Reducing this delay can potentially be of use in addressing existing delays in global disease response [162]. More importantly, it represents a missed opportunity to enact changes in a timely manner.

There was considerable variability in the criteria considered by the evaluations, including quality, coverage, efficiency, effectiveness, relevance, appropriateness, fidelity or adherence, acceptability and feasibility. Various combinations of these criteria were used to assess a large number of response activities. Within a given epidemic as well as across epidemics, individual evaluations utilized a wide array of differing assessment criteria and assessed different response activities. This variability, combined with the lack of an overarching evaluation framework, makes it difficult to compare evaluations of the same response or generalize their conclusions. This finding is consistent with a previous study on the use of public health evaluation frameworks in emergencies [1] and underscores a need for an approach and corresponding toolset that standardizes the evaluation of epidemic responses in these settings. We have previously proposed an overarching framework for such a unifying approach [1].

The term ‘impact’ was frequently found in the assessed evaluations, but very few of the assessed evaluations could be classified as impact evaluations, reflecting the relatively high technical and resource barriers to conducting a robust impact evaluation. Furthermore, there were marked differences in the number of evaluations by pathogen. Whereas one would expect well-characterized diseases with frequent epidemics to have the most evaluations, the opposite was largely found. Approximately two thirds of the Ebola epidemics recorded had response evaluations compared to 8% of cholera epidemics and 5% of Lassa fever epidemics. This is despite the annual attributable deaths due to cholera being 120,000 [163] and Lassa fever 5000 [164]. This finding is in line with previous research suggesting that more severe epidemics or epidemics that threaten large numbers of people do not necessarily receive a more timely response [162]. The overrepresentation of Ebola response evaluations is perhaps reflective of a number of factors such as the unprecedented scale and better resourcing of the response to the West African outbreak. Superficially, this overrepresentation could perhaps be due to the poor containment efforts at the outset of the epidemic leading to international spread and in turn generating higher international attention and scrutiny. However, the scale-up and securitization of the response and subsequent increased scrutiny may have also reflected the proximity of the epidemic to developed countries [165, 166].

Implications of this study

The gaps identified in this review are particularly pertinent to future evaluations of the COVID-19 pandemic, which has reached most low-income and humanitarian settings [167]. Infectious disease epidemics have long been known to exploit and exacerbate social inequalities within societies [168, 169]. This review highlights the current global inequality in the response to these epidemics, as gauged by the number of epidemics in low-income settings and the paucity of evaluation reports. The nonexistence or lack of publication of these critical evaluations prevents improvements to future public health response. The lack of uniformity of available evaluations limits comparison of the findings across responses and across time, precluding tracking of whether epidemic responses do in fact improve over time, globally or at regional level. The quality limitations of some of the evaluations weaken the strength of inference and applicability of their findings. There is a need to overcome this limitation in order to enable future research to be conducted on the findings of response evaluations. More specifically, future reviews of epidemic response should attempt to synthesize quantitative effects of response interventions and may benefit from SWiM guidelines where appropriate [170].

Limitations

Our study relied overwhelmingly on publicly available evaluations. It is possible that the disparity between the number of epidemics, their responses and their subsequent evaluations could be overstated as evaluation findings might simply be kept internal and not shared more widely. However, the effect is largely the same as internal evaluations are only of benefit to the commissioning organization and cannot be used more widely. Additionally, we assumed that all epidemics were responded to and therefore should have been evaluated. However, we did not know the true proportion of epidemics that were responded to and therefore could potentially overestimate the gap between evaluations and epidemics. On the other hand, the use of a 4-month decision rule to combine multiple reports of epidemics within the same country could have resulted in an underestimate of the total number of epidemics and thus an overestimate of evaluation coverage. We did not look at records that were not written in English or French and could potentially have missed some evaluations.

Conclusion

The relative paucity of evaluated epidemics and the disproportionate number of evaluations focusing on a limited number of epidemics, together with constrained resource availability in low-income settings, suggest the need for a governance arrangement or systematic mechanism that would trigger the conduct of evaluations in all cases. The need for strengthening global governance mechanisms related to infectious disease epidemics and related challenges has been discussed [171, 172]. We suggest that arrangements should cover the criteria that should trigger an evaluation, the timing of evaluation, the composition and affiliation of the evaluation team, funding, minimum evaluation standards (e.g. a common scope and framework) and publication steps.

Approximately 2 billion people live in conflict-affected or fragile states and are at risk of increased morbidity and mortality due to epidemics every year. Robust epidemic response evaluations seek to improve response by critically assessing the performance of response interventions in a given context. However, evaluations of epidemic response are not a stand-alone activity but rather must be integrated into a cycle of preparedness and recovery in order to reach their full utility [173]. The lessons learned from an evaluation should concretely support all responders to better prepare for similar epidemics and to support health system recovery.

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

References

Warsame A, Blanchet K, Checchi F. Towards systematic evaluation of epidemic responses during humanitarian crises: A scoping review of existing public health evaluation frameworks. BMJ Glob Heal. 2020;5:e002109.

Spiegel PB, Checchi F, Colombo S, Paik E. Health-care needs of people affected by conflict: future trends and changing frameworks. Lancet. 2010;375:341–5. https://doi.org/10.1016/S0140-6736(09)61873-0.

Bhutta ZA, Sommerfeld J, Lassi ZS, Salam RA, Das JK. Global burden, distribution, and interventions for infectious diseases of poverty. Infect Dis Poverty. 2014;3:21. https://doi.org/10.1186/2049-9957-3-21.

Quinn SC, Kumar S. Health inequalities and infectious disease epidemics: A challenge for global health security. Biosecur Bioterror. 2014;12:263–73.

World Bank. Population, total | Data. World Bank Databank. 2020; https://data.worldbank.org/indicator/SP.POP.TOTL. Accessed 22 Apr 2020.

UNOCHA. Global Humanitarian Overview 2019: United Nations Coordinated Support to People Affected by Disaster and Conflict. 2018. www.unocha.org/datatrends2018. Accessed 9 July 2019.

Harvard Global Health Institute. Global Monitoring of Disease Outbreak Preparedness: Preventing the Next Pandemic. Cambridge: Harvard University; 2018. https://globalhealth.harvard.edu/files/hghi/files/global_monitoring_report.pdf. Accessed 6 Sept 2019.

Daily chart - Coronavirus research is being published at a furious pace. The Economist. 2020. https://www.economist.com/graphic-detail/2020/03/20/coronavirus-research-is-being-published-at-a-furious-pace. Accessed 23 Apr 2020.

Ballabeni A, Boggio A. Publications in PubMed on Ebola and the 2014 outbreak. F1000Res. 2015;4:68.

Rull M, Kickbusch I, Lauer H. Policy Debate | International Responses to Global Epidemics: Ebola and Beyond. Rev Int Polit développement. 2015;6. https://doi.org/10.4000/poldev.2178.

Bruckner C, Checchi F. Detection of infectious disease outbreaks in twenty-two fragile states, 2000-2010: A systematic review. Confl Heal. 2011;5:1–10. https://doi.org/10.1186/1752-1505-5-13.

Kurup KK, John D, Ponnaiah M, George T. Use of systematic epidemiological methods in outbreak investigations from India, 2008–2016: A systematic review. Clin Epidemiol Glob Heal. 2019;7:648–53.

Program Performance and Evaluation Office. Framework for Program Evaluation. Centers for Disease Control and Prevention. 2017. https://www.cdc.gov/eval/framework/index.htm. Accessed 22 Apr 2020.

World Bank. World Bank Country and Lending Groups. World Bank Databank. 2019; https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups. Accessed 31 May 2020.

Deeks SG, Lewin SR, Havlir DV. The end of AIDS: HIV infection as a chronic disease. Lancet. 2013;382:1525–33. https://doi.org/10.1016/S0140-6736(13)61809-7.

Zaman K. Tuberculosis: A global health problem. J Health Popul Nutr. 2010;28:111–3. https://doi.org/10.3329/jhpn.v28i2.4879.

World Health Organization (WHO). Fifth meeting of the Emergency Committee under the International Health Regulations (2005) regarding microcephaly, other neurological disorders and Zika virus. 2016. https://www.who.int/en/news-room/detail/18-11-2016-fifth-meeting-of-the-emergency-committee-under-the-international-health-regulations-(2005)-regarding-microcephaly-other-neurological-disorders-and-zika-virus. Accessed 23 July 2020.

World Health Organization (WHO). Disease Outbreak News (DONs). 2019. https://www.who.int/csr/don/en/. Accessed 31 May 2020.

UNICEF. WCA Cholera Platform. 2019. https://www.plateformecholera.info/. Accessed 31 May 2020.

Reliefweb. Disasters. 2020. https://reliefweb.int/disasters. Accessed 31 May 2020.

ProMED-mail. https://promedmail.org/. Accessed 31 May 2020.

Global Incident Map Displaying Outbreaks Of All Varieties Of Diseases. http://outbreaks.globalincidentmap.com/. Accessed 31 May 2020.

Connolly MA, editor. Communicable disease control in emergencies: a field manual. 2005. https://apps.who.int/iris/bitstream/handle/10665/96340/9241546166_eng.pdf?sequence=1. Accessed 21 Aug 2019.

Paez A. Gray literature: An important resource in systematic reviews. J Evid Based Med. 2017;10:233–40. https://doi.org/10.1111/jebm.12266.

OECD. Development Results-An Overview of Results Measurement and Management. www.oecd.org/dac. Accessed 1 June 2020.

UNEG. Detail of UNEG Quality Checklist for Evaluation Terms of Reference and Inception Reports. 2010. http://www.unevaluation.org/document/detail/608. Accessed 1 June 2020.

European Commission. Annex 10: TAXUD Quality Assessment Form - Quality Assessment for Final Evaluation Report. 2014.

UNICEF. UNICEF-Adapted UNEG Quality Checklist for Evaluation Terms of Reference. 2017.

Altmann M, Suarez-Bustamante M, Soulier C, Lesavre C, Antoine C. First Wave of the 2016-17 Cholera Outbreak in Hodeidah City, Yemen - Acf Experience and Lessons Learned. PLoS Curr. 2017. https://doi.org/10.1371/currents.outbreaks.5c338264469fa046ef013e48a71fb1c5.

Cavallaro EC, Harris JR, Da Goia MS, Dos Santos Barrado JC, Da Nóbrega AA, De Alvarenga IC, et al. Evaluation of pot-chlorination of wells during a cholera outbreak, Bissau, Guinea-Bissau, 2008. J Water Health. 2011;9:394–402.

Lowe T. Emergency Health Unit After Action Review Madagascar July 2019; 2019.

Ashbaugh HR, Kuang B, Gadoth A, Alfonso VH, Mukadi P, Doshi RH, et al. Detecting Ebola with limited laboratory access in the Democratic Republic of Congo: evaluation of a clinical passive surveillance reporting system. Trop Med Int Heal. 2017;22:1141–53.

Miller LA, Stanger E, Senesi RG, DeLuca N, Dietz P, Hausman L, et al. Use of a Nationwide Call Center for Ebola Response and Monitoring During a 3-Day House-to-House Campaign — Sierra Leone, September 2014. MMWR Morb Mortal Wkly Rep. 2015;64:28–9 https://www.cdc.gov/mmwr/preview/mmwrhtml/mm6401a7.htm. Accessed 17 May 2020.

Khetsuriani N, Perehinets I, Nitzan D, Popovic D, Moran T, Allahverdiyeva V, et al. Responding to a cVDPV1 outbreak in Ukraine: Implications, challenges and opportunities. Vaccine. 2017;35:4769–76.

Age International. Evaluation of Disasters Emergency Committee and Age International funded: Responding to the Ebola outbreak in Sierra Leone through age-inclusive community-led action. 2015. https://www.alnap.org/help-library/evaluation-of-disasters-emergency-committee-and-age-international-funded-responding-to. Accessed 18 May 2020.

Bagonza J, Rutebemberwa E, Mugaga M, Tumuhamye N, Makumbi I. Yellow fever vaccination coverage following massive emergency immunization campaigns in rural Uganda, May 2011: A community cluster survey. BMC Public Health. 2013;13. https://doi.org/10.1186/1471-2458-13-202.

Khonje A, Metcalf CA, Diggle E, Mlozowa D, Jere C, Akesson A, et al. Cholera outbreak in districts around Lake Chilwa, Malawi: Lessons learned. Malawi Med J. 2012;24:29–33.

Jia K, Mohamed K. Evaluating the use of cell phone messaging for community ebola syndromic surveillance in high risked settings in Southern Sierra Leone. Afr Health Sci. 2015;15:797–802.

Youkee D, Brown CS, Lilburn P, Shetty N, Brooks T, Simpson A, et al. Assessment of Environmental Contamination and Environmental Decontamination Practices within an Ebola Holding Unit, Freetown, Sierra Leone. PLoS One. 2015;10.

Ntshoe GM, McAnerney JM, Archer BN, Smit SB, Harris BN, Tempia S, et al. Measles Outbreak in South Africa: Epidemiology of Laboratory-Confirmed Measles Cases and Assessment of Intervention, 2009–2011. PLoS One. 2013;8. https://doi.org/10.1371/journal.pone.0055682.

Sierra Leone YMCA Ebola Outbreak Emergency Response: Evaluation report, February 2016. ReliefWeb; 2016. https://reliefweb.int/report/sierra-leone/sierra-leone-ymca-ebola-outbreak-emergency-response-evaluation-report-february. Accessed 17 May 2020.

Malik MR, Mnzava A, Mohareb E, Zayed A, Al Kohlani A, Thabet AAK, et al. Chikungunya outbreak in Al-Hudaydah, Yemen, 2011: Epidemiological characterization and key lessons learned for early detection and control. J Epidemiol Glob Health. 2014;4:203–11.

Cascioli Sharp R. Real-time learning report on World Vision’s response to the Ebola virus in Sierra Leone. ALNAP; 2015. https://www.alnap.org/help-library/real-time-learning-report-on-world-visions-response-to-the-ebola-virus-in-sierra-leone. Accessed 17 May 2020.

Summers A, Nyensaw T, Montgomery JM, Neatherlin J, Tappero JW. Challenges in Responding to the Ebola Epidemic — Four Rural Counties, Liberia, August–November 2014. MMWR Morb Mortal Wkly Rep. 2014;63:1202–4 https://www.cdc.gov/mmwr/preview/mmwrhtml/mm6350a5.htm. Accessed 16 May 2020.

Fogden D, Matoka S, Singh G. MDRNG020 Nigeria Cholera Epidemic Operational Review. Nigeria: IFRC; 2016. https://www.ifrc.org/en/publications-and-reports/evaluations/?c=&co=&fy=&mo=&mr=1&or=&r=&ti=nigeria&ty=&tyr=&z=. Accessed 24 June 2019.

Lee CT, Bulterys M, Martel LD, Dahl BA. Evaluation of a National Call Center and a Local Alerts System for Detection of New Cases of Ebola Virus Disease — Guinea, 2014–2015. MMWR Morb Mortal Wkly Rep. 2016;65:227–30. https://doi.org/10.15585/mmwr.mm6509a2.

Oladele DA, Oyedeji KS, Niemogha MT, Nwaokorie F, Bamidele M, Musa AZ, et al. An assessment of the emergency response among health workers involved in the 2010 cholera outbreak in northern Nigeria. J Infect Public Health. 2012;5:346–53 https://doi.org/10.1016/j.jiph.2012.06.004.

Fitzpatrick G, Decroo T, Draguez B, Crestani R, Ronsse A, Van den Bergh R, et al. Operational research during the Ebola emergency. Emerg Infect Dis. 2017;23:1057–62.

Logue CH, Lewis SM, Lansley A, Fraser S, Shieber C, Shah S, et al. Case study: Design and implementation of training for scientists deploying to ebola diagnostic field laboratories in Sierra Leone: October 2014 to February 2016. Philos Trans R Soc B Biol Sci. 2017;372. https://doi.org/10.1098/rstb.2016.0299.

Senga M, Koi A, Moses L, Wauquier N, Barboza P, Fernandez-Garcia MD, et al. Contact tracing performance during the Ebola virus disease outbreak in Kenema District, Sierra Leone. Philos Trans R Soc B Biol Sci. 2017;372. https://doi.org/10.1098/rstb.2016.0300.

Hermans V, Zachariah R, Woldeyohannes D, Saffa G, Kamara D, Ortuno-Gutierrez N, et al. Offering general pediatric care during the hard times of the 2014 Ebola outbreak: Looking back at how many came and how well they fared at a Médecins Sans Frontières referral hospital in rural Sierra Leone. BMC Pediatr. 2017;17.

A.S. W, B.G. M, G. G, J.L. G, H. B, M. A, et al. Evaluation of economic costs of a measles outbreak and outbreak response activities in Keffa Zone, Ethiopia. Vaccine. 2014;32:4505–14. https://doi.org/10.1016/j.vaccine.2014.06.035.

Dhillon P, Annunziata G. The Haitian Health Cluster Experience: A comparative evaluation of the professional communication response to the 2010 earthquake and the subsequent cholera outbreak. PLoS Curr. 2012;4. https://doi.org/10.1371/5014b1b407653.

Jones-Konneh TEC, Murakami A, Sasaki H, Egawa S. Intensive education of health care workers improves the outcome of ebola virus disease: Lessons learned from the 2014 outbreak in Sierra Leone. Tohoku J Exp Med. 2017;243:101–5.

Azman AS, Parker LA, Rumunu J, Tadesse F, Grandesso F, Deng LL, et al. Effectiveness of one dose of oral cholera vaccine in response to an outbreak: a case-cohort study. Lancet Glob Heal. 2016;4:e856–63.

Mbonye AK, Wamala JF, Nanyunja M, Opio A, Aceng JR, Makumbi I. Ebola viral hemorrhagic disease outbreak in West Africa- lessons from Uganda. Afr Health Sci. 2014;14:495–501.

Swanson KC, Altare C, Wesseh CS, Nyenswah T, Ahmed T, Eyal N, et al. Contact tracing performance during the Ebola epidemic in Liberia, 2014-2015. PLoS Negl Trop Dis. 2018;12:e0006762. https://doi.org/10.1371/journal.pntd.0006762.

Katawera V, Kohar H, Mahmoud N, Raftery P, Wasunna C, Humrighouse B, et al. Enhancing laboratory capacity during Ebola virus disease (EVD) heightened surveillance in Liberia: lessons learned and recommendations. Pan Afr Med J. 2019;33:8.

Ilesanmi OS, Fawole O, Nguku P, Oladimeji A, Nwenyi O. Evaluation of Ebola virus disease surveillance system in Tonkolili District, Sierra Leone. Pan Afr Med J. 2019;32(Suppl 1):2.

Nyenswah T, Engineer CY, Peters DH. Leadership in Times of Crisis: The Example of Ebola Virus Disease in Liberia. Heal Syst Reform. 2016;2:194–207. https://doi.org/10.1080/23288604.2016.1222793.

Bennett SD, Lowther SA, Chingoli F, Chilima B, Kabuluzi S, Ayers TL, et al. Assessment of water, sanitation and hygiene interventions in response to an outbreak of typhoid fever in Neno District, Malawi. PLoS One. 2018;13.

Adamu US, Archer WR, Braka F, Damisa E, Siddique A, Baig S, et al. Progress toward poliomyelitis eradication — Nigeria, January 2018–May 2019. Morb Mortal Wkly Rep. 2019;68:642–6.

Garde DL, Hall AMR, Marsh RH, Barron KP, Dierberg KL, Koroma AP. Implementation of the first dedicated Ebola screening and isolation for maternity patients in Sierra Leone. Ann Glob Heal. 2016;82:418. https://doi.org/10.1016/j.aogh.2016.04.164.

Aka L-P, Brunnström C, Ogle M. Benin Floods, Cholera and Fire (MDRBJ 009, MDRBJ010 and MDRBJ011) DREF Review March 2013. 2013. http://adore.ifrc.org/Download.aspx?FileId=42185&.pdf.

Lokuge K, Caleo G, Greig J, Duncombe J, McWilliam N, Squire J, et al. Successful Control of Ebola Virus Disease: Analysis of Service Based Data from Rural Sierra Leone. PLoS Negl Trop Dis. 2016;10. https://doi.org/10.1371/journal.pntd.0004498.

IFRC. Health Epidemics Joint Evaluation Report (IFRC). Uganda: IFRC; 2013. https://www.ifrc.org/en/publications-and-reports/evaluations/?c=&co=&fy=&mo=&mr=1&or=&r=&ti=jointevaluation&ty=&tyr=&z=. Accessed 24 July 2019.

Sévère K, Rouzier V, Anglade SB, Bertil C, Joseph P, Deroncelay A, et al. Effectiveness of oral cholera vaccine in Haiti: 37-month follow-up. Am J Trop Med Hyg. 2016;94:1136–42.

Ciglenecki I, Sakoba K, Luquero FJ, Heile M, Itama C, Mengel M, et al. Feasibility of Mass Vaccination Campaign with Oral Cholera Vaccines in Response to an Outbreak in Guinea. PLoS Med. 2013;10:e1001512. https://doi.org/10.1371/journal.pmed.1001512.

Mcgowan C. Somalia OCV campaign After Action Review; 2018.

Santa-Olalla P, Gayer M, Magloire R, Barrais R, Valenciano M, Aramburu C, et al. Implementation of an alert and response system in Haiti during the early stage of the response to the Cholera Epidemic. Am J Trop Med Hyg. 2013;89:688–97.

Ciglenecki I, Bichet M, Tena J, Mondesir E, Bastard M, Tran NT, et al. Cholera in Pregnancy: Outcomes from a Specialized Cholera Treatment Unit for Pregnant Women in Léogâne, Haiti. PLoS Negl Trop Dis. 2013;7.

Dureab F, Ismail O, Müller O, Jahn A. Cholera outbreak in Yemen: Timeliness of reporting and response in the national electronic disease early warning system. Acta Inform Med. 2019;27:85–8.

Dyson C. EHU After Action Review Somalia Cholera Response 2017; 2018.

Stone E, Miller L, Jasperse J, Privette G, Diez Beltran JC, Jambai A, et al. Community Event-Based Surveillance for Ebola Virus Disease in Sierra Leone: Implementation of a National-Level System During a Crisis. PLoS Curr. 2016;8. https://doi.org/10.1371/currents.outbreaks.d119c71125b5cce312b9700d744c56d8.

Asuzu MC, Onajole AT, Disu Y. Public health at all levels in the recent Nigerian Ebola viral infection epidemic: Lessons for community, public and international health action and policy. J Public Health Policy. 2015;36:251–8.

Tegegne AA, Braka F, Shebeshi ME, Aregay AK, Beyene B, Mersha AM, et al. Characteristics of wild polio virus outbreak investigation and response in Ethiopia in 2013-2014: implications for prevention of outbreaks due to importations. BMC Infect Dis. 2018;18:9.

Sadaphal S, Leigh J, Cook G, Hansch S, Toole M, et al. Evaluation of the USAID/OFDA Ebola Virus Disease Outbreak Response in West Africa. 2017.

Reques L, Bolibar I, Chazelle E, Gomes L, Prikazsky V, Banza F, et al. Evaluation of contact tracing activities during the Ebola virus disease outbreak in Guinea, 2015. Int Health. 2017;9:131–3.

Gleason B, Redd J, Kilmarx P, Sesay T, Bayor F, Mozalevskis A, et al. Establishment of an ebola treatment unit and laboratory — Bombali District, Sierra Leone, July 2014–January 2015. Morb Mortal Wkly Rep. 2015;64:1108–11.

Kouadio KI, Clement P, Bolongei J, Tamba A, Gasasira AN, Warsame A, et al. Epidemiological and surveillance response to Ebola virus disease outbreak in Lofa County, Liberia (March-September, 2014); lessons learned. PLoS Curr. 2015;7. https://doi.org/10.1371/currents.outbreaks.9681514e450dc8d19d47e1724d2553a5.

Hurtado C, Meyer D, Snyder M, Nuzzo JB. Evaluating the frequency of operational research conducted during the 2014–2016 West Africa Ebola epidemic. Int J Infect Dis. 2018;77:29–33.

Munodawafa D, Moeti MR, Phori PM, Fawcett SB, Hassaballa I, Sepers C, et al. Monitoring and Evaluating the Ebola Response Effort in Two Liberian Communities. J Community Health. 2018;43:321–7.

Tappero JW, Tauxe RV. Lessons learned during public health response to cholera epidemic in Haiti and the Dominican Republic. Emerg Infect Dis. 2011;17:2087–93.

Makoutodé M, Diallo F, Mongbo V, Guévart E, Bazira L. La Riposte à L’épidémie de Choléra de 2008 à Cotonou (Bénin). Sante Publique (Paris). 2010;22:425–35.

Darcy J, Valingot C, Olsen L, Noor al deen A, Qatinah A. A crisis within a crisis: evaluation of the UNICEF Level 3 response to the cholera epidemic in Yemen. 2018. https://www.unicef.org/evaldatabase/files/Evaluation_of_the_UNICEF_Level_3_response_to_the_cholera_epidemic_in_Yemen_HQEO-2018-001.pdf. Accessed 18 Apr 2019.

Thormar BS. Joint review of Ebola response – Uganda. Uganda: IFRC; 2013. https://www.ifrc.org/en/publications-and-reports/evaluations/?c=&co=&fy=&mo=&mr=1&or=&r=&ti=uganda&ty=&tyr=&z=. Accessed 24 May 2019.

Vaz RG, Mkanda P, Banda R, Komkech W, Ekundare-Famiyesin OO, Onyibe R, et al. The Role of the Polio Program Infrastructure in Response to Ebola Virus Disease Outbreak in Nigeria 2014. J Infect Dis. 2016;213:S140–6 https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4818557/. Accessed 17 May 2020.

Diallo A, Diallo M, Hyjazi Y, Waxman R, Pleah T. Baseline evaluation of infection prevention and control (IPC) in the context of Ebola virus disease (EVD) in nine healthcare facilities in the city of Conakry, Guinea. Antimicrob Resist Infect Control. 2015;4:1–1.

Sadaphal S, Leigh J, Toole M, Cook G, et al. Evaluation of the USAID/OFDA Ebola Virus Disease Outbreak Response in West Africa 2014-2016. Objective 4: Coordination of the Response. USAID/DCHA/OFDA Contract # AID-OAA-I-15-00022, Task Order # AID-OAA-TO-16-00034.

Kamadjeu R, Gathenji C. Designing and implementing an electronic dashboard for disease outbreaks response - Case study of the 2013-2014 Somalia Polio outbreak response dashboard. Pan Afr Med J. 2017;27:22.

Oji MO, Haile M, Baller A, Tremblay N, Mahmoud N, Gasasira A, et al. Implementing infection prevention and control capacity building strategies within the context of Ebola outbreak in a “Hard-to-Reach” area of Liberia. Pan Afr Med J. 2018;31. https://doi.org/10.11604/pamj.2018.31.107.15517.

Elemuwa C, Kutalek R, Ali M, Mworozi E, Kochhar S, Rath B, et al. Global lessons from Nigeria’s ebolavirus control strategy. Expert Rev Vaccines. 2015;14:1397–400. https://doi.org/10.1586/14760584.2015.1064313.

Bwire G, Mwesawina M, Baluku Y, Kanyanda SSE, Orach CG. Cross-border cholera outbreaks in Sub-Saharan Africa, the mystery behind the silent illness: What needs to be done? PLoS One. 2016;11. https://doi.org/10.1371/journal.pone.0156674.

Shepherd M, Frize J, De Meulder F, Bizzari M, Lemaire I, Horst LR, et al. An evaluation of WFP’s L3 Response to the Ebola virus disease (EVD) crisis in West Africa: Evaluation Report. WFP Office of Evaluation; 2017.

Teng JE, Thomson DR, Lascher JS, Raymond M, Ivers LC. Using Mobile Health (mHealth) and Geospatial Mapping Technology in a Mass Campaign for Reactive Oral Cholera Vaccination in Rural Haiti. PLoS Negl Trop Dis. 2014;8:e3050. https://doi.org/10.1371/journal.pntd.0003050.

OXFAM. Evaluation of Sierra Leone Cholera Response 2012. Project Effectiveness Review. Oxfam GB Global Humanitarian Indicator. 2013.

Cardile AP, Littell CT, Backlund MG, Heipertz RA, Brammer JA, Palmer SM, et al. Deployment of the 1st Area Medical Laboratory in a Split-Based Configuration During the Largest Ebola Outbreak in History. Mil Med. 2016;181:e1675–84.

Vogt F, Fitzpatrick G, Patten G, van den Bergh R, Stinson K, Pandolfi L, et al. Assessment of the MSF triage system, separating patients into different wards pending ebola virus laboratory confirmation, Kailahun, Sierra Leone, July to September 2014. Eurosurveillance. 2015;20. https://doi.org/10.2807/1560-7917.ES.2015.20.50.30097.

Oza S, Jazayeri D, Teich JM, Ball E, Nankubuge PA, Rwebembera J, et al. Development and deployment of the OpenMRS-Ebola electronic health record system for an Ebola treatment center in Sierra Leone. J Med Internet Res. 2017;19. https://doi.org/10.2196/jmir.7881.

ACF. Rapport de capitalisation au sujet de l’épidémie de choléra au Tchad, 2010. ReliefWeb. https://reliefweb.int/report/chad/rapport-de-capitalisation-au-sujet-de-lépidémie-de-choléra-au-tchad-2010.

Spiegel P, Ratnayake R, Hellman N, Lantagne D, Ververs M, Ngwa M, et al. Cholera in Yemen: a case study of epidemic preparedness and response. 2019.

Hennessee I, Guilavogui T, Camara A, Halsey ES, Marston B, McFarland D, et al. Adherence to Ebola-specific malaria case management guidelines at health facilities in Guinea during the West African Ebola epidemic. Malar J. 2018;17. https://doi.org/10.1186/s12936-018-2377-3.

Murray A, Majwa P, Roberton T, Burnham G. Report of the real time evaluation of Ebola control programs in Guinea, Sierra Leone and Liberia. ALNAP; 2015.

UNICEF. Evaluation of UNICEF’s response to the Ebola outbreak in West Africa, 2014-2015. UNICEF Evaluation database; 2016.

World Health Organization. WHO Report of the Ebola Interim Assessment Panel - July 2015. Geneva: WHO; 2020.

Ivers LC, Hilaire IJ, Teng JE, Almazor CP, Jerome JG, Ternier R, et al. Effectiveness of reactive oral cholera vaccination in rural Haiti: A case-control study and bias-indicator analysis. Lancet Glob Heal. 2015;3:e162–8.

Wolfe CM, Hamblion EL, Schulte J, Williams P, Koryon A, Enders J, et al. Ebola virus disease contact tracing activities, lessons learned and best practices during the Duport Road outbreak in Monrovia, Liberia, November 2015. PLoS Negl Trop Dis. 2017;11. https://doi.org/10.1371/journal.pntd.0005597.

Abramowitz S, Bardosh K, Heaner G. Evaluation of Save the Children’s Community Care Centers in Dolo Town and Worhn, Margibi County, Liberia. ALNAP; 2015.

YMCA. Evaluation Report: Liberia YMCA Ebola Outbreak Emergency Response. 2015.

Platt A, Kerley L. External Evaluation of Plan International UK’s Response to the Ebola Virus Outbreak in Sierra Leone. ALNAP; 2016.

Adams J, Lloyd A, Miller C. The Oxfam Ebola Response in Liberia and Sierra Leone: An evaluation report for the Disasters Emergency Committee. Oxfam Policy & Practice; 2015.

Ajayi NA, Nwigwe CG, Azuogu BN, Onyire BN, Nwonwu EU, Ogbonnaya LU, et al. Containing a Lassa fever epidemic in a resource-limited setting: Outbreak description and lessons learned from Abakaliki, Nigeria (January-March 2012). Int J Infect Dis. 2013;17:e1011–6.

Rees-Gildea P. Sierra Leone Cholera ERU Operation Review. ALNAP; 2013.

de Wit E, Rosenke K, Fischer RJ, Marzi A, Prescott J, Bushmaker T, et al. Ebola Laboratory Response at the Eternal Love Winning Africa Campus, Monrovia, Liberia, 2014–2015. J Infect Dis. 2016;214(Suppl 3):S169–76.

Global Communities. Stopping Ebola in its Tracks: A Community-Led Response; 2015.

Brown CM, Benenson GA, Brunette G, Cetron M, Chen T-H, Cohen NJ, et al. Airport Exit and Entry Screening for Ebola — August–November 10, 2014. 2020. https://www.cdc.gov/mmwr/preview/mmwrhtml/mm6349a5.htm.

Tauxe RV, Lynch M, Lambert Y, Sobel J, Domerçant JW, Khan A. Rapid development and use of a nationwide training program for cholera management, Haiti, 2010. Emerg Infect Dis. 2011;17:2094–8.

TKG International. CARE International DEC Ebola Emergency Response Project Final Evaluation Report. 2016.

Victoria M, Gerber GS, Gasasira A, Sugerman DE, Manneh F, Chenoweth P, Kurnit MR, Abanida AN EA. Evaluation of Polio Supplemental Immunization Activities in Kano, Katsina, and Zamfara States, Nigeria: Lessons in Progress. J Infect Dis. 2014;20:S91–7.

Nevin RL, Anderson JN. The timeliness of the US military response to the 2014 Ebola disaster: a critical review. Med Confl Surviv. 2016;32:40–69.

Fu C, Roberton T, Burnham G. Community-based social mobilization and communications strategies utilized in the 2014 West Africa Ebola outbreak. Ann Glob Heal. 2015;81:126.

House of Commons. Ebola: Responses to a public health emergency. Second Report of Session 2015-16. HC 338.

de la Rosa Vazquez O. Evaluation of EHU/Save the Children Democratic Republic of Congo (DRC) Yellow Fever Mass Vaccination Campaign in Binza Ozone Health Zone, Kinshasa province (DRC), 2016; 2017.

Kokki M, Safrany N. Evaluation of ECDC Ebola deployment in Guinea Final report. Stockholm: ECDC; 2017. https://doi.org/10.2900/202126.

Ndiaye SM, Ahmed MA, Denson M, Craig AS, Kretsinger K, Cherif B, et al. Polio Outbreak Among Nomads in Chad: Outbreak Response and Lessons Learned. J Infect Dis. 2014;210(Suppl 1):S74–84. https://doi.org/10.1093/infdis/jit564.

Li ZJ, Tu WX, Wang XC, Shi GQ, Yin ZD, Su HJ, et al. A practical community-based response strategy to interrupt Ebola transmission in Sierra Leone, 2014-2015. Infect Dis Poverty. 2016;5. https://doi.org/10.1186/s40249-016-0167-0.

Borchert M, Mutyaba I, Van Kerkhove MD, Lutwama J, Luwaga H, Bisoborwa G, et al. Ebola haemorrhagic fever outbreak in Masindi District, Uganda: Outbreak description and lessons learned. BMC Infect Dis. 2011;11. https://doi.org/10.1186/1471-2334-11-357.

Routh JA, Sreenivasan N, Adhikari BB, Andrecy LL, Bernateau M, Abimbola T, et al. Cost evaluation of a government-conducted oral cholera vaccination campaign - Haiti, 2013. Am J Trop Med Hyg. 2017;97:37–42.

Grayel Y. Programme D’Intervention Pour Limiter Et Prevenir La Propagation De L’Epidemie Du Cholera En Republique Democratique Du Congo. New York: ACF; 2014.

OXFAM. Humanitarian Quality Assurance - Sierra Leone: Evaluation of Oxfam’s humanitarian response to the West Africa Ebola crisis. Oxfam Policy & Practice; 2017. https://policy-practice.oxfam.org.uk/publications/humanitarian-quality-assurance-sierra-leone-evaluation-of-oxfams-humanitarian-r-620191. Accessed 17 May 2020.

Cook G, Leigh J, Toole M, Hansch S, Sadaphal S, et al. Evaluation of the USAID/OFDA Ebola Virus Disease Outbreak Response in West Africa 2014-2016. Objective 2: Effectiveness of Programmatic Components. Washington DC; 2018.

Nkwogu L, Shuaib F, Braka F, Mkanda P, Banda R, Korir C, et al. Impact of engaging security personnel on access and polio immunization outcomes in security-inaccessible areas in Borno state, Nigeria. BMC Public Health. 2018;18:1311. https://doi.org/10.1186/s12889-018-6188-9.

Dobai A, Tallada J. Final Evaluation Cholera Emergency Appeal in Haiti and the Dominican Republic 2014 - 2016; 2016.

Cancedda C, Davis SM, Dierberg KL, Lascher J, Kelly JD, Barrie MB, et al. Strengthening Health Systems while Responding to a Health Crisis: Lessons Learned by a Nongovernmental Organization during the Ebola Virus Disease Epidemic in Sierra Leone. J Infect Dis. 2016;214:S153–63.

Nielsen CF, Kidd S, Sillah ARM, Davis E, Mermin J, Kilmarx PH. Improving burial practices and cemetery management during an Ebola virus disease epidemic — Sierra Leone, 2014. Morb Mortal Wkly Rep. 2015;64:20–7.

Olu OO, Lamunu M, Nanyunja M, Dafae F, Samba T, Sempiira N, et al. Contact Tracing during an Outbreak of Ebola Virus Disease in the Western Area Districts of Sierra Leone: Lessons for Future Ebola Outbreak Response. Front Public Heal. 2016;4. https://doi.org/10.3389/fpubh.2016.00130.

Oleribe OO, Crossey MME, Taylor-Robinson SD. Nigerian response to the 2014 Ebola viral disease outbreak: lessons and cautions. Pan Afr Med J. 2015;22(Suppl 1):13.

Msyamboza KP, M’bang’ombe M, Hausi H, Chijuwa A, Nkukumila V, Kubwalo HW, et al. Feasibility and acceptability of oral cholera vaccine mass vaccination campaign in response to an outbreak and floods in Malawi. Pan Afr Med J. 2016;23:203.

SCI. After Action Review: DRC EVD Response in North Kivu and Ituri 2019. 2019.

Yaméogo TM, Kyelem CG, Poda GEA, Sombié I, Ouédraogo MS, Millogo A. Épidémie de méningite : Évaluation de la surveillance et du traitement des cas dans les formations sanitaires d’un district du Burkina Faso. Bull la Soc Pathol Exot. 2011;104:68–73.

Bell BP, Damon IK, Jernigan DB, Kenyon TA, Nichol ST, O’Connor JP, et al. Overview, Control Strategies, and Lessons Learned in the CDC Response to the 2014–2016 Ebola Epidemic. MMWR Suppl. 2016;65:4–11. https://doi.org/10.15585/mmwr.su6503a2.

Standley CJ, Muhayangabo R, Bah MS, Barry AM, Bile E, Fischer JE, et al. Creating a National Specimen Referral System in Guinea: Lessons From Initial Development and Implementation. Front Public Heal. 2019;7:83. https://doi.org/10.3389/fpubh.2019.00083.

Abubakar A, Ruiz-Postigo JA, Pita J, Lado M, Ben-Ismail R, Argaw D, et al. Visceral Leishmaniasis Outbreak in South Sudan 2009-2012: Epidemiological Assessment and Impact of a Multisectoral Response. PLoS Negl Trop Dis. 2014;8. https://doi.org/10.1371/journal.pntd.0002720.

Kateh F, Nagbe T, Kieta A, Barskey A, Gasasira AN, Driscoll A, et al. Rapid response to ebola outbreaks in remote areas — Liberia, July–November 2014. Morb Mortal Wkly Rep. 2015;64:188–92.

Federspiel F, Ali M. The cholera outbreak in Yemen: Lessons learned and way forward. BMC Public Health. 2018;18:1338. https://doi.org/10.1186/s12889-018-6227-6.

Kamadjeu R, Mahamud A, Webeck J, Baranyikwa MT, Chatterjee A, Bile YN, et al. Polio outbreak investigation and response in Somalia, 2013. J Infect Dis. 2014;210(Suppl 1):S181–6. https://doi.org/10.1093/infdis/jiu453.

Bayntun C, Zimble SA. Evaluation of the OCG Response to the Ebola Outbreak: Lessons learned from the Freetown Ebola Treatment Unit, Sierra Leone. Managed by the Vienna Evaluation Unit. 2016. http://tukul.msf.org. Accessed 18 May 2020.

Sepers CE, Fawcett SB, Hassaballa I, Reed FD, Schultz J, Munodawafa D, et al. Evaluating implementation of the Ebola response in Margibi County, Liberia. Health Promot Int. 2019;34:510–2.

Lupel A, Snyder M. The Mission to Stop Ebola: Lessons for UN Crisis Response. 2017. www.ipinst.org. Accessed 18 May 2020.

Grayel Y. Evaluation Externe Réponse d’Urgence à L’Epidémie de Choléra en Haïti (ACF); 2011.

Ratnayake R, Crowe SJ, Jasperse J, Privette G, Stone E, Miller L, et al. Assessment of community event-based surveillance for Ebola virus disease, Sierra Leone, 2015. Emerg Infect Dis. 2016;22:1431–7.

Momoh HB, Lamin F, Samai I. Final Report: Evaluation of DEC Ebola Response Program Phase 1 and 2. DEC Emergency Response Program Implemented by CAFOD, Caritas, Street Child and Trócaire in Sierra Leone; 2016.

Nic Lochlainn LM, Gayton I, Theocharopoulos G, Edwards R, Danis K, Kremer R, et al. Improving mapping for Ebola response through mobilising a local community with self-owned smartphones: Tonkolili District, Sierra Leone, January 2015. PLoS One. 2018;13:e0189959. https://doi.org/10.1371/journal.pone.0189959.

Gauthier J. A Real-Time Evaluation of ACF’s response to cholera emergency in Juba, South Sudan. ALNAP; 2014. https://www.alnap.org/help-library/a-real-time-evaluation-of-acf’s-response-to-cholera-emergency-in-juba-south-sudan. Accessed 17 May 2020.

Jobanputra K, Greig J, Shankar G, Perakslis E, Kremer R, Achar J, et al. Electronic medical records in humanitarian emergencies - the development of an Ebola clinical information and patient management system. F1000Research. 2017;5. https://doi.org/10.12688/f1000research.8287.3.

IFRC. Evaluation of the Red Cross and Red Crescent contribution to the 2009 Africa polio outbreak response. 2010.

Carafano JJ, Florance C, Kaniewski D. The Ebola Outbreak of 2013–2014: An Assessment of U.S. Actions. The Heritage Foundation; 2015. https://www.heritage.org/homeland-security/report/the-ebola-outbreak-2013-2014-assessment-us-actions. Accessed 18 May 2020.

Heitzinger K, Impouma B, Farham B, Hamblion EL, Lukoya C, MacHingaidze C, et al. Using evidence to inform response to the 2017 plague outbreak in Madagascar: A view from the WHO African Regional Office. Epidemiol Infect. 2019;147:e3 https://doi.org/10.1017/S0950268818001875.

Soeters HM, Koivogui L, de Beer L, Johnson CY, Diaby D, Ouedraogo A, et al. Infection prevention and control training and capacity building during the Ebola epidemic in Guinea. PLoS One. 2018;13. https://doi.org/10.1371/journal.pone.0193291.

Stehling-Ariza T, Rosewell A, Moiba SA, Yorpie BB, Ndomaina KD, Jimissa KS, et al. The impact of active surveillance and health education on an Ebola virus disease cluster - Kono District, Sierra Leone, 2014-2015. BMC Infect Dis. 2016;16:611. https://doi.org/10.1186/s12879-016-1941-0.

Accountability to Affected Populations (AAP): A brief overview. https://interagencystandingcommittee.org/system/files/iasc_aap_psea_2_pager_for_hc.pdf. Accessed 15 May 2020.

Hoffman SJ, Silverberg SL. Delays in global disease outbreak responses: Lessons from H1N1, Ebola, and Zika. Am J Public Health. 2018;108:329–33.

WHO. Number of deaths due to cholera. Geneva: WHO; 2011. https://www.who.int/gho/epidemic_diseases/cholera/cases_text/en/. Accessed 21 Apr 2020.

CDC. Lassa Fever. https://www.cdc.gov/vhf/lassa/index.html. Accessed 23 Apr 2020.

Allen T, Parker M, Stys P. Ebola responses reinforce social inequalities. Africa at LSE. London School of Economics; 2019. https://blogs.lse.ac.uk/africaatlse/2019/08/22/ebola-responses-social-inequalities/. Accessed 18 May 2020.

Ali H, Dumbuya B, Hynie M, Idahosa P, Keil R, Perkins P. The Social and Political Dimensions of the Ebola Response: Global Inequality, Climate Change, and Infectious Disease. In: Climate Change Management. Cham: Springer; 2016. p. 151–69.

World Health Organization (WHO). Coronavirus Disease (COVID-19) Dashboard. 2020. https://covid19.who.int/. Accessed 23 July 2020.

Farmer P. Social Inequalities and Emerging Infectious Diseases. Emerg Infect Dis. 1996;2:259–69.

Barreto ML. Health inequalities: A global perspective. Cienc e Saude Coletiva. 2017;22:2097–108.

Campbell M, McKenzie JE, Sowden A, Katikireddi SV, Brennan SE, Ellis S, et al. Synthesis without meta-analysis (SWiM) in systematic reviews: Reporting guideline. BMJ. 2020;368. https://doi.org/10.1136/bmj.l6890.

Herten-Crabb A, McDonald B, Sigfrid L, Rahman-Shepherd A, Verrecchia R, Carson G, et al. The state of governance and coordination for health emergency preparedness and response: background report commissioned by the Global Preparedness Monitoring Board. 2019. https://apps.who.int/gpmb/thematic_report.html. Accessed 24 Apr 2020.

Wang R. Governance Implications Of Global Infectious Disease Epidemics Under Shared Health Governance Scheme. Lessons From SARS. Public Heal Theses. 2012:1–32 https://elischolar.library.yale.edu/ysphtdl/1306. Accessed 24 Apr 2020.

Bedford J, Farrar J, Ihekweazu C, Kang G, Koopmans M, Nkengasong J. A new twenty-first century science for effective epidemic response. Nature. 2019;575:130–6.

Acknowledgements

We would like to thank Ruwan Ratnayake and Imran Morhaso-Bello for their inputs.

Funding

This work was supported by UK Research and Innovation as part of the Global Challenges Research Fund, grant number ES/P010873/.

Author information

Contributions

This study was designed by AW and FC. Data collection and cleaning were undertaken by AW, JM and AG. The analysis was undertaken by AW. All authors contributed to the writing of the first draft. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

As this study relied on secondary data, no ethical approval was required.

Consent for publication

Not applicable

Competing interests

We declare no competing interests.

Supplementary information

Additional file 1. List of Epidemics.

Additional file 2. Evaluation Extraction Table.

Additional file 3. Search Strategy & Evaluation Quality Checklist.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Warsame, A., Murray, J., Gimma, A. et al. The practice of evaluating epidemic response in humanitarian and low-income settings: a systematic review. BMC Med 18, 315 (2020). https://doi.org/10.1186/s12916-020-01767-8

DOI: https://doi.org/10.1186/s12916-020-01767-8