Abstract

Background

eHealth programmes in African countries face fierce competition for scarce resources. Such initiatives should not proceed without adequate appraisal of their probable impacts, thereby acknowledging their opportunity costs and the need for appraisals to promote optimal use of available resources. However, since there is no broadly accepted eHealth impact appraisal framework available to provide guidance, and local expertise is limited, African health ministries have difficulty completing such appraisals. The Five Case Model, used in several countries outside Africa, has the potential to function as a decision-making tool in African eHealth environments and serve as a key component of an eHealth impact model for Africa.

Methods

This study identifies internationally recognised metrics and readily accessible data sources to assess the applicability of the model’s five cases to African countries.

Results

Ten metrics are identified that align with the Five Case Model’s five cases, including nine component metrics and one summary metric that aggregates the nine. The metrics cover the eHealth environment, human capital and governance, technology development, and finance and economics. Fifty-four African countries are scored for each metric. Visualisation of the metric scores using spider charts reveals profiles of the countries’ relative performance and provides an eHealth Investment Readiness Assessment Tool.

Conclusion

The utility of these comparisons to strengthen eHealth investment planning suggests that the five cases are applicable to African countries’ eHealth investment decisions. The potential for the Five Case Model to have a role in an eHealth impact appraisal framework for Africa should be validated through field testing.

Background

African countries experience high disease burdens compounded by resource shortages. This results in competition for available resources so that each decision to invest represents an opportunity cost that governments must weigh carefully to ensure optimal use of available funds [1]. eHealth initiatives offer potential to help improve health system performance and there is growing pressure to show positive impacts and health system benefits for each investment [2]. African governments are handicapped by a lack of evidence of which eHealth impact appraisal methodologies are applicable to support decision-making in African countries. This is further complicated by a diversity of views on what eHealth means [3], the role it should play [4], and benchmarks for good practice [5]. Without resolving these issues, the likelihood of optimal investment decisions being made diminishes.

A number of terms have been used to describe the use of Information Communication Technology (ICT) in the health sector, such as eHealth, digital health and health ICT, as well as sub-disciplines such as telemedicine and mobile health [6]. This paper uses the term eHealth and its World Health Organization (WHO) definition: “eHealth is the use of ICTs for health” [7]. While digital health frequently appears as an alternative to eHealth, there are recognised differences in its meaning [3].

Many eHealth initiatives fail [8, 9]. Therefore, eHealth should not attract public investment until its probable impacts have been appraised. The main reason for estimating impact is to ensure that the benefits realised from an investment justify the costs over time for key stakeholders, and rationalise the opportunity cost. This requires a value judgement tailored to local priorities such as access to services, Sustainable Development Goals (SDGs) and Universal Health Coverage (UHC) [10], and a way to balance the competing dimensions of value and affordability. An eHealth Impact model is a generic appraisal approach to support this type of decision-making [11]. For African countries, a methodology is needed to help those faced with making decisions about proposed eHealth initiatives to conduct prospective appraisals despite a scarcity of specialised economics, eHealth and other expertise [12].

There are numerous approaches to the assessment of economic impact in the health sector [13,14,15]. Some, such as the Health Impact Assessment approach [16, 17], extend beyond economic aspects to deal with broader societal impact, often referred to as socio-economic impact [18]. Few approaches are specific to eHealth, with notable exceptions such as the European eHealth IMPACT study [19], the Digital Health Impact Framework (DHIF) developed by the Asian Development Bank [20] and based on the Five Case Model, and a staged-based approach to integrating economic and financial evaluations specifically for mobile health initiatives [21].

The Five Case Model is described in The Green Book [22] and a User Manual [23]. Each case has a specific purpose, addressing the distinct questions summarised in Table 1. The Five Case Model is recommended by the United Kingdom [22, 24] and New Zealand [25] as a tool for promoting accountability for decisions about a variety of public spending initiatives, including eHealth. It provides an appraisal of the estimated probable value of an initiative’s options within the complex health system in which it operates. This strengthens the justification for investing in initiatives that perform well across the five cases, and justifies further research into the model’s potential utility.

The Five Case Model is a decision-making tool designed with the flexibility African countries need, such as balancing value against affordability constraints, and allowing progress despite limited human capacity to conduct complex eHealth economic appraisals. Nevertheless, the applicability of the five cases to African eHealth investments has not been assessed. In order to show this applicability, metrics are needed that are aligned to the five cases and relevant to African countries’ eHealth investment decisions. This study aims to identify appropriate metrics and data sources in order to judge the applicability of the Five Case Model in African eHealth settings, as an important step preceding field testing of the Five Case Model in Africa.

Methods

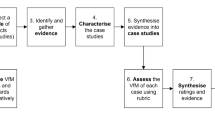

To achieve the aim, readily accessible online data sources were explored to identify candidate metrics. The primary selection criteria were that each metric should provide information relevant to an eHealth issue aligned to one or more of the primary questions of the five cases, and be accessible online. Candidate metrics were then assessed for data availability from recognised sources such as the WHO, the International Telecommunication Union (ITU) and the World Bank. Finally, the number of African countries for which the data were available was assessed. The initial intention was to select candidate metrics for which data were available for more than 80% of countries. However, the threshold was subsequently revised to 60% due to sparsity of data. Where fewer than 60% of African countries had available data, the metric was excluded. The remaining metrics became the component metrics of an eHealth Investment Readiness Assessment Tool.
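The data-availability screen described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the metric names and values are hypothetical, `None` is assumed to mark a country without data, and a metric is kept when its coverage is at least the 60% threshold.

```python
def coverage(values):
    """Fraction of countries with a reported (non-None) value."""
    return sum(v is not None for v in values) / len(values)


def select_metrics(candidates, threshold=0.60):
    """Keep metrics whose data coverage is at least `threshold`."""
    return [name for name, values in candidates.items()
            if coverage(values) >= threshold]


# Hypothetical toy data for five countries; None marks a missing value.
candidates = {
    "metric_a": [0.4, 0.9, None, 0.7, 0.5],    # 4 of 5 countries reported
    "metric_b": [None, None, 0.3, None, 0.6],  # 2 of 5 countries reported
    "metric_c": [0.1, None, 0.8, None, 0.2],   # 3 of 5 countries reported
}

selected = select_metrics(candidates)
print(selected)
```

With these toy values, `metric_b` falls below the threshold and is excluded, while `metric_c` sits exactly on it and is retained.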

Data for component metrics were collected for the fifty-four countries of the United Nations African region [26]. Where data were missing for a metric, values were set at zero to avoid recording progress that was not substantiated. The exception was where more than a third of a metric’s data were missing, in which case the mean was used, since a zero value might reflect challenges with the metric’s data collection process rather than limited eHealth development. Thereafter, for each metric, values were rescaled to a proportion of 1, so that the country with the highest value for that metric scored 1, the maximum possible. The unweighted average of all component metrics provided a summary score that was used to rank overall country eHealth investment readiness. Scores were categorised as good (> 0.70), moderate (0.50–0.70) or poor (< 0.50).
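The scoring steps in this paragraph can be illustrated with a short Python sketch. It is a reading of the text under stated assumptions rather than the authors' implementation: the metric names and values are hypothetical, the mean used for imputation is assumed to be the mean of the available values for that metric, and every metric is assumed to have at least one positive value.

```python
def impute(values):
    """Fill missing values (None): zero by default, or the mean of the
    available values when more than a third of the data are missing."""
    present = [v for v in values if v is not None]
    missing = len(values) - len(present)
    if missing > len(values) / 3:
        fill = sum(present) / len(present)  # assumption: mean of available values
    else:
        fill = 0.0
    return [fill if v is None else v for v in values]


def normalise(values):
    """Rescale so the highest-scoring country gets 1."""
    top = max(values)
    return [v / top for v in values]


def summary_scores(metrics):
    """Unweighted mean of the normalised component metrics, per country."""
    scored = [normalise(impute(values)) for values in metrics.values()]
    return [sum(column) / len(scored) for column in zip(*scored)]


def categorise(score):
    """Good (> 0.70), moderate (0.50-0.70) or poor (< 0.50)."""
    if score > 0.70:
        return "good"
    if score >= 0.50:
        return "moderate"
    return "poor"


# Hypothetical toy data: two metrics for three countries.
metrics = {"m1": [50.0, 100.0, None], "m2": [2.0, 4.0, 8.0]}
scores = summary_scores(metrics)
labels = [categorise(s) for s in scores]
print(scores, labels)
```

Because normalisation divides by the maximum, imputing the mean before or after rescaling gives the same result; the sketch imputes first only for readability.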

Metric scores were analysed using spider charts, a graphical method of displaying multivariate data in a two-dimensional chart of three or more quantitative variables represented on axes starting from the same point. These visualisations were used to create multi-country profiles for five groups of countries: first, the countries with the highest five summary scores, and thereafter the countries with the highest, the lowest and the median scores for each of four regional economic communities of the African Union [27]: the Arab Maghreb Union (AMU), East African Community (EAC), Economic Community of West African States (ECOWAS) and the Southern African Development Community (SADC).
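The geometry behind a spider chart can be sketched as follows. Each metric is given its own axis radiating from a common origin, and a country's score sets the distance along that axis; joining the points yields the country's profile. The function name, the choice to place the first axis at the top, and the clockwise ordering are assumptions made for illustration only.

```python
import math


def spider_vertices(scores):
    """Return (x, y) points for scores placed on equally spaced radial axes."""
    n = len(scores)
    if n < 3:
        raise ValueError("a spider chart needs three or more variables")
    points = []
    for i, score in enumerate(scores):
        # Assumption: first axis points straight up, later axes go clockwise.
        angle = math.pi / 2 - 2 * math.pi * i / n
        points.append((score * math.cos(angle), score * math.sin(angle)))
    return points


# Hypothetical scores for four metrics of one country.
polygon = spider_vertices([1.0, 0.5, 1.0, 0.5])
for x, y in polygon:
    print(round(x, 3), round(y, 3))
```

The resulting points can be passed to any plotting library as a closed polygon; overlaying several countries' polygons on the same axes gives the multi-country profiles described above.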

Results

Candidate metrics were identified based on the authors’ experience working in eHealth in African countries over many decades. This identified the following nineteen candidate metrics, arranged in four categories:

Category 1: eHealth development indicators

1. Global digital health index [28]

2. Global Observatory for eHealth (GOE) survey score [29]

3. Relative ranking among countries that have achieved 100% birth registration and 80% death registration

4. Relative ranking among countries that have conducted at least one population and housing census in the last 10 years

5. Service availability and readiness assessment [30]

6. Status of national eHealth strategy

Category 2: Financial and economic indicators

7. Current Health Expenditure (CHE) as a percentage of Gross Domestic Product (GDP) [31]

8. CHE per capita [32]

9. Government debt per capita

10. Proportion of total government spending on essential services

11.

Category 3: ICT development indicators

12.

13. International Health Regulations (IHR) capacity and health emergency preparedness [37]

14. International Organization for Standardization ratings

15. Internet penetration score [38]

16. ITU percentage of individuals using the Internet [39]

Category 4: Workforce and governance indicators

17.

18. Ibrahim Index of Governance in Africa score [42]

19. Rating agency risks assessment scores

Nine of the candidate metrics had sufficiently complete and readily accessible data. Table 2 lists the nine selected metrics and indicates which of the five cases each addresses and the percentage of countries for which data were found. For each metric, the most recent available data were used. The most recent GOE survey score was for 2015 and was the only source found for data about countries’ eHealth environments. In contrast, the most recent data for the status of eHealth Strategy were for 2018. The other seven data sources were for years from 2015 to 2017.

Ten candidate metrics were rejected for the following reasons:

- Data sets incomplete, in the case of the Global digital health index

- Data sets not readily accessible, in the case of government debt per capita and proportion of total government spending on essential services

- No easily interpretable summary score, in the case of the service availability and readiness assessment, rating agency assessments, IHR capacity and health emergency preparedness, and International Organization for Standardization ratings

- Similar, more appropriate metrics identified, as in the case of relative ranking among countries that have conducted at least one population and housing census in the last 10 years, and the relative ranking among countries that have achieved 100% birth registration and 80% death registration.

Descriptions of each of the selected metrics and the approaches to missing data are provided in Table 3.

Figure 1 shows the country scores and relative rankings of the summary metric. Detailed scores are provided in Tables 4 and 5.

Mauritius achieved the highest summary metric score and was in the top five for five other metrics: CHE per capita (1st), IDI (1st), Ibrahim Governance Index (1st), HCI (2nd) and Internet penetration (4th). For the other four metrics Mauritius did not score in the top 20 countries.

Seven metrics showed greater than 0.50 correlation with the summary metric, namely the Ibrahim Governance Index (0.85), Internet penetration (0.78), ICT Development Index (IDI) (0.73), eHealth Strategy (0.61), Human Capital Index (HCI) (0.60), Global Observatory for eHealth (GOE) survey (0.53) and Current Health Expenditure (CHE) per capita (0.52). Correlation between component metrics was generally low, except between IDI and Internet penetration (0.84). Other correlations greater than 0.50 were between the Ibrahim Governance index and four other metrics: IDI (0.66), Internet penetration (0.64), CHE per capita (0.59), and HCI (0.52), and between CHE per capita and the two ICT metrics, Internet penetration (0.65) and IDI (0.65).
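The text does not state which correlation coefficient was used. Assuming the common Pearson coefficient, the calculation between one component metric and the summary metric can be sketched as below; the function name and the score values are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical normalised scores for four countries.
governance = [0.9, 0.7, 0.5, 0.3]
summary = [0.8, 0.6, 0.5, 0.2]
r = pearson(governance, summary)
print(round(r, 2))
```

Note that a component metric contributes to the summary score, so some positive correlation between the two is built in; the comparison is most informative when read alongside the correlations between component metrics.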

Results for five country groupings are provided in Tables 6, 7, 8, 9 and 10. Table 6 covers the five countries that scored highest on the summary metric. Tables 7, 8, 9 and 10 each show the scores for three countries (highest scoring, lowest scoring and the median) from one of four regional economic communities. To aid comparison, the same data are plotted as spider charts in Figs. 2, 3, 4, 5 and 6, where the axes represent the metrics and are arranged radially (1–10), with the scores for each metric plotted and linked.

In the highest scoring group there are results from Mauritius (0.74), South Africa (0.68), Botswana (0.65), Seychelles (0.64) and Morocco (0.62). All five countries scored above 0.70 on the Ibrahim Governance Index (> 0.73) and IDI (> 0.77). Internet penetration scores were good (> 0.84) for all except Botswana (0.62). Despite these high scores, all five countries had poor scores for CHE as a % of GDP and moderate or poor for real GDP growth rates. The results are shown in Table 6 and Fig. 2.

Three of the five AMU member countries were compared. Morocco scored highest (0.62), Libya lowest (0.36) and Tunisia was the median (0.52). Libya achieved the highest score for growth of real GDP out of all 54 countries and Morocco and Tunisia achieved good scores for IDI, Internet penetration, HCI and governance. All other scores were moderate or poor. The results are in Table 7 and Fig. 3.

Three of the six EAC member countries were compared. Kenya scored highest (0.58), South Sudan lowest (0.16) and Tanzania was the median (0.54). Kenya and Tanzania scored above 0.70 for strategy, growth of real GDP and governance. Kenya also scored above 0.70 for Internet penetration and HCI. All three countries’ scores were poor for CHE per capita and CHE as a percentage of GDP. South Sudan’s scores were poor for all metrics. The results are in Table 8 and Fig. 4.

Three of the fifteen ECOWAS member states were compared. Senegal scored highest (0.59), Guinea-Bissau lowest (0.28) and Sierra Leone was the median (0.46). All three countries achieved good scores for growth of real GDP and poor scores for CHE per capita and IDI. Senegal’s scores were good for strategy, GOE survey and governance and Sierra Leone scored the highest out of all 54 countries for CHE as a percentage of GDP. All other scores were moderate or poor. The results are in Table 9 and Fig. 5.

Finally, three member states of SADC were compared. Mauritius scored highest (0.74), Angola lowest (0.31) and Namibia was the median (0.49). As discussed earlier, Mauritius was the only country to score above 0.70 for the summary metric. Mauritius surpassed Namibia and Angola in all metrics except the GOE survey, where Mauritius, Namibia and Angola scored the same (0.54), and CHE as a % of GDP, where Namibia scored higher (0.49) than Mauritius (0.30). Namibia and Angola had poor scores for eHealth strategy, CHE as a % of GDP, growth of real GDP and Internet penetration. The results are in Table 10 and Fig. 6.

Discussion

Analysis of a country’s relative ranking on each component metric, and the summary metric, can be used to identify aspects where further development would contribute to eHealth investment strengthening. The summary metric provides an overall indication of a country’s eHealth investment readiness, relative to other countries. The inconsistency of data source years is a limitation, since a country’s economic condition, ICT development and eHealth development may vary from year to year. Future publication of an updated tool using metrics from a single, recent year – should they become available – would be of value.

Comparison of the component metric profiles of regional country groupings can help those countries identify good practices to be shared with neighbouring countries. Individual metrics can hide nuances, therefore exploring all metrics for each country under evaluation is encouraged. Similarly, comparing countries’ profiles provides additional insights illustrated by the varying patterns seen on the spider charts. Scoring less than 1.00 for a metric shows underperformance against peers, and represents an opportunity for improvement. Comparison of the scoring patterns can reveal individual and/or regional performance in each of these quadrants: bottom and right lower quadrant for financial and economic indicators, left lower quadrant for two ICT development indicators, left upper quadrant for human capital and governance, and right upper quadrant for development of the eHealth environment (Fig. 7).

Using the study findings, each African country can review its metric scores, plot its spider chart to show its performance, and use the results to establish an eHealth investment strengthening plan. For example, despite having the highest summary score, Mauritius’ results identified four areas that, if strengthened, will improve the likelihood of successful eHealth investment. These included updating its eHealth strategy, addressing aspects of the GOE survey that scored poorly, growing the Mauritian economy and lobbying for more allocation of the fiscus to health.

Countries with an eHealth Strategy, relatively high GDP and health spend per capita, and high governance scores, such as Mauritius, South Africa and Botswana, can apply the Five Case Model to improve eHealth investment decisions. Countries with an eHealth Strategy and high governance score, but low CHE scores, such as Kenya, Morocco and Senegal, should start by focusing on the economics and finance aspects of their eHealth programmes. Countries with an eHealth Strategy and low governance score, such as Nigeria, should focus on governance strengthening, as a foundational requirement for eHealth investment.
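The guidance in this paragraph can be read as a simple rule set. The sketch below is one hedged interpretation: the function name is hypothetical, the 0.70 cut-off for a "high" score is borrowed from the study's "good" category rather than stated in the discussion, and CHE per capita alone stands in for the combination of GDP and health spend per capita.

```python
def suggest_focus(has_strategy, governance, che_per_capita, high=0.70):
    """Map a country's profile to the starting point suggested in the text.

    `high` is an assumed threshold for a "high" score, not a value given
    in the discussion.
    """
    if not has_strategy:
        return None  # this paragraph gives no guidance for that case
    if governance < high:
        return "strengthen governance"
    if che_per_capita < high:
        return "focus on economics and finance"
    return "apply the Five Case Model"


# Hypothetical profiles.
print(suggest_focus(True, 0.85, 0.90))
print(suggest_focus(True, 0.80, 0.20))
print(suggest_focus(True, 0.40, 0.30))
```

Governance is checked first because the text treats it as a foundational requirement for eHealth investment.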

Regional spider charts help to illustrate this analysis. Thus, data for the AMU (Fig. 3) suggest that while Morocco and Tunisia show similar patterns, Tunisia remains hampered by the lack of an eHealth Strategy, which retards its eHealth development. Changing this will remain challenging while growth of real GDP and CHE metrics remain low, represented on the spider chart as low scores in the right lower quadrant. Despite Libya’s generally lower than average performance, Libya scores well on growth of real GDP (2nd), far higher than Morocco (22nd) and Tunisia (39th). This, combined with a moderate IDI score (0.70), sets the stage for Libya to craft an eHealth Strategy to guide the beginning of eHealth investments.

In the EAC (Fig. 4), Kenya and Tanzania have similar summary metric scores and high scores for strategy, growth rates of real GDP and governance, yet there are important differences, such as Kenya’s higher score on Internet penetration. If the region were to identify lead countries for key elements, Kenya would be a candidate to lead on connectivity. South Sudan’s scores are poor on all metrics, though with a slight shift to the left caused by the HCI score (0.45), which could indicate potential warranting further development. A dominant feature of the EAC spider chart is poor scores on the two CHE metrics, represented by the “missing” bottom right quadrant, highlighting the need for growth to include more fiscal allocations to health.

In the ECOWAS (Fig. 5), all three countries show good growth of real GDP, though CHE per capita and IDI remain poor. Each of the spider chart quadrants has some activity, which may indicate that a collaborative regional approach will prove fruitful. Sierra Leone has achieved the highest score on CHE as a percentage of GDP (1st), though it has an inadequate eHealth Strategy (36th) and poor GOE survey scores (37th). An opportunity could be to develop a new eHealth Strategy, fuelled by CHE priorities. Promising governance rankings in Senegal (10th) underpin the growth of real GDP and a regional eHealth leadership role for Senegal.

The SADC spider chart (Fig. 6) shows a marked “lean” towards the left caused by low scores in the two eHealth implementation metrics in the top right quadrant. Namibia’s poor eHealth strategy score may help to explain why, despite promising rankings on governance (5th) and IDI (13th), the GOE survey score remains low (33rd). Angola is constrained by poor scores on strategy, CHE metrics, IDI and governance. A regional strategy that includes collaboration to share good practices, particularly to improve SADC countries’ eHealth strategies, might prove useful.

Correlation analysis provides information about relationships between component metrics. Correlations above 0.75 between the summary metric and two component metrics, the Ibrahim Governance Index (0.85) and Internet penetration (0.78), suggest that either of these would provide a reasonable surrogate indicator of overall eHealth investment readiness. Correlation between component metrics shows modest correlation for Ibrahim Governance Index and IDI (0.66), and Ibrahim Governance Index and Internet penetration (0.64). These are consistent with suggestions that ICT development plays a role in promoting good governance [43, 44] and may suggest that governance is a requirement for countries to make productive eHealth investments. Correlation between health expenditure per capita and ICT development (0.65) underpins the importance of addressing affordability issues and may support suggestions that ICT initiatives themselves contribute positively to economic growth [45].

The metrics used to develop the eHealth Investment Readiness Assessment Tool reflect aspects of eHealth investment that are aligned to the five cases. The tool highlights countries’ strengths and weaknesses, thereby providing information for targeted eHealth investment plans. It also helps to identify strengths in neighbouring countries to support collaborative partnerships for regional eHealth investment. This demonstrates the applicability of the Five Case Model to African eHealth investment decisions. The Five Case Model should now be validated through in-country field testing, by designing a tool based on the five cases and testing its utility to help decision makers select an appropriate initiative for investment from among promising candidates.

Conclusion

The absence of recognised eHealth impact appraisal frameworks in regular use in African countries increases the opportunity cost of eHealth and the risk that investments will not produce optimal net benefits. The eHealth Investment Readiness Assessment Tool presented in this study ranked fifty-four African countries and profiled potential approaches for country and regional eHealth investment strengthening plans using metrics relevant to eHealth and aligned to the Five Case Model. The results illustrate the applicability of the Five Case Model for African eHealth investment decisions to serve as a component of an eHealth impact model for Africa. Whilst this study used African countries as the exemplar, the approach is likely to be useful elsewhere, particularly in Low and Middle Income Countries (LMICs), and complements recent developments such as the DHIF. Further scrutiny of the approach and assessment of its eHealth investment strengthening utility is encouraged.

Availability of data and materials

The datasets utilised for this study are available from the corresponding author on reasonable request, or from the following public repositories:

• Global diffusion of eHealth: Making universal health coverage achievable. Report of the third global survey on eHealth. Geneva: Global Observatory for eHealth, World Health Organization; 2016. http://africahealthforum.afro.who.int/first-edition/IMG/pdf/global_diffusion_of_ehealth_-_making_universal_health_coverage_achievable.pdf. Accessed 09 Mar 2019.

• Current health expenditure (CHE) as percentage of gross domestic product World Health Organization. http://apps.who.int/gho/data/view.main.GHEDCHEGDPSHA2011v. Accessed 04 Feb 2019.

• Current health expenditure per capita. The World Bank; 2015. https://data.worldbank.org/indicator/SH.XPD.CHEX.PC.CD. Accessed 04 Feb 2019.

• World Economic Situation and Prospects. United Nations, 2018. https://www.un.org/development/desa/dpad/wp-content/uploads/sites/45/publication/WESP2018_Full_Web-1.pdf. Accessed 04 Feb 2019.

• ITU Development Index. ITU; 2017. https://www.itu.int/net4/ITU-D/idi/2017/index.html. Accessed 09 Mar 2019.

• Measuring the Information Society Report Geneva: International Telecommunications Union, 2017. https://www.itu.int/en/ITU-D/Statistics/Documents/publications/misr2017/MISR2017_Volume1.pdf. Accessed 04 Mar 2019.

• Internet Users Statistics for Africa. 2017. https://www.internetworldstats.com/stats1.htm. Accessed 04 Feb 2019.

• Percentage of Individuals using the Internet. International Telecommunications Union; 2016. https://www.itu.int/en/ITU-D/Statistics/Pages/stat/default.aspx. Accessed 27 Feb 2019.

• Human Capital Index. World Bank; 2017. http://www.worldbank.org/en/publication/human-capital#Data. Accessed 09 Mar 2019.

• Ibrahim Index of Governance in Africa 2017. http://iiag.online/. Accessed 04 Feb 2019.

Abbreviations

- AMU: Arab Maghreb Union

- CHE: Current Health Expenditure

- DHIF: Digital Health Impact Framework

- EAC: East African Community

- ECOWAS: Economic Community of West African States

- GDP: Gross Domestic Product

- GOE: Global Observatory for eHealth

- HCI: Human Capital Index

- ICT: Information Communication Technology

- IDI: ICT Development Index

- IHR: International Health Regulations

- ITU: International Telecommunication Union

- LMICs: Low and Middle Income Countries

- PFI: Private Finance Initiative

- PPP: Public Private Partnership

- SADC: Southern African Development Community

- SDGs: Sustainable Development Goals

- UHC: Universal Health Coverage

- WHO: World Health Organization

References

Scott RE, Saeed A. Global e-health - measuring outcomes: why, what, and how. A report commissioned by the World Health Organization’s global observatory for eHealth. Making the eHealth connection: global partnerships, local solutions conference; 08 August 2008. The Rockefeller Foundation: Bellagio; 2008.

Digital Health. Geneva: World Health Assembly, 71; 2018.

Mars M, Scott R. Here we go again – ‘Digital Health’. J Int Soc Telemed eHealth. 2019;7(e1):1–2.

Ahern DK, Kreslake JM, Phalen JM. What is eHealth (6): perspectives on the evolution of eHealth research. J Med Internet Res. 2006;8(1):e4 Epub 2006/04/06.

Zelmer J, Ronchi E, Hyppönen H, Lupiáñez-Villanueva F, Codagnone C, Nøhr C, et al. International health IT benchmarking: learning from cross-country comparisons. J Am Med Inform Assoc. 2017 Mar 1;24(2):371–9. https://doi.org/10.1093/jamia/ocw111.

Scott RE, Mars M, Jordanova M. Would a rose by any other name - cause such confusion? J Int Soc Telemed eHealth. 2013;1(2):52–3.

eHealth at WHO. Geneva: World Health Organization. https://www.who.int/ehealth/about/en/. Accessed 07 Feb 2019.

Digital Dividends. World development report. Washington: World Bank; 2016. http://documents.worldbank.org/curated/en/896971468194972881/pdf/102725-PUB-Replacement-PUBLIC.pdf. Accessed 18 Feb 2019.

Sundin PCJ, Mehta K. Why do entrepreneurial mHealth ventures in the developing world fail to scale? J Med Eng Technol. 2016;40(7–8):444–57.

Drury P, Roth S, Jones T, Stahl M, Medeiros D. Guidance for Investing in Digital Health: Asian Development Bank; 2018. https://www.adb.org/sites/default/files/publication/424311/sdwp-052-guidance-investing-digital-health.pdf. Accessed 07 Feb 2019.

Stroetmann K, Jones T, Dobrev A, Stroetmann VN. eHealth is Worth It. European Commission, 2006. http://ehealth-impact.eu/fileadmin/ehealth_impact/documents/ehealthimpactsept2006.pdf. Accessed 18 Feb 2019.

Mars M. A new model for ehealth capacity development in Africa. Global Telemedicine and eHealth Updates: Knowledge resources. International Society for Telemedicine & eHealth. 2010;3:56–59. https://www.isfteh.org/files/media/Global_Telemedicine_Vol_3_2010.pdf. Accessed 15 Jul 2020.

Rudmik L, Drummond M. Health economic evaluation: important principles and methodology. Laryngoscope. 2013;123(6):1341–7 Epub 2013/03/14.

Kumar S, Williams AC, Sandy JR. How do we evaluate the economics of health care? Eur J Orthodontics. 2006;28(6):513–9.

Drummond MF. Methods for the economic evaluation of health care programs. 3rd ed. Oxford: Oxford University Press; 2005.

Kemm J. Past achievement, current understanding and future progress in health impact assessment. Oxford: Oxford University Press; 2013.

Haigh F, Harris E, Harris-Roxas B, Baum F, Dannenberg AL, Harris MF, et al. What makes health impact assessments successful? Factors contributing to effectiveness in Australia and New Zealand. BMC Public Health. 2015;15:1009.

NICE. Methods for the development of NICE public health guidance. London: National Institute for Health and Care Excellence; 2012. https://www.nice.org.uk/process/pmg4/resources/methods-for-the-development-of-nice-public-health-guidance-third-edition-pdf-2007967445701. Accessed 17 Feb 2019.

Dobrev V, Jones T, Kersting A, Artmann J, Stroetmann K, Stroetmann V. Methodology for evaluating the socio-economic impact of interoperable EHR and ePrescribing systems: European Commission Information Society and Media; 2008. http://www.ehr-impact.eu/downloads/documents/EHRI_D1_3_Evaluation_Methodology_v1_0.pdf. Accessed 07 Feb 2019.

Jones T, Drury P, Zuniga P, Roth S. Digital health impact framework user manual. Asian Development Bank: Manila; 2018. https://www.adb.org/sites/default/files/publication/465611/sdwp-057-digital-health-impact-framework-manual.pdf. Accessed 07 Feb 2019.

LeFevre AE, Shillcutt SD, Broomhead S, Labrique AB, Jones T. Defining a staged-based process for economic and financial evaluations of mHealth programs. Cost Eff Resour Alloc. 2017;15(5):5 Epub 2017/04/22.

The Green Book, Central Government Guidance on Appraisal and Evaluation. London: HM Treasury, 2018. https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/685903/The_Green_Book.pdf. Accessed 07 Feb 2019.

Smith C, Flanagan J. Making sense of public sector investments: the five case model in decision making: Radcliffe Publishing; 2001.

Scottish Capital Investment Manual Option Appraisal Guide. A practical guide to the appraisal, evaluation, approval and Management of Policies, programmes and projects. Edinburgh: Government of Scotland; 2017.

Better Business Cases: Guide to Developing an Implementation Business Case. New Zealand Government; 2015. https://treasury.govt.nz/sites/default/files/2015-04/bbc-impl-gd.pdf. Accessed 17 Feb 2019.

United Nations Regional Groups of Member States – African Group. United Nations Department for General Assembly and Conference Management. United Nations. http://www.un.org/depts/DGACM/RegionalGroups.shtml. Accessed 18 Feb 2019.

Regional Economic Communities. African Union. https://au.int/en/organs/recs. Accessed 25 Jan 2019.

Global digital health index. https://www.digitalhealthindex.org/. Accessed 04 Mar 2019.

Global diffusion of eHealth: Making universal health coverage achievable. Report of the third global survey on eHealth. Geneva: Global Observatory for eHealth, World Health Organization; 2016. http://africahealthforum.afro.who.int/first-edition/IMG/pdf/global_diffusion_of_ehealth_-_making_universal_health_coverage_achievable.pdf. Accessed 09 Mar 2019.

Service availability and readiness assessment. World Health Organization. http://www.who.int/healthinfo/systems/sara_introduction/en/. Accessed 03 Dec 2018.

Current health expenditure (CHE) as percentage of gross domestic product. World Health Organization. http://apps.who.int/gho/data/view.main.GHEDCHEGDPSHA2011v. Accessed 04 Feb 2019.

Current health expenditure per capita. The World Bank; 2015. https://data.worldbank.org/indicator/SH.XPD.CHEX.PC.CD. Accessed 04 Feb 2019.

World Economic Situation and Prospects. United Nations, 2018. https://www.un.org/development/desa/dpad/wp-content/uploads/sites/45/publication/WESP2018_Full_Web-1.pdf. Accessed 04 Feb 2019.

Enderwick P. A dictionary of business and management in India. Oxford University Press; 2018.

ITU Development Index. ITU; 2017. https://www.itu.int/net4/ITU-D/idi/2017/index.html. Accessed 09 Mar 2019.

Measuring the Information Society Report. Geneva: International Telecommunication Union; 2017. https://www.itu.int/en/ITU-D/Statistics/Documents/publications/misr2017/MISR2017_Volume1.pdf. Accessed 04 Mar 2019.

Country Health Emergency Preparedness & International Health Regulations. World Health Organization Africa. https://www.afro.who.int/about-us/programmes-clusters/cpi. Accessed 04 Mar 2019.

Internet Users Statistics for Africa. 2017. https://www.internetworldstats.com/stats1.htm. Accessed 04 Feb 2019.

Percentage of Individuals using the Internet. International Telecommunication Union; 2016. https://www.itu.int/en/ITU-D/Statistics/Pages/stat/default.aspx. Accessed 27 Feb 2019.

Human Capital Index. World Bank; 2017. http://www.worldbank.org/en/publication/human-capital#Data. Accessed 09 Mar 2019.

The World Bank Data Catalog. The World Bank; 2018. https://datacatalog.worldbank.org/human-capital-index-hci-scale-0-1-2. Accessed 03 Mar 2019.

Ibrahim Index of Governance in Africa 2017. http://iiag.online/. Accessed 04 Feb 2019.

Roy S, Mukherjee M. Application of ICT in Good Governance. ResearchGate; 2016. https://www.researchgate.net/publication/299563631_Application_of_ICT_in_Good_Governance. Accessed 04 Mar 2019.

Harpreet S. E-Governance as a Tool of Good Governance in India: A Case Study of Suwidha Kendra, Jalandhar (Punjab). Dynamics of Public Administration. 2018;35(2):203–12.

Stanley TD, Doucouliagos H, Steel P. Does ICT generate economic growth? A meta-regression analysis. J Econ Surv. 2018;32(3):705–26.

Acknowledgements

Not applicable.

Funding

Research reported in this publication was supported by the Fogarty International Center of the National Institutes of Health under Award Number D43TW007004–13. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author information

Contributions

All authors [SB, MM, RES and TJ] were responsible for identifying the need for the paper and conceptualising it. One author [SB] designed the approach, collected data, and prepared the initial draft of the manuscript. All authors [SB, MM, RES and TJ] made substantial contributions to the analysis and interpretation of data and substantive revisions to the manuscript. All authors [SB, MM, RES and TJ] have approved the submitted version (and any substantially modified version that involves each author’s contribution to the study). All authors [SB, MM, RES and TJ] have agreed both to be personally accountable for each author’s own contributions and to ensure that questions related to the accuracy or integrity of any part of the work, even ones in which the author was not personally involved, are appropriately investigated and resolved, and the resolution documented in the literature.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Broomhead, S.C., Mars, M., Scott, R.E. et al. Applicability of the five case model to African eHealth investment decisions. BMC Health Serv Res 20, 666 (2020). https://doi.org/10.1186/s12913-020-05526-6
