Abstract

Background

Given the increased attention to sepsis at the population level there is a need to assess hospital performance in the care of sepsis patients using widely-available administrative data. The goal of this study was to develop an administrative risk-adjustment model suitable for profiling hospitals on their 30-day mortality rates for patients with sepsis.

Methods

We conducted a retrospective cohort study using hospital discharge data from general acute care hospitals in Pennsylvania in 2012 and 2013. We identified adult patients with sepsis as determined by validated diagnosis and procedure codes. We developed an administrative risk-adjustment model in 2012 data. We then validated this model in two ways: by examining the stability of performance assessments over time between 2012 and 2013, and by examining the stability of performance assessments in 2012 after the addition of laboratory variables measured on day one of hospital admission.

Results

In 2012 there were 115,213 sepsis encounters in 152 hospitals. The overall unadjusted mortality rate was 18.5%. The final risk-adjustment model had good discrimination (C-statistic = 0.78) and calibration (slope and intercept of the calibration curve = 0.960 and 0.007, respectively). Based on this model, hospital-specific risk-standardized mortality rates ranged from 12.2 to 24.5%. Comparing performance assessments between years, correlation in risk-adjusted mortality rates was good (Pearson’s correlation = 0.53) and only 19.7% of hospitals changed by more than one quintile in performance rankings. Comparing performance assessments after the addition of laboratory variables, correlation in risk-adjusted mortality rates was excellent (Pearson’s correlation = 0.93) and only 2.6% of hospitals changed by more than one quintile in performance rankings.

Conclusions

A novel claims-based risk-adjustment model demonstrated wide variation in risk-standardized 30-day sepsis mortality rates across hospitals. Individual hospitals’ performance rankings were stable across years and after the addition of laboratory data. This model provides a robust way to rank hospitals on sepsis mortality while adjusting for patient risk.

Similar content being viewed by others

Background

Sepsis is a leading cause of in-hospital mortality and a major driver of health care spending in developed nations [1]. Several evidence-based practices for sepsis exist, including adequate control of the infectious source, early administration of appropriate antibiotics, and early administration of intravenous fluids to support intravascular volume [2]. However, hospitals deliver these treatments inconsistently, leading to excess morbidity and mortality [3, 4]. In response to this persistent quality gap, health systems and governments have developed large scale strategies to improve sepsis care both through traditional clinically-oriented quality improvement [5] and through health policies designed to incentivize quality improvement at the regional and national level [6, 7].

Understanding the impact of these efforts and providing hospitals with feedback on their quality of care in patients with sepsis requires a robust method for assessing hospital-specific mortality rates. Such a method would ideally use widely available data that is readily accessible across hospital systems and must effectively account for individual patients’ variation in risk of mortality. At the same time, mortality-based performance measures should not adjust for variation in treatment practices that may modify the risk of mortality, which are reflective of hospital quality.

To address this need, we used a state-wide Pennsylvania discharge database that captures administrative claims data along with a selection of laboratory data to create a novel method to adjust for individual patients’ severity of illness on presentation in order to meaningfully compare sepsis outcomes across hospitals.

Methods

Study design and data

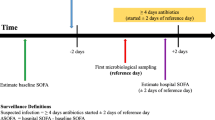

We conducted a retrospective cohort study of patients with sepsis admitted to non-federal general acute care hospitals in the Commonwealth of Pennsylvania in the United States during calendar years 2012 and 2013. First, we developed a de novo risk-adjustment model using 2012 administrative data. Next, we examined the construct validity of our model by examining the stability of hospital rankings over time (comparing the 2012 administrative model to the 2013 administrative model) and after addition of clinical laboratory variables (comparing the 2012 administrative model to a 2012 clinical model with both administrative and laboratory data). In this context, a valid administrative model would produce relatively stable performance estimates over time (i.e. with few exceptions, hospitals that are high performers one year would be high performers the next year). A valid administrative model would also yield performance estimates that are similar to those estimated from a more granular clinical model which better accounts for variation in risk.

We used the Pennsylvania Health Care Cost Containment Council (PHC4) database. PHC4 collects administrative data on all hospital admissions in Pennsylvania and makes them available for research, including both demographic information and International Classification of Diseases—version 9.0—Clinical Modification (ICD-9-CM) diagnosis and procedure codes. Unlike most administrative claims-based data sets, these data also contain a selection of laboratory values obtained on the day of admission, enabling us to create a clinical model in addition to the standard administrative model [8]. We augmented these data with the Pennsylvania Department of Health vital status records to capture post-discharge mortality.

Patients and hospitals

All encounters for patients meeting the “Angus” definition of sepsis—either an explicit ICD-9-CM code for sepsis or co-documentation of ICD-9-CM codes for an infection and an organ dysfunction—were eligible for the study [9, 10]. We chose the Angus definition because it is the broadest administrative definition of sepsis and has undergone rigorous clinical validation (10). We excluded admissions to non-short term and non-general acute care hospitals as these hospitals were not the focus of our study. We also excluded admissions less than 20 years of age, admissions for which gender or age were missing, and admissions at hospitals that were not continuously open and admitting patients for the duration of the study period. To maintain independence of observations, if a single patient had multiple encounters within a study year, then we randomly included a single encounter per year.

Base model for risk-adjusted mortality

We first created a base logistic regression model for risk-adjusted mortality using exclusively risk-adjustment variables that are available in administrative data. The primary outcome variable for this model was all-cause mortality within 30 days of the admission date, as determined using the Pennsylvania vital status records. The model was based on five categories of risk-adjustment variables hypothesized to be associated with sepsis outcomes based on prior work [9, 11, 12]: demographics, admission source, comorbidities, organ failures present on admission, and infection source.

Demographic variables were obtained directly from the claims and included age and gender. Gender was modeled as an indicator covariate, and age was modeled as a linear spline by age quintile. Admission source was obtained directly from the claims and modelled as an indicator covariate defined as admission through the emergency department versus admission from another source. Comorbidities were defined using ICD-9-CM codes in the manner of Elixhauser [13] and modelled as indicator covariates. Organ failures present on admission were defined in the manner of Elias [12] and modelled as indicator covariates. For comorbidities and organ failures present on admission, we excluded from the model any designation that had less than a 1% prevalence in our sample population.

Infection source was modeled as hierarchical infection categories in which we assigned each patient an infectious source category identified using ICD-9-CM diagnosis codes (see Additional file 1: Table S1). We created the categories from the Angus sepsis definition [9] which we further divided into 12 groups: septicemia, bacteremia, fungal infection, peritoneal infection, heart infection, upper respiratory infection, lung infection, central nervous system infection, gastrointestinal infection, genitourinary infection, skin infection, and other infection source. For patients with multiple ICD-9-CM codes indicating multiple infection sources, we assigned them the single infection source category associated with the highest unadjusted mortality. In ranking the infectious sources based on their unadjusted mortality, we used 2011 data in order to avoid model overfitting. The final variable was modelled as a series of mutually exclusive indicator covariates with upper respiratory infection as the reference category.

Augmented mortality model including laboratory variables

We next created an augmented logistic regression model for risk-adjusted mortality using all of the variables from the base model plus selected laboratory values obtained on the day of admission. The list of available laboratory values including their units, frequency, averages, and ranges is available in Additional file 1: Table S2 and S3. Values outside the plausible range, such as negative data points for non-calculated laboratory values, were recoded as missing.

We used a multi-step process to determine not only which lab variables to include in our model but also their functional forms. First, we used locally weighted scatterplot smoothing to visually assess inflection points in the relationship between each numeric laboratory value and 30-day mortality [14]. Based on visual inspection of these plots and standard reference values from our hospital’s laboratory, we categorized each variable into between two and five categories, with one category representing a normal result and the other categories representing non-normal extremes: very low, low, high, and very high. For arterial pH and arterial pCO2, which are interdependent, we performed an additional step in which we created a single combined variable for which the categories were permutations of the non-normal categories defined for pH and pCO2, respectively, as previously performed [15].

For each patient, we assigned an appropriate category for every laboratory test based on the reported result. If the patient had more than one result available for a given laboratory test, we selected the value that would be included in the category associated with a higher mortality rate. When a laboratory test result was missing, we assumed it to fall into the normal range and assigned the normal category, as is standard in physiological risk-adjustment models [15].

Next, we used Bayesian information criterion (BIC)-based stepwise logistic regression to identify the laboratory value covariates to be included in the model. This regression included all the covariates in the claims-based model. Laboratory values that did not contribute to a maximal BIC were excluded from the final model. Each laboratory value’s categories were assessed in the BIC regression as a group and ultimately either included in or excluded from the model as a group, so as not to partially remove categories for a given laboratory value. Laboratory values deemed contributory by the BIC regression entered the final model as categorical variables with the normal category as the reference group.

Risk-standardized mortality rates

Based on these models we use mixed-effects logistic regression to create risk-standardized hospital-specific 30-day mortality rates. These rates account for variation in both risk and reliability across hospitals: they account for variation in risk in that they control for the different baseline characteristics of sepsis patients across hospitals; they account for reliability in that the rates for small hospitals, which are more susceptible to random variation than rates for large hospitals, are adjusted toward the state-wide mean [16].

We calculated hospital-specific risk adjusted mortality rates by dividing each hospital’s predicted mortality (using the base model plus a hospital-specific random effect) by each hospital’s expected mortality (using the base model without a hospital-specific random effect), generating a risk-standardized mortality ratio. Multiplying the risk-standardized mortality ratio by the mean 30-day mortality of the state-wide sample yielded a hospital-specific risk-standardized mortality rate.

We performed this process separately for 2012 and 2013 without laboratory data and then again for 2012 with laboratory data, resulting in three sets of hospital-specific mortality rates: 2012 administrative rates, 2013 administrative rates, and 2012 clinical rates.

Analysis

For all models we assessed discrimination, using the C-statistic, and calibration, using the slope and intercept of regression lines fit to the calibration plots. We assessed the validity of our administrative model by examining the consistency of hospital rankings over time and with the addition of laboratory data. As noted above, we assumed that a valid model would yield hospital rankings that did not markedly change between years or after the addition of laboratory values. We generated scatter plots to compare the hospital-specific risk-standardized mortality rates between the 2012 and 2013 administrative rates; and between the 2012 administrative and clinical rates, calculating a coefficient of determination. Additionally, for each of the three sets of hospital-specific mortality rates, we calculated performance quintiles, with the outer quintiles representing the highest and lowest performing 20% of hospitals, respectively. We compared the composition of the quintiles between the 2012 and 2013 administrative rates and then between the 2012 administrative and clinical rates. We considered hospital movement of one quintile or less between comparison groups to be a marker of stability.

Data management and analysis was performed using Stata version 14.0 (StataCorp, College Station, Texas). All aspects of this work were reviewed and approved by the University of Pittsburgh institutional review board.

Results

Patients and model development

A total of 236,154 patients met our final inclusion criteria: 115,213 in 2012 and 120,941 in 2013 (Fig. 1). These patients were admitted to 152 different acute care hospitals. Patient characteristics stratified by year are shown in Table 1. In both years average age was over 70 and a large percentage of patients were admitted through the emergency department. The most common comorbidity was hypertension (58.2% in 2012 and 57.4% in 2013) followed by fluid and electrolyte disorders, renal disease, diabetes, congestive heart failure, and chronic pulmonary disease. The most common organ failure on admission was renal failure (48.2% in 2012 and 49.1% in 2013) followed by cardiovascular failure. Unadjusted 30-day mortality was 18.5% in 2012 and 18.2% in 2013.

The hierarchical infection categories along with each category’s mortality rate and the number of patients who were placed into that infection category are shown in Fig. 2. In both years, septicemia was the most prevalent category (30.9% in 2012 and 33.0% in 2013) and was associated with the highest mortality (30.5% in 2012 and 28.9% in 2013).

The set of laboratory test results available from PHC4 along with their plausible ranges and final categorizations are shown in Additional file 1: Table S2 and S3. Based on BIC criteria, 19 of these laboratory test results were included in the final risk-adjustment model. These tests along with the proportion of results that were normal, abnormal, or missing, are shown in Table 2. For individual laboratory values, the percent of patients with a reported value ranged from 4.4 to 72% in 2012 and 5.2 to 74% in 2013. The most frequently reported lab value was serum glucose, and the least frequently reported lab value was serum pro B-type natriuretic peptide.

The final base model results are shown in Additional file 1: Table S4. The factors most strongly associated with mortality included age, selected hierarchical infection categories (e.g., septicemia, heart infection, lung infection, and fungal infection), and selected comorbidities (e.g., metastatic cancer and neurologic decline). Regarding laboratory results, derangements in pro-BNP, albumin, troponin, bilirubin, BUN, and sodium were most strongly associated with mortality. All models showed good discrimination and calibration. The C-statistics were 0.776 for the 2012 administrative model, 0.772 for the 2013 administrative model, and 0.796 for the 2012 clinical model. The slope and intercept of the calibration plots were 0.960 and 0.007 for the 2012 administrative model, 0.960 and 0.007 for the 2013 administrative model, and 0.965 and 0.006 for the 2012 clinical model.

Risk-adjusted mortality rates

Risk-adjusted mortality rates varied widely in all models, demonstrating their utility in identifying high performing and low performing hospitals. The range of hospital-specific risk-standardized mortality rates was 12.2 to 24.5% with a mean of 18.4% in the 2012 administrative model; 12.7 to 23.7% with a mean of 18.1% in the 2013 administrative model; and 12.9 to 23.9% with a mean of 18.4% in the 2012 clinical model that included both administrative variables and laboratory results.

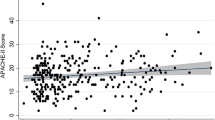

In the validation steps, the risk-standardized mortality rates for individual hospitals were relatively stable across years (Pearson’s correlation = 0.53; Fig. 3a); and after the addition of laboratory values (Pearson’s correlation = 0.93, Fig. 3b). When stratifying hospitals into quintiles by performance and comparing the 2012 and 2013 administrative models, of the 152 hospitals, 69 (45%) did not change quintile and only 19 (13%) moved by more than one quintile between the 2 years (Table 3). Comparing the 2012 administrative model to the 2012 clinical model, 113 (74%) hospitals stayed in the same quintile, and only 1 hospital (1%) moved by more than one quintile (Table 3).

Correlation of risk-adjusted mortality rates between the 2012 and 2013 administrative models (Panel A) and the 2012 administrative and clinical models (Panel B). Y and X axes are the model-derived risk-adjusted mortality rates. Blue dots represent a single hospital. Grey lines represent the linear correlation between the two performance estimates

Discussion

Using a large, state-wide sample of sepsis admissions to over 150 hospitals, we developed an administrative risk-adjustment model suitable for benchmarking hospitals on their 30-day sepsis mortality. This model showed very good discrimination and calibration. In addition, the model results were reasonably stable, yielding performance assessments that were similar when comparing multiple years and when comparing the administrative model to a model that contained more granular clinical risk adjustment variables.

Our model can be used by health systems and governments to assess hospital performance in the care of patients with sepsis. Sepsis is increasingly recognized as a major public health problem, and there is increasing attention to implementing large-scale sepsis performance improvement initiatives in hospitals [17]. For example, in the United States, the federal government requires all hospitals participating in the Medicare program to report data on adherence to a sepsis care bundle [6]. In addition, several US localities require hospitals to implement protocols for sepsis recognition and treatment [7]. Our model can be used to assess the impact of those initiatives and others like them, providing a valuable tool for sepsis-focused health policy assessment and population-based comparative effectiveness research.

Similarly, our model could allow researchers and policy makers to identify hospitals with outlying performance as candidates for targeted quality improvement efforts. For example, poor performing hospitals could benefit from dedicated resources to improve sepsis outcomes, and high performing hospitals could serve as laboratories to understand how to deliver high-quality sepsis care. This framework, known as “positive-negative deviance” [18], is an increasingly common quality improvement tool and has been useful in other analogous areas such as performance improvement in intensive care unit telemedicine [19].

The current study builds off prior work in this area, including related studies performed in Germany [20], in the United States Medicare population [21], and in patients with septic shock [22]. Our study adds to this literature in that it examined all hospitalized sepsis patients in a large US state and included patients with all insurance types instead of just Medicare, thus filling an important niche. Our study also extends related work which developed an administrative model for sepsis mortality but for which the time horizon was limited to the hospital [23] (i.e. patients were not followed for their outcome after discharge). In-hospital mortality as an outcome measure is known to be biased by discharge practices [24]. Benchmarking hospitals using in-hospital mortality might incentivize them to discharge patients more quickly to post-acute care hospitals, biasing the performance assessments [25, 26]. This problem is overcome when using 30-day mortality as an outcome measure, as we do here, making our results particularly useful.

Our study has several limitations. First, by using administrative data, we cannot rule out that we insufficiently accounted for variation in case-mix across hospitals. Although our comparison to a model that included lab values provides important construct validity, we did not have access to other key variables like vital signs or patients’ preferences for limitations of life-sustaining treatment [27]. Including these values might demonstrate that a more accurate model would perform differently than our administrative model and result in more significant changes in hospital performance rankings. Second, in addition to administrative risk adjustment we used an administrative case-ascertainment strategy, which is only modestly accurate and may lead to different performance rankings than a different administrative strategy or a clinical strategy [28]. Third, we used data from only one US state, however it is a large state with both urban and rural areas, supporting the generalizability of our results. Finally, we examined 30-day mortality but not other important outcome measures like sepsis readmission rates or long-term outcomes. Future work should be directed at understanding hospital-level variation in these outcome measures.

Conclusions

In conclusion, we developed a robust risk-adjustment model that may be implemented on existing data collection structures and can be used to benchmark hospitals on sepsis outcomes. Future work should be directed at using this model to develop and test large scale sepsis performance improvement initiatives.

Abbreviations

- BIC:

-

Bayesian information criterion

- ICD-9-CM:

-

International Classification of Diseases, Version 9.0—Clinical Modification

- PHC4:

-

Pennsylvania Health Care Cost Containment Council

References

Fleischmann C, Scherag A, Adhikari NKJ, Hartog CS, Tsaganos T, Schlattmann P, et al. Assessment of global incidence and mortality of hospital-treated Sepsis. Current estimates and limitations. Am J Respir Crit Care Med. 2016;193:259–72.

Dellinger RP, Levy MM, Rhodes A, Annane D, Gerlach H, Opal SM, et al. Surviving Sepsis Campaign: International Guidelines for Management of Severe Sepsis and Septic Shock: 2012. 2013;41:580–637.

Levy MM, Rhodes A, Phillips GS, Townsend SR, Schorr CA, Beale R, et al. Surviving Sepsis campaign: association between performance metrics and outcomes in a 7.5-year study. Crit Care Med. 2015;43:3–12.

Rhodes A, Phillips G, Beale R, Cecconi M, Chiche J-D, De Backer D, et al. The surviving Sepsis campaign bundles and outcome: results from the international multicentre prevalence study on Sepsis (the IMPreSS study). Intensive Care Med Springer Berlin Heidelberg; 2015;41:1620–8.

Scheer CS, Fuchs C, Kuhn S-O, Vollmer M, Rehberg S, Friesecke S, et al. Quality improvement initiative for severe Sepsis and septic shock reduces 90-day mortality: a 7.5-year observational study. Crit Care Med. 2017;45:241–52.

Barbash IJ, Kahn JM, Thompson BT. Medicare’s Sepsis reporting program: two steps forward, one step Back. Am J Respir Crit Care Med. 2016;194(2):139.

Hershey TB, Kahn JM. State Sepsis mandates - a new era for regulation of hospital quality. N Engl J Med. 2017;376:2311–3.

Pine M, Jordan HS, Elixhauser A, Fry DE, Hoaglin DC, Jones B, et al. Enhancement of claims data to improve risk adjustment of hospital mortality. JAMA. 2007;297:71–6.

Angus DC, Linde-Zwirble WT, Lidicker J, Clermont G, Carcillo J, Pinsky MR. Epidemiology of severe sepsis in the United States: analysis of incidence, outcome, and associated costs of care. 2001;29:1303–1310.

Iwashyna TJ, Odden A, Rohde J, Bonham C, Kuhn L, Malani P, et al. Identifying patients with severe sepsis using administrative claims: patient-level validation of the angus implementation of the international consensus conference definition of severe sepsis. Med Care. 2014:e39–43.

Zimmerman JE, Kramer AA, McNair DS, Malila FM. Acute Physiology and Chronic Health Evaluation (APACHE) IV: Hospital mortality assessment for today's critically ill patients. 2006;34:1297–1310.

Elias KM, Moromizato T, Gibbons FK, Christopher KB. Derivation and validation of the acute organ failure score to predict outcome in critically ill patients: a cohort study. Crit Care Med. 2015;43:856–64.

Quan H, Sundararajan V, Halfon P, Fong A, Burnand B, Luthi J-C, et al. Coding algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data. Med Care. 2005;43:1130–9.

Cleveland WS. Robust locally weighted regression and smoothing scatterplots. J Am Stat Assoc Taylor & Francis; 1979;74:829–36.

Knaus WA, Zimmerman JE, Wagner DP, Draper EA, Lawrence DE. APACHE-acute physiology and chronic health evaluation: a physiologically based classification system. Crit Care Med. 1981;9:591–7.

Normand S, Glickman ME. Statistical methods for profiling providers of medical care: issues and applications. J Am Stat Assoc. 1997;92:803–14.

Cooke CR, Iwashyna TJ. Sepsis mandates: improving inpatient care while advancing quality improvement. JAMA. 2014;312:1397–8.

Bradley EH, Curry LA, Ramanadhan S, Rowe L, Nembhard IM, Krumholz HM. Research in action: using positive deviance to improve quality of health care. Implement Sci. 2009;4:25.

Kahn JM, Rak KJ, Kuza CC, Ashcraft LE, Barnato AE, Fleck JC, et al. Determinants of intensive care unit telemedicine effectiveness: an ethnographic study. Am J Respir Crit Care Med 2018;doi: https://doi.org/10.1164/rccm.201802-0259OC.

Schwarzkopf D, Fleischmann-Struzek C, Rüddel H, Reinhart K, Thomas-Rüddel DO. A risk-model for hospital mortality among patients with severe sepsis or septic shock based on German national administrative claims data. Lazzeri C, editor. PLoS One 2018;13:e0194371.

Hatfield KM, Dantes RB, Baggs J, Sapiano MR, Fiore AE, Jernigan JA, et al. Assessing variability in hospital-level mortality among US medicare beneficiaries with hospitalizations for severe sepsis and septic shock. Crit. Care Med. 2018;46:1753–60.

Walkey AJ, Shieh M-S, Liu VX, Lindenauer PK. Mortality measures to profile hospital performance for patients with septic shock. Crit Care Med. 2018;46:1247–54.

Ford DW, Goodwin AJ, Simpson AN, Johnson E, Nadig N, Simpson KN. A severe Sepsis mortality prediction model and score for use with administrative data. Crit Care Med. 2016;44:319–27.

Reineck LA, Pike F, Le TQ, Cicero BD, Iwashyna TJ, Kahn JM. Hospital factors associated with discharge bias in ICU performance measurement. Crit Care Med. 2014;42:1055–64.

Hall WB, Willis LE, Medvedev S, Carson SS. The implications of long-term acute care hospital transfer practices for measures of in-hospital mortality and length of stay. Am J Respir Crit Care Med. 2012;185:53–7.

Vasilevskis EE, Kuzniewicz MW, Dean ML, Clay T, Vittinghoff E, Rennie DJ, et al. Relationship between discharge practices and intensive care unit in-hospital mortality performance: evidence of a discharge bias. Med Care. 2009;47:803–12.

Walkey AJ, Barnato AE, Wiener RS, Nallamothu BK. Accounting for patient preferences regarding life-sustaining treatment in evaluations of medical effectiveness and quality. Am J Respir Crit Care Med. 2017;196:958–63.

Rhee C, Jentzsch MS, Kadri SS, Seymour CW, Angus DC, Murphy DJ, et al. Variation in identifying Sepsis and organ dysfunction using administrative versus electronic clinical data and impact on hospital outcome comparisons. Crit Care Med. 2018:1.

Acknowledgements

Not applicable.

Funding

This work was funded by grants R01HL126694 (PI: Kahn), K24HL133444 (PI: Kahn), and K08HS025455 (PI: Barbash) from the United States National Institutes of Health. The funder played no role in the design of the study; the collection, analysis, and interpretation of data; or in writing the manuscript.

Availability of data and materials

The data that support the findings of this study are available from the Pennsylvania Health Care Cost Containment Council (PHC4). Restrictions apply to the availability of these data, which were used under license for the current study. Please visit http://www.phc4.org/services/datarequests/ for information on how to acquire these data.

Author information

Authors and Affiliations

Contributions

Conception and design (JLD, JMK); acquisition of the data (JMK); analysis and interpretation of data (JLD, BSD, IJB, JMK); drafting of the manuscript (JLD); critically revising the manuscript for important intellectual content (JLD, BSD, IJB, JMK). All authors (JLD, BSD, IJB, JMK) approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

This study was reviewed by the University of Pittsburgh Human Research Protections Office and was determined to be exempt from human subjects review under Exemption 4 of the United States Code of Federal Regulations in that it used only existing, publicly available data and the subjects cannot be identified (Reference # PRO11100193).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Supplementary Data. Supplementary data referenced in primary manuscript (DOCX 49 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Darby, J.L., Davis, B.S., Barbash, I.J. et al. An administrative model for benchmarking hospitals on their 30-day sepsis mortality. BMC Health Serv Res 19, 221 (2019). https://doi.org/10.1186/s12913-019-4037-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12913-019-4037-x