Abstract

Background

An emerging field of knowledge translation (KT) research has begun to focus on health consumers, particularly in child health. KT tools provide health consumers with research knowledge to inform health decision-making and may foster ‘effective consumers’. Thus, the purpose of this scoping review was to describe the state of the field of previously published effectiveness research on child health-related KT tools for parents/caregivers to understand the evidence-base, identify gaps, and guide future research efforts.

Methods

A health research librarian developed and implemented search strategies in 8 databases. One reviewer conducted screening using pre-determined criteria. A second reviewer verified 10% of screening decisions. Data extraction was performed by one reviewer. A descriptive analysis was conducted and included patient-important outcome classification, WIDER Recommendation checklist, and methodological quality assessment.

Results

Seven thousand nine hundred fifty-two independent titles and abstracts were reviewed, 2267 full-text studies were retrieved and reviewed, and 18 articles were included in the final data set. A variety of KT tools, including single- (n = 10) and multi-component tools (n = 10), were evaluated, spanning acute (n = 4), chronic (n = 5) and public/population health (n = 9) child health topics. Study designs included cross-sectional (n = 4), before-after (n = 1), controlled before-after (n = 2), cohort (n = 1), and RCT (n = 10) designs. The KT tools were evaluated via a single primary outcome category (n = 11) or multiple primary outcome categories (n = 7). Two studies demonstrated significant positive effects on primary outcome categories; other studies demonstrated mixed effects (n = 9) and no effect (n = 3). Overall, methodological quality was poor; studies lacked a priori protocols (n = 18) and sample size calculations (n = 13). Intervention reporting was also poor; KT tools lacked description of theoretical underpinnings (n = 14), end-user engagement (n = 13), and preliminary research (n = 9) to inform the current effectiveness evaluation.

Conclusions

A number of child health-related knowledge translation tools have been developed for parents/caregivers. However, numerous outcomes were used to assess impact, and there is limited evidence demonstrating their effectiveness. Moreover, the methodological rigor and reporting of effectiveness studies are limited. Careful tool development involving end-users and preliminary research, including usability testing and mixed methods, prior to large-scale studies may be important to advance the science of KT for health consumers.


Background

It is well established that the creation of new knowledge through biomedical and health services research does not automatically lead to widespread implementation or health impacts [1]. To maximize health system resources and improve patient outcomes, it is increasingly important to close the research-practice gap by ensuring that research knowledge translates into action – a process called knowledge translation (KT). KT is defined as the synthesis, exchange, and application of knowledge to improve the health of individuals, provide more effective health services and products, and strengthen health care systems [1]. Current approaches to KT are largely focused on aligning the behaviours of health professionals with best research evidence; however, ever-increasing healthcare complexity and health professional time constraints are barriers to effective research use [2, 3]. An emerging approach to KT is directing information to health consumers (i.e., patients, parents, caregivers) to increase their knowledge and participation in health decision-making.

In the field of child health, connecting parents and caregivers to research evidence has the power to improve health decision-making and reduce health system costs [4]. Traditional approaches used by health providers to share information with parents and caregivers have been found lacking. For instance, verbal information is often brief [5] and written information is often too complex for most adults to comprehend [6, 7]. There is little guidance on the most effective approach, content, duration, and intensity of information provision for the diverse population that parents represent [8,9,10].

While KT interventions encompass a wide array of strategies to bridge the research-practice gap, including individual, organizational, and structural interventions [11], KT tools are a sub-group of KT interventions that present research-based information in user-friendly language and formats to provide explicit recommendations, and/or meet knowledge/information needs [12]. KT tools are particularly suited for lay audiences, including parents and caregivers. It is hypothesized that KT tools may foster and empower ‘effective consumers’ with research knowledge to inform their health decision-making [13].

The purpose of this scoping review was to identify previously published effectiveness research on child health-related KT tools for parents/caregivers. We sought to understand the breadth of KT tools that have been developed and evaluated (including their intended purpose), how they are being evaluated (including the outcomes selected), and whether they are demonstrating hypothesized effects. Understanding the evidence-base for KT tools for parents/caregivers in child health and identifying gaps in this emerging field is a critical next step to inform KT science for health consumers.

Methods

This scoping review was guided by the rigorous, systematic methods outlined by Arksey & O’Malley [14].

Search strategy

A comprehensive literature search was designed and implemented by a health research librarian (TC) in eight databases: Medline, Medline In-Process & Other Non-Indexed Citations, EBM Reviews, Embase, PsycINFO, CINAHL, SocINDEX, and Web of Science. The search was restricted by language (English only) and date (2005 to June 2015) (search strategies and terms in Additional file 1). The date restriction reflects the advent of KT science [12, 15] and the emergence of KT targeting health consumers [16].

Study inclusion criteria

The inclusion criteria are outlined in Table 1. In brief, we were interested in any primary research evaluating the effectiveness of a KT tool on a child health topic and targeting parents/lay caregivers. A KT tool was defined as a tangible, on-demand product presenting research-based information in user-friendly language and format(s) to provide explicit recommendations, and/or meet knowledge/information needs.

Study selection

One reviewer (LA) conducted primary and secondary screening using pre-determined criteria (Additional file 2). A second, independent reviewer (XW) screened 10% of all studies to verify inclusion/exclusion decisions. Interrater agreement was determined to be ‘very good’ with a kappa statistic of 0.803 [17].
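Cohen's kappa compares the observed agreement between two reviewers (p_o) with the agreement expected by chance from each reviewer's marginal totals (p_e): kappa = (p_o - p_e) / (1 - p_e). The minimal Python sketch below illustrates that formula. The function name `cohens_kappa` and the example counts are hypothetical. They are not the screening data from this review, whose reported value was obtained with the GraphPad QuickCalcs calculator [17].

```python
def cohens_kappa(table):
    """Cohen's kappa for a square agreement table (hypothetical helper).

    table[i][j] = number of records reviewer A placed in category i and
    reviewer B placed in category j (e.g., 0 = include, 1 = exclude).
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: proportion of records on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: product of each reviewer's marginal proportions.
    row_totals = [sum(table[i]) for i in range(k)]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical verification sample: 100 records, 90 agreements.
print(round(cohens_kappa([[20, 5], [5, 70]]), 3))
```

With these invented counts, observed agreement is 0.90, chance agreement is 0.625, and kappa is about 0.73. Kappa is lower than raw percent agreement because it discounts the agreement that two reviewers would reach by chance when most records are excluded.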

Data collection

Data were extracted by one reviewer (LA). The following general variables were extracted: authors, year of publication, country, and journal of publication. Methodological elements were also extracted, including: study design, study focus (i.e., purpose), availability of a priori protocol, study population, sample size calculation, recruitment and retention, intervention and comparison groups, data collection methods, primary outcome(s) and measures. We also extracted the results for the primary outcomes, and author conclusions. Additional variables specific to the KT tools were extracted, including: child health topic, purpose of tool, description of tool, tool development approaches (e.g., including end-users, theoretical basis, and preliminary research conducted prior to effectiveness evaluation), type of tool, and number of interacting tool elements.

Methodological quality assessment

Scoping reviews do not typically include critical appraisal of individual studies [14, 18]. This has been acknowledged as a limitation of the Arksey & O’Malley method [19]. New methodological recommendations include methodological quality assessment to identify gaps in the evidence-base and to assess the feasibility of future systematic reviews [19]. However, studies should not be excluded based on these methodological quality ratings [19], and we followed this approach in this review.

For randomized controlled trials (RCTs), methodological quality was assessed by one reviewer (LA) using the Cochrane Risk of Bias Tool [20]. This tool has been deemed the most comprehensive for assessing potential for bias in RCTs [21] and has become the standard approach for systematic reviews [22]. A global quality rating of low, high or unclear risk of bias is assigned to each RCT based on seven components: random sequence generation, allocation concealment, blinding of participants and personnel, blinding of outcome assessment, incomplete outcome data, selective reporting, and ‘other’ sources of bias.
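The text does not state how the seven component judgements were combined into one global rating. A common convention, assumed here and not taken from the included studies, is high risk if any component is rated high, low risk only if every component is rated low, and unclear otherwise. The hypothetical helper `overall_risk_of_bias` below sketches that rule in Python.

```python
# The seven Cochrane Risk of Bias components listed in the text.
DOMAINS = (
    "random sequence generation",
    "allocation concealment",
    "blinding of participants and personnel",
    "blinding of outcome assessment",
    "incomplete outcome data",
    "selective reporting",
    "other bias",
)
VALID = {"low", "high", "unclear"}


def overall_risk_of_bias(judgements):
    """Combine per-component judgements into one rating (assumed rule).

    Any 'high' -> 'high'; all 'low' -> 'low'; anything else -> 'unclear'.
    """
    missing = [d for d in DOMAINS if d not in judgements]
    if missing:
        raise ValueError(f"missing component judgements: {missing}")
    values = [judgements[d] for d in DOMAINS]
    unexpected = [v for v in values if v not in VALID]
    if unexpected:
        raise ValueError(f"unexpected judgements: {unexpected}")
    if "high" in values:
        return "high"
    if all(v == "low" for v in values):
        return "low"
    return "unclear"


# Hypothetical trial: sound methods except unblinded participants/personnel.
example = {d: "low" for d in DOMAINS}
example["blinding of participants and personnel"] = "high"
print(overall_risk_of_bias(example))  # high
```

Under this rule a single high-risk component, such as the lack of blinding that drove most high ratings in this review, is enough to make a trial's overall rating high.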

For all other quantitative study designs, methodological quality was assessed by one reviewer (LA) using the Quality Assessment Tool for Quantitative Studies [23]. Content validity, construct validity, and inter-rater reliability have been established for this tool [24]. A global quality rating of weak, moderate or strong is assigned to each study based on eight components: selection bias, study design, confounders, blinding, data collection methods, withdrawals and dropouts, intervention integrity, and analysis.
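The global rating for this tool is usually derived by counting weak component ratings: strong when there are no weak ratings, moderate with one, and weak with two or more. The sketch below assumes that convention. Following the tool's usual scoring guidance, it also assumes that only the six components from selection bias to withdrawals and dropouts are rated, with intervention integrity and analysis described but not scored. Both the rule and the hypothetical function `global_rating` are illustrations, not a reproduction of the review's appraisal.

```python
# Components assumed to feed the global rating (intervention integrity and
# analysis are assumed to be described but not rated).
RATED_COMPONENTS = (
    "selection bias",
    "study design",
    "confounders",
    "blinding",
    "data collection methods",
    "withdrawals and dropouts",
)


def global_rating(component_ratings):
    """Map component ratings ('strong'/'moderate'/'weak') to a global rating."""
    weak_count = sum(
        1 for c in RATED_COMPONENTS if component_ratings[c] == "weak"
    )
    if weak_count == 0:
        return "strong"
    if weak_count == 1:
        return "moderate"
    return "weak"


# Hypothetical non-randomized study, weak on the two domains most often
# flagged in this review.
ratings = {c: "moderate" for c in RATED_COMPONENTS}
ratings["study design"] = "weak"
ratings["data collection methods"] = "weak"
print(global_rating(ratings))  # weak
```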

Data analysis

A descriptive analysis of the extracted variables was conducted. The WIDER Recommendations Checklist was applied to describe the reporting quality of the KT tools [25] (Additional file 3). Since the studies assessed primary outcomes at different levels, a classification scheme of outcomes for assessing patient-focused interventions was applied [26] (Additional file 4). Study results were described as positive effect, mixed effects, no effect, or unclear in relation to the intended impact on the primary outcome(s). A narrative summary of these effects was performed considering the nature of the intervention, topic, and study design features.
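The four effect labels can be read as a simple decision rule over each study's primary outcome results. The Python sketch below shows one possible way to make that rule explicit, assumed for illustration. Each primary outcome is coded True (significant effect in the intended direction), False (no significant effect), or None (not reported or not interpretable). The review applied these labels through narrative judgement, so the function `classify_effect` and its handling of missing results are hypothetical choices.

```python
def classify_effect(outcome_results):
    """Label a study's primary outcome results (hypothetical coding).

    outcome_results: one entry per primary outcome measure,
      True  -> significant effect in the intended direction
      False -> no significant effect
      None  -> not reported / not interpretable
    """
    if not outcome_results or any(r is None for r in outcome_results):
        return "unclear"
    if all(outcome_results):
        return "positive effect"
    if not any(outcome_results):
        return "no effect"
    return "mixed effects"


# Hypothetical study: knowledge improved, anxiety did not.
print(classify_effect([True, False]))  # mixed effects
```

Under this coding, a study with several outcome measures in one or more categories is labelled positive only if every measure shows an effect. This is one reason why measuring many outcomes tends to produce mixed results.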

Results

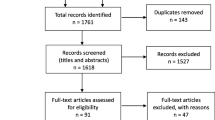

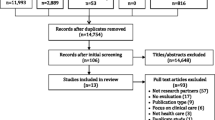

After removing duplicates, 7952 titles and abstracts were reviewed in primary screening, 2267 full-text studies were reviewed in secondary screening, and 18 studies met our inclusion criteria (Fig. 1) [27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44]. The included studies are summarized in an additional file (Additional file 5).

KT tool interventions

The KT tools provided evidence-based information on acute conditions (n = 4; e.g., gastroenteritis, tonsillitis, procedural pain, surgery), chronic conditions (n = 5; e.g., inherited metabolic disorders, Type I diabetes, asthma, vision impairment), and public health/health promotion topics (n = 9; e.g., preventive care/minor child health issues, vaccination, antibiotic use, healthy diet & physical activity, infant feeding, smoking prevention) in child health. A variety of KT tool interventions were studied, including pamphlet (n = 3), information sheet (n = 2), cartoon book (n = 1), book (n = 1), video (n = 1), website (n = 2), video + booklet (n = 1), 2 videos + 2 booklets (n = 2), video + book (n = 1), video + pamphlet (n = 1), 2 videos + 2 pamphlets (n = 1), 5 activity guides + tip sheets + newsletters (n = 1), and 6 books (n = 1). Additionally, 6 studies used KT tools as comparison/control conditions; these tools included a pamphlet [42], 1 video + 1 pamphlet [34, 35], 2 pamphlets [33], 2 information sheets [44], and 5 pamphlets [32].

Another approach to classify KT tools is to examine the number of different components (i.e., single or multiple) within the intervention (as shown in the above list) (Table 2). In nine studies, KT tools featured one (single) stand-alone component (e.g., information sheet) [27, 29, 30, 36, 38,39,40,41,42]; two single-component KT tools were compared and evaluated in one of these studies [42]. In nine studies, KT tools included multiple (more than one) components that worked in tandem (e.g., pamphlet + video) [28, 31,32,33,34,35, 37, 43, 44]; two multi-component KT tools were compared and evaluated in five of these studies [32,33,34,35, 44].

The quality of reporting of the KT tools was described using the WIDER Recommendations Checklist [25] (Table 2). Overall reporting quality was low. For the ‘Detailed Description of Intervention’ recommendation, there were 8 components; included studies satisfied between 3 and 6 components, with a mean of 4 and a mode of 5 components. Generally, included studies did not report on the characteristics of those delivering the intervention, the intensity of the intervention, or adherence/fidelity to delivery protocols. For the ‘Clarification of Assumed Change Process and Design Principles’ recommendation, there were 3 components. Change techniques used in the intervention were the most frequently reported component (12/18 studies). Causal processes targeted by change techniques and intervention development processes were rarely reported (5/18 studies for each component). Four of 18 studies satisfied the third recommendation, ‘Access to Intervention Manuals/Protocols’. For the fourth recommendation, ‘Detailed Description of Active Control Conditions’, a variety of control conditions were present (i.e., active control, no active control, multiple control groups, no control group).

Study designs

Five different quantitative study designs were represented: cross-sectional (n = 4) [30, 31, 36, 40], before-after (n = 1) [39], controlled before-after (n = 2) [38, 41], cohort (n = 1) [33], and RCT (n = 10) [27,28,29, 32, 34, 35, 37, 42,43,44]. No qualitative studies met the inclusion criteria.

Methodological quality

Ten RCTs were assessed for risk of bias (Table 3). Five studies were assessed as high risk of bias [27,28,29, 32, 44]; the most frequent reason for high risk of bias was lack of blinding of participants and personnel. Five studies were determined to have unclear risk of bias [34, 35, 37, 42, 43]; the most frequent reason for unclear risk of bias was the possibility of selective outcome reporting. None of the included studies were assessed as low risk of bias overall.

All eight of the quantitative, non-RCT studies had a global methodological quality rating of weak [30, 31, 33, 36, 38, 39, 40, 41] (Table 4). The most problematic domains across studies were ‘study design’ and ‘data collection methods’; all studies except one were rated weak on these domains.

Primary outcomes

Primary outcomes were classified into four categories using the Outcomes of Interest for Assessing Patient-Focused Interventions classification scheme [26] (Table 5). It was possible for one outcome category to encompass several different outcome measures (e.g., self-efficacy measures and perceived barrier measures are both captured under the Patients’ Experience outcome category) (Additional file 4). Overall, 11 studies assessed one primary outcome category to determine the effectiveness of KT tools: patients’ knowledge (n = 3) [37, 41, 44]; patients’ experience (n = 1) [30]; health behaviour and health status (n = 7) [29, 31, 32, 34, 35, 40, 42]. None of the included studies assessed outcomes in the health services utilization and cost category of the outcome classification scheme.

Seven studies assessed KT tool effectiveness with multiple primary outcome categories. Four of these studies identified and assessed primary outcomes in two different outcome categories: patients’ knowledge and patients’ experience (n = 2) [39, 43]; patients’ knowledge and health behaviour/health status (n = 1) [38]; and patients’ experience and health behaviour/health status (n = 1) [33]. Three studies identified primary outcomes in three different outcome categories: patients’ knowledge, patients’ experience, and health behaviour/health status categories [27, 28, 36].

Study results

A summary of study results is presented in Additional file 4. Of the 18 included studies, two demonstrated significant positive effects on the primary outcome [32, 35]. Both were RCTs that assessed the effectiveness of multi-component KT tools using the health behaviour/health status primary outcome category. In both studies, the primary outcome was assessed using a single measure at two time points (i.e., baseline and follow-up) with a long follow-up period (i.e., 2 years, 3 years). Jackson et al. (2006) compared 2 multi-component KT tools, with the more dynamic tool (i.e., 5 printed activity guides with supplementary fact sheets for parents and newsletters for children vs 5 pamphlets) demonstrating effectiveness in delaying the initiation of smoking. This study was assessed to have high risk of bias due to lack of blinding of study participants and personnel (Table 3). Nordfeldt et al. (2005) compared 2 multi-component KT tools (i.e., 2 videos + 2 booklets vs 1 video + 1 booklet, with different information for each study arm) and a usual care control group, with the more dynamic and specific tool (2 videos + 2 booklets on self-control and treatment information vs 1 video + 1 booklet on general diabetes information) demonstrating effectiveness in reducing the yearly incidence of severe hypoglycemia needing assistance. This study was assessed to have unclear risk of bias with respect to incomplete outcome data and selective outcome reporting (Table 3).

Two additional studies demonstrated significant positive effects on at least one of the identified primary outcome categories [27, 39]. Both studies assessed the effectiveness of single-component KT tools. Skranes et al. (2015) used a before-after design and found that a website was effective for improving mothers’ knowledge of minor child health conditions, but not mothers’ experience (i.e., self-perceived anxiety), over a 6- to 12-month follow-up period. The methodological quality was assessed as weak (Table 4). Bailey et al. (2015) conducted an RCT and found that an information sheet was effective for improving knowledge and experience with respect to tonsillectomy pain management, but not health behaviour/status, over a 10-day follow-up period. This study was assessed to have a high risk of bias due to selective outcome reporting and other sources of bias (i.e., baseline imbalances in study groups) (Table 3).

Nine studies demonstrated a combination of mixed effects and no effect on primary outcome categories (both single and multiple primary outcome categories) [28, 29, 34, 36,37,38, 40, 42, 43]. These studies were diverse in terms of the KT tool interventions (i.e., a variety of single and multi-component KT tools), study designs, and number of outcomes within and between the three primary outcome categories. Four of nine studies had longer follow-up periods (i.e., from 10 weeks to three years), three had shorter follow-up periods (i.e., less than 8 weeks), and two had undefined follow-up periods (i.e., referred to as post-intervention assessment with no timing provided).

Three studies showed no effect on the primary outcome categories [30, 41, 44]. These studies were conducted using different designs: cross-sectional [30], controlled before-after [41], and RCT [44]. They represented both single and multi-component KT tools; however, all three KT tools were non-electronic, written materials (i.e., information sheet, pamphlets). The three studies measured single, proximal outcome categories (i.e., knowledge, experience) over a short follow-up period (i.e., 2 weeks, 2 months). The methodological quality of two studies was assessed as weak [30, 41] (Table 4) and the third study was determined to have high risk of bias [44] (Table 3).

Additional key study features

To contextualize the study results and methodological quality ratings, additional data were extracted about key study features with a focus on methodological and intervention development variables (Table 6). References to trial registration and a priori protocols were extracted and relevant databases were searched. None of the 18 studies had an a priori protocol publicly available; however, four studies were registered retrospectively [27, 29, 37, 40]. Five of 18 studies provided a sample size calculation [28,29,30, 40, 41] and of those studies, three were sufficiently powered to detect the desired change in the primary outcome [28, 30, 40]. Four of 18 studies described a theoretical basis for the KT tool intervention [30, 33, 36, 43] and five of 18 studies explicitly described end-user involvement in intervention development [28, 31, 33, 38, 43]. Finally, nine studies described or referenced preliminary research (i.e., qualitative, feasibility, pilot studies) that informed the current KT tool effectiveness study [28, 32,33,34,35, 38, 40, 41]. There were no discernible patterns between these variables and the effectiveness of the KT tools.

Discussion

This scoping review has demonstrated that several different KT tools, including a variety of single- and multi-component strategies, have been specifically designed for parents/caregivers on diverse child health topics. Few KT tools demonstrated positive effects on primary outcomes; the majority of studies showed mixed effects within and between primary outcome categories. Only two studies showed strictly positive effects, and both evaluated multi-component KT tools. Three studies showed no effect, and all three evaluated non-electronic, written materials. This suggests that multi-component KT tools may be more effective for health consumers, specifically parents and caregivers. While we did not conduct formal comparisons, these findings contrast with previous research indicating that the effectiveness of patient-focused interventions decreases as the number of intervention components increases [45].

This review demonstrated that the most common design was the RCT (n = 10), which is recognized as the most rigorous design for evaluating effectiveness [46]. All included RCTs were assessed as unclear or high risk of bias; further, all included non-RCT studies had substantial methodological weaknesses. Because weaknesses such as lack of blinding can inflate estimated effects, it might be expected that interventions would be more likely to demonstrate an effect, particularly on the primary outcomes; however, most studies did not demonstrate significantly positive results on the primary outcomes of interest. This raises three questions: 1) were the design or certain design features (e.g., sample size, nature of the comparison) inappropriate or inadequate to assess effectiveness; 2) were appropriate outcomes selected and measured to accurately assess intended impact and establish effectiveness; and 3) have the KT tools been appropriately developed and incrementally assessed to establish effectiveness?

There were several design/methodological issues that may have affected the effectiveness results. None of the included studies was shown to have low risk of bias (i.e., high or unclear risk of bias ratings only). Low methodological quality ratings were due to deficiencies in multiple categories in both appraisal tools. Notably, no a priori protocols were available, and only 4 studies were registered, all retrospectively. While a priori protocols may not yet be standard for all study designs, it is standard practice to register a priori protocols for RCTs [47, 48]. Additionally, only 3 studies (16.7%) demonstrated adequate power to detect statistical significance of the primary outcomes. This information, generally provided in study protocols (if not also in primary publications), is a key aspect of effective comparative studies in health research [49]. With strict journal length restrictions, it is difficult to determine whether high risk of bias/weak methodological quality can be attributed to lack of reporting and/or poor study design and execution; however, the publication of study protocols has been proposed as an important approach in the primary prevention of poor medical/health research [50], particularly selective outcome reporting [51].
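To make concrete what an a priori sample size calculation involves, the sketch below computes the number of participants per arm needed to detect a standardized mean difference d with a two-sided test. It uses the normal approximation n = 2(z_{1-alpha/2} + z_{1-beta})^2 / d^2. This textbook formula was chosen for illustration and is not the calculation used by any included study. The function name `n_per_group` and the defaults alpha = 0.05 and power = 0.80 are assumptions.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Participants per arm to detect a standardized mean difference.

    Normal approximation for a two-sided, two-sample comparison of means:
    n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2, rounded up.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)  # about 0.84 for 80% power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


print(n_per_group(0.5))  # 63
print(n_per_group(0.2))  # 393
```

Under these assumptions, detecting a moderate effect (d = 0.5) requires 63 participants per arm, whereas a small effect (d = 0.2) requires 393. Without such a calculation, a null result cannot be attributed to an ineffective tool rather than an underpowered comparison.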

There is little agreement on the best outcomes and measures to determine the effectiveness of patient-focused interventions [26]. Applying the Coulter & Ellins patient-important outcomes framework was useful because it helped to reduce the ‘noise’ and classify multiple outcome measures within and across four distinct outcome categories. Studies used many different outcome measures across a variety of proximal (e.g., patients’ knowledge) and distal (e.g., health services utilization, health behaviour) outcome categories. Just under half (44%) of studies skipped proximal outcomes (i.e., knowledge) and instead measured only more distal, behaviour-related outcomes. Two such studies showed statistically significant positive effects of the KT tools, but methodological quality concerns (i.e., high and unclear risk of bias ratings) and the absence of an a priori protocol or sample size estimation limit our confidence in the link between the KT tool and these distal changes in health behaviour and health status. There may be other mitigating factors in the ‘black box’ between the interventions and outcomes. Additionally, the use of multiple outcome measures within the same primary outcome category and/or the measurement of multiple primary outcome categories most often resulted in mixed effectiveness (n = 9). It is difficult to interpret these results without authors providing an explicit rationale linking primary outcome(s) and measures to the intended effect of the KT tools. Notably, only four studies described the theoretical basis for the KT tool; more explicit theoretical underpinnings may help in tool development and in linking tools to intended outcomes.

Unfortunately, the overall poor quality of intervention reporting in the literature [52,53,54,55], including development approaches/methods [46], theoretical basis [56, 57], and end-user involvement [58], results in a limited understanding of intervention components and the relationships/interactions between these components, which are responsible for the observed changes and desired effects on outcomes [59]. These issues were exemplified in this review by poor reporting across all WIDER Recommendation categories. Without a detailed understanding of these important elements, KT tool sustainability, replication, scale-up and future development efforts are limited [25].

Effectiveness evaluation is typically resource intensive, yet we need to understand whether KT tools are effective for a lay audience prior to large-scale implementation. Formative research (i.e., qualitative, feasibility, pilot studies) prior to launching into effectiveness evaluation may be essential to address intervention development and implementation issues, refine effectiveness evaluation protocols (including the most appropriate outcomes and measures), and maximize potential impact [57, 60]. However, only half (n = 9) of the included studies described or referenced preliminary research that was conducted to inform the current effectiveness evaluation. Both studies in this review that demonstrated significant positive effects on primary outcomes referenced such preliminary research. Unfortunately, these studies did not provide sufficient detail to guide future KT tool evaluations; future research could address this need.

There is also a growing body of literature to support the use of qualitative research in the design and implementation of RCTs [61,62,63,64]. Qualitative research has been used to add value to trials in the areas of bias, efficiency, ethics, implementation, interpretation, relevance, success, and validity [65]. Study designs beyond the RCT, such as mixed methods designs, may help explain why KT tools did or did not work, aid the interpretation of effectiveness results, and explore the implementation process [57].

Strengths & limitations

This scoping review provides a detailed summary of the state of the science for the emerging field of KT tools for parents and caregivers on child health topics. By conducting critical appraisal using two rigorous frameworks suited to multiple study design types, this review contributes to the methodological advancement of the Arksey & O’Malley (2005) scoping review method [14, 18, 19]. One limitation is the lack of a second reviewer to verify data extraction and critical appraisal, as would be expected in a systematic review. Another limitation was the lack of a classification scheme for the KT tools; the Coulter & Ellins patient-focused intervention classification was not used because its scope was broader than desired [26]. Multiple, overlapping frameworks are a persistent problem in the KT field [66, 67]; however, the recently published AIMD meta-framework may offer a solution [68], and future research should explore KT tool development, reporting, and classification with this new framework.

Conclusions

KT tools offer a promising approach to communicate complex health information to health consumers. While a broad range of KT tools has been developed to provide research-based information on a wide variety of acute, chronic and public health/health promotion topics in child health, improved reporting is essential to ensure that intervention design is appropriate for the desired change and that well-designed interventions are replicable. Additionally, increased methodological rigor is needed to determine the effectiveness of the KT tools. This includes the publication of a priori protocols, sample size calculations, primary outcome identification, and attention to multiple outcome measures and mixed results. More preliminary research, including KT tool development involving the target end-users and usability testing prior to large-scale trials, may be important to optimize KT tool effectiveness. Further, ensuring that all necessary intervention and methodological components are addressed before and during effectiveness evaluation will help provide a more solid scientific base for KT targeting health consumers.

Abbreviations

- KT: knowledge translation
- RCT: randomized controlled trial

References

Canadian Institutes of Health Research. Knowledge translation definition. http://www.cihr-irsc.gc.ca/e/29418.html#2 (2015). Accessed 18 March 2015.

Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. 2000. http://www.nap.edu/books/0309072808/html/. Accessed 20 March 2015.

Thompson DS, O’Leary K, Jensen E, Scott-Findlay SD, O’Brien-Pallas L, Estabrooks CA. The relationship between busyness and research utilization: It is about time. J Clin Nurs. 2008;17(4):539–48.

Morrison AK, Myrvik MP, Brousseau DC, Hoffman RG, Stanley RM. The relationship between parent health literacy and pediatric emergency department utilization: A systematic review. Acad Pediatr. 2013;13(5):421–9.

Vashi A, Rhodes KV. “Sign right here and you’re good to go”: A content analysis of audiotaped emergency department discharge instructions. Ann Emerg Med. 2011;57(4):315–22.

Sanders LM, Federico S, Klass P, Abrams MA, Dreyer B. Literacy and child health: A systematic review. Arch Pediatr Adolesc Med. 2009;163(2):131–40.

Spandorfer JM, Karras DJ, Hughes LA, Caputo C. Comprehension of discharge instructions by patients in an urban emergency department. Ann Emerg Med. 1995;25(1):71–4.

Wilson EAH, Makoul G, Bojarski EA, Cooper Bailey S, Waite KR, Rapp DN, Baker DW, et al. Comparative analysis of print and multimedia health materials: A review of the literature. Patient Educ Couns. 2012;89:7–14.

Jusko Friedman A, Cosby R, Boyko S, Hatton-Bauer J, Turnbull G. Effective teaching strategies and methods of delivery for patient education: A systematic review and practice guideline recommendations. J Cancer Educ. 2011;26:12–21.

Boyd M, Lasserson TJ, McKean MC, Gibson PG, Ducharme FM, Haby M. Interventions for educating children who are at risk of asthma-related emergency department attendance. Cochrane Database of Systematic Reviews. 2009, Issue 2. Art. No.: CD001290. DOI: https://doi.org/10.1002/14651858.CD001290.pub2.

Effective Practice and Organisation of Care (EPOC). EPOC Taxonomy. 2015. https://epoc.cochrane.org/epoc-taxonomy.

Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, Robinson N. Lost in knowledge translation? Time for a map. J Contin Educ Health Prof. 2006;26(1):13–24.

Tugwell PS, Santesso NA, O’Connor AM, Wilson AJ, Effective Consumer Investigative Group. Knowledge translation for effective consumers. Phys Ther. 2007;87:1728–38.

Arksey H, O’Malley L. Scoping studies: Towards a methodological framework. Int J Soc Res Methodol. 2005;8:19–32.

McKibbon KA, Lokker C, Keepanasseril A, Colquhoun H, Haynes RB, Wilczynski NL. WhatisKT wiki: A case study of a platform for knowledge translation terms and definitions – descriptive analysis. Implement Sci. 2013;8:13.

Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci. 2012;7:50.

GraphPad Software Inc. QuickCalcs: Quantify agreement with kappa. https://graphpad.com/quickcalcs/kappa2/. Accessed 15 November 2015.

Brien SE, Lorenzetti DL, Lewis S, Kennedy J, Ghali WA. Overview of a formal scoping review on health system report cards. Implement Sci. 2010;5:2.

Pham MT, Rajic A, Greig JD, Sargeant JM, Papadopoulos A, McEwen SA. A scoping review of scoping reviews: Advancing the approach and enhancing the consistency. Research Synthesis Methods. 2014;5(4):371–85.

Higgins JPT, Green S (editors). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration, 2011. Available from www.handbook.cochrane.org.

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Jorgensen L, Paludan-Muller AS, Laursen DRT, Savovic J, Boutron I, Sterne JAC, Higgins JPT, Hrobjartsson A. Evaluation of the Cochrane tool for assessing risk of bias in randomized clinical trials: Overview of published comments and analysis of user practice in Cochrane and non-Cochrane reviews. Systematic Reviews. 2016;5:80.

Effective Public Health Practice Project. Quality assessment tool for quantitative studies. http://www.ephpp.ca/tools.html. Accessed 8 January 2016.

Thomas H, Ciliska D, Dobbins M, Micucci S. A process for systematically reviewing the literature: providing evidence for public health nursing interventions. Worldviews Evid-Based Nurs. 2004;2:91–9.

Albrecht L, Archibald M, Arseneau D, Scott SD. Development of a checklist to assess the quality of reporting of knowledge translation interventions using the Workgroup for Intervention Development and Evaluation Research (WIDER) recommendations. Implement Sci. 2013;8:52.

Coulter A, Ellins J. Patient-focused interventions: A review of the evidence. The Health Foundation: London, UK. 2006. ISBN 0954896815.

Bailey L, Sun J, Courtney M, Murphy P. Improving postoperative tonsillectomy pain management in children – A double blinded randomised control trial of a patient analgesia information sheet. Int J Pediatr Otorhinolaryngol. 2015;79:732–9.

Bauchner H, Osganian S, Smith K, Triant R. Improving parent knowledge about antibiotics: A video intervention. Pediatrics. 2001;108(4):845–50.

Christakis DA, Zimmerman FJ, Rivara FP, Ebel B. Improving pediatric prevention via the Internet: A randomized, controlled trial. Pediatrics. 2006;118(3):1157–66.

Dempsey AF, Zimet GD, Davis RL, Koutsky L. Factors that are associated with parental acceptance of human papillomavirus vaccines: A randomized intervention study of written information about HPV. Pediatrics. 2006;117(5):1486–93.

Evans S, Daly A, Hopkins V, Davies P, MacDonald A. The impact of visual media to encourage low protein cooking in inherited metabolic disorders. J Hum Nutr Diet. 2009;22:409–13.

Jackson C, Dickinson D. Enabling parents who smoke to prevent their children from initiating smoking: Results from a 3-year intervention evaluation. Arch Pediatr Adolesc Med. 2006;160:56–62.

Nordfeldt S, Ludvigsson J. Self-study material to prevent severe hypoglycaemia in children and adolescents with type 1 diabetes: A prospective intervention study. Pract Diab Int. 2002;19(5):131–6.

Nordfeldt S, Johansson C, Carlsson E, Hammersjo J-A. Prevention of severe hypoglycaemia in type 1 diabetes: A randomized controlled population study. Arch Dis Child. 2003;88:240–5.

Nordfeldt S, Johansson C, Carlsson E, Hammersjo J-A. Persistent effects of a pedagogical device targeted at prevention of severe hypoglycaemia: A randomized, controlled study. Acta Paediatr. 2005;94:1395–401.

Ranjit N, Menendez T, Creamer M, Hussaini A, Potratz CR, Hoelscher DM. Narrative communication as a strategy to improve diet and activity in low-income families: The use of role model stories. American Journal of Health Education. 2015;46:99–108.

Reich SM, Bickman L, Saville BR, Alvarez J. The effectiveness of baby books for providing pediatric anticipatory guidance to new mothers. Pediatrics. 2010;125(5):997–1002.

Scheinmann R, Chiasson AM, Hartel D, Rosenberg TJ. Evaluating a bilingual video to improve infant feeding knowledge and behaviour among immigrant Latina mothers. J Community Health. 2010;35:464–70.

Skranes LP, Lohaugen GCC, Skranes J. A child health information website developed by physicians: The impact of use on perceived parental anxiety and competence of Norwegian mothers. J Public Health. 2015;23:77–85.

Sustersic M, Jeannet E, Cozon-Rein L, Marechaux F, Genty C, Foote A, David-Tchouda S, Martinez L, Bosson JL. Impact of information leaflets on behavior of patients with gastroenteritis or tonsillitis: A cluster randomized trial in French primary care. J Gen Intern Med. 2012;28(1):25–31.

Taddio A, MacDonald NE, Smart S, Parikh C, Allen V, Halperin B, Shah V. Impact of a parent-directed pamphlet about pain management during infant vaccinations on maternal knowledge and behaviour. Neonatal Network. 2014;33(2):74–82.

Tijam AM, Holtslag G, Van Minderhout HM, Simonsz-Toth B, Vermeulen-Jong MHL, Borsboom GJJM, Loudon SE, Simonsz HJ. Randomised comparison of three tools for improving compliance with occlusion therapy: An educational cartoon story, a reward calendar, and an information leaflet for parents. Graefes Arch Clin Exp Ophthalmol. 2013;251(1):321–9.

Wakimizu R, Kamagata S, Kuwabara T, Kamibeppu K. A randomized controlled trial of an at-home preparation programme for Japanese preschool children: Effects on children’s and caregivers’ anxiety associated with surgery. J Eval Clin Pract. 2009;15:393–401.

Wilson FL, Brown DL, Stephens-Ferris M. Can easy-to-read immunization information increase knowledge in urban low-income mothers? J Pediatr Nurs. 2006;21(1):4–12.

Silagy C, Lancaster T, Gray S, Fowler G. Effectiveness of training health professionals to provide smoking cessation interventions: Systematic review of randomized controlled trials. Qual Health Care. 1994;3(4):193–8.

Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: The new Medical Research Council Guidance. BMJ. 2008;337:a1655.

Chan A-W, Tetzlaff JM, Altman DG, Laupacis A, Gøtzsche PC, Krleža-Jerić K, Hróbjartsson A, Mann H, Dickersin K, Berlin J, Doré C, Parulekar W, Summerskill W, Groves T, Schulz K, Sox H, Rockhold FW, Rennie D, Moher D. SPIRIT 2013 Statement: Defining standard protocol items for clinical trials. Ann Intern Med. 2013;158:200–7.

Chan A-W, Tetzlaff JM, Gøtzsche PC, Altman DG, Mann H, Berlin J, Dickersin K, Hróbjartsson A, Schulz KF, Parulekar WR, Krleža-Jerić K, Laupacis A, Moher D. SPIRIT 2013 Explanation and Elaboration: Guidance for protocols of clinical trials. BMJ. 2013;346:e7586.

Jones SR, Carley S, Harrison M. An introduction to power and sample size estimation. Emerg Med J. 2003;20:453–8.

Chalmers I, Altman DG. How can medical journals help prevent poor medical research? Some opportunities presented by electronic publishing. Lancet. 1999;353(9151):490–3.

Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: Comparison of protocols to published articles. JAMA. 2004;291(20):2457–65.

Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: Are implementation effects out of control? Clin Psychol Rev. 1998;18:23–45.

Gresham FM, Gansle KA, Noell GH. Treatment integrity in applied behavior analysis with children. J Appl Behav Anal. 1993;26:257–63.

Moncher FJ, Prinz RJ. Treatment fidelity in outcome studies. Clin Psychol Rev. 1991;11:247–66.

Odom SL, Brown WH, Frey T, Karasu N, Smith-Canter LL, Strain PS. Evidence-based practices for young children with autism: Contributions for single-subject design research. Focus Autism Other Dev Disabil. 2003;18:166–75.

Davies P, Walker AE, Grimshaw JM. A systematic review of the use of theory in the design of guideline dissemination and implementation strategies and interpretation of the results of rigorous evaluations. Implement Sci. 2010;5:14.

McCormack L, Sheridan S, Lewis M, Boudewyns V, Melvin CL, Kistler C, Lux LJ, Cullen K, Lohr KN. Communication and dissemination strategies to facilitate the use of health-related evidence. In: Evidence Report/Technology Assessment No. 213. AHRQ Publication No. 13(14)-E003-EF.

Gagliardi AR, Berta W, Kothari A, Boyko J, Urquhart R. Integrated knowledge translation (IKT) in health care: A scoping review. Implement Sci. 2016;11:38.

Michie S, Fixsen D, Grimshaw JM, Eccles MP. Specifying and reporting complex behaviour change interventions: The need for a scientific method. Implement Sci. 2009;4(40):1–6.

Green LW, Ottoson JM, Garcia C, Hiatt RA. Diffusion theory and knowledge dissemination, utilization, and integration in public health. Annu Rev Public Health. 2009;30:151–74.

Snowdon C. Qualitative and mixed methods research in trials. Trials. 2015;16:558.

Woolfall K, Young B, Frith L, Appleton R, Iyer A, Messahel S, Hickey H, Gamble C. Doing challenging research studies in a patient-centred way: A qualitative study to inform a randomised controlled trial in the pediatric emergency care setting. BMJ Open. 2014;4:e005045.

Murtagh MJ, Thomson RG, May CR, Rapley T, Heaven BR, Graham RH, Kaner EF, Stobbart L, Eccles MP. Qualitative methods in a randomised controlled trial: The role of an integrated qualitative process evaluation in providing evidence to discontinue the intervention in one arm of a trial of a decision support tool. Qual Saf Health Care. 2007;16:224–9.

O’Cathain A, Goode J, Drabble SJ, Thomas KJ, Rudolph A, Hewison J. Getting added value from using qualitative research with randomized controlled trials: A qualitative interview study. Trials. 2014;15:215.

O’Cathain A, Thomas KJ, Drabble SJ, Rudolph A, Hewison J. What can qualitative research do for randomised controlled trials? A systematic mapping review. BMJ Open. 2013;3:e002889.

Colquhoun H, Leeman J, Michie S, Lokker C, Bragge P, Hempel S, McKibbon KA, Peters GJ, Stevens KR, Wilson MG, et al. Towards a common terminology: A simplified framework of interventions to promote and integrate evidence into health practices, systems, and policies. Implement Sci. 2014;9(1):51.

Walshe K. Pseudoinnovation: The development and spread of healthcare quality improvement methodologies. Int J Qual Health Care. 2009;21(3):153–9.

Bragge P, Grimshaw JM, Lokker C, Colquhoun H, The AIMD Writing/Working Group. AIMD - A validated, simplified framework of interventions to promote and integrate evidence into health practices, systems, and policies. BMC Medical Research Methodology. 2017;17:38.

Acknowledgements

Thank you to Thane Chambers for designing and implementing the search strategy and to Xuan Wu for verifying inclusion/exclusion decisions.

Funding

Lauren Albrecht is supported by Alberta Innovates – Health Solutions Graduate Studentship and Women & Children’s Health Research Institute Graduate Studentship. Shannon Scott is a Canada Research Chair (Tier II) for Knowledge Translation in Child Health. Lisa Hartling is supported by a CIHR New Investigator Salary Award.

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

Author information

Contributions

All authors designed the scoping review. LA conducted primary and secondary screening, data extraction, data analysis, and manuscript writing. All authors provided substantive feedback and approved the manuscript prior to submission.

Ethics declarations

Ethics approval and consent to participate

Not applicable. This scoping review was a secondary analysis of previously published literature.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1: Search strategies. (DOCX 31 kb)

Additional file 2: Secondary screening criteria. (DOCX 17 kb)

Additional file 3: WIDER recommendations to improve reporting of the content of behavior change interventions. (DOCX 14 kb)

Additional file 4: Outcomes of interest for assessing patient-focused interventions. (DOCX 15 kb)

Additional file 5: Summary of included studies (n = 18). (DOCX 32 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Albrecht, L., Scott, S.D. & Hartling, L. Knowledge translation tools for parents on child health topics: a scoping review. BMC Health Serv Res 17, 686 (2017). https://doi.org/10.1186/s12913-017-2632-2

DOI: https://doi.org/10.1186/s12913-017-2632-2