Abstract

Background

This review aimed to evaluate the effectiveness of audit and communication strategies for reducing diagnostic errors made by clinicians.

Methods

We conducted electronic and manual searches of MEDLINE Complete, CINAHL Complete, EMBASE, PSNet and Google Advanced Search for articles on audit systems and communication strategies or interventions published between January 1990 and April 2017. We included studies of interventions implemented by clinicians in a clinical environment with real patients.

Results

A total of 2431 articles were screened, of which 26 studies met the inclusion criteria. Data extraction was conducted by two groups, each comprising two independent reviewers. Studies were classified as evaluating communication strategies (n = 6) or audit strategies (n = 20) to reduce diagnostic error in clinical settings. The most common interventions were technology-based systems (n = 16, 62%), and the most common setting was acute care (n = 15, 58%). Nine studies were randomised controlled trials (RCTs). Three RCTs of communication interventions and three RCTs of audit strategies found the interventions to be effective in reducing diagnostic errors.

Conclusion

Despite numerous studies on interventions targeting diagnostic errors, our analyses revealed limited evidence on interventions actually used in clinical settings and a bias towards studies originating from the US (n = 19, 73% of included studies). There is some evidence that trigger algorithms, including computer-based and alert systems, may reduce delayed diagnosis and improve diagnostic accuracy. In trauma settings, strategies such as additional patient review (e.g. trauma teams) reduced missed diagnoses, and in radiology departments, review strategies such as team meetings and error documentation may reduce diagnostic error rates over time.

Trial registration

The systematic review was registered in the PROSPERO database under registration number CRD42017067056.


Background

Diagnostic error can be defined as “diagnosis that was unintentionally delayed (sufficient information was available earlier), wrong (wrong diagnosis made before the correct one), or missed (no diagnosis ever made), as judged from the eventual appreciation of more definitive information” [1] (page 1493). Despite its costs in terms of negative health outcomes, loss of life, income and productivity, mistrust of the health system, and dissatisfaction among both patients and health professionals, diagnostic error has received insufficient research attention as an area of patient safety [2, 3]. This has partly been attributed to the lack of an effective method for measuring diagnostic errors, limited sources of reliable and valid data, and the challenges of detecting diagnostic errors in clinical practice settings [4]. The problem is further complicated because diagnostic errors have many contributory factors at multiple levels of the patient care pathway and are context sensitive [5, 6]. Furthermore, differing definitions of diagnostic error make comparison across studies difficult [1, 7,8,9,10,11].

Earlier studies have mainly explored interventions to reduce cognitive [12] and system and process [13,14,15,16,17,18] errors. Despite the numerous studies on diagnostic errors [12,13,14,15,16,17], very few have investigated the effectiveness of strategies aimed at reducing diagnostic errors, especially in clinical settings [7, 19, 20], including audit and communication strategies. Clinical audit and communication strategies have been cited in the literature as a means to evaluate clinical performance in healthcare, reduce diagnostic errors and improve the quality of patient care [7, 20,21,22,23,24]. Graber et al. [18] and Singh et al. [25] emphasised that suggested approaches to diagnostic errors have rarely been operationalised in actual clinical practice; hence there is a need to evaluate such interventions.

To our knowledge, audit and communication strategies to reduce diagnostic errors have not been studied separately. “Audit systems” were defined as systems that measure individual or organisational performance against professional standards or targets in order to provide feedback to the individual or organisation [21,22,23,24]. This includes interventions such as processes, systems, models, programs and procedures aimed at ensuring that certain activities are carried out effectively and consistently to achieve the objectives [26]. Communication can be defined as the transmission of information and common understanding from one party to another [27]. The Committee on Diagnostic Error in Health Care supports processes for effective and timely communication between health professionals performing diagnostic testing and treating health professionals, and recommends that such processes be implemented across all health care settings in the diagnostic process [7].

The aim of this systematic literature review is to summarize the current evidence on the effectiveness of audit and communication strategies undertaken by clinicians in reducing diagnostic errors within a clinical setting. This review will be helpful to clinicians involved in the diagnostic process, to managers in clinical settings, and to policymakers developing patient safety policies to improve the diagnostic process.

Methods

Search parameters and inclusion criteria

The systematic review follows PRISMA guidelines [28] and was registered in the PROSPERO database [29], registration number CRD42017067056. The search focused on audit and communication strategies implemented by clinicians in real patient or clinical environments to reduce diagnostic errors, with no restriction on the type of study design. Additional file 1 lists the details of the search strategies. We included articles written in English with sufficient information (at least an abstract).

The literature search included both published and unpublished work from January 1990 to April 2017. The database search covered MEDLINE Complete, CINAHL Complete and EMBASE. Additional articles were identified by manually searching the Agency for Healthcare Research and Quality Patient Safety Network (PSNet) [30] and the Google Advanced search engine, which also located unpublished studies. In addition, systematic reviews retrieved from the database search were hand searched.

Two groups of two independent reviewers (JAO and MF; SBM and MC) screened the titles and abstracts of articles from the databases to identify articles that met the inclusion criteria. Both eligible and inconclusive articles proceeded to full-text screening. The same groups completed this step for the articles from PSNet, and a single reviewer (MF) screened the articles from the Google Advanced search engine. Articles that met the inclusion criteria were added to the previously selected articles for full-text review. Although both published and unpublished articles were included in the search, none of the unpublished articles met the inclusion criteria.

Data extraction strategy

Information was extracted from each included study using a data extraction form that included: study population characteristics; descriptive information about the study (year of publication, country, sample size, health states, study design, type of targeted clinicians); nature of the diagnostic error; nature of the intervention (technology-based systems, additional patient reviews, staff education and training, structured process changes, and specific patient examination instruments or forms); effectiveness of the interventions (as the difference between the intervention and the control); and nature of the clinical setting (emergency department, outpatients and primary care). All extracted data were cross-checked by the reviewers, and any discrepancies were discussed among the team until consensus was reached.
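The cross-checking step amounts to comparing two reviewers' extractions of the same study field by field. The following is a minimal Python sketch, assuming a hypothetical electronic version of the extraction form: the field names are illustrative labels for the items listed above, not the review's actual form, and disagreements are only listed, since the review resolved them by team discussion.

```python
from dataclasses import dataclass, fields
from enum import Enum
from typing import Optional


class Intervention(Enum):
    """Intervention categories named on the extraction form."""
    TECHNOLOGY = "technology based systems"
    PATIENT_REVIEW = "additional patient reviews"
    EDUCATION = "staff education and training"
    PROCESS_CHANGE = "structured process changes"
    EXAMINATION_FORM = "specific patient examination instruments or forms"


@dataclass(frozen=True)
class ExtractionRecord:
    """One study as extracted by one reviewer (hypothetical field names)."""
    study_id: str
    year: int
    country: str
    population: str
    sample_size: Optional[int]
    health_state: str
    study_design: str
    target_clinicians: str
    error_type: str            # e.g. delayed, wrong or missed diagnosis
    intervention: Intervention
    setting: str               # e.g. emergency department, outpatients, primary care
    effect: Optional[float]    # intervention minus control, if reported


def discrepancies(a: ExtractionRecord, b: ExtractionRecord) -> list[str]:
    """Names of the fields on which two reviewers' extractions disagree."""
    if a.study_id != b.study_id:
        raise ValueError("records describe different studies")
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]
```

Each name returned by `discrepancies` marks an item that would go to the team for discussion; an empty list means the two extractions agree.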

Quality assessment and risk of bias assessment

Study quality was assessed using the Cochrane Risk of Bias tool for randomised controlled trials (RCTs) [31] and the Effective Public Health Practice Project (EPHPP) quality assessment tool for non-RCT studies (observational descriptive, clinical trials, cohort/longitudinal and review) [32, 33]. Quality assessment data included selection bias, blinding of participants and researchers, blinding of outcome assessment, withdrawals and dropouts, selective reporting, data collection methods, study design, confounders, intervention integrity and data analysis. Studies were classified as high, medium or low quality. Publication bias and reporting bias on diagnostic errors as an outcome were minimised by including studies from multiple literature databases and by searching unpublished “grey” literature.
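For the non-RCT studies, the EPHPP tool rates six components (selection bias, study design, confounders, blinding, data collection methods, and withdrawals and dropouts) as strong, moderate or weak. As we understand the EPHPP dictionary, the global rating is strong when no component is rated weak, moderate when exactly one is, and weak when two or more are. The review does not state how its high, medium and low labels were derived, so the mapping from global rating to quality label in this minimal Python sketch is an assumption.

```python
from enum import Enum


class Rating(Enum):
    STRONG = 1
    MODERATE = 2
    WEAK = 3


# The six EPHPP components that each receive a component rating.
COMPONENTS = (
    "selection bias",
    "study design",
    "confounders",
    "blinding",
    "data collection methods",
    "withdrawals and dropouts",
)

# Assumed mapping from the EPHPP global rating to this review's quality labels.
QUALITY_LABEL = {Rating.STRONG: "high", Rating.MODERATE: "medium", Rating.WEAK: "low"}


def global_rating(component_ratings: dict[str, Rating]) -> Rating:
    """EPHPP-style global rating, based on how many components are rated WEAK."""
    missing = set(COMPONENTS) - set(component_ratings)
    if missing:
        raise ValueError(f"missing component ratings: {sorted(missing)}")
    n_weak = sum(component_ratings[c] is Rating.WEAK for c in COMPONENTS)
    if n_weak == 0:
        return Rating.STRONG
    if n_weak == 1:
        return Rating.MODERATE
    return Rating.WEAK
```

A non-randomised study rated weak only on study design would, under this sketch, receive a moderate global rating and the label "medium".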

Results

Study characteristics

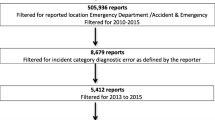

We identified 26 studies (Fig. 1) on strategies to reduce diagnostic error that met the criteria for full review. Nine studies (35%) were randomised controlled trials. The remaining 17 (65%) were non-randomised, most commonly observational descriptive studies (9 of 26; 35%).

Fig. 1 Literature search PRISMA flow diagram (systematic review). Source: [34]

Twenty studies looked at audit systems [5, 35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53] and six considered communication strategies [54,55,56,57,58,59] employed by clinicians to reduce diagnostic errors. Nineteen studies were based in the US [5, 36,37,38,39, 41,42,43,44, 46, 47, 50, 51, 54,55,56,57,58,59]; two each in the UK [45, 52], Sweden [40, 53] and Canada [48, 49]; and one in Lithuania [35]. Further details on study characteristics are given in Table 1.
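The proportions quoted in this section and in the Abstract are simple shares of the 26 included studies. A minimal Python sketch that recomputes them from the counts reported in the text and checks that each breakdown accounts for every study; the dictionary labels are illustrative only.

```python
from collections import Counter

TOTAL_STUDIES = 26

# Counts as reported in the text (labels are illustrative).
by_country = Counter({"US": 19, "UK": 2, "Sweden": 2, "Canada": 2, "Lithuania": 1})
by_design = Counter({"RCT": 9, "non-randomised": 17})
by_strategy = Counter({"audit": 20, "communication": 6})


def pct(n: int, total: int = TOTAL_STUDIES) -> int:
    """Share of studies as a whole-number percentage, rounding halves up."""
    return int(100 * n / total + 0.5)


for name, tally in (("country", by_country), ("design", by_design), ("strategy", by_strategy)):
    # Every breakdown should account for all included studies exactly once.
    assert sum(tally.values()) == TOTAL_STUDIES, name
    summary = ", ".join(f"{k} {v} ({pct(v)}%)" for k, v in tally.items())
    print(f"{name}: {summary}")
```

Under the same rounding rule, `pct(16)` evaluates to 62 and `pct(15)` to 58, the shares for the technology-based and acute care counts reported in the Abstract.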

Quality and risk of Bias assessment

Results of the Risk of Bias assessment for RCTs are shown in Fig. 2 and Additional file 2.1. Two studies had selection bias due to lack of allocation concealment, four studies showed high Risk of Bias due to non-blinding, and two studies were rated as medium to high Risk of Bias due to non-blinding of outcome assessment. In summary, 9 of 54 (17%) criteria across all 9 RCTs were assessed as medium to high Risk of Bias, and five of the nine studies were assessed as low Risk of Bias on all criteria. This suggests that the quality of the RCTs is relatively high.
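The denominator of 54 is the number of trial-by-criterion judgements, which implies six criteria per trial across the nine RCTs. A short Python check of that arithmetic, using only the counts given above:

```python
from fractions import Fraction

N_RCTS = 9
TOTAL_JUDGEMENTS = 54                           # denominator reported in the text
CRITERIA_PER_RCT = TOTAL_JUDGEMENTS // N_RCTS
FLAGGED = 9                                     # judgements rated medium to high Risk of Bias

# 54 divides evenly by 9, i.e. six criteria were judged for each trial.
assert N_RCTS * CRITERIA_PER_RCT == TOTAL_JUDGEMENTS

share = Fraction(FLAGGED, TOTAL_JUDGEMENTS)
print(f"{share} = {float(share):.1%}")          # prints "1/6 = 16.7%"
```

One in six judgements, or about 17% when rounded to a whole percent, was rated medium to high Risk of Bias.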

Results of the quality assessment for the non-RCT studies are shown in Additional file 2.2. Overall these studies were of medium quality, with most rated as weak on study design because they were not randomised.

Strategies to reduce diagnostic error

Included publications were summarized under communication strategies and audit processes. These were further analysed by the types of communication or audit processes, disease group, healthcare setting and/or target clinician group.

Communication strategies

Six studies examined communication strategies to address diagnostic errors [54,55,56,57,58,59]. There was one study in an emergency setting (abdominal pain) [55], two in primary care settings (cancer) [56, 57] and three in outpatient settings (psychiatry and laboratory) [54, 58, 59]. The communication interventions were technology-based systems, mostly computerised trigger systems.

Our review located three recent studies that examined trigger algorithms to identify patients with potential delayed diagnosis or follow-up in order to reduce diagnostic errors [55,56,57]. Murphy and his team [57] tested an algorithm in a randomised controlled trial to identify patients at risk of delays in diagnostic evaluation for a range of cancers. The intervention reduced time to diagnostic evaluation and increased the number of patients who received follow-up care. Another RCT [56] identified follow-up delays using an electronic health record-based algorithm and record reviews, and communicated this information through three escalating alert steps: email, a telephone call to clinicians, and informing clinical directors. This intervention led to more timely follow-up and diagnosis. However, its effectiveness was reduced because clinicians did not always respond to the triggers, so back-up strategies were required. Medford-Davis and colleagues’ algorithm [55] identified patients at high risk of delayed diagnosis or misdiagnosis who presented to the emergency department with abdominal pain and returned within 10 days requiring hospitalisation. The study concluded that breakdowns in diagnostic processes led to diagnostic errors and that triggers provided opportunities for process improvement within emergency departments.
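At its core, a return-visit trigger such as the one described for Medford-Davis and colleagues [55] is a rule applied to encounter records. The following is a minimal Python sketch, assuming a hypothetical simplified `Encounter` record (not a real electronic health record schema) and exact matching on the presenting complaint. It illustrates the general technique only and does not reproduce the published algorithm's inclusion and exclusion criteria.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Encounter:
    """Hypothetical, simplified encounter record (not a real EHR schema)."""
    patient_id: str
    kind: str            # "ed_visit" or "admission"
    day: date
    complaint: str = ""  # presenting complaint, recorded for ED visits


def flag_return_admissions(encounters: list[Encounter], window_days: int = 10) -> list[Encounter]:
    """Flag index ED visits for abdominal pain that are followed by a hospital
    admission of the same patient within `window_days` days. Flagged visits
    would then go to manual record review for possible diagnostic error."""
    window = timedelta(days=window_days)
    flagged = []
    for index in encounters:
        if index.kind != "ed_visit" or index.complaint != "abdominal pain":
            continue
        if any(
            e.patient_id == index.patient_id
            and e.kind == "admission"
            and index.day < e.day <= index.day + window
            for e in encounters
        ):
            flagged.append(index)
    return flagged
```

The trigger only narrows the set of records to review. In the studies above, the reduction in diagnostic error came from what clinicians and reviewers did with the flagged cases.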

Three studies used computerised notification systems, either as reminders or as alerts for abnormal test results, to support timely follow-up and reduce diagnostic errors. Cannon and Allen [54], in an RCT, compared the effectiveness of a computer reminder system with a manual reminder system in terms of adherence to clinical practice guidelines and found the computer system to be more effective. However, Singh and colleagues [59] found in a prospective study that automated notification of abnormal laboratory results through electronic medical records did not guarantee timely follow-up. Similarly, another study by Singh and colleagues [58] used a computerised test result notification system to reduce errors in communicating abnormal imaging results; however, the intervention did not prevent results from being lost to follow-up. Neither of these two studies was an RCT.

Audit processes

Twenty studies examined audit interventions to address diagnostic errors [5, 35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53]. Ten were in emergency settings (including two in trauma and two in cardiology) [5, 37,38,39, 41, 42, 44, 46, 47, 52], one in an outpatient setting [36], three in laboratory settings [35, 43, 51] and two in hospital settings [45, 48]. Four studies did not explicitly state the setting [40, 49, 50, 53].

Additional patient reviews

Five studies evaluated additional patient reviews [5, 36, 39, 42, 44]. Two studies examined the use of trauma teams to diagnose complex injuries in trauma patients [5, 44] and showed that a trauma response team reduced delayed diagnosis. One US study examined the impact of tertiary examination (a complete re-evaluation) on missed diagnosis of injury at a Level II Trauma Centre [42]; it found that 14% of injuries had been missed and therefore recommended adopting this intervention as standard care at Level II Trauma Centres to improve the accuracy of injury diagnosis. Another study [39] used a three-pronged strategy to improve the diagnostic interpretation of radiographs, combining review at monthly meetings, documentation of errors and ongoing training of new staff, and found a significant reduction in error rates. Casalino and colleagues [36] audited 23 primary care practices using retrospective medical record review to determine whether patients had been informed of abnormal test results. Practices with partial electronic medical records were less likely to inform patients of abnormal results than practices with fully paper-based or fully electronic systems.

Computerised decision support systems

Eleven studies evaluated computerised decision support systems [35,36,37, 43, 45,46,47, 50,51,52,53]. Studies by Tsai and colleagues [51] and David et al. [37] showed improved diagnostic accuracy using computer-based interpretation. Decision support systems enhanced junior doctors’ ability to diagnose acute paediatric conditions [45], increased the accuracy of diagnosis of acute abdominal pain [52], and provided more accurate prediction of Alzheimer’s disease [50]. Ramnarayan and colleagues [45] stated that eliminating barriers to computer access is crucial if computerised assistance is to improve diagnosis in clinical settings. Boguševičius and colleagues [35] compared computer-aided diagnosis with contrast radiography for acute small bowel obstruction; although they found no difference in accuracy, time to diagnosis was only 1 h with computer-aided diagnosis compared with 16 h with contrast radiography. Jiang et al. [43] compared a single radiologist reading, independent double reading by two radiologists, and a single reading with computer aid, and found computer-aided diagnosis superior to both other methods in improving the diagnostic accuracy of radiology reports.

One study found that a computer diagnostic system improved the diagnosis of occult psychiatric illness but did not guarantee that physicians would go on to diagnose or treat the condition [46]; another found no difference in missed diagnoses of mental health conditions between computer-aided diagnosis and traditional pen and paper [53]. Both studies favoured the traditional method for difficult mental health cases. Selker and colleagues [47] showed that computerised prediction did not affect admission of people with acute cardiac ischemia but reduced unnecessary admission of people without the condition.

Checklists

Checklists were used in four studies [38, 41, 48, 49]. Graber and colleagues [41] used checklists in emergency settings and concluded that checklists could prevent diagnostic errors because they prompted consideration of additional diagnostic possibilities. However, the study indicated that checklists need to be used consistently, in collaboration with patients, to achieve their maximum value.

Two Canadian studies showed improved accuracy of diagnosis in cardiology using a checklist approach: one used a checklist when experts verified diagnoses [49], and another used a checklist to review a cardiac examination [48]. The remaining study used a checklist of symptoms, but diagnostic accuracy did not differ from usual care [38].

Education programs

One study of an education program [40] in primary care settings showed evidence of improved diagnostic accuracy through training and the use of a standard questionnaire. It reported a 77% reduction in diagnostic errors following an ongoing education program involving referring physicians and neurosurgeons.

Effectiveness of audit and communication strategies

The nine RCTs were examined to determine the effectiveness of the interventions in reducing diagnostic errors. Three [54, 56, 57] examined communication strategies and six examined audit strategies [35, 38, 46, 48, 51, 53].

Cannon and colleagues [54] found that the screening rate increased by 25.5% with a reminder system compared with a checklist in a psychiatric outpatient setting. Another study [57] in a primary care setting (cancer) showed that patient identification triggers combined with communication to primary care providers reduced the time to diagnostic evaluation by 96, 48 and 28 days for colorectal, prostate and lung cancers, respectively. In addition, 21.2% more patients had received diagnostic evaluation by the time of the primary care providers’ final review. Meyer and colleagues [56] examined three escalating communication strategies (first email, then telephone, and finally contact by clinic directors) for reducing delayed follow-up, within the same trial as Murphy and colleagues [57]. Delayed follow-up was 88.9% with email, 31.4% with telephone and 54.5% with contact by clinic directors.
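The escalating strategy described for Meyer and colleagues [56] works like a ladder: if the clinician does not act after one communication step, the next, more direct step is tried. A minimal Python sketch of that control flow, assuming a hypothetical `acknowledged` callback that sends the notification for a step and reports whether follow-up was then arranged. The step names are labels only, and this is not the trial's actual software.

```python
from typing import Callable, Optional

# Escalation ladder in the order described for the trial; names are labels only.
STEPS = ("email", "telephone", "clinic director")


def escalate(acknowledged: Callable[[str], bool],
             steps: tuple[str, ...] = STEPS) -> Optional[str]:
    """Try each communication step in turn. Return the first step after which the
    clinician acted on the flagged result, or None if every step failed."""
    for step in steps:
        if acknowledged(step):
            return step
    return None
```

A `None` result corresponds to the non-responsiveness problem noted earlier: the ladder itself has run out, and a further back-up strategy would be needed.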

Tsai et al. [51] reported that computer assistance in a laboratory setting increased the accuracy of electrocardiogram interpretation by 6.6%, thereby reducing incorrect diagnoses. Checklists used as part of an audit process increased correct diagnosis by 5% in a hospital setting [48]. Another study [38] found that a diagnostic checklist made no difference to diagnostic errors among primary care physicians, although errors fell by 25.9% in the emergency physician subgroup. However, three studies [35, 46, 53] found that computerised decision support systems had no effect on the frequency or accuracy of diagnosis. Further details of the effectiveness of the interventions in non-RCTs are provided in Additional file 3.

Discussion

This is the first systematic review of clinician-focused audit or communication strategies employed to reduce diagnostic errors in real clinical practice settings. Twenty-six studies on strategies to reduce diagnostic errors were reviewed. The majority were US based (19 studies), and relatively few were RCTs (9 studies, 35%). No studies considered additional benefits to providers or clinical practices, such as cost effectiveness or return on investment.

Our results confirm earlier research [18, 25] in highlighting that very few systems have been shown to improve diagnostic error rates in real practice settings, despite substantial information on the significant impact of diagnostic errors. To help address this gap, there is an urgent need for future research to evaluate such interventions and establish their effectiveness and cost effectiveness in actual practice.

The bias towards studies from the US may limit the generalisability of interventions to address diagnostic errors. Of the studies from the US, 8 (42%) were based in the ED, which may further limit the generalisability of findings. The organisation and funding of health care in the US differ considerably from those in other jurisdictions, with private insurers being a major stakeholder influencing care. Investment in high quality research beyond the US is warranted so that comparison with other countries and health systems is feasible.

The interventions in our review were mostly technology-based systems (n = 16, 62%), mainly computerised decision support systems and alert systems. Technological advances mean that decision support systems are increasingly available to clinicians. Most computerised decision support systems demonstrated improvement in the diagnostic process. However, it is vital to consider barriers to technical access [45], including the technical capacity of organisations and clinicians, and how effectively decision support systems can be integrated within the existing capacity of organisations [60], if the benefits in reducing diagnostic errors are to be realised.

Technology-based interventions reduced clinician bias by prompting clinicians to consider a range of conditions that might be relevant to a patient’s clinical presentation. Our review also found a twofold improvement in the rate of accurate diagnosis with the use of checklists for cardiac examination [48], and improvements in the overall diagnostic process through shorter time to diagnosis; for example, computer-aided diagnosis of acute small bowel obstruction was 16 times faster than contrast radiography (1 h versus 16 h) [35].

Patient safety research has highlighted the lack of appropriate measurement information for diagnostic errors, and hence the difficulty of ascertaining how often they occur relative to other medical errors [7]. The studies identified in our review used widely varying outcome measures, including rates of screening [54], time to diagnostic evaluation [35, 44] and loss-to-follow-up rates [56, 58, 59]. Although there is no ‘one size fits all’ approach to measuring diagnostic errors, improving the methods for identifying such errors will also improve measurement information.

Feedback to clinicians on their errors has the potential to improve the overall diagnostic process and therefore patient safety [61, 62]. Our review showed evidence of radiologists benefiting from error review [43]; however, this depends on an organisational culture that is open to sharing information from its data sources.

Changing organisational culture around diagnostic errors has been suggested as a means to improve clinicians’ learning, so that feedback on diagnostic performance focuses on correcting the system (using non-litigation approaches) and learning from errors rather than on the individual who made the error [61, 63, 64]. Our review did not identify any culture change interventions targeting diagnostic errors.

Although education and training interventions have been highlighted as a way to improve the diagnostic process, our review identified only one study that explored the impact of education on diagnostic error rates [40]. Broadening the composition of the healthcare team improved accuracy in the diagnostic process through greater consultation and discussion between healthcare professionals; for example, a paediatric specialist trauma team was shown to significantly reduce delays in trauma diagnosis [44].

Realising the full benefit of an intervention requires clinicians to respond to any additional information it provides. There was evidence of improvement in the diagnostic process for some of the tested interventions, but the benefit was realised only when clinicians accepted and acted upon the recommendations given [46, 56, 58, 59]. Clinicians’ unresponsiveness to the information provided limits the benefits to patients, hence the need for back-up strategies to improve physician responsiveness and therefore intervention effectiveness.

Strengths and limitations of review

The strengths of the review include the use of two independent reviewers, which helps control for random errors and bias in selecting included studies [65, 66]; searching both published and unpublished (grey) literature, which minimises publication and outcome reporting bias [65, 66]; and prior registration of the systematic review with PROSPERO to ensure transparency and rigour and to reduce bias in study selection [65].

This systematic review has several limitations. First, it focused only on clinician interventions, even though improving diagnostic accuracy requires the involvement of all stakeholders, notably patients and their families. Second, it considered only studies published between January 1990 and April 2017, so important earlier and more recent studies may have been excluded. Third, only English-language studies were included, which may partly explain the bias towards studies from the US.

Conclusion

In conclusion, we found limited evidence on suggested interventions actually used in clinical settings. There is some evidence that trigger algorithms, including computer-based and alert systems, may reduce delayed diagnosis and improve diagnostic accuracy. In trauma settings, strategies such as additional patient review (e.g. trauma teams) reduced missed diagnoses, and in radiology departments, review strategies such as team meetings and error documentation may reduce diagnostic error rates over time. However, none of the studies explored cost effectiveness in real practice. For this reason, we recommend that future work establish the effectiveness and cost effectiveness of suggested interventions in real-world clinical settings. The implication is that, at both national and global levels, patient safety policies need to be harmonised to enable comparison and evaluation of progress over time. We agree with Singh and colleagues that WHO’s global leadership is instrumental in addressing diagnostic error as a global problem [61]. Policy makers can prioritise patient safety and research to ensure sustainable funding to develop actionable, evidence-based interventions to address diagnostic errors, whether due to delayed diagnosis, misdiagnosis or missed diagnosis.

Availability of data and materials

Full electronic search strategies and review protocol are available in Additional file 1.

Abbreviations

- PSNet: Patient Safety Network

- RCT: Randomised controlled trial

References

Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165(13):1493–9.

Donaldson MS, Corrigan JM, Kohn LT. To err is human: building a safer health system: National Academies Press; 2000.

Kohn L, Corrigan J, Donaldson M. To err is human: building a safer health system. Washington: Institute of Medicine, National Academy Press; 1999.

Zwaan L, Singh H. The challenges in defining and measuring diagnostic error. Diagnosis. 2015;2(2):97–103.

Aaland MO, Smith K. Delayed diagnosis in a rural trauma center. Surgery. 1996;120(4):774–9.

Chopra V, Krein S, Olmstead R, Safdar N, Saint S. Making health care safer II: an updated critical analysis of the evidence for patient safety practices. Comparative Effectiveness Review No. 211; 2013.

National Academies of Sciences, Engineering, and Medicine. Improving diagnosis in health care. Washington, DC: National Academies Press; 2016.

Singh H. Editorial: helping organizations with defining diagnostic errors as missed opportunities in diagnosis. Jt Comm J Qual Patient Saf. 2014;40(3):99–101.

Schiff GD, Hasan O, Kim S, Abrams R, Cosby K, Lambert BL, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med. 2009;169(20):1881–7.

Newman-Toker DE. A unified conceptual model for diagnostic errors: underdiagnosis, overdiagnosis, and misdiagnosis. Diagnosis. 2014;1(1):43–8.

CRICO. Annual benchmarking report: malpractice risks in the diagnostic process. Cambridge, MA: CRICO; 2014 [cited March 2017].

Lambe KA, Curristan S, O'Reilly G, Kelly BD. Dual-process cognitive interventions to enhance diagnostic reasoning: a systematic review. BMJ Qual Saf. 2016;25(10):808–20.

Schewe S, Schreiber MA. Stepwise development of a clinical expert system in rheumatology. The Clinical Investigator. 1993;71(2):139–44.

Graber M, Gordon R, Franklin N. Reducing diagnostic errors in medicine. Acad Med. 2002;77(10):981–92.

Nurek M, Kostopoulou O, Delaney BC, Esmail A. Reducing diagnostic errors in primary care. A systematic meta-review of computerized diagnostic decision support systems by the LINNEAUS collaboration on patient safety in primary care. The European Journal of General Practice. 2015;21 Suppl:8–13.

Haynes RB, Wilczynski NL. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: Methods of a decision-maker-researcher partnership systematic review. Implementation Science. 2010;5(1):12–9.

Greenes RA. Reducing diagnostic error with computer-based clinical decision support. Adv Health Sci Educ. 2009;1:83.

Graber ML, Kissam S, Payne VL, Meyer AN, Sorensen A, Lenfestey N, et al. Cognitive interventions to reduce diagnostic error: a narrative review. BMJ Qual Saf. 2012.

Graber ML, Wachter RM, Cassel CK. Bringing diagnosis into the quality and safety equations. JAMA. 2012;308(12):1211–2.

Singh H. Diagnostic errors: moving beyond ‘no respect’ and getting ready for prime time. BMJ Publishing Group Ltd; 2013.

Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Libr. 2012.

Ivers NM, Sales A, Colquhoun H, Michie S, Foy R, Francis JJ, et al. No more ‘business as usual’ with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci. 2014;9(1):14.

Lopez-Campos JL, Asensio-Cruz MI, Castro-Acosta A, Calero C, Pozo-Rodriguez F. Results from an audit feedback strategy for chronic obstructive pulmonary disease in-hospital care: a joint analysis from the AUDIPOC and European COPD audit studies. PLoS One. 2014;9(10):e110394.

Hysong SJ, Best RG, Pugh JA. Audit and feedback and clinical practice guideline adherence: making feedback actionable. Implement Sci. 2006;1(1):9.

Singh H, Graber ML, Kissam SM, Sorensen AV, Lenfestey NF, Tant EM, et al. System-related interventions to reduce diagnostic errors: a narrative review. BMJ Qual Saf. 2012;21(2):160–70.

Esposito P, Dal Canton A. Clinical audit, a valuable tool to improve quality of care: general methodology and application in nephrology. World Journal of Nephrology. 2014;3(4):249–55.

Keyton J. Communication and organizational culture: a key to understanding work experience. Thousand Oaks, CA: Sage; 2011.

PRISMA. PRISMA Statement. 2015. Available from: http://www.prisma-statement.org/PRISMAStatement/CitingAndUsingPRISMA.aspx.

PROSPERO International prospective register of systematic reviews. A systematic review: audit systems and communication strategies to prevent diagnostic error 2017 [Available from: https://www.crd.york.ac.uk/PROSPERO/display_record.asp?ID=CRD42017067056].

U.S. Department of Health and Human Services. Patient Safety Network. USA: Agency for Healthcare Research and Quality; 2017 [updated 14-06-2017. Available from: https://psnet.ahrq.gov/].

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343.

EPHPP. Effective Public Health Practice Project. Quality assessment tools for quantitative studies. 2009 [Available from: http://www.ephpp.ca/tools.html].

Thomas B, Ciliska D, Dobbins M, Micucci S. A process for systematically reviewing the literature: providing the research evidence for public health nursing interventions. Worldviews Evid-Based Nurs. 2004;1(3):176–84.

THETA Collaborative. PRISMA Flow Diagram Generator 2017 [Available from: http://prisma.thetacollaborative.ca/].

Boguševičius A, Maleckas A, Pundzius J, Skaudickas D. Prospective randomised trial of computer-aided diagnosis and contrast radiography in acute small bowel obstruction. Eur J Surg. 2002;168(2):78–83.

Casalino LP, Dunham D, Chin MH, Bielang R, Kistner EO, Karrison TG, et al. Frequency of failure to inform patients of clinically significant outpatient test results. Arch Intern Med. 2009;169(12):1123–9.

David CV, Chira S, Eells SJ, Ladrigan M, Papier A, Miller LG, et al. Diagnostic accuracy in patients admitted to hospitals with cellulitis. Dermatol Online J. 2011;17(3).

Ely JW, Graber MA. Checklists to prevent diagnostic errors: a pilot randomized controlled trial. Diagnosis. 2015;2(3):163–9.

Espinosa JA, Nolan TW. Reducing errors made by emergency physicians in interpreting radiographs: longitudinal study. BMJ. 2000;320(7237):737–40.

Fridriksson S, Hillman J, Landtblom AM, Boive J. Education of referring doctors about sudden onset headache in subarachnoid hemorrhage. Acta Neurol Scand. 2001;103(4):238–42.

Graber ML, Sorensen AV, Biswas J, Modi V, Wackett A, Johnson S, et al. Developing checklists to prevent diagnostic error in emergency room settings. Diagnosis. 2014;1(3):223–31.

Howard J, Sundararajan R, Thomas SG, Walsh M, Sundararajan M. Reducing missed injuries at a level II trauma center. J Trauma Nurs. 2006;13(3):89–95.

Jiang Y, Nishikawa RM, Schmidt RA, Metz CE, Doi K. Relative gains in diagnostic accuracy between computer-aided diagnosis and independent double reading. 2000.

Perno JF, Schunk JE, Hansen KW, Furnival RA. Significant reduction in delayed diagnosis of injury with implementation of a pediatric trauma service. Pediatr Emerg Care. 2005;21(6):367–71.

Ramnarayan P, Winrow A, Coren M, Nanduri V, Buchdahl R, Jacobs B, et al. Diagnostic omission errors in acute paediatric practice: impact of a reminder system on decision-making. BMC Med Inform Decis Mak. 2006;6(1):37.

Schriger DL, Gibbons PS, Langone CA, Lee S, Altshuler LL. Enabling the diagnosis of occult psychiatric illness in the emergency department: a randomized, controlled trial of the computerized, self-administered PRIME-MD diagnostic system. Ann Emerg Med. 2001;37(2):132–40.

Selker HP, Beshansky JR, Griffith JL, et al. Use of the acute cardiac ischemia time-insensitive predictive instrument (ACI-TIPI) to assist with triage of patients with chest pain or other symptoms suggestive of acute cardiac ischemia: a multicenter, controlled clinical trial. Ann Intern Med. 1998;129(11 Pt 1):845–55.

Sibbald M, de Bruin AB, Cavalcanti RB, van Merrienboer JJ. Do you have to re-examine to reconsider your diagnosis? Checklists and cardiac exam. BMJ Qual Saf. 2013;22(4):333–8.

Sibbald M, de Bruin AB, van Merrienboer JJ. Checklists improve experts’ diagnostic decisions. Med Educ. 2013;47(3):301–8.

Soininen H, Mattila J, Koikkalainen J, Van Gils M, Simonsen AH, Waldemar G, et al. Software tool for improved prediction of Alzheimer’s disease. Neurodegener Dis. 2012;10(1–4):149–52.

Tsai TL, Fridsma DB, Gatti G. Computer decision support as a source of interpretation error: the case of electrocardiograms. J Am Med Inform Assoc. 2003;10(5):478–83.

Wellwood J, Johannessen S, Spiegelhalter D. How does computer-aided diagnosis improve the management of acute abdominal pain? Ann R Coll Surg Engl. 1992;74(1):40.

Bergman LG, Fors UG. Decision support in psychiatry – a comparison between the diagnostic outcomes using a computerized decision support system versus manual diagnosis. BMC Med Inform Decis Mak. 2008;8(1):9.

Cannon DS, Allen SN. A comparison of the effects of computer and manual reminders on compliance with a mental health clinical practice guideline. J Am Med Inform Assoc. 2000;7(2):196–203.

Medford-Davis L, Park E, Shlamovitz G, Suliburk J, Meyer AN, Singh H. Diagnostic errors related to acute abdominal pain in the emergency department. Emerg Med J. 2015:emermed-2015-204754.

Meyer AN, Murphy DR, Singh H. Communicating findings of delayed diagnostic evaluation to primary care providers. J Am Board Fam Med. 2016;29(4):469–73.

Murphy DR, Wu L, Thomas EJ, Forjuoh SN, Meyer AND, Singh H. Electronic trigger-based intervention to reduce delays in diagnostic evaluation for cancer: a cluster randomized controlled trial. J Clin Oncol. 2015;33(31):3560–7.

Singh H, Arora HS, Vij MS, Rao R, Khan MM, Petersen LA. Communication outcomes of critical imaging results in a computerized notification system. J Am Med Inform Assoc. 2007;14(4):459–66.

Singh H, Thomas EJ, Sittig DF, Wilson L, Espadas D, Khan MM, et al. Notification of abnormal lab test results in an electronic medical record: do any safety concerns remain? Am J Med. 2010;123(3):238–44.

Cresswell KM, Mozaffar H, Lee L, Williams R, Sheikh A. Safety risks associated with the lack of integration and interfacing of hospital health information technologies: a qualitative study of hospital electronic prescribing systems in England. BMJ Qual Saf. 2017;26(7):530–41.

Singh H, Schiff GD, Graber ML, Onakpoya I, Thompson MJ. The global burden of diagnostic errors in primary care. BMJ Qual Saf. 2017;26(6):484–94.

de Wet C, Black C, Luty S, McKay J, O'Donnell CA, Bowie P. Implementation of the trigger review method in Scottish general practices: patient safety outcomes and potential for quality improvement. BMJ Qual Saf. 2017;26(4):335–42.

Milstead JA. The culture of safety. Policy Polit Nurs Pract. 2005;6(1):51–4.

Moore J, Mello MM. Improving reconciliation following medical injury: a qualitative study of responses to patient safety incidents in New Zealand. BMJ Qual Saf. 2017;26(10):788–98.

McDonagh M, Peterson K, Raina P, Chang S, Shekelle P. Avoiding bias in selecting studies; 2013.

Sox H, McNeil B, Wheatley B, Eden J. Knowing what works in health care: a roadmap for the nation: National Academies Press; 2008.

Acknowledgements

The authors are grateful to Andrew Georgiou, Gordon Schiff, Matthew Sibbald, Ashley N.D. Meyer and Laura Zwaan for their thoughtful comments and constructive suggestions to improve our manuscript.

Funding

This research was funded with a grant from the Victorian Managed Insurance Authority (VMIA), Australia. The funding body approved the research question, but had no role in the study design, analysis, and interpretation or in the writing of the manuscript.

Author information

Contributions

JAO and JJW conceptualised the research. JAO, MC, SBM and MF conducted the title, abstract and full-text review for this systematic review, extracted data, undertook risk of bias assessment and drafted major parts of the manuscript. JJW developed the search strategy and drafted major parts of the manuscript. SWD reviewed the study designs and drafted the tables of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

None declared.

Additional files

Additional file 1:

Search Strategies. (DOCX 17 kb)

Additional file 2:

Risk of bias assessment. (DOCX 27 kb)

Additional file 3:

The effectiveness of audit and communication strategies in reducing diagnostic errors. (DOCX 21 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Abimanyi-Ochom, J., Bohingamu Mudiyanselage, S., Catchpool, M. et al. Strategies to reduce diagnostic errors: a systematic review. BMC Med Inform Decis Mak 19, 174 (2019). https://doi.org/10.1186/s12911-019-0901-1

DOI: https://doi.org/10.1186/s12911-019-0901-1