Abstract

Background

Diagnostic uncertainty occurs frequently in emergency medical care, with more than one-third of patients leaving the emergency department (ED) without a clear diagnosis. Despite this frequency, ED providers are not adequately trained on how to discuss diagnostic uncertainty with these patients, who often leave the ED confused and concerned. To address this training need, we developed the Uncertainty Communication Education Module (UCEM) to teach physicians how to discuss diagnostic uncertainty. The purpose of the study is to evaluate the effectiveness of the UCEM in improving physician communications.

Methods

The trial is a multicenter, two-arm randomized controlled trial designed to teach communication skills using simulation-based mastery learning (SBML). Resident emergency physicians from two training programs will be randomly assigned to immediate or delayed receipt of the two-part UCEM intervention after completing a baseline standardized patient encounter. The two UCEM components are: 1) a web-based interactive module, and 2) a smart-phone-based game. Both formats teach and reinforce communication skills for patient cases involving diagnostic uncertainty. Following baseline testing, participants in the immediate intervention arm will complete a remote deliberate practice session via a video platform and subsequently return for a second study visit to assess if they have achieved mastery. Participants in the delayed intervention arm will receive access to UCEM and remote deliberate practice after the second study visit. The primary outcome of interest is the proportion of residents in the immediate intervention arm who achieve mastery at the second study visit.

Discussion

Patients’ understanding of the care they received has implications for care quality, safety, and patient satisfaction, especially when they are discharged without a definitive diagnosis. Developing a patient-centered diagnostic uncertainty communication strategy will improve safety of acute care discharges. Although use of SBML is a resource intensive educational approach, this trial has been deliberately designed to have a low-resource, scalable intervention that would allow for widespread dissemination and uptake.

Trial registration

The trial was registered at clinicaltrials.gov (NCT04021771). Registration date: July 16, 2019.

Similar content being viewed by others

Background

Diagnostic uncertainty is a frequent occurrence in the emergency department (ED), with at least 37% of patients treated in the ED discharged without a pathologic diagnosis [1]. For example, a patient may present to the ED with a complaint of chest pain. After potentially dangerous diagnoses are excluded, the patient may then be discharged with a discharge diagnosis of “chest pain,” a symptom-based diagnosis. In the absence of a specific cause of her symptoms, she is tasked with navigating future care in the setting of diagnostic uncertainty. Faced with this uncertainty, patients often experience fear, with negative impacts on mental and physical health in the post-discharge period [2,3,4]. Although diagnostic uncertainty is frequent in emergency care settings, medical professionals have minimal guidance on how to communicate effectively to help patients transition home safely with such uncertainty. Among emergency medicine trainees, 99% reported encountering challenges discharging patients with diagnostic uncertainty at least sometimes, and 43% have encountered this challenge ‘often’ or all of the time [5].

Transitions of care are well established as a high-risk period for patient safety events [6,7,8,9,10,11,12,13]. Effective patient communication is essential in promoting safety during care transitions [14,15,16], yet studies of verbal ED discharge have found that instructions are often incomplete, with few opportunities for patients to ask questions or confirm understanding [17]. Numerous research efforts target reducing harm at care transitions [6,7,8,9,10,11, 18], including efforts to improve discharge coordination [19] and communication of discharge instructions [20]. Communication of discharge diagnosis, prognosis, treatment plan, and expected course of illness is important to high quality ED discharge [21], and prior work on communication during care transitions has focused on improving the content, delivery, and comprehension of discharge instructions [15, 22]. These efforts have included provision of diagnosis-specific discharge instructions [23, 24]. However, for patients discharged from the ED with diagnostic uncertainty, no standardized instructions and no standard approach to discuss uncertainty currently exist.

We developed an educational curriculum using simulation-based mastery learning (SBML) for resident physicians to guide patient-provider communication in the setting of uncertainty at discharge. SBML is a competency-based educational approach that allows learners to develop skills through deliberate practice, resulting in very high levels of performance outcomes, with little variation in outcomes among learners [25, 26]. In SBML, learners are required to meet or exceed a predetermined minimum passing standard (MPS) on a skills-based checklist or knowledge examination before completion of training. There is a large and growing body of evidence supporting the use of SBML as an educational modality for acquiring technical and procedural skills [27,28,29,30,31,32]. However, SBML has less frequently been used for acquisition of communication and interpersonal skills [33, 34]. SBML is time and resource intensive, as one of the main means by which trainees acquire mastery is through repeated deliberate practice. A SBML curriculum relevant to a communication task requires the additional specialized resource of 1-on-1 time with a standardized patient. Such increased time with standardized patients raises educational costs and increases the need for coordination with trainees’ clinical schedules. To address these intensive resource needs, Issenberg et al. suggested that perhaps skill acquisition and retention “can be achieved with different, often less costly and more flexible, simulation modalities such as virtual patients” [35].

Research groups have begun to develop educational designs that use video-based observational platforms [36, 37] and have found that these designs improve efficiency of skill acquisition within SBML [38]. In parallel, there is a growing body of research on the role of virtual patients (defined as “an interactive computer simulation of real-life clinical scenarios for the purpose of healthcare and medical training, education, or assessment”) [39]. A less frequently explored modality is a hybrid approach to simulation using standardized patients remotely. To our knowledge, only four studies have evaluated the use of remote standardized patients [40,41,42,43]; however, this method has promise as it minimizes the local resources needed, builds upon known strengths of standardized patients and retains the fidelity of a human interaction, yet does not require the intensive computer programming of developing virtual patients.

This randomized controlled waitlist trial will evaluate the acquisition and retention of communication skills in discussing diagnostic uncertainty at the time of ED discharge among participants exposed to remote standardized patient practice and two web-based educational tools (a curriculum and a game), compared to usual education. To our knowledge, this is the first multi-center randomized trial of SBML designed to teach communication skills using remote standardized patients to complete the deliberate practice.

Methods/design

The study will use a randomized controlled waitlist trial design with two study arms. The intervention arm exposes emergency medicine (EM) resident physicians to a two-part intervention, entitled the Uncertainty Communication Education Module (UCEM), while residents in the control arm receive delayed exposure to UCEM. The study will test the effectiveness of UCEM and remote deliberate practice in teaching residents to achieve mastery of uncertainty communication, as assessed by the Uncertainty Communication Checklist (UCC) [44]. UCEM has two components: 1) a web-based interactive module; and 2) a smart-phone-based game. Both components teach and reinforce communication skills that can be applied clinically when treating patients who are discharged from the ED with diagnostic uncertainty.

The UCEM is coupled with deliberate practice sessions with remote standardized patients. Participants will be assessed using the Uncertainty Communication Checklist (UCC) [44], a tool to guide the assessment of trainees when discharging patients from the ED in the setting of diagnostic uncertainty. Both the UCEM and the UCC were developed through an iterative process of feedback with experts and patients with the goal of educating providers about diagnostic uncertainty in the acute care setting, and improving their skills in discussing this topic with patients.

All study procedures were reviewed and approved by the Thomas Jefferson University Internal Review Board (IRB), with an IRB authorization agreement between Northwestern University and Thomas Jefferson. The trial was registered at clinicaltrials.gov (NCT04021771). The authors hypothesize that resident physicians who receive the UCEM intervention and complete deliberate practice will be significantly more likely to achieve mastery at the initial post-test in this communication skill compared to residents in the control arm.

Study design and setting

Rather than employing a pre- / post-test design, as is often used in SBML, we will use a randomized controlled waitlist study design. The waitlist design allows us to maintain the goal of a mastery-learning curriculum, eventually affording all resident learners the access to the intervention and the opportunity to achieve mastery, but simultaneously allows for rigorous scientific evaluation.

The study will take place at two EM residency-training programs in the United States (i.e., Philadelphia and Chicago) during the 2019–2020 academic year (study ongoing). All emergency medicine residents, post-graduate years (PGY) 1 through 4 (i.e., PGY 1 through 3 in Philadelphia, and PGY 1 through 4 in Chicago) are eligible for participation. There are no exclusion criteria. The educational activities are being integrated into the formal residency curriculum at each site; therefore, residents are being consented for use of their data, but if they decline consent, they will nonetheless complete the simulation testing and educational components of the curriculum. A study team member will obtain written consent from all study participants for use of their data. Residents at each site will be randomly assigned to a study arm using a computer-generated randomization schema stratified by stage of training (i.e., junior resident [PGY 1–2], senior resident [PGY 3–4]) and training site (i.e., Philadelphia, Chicago).

Study visits

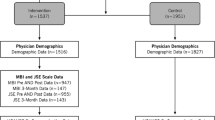

Three study test visits are planned for all participants at the following time points: T1 (baseline), T2 (8–12 weeks post-baseline), and T3 (16–24 weeks post-baseline) (Fig. 1). The T1 visit will consist of the baseline test for all participants, during which they will complete a simulated encounter with an in-person standardized patient to establish a baseline score on the UCC. Participants who are randomized to the Group A (immediate intervention) will receive immediate and detailed feedback on their performance in the encounter, which will include an introduction to the UCC. Following the baseline testing, each participant will receive a group allocation. The allocation sequence will be generated in advance by the study statistician and implemented via sequentially numbered, sealed, opaque envelopes which will be opened by research personnel while the participant is completing their baseline simulated encounter. Participants in Group A will be given access to UCEM (the intervention) and will be scheduled to participate in the telehealth remote deliberate practice sessions with a standardized patient. Participants in Group B, allocated to delayed access to UCEM, will not receive any study-related intervention prior to T2.

The T2 visit will be scheduled approximately 8–12 weeks after T1, and will consist of another in-person test encounter with a standardized patient for both Group A and B. The waitlist approach allows for evaluation of the impact of exposure to the baseline test, UCEM and deliberate practice in Group A versus baseline testing alone in Group B. Although T1 is a baseline “test”, often times a test can act as an intervention by stimulating subsequent reflection and self-directed practice of a skill during routine clinical activities.

Following the T2 visit, participants in Group B will be given access to UCEM and scheduled for telehealth remote deliberate practice sessions with standardized patients. Both groups will return for a third study test visit (T3) approximately 8–12 weeks after T2. All study test visits will involve participants completing a video-recorded simulated encounter with in-person standardized patients, although the clinical scenarios will vary each visit to maximize participants’ exposure to different scenarios. Participants will be assigned a score on the UCC for their performance for each visit, with the primary endpoint being achieving the MPS (binary, yes/no) [45]. Any participant not achieving mastery upon the completion of T3 will be given additional opportunities to practice and retest until mastery is achieved.

Participants will complete surveys at each of the study test visits (T1-T3) to collect demographic information and/or feedback on their experiences with the simulation encounters and educational curriculum. Participating residents will be compensated $75 for sharing their data and their time with completing surveys ($25 at initial enrollment and $50 upon completion of all study activities). Trained research assistants will enter all survey data and UCC scores into an electronic REDCap (Research Electronic Data Capture) database under study identification numbers which will be used to maintain confidentiality [46] (Table 1).

Simulation cases

Simulation cases intentionally represent common symptom-based diagnoses. A total of sixteen cases are included (i.e., four reserved for testing, eight for deliberate practice, four held in reserve if needed). Each case includes a case history, a normal physical examination, and normal laboratory results with variable degrees of testing performed in the ED (e.g., abdominal pain with blood and urine tests completed versus abdominal pain with computed tomography (CT) completed). Participating residents will be provided with the instructions to: 1) “update the patient” on his/her results; and 2) “discharge the patient” from the ED. They will further be informed that the simulation will be focused on communication and not medical decision making, clarifying that additional testing or admission to the hospital should not be performed.

Standardized patients will complete a training session to learn about the checklist and case content, using multiple modalities including video recordings paired with group discussions and role-plays. In addition to cases representing a range of symptoms, the standardized patients will be assigned an “emotional state” for each case (i.e., reassured, confused, anxious/nervous, or inquisitive). Cases were written by emergency physician experts in simulation education, and revised by team members with both clinical and communication expertise. All cases were designed to be of equal difficulty. Cases were pilot tested and further refined prior to the start of the study.

UCEM intervention

The UCEM intervention includes an online educational module with links to reading and an interactive smartphone-based game. Participants will receive the intervention at one of two time periods during the study depending on their group assignment (between T1 and T2 for Group A, between T2 and T3 for Group B). During the intervention period, participants may complete the online educational module and smartphone-based game independently at a pace and frequency of their choosing. They may return to the module and game as frequently as they wish.

The online educational module introduces the concept of diagnostic uncertainty, explains the UCC, and includes a variety of tools to support knowledge retention including matching games, video vignettes, and sample discharge conversation content presented via audio-clips. The online educational module was developed by the study team in collaboration with the Center for Teaching and Learning at Thomas Jefferson University. The online educational module is available at https://rise.articulate.com/share/7HtSck8Gw2zbg56yeO356oV0VrN17LWZ#/ (Password: Uncertainty); screenshots from the curriculum are also shown in Fig. 2.

The interactive game provides learners with a low-stakes environment to reflect on and practice their word choice during discharge conversations. The game includes a series of discharge scenarios. For each scenario, the game displays two choices of phrases relevant to the discharge conversation and asks the learner to select the “better” phrase. Follow-up questions ask the learner to select reasons why a specific phrase is the less/more optimal choice (e.g., “it did not use lay language” or “provides an explanation for why a specific test was ordered”) to reinforce the items of the UCC. Learners are awarded points for their performance. Scores are displayed anonymously on a “leaderboard.” As with the online educational module, participants will be able to play and return to the game as frequently as they desire. Jump Simulation-OSF HealthCare developed the interactive game with input from the study team. The interactive game can be downloaded free from the mobile application stores (i.e., Apple Store) on both Apple and Android™ platforms via the following links: (http://bit.ly/ucomm-apple or http://bit.ly/ucomm-android). Screenshots from the game are show in Fig. 3.

Telehealth remote deliberate practice

After accessing the UCEM, participants who did not meet the MPS at baseline will be required to schedule at least one telehealth-mediated remote deliberate practice session. Unlike traditional in-person simulation encounters, participants will not be required to report to a dedicated simulation center; rather, residents may participate in the encounter from a location of their own choosing. These sessions will be completed using the Zoom platform [(2019) Zoom Cloud Meetings: A video-conferencing tool (Version 4.4.6). Available from https://zoom.us]. After each practice session, participants will receive immediate and detailed feedback from the standardized patient regarding their performance. Participants will have the option to complete additional deliberate practice sessions before their subsequent test visit (either T2 or T3) during which they will be officially scored on the UCC. After each remote deliberate practice session, participants will be asked whether they would like to complete additional remote deliberate practice sessions. Case scenarios used for deliberate practice will be distinct from case scenarios used for testing (i.e., different medical complaints), although of similar case complexity.

Outcomes and measures

The analysis of the trial will report outcomes in three different domains, derived from Kirkpatrick’s Four Level Evaluation Model [47].

Reaction: participants’ experiences

For this first domain, we will assess participants’ reaction to the educational curriculum and intervention. Although this first domain does not measure the impact of the intervention, it is nonetheless important because holding a learner’s interest and attention is necessary for the success of the teaching intervention. All participants will complete surveys to gather feedback on the simulation experience, the UCEM intervention, and the remote deliberate practice sessions using a combination of open- and closed-ended questions.

Learning: performance on simulation

The second domain will assess learning. To measure the curriculum effectiveness in the learning domain, we will report the data from the two-arm randomized controlled waitlist trial, as detailed above. Our primary outcome of interest will be the achievement of the MPS at T2.

Performance on the simulation encounter will be rated at all study visits using the MPS derived previously for the UCC [45]. The primary outcome of interest for this trial is the participant score on the UCC at T2. The UCC is a 21-item checklist assessing if uncertainty was addressed in each step of the discharge communication process and includes the following major categories: Introduction, Test Results/ED summary, No/Uncertain Diagnosis, Next Steps/Follow up, Home Care, Reasons to Return, and General Communication Skills (Appendix). Trained standardized patients will complete UCC ratings immediately following the simulation sessions. Standardized patients will be blinded to study arm allocation, as well as the MPS, at the time of the simulated encounter and scoring; both participants and standardized patients will be unblinded after scores have been collected in order to provide feedback to those in the immediate intervention group. Additionally, a research team member, blinded to the participant’s study arm, will complete ratings for at least a 50% random sample of the videotaped encounters to demonstrate reliability of scoring [48].

As previously described, SBML results in highly reliable achievement of mastery; however, individual trainees may vary in the amount of practice needed to achieve mastery. In order to assess the differences in participants’ effort required to achieve mastery, we will track the number of testing sessions required by each participant to achieve mastery. Trainees who do not achieve mastery at T2 and T3 will have additional deliberate practice and testing until mastery is achieved. Secondary outcomes of interest in the learning domain include: the number of deliberate practice sessions and the change in pass rate on the UCC from T2 to T3 within groups. This metric will assess retention of mastery in the intervention group and will be a supplemental assessment of the interventions’ efficacy in the delayed intervention (control) group.

Additionally, game access will be tracked; frequency of online module use will be measured via self-reporting. These data will be used to evaluate the relationship between access rates and time to achieve mastery.

Transfer: skill utilization in clinical practice

For the third domain, transfer of knowledge, we will contact participants after completion of the education curriculum to inquire about their use of the new communication skills in clinical practice. Topics of questions will include: frequency of encounters with diagnostic uncertainty, comfort level in discussing this topic with patients, patients’ reactions to the conversation and request for anecdotes on patient encounters that could inform future training.

The fourth level of Kirkpatrick’s model, “Results,” will not be assessed in this study due to the timeframe and scope of the funding mechanism.

Participant characteristics

All participants will complete a survey after completion of the simulation encounter at T1 with items including basic demographic characteristics (i.e., age, gender, race/ethnicity, post-graduate year). Additionally, participants will be asked open-ended questions, including how often they encounter clinical scenarios with diagnostic uncertainty in their clinical practice, how comfortable they are in having these conversations, what strategies they have used and found successful, and if they have had prior training on this topic.

Sample size calculation

As this will be the first application of the UCC, it is difficult to estimate a baseline pass rate for power calculations. Prior studies of other clinical skills in EM trainees show pre-test pass rates in the 45–70% range, albeit not in an SBML testing setting [32]. Pre-test communication skills vary widely in clinical practice, with most metrics reporting “excellent” ratings in the 50–80% range. In the ED setting, items related to “allowing patient to ask questions,” which are likely most relevant to the UCC score, are lower, with only 50% of clinicians being rated as excellent [49, 50]. Although participants may “pass” individual items on the UCC at a rate of 60% at baseline, we estimate a baseline performance of 3% of residents meeting the MPS on the pre-test. This estimate was based on prior SBML studies in communication with pass-rates all less than 10% [29, 33]. We expect waitlist subjects to have only a slight improvement in meeting MPS at T2 resulting in a 10% pass rate. Randomizing 110 participants and conservatively estimating a near 90% retention at the Period 2 assessment (n = 100, 50 per group), we will have greater than 87% power to detect a difference of 25% between study groups at T2 (intervention pass rate of 35%) assuming a two-sided Type I error of 5%. To optimize participant enrollment and retention, several strategies will be deployed including wide availability of simulation dates and times, email and text reminders for scheduled sessions, and financial incentive as noted above.

Analysis

The primary outcome of interest for the trial is within Kirkpatrick’s learning domain, and is the percentage of residents in each arm who meet or exceed the MPS of the UCC at the T2 assessment. Agreement between standardized patients and the study team rater will be estimated using the Kappa coefficient. We will use a logistic regression analysis to compare groups with respect to the primary outcome at T2. Secondary analysis will consider change within groups from T2 to T3 separately using McNemar’s test. In Group A, this will be a test of whether mastery is retained versus not retained. In Group B, this will be a supplemental test of the intervention’s efficacy. Association between number of remote deliberate practice sessions completed and achieving a passing score will be evaluated using logistic regression, adjusting for the stratification factors of site and stage of training. Among those who pass, the association of the number of remote deliberate practice sessions completed with baseline characteristics will be evaluated using Poisson regression. The outcome of the trial will be reported according to the recently published Reporting Master Education Learning in Medicine (ReMERM) guidelines [51]. Figure 4 illustrates how results of SBML are often reported visually.

Open-ended responses to participants’ experiences “reaction” outcomes and use in current practice “transfer” outcomes will be analyzed qualitatively using content and constant comparative method to evaluate for emergent themes. Closed-ended questions related to their reaction and transfer will be reported with descriptive statistics.

Discussion

Patients’ understanding of the care they received and their next steps upon leaving an acute care setting have implications on care quality, safety, and patient satisfaction, especially when they are discharged from the ED without a definitive diagnosis. Developing a patient-centered way to communicate is a necessary step to improve safety of acute care discharges in the setting of diagnostic uncertainty. Use of a SBML curriculum focused on diagnostic uncertainty has many potential benefits for improving communication skills; however, SBML is a resource intensive educational approach. Our study attempts to address the high resource of deliberate practice in SBML through the novel use of a telehealth-facilitated approach for practice sessions. We anticipate that this platform will be favorable for our trainees, and may have broader applicability for other communication-related educational curricula.

Findings from this trial may shape residency training of communication skills related to diagnostic uncertainty. A benefit of the UCEM intervention is the potential for widespread dissemination with use of minimal resources, including remote access to deliberate practice via a telehealth video platform and web-based curricular materials. Additionally, while our study focuses on a specific clinical scenario (i.e., discharge from the ED in the setting of diagnostic uncertainty), the educational approach and potential findings may be transferrable to other clinical settings. If findings from the study support the use of this approach, comparable curricular materials and SBML checklists could be developed to improve communication in other clinical settings. Diagnostic uncertainty is only one way in which uncertainty can shape health care decision-making. Analogous interventions may be valuable to improve communication and manage other forms of uncertainty, such as uncertainty related to treatment successes or prognoses.

Our study has several limitations. We are conducting this investigation at two residency-training sites. It is possible that the residents are not representative of a national sample. In addition, as residents in both study arms interact regularly, it is possible that there may be contamination between the control and intervention arms, which would result in an underestimation of the impact of the UCEM. The multifaceted nature of the intervention could make it difficult to discern which part of the intervention was most impactful (online modules, interactive game or SBML); however most educational interventions are multifaceted and information is often retained best when presented via multiple modalities. In addition, the use of multiple (n = 16) cases increases the complexity of the evaluation. While use of multiple cases was a deliberate choice of the study team to reflect the true ED environment, focusing more narrowly on one diagnosis (e.g., chest pain) and/or one emotional state (e.g., nervousness) would decrease potential unintended variation in difficulty of cases. Another potential limitation is our delivery of the deliberate practice sessions using a video tele-simulation platform. We acknowledge video-based deliberate practice has not historically been used for mastery learning, as the simulation encounters and deliberate practice are supposed to be as close as possible to real clinical experience; however, the majority of SBML has been completed for procedures where hands-on training is necessary to learn skills. Traditional in-person deliberate practice sessions were considered; but this option is not easily disseminated, and the team decided that an in-person encounter was not essential for a conversation-based skill that can be practiced via a less-resource intensive platform.

Further, as with any simulation, there is a possible lack of translation of the improved conversations in simulation into the clinical environment. The ultimate goal of this line of research is to demonstrate translation to the clinical environment; however, a necessary and foundational step before evaluating translational outcomes is to ensure that the intervention has been well designed and is effective within the simulation environment.

In conclusion, this trial will have important implications for residency training of communication skills related to diagnostic uncertainty. The trial will further have implications for design and dissemination of future SBML interventions via a telehealth platform. Further, for the nearly one in three patients who leave their ED encounter with diagnostic uncertainty, this trial will lay the groundwork for improving the quality of that clinical encounter.

Availability of data and materials

The intervention components of the UCEM are publically available—links provided in text. Data access will be considered upon request from Drs. McCarthy and Rising. d-mccarthy2@northwestern.edu and Kristin.rising@jefferson.edu

Abbreviations

- ED:

-

Emergency Department

- MPS:

-

Minimum Passing Standard

- SBML:

-

Simulation Based Mastery Learning

- UCC:

-

Uncertainty Communication Checklist

- UCEM:

-

Uncertainty Communication Education Module

References

Wen LS, Espinola JA, Kosowsky JM, Camargo CA Jr. Do emergency department patients receive a pathological diagnosis? A nationally-representative sample. West J Emerg Med. 2015;16(1):50–4.

Rising KL, Hudgins A, Reigle M, Hollander JE, Carr BG. “I’m just a patient”: fear and uncertainty as drivers of emergency department use in patients with chronic disease. Ann Emerg Med. 2016;68(5):536–43.

Rising KL, LaNoue MD, Gerolamo AM, Doty AMB, Gentsch AT, Powell RE. Patient uncertainty as a predictor of 30-day return emergency department visits: an observational study. Acad Emerg Med. 2019;26(5):501–9.

Rising KL, Padrez KA, O'Brien M, Hollander JE, Carr BG, Shea JA. Return visits to the emergency department: the patient perspective. Ann Emerg Med. 2015;65(4):377–86 e3.

Rising KL, Papanagnou D, McCarthy D, Gentsch A, Powell R. Emergency medicine resident perceptions about the need for increased training in communicating diagnostic uncertainty. Cureus. 2018;10(1):e2088.

Naylor M, Brooten D, Jones R, Lavizzo-Mourey R, Mezey M, Pauly M. Comprehensive discharge planning for the hospitalized elderly. A randomized clinical trial. Ann Intern Med. 1994;120(12):999–1006.

Naylor MD, Brooten DA, Campbell RL, Maislin G, McCauley KM, Schwartz JS. Transitional care of older adults hospitalized with heart failure: a randomized, controlled trial. J Am Geriatr Soc. 2004;52(5):675–84.

Hansen LO, Greenwald JL, Budnitz T, Howell E, Halasyamani L, Maynard G, et al. Project BOOST: effectiveness of a multihospital effort to reduce rehospitalization. J Hosp Med. 2013;8(8):421–7.

Boutwell AE, Johnson MB, Rutherford P, Watson SR, Vecchioni N, Auerbach BS, et al. An early look at a four-state initiative to reduce avoidable hospital readmissions. Health Aff (Millwood). 2011;30(7):1272–80.

Greenwald JL, Jack BW. Preventing the preventable: reducing rehospitalizations through coordinated, patient-centered discharge processes. Prof Case Manag. 2009;14(3):135–40 quiz 41-2.

Anthony D, Chetty VK, Kartha A, McKenna K, DePaoli MR, Jack B. Re-engineering the Hospital Discharge: An Example of a Multifaceted Process Evaluation. In: Henriksen K, Battles JB, Marks ES, Lewin DI, editors. Advances in Patient Safety: From Research to Implementation (Volume 2: Concepts and Methodology). Rockville: Advances in Patient Safety; 2005.

Coleman EA, Berenson RA. Lost in transition: challenges and opportunities for improving the quality of transitional care. Ann Intern Med. 2004;141(7):533–6.

Snow V, Beck D, Budnitz T, Miller DC, Potter J, Wears RL, et al. Transitions of care consensus policy statement American College of Physicians-Society of General internal medicine-Society of Hospital Medicine-American Geriatrics Society-American College of emergency physicians-Society of Academic Emergency Medicine. J Gen Intern Med. 2009;24(8):971–6.

Engel KG, Buckley BA, Forth VE, McCarthy DM, Ellison EP, Schmidt MJ, et al. Patient understanding of emergency department discharge instructions: where are knowledge deficits greatest? Acad Emerg Med. 2012;19(9):E1035–44.

Samuels-Kalow ME, Stack AM, Porter SC. Effective discharge communication in the emergency department. Ann Emerg Med. 2012;60(2):152–9.

VanSuch M, Naessens JM, Stroebel RJ, Huddleston JM, Williams AR. Effect of discharge instructions on readmission of hospitalised patients with heart failure: do all of the joint commission on accreditation of healthcare organizations heart failure core measures reflect better care? Qual Saf Health Care. 2006;15(6):414–7.

Vashi A, Rhodes KV. “Sign right here and you’re good to go”: a content analysis of audiotaped emergency department discharge instructions. Ann Emerg Med. 2011;57(4):315–22 e1.

Williams H, Edwards A, Hibbert P, Rees P, Prosser Evans H, Panesar S, et al. Harms from discharge to primary care: mixed methods analysis of incident reports. Br J Gen Pract. 2015;65(641):e829–37.

Guttman A, Afilalo M, Guttman R, Colacone A, Robitaille C, Lang E, et al. An emergency department-based nurse discharge coordinator for elder patients: does it make a difference? Acad Emerg Med. 2004;11(12):1318–27.

Limpahan LP, Baier RR, Gravenstein S, Liebmann O, Gardner RL. Closing the loop: best practices for cross-setting communication at ED discharge. Am J Emerg Med. 2013;31(9):1297–301.

Johns Hopkins University: Armstrong Institute for Patient Safety and Quality. Improving the Emergency Department Discharge Process: Environmental Scan Report. Rockville: (Prepared by Johns Hopkins University, Baltimore, MD, under Contract No. HHSA 2902010000271.); 2014.

Ackermann S, Heierle A, Bingisser MB, Hertwig R, Padiyath R, Nickel CH, et al. Discharge communication in patients presenting to the emergency department with chest pain: defining the ideal content. Health Commun. 2016;31(5):557–65.

Lawrence LM, Jenkins CA, Zhou C, Givens TG. The effect of diagnosis-specific computerized discharge instructions on 72-hour return visits to the pediatric emergency department. Pediatr Emerg Care. 2009;25(11):733–8.

Waisman Y, Siegal N, Siegal G, Amir L, Cohen H, Mimouni M. Role of diagnosis-specific information sheets in parents’ understanding of emergency department discharge instructions. Eur J Emerg Med. 2005;12(4):159–62.

McGaghie WC, Siddall VJ, Mazmanian PE, Myers J, American College of Chest Physicians H, Science Policy C. Lessons for continuing medical education from simulation research in undergraduate and graduate medical education: effectiveness of continuing medical education: American college of chest physicians evidence-based educational guidelines. Chest. 2009;135(3 Suppl):62S–8S.

Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R. Mastery learning for health professionals using technology-enhanced simulation: a systematic review and meta-analysis. Acad Med. 2013;88(8):1178–86.

Barsuk JH, Cohen ER, Caprio T, McGaghie WC, Simuni T, Wayne DB. Simulation-based education with mastery learning improves residents’ lumbar puncture skills. Neurology. 2012;79(2):132–7.

Barsuk JH, Cohen ER, Potts S, Demo H, Gupta S, Feinglass J, et al. Dissemination of a simulation-based mastery learning intervention reduces central line-associated bloodstream infections. BMJ Qual Saf. 2014;23(9):749–56.

Cohen ER, Barsuk JH, Moazed F, Caprio T, Didwania A, McGaghie WC, et al. Making July safer: simulation-based mastery learning during intern boot camp. Acad Med. 2013;88(2):233–9.

McGaghie WC, Issenberg SB, Barsuk JH, Wayne DB. A critical review of simulation-based mastery learning with translational outcomes. Med Educ. 2014;48(4):375–85.

Ahn J, Yashar MD, Novack J, Davidson J, Lapin B, Ocampo J, et al. Mastery learning of video laryngoscopy using the Glidescope in the emergency department. Simul Healthc. 2016;11(5):309–15.

Reed T, Pirotte M, McHugh M, Oh L, Lovett S, Hoyt AE, et al. Simulation-based mastery learning improves medical student performance and retention of Core clinical skills. Simul Healthc. 2016;11(3):173–80.

Sharma RK, Szmuilowicz E, Ogunseitan A, Jones SF, Montalvo JA, O’Leary KJ, et al. Evaluation of a mastery learning intervention on Hospitalists’ code status discussion skills. J Pain Symptom Manag. 2017;53(6):1066–70.

Vermylen JH, Wood GJ, Cohen ER, Barsuk JH, McGaghie WC, Wayne DB. Development of a simulation-based mastery learning curriculum for breaking bad news. J Pain Symptom Manag. 2019;57(3):682–7.

Issenberg SB, Ringsted C, Ostergaard D, Dieckmann P. Setting a research agenda for simulation-based healthcare education: a synthesis of the outcome from an Utstein style meeting. Simul Healthc. 2011;6(3):155–67.

Domuracki K, Wong A, Olivieri L, Grierson LE. The impacts of observing flawed and flawless demonstrations on clinical skill learning. Med Educ. 2015;49(2):186–92.

Cheung JJ, Koh J, Mackinnon K, Brett C, Bagli D, Kapralos B, et al. The use of web-based learning for simulation-based education and training of central venous catheterization in novice learners. Stud Health Technol Inform. 2013;184:71–7.

Cheung JJ, Koh J, Brett C, Bagli DJ, Kapralos B, Dubrowski A. Preparation with web-based observational practice improves efficiency of simulation-based mastery learning. Simul Healthc. 2016;11(5):316–22.

AAMC Institute for improving medical education. Effective use of educational technology in medical education: Colloquium on educational technology: Recommendations and guidelines for medical educators. Washington, DC; 2007. Available at: https://members.aamc.org/eweb/upload/Effective%20Use%20of%20Educational.pdf. Accessed 1 Feb 2016.

Daetwyler CJ, Cohen DG, Gracely E, Novack DH. eLearning to enhance physician patient communication: a pilot test of “doc.com” and “WebEncounter” in teaching bad news delivery. Med Teach. 2010;32(9):e381–90.

Langenau E, Kachur E, Horber D. Web-based objective structured clinical examination with remote standardized patients and Skype: resident experience. Patient Educ Couns. 2014;96(1):55–62.

Novack DH, Cohen D, Peitzman SJ, Beadenkopf S, Gracely E, Morris J. A pilot test of WebOSCE: a system for assessing trainees’ clinical skills via teleconference. Med Teach. 2002;24(5):483–7.

Palmer RT, Biagioli FE, Mujcic J, Schneider BN, Spires L, Dodson LG. The feasibility and acceptability of administering a telemedicine objective structured clinical exam as a solution for providing equivalent education to remote and rural learners. Rural Remote Health. 2015;15(4):3399.

Rising KL, Powell RE, Cameron KA, Salzman DH, Papanagnou D, Doty AMB, et al. Development of the Uncertainty Communication Checklist: A patient-centered approach. Academic Medicine (in press 2019). 2019.

Salzman DH, Rising KL, Cameron KA, Powell RE, Papanagnou D, Doty AMB, et al. Setting a Minimum Passing Standard for the Uncertainty Communication Checklist through Patient and Physician Engagement. Journal of Graduation Medical Education (provisional acceptance 10–2019). 2019.

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81.

Kirkpatrick DL. Evaluating training programs: the four levels. San Francisco: CA Berrett-Koehler Publishers; 1998.

Walter SD, Eliasziw M, Donner A. Sample size and optimal designs for reliability studies. Stat Med. 1998;17(1):101–10.

Makoul G, Krupat E, Chang CH. Measuring patient views of physician communication skills: development and testing of the communication assessment tool. Patient Educ Couns. 2007;67(3):333–42.

McCarthy DM, Ellison EP, Venkatesh AK, Engel KG, Cameron KA, Makoul G, et al. Emergency department team communication with the patient: the patient's perspective. J Emerg Med. 2013;45(2):262–70.

Cohen ER, McGaghie WC, Wayne DB, Lineberry M, Yudkowsky R, Barsuk JH. Recommendations for reporting mastery education research in medicine (ReMERM). Acad Med. 2015;90(11):1509–14.

Acknowledgements

Center for Teaching and Learning at Thomas Jefferson University.

Jump Simulation, OSF HealthCare.

Funding

This project was supported by grant number R18HS025651 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality. This funding source had no role in the design of this study and will not have any role during its execution, analyses, interpretation of the data, or decision to submit results.

Author information

Authors and Affiliations

Contributions

DMM: conception, design, drafting, revising, final approval. REP: conception, design, drafting, revising, final approval. KAC: conception, design, drafting, revising, final approval. DHS: conception, design, revising, final approval. DP: conception, design, revising, final approval. AD: design, revising, final approval. BEL: design, revising, final approval. KP: design, revising, final approval. MK: design, revising, final approval. XCZ: design, revising, final approval. WCM: conception, revising, final approval. KLR: conception, design, drafting, revising, final approval. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

All study procedures were IRB approved by Thomas Jefferson University and Northwestern University. All participants provided written, informed consent.

Consent for publication

Not applicable.

Competing interests

Dimitrios Papanagnou is an Associate Editor of BMC Medical Education; all other authors have no conflicts of interest.

The study is funded as detailed below and the project proposal underwent grant study section review by AHRQ.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

The Uncertainty Communication Checklist

INTRODUCTION

-

1.

Explain to the patient that they are being discharged.

-

2.

Ask if there is anyone else that the patient wishes to have included in this conversation in person and/or by phone

TEST RESULTS/ED SUMMARY

-

3.

Clearly state that either “life-threatening” or “dangerous” conditions have not been found.

-

4.

Discuss diagnoses that were considered (using both medical and lay terminology).

-

5.

Communicate relevant results of tests to patients (normal or abnormal).

-

6.

Ask patient if there are any questions about testing and/or results.

-

7.

Ask patient if they were expecting anything else to be done during their encounter - if yes, address reasons not done.

“NO/UNCERTAIN DIAGNOSIS”

-

8.

Discuss possible alternate or working diagnoses

-

9.

Clearly state that there is a not a confirmed explanation (diagnosis) for what the patient has been experiencing

-

10.

Validates the patient’s symptoms

-

11.

Discuss that the ED role is to identify conditions that require immediate attention

-

12.

Normalize leaving the ED with uncertainty

NEXT STEPS/FOLLOW UP

-

13.

Suggest realistic expectations / trajectory for symptoms

-

14.

Discuss next tests that are needed, if any

-

15.

Discuss who to see next AND in what timeframe

HOME CARE

-

16.

Discuss a plan for managing symptoms at home

-

17.

Discuss any medication changes.

-

18.

Ask patient if there are any questions and/or anticipated problems related to next steps (self-care and future medical care) after discharge

REASONS TO RETURN

-

19.

Discuss what symptoms should prompt immediate return to the ED

GENERAL COMMUNICATION SKILLS

-

20.

Make eye contact

-

21.

Ask patient if there are any other questions or concerns

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

McCarthy, D.M., Powell, R.E., Cameron, K.A. et al. Simulation-based mastery learning compared to standard education for discussing diagnostic uncertainty with patients in the emergency department: a randomized controlled trial. BMC Med Educ 20, 49 (2020). https://doi.org/10.1186/s12909-020-1926-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12909-020-1926-y