Abstract

Background

Interpersonal and Communication Skills (ICS) and Professionalism milestones are challenging to evaluate during medical training. The paucity of proficiency, direction, and validity evidence for tools that assess these milestones warrants further research. We evaluated the reliability of the previously piloted Instrument for Communication skills and Professionalism Assessment (InCoPrA) in medical learners.

Methods

This validity approach was guided by Kane’s Framework. Faculty-raters and standardized patients (SPs) used their respective InCoPrA sub-components to assess distinct domains pertinent to ICS and Professionalism across multiple expert-built simulated scenarios comparable to usual care. Evaluations included the inter-rater reliability of the faculty total score and the correlation between the SP total score and the average total score of the two faculty raters. Participants were surveyed regarding the acceptability, realism, and applicability of this experience.

Results

Eighty trainees and 25 faculty-raters from five medical residency training sites participated. The ICC of the total score between faculty-raters was generally moderate (ICC range 0.44–0.58). On average, there was a moderate linear relationship between the SP and faculty total scores (Pearson correlation range 0.23–0.44). The majority of participants reported receiving meaningful, immediate, and comprehensive patient-faculty feedback.

Conclusions

This work substantiated that InCoPrA was a reliable, standardized, evidence-based, and user-friendly assessment tool for ICS and Professionalism milestones. Validating InCoPrA showed generally moderate agreeability and high acceptability. Using InCoPrA also promoted engagement of all stakeholders in medical education and training (faculty, learners, and SPs), using simulation media as a pathway for comprehensive feedback on milestone growth.

Background

Competency-based medical education (CBME) has become the cornerstone model of education and training in the U.S. and beyond [1, 2]. The Accreditation Council for Graduate Medical Education (ACGME) implemented the six global core competencies system in 1999 [3] and later revised these efforts by implementing additional milestones in 2013 [4]. During those phases, educators have frequently struggled to find ways to evaluate learners’ skills in these fundamental areas. Although the ACGME produced the milestones to provide a framework for assessment [5,6,7,8], they tend to be subjective, with language that allows room for interpretation, which likely reduces the fidelity and reliability of the milestones from one program, or even one assessor, to another [9,10,11]. These competencies and milestones have also created an additional burden for already-overwhelmed educators and core faculty who genuinely want to spend sufficient time to properly teach and assess their trainees’ achievement of the competencies and professional growth [9, 12,13,14].

Since milestone reporting is required and is indirectly used to assess the quality of individual training programs, residencies are always searching for reliable, user-friendly, and efficient simplified assessment tools. Of the six competencies and their milestones, Interpersonal and Communication Skills (ICS) and Professionalism [15] have been particularly challenging to evaluate since they can be influenced by numerous factors [16].

The assessment of ICS and Professionalism has been studied using various methods of direct observation, global assessment, or Objective Structured Clinical Examinations (OSCEs), singularly or combined [17,18,19,20,21,22,23]. Methods that include simulation training and the use of Standardized Patients (SPs) are particularly important within this context [24,25,26,27]. In further attempts to improve the reliability of evaluations, others have used composite scores, checklist forms, and global rating scales within direct observation or simulation settings [20, 23, 28,29,30,31,32,33,34,35,36,37,38,39]. Despite these attempts, recent evidence suggests that the currently available tools for medical education evaluation provide little or no validity evidence about their direction, value, and educational outcomes; this limits their ability to convey intrinsic meaning and support decision making [40], and leaves room for improvement [13, 26, 41,42,43].

Validity is a growing science that has been widely studied using different approaches to provide a meaningful interpretation of an “output” to guide decision making [26, 37, 42, 44,45,46]. Although the concept of validity has evolved over the last two decades [47,48,49], most of the studies that assessed competencies and milestones did not sufficiently outline or adhere to validity frameworks to ascertain their findings [26, 37, 42, 44,45,46, 50]. Abu Dabrh et al. previously co-developed and piloted the Instrument for Communication skills and Professionalism Assessment (InCoPrA), a de novo tool used during an OSCE-like simulated training scenario to assess ICS and Professionalism [24]. The instrument showed strong feasibility and applicability within a residency training program setting, thus providing a rationale to further validate its use in other programs with larger participation, using a contemporary approach to its validity evidence [33, 40, 51].

We hypothesize that the InCoPrA is a feasible, acceptable, and reliable method to provide a meaningful, supportive, and validated interpretation of learners’ ICS and Professionalism skills, and to minimize the administrative burden of assessing their milestones in simulation settings. Generating such knowledge will help narrow the gap in evidence about validated assessment tools for these challenging competencies and milestones.

Methods

The conceptualization and feasibility assessment of InCoPrA has been previously studied [24].

Setting

The study occurred across five Department of Family Medicine (DoFM) sites at Mayo Clinic (Florida, Minnesota, Arizona, and Wisconsin) at their designated SimCenters. The study included medical learners from these sites who participated in the simulation activities. These activities were part of the expected didactic training and curricula; therefore, no special sampling methods were applied to participants. The learners were not informed about the purpose of the scenarios in order to minimize reactive biases. Each scenario was directly supervised by faculty (raters/assessors) in the respective SimCenters. Each simulation was video-recorded, and all electronic medical information resources (EMIR) visited by participants during the encounter were live-tracked, recorded, and stored on a secure server with a password-protected data repository. The SimCenters staff created matching electronic medical records (EMR) and provided access to an EMIR environment, thus giving participants resources comparable to those in their routine practice.

Participants

Observing faculty and SPs

All participating core faculty (assessors/raters) and SPs received a standardized orientation about the proposed simulation activities and scenarios, debriefing techniques, and the use of the performance checklist and global assessment on the InCoPrA. Each faculty rater independently observed a scenario in real time, was blinded to the other raters, and provided an evaluation to the participants at the conclusion of the OSCE using the InCoPrA. The participating SPs had extensive experience in role-playing multiple patient scenarios for the SimCenters.

Medical learners

The study recruited mainly first-year (PGY1), second-year (PGY2), and third-year (PGY3) post-graduate Family Medicine residents, and third- and fourth-year medical students doing their clerkships at the DoFM. Learners were blinded to the scenarios they were administered during their simulation day experience.

Educational intervention

The InCoPrA was developed, reviewed, and pilot-tested previously, taking into consideration the ACGME definition of competencies, existing tools used for other OSCE scenarios and competency evaluations [52,53,54], and the feedback provided during the pilot testing [24]. The InCoPrA constructs assess Professionalism by whether the trainee: 1) demonstrates integrity and ethical behavior; 2) accepts responsibility and follows through on tasks; and 3) demonstrates empathic care for patients. ICS was assessed through the ability to: 1) utilize EMR and EMIR to understand the scenario; 2) communicate findings effectively with patients; and 3) communicate effectively with other healthcare professionals. The InCoPrA has three parts. The first two components, the faculty and SP parts, both use a 3-point Likert-like scale (outstanding, satisfactory, and unsatisfactory) and a checklist with different questions (points) to address six categories/domains (the context of discussion, communication and detection of the assigned task, management of the assigned task, empathy, use of EMR and EMIR, and a global rating). The third component, the participants’ self-evaluation survey (REDCap® format), asked participants to: self-rate their performance after the encounter; self-rate their general skills in EMR and EMIR use; rate how realistic and acceptable the simulations felt; and report how often they receive faculty feedback during their current training.

Simulation scenarios

Building on our previous work [24], we developed four scenarios, which were reviewed for content, realism, acceptability, and expert validity by participating faculty members, leadership, SPs, and non-participating learners. These scenarios were pertinent to: 1) detecting a medical error [24, 55, 56]; 2) managing chronic opioid use; 3) managing depression; and 4) delivering bad news. In all these scenarios, the learners had access to a simulated EMR and EMIRs to help in identifying medical history, medication use, interactions, and side effects. Before seeing the patient, the learners were instructed to perform an initial history and a relevant exam (if needed), and to discuss their findings with the patient. They also knew that they could leave the room to discuss the patient with faculty and then return to the room to dismiss the patient and discuss the plan of action. Afterwards, they debriefed with faculty.

Validity approach

Our study design was guided by Kane’s Framework, as proposed by M. T. Kane [44, 57] and highlighted by others [47]. This approach proposes that, to support validation assumptions, studies should identify four critical inferences: 1) Scoring (i.e., rendering the observed experiment into a quantitative or qualitative scoring system); 2) Generalization (i.e., translating the scoring systems or scales developed into a meaningful general/overall interpretation of the performance); 3) Extrapolation (i.e., inferring what this generalized interpretation means in the real-world setting/experience); and 4) Implication (i.e., drawing a final conclusion and reaching a decision regarding its value/results).

At each site, the faculty-raters directly observed the narrative interaction between learners and SPs and used the InCoPrA as a checklist to evaluate learners throughout their simulation encounters (i.e., scoring). Each trainee went through 3 scenarios of varying difficulty to allow a sufficient spectrum for reproducibility and to minimize the margin of error due to variation in performance. Each trainee was assessed by two raters. At the end, the faculty formulated their feedback through a graded scale and narratives (i.e., generalization). Raters then had the opportunity to review and outline the performance of learners, compare it with the respective trainee’s real-world daily performance and pertinent scores (e.g., current milestone assessment reviews, course, internship, etc.), and draw conclusions and feedback to be provided to learners in person (i.e., extrapolation). Once these conclusions were reached, raters had a “sense of direction and action” regarding how the trainee performed and provided recommendations. For example, for learners whose performance was judged unsatisfactory, faculty provided additional narrative feedback to outline the areas needing improvement and identify deficiencies (i.e., implication).

Once these inferences from Kane’s framework were synthesized, we used construct validity to evaluate evidence of validity [58]; construct validity demonstrates whether one can establish inferences about test results related to the constructs being studied. To test that, we compared all inferences with the current standing and evaluations of participants (i.e., convergent validity testing). Convergent validity testing compares the evaluated tool or instrument with others that are established. In this study, we compared InCoPrA results with the ACGME-proposed evaluation forms used routinely in the respective residency programs.

Ethics approval and consent to participate

The study activities were approved by the Institutional Review Board (IRB) at Mayo Clinic as an educational intervention required and expected by the regular didactic training and activities within the respective participating residency programs, and the study was thus considered IRB-exempt (45 CFR 46.101, item 1); therefore, no specific participation consent was deemed required. The Mayo Clinic Simulation Center obtained standard consents for observation for all trainees per regular institutional guidelines. Additionally, the study authors and coordinators obtained standard consent for observation for all trainees per institutional guidelines.

Statistical analysis

Each site was randomized to 3 of the 4 scenarios by the study statistician; the order of the scenarios was also randomly assigned for each trainee. All analyses were done separately for each scenario. We descriptively summarized the percentage of learners with a satisfactory or outstanding rating separately according to rater and domain. We assigned points for each of the 6 domains (unsatisfactory rating = 0 points; satisfactory rating = 1 point; and outstanding rating = 2 points). For each trainee, we calculated a total score by the standardized patient and a total score by each of the two faculty evaluators (scoring). Total scores were calculated by summing the responses of the 5 domains, with a plausible score ranging from 5 to 15 (a lower score indicates a better performance). If a rating was missing for one of the domains, the mean of the other 4 domains was imputed for purposes of calculating the total score.
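The total-score rule described above (sum of five domain ratings, with a single missing rating replaced by the mean of the other four) can be sketched as follows. This is only an illustration, not the study's analysis code. The numeric coding used here (outstanding = 1, satisfactory = 2, unsatisfactory = 3) is an assumption, chosen solely because it reproduces the stated 5 to 15 range in which lower is better; the names `RATING_POINTS` and `total_score` are hypothetical, and the handling of more than one missing rating is not described in the text.

```python
from typing import Optional, Sequence

# ASSUMED coding (hypothetical): picked only because it yields the stated
# 5-15 range with lower = better. The text also mentions a 0/1/2 scheme.
RATING_POINTS = {"outstanding": 1, "satisfactory": 2, "unsatisfactory": 3}


def total_score(ratings: Sequence[Optional[str]]) -> Optional[float]:
    """Sum five domain ratings; None marks a missing rating.

    One missing rating is imputed as the mean of the other four.
    Returns None when more than one rating is missing, because the
    text does not say how that case was handled.
    """
    if len(ratings) != 5:
        raise ValueError("expected exactly 5 domain ratings")
    points = [RATING_POINTS[r] for r in ratings if r is not None]
    missing = len(ratings) - len(points)
    if missing == 0:
        return float(sum(points))
    if missing == 1:
        # Mean of the four observed domains stands in for the missing one.
        return sum(points) + sum(points) / len(points)
    return None


if __name__ == "__main__":
    # Illustrative ratings only, not study data.
    example = ["outstanding", "satisfactory", None, "satisfactory", "outstanding"]
    print(total_score(example))
```

Under this assumed coding, a trainee rated outstanding in every domain scores 5 and one rated unsatisfactory in every domain scores 15.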

We descriptively summarized the standardized patients’ responses to each domain separately for each scenario. We evaluated the interrater reliability of the faculty total score with the intraclass correlation coefficient (Type 1) as described by Shrout and Fleiss [59], where faculty raters were assumed to be randomly assigned (generalization). For each domain, we assessed interrater reliability using the weighted kappa statistic. We additionally evaluated the correlation between the total score by the standardized patient and the average of the total score by the two faculty members (extrapolation). Kendall rank correlation coefficients were used to examine the correlation between the SP assessment total raw score, the consensus faculty assessment total raw score, and the ACGME milestone evaluations for ICS and professionalism, separately for each scenario (implication).
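For readers less familiar with these reliability statistics, the sketch below illustrates the one-way random-effects ICC, i.e. ICC(1,1) in the Shrout and Fleiss notation, and a weighted kappa for two raters. It is a minimal illustration rather than the study's code. The function names are hypothetical, and the use of linear disagreement weights for kappa is an assumption, since the text does not state which weighting scheme was used.

```python
from typing import Hashable, Sequence


def icc_1_1(scores: Sequence[Sequence[float]]) -> float:
    """One-way random-effects ICC(1,1).

    scores[i][j] is the j-th rating of target (trainee) i; every target
    must have the same number of ratings k.
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need >= 2 targets, each with the same k >= 2 ratings")
    grand = sum(sum(row) for row in scores) / (n * k)
    means = [sum(row) / k for row in scores]
    # Between-target and within-target mean squares from one-way ANOVA.
    bms = k * sum((m - grand) ** 2 for m in means) / (n - 1)
    wms = sum((x - m) ** 2 for row, m in zip(scores, means) for x in row) / (n * (k - 1))
    denom = bms + (k - 1) * wms
    if denom == 0:
        raise ValueError("ICC undefined: all ratings are identical")
    return (bms - wms) / denom


def linear_weighted_kappa(
    rater1: Sequence[Hashable],
    rater2: Sequence[Hashable],
    categories: Sequence[Hashable],
) -> float:
    """Weighted kappa for two raters with ASSUMED linear weights |i - j|.

    `categories` lists the ordinal levels from lowest to highest.
    """
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("raters must rate the same non-empty set of targets")
    index = {c: i for i, c in enumerate(categories)}
    k, n = len(categories), len(rater1)
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(rater1, rater2):
        observed[index[a]][index[b]] += 1
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    # Weighted observed vs. chance-expected disagreement.
    obs_dis = sum(abs(i - j) * observed[i][j] for i in range(k) for j in range(k))
    exp_dis = sum(abs(i - j) * row[i] * col[j] for i in range(k) for j in range(k)) / n
    if exp_dis == 0:
        raise ValueError("kappa undefined: no expected disagreement")
    return 1 - obs_dis / exp_dis
```

In this setting, each row passed to `icc_1_1` would hold the two faculty total scores for one trainee, and `linear_weighted_kappa` would be applied to one domain at a time with the three ordered rating levels as `categories`.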

Results

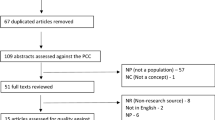

Eighty learners were included from five different sites. The scenarios assigned to each center are shown in Appendix Table 5. Table 1 summarizes the rationale, methods, and findings of this study as guided by Kane’s Framework.

The trainee assessment by the standardized patient is summarized in Table 2. The median (interquartile range) for the total scores indicated satisfactory to outstanding performance by most learners [Scenario A: 6 (5–8); Scenario B: 5 (5–7); Scenario C: 7 (6–8); Scenario D: 5 (5–7)].

Interrater reliability of the total score between faculty raters was generally moderate for the four simulation scenarios (ICC range 0.44 to 0.58, Table 2). Kappa scores for the individual domains are reported in Table 3.

There was a generally moderate linear relationship between the standardized patient and faculty total scores (Pearson correlations range from 0.23 to 0.44) (Table 3 and Fig. 1a, b, c, and d). Rank-based correlations (Kendall and Spearman) are additionally reported in Table 4.
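As a rough illustration of how such coefficients are obtained, the sketch below computes a Pearson correlation and a tie-corrected Kendall coefficient (tau-b) between an SP total score and the mean of the two faculty total scores. It is not the study's analysis code. The function names are hypothetical, and the choice of tau-b is an assumption, since the text does not specify which Kendall variant was used.

```python
from math import sqrt
from typing import Sequence


def mean_faculty_total(rater_a: float, rater_b: float) -> float:
    """Average of the two faculty total scores for one trainee."""
    return (rater_a + rater_b) / 2


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples of size >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


def kendall_tau_b(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall rank correlation with the tau-b tie correction (ASSUMED variant)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples of size >= 2")
    concordant = discordant = tied_x_only = tied_y_only = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
            elif dx == 0 and dy == 0:
                continue  # tied on both variables: excluded from both terms
            elif dx == 0:
                tied_x_only += 1
            else:
                tied_y_only += 1
    denom = sqrt(
        (concordant + discordant + tied_x_only)
        * (concordant + discordant + tied_y_only)
    )
    if denom == 0:
        raise ValueError("tau-b undefined: one variable is constant")
    return (concordant - discordant) / denom
```

Because total scores take few distinct values, ties are common, which is why a tie-corrected rank coefficient is the natural companion to the Pearson estimate here.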

Fig. 1 Relationship of trainee assessment between standardized patient and faculty reviewers. a: Scenario A. b: Scenario B. c: Scenario C. d: Scenario D

Among the 78 learners, 71 completed a post-training survey. Their responses are summarized in Appendix Table 6.

Discussion

Validating InCoPrA showed generally-moderate agreeability, high acceptability, and strong evidence of benefit and feasibility. Users found its standardized structure to be efficient, simplified, and user-friendly for assessment of ICS and Professionalism milestones.

Honest assessment of competency-based and milestone outcomes for learners is essential for professional growth and development [43, 45]. While many tools and checklists that assess clinical skills have been studied and described, very few have been validated [16, 37, 38, 42, 51]. In particular, assessment of professionalism and ICS is very complex and is highly influenced by raters [43, 49,50,51]. Teaching raters to use a tool is a simple idea, but it is often overlooked in preparation for assessments [37, 43, 48, 57, 58]. Utilizing a validated, easy-to-use, easy-to-train tool like InCoPrA can set the stage for fair assessment of professionalism and ICS and, more importantly, promote dialogue within the Clinical Competency Committee (CCC). This validation study was completed using 80 learners, 25 faculty-raters from five medical residency training sites, 12 SPs, and 4 OSCEs to validate InCoPrA as a feasible and user-friendly tool to be used when assessing professionalism and ICS. While 82% of learners who completed the post-training self-assessment rated the scenario as realistic, rater scores could be confounded when a scenario is viewed as less realistic, especially given the ICS and professionalism domains. Of the learners who completed the post-training self-assessment, 69% felt that more simulation training done in a similar fashion could be beneficial.

Utilizing InCoPrA in an OSCE scenario is valuable because faculty-raters and SPs are able to view multiple learners in the same scenario, allowing for richer feedback. Including SPs as part of the assessment team adds a nuanced and contextual perspective from all stakeholders. While the data show generally moderate agreeability between faculty-raters and SPs, there were observed differences in other ratings and scoring, with more positive skewness from the SPs. While this could be due to rater nuances, skewness or variation in assessment and perception between physicians and patients, as observed in the SP ratings here, is not a novel phenomenon and agrees with previous findings [60,61,62,63,64,65]. This phenomenon could be explained by the innate variation in ‘performance perception and assessment’ between patients and educators/physicians due to the nature and significance of their specific roles. Patients often emphasize and identify compassionate and positive interactions as surrogates of quality care and value, while educators/physicians focus more on clinical knowledge and management skills [60, 61, 66]. The varied interdependence and difference in scoring can serve as a prompt for the CCC to create a space for an open dialogue regarding these potential differences. Future studies may also need to better define the scoring differences and their important role, in line with other reports [64, 67, 68].

Strengths and Limitations

To overcome barriers often encountered in validity studies, we used an evidence-based validity framework to guide our study design, filling a gap in current evidence [47, 51]; employed different scenarios with various levels of difficulty; used currently adopted forms of evaluation to compare findings; included expert/core faculty raters; and included portals to deliver and receive feedback between learners and faculty. Performance contamination may have occurred if learners took the OSCE and then informed other learners of upcoming scenarios. However, we instructed all learners to avoid sharing their experiences, and previous research found that using such study methodology did not result in significant differences in performance among tested learners [69]. Most of the learners and faculty represented the specialty of Family Medicine. Further study of InCoPrA will be needed to define its generalizability as it pertains to discipline and setting (simulation versus clinical). We also recognize that generalization of our study to other institutions might be limited by the availability of resources, faculty training, and IT infrastructure provided at Mayo Clinic; however, these activities may still be conducted in resource-limited settings that incorporate faculty, SPs, and learners by adapting the simulation center resources to a direct OSCE-style setting.

Conclusions

Existing comparable assessment tools lack sufficient validity evidence, direction, and educational outcomes. This work addressed these gaps by substantiating that InCoPrA is a standardized, evidence-based, user-friendly, feasible, and competency/milestone-specific assessment tool for ICS and Professionalism. Validating InCoPrA showed generally moderate agreeability, high acceptability, and strong evidence of benefit. Using InCoPrA also promoted engagement of all stakeholders in medical education and training (faculty, learners, and SPs) through using simulation media as a pathway for comprehensive feedback on milestone growth. In addition to the importance of education and training provided by faculty, engaging patients in providing feedback about the Professionalism and ICS of medical learners is valuable for assessing and improving these core competencies and milestones. Allowing immediate reflective feedback from learners also enhances this comprehensive-feedback approach, as shown through using InCoPrA.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- InCoPrA: Instrument for Communication skills and Professionalism Assessment
- DoFM: Department of Family Medicine
- CCC: Clinical Competency Committee
- ACGME: Accreditation Council for Graduate Medical Education
- CBME: Competency-based medical education
- ICS: Interpersonal and Communication Skills
- SPs: Standardized Patients
- EMR: Electronic Medical Records
- EMIR: Electronic Medical Information Resources
- PGY: Post-Graduate Year
- OSCE: Objective Structured Clinical Examination

References

Carraccio CL, Englander R. From Flexner to competencies: reflections on a decade and the journey ahead. Acad Med. 2013;88(8):1067–73.

ten Cate O, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542–7.

Parikh RB, Kirch RA, Smith TJ, Temel JS. Early specialty palliative care--translating data in oncology into practice. N Engl J Med. 2013;369(24):2347–51.

Frequently Asked Questions: Milestones [http://www.acgme.org/Portals/0/MilestonesFAQ.pdf].

Holmboe ES, Call S, Ficalora RD. Milestones and Competency-Based Medical Education in Internal Medicine. JAMA Intern Med. 2016;176(11):1601.

Iobst W, Aagaard E, Bazari H, Brigham T, Bush RW, Caverzagie K, Chick D, Green M, Hinchey K, Holmboe E, et al. Internal medicine milestones. J Grad Med Educ. 2013;5(1 Suppl 1):14–23.

Green ML, Aagaard EM, Caverzagie KJ, Chick DA, Holmboe E, Kane G, Smith CD, Iobst W. Charting the road to competence: developmental milestones for internal medicine residency training. J Grad Med Educ. 2009;1(1):5–20.

Nabors C, Peterson SJ, Forman L, Stallings GW, Mumtaz A, Sule S, Shah T, Aronow W, Delorenzo L, Chandy D, et al. Operationalizing the internal medicine milestones-an early status report. J Grad Med Educ. 2013;5(1):130–7.

Witteles RM, Verghese A. Accreditation Council for Graduate Medical Education (ACGME) milestones-time for a revolt? JAMA Intern Med. 2016;176(11):1599.

Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system--rationale and benefits. N Engl J Med. 2012;366(11):1051–6.

Norman G, Norcini J, Bordage G. Competency-based education: milestones or millstones? J Grad Med Educ. 2014;6(1):1–6.

Caverzagie KJ, Nousiainen MT, Ferguson PC, Ten Cate O, Ross S, Harris KA, Busari J, Bould MD, Bouchard J, Iobst WF, et al. Overarching challenges to the implementation of competency-based medical education. Med Teach. 2017;39(6):588–93.

Natesan PBN, Bakhti R, El-Doueihi PZ. Challenges in measuring ACGME competencies: considerations for milestones. Int J Emerg Med. 2018;11:39.

Hawkins RE, Welcher CM, Holmboe ES, Kirk LM, Norcini JJ, Simons KB, Skochelak SE. Implementation of competency-based medical education: are we addressing the concerns and challenges? Med Educ. 2015;49(11):1086–102.

Swing SR. The ACGME outcome project: retrospective and prospective. Med Teach. 2007;29(7):648–54.

Hodges BD, Ginsburg S, Cruess R, Cruess S, Delport R, Hafferty F, Ho MJ, Holmboe E, Holtman M, Ohbu S, et al. Assessment of professionalism: recommendations from the Ottawa 2010 conference. Med Teach. 2011;33(5):354–63.

Posner G, Nakajima A. Assessing residents' communication skills: disclosure of an adverse event to a standardized patient. J Obstet Gynaecol Canada. 2011;33(3):262–8.

Hochberg MS, Kalet A, Zabar S, Kachur E, Gillespie C, Berman RS. Can professionalism be taught? Encouraging evidence. Am J Surg. 2010;199(1):86–93.

Mazor KM, Zanetti ML, Alper EJ, Hatem D, Barrett SV, Meterko V, Gammon W, Pugnaire MP. Assessing professionalism in the context of an objective structured clinical examination: an in-depth study of the rating process. Med Educ. 2007;41(4):331–40.

Brasel KJ, Bragg D, Simpson DE, Weigelt JA. Meeting the accreditation Council for Graduate Medical Education competencies using established residency training program assessment tools. Am J Surg. 2004;188(1):9–12.

Fontes RB, Selden NR, Byrne RW. Fostering and assessing professionalism and communication skills in neurosurgical education. J Surg Educ. 2014;71(6):e83–9.

Krajewski A, Filippa D, Staff I, Singh R, Kirton OC. Implementation of an intern boot camp curriculum to address clinical competencies under the new accreditation Council for Graduate Medical Education supervision requirements and duty hour restrictions. JAMA Surg. 2013;148(8):727–32.

Yudkowsky R, Downing SM, Sandlow LJ. Developing an institution-based assessment of resident communication and interpersonal skills. Acad Med. 2006;81(12):1115–22.

Abu Dabrh AM, Murad MH, Newcomb RD, Buchta WG, Steffen MW, Wang Z, Lovett AK, Steinkraus LW. Proficiency in identifying, managing and communicating medical errors: feasibility and validity study assessing two core competencies. BMC Med Educ. 2016;16(1):233.

Sevdalis N, Nestel D, Kardong-Edgren S, Gaba DM. A joint leap into a future of high-quality simulation research-standardizing the reporting of simulation science. Simul Healthc. 2016;11(4):236–7.

Cook DA, Brydges R, Zendejas B, Hamstra SJ, Hatala R. Technology-enhanced simulation to assess health professionals: a systematic review of validity evidence, research methods, and reporting quality. Acad Med. 2013;88(6):872–83.

Newcomb AB, Trickey AW, Porrey M, Wright J, Piscitani F, Graling P, Dort J. Talk the talk: implementing a communication curriculum for surgical residents. J Surg Educ. 2017;74(2):319–28.

Goff BA, VanBlaricom A, Mandel L, Chinn M, Nielsen P. Comparison of objective, structured assessment of technical skills with a virtual reality hysteroscopy trainer and standard latex hysteroscopy model. J Reprod Med. 2007;52(5):407–12.

Dumestre D, Yeung JK, Temple-Oberle C. Evidence-based microsurgical skills acquisition series part 2: validated assessment instruments--a systematic review. J Surg Educ. 2015;72(1):80–9.

Chipman JG, Schmitz CC. Using objective structured assessment of technical skills to evaluate a basic skills simulation curriculum for first-year surgical residents. J Am Coll Surg. 2009;209(3):364–70 e362.

Tavakol M, Mohagheghi MA, Dennick R. Assessing the skills of surgical residents using simulation. J Surg Educ. 2008;65(2):77–83.

Rekman J, Hamstra SJ, Dudek N, Wood T, Seabrook C, Gofton W. A new instrument for assessing resident competence in surgical clinic: the Ottawa clinic assessment tool. J Surg Educ. 2016;73(4):575–82.

Glarner CE, McDonald RJ, Smith AB, Leverson GE, Peyre S, Pugh CM, Greenberg CC, Greenberg JA, Foley EF. Utilizing a novel tool for the comprehensive assessment of resident operative performance. J Surg Educ. 2013;70(6):813–20.

George BC, Teitelbaum EN, Meyerson SL, Schuller MC, DaRosa DA, Petrusa ER, Petito LC, Fryer JP. Reliability, validity, and feasibility of the Zwisch scale for the assessment of intraoperative performance. J Surg Educ. 2014;71(6):e90–6.

Borman KR, Augustine R, Leibrandt T, Pezzi CM, Kukora JS. Initial performance of a modified milestones global evaluation tool for semiannual evaluation of residents by faculty. J Surg Educ. 2013;70(6):739–49.

Phillips DP, Zuckerman JD, Kalet A, Egol KA. Direct observation: assessing Orthopaedic trainee competence in the ambulatory setting. J Am Acad Orthop Surg. 2016;24(9):591–9.

Kane MT. Validity as the evaluation of the claims based on test scores. Assessment in Education-Principles Policy & Practice. 2016;23(2):309–11.

Auewarakul C, Downing SM, Jaturatamrong U, Praditsuwan R. Sources of validity evidence for an internal medicine student evaluation system: an evaluative study of assessment methods. Med Educ. 2005;39(3):276–83.

Corcoran J, Downing SM, Tekian A, DaRosa DA. Composite score validity in clerkship grading. Acad Med. 2009;84(10 Suppl):S120–3.

Cook DA. When I say... validity. Med Educ. 2014;48(10):948–9.

Kogan JR, Holmboe ES, Hauer KE. Tools for direct observation and assessment of clinical skills of medical trainees: a systematic review. JAMA. 2009;302(12):1316–26.

Ilgen JS, Ma IW, Hatala R, Cook DA. A systematic review of validity evidence for checklists versus global rating scales in simulation-based assessment. Med Educ. 2015;49(2):161–73.

Downing SM. Validity: on meaningful interpretation of assessment data. Med Educ. 2003;37(9):830–7.

Kane MT. Validating interpretive arguments for licensure and certification examinations. Eval Health Prof. 1994;17(2):133–59 discussion 236-141.

Kane MT. Current concerns in validity theory. J Educ Meas. 2001;38:319–42.

Cook DA, Lineberry M. Consequences validity evidence: evaluating the impact of educational assessments. Acad Med. 2016;91(6):785–95.

Cook DA, Brydges R, Ginsburg S, Hatala R. A contemporary approach to validity arguments: a practical guide to Kane's framework. Med Educ. 2015;49(6):560–75.

Kane MT. An argument-based approach to validity. Psychol Bull. 1992;112(3):527–35.

Downing SM. Reliability: on the reproducibility of assessment data. Med Educ. 2004;38(9):1006–12.

Cook DA, Zendejas B, Hamstra SJ, Hatala R, Brydges R. What counts as validity evidence? Examples and prevalence in a systematic review of simulation-based assessment. Adv Health Sci Educ Theory Pract. 2014;19(2):233–50.

Clauser BE, Margolis MJ, Holtman MC, Katsufrakis PJ, Hawkins RE. Validity considerations in the assessment of professionalism. Adv Health Sci Educ Theory Pract. 2012;17(2):165–81.

Chipman JG, Beilman GJ, Schmitz CC, Seatter SC. Development and pilot testing of an OSCE for difficult conversations in surgical intensive care. J Surg Educ. 2007;64(2):79–87.

Tabuenca A, Welling R, Sachdeva AK, Blair PG, Horvath K, Tarpley J, Savino JA, Gray R, Gulley J, Arnold T, et al. Multi-institutional validation of a web-based core competency assessment system. J Surg Educ. 2007;64(6):390–4.

Chan DK, Gallagher TH, Reznick R, Levinson W. How surgeons disclose medical errors to patients: a study using standardized patients. Surgery. 2005;138(5):851–8.

Drug Products Associated with Medication Errors [http://www.fda.gov/drugs/drugsafety/medicationerrors/ucm286522.htm].

Safety Alerts for Human Medical Products [http://www.fda.gov/downloads/Safety/MedWatch/SafetyInformation/SafetyAlertsforHumanMedicalProducts/UCM168792.pdf].

Kane MT. Explicating validity. Assessment in Education-Principles Policy & Practice. 2016;23(2):198–211.

Heale R, Twycross A. Validity and reliability in quantitative studies. Evid Based Nurs. 2015;18(3):66–7.

Shrout PE, Fleiss JL. Intraclass correlations: uses in assessing rater reliability. Psychol Bull. 1979;86(2):420–8.

Bientzle M, Fissler T, Cress U, Kimmerle J. The impact of physicians' communication styles on evaluation of physicians and information processing: a randomized study with simulated video consultations on contraception with an intrauterine device. Health Expect. 2017;20(5):845–51.

Ha JF, Longnecker N. Doctor-patient communication: a review. Ochsner J. 2010;10(1):38–43.

Saha S, Beach MC. The impact of patient-centered communication on patients' decision making and evaluations of physicians: a randomized study using video vignettes. Patient Educ Couns. 2011;84(3):386–92.

Kenny DA, Veldhuijzen W, Weijden T, Leblanc A, Lockyer J, Legare F, Campbell C. Interpersonal perception in the context of doctor-patient relationships: a dyadic analysis of doctor-patient communication. Soc Sci Med. 2010;70(5):763–8.

Makoul G, Krupat E, Chang CH. Measuring patient views of physician communication skills: development and testing of the communication assessment tool. Patient Educ Couns. 2007;67(3):333–42.

Cegala DJMK, McGee DS. A study of doctors' and patients' perceptions of information processing and communication competence during the medical interview. Health Commun. 2009;7(3):179–203.

Tanco K, Rhondali W, Perez-Cruz P, Tanzi S, Chisholm GB, Baile W, Frisbee-Hume S, Williams J, Masino C, Cantu H, et al. Patient perception of physician compassion after a more optimistic vs a less optimistic message: a randomized clinical trial. JAMA Oncol. 2015;1(2):176–83.

Archer JC, Norcini J, Davies HA. Use of SPRAT for peer review of paediatricians in training. BMJ. 2005;330(7502):1251–3.

Violato C, Lockyer J, Fidler H. Multisource feedback: a method of assessing surgical practice. BMJ. 2003;326(7388):546–8.

Stroud L, McIlroy J, Levinson W. Skills of internal medicine residents in disclosing medical errors: a study using standardized patients. Acad Med. 2009;84(12):1803–8.

Acknowledgements

The authors would like to express their gratitude for the support provided by all staff at the participating Simulation Centers and the administrative staff at the participating residency programs at Mayo Clinic in Jacksonville, FL; Rochester, MN; La Crosse, WI; and Phoenix and Scottsdale, AZ, and for their incredible efforts in making this study possible.

Funding

This work was supported by the Endowment for Education Research Awards from the Office of Applied Scholarship and Education Science (OASES) at Mayo Clinic.

Author information

Contributions

All authors contributed sufficiently to warrant co-authorship under the BMC editorial policy standards of authorship. Study hypothesis: AMAD, KBA, TAW, MHM, LWP. Study planning and set-up: AMAD, KBA, RPB, TAW, JM, TN, MMW, TG, TB, JK, SAP. Study conduct: AMAD, RPB, TAW, JM, TN, MMW, TB, AJN, AA, JK, TG, SAP. Data collection and cleaning: AJN, AA, TB, TN, JM, MMW, AMAD. Data analysis and interpretation: AMAD, KBA, TAW, MHM, LWP, RPB. Manuscript drafting: AMAD, TAW, RPB, TN, MHM. Manuscript review and approval: all co-authors.

Ethics declarations

Ethics approval and consent to participate

The Institutional Review Board (IRB) at Mayo Clinic approved the study activities as an educational intervention required and expected as part of the regular didactic training and activities within the participating residency programs, and therefore considered the study IRB-exempt (45 CFR 46.101, item 1); no specific consent to participate was deemed required. The Mayo Clinic Simulation Center obtained standard consent for observation from all trainees per regular institutional guidelines. Additionally, the study authors and coordinators obtained standard consent for observation from all trainees per institutional guidelines.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no conflicts of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Abu Dabrh, A.M., Waller, T.A., Bonacci, R.P. et al. Professionalism and inter-communication skills (ICS): a multi-site validity study assessing proficiency in core competencies and milestones in medical learners. BMC Med Educ 20, 362 (2020). https://doi.org/10.1186/s12909-020-02290-3
