Abstract

Background

Simulation training is widely used in medical education as students rarely perform clinical procedures, and confidence can influence practitioners’ ability to perform procedures. Thus, this study assessed students’ perceptions and experiences of a pediatric skills program and compared their informed self-assessment with their preceptor-evaluated performance competency for several pediatric clinical procedures.

Methods

A total of 65 final-year medical students attended a weeklong pediatric skills training course by the University of Tripoli that used a manikin and various clinical scenarios to simulate real-life cases. Participants completed questionnaires self-assessing their performance skills, while examiners evaluated each students’ competency on five procedural skills (lumbar puncture, nasogastric tube insertion, umbilical vein catheterization, intraosseous access, and suprapubic aspiration) using an objective structured clinical examination (OSCE) model. Differences between agreement levels in question responses were evaluated through a nonparametric chi-square test for a goodness of test fit, and the relationship between confidence levels and the OSCE scores for each procedure was assessed using Spearman’s rank-order correlation.

Results

All participants completed the informed self-assessment questionnaire and OSCE stations. The frequency differences in agreement levels in students’ questionnaire responses were statistically significant. No significant differences were found between students’ self-assessment and preceptors’ evaluation scores. For each procedure’s passing score rate, umbilical vein catheterization had the highest passing rate (78.5%) and nasogastric tube placement the lowest (56.9%). The mean performance scores were above passing for all procedures. The Wilcoxon signed-rank test revealed no significant differences between participants’ self-assessment and their preceptor-evaluated competency; students correctly perceived and assessed their ability to perform each procedure.

Conclusions

High competence in several life-saving procedures was demonstrated among final-year medical students. The need for consistent and timely feedback, methods to increase medical students’ confidence, and further development and improvement of competency-based assessments are also highlighted.

Similar content being viewed by others

Background

Clinical rotations are designed to provide medical students with the necessary knowledge, practical experience, and skills before commencing their medical practice. The final year of medical school is a particularly critical time for students to acquire clinical and practical skills, both procedural and cognitive, to ensure greater competency [1, 2]. The University of Tripoli has introduced a new skill lab in pediatric clerkship in 2017 for final-year medical students, based on simulation training and manikins. This university offers Bachelor of Medicine and Bachelor of Surgery (MBBCh) programs, equivalent to the medical doctor degree (MD), which comprise a pre-clinical year, five clinical years, and a clinical training internship year. The university’s skill lab was designed to provide students with the advanced skills and knowledge necessary for their internships after completing medical school, as part of a new educational curriculum introduced recently to meet international standards and ensure high-quality care and patient safety in Libya.

Simulation training is widely used in medical education because it allows programs to teach clinical skills in a safe learning environment, which is essential in pediatrics training, as students rarely get the chance to perform clinical procedures. Simulation training can also improve safety and reduce costs and harm to patients. Students and early-career doctors can effectively develop professional health skills, knowledge, and attitudes through this method [3,4,5,6].

Assessment of the learning and education process is essential to ensure students are adequately trained to perform procedures in a hospital. Additionally, the relationship between students’ practicing confidence (based on informed self-assessment) and their performance competency (based on preceptor evaluation) is critical [7,8,9]. Confidence can influence practitioners’ ability to perform a procedure, and patients may be at greater risk of adverse events if a practitioner is incompetent or performs inaccurately [10]. In 1999, Kruger and Dunning demonstrated that people with incompetent skills may overestimate their ability to perform certain tasks and fail to recognize their own misperception [11]. The only way to ensure physicians acquire the skills necessary for their work is to improve their ability to accurately appraise their own performance and measure their abilities, thereby ensuring their optimal and competent treatment and management of patients [7, 12, 13].

Informed self-assessment is a process that includes learners in assessing whether or not learner-identified expectations have been achieved [14]. In education, self-assessment allows learners to recognize their strengths and weaknesses both during and after the learning process [15]. In healthcare practice, self-assessment allows practitioners to identify their strengths and weaknesses and react accordingly to improve the services provided. For example, an important aspect of medical practice is avoiding potential risks by recognizing the need for help or support, or declining to perform certain actions due to awareness of inadequate abilities. This awareness allows medical practitioners to act appropriately, without hesitation or negligence.

Previous studies have addressed the relationship between informed self-assessment and preceptor evaluation in different clinical skills. Some have suggested that self-evaluation tools based on specific assessments can more accurately determine an individual’s competency [16,17,18]. Several have noted that self-assessment is an inaccurate measurement of competence [19, 20]. However, few studies have systematically examined the relationship between student self-assessments and preceptor evaluations. No guidance based on national or international consensus exists on methods of assessing medical students’ skills as part of the learning outcomes using their informed self-assessment as a predictor of preceptor-evaluated competency.

Therefore, this study evaluated the relationship between students’ informed self-assessments (i.e., their confidence in their learning outcomes) and preceptors’ competency evaluations (i.e., students’ ability to accurately perform the trained procedures) following completion of a pediatric skills training course. The fundamental principle of accurate self-assessment, as reflected by students’ self-assessment of their learning outcomes in the pediatric skills training course, should be applicable to other clinical skills.

Aim

To assess students’ perceptions and experiences of a pediatric clinical skills program and to compare their informed self-assessment with their preceptor-evaluated performance competency for several pediatric clinical procedures, including lumbar puncture, intraosseous infusion, nasogastric tube placement, umbilical vein catheterization, and suprapubic aspiration.

Methods

The study sample, enrolled in August 2017, comprised 65 final-year medical students who attended a special pediatric skills training course. Students who undertook the course were informed about each part of the course and were provided with an introductory summary lecture about the course structure and learning outcomes expected by the course’s end. The weeklong, comprehensive course, introduced by the University of Tripoli, used a manikin and various clinical scenarios to simulate real-life cases. Each clinical scenario was first devised by a professor with two instructors; then, the students were allowed to voluntarily perform a certain skill under the supervision of the instructors, who provided feedback about any mistakes or misunderstandings. The goal was to help students acquire crucial pediatric life support skills that would be necessary during clinical rotations in their foundation (internship) year.

Participants completed informed self-assessment of their skills using a questionnaire. Self-assessment was defined as the involvement of participants in judging whether or not learning expectations were met or whether their ability to perform procedures were of an acceptable standard [14]. For each of the five procedures, the participants rated their confidence in their abilities. Subsequently, their actual competency in each procedure was assessed based on preceptor evaluations using an objective structured clinical examination (OSCE) model. Figure 1 illustrates the two main steps of this study.

Participant questionnaires and self-assessment

Participants were first provided with a validated questionnaire to collect feedback on the course. This questionnaire included seven items assessed on a 4-point Likert scale (1 = strongly disagree, 2 = disagree, 3 = agree, 4 = strongly agree), and its internal consistency and reliability were assessed using Cronbach’s alpha [21].

The researchers then explained the informed self-assessment and evaluation procedure to the participants and provided them with another questionnaire to assess their confidence in performing five procedures (i.e., lumbar puncture, nasogastric tube placement, umbilical vein catheterization, intraosseous infusion, and suprapubic aspiration). Each procedure was covered during the weeklong pediatric skills course, and participants were able to practice in low- and medium-fidelity simulations. All items were rated on a 4-point Likert scale (1 = not confident enough to perform even under supervision, 2 = not confident enough to perform without supervision, 3 = confident enough to perform without supervision, 4 = confident enough to teach the procedure).

Preceptor competency evaluation

Following participants’ self-assessment, their competency in each of the five procedures were assessed by examiners through an OSCE. For each procedure, a checklist was created that contained discrete steps derived from the existing university curriculum. These checklists were pre-validated using a pilot sample of students, and to ensure reliability, these underwent several revisions by the clinical department, and finally by the head of the medical education department. The pilot assessment was performed by medical students and pediatric demonstrators unaware of the present study design or research question. To evaluate inter-rater reliability of the assessments completed during the main study, two examiners were present at each station during the test.

In the OSCE, a 10-min time limit and specific schedule was set for each task. At each station, participants were presented with a standardized scenario requiring the use of each particular procedure, and had to then perform the procedure using manikins and prosthesis parts. Two examiners evaluated each participant and rated their performance on a 4-point Likert scale (4 = excellent, 3 = good, 2 = pass, 1 = fail). Overall scores were also calculated for each participant at each station based on the accumulated total of correct OSCE checklist steps (see the supplementary files for the content of each procedure’s checklist).

All participants provided informed written consent and were blinded to the study design and objectives. The OSCE checklists and self-assessment questionnaires were not made available to participants before the start of the study. For the OSCE evaluations, two sets of the five stations were set up, allowing 10 participants to complete the test at the same time. To prevent measurement bias, participants who had completed the assessment were not allowed contact with those still waiting to participate. All assessments were conducted by pediatric skill demonstrators and pediatricians who volunteered to take part in the project and were blinded to the research questions, informed self-assessment results, and study outcomes. The examiners were not involved in the analysis or interpretation of the data following the evaluations. All were trained and had previous experience conducting pediatric OSCE exams or serving as demonstrators in clinical skills education. All evaluations were completed on a single day.

After the OSCE evaluations were completed, participants’ competency levels were compared with their self-assessed confidence. To define their competency level, each participant received a raw score based on the OSCE checklist. Each skill had a specific OSCE score; possible scores for each were: lumbar puncture 0–27, intraosseous infusion 0–32, nasogastric tube 0–25, umbilical venous catheterization 0–26, and suprapubic aspiration 0–24. This score was matched against a score from 1 to 4 provided by both examiners at each station to determine the overall performance (excellent, good, average, poor). A score of at least 60% was defined as passing for each OSCE station.

Statistical analysis

First, participants’ feedback on the pediatric skills course was tabulated against the questionnaire response rate. Descriptive statistics were used to calculate the mean and standard deviation for continuous variables and frequency and percentage for categorical variables. Responses of strongly disagree, disagree, agree, and strongly agree were coded as 1, 2, 3, and 4, respectively. Differences between agreement with each item were calculated using a nonparametric chi-square test for goodness of test fit for a single sample. The response rate was calculated as a percentage. The internal consistency of the instrument was determined using Cronbach’s alpha [21].

A Wilcoxon matched-pairs signed-rank test was used to test the hypothesis that the distribution of difference scores between informed-self assessment and preceptor evaluation score was symmetric about zero. Spearman’s rank-order correlation was conducted to assess the relationship between participants’ informed self-assessment level and OSCE score for each procedure. P-values less than 0.05 were considered statistically significant. All analyses were conducted using IBM SPSS version 25.

Results

All 65 participants completed both the informed self-assessment questionnaire and all OSCE stations. The Cronbach’s alpha was 0.71 for the self-assessment questionnaire and 0.827 for the OSCE checklists, indicating high reliability and good internal consistency.

Table 1 illustrates students’ responses concerning the pediatrics course evaluation. Statistically significant differences in the frequency of participants who indicated various levels of agreement were observed (p < .001).

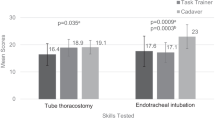

Preceptor evaluations revealed varying levels of competency among the participants. Figure 2 and Fig. 3 illustrate the results of their informed self-assessment and preceptor evaluation scores for each clinical procedure. No significant differences were found between self-assessment and preceptor evaluation scores; the latter scores were thus very similar to students’ self-rated assessments. The passing score rates for each procedure were higher than expected, with umbilical vein catheterization having the highest passing rate (78.5%) and nasogastric tube placement having the lowest (56.9%). Meanwhile, the mean performance scores were above passing for all procedures. Table 2 presents the passing scores and passing rates for each procedure.

The Wilcoxon signed-rank test revealed no significant differences between the participants’ self-assessment and their preceptor-evaluated competency (Table 3). The null hypothesis that the distribution of difference between informed self-assessments and preceptor evaluation scores would be symmetrical about zero was accepted—the students’ confidence in their skills matched their preceptor-evaluated competence. Thus, the participants were able to correctly perceive and assess their ability to perform each procedure. However, the results also showed that the participants with lower confidence and self-assessed abilities demonstrated higher competence and received higher preceptor evaluations, despite their negative perceptions.

Spearman’s rank-order correlation was used to assess the relationship between perceived self-assessment levels and OSCE scores for each procedure. A preliminary analysis showed the relationship to be monotonic, as assessed by visual inspection of a scatterplot. However, there was no statistically significant correlation between the confidence level and OSCE performance score recorded for the students as follows: lumbar puncture rs(63) = − 0.193, p = 0.123, nasogastric tube insertion rs (63) = 0.009, p = 0.944, umbilical vein catheterization rs (63) = − 0.006, p = 0.959, suprapubic bladder aspiration rs (63) = − 0.155, p = 0.219, and intraosseous access rs (63) = 0.007, p = 0.954).

Discussion

This study illustrates the use of comparing informed self-assessment with faculty evaluation of students as an outcome measure for pediatric procedural skill acquisition. Despite the difficulty and complexity of the procedures assessed in this study, passing rates were high, reflecting that most participants had received instruction and constructive feedback, and hence, gained practical experience. Statistically, participants’ informed self-assessment scores were largely similar to their preceptor evaluation scores, with no significant difference between the results of self-assessment and those of faculty assessment for a given competence.

This study’s findings contradict several previous studies that found no agreement between confidence and competence, specifically those of Donoghue et al. on pediatric OSCE skills [22] and Barnsley et al. on junior doctors in their first internship year [23]. A similar study of house officers in the United Kingdom found no significant correlation between performance and self-assessment [24]. In a study of third-year medical students in the United States, in which students’ self-assessments of 10 skills were compared to evaluation scores given by trained simulated patients, Isenberg et al. found significant differences between the students’ and patients’ scores for clinical skills, but not procedural skills [25]. The students were less confident in their procedural competencies. Finally, a study of podiatric medical students found no statistically significant correlation between academic performance and clinical self-assessment, either at the start of students’ third year or after completion of their study [26]. In other words, students’ self-assessment of their clinical performance was not associated with their academic performance. We expected that students would not perform exactly in line with their confidence level, as there was some concern about their ability to perform the procedures correctly and well. We consider that the students who did not achieve a passing score or demonstrated lower competence were those who did not actually perform the procedures on the manikin during the training course, depending instead on learning by watching or doing only discrete steps. It is crucial to fully understand these results and implement this knowledge in future educational and training programs for medical students.

Overall, the ability to objectively evaluate one’s performance and skills allows healthcare workers to achieve a successful balance between engaging in everyday medical practice and ensuring patient safety. Medical students’ perceptions must be considered, as social and personal factors continuously affect learners’ use of both structured and informal learning and assessment practices by the learners, which could help inform their self-assessment of their performance [27]. This highlights the need to develop faculty programs to raise awareness of the value of self-assessment.

There is no gold standard for the assessment of medical students’ confidence and competency levels. Therefore, the validity of these assessment tools in their current form was determined through consultation with the pediatric educational department, several discussions and revisions, and pilot testing.

Some of the medical students who participated in this study were unfamiliar with all the steps of certain procedures, such as lumbar punctures and suprapubic aspiration, and omission of vital steps lead to failure in these students’ evaluations. However, higher success was observed in procedures such as umbilical vein catheterization and nasogastric tube placement, perhaps due to students’ previous experience performing these procedures during the training course. The variation observed in students’ performance might reflect their experiences or engagement during the training course, as some students performed procedures multiple times, whereas others performed them only once or not at all, instead, only observing. Insufficient training or lack of opportunity to practice the procedure prior to the evaluation could explain the variations in this study’s results.

A possible source of bias in this study’s results could be the examiners. However, all examiners were blinded to the study design and objectives, and were not aware that the test was for research purposes. All were either instructors with previous experience in conducting pediatric OSCE exams or pediatricians. To prevent subjective or personal bias, two examiners were assigned to each station, both of whom independently assessed and scored the participants’ performance. The medical students in this study were evaluated in a simulation-based environment using manikins. Although they had gained some experience performing advanced procedures usually conducted by senior pediatricians, they had little to no real experience managing clinical situations in pediatric intensive care units, either alone or under supervision by junior pediatricians. Thus, the results may overestimate students’ abilities, which were evaluated based on training with a manikin rather than human beings. Regardless, this study provides evidence for faculty and future classes supporting the benefits of the pediatric skills training course. Additionally, the results demonstrate alignment between students’ competence and their own confidence in their skills, unlike many previous studies. This could indicate that students correctly assessed their competence in these procedures, or that the examiners overestimated their performance.

Reliable and standardized testing methods are necessary to assess the performance and competency of medical students and junior doctors. To some extent, OSCEs and simulation-based educational courses can meet this demand. However, it is also essential to develop an educational approach that allows students to accurately assess their competence and abilities.

No significant relationship between preceptor evaluations and informed self-assessment was found among this study’s sample of final-year medical students after completing the pediatric intensive care skills course. Continued training and improved self-assessment will thus be vital to properly ensure their ability to perform these procedures. This may imply preparing a lifelong educational curriculum designed to enhance students’ and practitioners’ pediatric intensive care skills.

Before accepting the results of this study, it is critical to consider any potential bias or error that may have affected the outcomes. However, the findings indicate that medical students can perform complex procedures and demonstrate a high standard of care with relatively high confidence in their skills.

Conclusions

This study demonstrates the comparability of student self-assessment results with those of faculty assessments for several pediatric clinical procedural skills among final-year medical students and supports the implementation of the University of Tripoli’s pediatric skills training course as a standard curriculum. The need for constructive feedback, methods to increase medical students’ confidence, and further development and improvement of competency-based assessments are also highlighted.

Availability of data and materials

The datasets used in this study are available from the corresponding author on reasonable request.

Abbreviations

- OSCE:

-

objective structured clinical examination

References

Störmann S, Stankiewicz M, Raes P, Berchtold C, Kosanke Y, Illes G, Loose P, Angstwurm MW. How well do final year undergraduate medical students master practical clinical skills? GMS J Med Educ. 2016;33(4):Doc58.

Harden RM. AMEE guide no. 14: outcome-based education: part 1-an introduction to outcome-based education. Med Teacher. 1999;21(1):7–14.

Lateef F. Simulation-based learning: just like the real thing. J Emerg Trauma Shock. 2010;3(4):348–52.

Gaba DM. The future vision of simulation in health care. Quality Safety Health Care. 2004;13(Suppl 1):i2–10.

Gaba DM. The future vision of simulation in healthcare. Simulation Healthcare. 2007;2(2):126–35.

Abrahamson S, Denson JS, Wolf RM: Effectiveness of a simulator in training anesthesiology residents 1969. Quality Safety Health Care 2004, 13(5):395–397.

Regehr G, Hodges B, Tiberius R, Lofchy J. Measuring self-assessment skills: an innovative relative ranking model. Acad Med. 1996;71(10 Suppl):S52–4.

Speechley M, Weston WW, Dickie GL, Orr V. Self-assessed competence: before and after residency. Can Fam Physician. 1994;40:459–64.

Westberg J, Jason H. Fostering learners' reflection and self-assessment. Fam Med. 1994;26(5):278–82.

Hodges B, Regehr G, Martin D. Difficulties in recognizing one's own incompetence: novice physicians who are unskilled and unaware of it. Acad Med. 2001;76(10 Suppl):S87–9.

Kruger J, Dunning D. Unskilled and unaware of it: how difficulties in recognizing one's own incompetence lead to inflated self-assessments. J Pers Soc Psychol. 1999;77(6):1121–34.

Hochberg M, Berman R, Ogilvie J, Yingling S, Lee S, Pusic M, Pachter HL. Midclerkship feedback in the surgical clerkship: the "professionalism, reporting, interpreting, managing, educating, and procedural skills" application utilizing learner self-assessment. Am J Surg. 2017;213(2):212–6.

Shumway JM, Harden RM. AMEE guide no. 25: the assessment of learning outcomes for the competent and reflective physician. Med Teacher. 2003;25(6):569–84.

Vella F: Enhancing learning through self-assessment D Boud. pp 247. Kogan Page, London. 1995. £19.95 ISBN 0–7494–1368-9. 1996, 24(3):183–183.

Eva KW, Regehr G. Self-assessment in the health professions: a reformulation and research agenda. Acad Med. 2005;80(10 Suppl):S46–S54. https://doi.org/10.1097/00001888-200510001-00015.

Stewart J, O'Halloran C, Barton JR, Singleton SJ, Harrigan P, Spencer J. Clarifying the concepts of confidence and competence to produce appropriate self-evaluation measurement scales. Med Educ. 2000;34(11):903–9.

Carr SJ. Assessing clinical competency in medical senior house officers: how and why should we do it? Postgrad Med J. 2004;80(940):63–6.

Perry P. Concept analysis: confidence/self-confidence. Nurs Forum. 2011;46(4):218–30.

Gordon MJ. A review of the validity and accuracy of self-assessments in health professions training. Acad Med. 1991;66(12):762–9.

Gordon MJ. Self-assessment programs and their implications for health professions training. Acad Med. 1992;67(10):672–9.

De Vellis RF. Scale development: Theory and applications. Vol. 26. Sage publications, 2016.

O'Donoghue D, Davison G, Hanna LJ, McNaughten B, Stevenson M, Thompson A. Calibration of confidence and assessed clinical skills competence in undergraduate paediatric OSCE scenarios: a mixed methods study. BMC Med Educ. 2018;18(1):211.

Barnsley L, Lyon PM, Ralston SJ, Hibbert EJ, Cunningham I, Gordon FC, Field MJ. Clinical skills in junior medical officers: a comparison of self-reported confidence and observed competence. Med Educ. 2004;38(4):358–67.

Fox RA, Ingham Clark CL, Scotland AD, Dacre JE. A study of pre-registration house officers' clinical skills. Med Educ. 2000;34(12):1007–12.

Isenberg GA, Roy V, Veloski J, Berg K, Yeo CJ. Evaluation of the validity of medical students' self-assessments of proficiency in clinical simulations. J Surg Res. 2015;193(2):554–9.

Yoho RM, Vardaxis V, Millonig K. Relationship between academic performance and student self-assessment of clinical performance in the College of Podiatric Medicine and Surgery. J Am Podiatr Med Assoc. 2016;106(3):214–7.

Sargeant J, Eva KW, Armson H, Chesluk B, Dornan T, Holmboe E, Lockyer JM, Loney E, Mann KV, van der Vleuten CP. Features of assessment learners use to make informed self-assessments of clinical performance. Med Educ. 2011;45(6):636–47.

Acknowledgements

The authors would like to acknowledge the contribution of the Faculty of Medicine at the Department of Pediatrics of the University of Tripoli, the skill lab team, the instructors, and the medical students who participated in this study.

Funding

This study did not receive any grant or funding from any department or institute.

Author information

Authors and Affiliations

Contributions

ME and HA conceptualized the study design under HE’s supervision. AK and AE recorded the questionnaire data. WA, SA, and HE supervised the skill scenarios and assessed the skill records with other assessors. To extract the themes, ME and HA assessed the skill data, and they independently reviewed the group scores. AE constructed the tables and figures. ME and HA wrote the initial draft of the manuscript. ME performed the statistical analyses. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

The authors confirm that the study procedures comply with the ethical standards of the relevant national and institutional committee on human experimentation, with the Helsinki Declaration of 1975, as revised in 2008. Ethical approval was granted by the Faculty of Medicine, University of Tripoli. Informed written consent was obtained from the participants before participation.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Elhadi, M., Ahmed, H., Khaled, A. et al. Informed self-assessment versus preceptor evaluation: a comparative study of pediatric procedural skills acquisition of fifth year medical students. BMC Med Educ 20, 318 (2020). https://doi.org/10.1186/s12909-020-02221-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12909-020-02221-2