Abstract

Background

Digital assessment is becoming increasingly popular in medical education. To analyse the dimensions of this digital trend, we investigated how exam questions (items) are created and designed for use in digital medical assessments in Germany. Specifically, we explored whether different types of media are used for item creation and whether a digital trend in medical assessment can be observed.

Methods

In a cross-sectional descriptive study, we examined data from 30 German medical faculties stored within a common assessment platform. More precisely, 23,008 exams containing 847,137 items were analysed with respect to exam type (paper-, computer- or tablet-based) and media content (picture, video and/or audio). Of these, 5252 electronic exams were evaluated further, and the media types of the 12,214 questions containing media were quantified individually.

Results

The number of computer- and tablet-based exams increased rapidly from 2012 to 2018. Computer-based exams (45%) and tablet-based written exams (66%) contained media more often than paper-based exams (33%). At the level of individual questions, 90.8% of questions contained one single picture. The remaining questions contained more than one picture (2.9%), video (2.7%), audio (0.2%), or both picture and video (3.3%). The main question types used for items with one picture were TypeA (54%) and Long_Menu (31%). In contrast, only 11% of questions with video content were TypeA questions, whereas 66% were Long_Menu questions. Nearly all questions containing both picture and video were Long_Menu questions.

Conclusions

Digital assessment formats are indeed on the rise. Our data indicate that electronic assessment formats make it easier to embed media and therefore show a higher frequency of media use. We also identified the use of different media types within the same question; this innovative item design could be a useful feature for the creation of medical assessments. Finally, the choice of media type seems to depend on the respective question type.


Background

Artificial intelligence, big data, university 4.0, digital transformation and digitalisation are current trends and buzzwords widely discussed in health care and higher education [1,2,3]. Digitalisation in particular has become omnipresent in society and now plays an important role in higher education. Digitalisation not only describes the transition from analogue to digital data, but also refers to the consequences of this digital shift. Computers, tablets and smartphones are capable of processing data digitally and have become indispensable devices in health care, medicine and medical education [4,5,6]. These digital media will sooner or later change the way we think about and handle daily and critical health care situations [7]. These developments create new tasks for medical education, namely the teaching and assessment of the digital competencies of medical examinees, which in turn requires these competencies to be defined [8, 9].

Digitalisation brings novel tools and applications that offer many new opportunities in the educational sector, especially concerning new assessment formats and exam content [10]. For this reason, technical aspects have to be revised and optimised for the implementation of electronic assessments in medical education [8]. One benefit of electronic assessment is faster correction of exams through automated evaluation. In addition, E-Assessment is generally less prone to errors and thus leads to higher exam quality [11]. Furthermore, the availability of numerous digital assessment tools changes the process of exam creation and allows new assessment formats to be applied. Modern technologies offer new possibilities to create items with innovative designs [12]. Owing to these technological possibilities, audio-visual media content can be added to support the text of exam questions. Moreover, multiple media types can be combined in the same exam question, leading to a more advanced question composition.

A key benefit of E-Assessment is the possibility of adding media content to questions. In medical education, the field of study in which the most electronic exams are performed [13], electronic exams have the advantage that high-resolution images of diseases or medical conditions can be shown without the loss of quality caused by printing on paper. Digital image marking is a further asset of digital assessment: this item type, called Hot Spot [12], can be used to mark a specific point in an image, such as a foreign object in a lung.

The use of digital tools on mobile devices allows a layered and dynamic item design; thus, the medical educator has become a designer of items in assessments [14]. The constructed environment (real or virtual) offers new opportunities to act [15]. It is therefore essential to examine the ongoing process of digitalisation in medical schools [16]. The use of computer-assisted systems and mobile devices in medical education will inevitably change how medical knowledge is assessed in the future [17]. In the age of digitalisation, more and more electronic assessments are performed in medical education in Germany [18]. Hence, this article focuses on the “digital” status quo of assessments in German medical schools and discusses exams in the context of their respective exam type and media content.

Methods

Data acquisition and ItemManagementSystem

The basis for our data evaluation is the assessment platform ItemManagementSystem (IMS) of the Umbrella Consortium for Assessment Networks (UCAN) [19, 20], which stores data on approximately 35,000 performed exams (n = 35,042) with 616,543 unique items from 70 institutions. For this cross-sectional descriptive study, we focused on data from 30 German medical faculties, which together stored 28,376 exams comprising 957,059 questions (several questions were used in more than one exam). After restricting the exams to the time frame from 2012 to 2018, 26,742 exams with 856,150 questions remained. This time frame was chosen because media elements have been used in electronic exams since 2012. Since we cannot verify whether IMS users provide correct information about their exams, we excluded exams with fewer than 5 questions as well as exams with fewer than 10 questions whose description contained the word “test”. This was the best available way to exclude most exams generated to test the system or new features of the IMS. After these exclusions, 23,008 exams containing 847,137 items were evaluated regarding their exam type (paper, computer or tablet) and media content (pictures, videos or audio).
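
The selection rules above can be expressed as a single predicate per exam. The following is a minimal sketch, assuming a hypothetical `Exam` record with a year, a free-text description and a question count (the actual IMS schema is not described here), and assuming that “the word test” means a case-insensitive whole-word match.

```python
import re
from dataclasses import dataclass

# Hypothetical record layout; the real IMS export format is not given in the article.
@dataclass
class Exam:
    year: int
    description: str
    n_questions: int

# Assumption: "test" is matched as a whole word, ignoring case.
TEST_WORD = re.compile(r"\btest\b", re.IGNORECASE)

def keep_exam(exam: Exam, start: int = 2012, end: int = 2018) -> bool:
    """Apply the time frame and the two exclusion rules described in the text."""
    if not (start <= exam.year <= end):
        return False  # outside the 2012-2018 time frame
    if exam.n_questions < 5:
        return False  # rule 1: fewer than 5 questions
    if TEST_WORD.search(exam.description) and exam.n_questions < 10:
        return False  # rule 2: "test" in description and fewer than 10 questions
    return True

exams = [
    Exam(2011, "Anatomy final", 40),            # outside time frame
    Exam(2014, "Anatomy final", 40),            # kept
    Exam(2015, "Pharmacology quiz", 4),         # fewer than 5 questions
    Exam(2016, "Test new video item", 8),       # "test" and fewer than 10
    Exam(2017, "Test-retest physiology", 12),   # "test" but 10 or more questions
]
kept = [e for e in exams if keep_exam(e)]
```

A whole-word match avoids discarding descriptions such as “contest”, at the cost of missing variants like “testing”; a plain substring check would behave the other way round.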

For the investigation of media use in exam questions, the 17,756 paper-based exams were excluded, because paper-based exams offer fewer possibilities for adding media than electronic exams. The remaining 5252 computer- and tablet-based exams with 205,988 questions were analysed further. For tablet-based exams, we distinguish between tablet-based written assessments (tEXAM) and Objective Structured Clinical Examinations (OSCE) evaluated by the examiners via tablet (tOSCE). tOSCE was introduced in 2013 and tEXAM in 2015.

First, the different media types per single question were quantified; second, the preferred question type for each media type was determined. We categorised the questions into six classes: TypeA, Pick-N, Kprime, Freetext, Long_Menu and OSCE:

TypeA questions are multiple-choice questions with one correct answer.

Pick-N questions are also multiple-choice questions, but with more than one correct answer.

Kprime questions are true/false questions in which each answer option must be marked as either true or false.

Freetext questions have a textbox where any desired answer can be given.

Long_Menu questions usually have a long answer list (e.g. 10,000 diagnoses) to choose from; they are therefore comparable to Freetext questions in that the examinee must already know the correct answer.

OSCE is an assessment format for testing clinical skills, first described by Harden et al. [21, 22], which can be evaluated in written or tablet-based form. In our case, tablet-based OSCE refers to the evaluation mode of the OSCE, which is performed via a tablet. OSCE questions are questions used in OSCE assessments and can be a mixture of any of the question types mentioned above.
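
The two analysis steps (quantifying the media per question, then relating media to question type) amount to assigning each question a media category and cross-tabulating it against the six question classes. The sketch below is hypothetical: the per-question tuple layout is an assumption, the category labels mirror those reported in the Results, and an extra “other” bucket is assumed for combinations the article does not report.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

# The six question classes used in the study.
QUESTION_TYPES = ("TypeA", "Pick-N", "Kprime", "Freetext", "Long_Menu", "OSCE")

def media_category(n_pictures: int, n_videos: int, n_audios: int) -> Optional[str]:
    """Map the media attached to one question to a reporting category."""
    if n_pictures == 0 and n_videos == 0 and n_audios == 0:
        return None  # text-only question: not part of the media analysis
    if n_audios == 0:
        if n_videos == 0:
            return "1 picture" if n_pictures == 1 else "2+ pictures"
        return "picture+video" if n_pictures > 0 else "video"
    if n_pictures == 0 and n_videos == 0:
        return "audio"
    return "other"  # hypothetical bucket for combinations not reported

# Hypothetical layout: (question type, #pictures, #videos, #audios).
Question = Tuple[str, int, int, int]

def cross_tabulate(questions: Iterable[Question]) -> Counter:
    """Count questions per (question type, media category) cell."""
    table: Counter = Counter()
    for qtype, pics, vids, auds in questions:
        if qtype not in QUESTION_TYPES:
            raise ValueError(f"unknown question type: {qtype}")
        category = media_category(pics, vids, auds)
        if category is not None:
            table[(qtype, category)] += 1
    return table

sample = [
    ("TypeA", 1, 0, 0),
    ("TypeA", 1, 0, 0),
    ("Long_Menu", 0, 1, 0),
    ("Long_Menu", 2, 1, 0),
    ("Freetext", 0, 0, 0),  # no media, ignored
]
table = cross_tabulate(sample)
```

The resulting counter holds the cells of a question type × media category contingency table, the input needed for a test of independence.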

Software and statistical analyses

The compiled data were evaluated with the software package Microsoft Excel (Microsoft Office version 2019, Microsoft Corporation, Redmond WA, USA), which was used to determine mean values and standard deviations. The dependency between question type and media type was analysed with Pearson’s chi-squared test in R (version 3.4.3, R Core Team).
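
Pearson’s chi-squared test compares the observed count in each cell of a contingency table with the count expected under independence, E = (row total × column total) / n, and sums (O − E)² / E over all cells; the degrees of freedom are (r − 1)(c − 1). The sketch below computes the statistic by hand purely to illustrate the formula; the study itself used R, and the counts here are hypothetical, not study data.

```python
from typing import Sequence, Tuple

def pearson_chi2(table: Sequence[Sequence[int]]) -> Tuple[float, int]:
    """Pearson's chi-squared statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            if expected == 0:
                raise ValueError("drop empty rows/columns before testing")
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof

# Hypothetical 2 x 3 counts (question type x media category), not study data.
observed = [
    [20, 10, 10],
    [10, 20, 30],
]
stat, dof = pearson_chi2(observed)
print(f"X-squared = {stat:.4f}, df = {dof}")  # X-squared = 13.1944, df = 2
```

The p-value follows from the upper tail of the chi-squared distribution, e.g. `pchisq(stat, df, lower.tail = FALSE)` in R or `scipy.stats.chi2.sf(stat, df)` in Python; R’s `chisq.test` does this in one call (it applies a continuity correction only to 2 × 2 tables). A reported df of 15 corresponds, for instance, to a 6 × 4 table, although the article does not state exactly which categories entered the test.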

Results

Different exam types and tendencies in electronic assessment

Figure 1 shows the number of performed exams in the three exam types. Paper-based exams were used frequently; their number increased rapidly from 2012 to 2015 and then remained stable at around 3000 exams per year. The first computer-based exams were performed in 2010 (not shown in the graph), and, as with paper-based exams, their number increased every year. A similar trend can be observed for tablet-based assessments, an exam type that was introduced in 2013 and proved quite popular, with a higher number of exams performed in 2018 than other electronic assessment types.

Item design and use of items containing media

Figure 2 shows the average percentage of exams containing media relative to all exams of the respective exam type. While 33% of all paper-based exams contain a media element, 45% of computer-based exams and 20% of tablet-based assessments have media added. Because OSCEs assess the practical clinical skills of medical examinees, it is probably not surprising that only a small share of these exams (14.5%) contain media. In contrast, on average 66% of tEXAM assessments contain media, twice the proportion of paper-based exams.

Regarding the media types used in electronic exams, it is not surprising that pictures remain the main media type used in combination with text (Fig. 3). In addition, the number of exams with picture content increases every year and will most likely continue to increase. The second most used media type is video, which was first added to exams in 2014. Compared with exams containing pictures, the number of exams with videos seems quite stable at approximately 30–40 exams per year (Fig. 3). The last media type used is audio attached to questions. This media type has only been used for three years, and the numbers are still low, with ten exams in 2018, but an upward tendency can be observed (Fig. 3).

Allocation of media during exam question creation

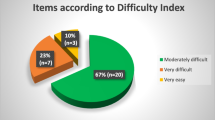

To analyse the media types used in E-Assessment, we evaluated the 5252 electronic exams, of which 2065 contained pictures, videos or audio. These exams contained 12,214 questions with media, which were assessed individually regarding their media types. Consistent with the data above, the evaluation of questions showed that pictures are indeed the main media type used together with the question text (Fig. 4). While 11,095 questions (90.8%) contained one picture, 1119 questions had other media types or combinations. Of these 1119 questions, 309 (2.5% of all 12,214 questions) contained two pictures, and 50 (0.4%) had three or more pictures; a few questions even had five pictures attached. Apart from pictures, 335 questions (2.7%) contained videos and 24 questions (0.2%) contained audio. Furthermore, 401 questions (3.3%) had both picture and video additions (Fig. 4), a feature only possible in digital assessment.
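
The reported shares can be reproduced from the question counts given above; all counts in this short check come from the text, and each percentage is taken relative to the 12,214 questions with media.

```python
# Question counts per media category, as reported in the text.
counts = {
    "one picture": 11095,
    "two pictures": 309,
    "three or more pictures": 50,
    "video": 335,
    "audio": 24,
    "picture and video": 401,
}

total = sum(counts.values())            # all questions with media
other = total - counts["one picture"]   # questions with other media types
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
multiple = round(
    100 * (counts["two pictures"] + counts["three or more pictures"]) / total, 1
)

print(total, other)  # 12214 1119
for name, pct in shares.items():
    print(f"{name}: {pct}%")
print(f"more than one picture: {multiple}%")
```

Combining the two multi-picture categories gives the 2.9% quoted in the abstract.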

We investigated whether a particular media type is favoured for a particular question type. Our findings show that 54% of the questions containing one picture are single-answer multiple-choice questions (TypeA) and 31% belong to the question type Long_Menu (Fig. 5). The question types Pick-N, OSCE, Kprime and Freetext each account for less than 5%. A similar result was observed for questions with two or more pictures, although the shares of Long_Menu and OSCE questions increased slightly to 37 and 9%, respectively. Surprisingly, questions containing videos show a completely different pattern. Single-answer multiple-choice questions cover only 11% of all questions with video, whereas most questions with this media type are Long_Menu questions (66%). OSCE questions account for 18% of questions containing video. Finally, we analysed the second most common media category, the combination of picture and video, and found that it occurs mainly in Long_Menu questions. However, this combination is used by only two of the evaluated institutions. Pearson’s chi-squared test showed a dependence between question type and media type (X-squared = 1344.3, df = 15, p < 2.2e-16).

Discussion

The current study shows the digital trend towards electronic exams in German medical schools. According to our analysis, the number of electronic assessments, such as computer- and tablet-based exams, grew strongly until the end of 2018. Our data demonstrate the frequent use of pictures, which still represent the main media type in medical assessments, whereas other media types such as audio and video are rarely used in medical education in Germany. These media types may be more advantageous in other fields of study. In musicology, for example, excerpts of musical works could be played, and in foreign-language learning, recordings of native speakers could be used; such audio is already an element of learning management systems [23,24,25] and part of E-learning platforms for language learning [26, 27].

In general, good-quality exam questions are costly [28], and one drawback of video data is that producing it and reusing it in subsequent exams might be expensive. Assessment platforms shared by multiple users open new possibilities and may help to address this issue. In the course of digitalisation, the number of digital tools for medical assessment is increasing rapidly and numerous tools for the use of media are available. Videos can be captured and uploaded instantly, and can even be annotated during an exam. We therefore speculate that the use of various media in exam questions will become increasingly popular. Other drawbacks of using audio and video files include data protection and copyright issues, especially when patient data are to be used. Moreover, video and audio files are more prone to technical issues than pictures.

We did not find any nationwide studies investigating changes in media use after the implementation of digital exams. However, there is a worldwide trend towards digital assessment formats [29,30,31,32]. As early as 2004, the British government announced a very ambitious project to expand the use of E-Assessment [33]. They state that “E-assessment can take a number of forms, including automating administrative procedures; digitising paper-based systems, and online testing”, indicating that the digitalisation of medical assessments occurs on several levels apart from the exam delivery mode itself. Many studies report the transition from traditional pen-and-paper testing to electronic exams [32, 34,35,36,37]. This shift towards electronic exam delivery can be found worldwide and in several fields of study, which is in accordance with our finding that the number of electronic exams is continuously increasing.

Our study has some limitations. First, we only analysed data from one specific database, whose accuracy depends on the data entered by its users. Second, we only focused on medicine as a field of study and only considered German medical faculties. Third, our exclusion criteria may have excluded potentially relevant exams or questions. Moreover, the number of questions for some combinations of question type and media type might be rather low, particularly for less frequently used question types. Nonetheless, this study is the first attempt to compare and analyse the media types used in medical exam questions across different exam types.

Further research could analyse whether the use of media in exam questions is beneficial for the quality of the assessments and thus for the quality of medical education. For this purpose, statistical data such as the difficulty, discriminatory power and reliability of exam questions with and without media should be collected. An automatic upload of statistical values does not currently take place in the examined assessment platform, but is planned for the current year, so a statistical analysis of exam questions should become feasible in the future and is intended. Furthermore, it should be explored to what extent different medical competencies and knowledge can be tested depending on the chosen media and question type. Hurtubise and colleagues discussed the use of video clips linked to different competency domains [38]. In Germany, medical competencies are defined, among others, in the National Competence Based Catalogue of Learning Objectives for Undergraduate Medical Education (NKLM; Nationaler Kompetenzbasierter Lernzielkatalog in der Medizin), which serves as an orientation for medical faculties and has the character of a recommendation [39]. The professional roles of physicians are derived from the Canadian CanMEDS framework, which originally referred to competence in specialist medicine but has gained wide international acceptance and dissemination in medical training [40]. The model was transferred to the competence level of the NKLM. It would be conceivable to explore the use of media for the various areas of competence of the NKLM or the various CanMEDS roles, and to determine whether certain media types are used more frequently for certain areas of competence or roles.
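
The article does not specify how difficulty, discriminatory power and reliability would be computed. As a hedged illustration only, the sketch below uses common classical test theory definitions: difficulty as the share of examinees answering an item correctly, discrimination as the corrected item-total correlation, and reliability as Cronbach’s alpha. The 0/1 score matrix (rows are examinees, columns are items) is hypothetical.

```python
from statistics import mean, pvariance
from typing import List, Sequence

def difficulty(item_scores: Sequence[int]) -> float:
    """Difficulty index of a 0/1 item: share of examinees answering correctly."""
    return mean(item_scores)

def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; undefined if either variable is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)

def discrimination(matrix: List[List[int]], j: int) -> float:
    """Corrected item-total correlation: item j vs. the sum of the other items."""
    item = [row[j] for row in matrix]
    rest = [sum(row) - row[j] for row in matrix]
    return pearson_r(item, rest)

def cronbach_alpha(matrix: List[List[int]]) -> float:
    """Cronbach's alpha = k/(k-1) * (1 - sum of item variances / total variance)."""
    k = len(matrix[0])
    item_vars = [pvariance([row[j] for row in matrix]) for j in range(k)]
    total_var = pvariance([sum(row) for row in matrix])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical scores of 5 examinees on 3 items (1 = correct).
scores = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
    [1, 1, 1],
]
print([difficulty([row[j] for row in scores]) for j in range(3)])
print(round(discrimination(scores, 0), 3), round(cronbach_alpha(scores), 3))
```

Population variances are used for both the items and the total score; because alpha depends only on their ratio, sample variances would give the same result.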

Conclusion

In the age of digitalisation, a digital trend is occurring in higher education in Germany. In our study, we analysed how this digital change is affecting assessments in medical education. Interestingly, media such as pictures, videos and audio are added more frequently in electronic exams, and even combinations of text, picture and video are used to create exam questions. So far, the use of different media types in the same question is quite rare, but modern digital technologies, together with the collective exchange of items in a common item pool and a broad range of available assessment tools, will facilitate these innovative digital assessment formats in the future. In addition, our study demonstrates that some media types are used more often for specific question types than others. One could therefore speculate that specific media types are more suitable for some question types than for others.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- CanMEDS: Canadian Medical Education Directives for Specialists
- E-Assessment: Electronic Assessment
- E-learning platform: Electronic learning platform
- IMS: ItemManagementSystem
- NKLM: Nationaler Kompetenzbasierter Lernzielkatalog in der Medizin (National Competence Based Catalogue of Learning Objectives for Undergraduate Medical Education)
- OSCE: Objective Structured Clinical Examinations
- tEXAM: Tablet-based written exams
- tOSCE: Tablet-evaluated OSCE
- UCAN: Umbrella Consortium for Assessment Networks

References

Benke K, Benke G. Artificial intelligence and big data in public health. Int J Environ Res Public Health. 2018. https://doi.org/10.3390/ijerph15122796.

Arora VM. Harnessing the power of big data to improve graduate medical education: big idea or bust? Acad Med. 2018;93:833–4. https://doi.org/10.1097/ACM.0000000000002209.

Gopal G, Suter-Crazzolara C, Toldo L, Eberhardt W. Digital transformation in healthcare - architectures of present and future information technologies. Clin Chem Lab Med. 2019;57:328–35. https://doi.org/10.1515/cclm-2018-0658.

Mohapatra D, Mohapatra M, Chittoria R, Friji M, Kumar S. The scope of mobile devices in health care and medical education. Int J Adv Med Health Res. 2015;2:3. https://doi.org/10.4103/2349-4220.159113.

Dimond R, Bullock A, Lovatt J, Stacey M. Mobile learning devices in the workplace: 'as much a part of the junior doctors' kit as a stethoscope'? BMC Med Educ. 2016;16:207. https://doi.org/10.1186/s12909-016-0732-z.

Fan S, Radford J, Fabian D. A mixed-method research to investigate the adoption of mobile devices and Web2.0 technologies among medical students and educators. BMC Med Inform Decis Mak. 2016;16:43. https://doi.org/10.1186/s12911-016-0283-6.

Masters K, Ellaway RH, Topps D, Archibald D, Hogue RJ. Mobile technologies in medical education: AMEE guide no. 105. Med Teach. 2016;38:537–49. https://doi.org/10.3109/0142159X.2016.1141190.

Amin Z, Boulet JR, Cook DA, Ellaway R, Fahal A, Kneebone R, et al. Technology-enabled assessment of health professions education: consensus statement and recommendations from the Ottawa 2010 conference. Med Teach. 2011;33:364–9. https://doi.org/10.3109/0142159X.2011.565832.

Konttila J, Siira H, Kyngäs H, Lahtinen M, Elo S, Kääriäinen M, et al. Healthcare professionals' competence in digitalisation: a systematic review. J Clin Nurs. 2019;28:745–61. https://doi.org/10.1111/jocn.14710.

Kuhn S, Frankenhauser S, Tolks D. Digitale Lehr- und Lernangebote in der medizinischen Ausbildung: Schon am Ziel oder noch am Anfang? Bundesgesundheitsblatt Gesundheitsforschung Gesundheitsschutz. 2018;61:201–9. https://doi.org/10.1007/s00103-017-2673-z.

Denison A, Bate E, Thompson J. Tablet versus paper marking in assessment: feedback matters. Perspect Med Educ. 2016;5:108–13. https://doi.org/10.1007/s40037-016-0262-8.

Dennick R, Wilkinson S, Purcell N. Online eAssessment: AMEE guide no. 39. Med Teach. 2009;31:192–206.

Persike M, Friedrich J-D. Lernen mit digitalen Medien aus Studierendenperspektive. Sonderauswertung aus dem CHE Hochschulranking für die deutschen Hochschulen 2016. https://hochschulforumdigitalisierung.de/sites/default/files/dateien/HFD_AP_Nr_17_Lernen_mit_digitalen_Medien_aus_Studierendenperspektive.pdf. Accessed 19 Mar 2020.

Kress G, Selander S. Multimodal design, learning and cultures of recognition. Internet High Educ. 2012;15:265–8. https://doi.org/10.1016/j.iheduc.2011.12.003.

Withagen R, de Poel HJ, Araújo D, Pepping G-J. Affordances can invite behavior: reconsidering the relationship between affordances and agency. New Ideas Psychol. 2012;30:250–8. https://doi.org/10.1016/j.newideapsych.2011.12.003.

Wollersheim H-W, März M, Schminder J. Digitale Prüfungsformate. Zum Wandel von Prüfungskultur und Prüfungspraxis in modularisierten Studiengängen: digital examination formats - on the changes in the examination culture and examination practice in modular courses of studies. Zeitschrift für Pädagogik. 2011;57:363–74.

Haag M, Igel C, Fischer MR. Digital Teaching and Digital Medicine: A national initiative is needed. GMS J Med Educ. 2018;35:Doc43. https://doi.org/10.3205/zma001189.

Nikendei C, Weyrich P, Jünger J, Schrauth M. Medical education in Germany. Med Teach. 2009;31:591–600.

Hochlehnert A, Brass K, Möltner A, Schultz J-H, Norcini J, Tekian A, Jünger J. Good exams made easy: the item management system for multiple examination formats. BMC Med Educ. 2012;12:63. https://doi.org/10.1186/1472-6920-12-63.

Institute for Communication and Assessment Research. Umbrella Consortium for Assessment Networks. www.ucan-assess.org. Accessed 24 Jan 2020.

Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured examination. Br Med J. 1975;1:447–51. https://doi.org/10.1136/bmj.1.5955.447.

Harden RM. What is an OSCE? Med Teach. 1988;10:19–22. https://doi.org/10.3109/01421598809019321.

Giguruwa N, Hoang D, Pishv D. A multimedia integrated framework for learning management systems. In: Ghislandi P, editor. Developing an Online: INTECH Open Access Publisher; 2012. https://doi.org/10.5772/32396.

Scherl A, Dethleffsen K, Meyer M. Interactive knowledge networks for interdisciplinary course navigation within Moodle. Adv Physiol Educ. 2012;36:284–97. https://doi.org/10.1152/advan.00086.2012.

Landsiedler I, Pfandl-Buchegger I, Insam M. Lernen und Hören: Audio-vokales Training im Sprachunterricht. In: Schröttner B, Hofer C, editors. Looking at learning: Blicke auf das Lernen. Münster: Waxmann; 2011. p. 179–92.

Gonzalez-Vera P. The e-generation: the use of technology for foreign language learning. In: Pareja-Lora A, Calle-Martínez C, Rodríguez-Arancón P, editors. New perspectives on teaching and working with languages in the digital era. Dublin: Research-publishing.net; 2016. p. 51–61.

Lesson Nine GmbH. Lernen mit Babbel. https://about.babbel.com/de/. Accessed 19 Mar 2020.

Freeman A, Nicholls A, Ricketts C, Coombes L. Can we share questions? Performance of questions from different question banks in a single medical school. Med Teach. 2010;32:464–6. https://doi.org/10.3109/0142159X.2010.486056.

Bennett RE. Technology for Large-Scale Assessment. In: Peterson P, Baker E, McGaw B, editors. International encyclopedia of education. 3rd ed. Oxford: Elsevier; 2010. p. 48–55. https://doi.org/10.1016/B978-0-08-044894-7.00701-6.

Csapó B, Ainley J, Bennett RE, Latour T, Law N. Technological issues for computer-based assessment. In: Griffin P, Care E, McGaw B, editors. Assessment and teaching of 21st century skills. Dordrecht: Springer; 2012. p. 143–230. https://doi.org/10.1007/978-94-007-2324-5_4.

Redecker C, Johannessen Ø. Changing assessment - towards a new assessment paradigm using ICT. Eur J Educ. 2013;48:79–96. https://doi.org/10.1111/ejed.12018.

Björnsson J, Scheuermann F. The transition to computer-based assessment: new approaches to skills assessment and implications for large-scale testing. Luxembourg: OPOCE; 2009.

Ridgway J, McCusker S, Pead D. Literature Review of E-assessment. 2004. hal-00190440. https://telearn.archives-ouvertes.fr/hal-00190440/document. Accessed 19 Mar 2020.

Washburn S, Herman J, Stewart R. Evaluation of performance and perceptions of electronic vs. paper multiple-choice exams. Adv Physiol Educ. 2017;41:548–55. https://doi.org/10.1152/advan.00138.2016.

Hochlehnert A, Schultz J-H, Möltner A, Tımbıl S, Brass K, Jünger J. Electronic acquisition of OSCE performance using tablets. GMS Z Med Ausbild. 2015;32:Doc41. https://doi.org/10.3205/zma000983.

Pawasauskas J, Matson KL, Youssef R. Transitioning to computer-based testing. Curr Pharmacy Teach Learn. 2014;6:289–97. https://doi.org/10.1016/j.cptl.2013.11.016.

Bloom TJ, Rich WD, Olson SM, Adams ML. Perceptions and performance using computer-based testing: one institution's experience. Curr Pharm Teach Learn. 2018;10:235–42. https://doi.org/10.1016/j.cptl.2017.10.015.

Hurtubise L, Martin B, Gilliland A, Mahan J. To play or not to play: leveraging video in medical education. J Grad Med Educ. 2013;5:13–8. https://doi.org/10.4300/JGME-05-01-32.

Fischer MR, Bauer D, Mohn K. Finally finished! National Competence Based Catalogues of Learning Objectives for Undergraduate Medical Education (NKLM) and Dental Education (NKLZ) ready for trial. GMS Z Med Ausbild. 2015;32:Doc35. https://doi.org/10.3205/zma000977.

Frank JR, Danoff D. The CanMEDS initiative: implementing an outcomes-based framework of physician competencies. Med Teach. 2007;29:642–7. https://doi.org/10.1080/01421590701746983.

Acknowledgements

We would like to thank the Center of Excellence Assessment in Medicine in Heidelberg, Germany, for its advice on test statistical analysis.

Funding

None.

Author information


Contributions

SE and KB devised the main conceptual ideas. SE and AM contributed to the design and implementation of the research. SE processed the experimental data, performed the analysis and designed the Figures. SE and AM wrote the manuscript in consultation with AT and JN. SE and KB supervised the findings of this work. All authors provided critical feedback, helped shape the research and analysis and approved the final manuscript.

Authors’ information

Saskia Egarter, PhD, is Research Associate at the Institute for Communication and Assessment Research, Heidelberg, Germany.

Anna Mutschler, Degree in Educational Sciences, is Research Associate at the Institute for Communication and Assessment Research and the Center of Excellence Assessment in Medicine, University of Heidelberg, Heidelberg, Germany.

Ara Tekian, PhD, MHPE, is Professor, Department of Medical Education, and Associate Dean for the Office of International Education at the University of Illinois at Chicago College of Medicine, USA (https://orcid.org/0000-0002-9252-1588).

John Norcini is Research Professor in the Department of Psychiatry at SUNY Upstate Medical School and President Emeritus at FAIMER.

Konstantin Brass, Dipl.-Inform. Med., is Managing Director of the Institute for Communication and Assessment Research, Heidelberg, Germany.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Egarter, S., Mutschler, A., Tekian, A. et al. Medical assessment in the age of digitalisation. BMC Med Educ 20, 101 (2020). https://doi.org/10.1186/s12909-020-02014-7


DOI: https://doi.org/10.1186/s12909-020-02014-7