Abstract

Background

Summer student research programs (SSRPs) serve to generate student interest in research and a clinician-scientist career path. This study sought to understand the composition of existing medically-related Canadian SSRPs, describe the current selection, education and evaluation practices and highlight opportunities for improvement.

Methods

A cross-sectional survey study among English-language-based medically-related Canadian SSRPs for undergraduate and medical students was conducted. Programs were systematically identified through academic and/or institutional websites. The survey, administered between June–August 2016, collected information on program demographics, competition, selection, student experience, and program self-evaluation.

Results

Forty-six of 91 (50.5%) identified programs responded. These SSRPs collectively offered 1842 positions, with a mean of 3.76 applicants per placement. Most programs (78.3%, n = 36/46) required students to independently secure a research supervisor. A formal curriculum existed in 61.4% (n = 27/44) of programs. Few programs (5.9%, n = 2/34) offered an integrated clinical observership. Regarding evaluation, 11.4% (n = 5/44) of programs tracked subsequent research productivity and 27.5% (n = 11/40) conducted long-term impact assessments.

Conclusions

Canadian SSRPs are highly competitive, and responsibility for selection lies primarily with the individual research supervisor rather than a centralized committee. Most programs offered students opportunities to develop both research and communication skills. Presently, the majority of programs lack a sufficient evaluation component. These findings indicate that SSRPs may benefit from refined selection processes and more robust evaluation of their utility. To address this challenge, the authors describe a logic model that provides a set of core outcomes that can be applied as a framework to guide program evaluation of SSRPs.


Background

Clinician-scientists are uniquely qualified to integrate perspectives from their clinical experiences with scientific inquiry, generating new knowledge about health and disease through research and translating research findings into medical practice. However, despite the recognized value of integrating scientific discovery and clinical care, the decline in the relative number of new clinician-scientists is well documented [1,2,3,4,5,6,7,8]. A number of recent reports have highlighted strategies to address this phenomenon, including the critical need to integrate recruitment efforts and research training across various stages of education [8,9,10,11]. A study by Silberman et al. [12] also indicated that most medical trainees decide whether to pursue a scientific career before entering residency training. Hence, enhancing involvement in medically-related research during earlier educational stages (i.e. undergraduate and medical school) offers a valuable opportunity to increase the number of well-trained clinician-scientists. Medically-related summer student research programs (SSRPs) targeting undergraduate and medical students represent one such avenue to increase students’ interest in a clinician-scientist career path.

Previous research has shown that SSRPs stimulate or strengthen students’ interest and participation in research activities, encourage integration of research into career choices, and aid in research-related skills development [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25]. Many of the individual programs outlined in the literature have served to develop future clinician-scientists and increase researcher diversity by providing underrepresented students opportunities to become involved with research [15, 21, 23, 26,27,28]. To date, however, research regarding SSRPs has largely utilized self-reported data and described individual program successes within the United States (US) [15, 16, 19, 29, 30]. To our knowledge, there has been no previous comprehensive comparison of SSRPs across disciplines. Additionally, there remains a knowledge gap regarding the types of educational opportunities offered by such programs, the level of competition, and how programs measure impact and fulfillment of their implicit and explicit goals. This study, therefore, sought to understand the composition of existing medically-related Canadian SSRPs, describe the current selection, education and evaluation practices and highlight opportunities for improvement.

Methods

This cross-sectional survey-based study took place from June to August 2016. Ethical approval was obtained from the Women’s College Hospital Research Ethics Board and verbal consent to participate was received from all participants.

Program identification

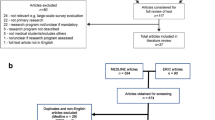

Eligible summer student research programs were systematically identified using search engines, online databases and medical school websites. First, Canadian SSRPs were identified by compiling a list of all 17 Canadian medical schools and their affiliated partners, including academic hospitals, universities, laboratories and institutions. Second, to account for national programs, Canadian healthcare organizations that offered summer research studentships were identified by exploring medical school webpages. Using this comprehensive list, an online search was conducted to identify eligible programs. SSRPs were included if they offered research placements within Canada, were conducted in English, were independent of school credit, and were specific to the summer months (May to August). SSRPs open to students from non-medical disciplines or programs, such as Master’s, PhD, pharmacy and dentistry students, were excluded. Programs were also excluded if they were unable to provide the research team with contact information for a designated research coordinator and/or director who could accurately answer the study questionnaire.

Data collection

The authors developed a survey instrument based on a literature review, knowledge of current research training programs, discussions with experts in the field, and their own research training experience. Two investigators (JU and SP) reviewed the questionnaire and provided feedback on clarity and completion time. Additionally, the instrument was pre-tested on a sample of included respondents (n = 5) to ensure ease of use and relevance, and their feedback was incorporated into the final instrument. The instrument consisted of 32 questions divided into 8 sections designed to obtain information regarding program characteristics (n = 6), competition level (n = 4), accessibility (n = 2), student selection (n = 2), funding (n = 2), student experience (n = 7), program self-evaluation (n = 8) and other (n = 1). The survey (Additional file 1: Appendix 1) was administered via telephone interview (91.3% [42/46]) or, in cases where the SSRP’s representative was unavailable for a phone interview, via written questionnaire (8.7% [4/46]). A call script was used to standardize survey administration. To maximize response rates, the survey design and distribution were based on Dillman’s tailored design method [31], including clear and easy-to-understand language, personalized communication, a short cover letter and up to two reminder emails. Additionally, the option of responding to a written questionnaire was provided to enhance participation.

Statistical analysis

Descriptive statistics were used to summarize survey responses; means and/or raw numbers and percentages are reported where appropriate. Differences between responders and non-responders with respect to geographic region and institution type were assessed using chi-square tests. Where applicable, data were stratified and analyzed according to program size to facilitate comparisons across programs with similar resources and infrastructure: small (≤20 seats), medium (20–50 seats), large (≥50 seats), and pan-Canadian programs, which are available to students independent of the province in which they live.
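The size strata above can be expressed as a simple classification rule. The Python sketch below is illustrative only: as written, the ranges share their endpoints (20 and 50 seats), so it assumes that a program with exactly 20 seats is small and one with exactly 50 seats is large, and that pan-Canadian programs form their own stratum regardless of size. The function name `size_category` and the example programs are hypothetical.

```python
from collections import Counter


def size_category(seats: int, pan_canadian: bool = False) -> str:
    """Assign a program to one of the size strata used in the analysis.

    Assumption: the published ranges (<=20, 20-50, >=50 seats) share their
    endpoints, so this sketch counts exactly 20 seats as small and exactly
    50 seats as large; medium is therefore 21-49 seats.
    """
    if pan_canadian:
        # Pan-Canadian programs are their own stratum, whatever their size.
        return "pan-Canadian"
    if seats <= 20:
        return "small"
    if seats < 50:
        return "medium"
    return "large"


# Hypothetical programs: (seats offered, open to students from any province?)
programs = [(12, False), (20, False), (35, False), (50, False), (120, False), (40, True)]
counts = Counter(size_category(seats, pan) for seats, pan in programs)
print(dict(counts))
```

Whatever boundary convention is chosen, stating it explicitly keeps the assignment of edge cases (programs with exactly 20 or 50 seats) reproducible.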

Results

Ninety-one programs met the inclusion criteria (see Additional file 1: Appendix 2 for the complete list and Fig. 1 for the national distribution of programs). Four types of institutions offered SSRPs: Canadian medical schools, their affiliated research institutes, affiliated universities, and academic hospitals together with their associated research institutes. In many cases, the same institution offered several different summer research programs. A comparison of the types of institutions offering SSRPs across Canadian provinces is provided in Additional file 1: Appendix 3. Overall, 26 SSRPs were offered by Canadian medical schools, 12 by affiliated research institutes, 21 by universities and 19 by academic hospitals and their associated research institutes. Forty-six of the 91 (50.5%) identified programs participated in the study. Geographically, response rates were 34.5% (10/29) for western Canada, 57.5% (23/40) for central Canada, 66.7% (6/9) for eastern Canada and 53.8% (7/13) for pan-Canadian programs. By type of institution, response rates were 53.8% (14/26) for Canadian medical schools, 42.9% (9/21) for affiliated universities, 41.7% (5/12) for affiliated research institutes, and 57.9% (11/19) for academic hospitals and their associated research institutes. There were no differences between responders and non-responders with respect to geographic region or institution type (p > 0.05). Question completion rates varied, owing either to missing data or to participants’ choice not to respond.
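The responder versus non-responder comparison can be reproduced from the counts above. The sketch below is a minimal pure-Python Pearson chi-square test for a k × 2 table (no continuity correction). It is not the authors’ code, and the paper does not say which software or test variant was used. Because the four institution-type totals sum to 78, it assumes the 13 pan-Canadian programs were left out of that comparison. Several expected counts for eastern Canada fall below 5, where the chi-square approximation is less reliable and an exact test may be preferable. The function names are hypothetical.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df."""
    y = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-y) * sum_{i=0}^{df/2 - 1} y**i / i!
        return math.exp(-y) * sum(y**i / math.factorial(i) for i in range(df // 2))
    # Odd df: erfc(sqrt(y)) + exp(-y) * sum_{i=1}^{(df-1)/2} y**(i - 1/2) / Gamma(i + 1/2)
    tail = sum(y ** (i - 0.5) / math.gamma(i + 0.5) for i in range(1, (df - 1) // 2 + 1))
    return math.erfc(math.sqrt(y)) + math.exp(-y) * tail


def responder_chi_square(responded: list[int], totals: list[int]) -> tuple[float, int, float]:
    """Pearson chi-square (no continuity correction) for responders vs non-responders by group."""
    n = sum(totals)
    r = sum(responded)
    stat = 0.0
    for yes, total in zip(responded, totals):
        no = total - yes
        exp_yes = total * r / n  # expected responders under independence
        exp_no = total * (n - r) / n  # expected non-responders under independence
        stat += (yes - exp_yes) ** 2 / exp_yes + (no - exp_no) ** 2 / exp_no
    df = len(totals) - 1  # (groups - 1) * (2 - 1)
    return stat, df, chi2_sf(stat, df)


# Western, central, eastern Canada, pan-Canadian
region = responder_chi_square([10, 23, 6, 7], [29, 40, 9, 13])
# Medical schools, universities, research institutes, academic hospitals
institution = responder_chi_square([14, 9, 5, 11], [26, 21, 12, 19])
print("region:      chi2 = %.2f, df = %d, p = %.2f" % region)
print("institution: chi2 = %.2f, df = %d, p = %.2f" % institution)
```

With these counts the statistics are about 4.76 (region) and 1.39 (institution type), each on 3 degrees of freedom, giving p-values of roughly 0.19 and 0.71; both are consistent with the reported p > 0.05. In practice, a library routine such as SciPy’s `scipy.stats.chi2_contingency` would normally replace the hand-written tail function.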

Fig. 1 Heat map depicting the competition level of SSRPs across Canadian provinces in 2016, as represented by the mean applicants per placement ratio (APPR). Darker grey represents a higher competition level. APPR: applicants per placement ratio; SSRP: summer student research program. * Survey responses by province were as follows: British Columbia = 4, Alberta = 4, Saskatchewan = 0, Manitoba = 0, Ontario = 16, Quebec = 2, Nova Scotia/New Brunswick = 6, Newfoundland = 3, Pan-Canadian programs = 4

General program characteristics

General program characteristics of Canadian SSRPs are presented in Table 1. Overall, most of the programs surveyed (78.3% [36/46]) had been in existence for more than 5 years and offered both clinical (87.0% [40/46]) and basic (82.6% [38/46]) research opportunities. More than half of SSRPs (58.7% [27/46]) offered research positions at both the undergraduate and medical student level, and 23.9% of programs (11/46) were not restricted to students from a specific university. Fewer than half of programs (34.8% [16/46]) had a formal screening process for supervisor selection. The length of summer student employment varied across Canadian SSRPs, with 12-week (50.0% [23/46]) and 16-week (41.3% [19/46]) placements being most common. Funding came from a variety of sources, including the host institution, principal investigator grants, private donations, government support and independent student funding. The research disciplines offered by the programs are presented in Additional file 1: Appendix 4; medicine was the most common, and bioinformatics, biomedical engineering and physics were the least represented.

Competition, accessibility and student selection

The 46 programs collectively offered 1842 positions. Competitiveness of entry varied by program size and geographic location, as presented in Fig. 1. Competition level is reported as the applicants per placement ratio (APPR). Small (94.1% [16/17 responded]), medium (63.6% [7/11 responded]) and large programs (72.7% [8/11 responded]) reported competition levels of 4.27, 4.46 and 3.06 APPR, respectively. Pan-Canadian programs (57.1% [4/7 responded]) reported a competition level of 1.93 APPR. Amongst respondents (76.1% [35/46]), the mean APPR was 3.76:1. Half of the programs (50.0% [23/46]) reported the proportions of female applicants and of accepted female students, which were 53.7% and 53.0%, respectively. Fewer than one in ten SSRPs (6.5% [3/46]) reported giving special consideration and/or reserving spots for underrepresented minorities during the interview and/or hiring process.
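The overall mean of 3.76:1 is consistent with the stratum results. Assuming it is the unweighted mean of the 35 per-program APPRs (our reading; the paper does not state the formula), it equals the average of the four stratum means weighted by the number of programs reporting in each, as the Python sketch below shows. The stratum means are themselves rounded, so the match is approximate. The names `strata` and `pooled_mean` are hypothetical.

```python
# Stratum-level results reported above: (programs reporting an APPR, mean APPR)
strata = {
    "small": (16, 4.27),
    "medium": (7, 4.46),
    "large": (8, 3.06),
    "pan-Canadian": (4, 1.93),
}


def pooled_mean(groups: dict[str, tuple[int, float]]) -> tuple[int, float]:
    """Recover the overall mean of per-program values from group sizes and group means."""
    total_n = sum(n for n, _ in groups.values())
    weighted_sum = sum(n * mean for n, mean in groups.values())
    return total_n, weighted_sum / total_n


n_programs, mean_appr = pooled_mean(strata)
print(f"{n_programs} programs, mean APPR {mean_appr:.2f}:1")  # 35 programs, mean APPR 3.76:1

# Hypothetical two-program illustration of why the averaging choice matters
applicants = [60, 200]
positions = [10, 100]
mean_of_ratios = sum(a / p for a, p in zip(applicants, positions)) / len(positions)  # 4.0
ratio_of_sums = sum(applicants) / sum(positions)  # about 2.36: the larger program dominates
```

A mean of per-program ratios gives each program equal weight; dividing total applicants by total positions would instead weight large programs more heavily, as the hypothetical two-program example shows. Because per-program applicant counts are not reported, the latter figure cannot be computed from the published data.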

The majority of programs reported their application requirements (93.5% [43/46]): 41.9% (18/43) required a minimum GPA cut-off and 58.1% (25/43) required a reference letter. Fewer than half of SSRPs (44.2% [19/43]) requested a letter of interest describing the applicant’s interest in a research-oriented career; smaller proportions requested a letter describing interest in a career in medicine (4.7% [2/43]) or in both (16.3% [7/43]). Most programs (81.8% [36/44]) required students to independently find a principal investigator (PI) to supervise their research experience, while only 18.2% (8/44) had a centralized oversight committee to screen students and assist with matching them to PIs.

Student experience

Table 2 outlines the learning opportunities available to students. Of the programs offering clinical research experience, chart-based research was the most common (73.0% [27/37]), while fewer programs (29.7% [11/37]) offered opportunities to conduct research within the operating room. Very few SSRPs based within an academic health centre (5.9% [2/34]) combined students’ research experience with a mandatory integrated clinical observership. As a supplementary educational component, the majority of programs (61.4% [27/44]) held educational research rounds and/or teaching sessions. The most common forms of presentation experience required by SSRPs were internal oral (43.5% [20/46]) and poster (41.3% [19/46]) presentations.

Program self-evaluation

Table 3 summarizes the use of program self-evaluation strategies, such as measuring students’ research productivity through resultant publications, collecting student and PI feedback, and tracking students’ future career trajectories. Very few programs (11.4% [5/44]) reported collecting resultant student publications centrally. Although many programs (65.2% [30/46]) elicited student feedback, fewer (32.6% [15/46]) reported collecting PI feedback. Additionally, most SSRPs (87.5% [35/40]) did not follow up with students after program completion.

Discussion

This national survey provides, to our knowledge, the first comprehensive comparison of medically-related SSRPs. Overall, competition for SSRP positions was strong across Canada. Ontario and Alberta had the highest levels of competition, while pan-Canadian programs had relatively low competition. The most common hiring approach required students to independently secure support from a research supervisor before applying to a program. Regarding supplementary educational opportunities, the majority of programs offered educational seminars and opportunities for students to enhance their oral presentation skills. Very few programs situated within an academic health centre had an integrated clinical observership as a mandatory component, and the majority of students performing clinical research did not have direct patient contact. Finally, most programs did not track resultant student publications, and few followed students after completion of their summer experience. Most performance review and post-program student follow-up were left to the discretion of the individual PI.

The growing shortage of clinician-scientists makes early intervention and exposure to research a priority [9]. No previous study has comprehensively described the characteristics of existing SSRPs on a national scale. Agarwal et al. [13] reviewed SSRPs, but their study focused solely on national radiation oncology research programs in the US and examined opportunities only at the medical student level. The study surveyed five national programs and reported a few general characteristics, including program length and the type of research offered [13]. Most of their surveyed SSRPs were 6–10 weeks long, which is shorter on average than the 12- and 16-week placements most common in our sample. Solomon et al. [25], who examined SSRPs from two medical schools in the US, found that a positive summer research experience was associated with increased interest in a research career. Additionally, long-term follow-up data (minimum 8 years) revealed that alumni were more likely than their classmates to pursue an academic and/or research career [25]. Our study builds on this previous research by providing a more comprehensive comparison of programs on a national scale.

Our results highlight several areas for potential improvement in student selection, administration, curriculum and program evaluation. First, the observed trends suggest that selection for SSRP placements fell primarily to individual research supervisors rather than to a central committee. A non-centralized selection process may reduce student diversity through bias against specific groups of students and/or reliance on interpersonal connections; this is of particular concern because women and individuals from underrepresented groups represent a very small percentage of the clinician–scientist workforce [32, 33]. An oversight committee, such as the one developed by the Michigan State University research education program, which was associated with a diverse student body [18], may help to mitigate selection bias. However, an oversight committee has the potential drawbacks of increased cost and time to administer the program.

Second, we found that many programs offered opportunities for student growth through educational seminars and student presentations. A number of published program evaluations highlight the effectiveness of these supplementary opportunities for developing technical research skills and non-technical skills, such as effective written communication and public speaking [17, 19, 27]. Further supplementary opportunities may include clinical exposure as part of the summer research experience. Among programs affiliated with an academic health care centre, very few offered a formal integrated clinical observership as part of their curriculum. The benefits and implications of incorporating clinical observerships have not been formally evaluated. However, recommendations stemming from studies of clinician-scientist training programs have underscored the need for clinical-research integration [10, 34]. These recommendations assume that concurrent clinical and research exposure enables students to appreciate the importance of basic research, research application and the symbiotic relationship between research and the practice of medicine [10, 35, 36]. Such benefits have been reported by a few programs, including the Rural Summer Studentship Program at The University of Western Ontario [37]. A retrospective analysis of that program showed that clinical learning combined with a research component increased students’ knowledge about rural medicine and stimulated their interest in rural medicine both clinically and academically [37]. Similar evidence has been reported by individual programs in the US that used clinical observerships as a medium for students to better understand the relationship between research and the practice of medicine [27, 38].

Third, our findings suggest that improved self-evaluation strategies are required to help programs monitor their impact on students and the scientific community. Self-evaluation could also help track the achievement of a program’s implicit and explicit goals [39]. These goals may range from the fundamental, such as providing students an opportunity to practice research skills, to the specific, such as generating interest in becoming a palliative care researcher [27, 37]. Short-term impact surveys and longitudinal tracking surveys have been successfully implemented by National Cancer Institute-funded short-term cancer research training programs [40]. Our results suggest that most programs in Canada neither track resultant student publications nor follow up with students after program completion. Objective quantitative metrics of program success, such as publication rate, could help SSRPs that are applying for funding and/or support to improve or expand their programs [41]. Such metrics could also be used to evaluate the effectiveness of curricular changes. Student follow-up may also serve as a form of self-evaluation to identify the short- and long-term impacts of an SSRP on students’ research abilities, professional identity formation and future career trajectories. University alumni records have been shown to facilitate longer-term tracking of program graduates’ contact information, and automated literature searches facilitate identification of post-training publications [42, 43]. Social networking tools, such as Facebook and LinkedIn, have been reported to be ineffective for longitudinal tracking of program alumni’s career paths and professional achievements [42]. Newer social networking tools, such as ResearchGate©, and unique digital identifiers for research contributors, such as Open Researcher and Contributor IDs (ORCID), have not been explored and may serve as more effective platforms for tracking the longer-term research productivity of program alumni.

The lack of reported self-evaluation strategies may be attributable to the complexity of SSRPs, which differ in institutional context, quality of supervision, individual research projects and format of learning experiences. For this reason, traditional methods of program evaluation may not be feasible and/or accurate in measuring program impact. To address this challenge, we describe a logic model that provides a framework to guide program evaluation of SSRPs (Additional file 1: Appendix 5). A logic model is a systematic and visual way of presenting the relationships among the operational resources of a program (inputs), program activities, the immediate results of a program (outputs) and desired program accomplishments (outcomes) [44, 45]. Table 4 highlights both program-related outcomes and system-wide outcomes stemming from the model that can be targeted for evaluation [46]. Because SSRPs generally differ in their objectives and mission statements, we designed the model with flexibility in mind. Collection of self-evaluative data would allow future students and investigators to quantify and accurately assess the value of an individual SSRP and to compare outcomes across programs. Additionally, evaluation data could be used to better define the characteristics of effective SSRPs and, ultimately, to help optimize training and support for individuals who are poised to develop into clinician-scientists.
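To make the four logic-model components concrete, the sketch below represents a logic model as a small Python data structure and flattens its outcomes into an evaluation checklist. It is a hypothetical illustration, not a reproduction of the model in Additional file 1: Appendix 5 or of the outcomes in Table 4. The class name `LogicModel`, the method `evaluation_targets`, and all example entries are our own, loosely drawn from program features described in this paper (funding sources, teaching sessions, presentations, publications, career trajectories).

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Hypothetical container for the four logic-model components."""
    inputs: list[str] = field(default_factory=list)      # operational resources
    activities: list[str] = field(default_factory=list)  # what the program does
    outputs: list[str] = field(default_factory=list)     # immediate, countable results
    outcomes: dict[str, list[str]] = field(default_factory=dict)  # desired accomplishments, by level

    def evaluation_targets(self) -> list[str]:
        """Flatten the outcomes into a checklist, keeping the level as a prefix."""
        return [f"{level}: {item}" for level, items in self.outcomes.items() for item in items]


# Illustrative entries only, drawn loosely from features described in this paper
ssrp = LogicModel(
    inputs=["host institution funding", "PI grants", "research supervisors"],
    activities=["supervised research placement", "research rounds and teaching sessions",
                "oral and poster presentations"],
    outputs=["students placed", "presentations delivered", "student and PI feedback collected"],
    outcomes={
        "program": ["resultant student publications", "research and communication skills"],
        "system": ["alumni pursuing clinician-scientist careers"],
    },
)

for target in ssrp.evaluation_targets():
    print(target)
```

Keying outcomes by level mirrors the split between program-related and system-wide outcomes, so a program could adopt only the levels relevant to its own mission while keeping the same structure.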

Limitations

This study had several limitations. First, there is no centralized directory of Canadian SSRPs, so despite our efforts we may have missed some programs in our systematic search. Second, our overall response rate was 50.5%, with a lower response rate for western Canada (34.5%). Although this rate is typical for survey-based research [47], it leaves considerable room for non-response bias and may limit the generalizability of the results. We believe our sample is representative, as respondents came from diverse institutional types across Canada and there were no systematic differences between responders and non-responders with respect to geographic region or institution type. Third, we limited our search to English-language programs, thus excluding French-language programs, which are based predominantly in Quebec. In an effort to develop a feasible survey, we may not have captured the full breadth of available program data; follow-up semi-structured interviews would have been a useful method to capture such data. It is also difficult to interpret the combined reporting of summer programs for undergraduates and those for medical students. The two groups may have different motivations for participation; however, the need for program evaluation remains essential for both. The high competition level we described may be inflated by students applying to multiple SSRPs, which our survey could not discern. Finally, owing to the administrative and logistical complexities of SSRPs, it was sometimes difficult for program directors and/or coordinators to select survey responses that perfectly mirrored their unique processes and circumstances.

Conclusions

Our national survey of Canadian SSRPs provides an up-to-date picture of current practices. Overall, our findings suggested that SSRPs were highly competitive. The student selection process was primarily the responsibility of individual research supervisors; a task which may be better suited for a centralized oversight committee to decrease the administrative burdens placed on PIs and reduce the potential for bias and favouritism during the student selection process. While most programs provided students with supplementary educational opportunities, clinical exposure was rare. Very few programs have a robust formal self-evaluation plan to monitor fulfillment of their implicit and explicit goals. Programs should be encouraged to implement quantitative and qualitative self-evaluative strategies, such as tracking resultant student publications and long-term impact assessments. The logic model provided affords a framework of core outcomes that can be utilized to guide program evaluation of SSRPs and prioritize outcomes evaluation.

Abbreviations

- APPR: Applicants per placement ratio
- PI: Principal Investigator
- SSRPs: Summer Student Research Programs
- US: United States

References

Ley TJ, Rosenberg LE. The physician-scientist career pipeline in 2005: build it, and they will come. JAMA. 2005;294:1343–51.

Faxon DP. The chain of scientific discovery: the critical role of the physician-scientist. Circulation. 2002;105:1857–60.

Roberts SF, Fischhoff MA, Sakowski SA, Feldman EL. Perspective: transforming science into medicine: how clinician-scientists can build bridges across research’s “valley of death”. Acad Med. 2012;87:266–70.

Schafer AI. The vanishing physician-scientist? Transl Res. 2010;155:1–2.

Gordon R. The vanishing physician scientist: a critical review and analysis. Account Res. 2012;19:89–113.

Nathan DG. Careers in translational clinical research-historical perspectives, future challenges. JAMA. 2002;287:2424–7.

Wyngaarden JB. The clinical investigator as an endangered species. N Engl J Med. 1979;301:1254–9.

National Institutes of Health. Physician-Scientist Workforce Working Group. Physician-Scientist Workforce report. 2014. https://acd.od.nih.gov/documents/reports/PSW_Report_ACD_06042014.pdf. Accessed 21 Oct 2017.

Hall AK, Mills SL, Lund PK. Clinician-investigator training and the need to pilot new approaches to recruiting and retaining this workforce. Acad Med. 2017;92(10):1382–9.

Strong MJ, Busing N, Goosney DL, Harris KA, Horsley T, Kuzyk A, et al. The rising challenge of training physician-scientists: recommendations from a Canadian national consensus conference. Acad Med. 2018;93:172–8.

Yager J, Greden J, Abrams M, Riba M. The Institute of Medicine’s report on research training in psychiatry residency: strategies for reform--background, results, and follow up. Acad Psychiatry. 2004;28(4):267–74.

Silberman EK, Belitsky R, Bernstein CA, Cabaniss DL, Crisp-Han H, Dickstein LJ, et al. Recruiting researchers in psychiatry: the influence of residency vs. early motivation. Acad Psychiatry. 2012;36(2):85–90.

Agarwal A, DeNunzio NJ, Ahuja D, Hirsch AE. Beyond the standard curriculum: a review of available opportunities for medical students to prepare for a career in radiation oncology. Int J Radiat Oncol Biol Phys. 2014;88:39–44.

Agarwal N, Norrmen-Smith IO, Tomei KL, Prestigiacomo CJ, Gandhi CD. Improving medical student recruitment into neurological surgery: a single institution’s experience. World Neurosurg. 2013;80:745–50.

Alfred L, Beerman PR, Tahir Z, LaHousse SF, Russell P, Sadler GR. Increasing underrepresented scientists in cancer research: the UCSD CURE program. J Cancer Educ. 2011;26:223–7.

Allen JG, Weiss ES, Patel ND, Alejo DE, Fitton TP, Williams JA, et al. Inspiring medical students to pursue surgical careers: outcomes from our cardiothoracic surgery research program. Ann Thorac Surg. 2009;87:1816–9.

Cluver J, Book S, Brady K, Back S, Thornley N. Engaging medical students in research: reaching out to the next generation of physician-scientists. Acad Psychiatry. 2014;38:345–9.

Crockett ET. A research education program model to prepare a highly qualified workforce in biomedical and health-related research and increase diversity. BMC Med Educ. 2014;14:202.

Kashou A, Durairajanayagam D, Agarwal A. Insights into an award-winning summer internship program: the first six years. World J Mens Health. 2016;34:9–19.

Eagan MK Jr, Hurtado S, Chang MJ, Garcia GA, Herrera FA, Garibay JC. Making a difference in science education: the impact of undergraduate research programs. Am Educ Res J. 2013;50:683–713.

Fuchs J, Kouyate A, Kroboth L, McFarland W. Growing the pipeline of diverse HIV investigators: the impact of mentored research experiences to engage underrepresented minority students. AIDS Behav. 2016;20(Suppl 2):249–57.

Zier K, Stagnaro-Green A. A multifaceted program to encourage medical students’ research. Acad Med. 2001;76:743–7.

Harding CV, Akabas MH, Andersen OS. History and outcomes of 50 years of physician-scientist training in medical scientist training programs. Acad Med. 2017;92:1390–8.

de Leng WE, Stegers-Jager KM, Born MP, Frens MA, Themmen AP. Participation in a scientific pre-university program and medical students’ interest in an academic career. BMC Med Educ. 2017;17:150.

Solomon SS, Tom SC, Pichert J, Wasserman D, Powers AC. Impact of medical student research in the development of physician-scientists. J Investig Med. 2003;51:149–56.

Decker SJ, Grajo JR, Hazelton TR, Hoang KN, McDonald JS, Otero HJ, et al. Research challenges and opportunities for clinically oriented academic radiology departments. Acad Radiol. 2016;23:43–52.

Kolber BJ, Janjic JM, Pollock JA, Tidgewell KJ. Summer undergraduate research: a new pipeline for pain clinical practice and research. BMC Med Educ. 2016;16:135.

Simpson CF, Durso SC, Fried LP, Bailey T, Boyd CM, Burton J. The Johns Hopkins geriatric summer scholars program: a model to increase diversity in geriatric medicine. J Am Geriatr Soc. 2005;53:1607–12.

Durairajanayagam D, Kashou AH, Tatagari S, Vitale J, Cirenza C, Agarwal A. Cleveland Clinic’s summer research program in reproductive medicine: an inside look at the class of 2014. Med Educ Online. 2015;20(1):29517.

Mackenzie CJ, Elliot GR. Summer internships: 12 years’ experience with undergraduate medical students in summer employment in various areas of preventive medicine. Can Med Assoc J. 1965;92:740–6.

Dillman DA, Christian LM, Smyth JD. Internet, phone, mail, and mixed-mode surveys: the tailored design method. Hoboken: John Wiley & Sons, Inc; 2009.

Eliason J, Gunter B, Roskovensky L. Diversity resources and data snapshots. Washington: Association of American Medical Colleges; 2015. https://www.aamc.org/download/431540/data/may2015pp.pdf. Accessed 14 Jul 2017.

Jeffe DB, Andriole DA, Wathington HD, Tai RH. The emerging physician-scientist workforce: demographic, experiential, and attitudinal predictors of MD-PhD program enrollment. Acad Med. 2014;89:1398–407.

Daye D, Patel CB, Ahn J, Nguyen FT. Challenges and opportunities for reinvigorating the physician-scientist pipeline. J Clin Invest. 2015;125:883–7.

Kluijtmans M, de Haan E, Akkerman S, van Tartwijk J. Professional identity in clinician-scientists: brokers between care and science. Med Educ. 2017;51:645–55.

Rosenblum ND, Kluijtmans M, Ten Cate O. Professional identity formation and the clinician-scientist: a paradigm for a clinical career combining two distinct disciplines. Acad Med. 2016;91:1612–7.

Zorzi A, Rourke J, Kennard M, Peterson M, Miller K. Combined research and clinical learning make rural summer studentship program a successful model. Rural Remote Health. 2005;5:401.

Mason BS, Ross W, Ortega G, Chambers MC, Parks ML. Can a strategic pipeline initiative increase the number of women and underrepresented minorities in orthopaedic surgery? Clin Orthop Relat Res. 2016;474:1979–85.

White HB, Usher DC. Engaging novice researchers in the process and culture of science using a “pass-the-problem” case strategy. Biochem Mol Biol Educ. 2015;43:341–4.

Desmond RA, Padilla LA, Daniel CL, Prickett CT, Venkatesh R, Brooks CM, et al. Career outcomes of graduates of R25E short-term cancer research training programs. J Cancer Educ. 2016;31:93–100.

Godkin MA. A successful research assistantship program as reflected by publications and presentations. Fam Med. 1993;25:45–7.

Padilla LA, Venkatesh R, Daniel CL, Desmond RA, Brooks CM, Waterbor JW. An evaluation methodology for longitudinal studies of short-term cancer research training programs. J Cancer Educ. 2016;31:84–92.

Padilla LA, Desmond RA, Brooks CM, Waterbor JW. Automated literature searches for longitudinal tracking of cancer research training program graduates. J Cancer Educ. 2016. https://doi.org/10.1007/s13187-016-1120-4. [Epub ahead of print]. Accessed 14 Jul 2017.

Frye AW, Hemmer PA. Program evaluation models and related theories: AMEE guide no. 67. Med Teach. 2012;34:288–99.

W.K. Kellogg Foundation. Logic model development guide. Battle Creek (MI): W.K. Kellogg Foundation; c1998. Updated January, 2004. Accessed 14 Jul 2017.

Armstrong EG, Barsion SJ. Using an outcomes-logic-model approach to evaluate a faculty development program for medical educators. Acad Med. 2006;81:483–8.

Baruch Y, Holtom BC. Survey response rate levels and trends in organizational research. Hum Relat. 2008;61:1139–60.

Acknowledgements

The authors wish to thank Susan Webber, RN, of the Women’s College Research Institute for her administrative contribution to this manuscript. The authors also wish to thank Arielle A. Brickman, M.D. candidate, and Olivia R. Ghosh-Swaby, M.Sc. candidate, for their contributions to proofreading the manuscript. Lastly, the authors wish to thank Mona Frantzke, MLS, for the librarian support she provided during the literature review for the manuscript.

Funding

This study was supported by a grant from the Heart and Stroke Foundation of Canada (#G-15-0009034). Mr. Patel was supported by a Women’s College Research Institute Summer Research Studentship Award. Dr. Udell was supported in part by a Heart and Stroke Foundation of Canada National New Investigator/Ontario Clinician Scientist Award (Phase I), Ontario Ministry of Research and Innovation Early Researcher Award, Women’s College Research Institute and the Department of Medicine, Women’s College Hospital; Peter Munk Cardiac Centre, University Health Network; Department of Medicine and Heart and Stroke Richard Lewar Centre of Excellence in Cardiovascular Research, University of Toronto. Dr. Walsh holds a Career Development Award from the Canadian Child Health Clinician Scientist Program and an Early Researcher Award from the Ontario Ministry of Research and Innovation.

The study’s sponsors had no role in the design and conduct of this study, in the collection, analysis, and interpretation of the data, or in the preparation or approval of the manuscript for publication.

Availability of data and materials

Not applicable.

Author information


Contributions

SP was involved in the acquisition of data. All authors were involved in the conception and design of the survey. All authors were involved in the analysis and interpretation of data. All authors were involved in drafting the manuscript and have read and approved the final manuscript. All authors agree to be accountable for all aspects of the work.


Ethics declarations

Authors’ information

Sagar Patel is a medical student at the University of Toronto, Toronto, Canada.

Dr. Catharine M. Walsh, MD, MEd, PhD is a staff gastroenterologist in the Division of Gastroenterology, Hepatology and Nutrition, and a clinician-scientist in the Learning and Research Institutes at the Hospital for Sick Children. She is a cross-appointed scientist at the Wilson Centre and an Assistant Professor of Paediatrics, Faculty of Medicine, University of Toronto, Toronto, Canada.

Dr. Jacob A. Udell is a cardiologist and clinician-scientist at Women’s College Hospital and the Peter Munk Cardiac Centre, University Health Network and an Assistant Professor of Medicine, Faculty of Medicine, University of Toronto, Toronto, Canada.

Ethical approval and consent to participate

Ethical approval was obtained from the Research Ethics Board at Women’s College Hospital and verbal consent to participate was received from all participants.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Supplemental Digital Content (DOC 347 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Patel, S., Walsh, C.M. & Udell, J.A. Exploring medically-related Canadian summer student research programs: a National Cross-sectional Survey Study. BMC Med Educ 19, 140 (2019). https://doi.org/10.1186/s12909-019-1577-z


DOI: https://doi.org/10.1186/s12909-019-1577-z