Abstract

Objective

To construct and validate a model to predict responsible nerve roots in lumbar degenerative disease with diagnostic doubt (DD).

Methods

From January 2009 to January 2013, 163 patients with DD were assigned to the construction sample (n = 106) or the validation sample (n = 57) according to their time of hospital admission. Outcome was assessed according to the Japanese Orthopedic Association (JOA) recovery rate as excellent, good, fair, or poor. The first two results were considered an effective clinical outcome (ECO). Baseline patient and clinical characteristics were considered as secondary variables. A multivariate logistic regression model was constructed with ECO as the dependent variable and the other factors as explanatory variables. The odds ratios (ORs) of each risk factor were adjusted and transformed into a scoring system. The area under the curve (AUC) was calculated and validated in both internal and external samples. Moreover, the calibration plot and predictive ability of this scoring system were also tested for further validation.

Results

The proportion of patients with DD who achieved ECOs was around 76 % in both the construction and validation samples (76.4 and 75.5 %, respectively). Risk factors: higher preoperative visual analog pain scale (VAS) score (OR = 1.56, p < 0.01), stenosis levels of L4/5 or L5/S1 (OR = 1.44, p = 0.04), stenosis located in the neuroforamen (OR = 1.95, p = 0.01), neurological deficit (OR = 1.62, p = 0.01), and greater VAS improvement after selective nerve root block (SNRB) (OR = 3.42, p = 0.02). Validation: the internal area under the curve (AUC) was 0.85, and the external AUC was 0.72, with a good calibration plot of prediction accuracy. In addition, the predicted ECOs did not differ from the actual results (p = 0.532).

Conclusions

We have constructed and validated a predictive model for confirming responsible nerve roots in patients with DD. The associated risk factors were preoperative VAS score, stenosis levels of L4/5 or L5/S1, stenosis located in the neuroforamen, neurological deficit, and VAS improvement after SNRB. A tool such as this is beneficial in the preoperative counseling of patients, in shared surgical decision making, and ultimately in improving safety in spine surgery.

Introduction

Lumbar degenerative disease (LDD) often presents as multilevel degeneration and stenosis. It arises from compression or ischemia, or both, of the lumbosacral nerve roots as a consequence of osteoarthritic thickening of the articulating facet joints, infolding of the ligamentum flavum, and degenerative bulging of the intervertebral discs [1–3]. It is the main cause of chronic low back pain in older people and frequently leads to spine surgery among individuals older than 65 years [4, 5]. With an aging population, the number of people who suffer from this condition is expected to grow substantially, and this will have a significant effect on healthcare resources in the near future. Surgical decompression of the responsible compression sites remains a widely accepted therapy for LDD [6–10].

Interestingly, although most patients with LDD exhibit a typical pain pattern or present obvious degenerative changes on computed tomography or magnetic resonance imaging (MRI) scans, there remains a group of patients with LDD whose diagnosis is uncertain or whose compressive region is ambiguous. In other words, when the responsible nerve roots are unclear, or the pain source does not correspond to classical dermatomal patterns [11, 12], it is very difficult to select the decompression site and make a reasonable surgical plan for such patients with diagnostic doubt (DD). Moreover, to date, no studies have proposed a predictive method to determine the responsible nerve roots in patients with DD, although research on this topic is ongoing. Recently, an increasing amount of evidence has demonstrated that selective nerve root block (SNRB) may play a role in predicting the responsible compressed nerve roots [13–18]. However, LDD usually follows a complicated course involving multiple factors, such as stenosis levels [19], stenosis locations [20], neurological deficit [21], and preoperative Oswestry disability index (ODI) score [22], which makes it difficult to distinguish the responsible nerve roots.

Therefore, we planned to combine the relevant parameter of SNRB with risk factors screened from the baseline patient-related factors and clinical characteristics to establish a scoring system through a multivariable logistic model. The utility of this new predictive model was then examined in an external validation sample. Ultimately, we hope this new predictive model will help in deciding which segments, and how many, should be decompressed in such patients with DD.

Materials and methods

Research institution

The study was undertaken in the Departments of Orthopedics of two hospitals (Navy General Hospital, Beijing, China, and Gaozhou People’s Hospital, Guangdong, China).

Study design

We conducted a study evaluating whether baseline patient and clinical characteristics could distinguish the responsible nerve roots of LDD patients with DD. Briefly, primary outcome measures included the visual analog pain scale (VAS) score (0–10 points), ODI, the Japanese Orthopedic Association (JOA) score (0–29 points), the diagnostic test of SNRB, and imaging information.

The inclusion and exclusion criteria

Patients with DD were retrospectively and consecutively reviewed from January 2009 to January 2013. The inclusion criteria were as follows: (a) Patients were diagnosed with LDD. (b) Physical examination, radiography, MRI scans, and SNRB were all conducted for a definite diagnosis. (c) VAS score (0–10 points), ODI, and JOA score (0–29 points) were all evaluated and available. (d) The responsible nerve roots or pain source were difficult to distinguish, or the physical examination did not correspond to the imaging findings. (e) Patients had undergone laminectomy decompression and were followed clinically for a minimum of 24 months. The exclusion criteria were lumbar spinal stenosis caused by spondylolisthesis, tumor, deformity, osteoporosis, or infection.

Statistical methods

The quantitative variables were described by means and standard deviations and the qualitative variables by absolute and relative frequencies. All analyses were performed at a significance level of 5 %, and the associated confidence intervals (CIs) were estimated for each relevant parameter. All analyses were performed using IBM SPSS Statistics 19.0. The Mann–Whitney U test or Pearson χ2 test (according to the type of variable) was used to verify differences in patient baseline and clinical characteristics.

In the construction sample, a multivariate logistic regression model was fitted with outcome as the dependent variable and the other study variables as explanatory variables. The receiver operating characteristic (ROC) curve was calculated and the following points were determined [23, 24]: (1) optimum: the point that minimized $\sqrt{(1-\text{sensitivity})^{2}+(1-\text{specificity})^{2}}$; (2) discard: the point with a negative likelihood ratio (NLR) < 0.1, or with a left-tail probability < 5 % (a value usually taken as a small error in medical statistics); and (3) confirmation: the point with a positive likelihood ratio (PLR) > 10 or, if this did not exist, the point with a right-tail probability > 55 % (a value slightly greater than chance, 50 %). For each of these points, the sensitivity, specificity, PLR, and NLR were calculated. The following risk groups were defined: very low (< discard point), low (≥ discard point and < optimum point), medium (≥ optimum point and < confirmation point), and high (≥ confirmation point).
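The selection of the optimum point and the likelihood ratios described above can be illustrated with a minimal Python sketch. The function names and the three ROC points are hypothetical and serve only as an illustration; they are not data from this study, and the analyses reported here were performed in SPSS.

```python
import math

def likelihood_ratios(sensitivity, specificity):
    """Return (PLR, NLR) for a single cut-off.

    PLR = sensitivity / (1 - specificity)
    NLR = (1 - sensitivity) / specificity
    A zero denominator is reported as infinity.
    """
    plr = sensitivity / (1 - specificity) if specificity < 1 else math.inf
    nlr = (1 - sensitivity) / specificity if specificity > 0 else math.inf
    return plr, nlr

def distance_to_perfect(sensitivity, specificity):
    """Euclidean distance from the ROC point to the ideal corner (sens = spec = 1)."""
    return math.hypot(1 - sensitivity, 1 - specificity)

def optimum_cutoff(points):
    """Pick the (cutoff, sensitivity, specificity) triple closest to the ideal corner."""
    return min(points, key=lambda p: distance_to_perfect(p[1], p[2]))

# Hypothetical ROC points (cut-off, sensitivity, specificity); NOT study data.
roc_points = [(4, 0.95, 0.20), (8, 0.80, 0.70), (12, 0.40, 0.95)]

best = optimum_cutoff(roc_points)
plr, nlr = likelihood_ratios(best[1], best[2])
print(f"optimum cut-off = {best[0]}, PLR = {plr:.2f}, NLR = {nlr:.2f}")
```

The discard and confirmation points would be chosen from the same list by applying the NLR < 0.1 and PLR > 10 rules (or the tail-probability fallbacks) instead of the distance criterion.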

Ethical approval

The application for approval of the human research protocol was reviewed and approved by the Navy General Hospital Ethical Committee (NGHEC; Approval No. 2015–0107).

Results

To evaluate the JOA score at final follow-up (a minimum of 2 years), questionnaires were used to determine the percentage of patients with ECO or non-effective clinical outcome (NECO) relative to their initial questionnaire values. The clinical outcomes were divided into four types according to the JOA recovery rate, which was calculated by the Hirabayashi method [25]: (postoperative score − preoperative score)/(29 − preoperative score) × 100 %. Recovery rates were graded as follows: >75 %, excellent; 50–74 %, good; 25–49 %, fair; and <25 %, poor. The first two results were considered ECOs.
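As a worked illustration of the Hirabayashi recovery rate and the grading above, a minimal Python sketch is given here. The function names and the example scores are hypothetical. Because the text does not state how a rate of exactly 75 % or fractional rates between the listed bands are graded, the sketch assumes half-open bands (≥75, ≥50, ≥25); this is an assumption, not the authors' stated rule.

```python
def joa_recovery_rate(preoperative, postoperative, full_score=29):
    """Hirabayashi recovery rate in percent:
    (postoperative - preoperative) / (full_score - preoperative) * 100
    """
    if preoperative >= full_score:
        raise ValueError("preoperative score must be below the full score")
    return 100 * (postoperative - preoperative) / (full_score - preoperative)

def recovery_grade(rate):
    """Map a recovery rate (percent) to a grade.

    Assumption: bands are treated as >=75, >=50, >=25, otherwise poor.
    """
    if rate >= 75:
        return "excellent"
    if rate >= 50:
        return "good"
    if rate >= 25:
        return "fair"
    return "poor"

def is_effective_outcome(rate):
    """ECO is defined as an excellent or good result."""
    return recovery_grade(rate) in ("excellent", "good")

# Hypothetical patient: JOA score 9 before surgery, 21 at final follow-up.
rate = joa_recovery_rate(9, 21)
print(rate, recovery_grade(rate), is_effective_outcome(rate))
```

For this hypothetical patient the rate is (21 − 9)/(29 − 9) × 100 % = 60 %, which falls in the "good" band and therefore counts as an ECO.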

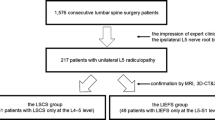

In total, of the 191 patients initially included in the study, 163 fulfilled the inclusion criteria; the remaining 28 (14.7 %) were excluded because they met at least one of the exclusion criteria. The 163 patients with DD were assigned to the construction sample (n = 106) or the validation sample (n = 57) according to their time of hospital admission.

The baseline patient-related factors of the ECO (excellent or good) and NECO (fair or poor) groups after a follow-up of no less than 2 years are compared in Table 1. The results showed no significant difference between the two groups (P = 0.08–0.87).

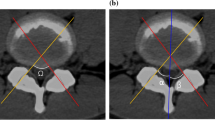

Additionally, a comparison of clinical characteristics between the ECOs and NECOs is presented in Table 2. The significant risk factors were stenosis level (L4/5, P = 0.02; L5/S1, P = 0.03), stenosis location (neuroforamen, P = 0.03), neurological deficit (reflexes, P = 0.03; sensory, P = 0.02; motor, P = 0.02), higher VAS score before operation (P = 0.01), and greater VAS improvement rate after SNRB (P = 0.01). These risk factors were largely consistent with previous reports, except for the higher VAS score before operation. This is a newly identified risk factor in LDD patients with DD that may reflect nerve root compression to a certain extent.

After all risk factors were screened, a multivariate logistic regression model was fitted in the construction sample with clinical outcome as the dependent variable and the 5 risk factors as explanatory variables (Table 3). The respective odds ratios (ORs) of the risk factors were as follows: higher VAS score before operation (OR = 1.56, 95 % CI: 1.08–2.65, P < 0.01), stenosis levels of L4/5 or L5/S1 (OR = 1.44, 95 % CI: 1.10–1.89, P = 0.04), stenosis located in the neuroforamen (OR = 1.95, 95 % CI: 1.32–3.51, P = 0.01), neurological deficit (OR = 1.62, 95 % CI: 1.02–2.79, P = 0.01), and VAS improvement after SNRB (OR = 3.42, 95 % CI: 1.27–7.64, P = 0.02).
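For readers less familiar with logistic regression, each OR corresponds to a regression coefficient through the standard relation β = ln(OR). The short Python sketch below applies this relation to the ORs reported above. It only illustrates the relative weight of each factor on the log-odds scale; the variable names are ours, the model intercept is not reported and is therefore not used, and the sketch does not reproduce the point values assigned in Table 4.

```python
import math

# Odds ratios reported for the construction sample (Table 3).
odds_ratios = {
    "Higher VAS score before operation": 1.56,
    "Stenosis level L4/5 or L5/S1": 1.44,
    "Stenosis located in the neuroforamen": 1.95,
    "Neurological deficit": 1.62,
    "VAS improvement after SNRB": 3.42,
}

# Standard logistic regression relation: beta = ln(OR).
coefficients = {name: math.log(value) for name, value in odds_ratios.items()}

# The factor with the largest coefficient contributes most to the log-odds.
strongest = max(coefficients, key=coefficients.get)

for name, beta in coefficients.items():
    print(f"{name}: OR = {odds_ratios[name]:.2f}, beta = {beta:.3f}")
print("Largest contribution:", strongest)
```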

Once the logistic regression model was constructed, this was transformed into a scoring system according to the OR of each risk factor (Table 4). The key points that defined the risk groups were as follows. 1) Discard: value, 5; sensitivity, 0.98 (95 % CI: 0.95–1.00); specificity, 0.09 (95 % CI: 0.05–0.14); PLR, 2.03 (95 % CI: 1.93–2.77); NLR, 0.43 (95 % CI: 0.24–0.65). 2) Optimum: value, 11; sensitivity, 0.86 (95 % CI: 0.82–0.93); specificity, 0.67 (95 % CI: 0.56–0.75); PLR, 2.46 (95 % CI: 2.13–2.85); NLR, 0.46 (95 % CI: 0.22–0.57). 3) Confirmation: value, 16; sensitivity, 0.13 (95 % CI: 0.06–0.21); specificity, 0.99 (95 % CI: 0.97–1.00); PLR, 3.39 (95 % CI: 2.64–3.93); NLR, 0.61 (95 % CI: 0.42–0.79).
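The mapping from a total score to a risk group can be sketched in a few lines of Python, using the discard, optimum, and confirmation values reported above (5, 11, and 16) and the group definitions from the statistical methods. The function name is hypothetical, and the per-item points of Table 4 are not reproduced, so the sketch assumes the total score has already been calculated.

```python
# Cut-off values reported for the scoring system.
DISCARD_POINT = 5
OPTIMUM_POINT = 11
CONFIRMATION_POINT = 16

def risk_group(total_score):
    """Classify a total score into the four risk groups:
    very low (< discard), low (>= discard and < optimum),
    medium (>= optimum and < confirmation), high (>= confirmation).
    """
    if total_score < DISCARD_POINT:
        return "very low"
    if total_score < OPTIMUM_POINT:
        return "low"
    if total_score < CONFIRMATION_POINT:
        return "medium"
    return "high"

# Hypothetical total scores, for illustration only.
for score in (3, 5, 11, 16):
    print(score, risk_group(score))
```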

After the scoring system was established, we first tested it in the construction sample as an internal validation; the area under the curve (AUC) was 0.85, which indicated a good model (Fig. 1). Moreover, the risk factors in the construction and validation samples were compared, and the results were similar in both samples (P = 0.085–0.889) (Table 3). ECOs were achieved by around 76 % of DD patients in the two samples (76.4 and 75.5 %, respectively). On this basis, the ROC curve for our scoring system in the validation sample was reasonable, with an AUC of 0.72 (Fig. 2). Additionally, to evaluate the calibration of this model, the data were also tested in the validation sample; the predicted probability showed a good linear relationship with the actual probability, indicating appropriate calibration (Fig. 3). Finally, we compared the predicted and actually observed outcomes of this scoring system, and the result showed no significant difference between them (P = 0.532, Fig. 4).
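To make the AUC concrete, the following minimal Python sketch computes it from its rank-based definition: the probability that a randomly chosen patient with an ECO has a higher score than a randomly chosen patient with an NECO, with ties counted as one half. The function name and the scores are hypothetical and are not data from this study. The sketch also assumes that a higher score indicates a higher likelihood of an ECO.

```python
def auc_from_scores(scores_eco, scores_neco):
    """AUC as the fraction of (ECO, NECO) pairs in which the ECO patient
    has the higher score; ties count as 0.5 (Mann-Whitney formulation)."""
    if not scores_eco or not scores_neco:
        raise ValueError("both groups must contain at least one score")
    wins = 0.0
    for eco in scores_eco:
        for neco in scores_neco:
            if eco > neco:
                wins += 1.0
            elif eco == neco:
                wins += 0.5
    return wins / (len(scores_eco) * len(scores_neco))

# Hypothetical total scores; NOT study data.
eco_scores = [14, 12, 9, 16]
neco_scores = [6, 12, 8]

print(auc_from_scores(eco_scores, neco_scores))
```

An AUC of 0.5 corresponds to chance-level discrimination and 1.0 to perfect separation of the two groups.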

Discussion

This study constructed and validated a predictive model to determine the responsible nerve roots in DD patients. The model was constructed by transforming complex factors into a simple scoring system to enable rapid calculation. To the best of our knowledge, this is the first such predictive model for clinical application. In this model, both the internal and external AUCs were > 0.7, and the calibration plot showed a good linear relationship between predicted and actual probabilities. In addition, the predicted and actual outcomes showed no significant difference. Hence, this model was applicable and valid. LDD often displays pathological changes in several segments without exact localizing signs on physical examination, because of its elusive symptoms and the lack of standards for imaging analysis [26]. When counseling a patient with DD on which segments, and how many, should be decompressed, a predictive model such as this is of paramount importance.

In addition, a predictive model like this is also beneficial when considering risk factors. In our study, the multivariate logistic regression model identified 5 risk factors: higher VAS score before operation, stenosis levels of L4/5 or L5/S1, stenosis located in the neuroforamen, neurological deficit, and VAS improvement after SNRB. These may also play a role in other lumbar spine diseases such as failed back syndrome. Moreover, this model is a useful adjunct in predicting the clinical outcome after decompression surgery [13, 27, 28]. In our analysis, the OR of VAS improvement after SNRB was 3.42, the largest in the model. Thus, pain relief after surgical decompression can be forecast by this test to a certain extent, given the evidence that pain originating from nerve root compression can be effectively treated by surgical decompression [29–31]. Nevertheless, our model also combines several other related risk factors to improve predictive accuracy, because SNRB alone is not a cost-effective method for identifying the symptomatic nerve roots [27].

Our model was built upon the JOA recovery rate with a minimum follow-up of 24 months. Although this cannot replace long-term follow-up results and ultimate outcomes, our conclusions are based on the curative effect, and this model is supported by comprehensive evidence of credible outcomes in clinical trials. At the same time, this model cannot by itself yield a definite conclusion. Nevertheless, when the score in our model is >16 points or <5 points, it provides a rational and objective basis for deciding whether a segment should be considered responsible. Additionally, this model could be used as a reference index in patients with DD for arriving at a diagnosis and for treatment purposes.

Since this model was based on the SNRB test, we would like to recommend the following guidelines: (1) Surgeons should be familiar with the anatomy so that they can accurately identify the nerve root to be tested. (2) It is still important to preliminarily identify the possible responsible segment by combining detailed physical examination and radiological results before SNRB. (3) Among the possible responsible segments, the most likely one should be tested first rather than testing each in turn. If symptoms are relieved by >50 %, it can be judged as the responsible segment; otherwise, testing should proceed in order starting from the lowest nerve root, because a block of an upper nerve root is prone to diffuse to the lower one and thus interfere with the result. (4) The needle should be introduced gradually under fluoroscopic guidance to avoid unnecessary nerve root injury. (5) The single dose should not be too large, generally 0.5–1 ml of 1 % lidocaine; otherwise it may also block other nerve roots.

As with any study, the present study has limitations. First, a great number of variables exist, and we may not have identified all the significant variables for predicting the result. Future studies of this model may consider a more detailed database that contains more input variables (such as electromyography and walking distance). Second, the number of patients was relatively small, and this may have prevented the detection of significant correlations between the two groups. Finally, many subjective grading scores were not assessed by the same surgeon for the same patient, which may have introduced some errors between the groups. We attempted to minimize these weaknesses by using strict inclusion and exclusion criteria; even so, these differences might be reduced but not abolished. Nevertheless, our model was validated in an external sample, which supports the reliability of its predictions.

Conclusions

In summary, this study constructed and validated a predictive model that can be used to determine the responsible segments or pain source in patients with DD. This tool is of substantial value in the preoperative counseling of patients, in shared surgical decision making, and ultimately in improving safety in spine surgery. In addition, as we progress into an era of quality metrics and performance assessment, a tool like this can be beneficial in risk adjustment. Future predictive models are recommended for further risk stratification and modification.

Abbreviations

- AUC: area under the curve
- BMI: body mass index
- CIs: confidence intervals
- DD: lumbar degenerative disease with diagnostic doubt
- ECO: effective clinical outcome
- JOA: Japanese Orthopedic Association
- LDD: lumbar degenerative disease
- NECO: non-effective clinical outcome
- NLR: negative likelihood ratio
- ODI: Oswestry disability index
- ORs: odds ratios
- PLR: positive likelihood ratio
- SD: standard deviation
- SLR: single leg raise
- SNRB: selective nerve root block
- VAS: visual analog pain scale

References

Yabuki S, Fukumori N, Takegami M, Onishi Y, Otani K, Sekiguchi M, Wakita T, Kikuchi S, Fukuhara S, Konno S. Prevalence of lumbar spinal stenosis, using the diagnostic support tool, and correlated factors in Japan: a population-based study. J Orthop Sci. 2013;18(6):893–900.

Genevay S, Atlas SJ. Lumbar spinal stenosis. Best Pract Res Clin Rheumatol. 2010;24(2):253–65.

Kalff R, Ewald C, Waschke A, Gobisch L, Hopf C. Degenerative lumbar spinal stenosis in older people: current treatment options. Dtsch Arztebl Int. 2013;110(37):613–23. quiz 624.

Chou R, Baisden J, Carragee EJ, Resnick DK, Shaffer WO, Loeser JD. Surgery for low back pain: a review of the evidence for an American Pain Society Clinical Practice Guideline. Spine. 2009;34(10):1094–109.

Deyo RA, Mirza SK, Martin BI, Kreuter W, Goodman DC, Jarvik JG. Trends, major medical complications, and charges associated with surgery for lumbar spinal stenosis in older adults. JAMA. 2010;303(13):1259–65.

Slatis P, Malmivaara A, Heliovaara M, Sainio P, Herno A, Kankare J, Seitsalo S, Tallroth K, Turunen V, Knekt P, et al. Long-term results of surgery for lumbar spinal stenosis: a randomised controlled trial. Eur Spine J. 2011;20(7):1174–81.

Kovacs FM, Urrutia G, Alarcon JD. Surgery versus conservative treatment for symptomatic lumbar spinal stenosis: a systematic review of randomized controlled trials. Spine. 2011;36(20):E1335–51.

Alimi M, Hofstetter CP, Pyo SY, Paulo D, Hartl R. Minimally invasive laminectomy for lumbar spinal stenosis in patients with and without preoperative spondylolisthesis: clinical outcome and reoperation rates. J Neurosurg Spine. 2015;22(4):339–52.

Nydegger A, Bruhlmann P, Steurer J. Lumbar spinal stenosis: diagnosis and conservative treatment. Praxis. 2013;102(7):391–8.

Theodoridis T, Kramer J, Kleinert H. Conservative treatment of lumbar spinal stenosis--a review. Z Orthop Unfall. 2008;146(1):75–9.

Jensen MC, Brant-Zawadzki MN, Obuchowski N, Modic MT, Malkasian D, Ross JS. Magnetic resonance imaging of the lumbar spine in people without back pain. N Engl J Med. 1994;331(2):69–73.

Germon T, Singleton W, Hobart J. Is NICE guidance for identifying lumbar nerve root compression misguided? Eur Spine J. 2014;23 Suppl 1:S20–4.

Williams AP, Germon T. The value of lumbar dorsal root ganglion blocks in predicting the response to decompressive surgery in patients with diagnostic doubt. Spine J. 2015;15(3 Suppl):S44–9.

Zhang GL, Zhen P, Chen KM, Zhao LX, Yang JL, Zhou JH, Xue QY. Application of selective nerve root blocks in limited operation of the lumbar spine. Zhongguo Gu Shang. 2014;27(7):601–4.

Shanthanna H. Ultrasound guided selective cervical nerve root block and superficial cervical plexus block for surgeries on the clavicle. Indian J Anaesth. 2014;58(3):327–9.

Ito K, Yukawa Y, Machino M, Inoue T, Ouchida J, Tomita K, Kato F. Treatment outcomes of intradiscal steroid injection/selective nerve root block for 161 patients with cervical radiculopathy. Nagoya J Med Sci. 2015;77(1–2):213–9.

Manchikanti L, Kaye AD. Comment on the Evaluation of the Effectiveness of Hyaluronidase in the Selective Nerve Root Block of Radiculopathy. Asian Spine J. 2015;9(6):995–6.

Desai A, Saha S, Sharma N, Huckerby L, Houghton R. The short- and medium-term effectiveness of CT-guided selective cervical nerve root injection for pain and disability. Skeletal Radiol. 2014;43(7):973–8.

Foulongne E, Derrey S, Ould Slimane M, Leveque S, Tobenas AC, Dujardin F, Freger P, Chassagne P, Proust F. Lumbar spinal stenosis: which predictive factors of favorable functional results after decompressive laminectomy? Neurochirurgie. 2013;59(1):23–9.

Kurd MF, Lurie JD, Zhao W, Tosteson T, Hilibrand AS, Rihn J, Albert TJ, Weinstein JN. Predictors of treatment choice in lumbar spinal stenosis: a spine patient outcomes research trial study. Spine. 2012;37(19):1702–7.

Kim HJ, Park JW, Kang KT, Chang BS, Lee CK, Kang SS, Yeom JS. Determination of the optimal cutoff values for pain sensitivity questionnaire scores and the Oswestry disability index for favorable surgical outcomes in subjects with lumbar spinal stenosis. Spine. 2015;40(20):E1110–6.

Kanayama M, Oha F, Hashimoto T. What types of degenerative lumbar pathologies respond to nerve root injection? A retrospective review of six hundred and forty one cases. Int Orthop. 2015;39(7):1379–82.

Palazon-Bru A, Martinez-Orozco MJ, Perseguer-Torregrosa Z, Sepehri A, Folgado-de la Rosa DM, Orozco-Beltran D, Carratala-Munuera C, Gil-Guillen VF. Construction and validation of a model to predict nonadherence to guidelines for prescribing antiplatelet therapy to hypertensive patients. Curr Med Res Opin. 2015;31(5):883–9.

Ramirez-Prado D, Palazon-Bru A, Folgado-de-la Rosa DM, Carbonell-Torregrosa MA, Martinez-Diaz AM, Gil-Guillen VF. Predictive models for all-cause and cardiovascular mortality in type 2 diabetic inpatients. A cohort study. Int J Clin Pract. 2015;69(4):474–84.

Hirabayashi K, Miyakawa J, Satomi K, Maruyama T, Wakano K. Operative results and postoperative progression of ossification among patients with ossification of cervical posterior longitudinal ligament. Spine. 1981;6(4):354–64.

Shabat S, Arinzon Z, Gepstein R, Folman Y. Long-term follow-up of revision decompressive lumbar spinal surgery in elderly patients. J Spinal Disord Tech. 2011;24(3):142–5.

Mallinson PI, Tapping CR, Bartlett R, Maliakal P. Factors that affect the efficacy of fluoroscopically guided selective spinal nerve root block in the treatment of radicular pain: a prospective cohort study. Can Assoc Radiol J. 2013;64(4):370–5.

Zhang C, Zhou HX, Feng SQ, Ning GZ, Wu Q, Li FY, Zheng YF, Wang P. The efficacy analysis of selective decompression of lumbar root canal of elderly lumbar spinal stenosis. Zhonghua Wai Ke Za Zhi. 2013;51(9):816–20.

Atlas SJ, Keller RB, Wu YA, Deyo RA, Singer DE. Long-term outcomes of surgical and nonsurgical management of sciatica secondary to a lumbar disc herniation: 10 year results from the Maine Lumbar Spine Study. Spine. 2005;30(8):927–35.

Weinstein JN, Lurie JD, Tosteson TD, Tosteson AN, Blood EA, Abdu WA, Herkowitz H, Hilibrand A, Albert T, Fischgrund J. Surgical versus nonoperative treatment for lumbar disc herniation: four-year results for the Spine Patient Outcomes Research Trial (SPORT). Spine. 2008;33(25):2789–800.

Lurie JD, Tosteson TD, Tosteson AN, Zhao W, Morgan TS, Abdu WA, Herkowitz H, Weinstein JN. Surgical versus nonoperative treatment for lumbar disc herniation: eight-year results for the spine patient outcomes research trial. Spine. 2014;39(1):3–16.

Additional information

Competing interests

The authors of this manuscript had no conflicts of interest to disclose.

Authors’ contributions

RDK designed the study protocol. LXC and WYH had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. WYH participated in the design of the study and performed the statistical analysis. LXC managed the literature searches, summarized previous related work, and wrote the first draft of the manuscript. LXC and RDK revised the manuscript for intellectual content and gave final approval. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Li, X., Bai, X., Wu, Y. et al. A valid model for predicting responsible nerve roots in lumbar degenerative disease with diagnostic doubt. BMC Musculoskelet Disord 17, 128 (2016). https://doi.org/10.1186/s12891-016-0973-3
