Abstract

Background

Process evaluation is increasingly recognized as an important component of effective implementation research, yet surprisingly little work has examined what constitutes best practice. Researchers use different methodologies to describe causal pathways and to understand barriers and facilitators to the implementation of interventions in diverse contexts and settings. We report on challenges and lessons learned from undertaking process evaluations of seven hypertension intervention trials funded through the Global Alliance for Chronic Diseases (GACD).

Methods

Preliminary data collected from the GACD hypertension teams in 2015 were used to inform a template for data collection. Case study themes included: (1) description of the intervention, (2) objectives of the process evaluation, (3) methods, including theoretical basis, (4) main findings of the study and the process evaluation, (5) implications for the project, policy and research practice, and (6) lessons for future process evaluations. The information was summarized and reported descriptively and narratively, and key lessons were identified.

Results

The case studies were from low- and middle-income countries and Indigenous communities in Canada. All were implementation research projects with an intervention arm. Six theoretical approaches were used, and most studies used mixed methods. Each process evaluation generated findings on whether interventions were implemented with fidelity, the extent of capacity building, contextual factors, and the extent to which relationships between researchers and communities affected intervention implementation. The most important lesson was that, although process evaluation is time-consuming, it enhances understanding of the factors affecting implementation of complex interventions. The research highlighted the need to initiate process evaluations early in the project to help guide the design of the intervention, and the importance of effective communication between the researchers responsible for trial implementation, process evaluation and outcome evaluation.

Conclusion

This research demonstrates the important role of process evaluation in understanding the implementation of complex interventions. Process evaluation can highlight a broad range of system requirements, such as new policies and capacity building, needed to support implementation. It is also crucial for understanding the contextual factors that may affect intervention implementation, which is important when considering whether the intervention can be translated to other contexts.


Background

A substantial challenge faced by implementation researchers is to understand if, why and how an intervention has worked in a real-world context, and to explain how an intervention that has demonstrated effectiveness in one context may or may not be effective in another context or setting [1]. Process evaluation provides a means by which researchers can explain the outcomes of complex interventions, which often have nonlinear implementation processes. Most trials are designed to evaluate whether an intervention is effective in relation to one or more easily measurable outcome indicators (e.g. blood pressure). Process evaluations provide additional information on the implementation process: how different structures and resources were used; the role, participation and reasoning of different actors [2, 3]; contextual factors; and how all of these might have affected the outcomes [4].

Several authors have argued that, in complex interventions, process measures used to examine the success of the implementation strategy must be separated from outcomes that assess the success of the intervention itself [5]. The recent Standards for Reporting Implementation Studies (StaRI) statement consolidates and supports this concept [6, 7]. Because the causal pathway of a research intervention is crucial to future policy and program decisions, it is helpful to understand how different research programs, in different settings, employ process evaluation, and what lessons emerge from these approaches.

Since 2009, the Global Alliance for Chronic Diseases (GACD) has facilitated the funding and global collaboration of 49 innovative research projects for the prevention and management of chronic non-communicable diseases [8], with funding from GACD member agencies (listed in the Notes). Process evaluation has increased in importance and is now an explicit criterion for project funding through this program. The objective of this paper is therefore to describe the different process evaluation approaches used in the first round of GACD projects related to hypertension, and to document the findings and lessons learned in various global settings.

Methods

Data collection

Preliminary data on how the projects planned their process evaluations were collected from the 15 hypertension research teams in the network in 2015 and were used to develop a data collection tool. This tool was then used to collate case study information from seven projects based on the following themes: (1) description of the intervention, (2) objectives of the process evaluation, (3) approach (including theoretical basis, main sources of data and analysis methods), (4) main findings of the study and the process evaluation, (5) implications for the project, policy and research practice, and (6) lessons for future process evaluations. The case study approach recognizes that projects were at different stages of intervention/evaluation. Each process evaluation was nested within an intervention study that was either completed or nearly completed.

Data analysis

Information relevant to each of the agreed themes was documented by FL and JW using a data extraction sheet (see Table 1). The information was summarized and reported descriptively and narratively in relation to the themes above. Overarching issues were identified by the working group that had been established to oversee the project. The working group comprised researchers who had all been involved in the different process evaluations and helped to draw out the main implications of the process evaluation with respect to project and policy, as well as lessons to inform future process evaluations.

Results

Countries and interventions studied

The seven process evaluations, all either completed or nearly completed, were conducted in low- and middle-income countries (LMICs), namely Fiji and Samoa, South Africa, Kenya, Peru, India, Sri Lanka and Tanzania, and in Indigenous communities in Canada (Table 1). The countries referred to in this manuscript were those in which projects (a) were funded through the GACD and (b) contained process evaluations at a sufficiently advanced stage to include in the analysis. These process evaluations were part of pragmatic trials of innovative interventions to prevent and manage hypertension in the areas of salt reduction, task redistribution, mHealth, community engagement and blood pressure control [8]. Although most studies took place in LMICs, the geographical, cultural and economic settings varied within and across countries (Table 1). Most of the interventions (five) were tested in randomized controlled trials, with one stepped-wedge trial and one pre-post study design. The studies lasted 3 to 5 years (Additional file 1).

Objectives and theoretical approaches to process evaluations

The specific objectives of each process evaluation were tailored to the broader objectives of each project; however, they were generally aimed at understanding the factors that affected the implementation process and the impact of this process on trial outcomes. These objectives were achieved through the collection of qualitative information about the context, mechanisms, feasibility, acceptability and sustainability of the interventions, and quantitative information about fidelity (the extent to which the intervention is implemented as intended), dose (how many units of each intervention are delivered) and reach (the extent of participation of the target population) [2]. Two process evaluations (in Peru and Sri Lanka) were limited to assessing barriers and facilitators that affected implementation; in Peru, the project had benefited from its own previous formative research [9]. In India, the process evaluation covered only the Accredited Social Health Activist (ASHA) training and not the entire intervention. For DREAM GLOBAL in Tanzania and Canada, the investigators have so far undertaken and reported formative research as part of their process evaluation and have published their process evaluation framework protocol [7]. This formative research aims to assess the major components of the intervention and how these components should vary between people, countries and cultures.
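The three quantitative process measures defined above lend themselves to simple site-level proportions. The Python sketch below is illustrative only: it assumes that fidelity can be operationalized as the share of protocol components delivered as intended, dose as a count of delivered units, and reach as the share of the eligible population that took part. The `SiteLog` record, its fields, the helper functions and the numbers are hypothetical and are not drawn from any of the seven GACD studies, each of which defined its own measures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteLog:
    """Hypothetical implementation record for one delivery site (illustrative fields)."""
    components_planned: int       # protocol components the site was meant to deliver
    components_as_intended: int   # components delivered as the protocol specified
    units_planned: int            # e.g. planned training sessions or patient contacts
    units_delivered: int          # sessions or contacts actually delivered
    target_population: int        # eligible people in the site's catchment
    participants: int             # eligible people who actually took part


def _ratio(numerator: int, denominator: int) -> float:
    """Proportion helper that rejects an empty denominator instead of dividing by zero."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return numerator / denominator


def fidelity(log: SiteLog) -> float:
    """Assumed operationalization: share of components implemented as intended (0 to 1)."""
    return _ratio(log.components_as_intended, log.components_planned)


def dose(log: SiteLog) -> int:
    """How many units of the intervention were delivered."""
    return log.units_delivered


def reach(log: SiteLog) -> float:
    """Assumed operationalization: share of the target population that participated (0 to 1)."""
    return _ratio(log.participants, log.target_population)


def summarize(name: str, log: SiteLog) -> str:
    """One-line site summary; dose is shown next to the number of planned units."""
    return (f"{name}: fidelity={fidelity(log):.2f}, "
            f"dose={dose(log)} of {log.units_planned} planned, "
            f"reach={reach(log):.2f}")


if __name__ == "__main__":
    # Invented numbers for two hypothetical sites, for illustration only.
    sites = {
        "site_A": SiteLog(10, 8, 12, 9, 400, 260),
        "site_B": SiteLog(10, 5, 12, 6, 500, 150),
    }
    for name, log in sites.items():
        print(summarize(name, log))
```

Run as a script, this prints `site_A: fidelity=0.80, dose=9 of 12 planned, reach=0.65` and `site_B: fidelity=0.50, dose=6 of 12 planned, reach=0.30`. Reporting the three measures separately for each site, rather than as a single composite score, keeps between-site variation in implementation visible.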

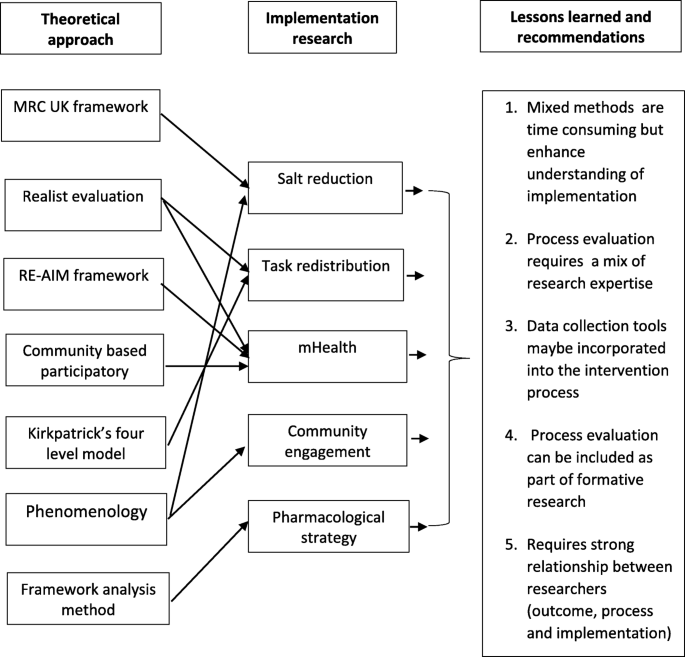

The theoretical approaches to process evaluation differed across the seven case studies and included the United Kingdom's Medical Research Council (MRC) framework for process evaluation (Fiji/Samoa) [2, 10], the realist evaluation approach (South Africa and Kenya) [11], community-based participatory evaluation theory (Tanzania and Canada) [7], the RE-AIM framework (Kenya) [12], Kirkpatrick's four-level evaluation model (India) [13], phenomenology (Peru) [14] and the Framework method of analysis (Sri Lanka) [15] (see Table 2).

Main sources of data and data collection methods

Most investigators used a mixed-methods approach to data collection and inquiry, integrating quantitative and qualitative data within a single study [18]. In all seven case studies, qualitative data were obtained using one or more of the following methods: interviews, observations, focus group discussions, implementer diaries, researcher notes and meeting reports. Quantitative data were obtained from surveys, process measure metrics, written tests and clinical examinations. In most cases, data were collected and analyzed by members of the study team who were experienced in analyzing these types of data and had been specifically employed to conduct the process evaluation. In two studies (Peru and Sri Lanka), phenomenological and Framework analysis approaches, respectively, were applied using qualitative data only, because these process evaluations focused solely on assessing barriers and facilitators.

Main findings of process evaluations

Although the studies reported in this paper had very distinct interventions and outcomes, the findings of their process evaluations were similar in several respects. The process evaluations of the salt reduction interventions in Fiji and Samoa showed that the absence of a significant reduction in salt intake in either country could be explained by the fact that the interventions were not implemented with full fidelity (i.e. in line with the study protocol). This was partly due to contextual factors, including political and management changes and a cyclone in Fiji that disrupted normal diets, and partly due to a lack of time for the intervention to take effect. However, the process evaluation also highlighted that the projects had increased research capacity among the government and research institutions that participated in the study. In addition, new government policies on salt were being integrated into the new Food and Nutrition security strategy in Fiji, while in Samoa government proposals to tax packaged foods high in sugar and salt were being considered.

In South Africa, although hypertension control did not improve, the lay health worker (LHW) intervention streamlined the functioning of clinics, partly by improving the appointment system for chronic patients. The process evaluation enabled investigators to conclude that shifting certain medically and socially oriented tasks from nurses to LHWs could relieve the burden on nurses, improve delivery of chronic care and improve the functioning of primary care clinics. However, the relationship between implementation process and outcomes at clinic level was non-linear. The variability in implementation and outcomes between sites was likely a consequence of differing patient loads and resources, the nature of relationships and clinic management [19, 20]. For instance, in clinics with high patient loads, LHWs were unable to complete all their tasks.

The process evaluation in Tanzania and Canada contributed to the formative stage of the intervention by identifying discrepancies between text messages created by researchers and those preferred by recipients, thereby enabling a change in the study design prior to commencement.

In Kenya, although analysis of the main trial outcomes had not been completed at the time of writing, process evaluation data showed low fidelity in implementing certain components of the intervention, sub-optimal retention of skills among community health workers (CHWs), and gaps in CHWs' recall of some training topics. Initial training was more effective in helping CHWs recognize complications, non-pharmacologic treatments and causes of hypertension than in helping them recognize the signs and symptoms of hypertension and the possible side effects of medication. In response to these findings, the project subsequently added training on these topics.

The salt substitute intervention in Peru was effective in reducing population levels of uncontrolled blood pressure (BP). The process evaluation enabled the investigators to attribute effective implementation of the intervention to (1) good relations and trust between researchers and the community, which facilitated the launch of the trial in the area and allowed the intervention to build on this established engagement platform, aiding uptake; and (2) targeting women as the critical primary recipients of the intervention, given their role as food preparers in the home.

The full process evaluation in India is still underway, but the evaluation of the training of Accredited Social Health Activists (ASHAs) demonstrated that the intervention was successfully implemented and improved the skills, knowledge and motivation of the ASHAs [21, 22].

In Sri Lanka, patients and providers liked the triple pill because of its ease of use (a single pill taken once a day) and the marked BP control it achieved. At the beginning of the trial, providers expressed apprehension about initiating treatment with the triple pill in treatment-naïve hypertensive patients. Over time, they became comfortable, as no major safety issues were reported and the BP lowering achieved was substantial. Providers expressed willingness to prescribe the triple pill, and patients were willing to use it, if it were made available after the trial.

These findings from the seven cases demonstrate the role of process evaluations in describing the implementation process despite variation in study outcomes.

Implications for the projects and policy

The process evaluations highlighted a series of implications for projects and policies. For salt reduction interventions, these included the need for adequate time between baseline and follow-up for the implementation to take effect; the need for strong leadership (diverse, experienced and representative) and clear roles for multi-sector advisory bodies; regular communication with stakeholders; and consideration of consumer acceptability and affordability of salt-reduced products. For the LHW intervention, a supportive and well-resourced clinic environment, strong management of primary health care facilities, and motivated staff who relate well to patients were identified as fundamental to successful task shifting. Several process evaluations highlighted the importance of continued training, communication and programmatic support. The process evaluation of the CHW study in Kenya showed the need for additional education about the signs and symptoms of hypertension and treatment side effects, as well as intensive, repeated training on hypertension management. Similarly, findings from the ASHA program in India emphasized the need for culturally appropriate training materials, delivered using interactive and innovative methods. They also showed the need to align project tasks and responsibilities with CHW incentives, without which morale would decline. Implementing a low-dose combination treatment strategy in Sri Lanka highlighted the need to educate and train prescribing physicians on the benefits of early combination therapy for hypertension, and to ensure the availability of the combined therapy.

Discussion

This paper provides an overview of the application and findings of process evaluations in different hypertension implementation research projects in various LMICs and Indigenous communities in Canada. The major objective of each process evaluation was to understand the implementation of interventions. The lessons from this paper relate to: 1) the feasibility and application of process evaluations; and 2) the relevance of process evaluation results for understanding implementation effects and their impact. Research teams used a variety of frameworks and methods, each deemed to be appropriate and feasible for the research team and context. Despite the variety of methodological approaches, with some differences and overlaps, most process evaluations shared similar goals of describing the processes, structures and resources of the respective studies.

Our study has demonstrated the need to consider process evaluation early in the research cycle so as to optimize design and data collection throughout implementation. When undertaken early in the project cycle, process evaluations can help to optimize implementation of the intervention, as was done in Kenya through repeated training for the health providers delivering the intervention. In many projects, such as the South African study, the relationship between intervention and outcome included pathways within the clinics that differed from those originally hypothesized. In addition, process evaluations allowed unexpected results to be documented. The process evaluation of the salt reduction programs in the Pacific Islands [23, 24] pointed to natural and political contexts slowing down implementation of the intervention, but also demonstrated how the project led to new mechanisms for intersectoral collaboration. In general, the process evaluations showed that full fidelity to the original implementation plan is often difficult to achieve, with resource constraints further affecting the implementation process. These findings are vital for explaining and understanding the context in which trial outcomes were (or were not) achieved.

Several of our findings align with those of other authors. In real-life implementation, the causal relationship between implementation and outcome is affected by the adaptability (or unpredictability) of actors and by a wide range of influencing elements [25], including geographical and community set-up. A mixed-methods approach deepens understanding by providing different perspectives and enabling validation and triangulation across multiple sources [2, 26]. Qualitative analysis enables exploration of the acceptability of an intervention, how it worked and why [27], while quantitative analysis is important for measuring elements of fidelity [27]. This implies the need for a comprehensive skill set within the research team.

The strength of this report is that it is based on a wide range of research projects and applications of process evaluation in different LMICs, while focusing on one chronic disease: hypertension. This provides broad insight into how process evaluation can be incorporated into studies and used across different interventions and settings. The detailed case studies compiled by the teams, coupled with the regular meetings of the overarching GACD working group, allowed experiences of process evaluation in different phases of the implementation process to be documented. The selection of cases, restricted to GACD-funded hypertension projects, was limited by the study set-up and the timing of documentation; other GACD-funded projects, for instance on diabetes, had not yet progressed far enough to contribute findings. The choice to include in-depth case study analyses necessitated a level of trust between the authors and the research teams, especially since most teams had not yet finalized their analyses or published their findings. Choosing not to include other GACD projects reduced the scope of projects that could contribute to the analyses, but we believe this approach facilitated more in-depth analyses and thereby enriched the findings of this study.

The implications of this study pertain to the discipline of implementation research and to engagement with implementers and decision-makers. All of the frameworks adopted yielded useful findings. The choice of framework and method should be guided by the key questions that need to be answered to understand the implementation process, and by the skills and preferred methodological approaches of the researchers.

Informative process evaluations require a diverse skill set. Project management data are required to assess the fidelity of implementation. Further analysis of observations and of interviews with people involved in the intervention is required to gain a field-based understanding of how the intervention evolved, the mechanisms triggering its effects, and actors' perceptions of the crucial elements or moments in that evolution. Thus, the evaluations must be interdisciplinary, combining techniques and methods from a range of fields including project management, anthropology, psychology and clinical sciences.

The findings of process evaluations are crucial for understanding the pathways between intervention and impact, so as to optimize implementation and impact and to inform the scalability of interventions. This requires planning from the project outset, and engagement with implementers and decision-makers throughout program implementation [1]. This is contrary to the classical set-up of most trials, in which deviation from the study protocol is not acceptable because it interferes with the evaluation of effectiveness. The choice about whether to involve implementers in the process evaluation depends on the design of the primary study. The aim of many implementation studies is to test effectiveness rather than efficacy, which requires more flexible study designs, such as an adaptive trial design, that enable implementation to be optimized throughout the course of the project. This requires ongoing dialogue between implementers and the researchers evaluating this process. In studies where such interference is deemed problematic, researchers can opt for a more distant relationship between the intervention and evaluation teams, as in the study of hypertension treatment in South Africa (case study 1.2).

The findings from most process evaluations demonstrate both the importance and the challenges of adapting initial research plans to accommodate the constraints of a (low-resource) context. Detailed discussions are required to understand the context and the expectations of local stakeholders. This necessitates formative research and the establishment of trusting relationships to shape mutual commitment to action between researchers and local communities, which has implications for research design. Many research projects experience delays in their formative and implementation phases. Only three process evaluations have so far been published [21, 23, 24]. This points to the need to reflect on how research cycles are planned and funded.

Lessons and recommendations for process evaluations

This study identified a range of lessons for process evaluation as part of implementation research in LMICs (Fig. 1). A common theme was that, while mixed-methods approaches can be time-consuming and generate a vast amount of data, they significantly enhance understanding of the implementation of complex interventions and generate a wealth of learning to inform future projects. For instance, the semi-structured interviews used in the Fiji and Samoa process evaluation produced qualitative data on the experiences of government institutions, the food industry and other stakeholders. This information helped to show that the lack of intervention effect on salt intake was likely at least partially attributable to the short intervention duration and to policy changes that had yet to take effect.

Incorporating process evaluation data collection tools into the intervention process from the outset was identified as crucial. Process evaluations cannot be conducted retrospectively, because investigators cannot go back and collect the required data. For instance, for the clinic-based LHW intervention in South Africa, it was important to observe and understand how nurses interacted with the intervention as it was being implemented. Thus, process evaluations should be fully embedded in the intervention protocol, or a separate process evaluation protocol should be developed alongside the intervention protocol. In Sri Lanka, collecting process evaluation data before the study outcome data were available helped in exploring implementation processes without unintentionally influencing investigator or patient behavior in the study.

Some investigators who began their process evaluation after the intervention had started, e.g. in the CHW project in Kenya, felt that it would have been helpful to start earlier in the study life cycle. Other investigators, who incorporated process evaluation into formative research and situation analyses, reported that this approach helped identify which specific process measures should be collected during the intervention. One study team deliberately did not collect process evaluation data until after the study was complete, so as not to affect investigator or patient behavior in the study itself. Therefore, multiple considerations should be taken into account when designing process evaluations.

Experiences from six of the projects support the MRC recommendation for strong relationships and consultation between the researchers responsible for the design and implementation of the trial, outcome evaluation and process evaluation [2]. However, although the teams reported positively on coming together to exchange experiences at different stages of the project, they felt that additional interim assessments of process throughout the project would have further strengthened implementation of the interventions. In some teams, discussion of preliminary process evaluation results by the broader project group, including local country teams, was an essential part of data synthesis and greatly enhanced the validity of the results by clarifying areas in which the researchers might have misunderstood the data.

The DREAM GLOBAL process evaluation demonstrated the need for formative research to inform mHealth projects for rural communities in Tanzania and Indigenous people in Canada, as well as the value of using a participatory research tool [28]. This tool helped to identify (a) key domains requiring ongoing dialogue between the community and the research team and (b) existing strengths and areas requiring further development for effective implementation. Applying this approach, the team found that key factors of the project, such as technology and task shifting, required study at the patient, provider, community, organization and health systems/setting levels for effective implementation [7].

Conclusion

The analysis of process evaluations across various NCD-related research projects has deepened knowledge of the different theoretical approaches to process evaluation and of the applications and effects of including process evaluations in implementation research, especially in LMICs. Our findings provide evidence that, although time-consuming, process evaluations in low-resource settings are feasible and crucial for understanding the extent to which interventions are implemented as planned, the contextual factors influencing implementation, and the critical resources needed to create change. It is therefore essential to allocate sufficient time and resources to process evaluation throughout the lifetime of implementation research projects.

Availability of data and materials

The datasets generated and/or analysed during the study are available from the corresponding author on reasonable request.

Notes

Ministry of Science, Technology and Productive Innovation (Argentina), National Health and Medical Research Council (Australia), São Paulo Research Foundation (Brazil), Canadian Institutes of Health Research, Chinese Academy of Medical Sciences, Research & Innovation DG (European Commission), Indian Council of Medical Research, Agency for Medical Research and Development (Japan), The National Institute of Medical Science and Nutrition Salvador Zubiran (Mexico), Health Research Council (New Zealand), South African Medical Research Council, Health Systems Research Institute (Thailand), UK Medical Research Council and US National Institutes of Health

Abbreviations

- ASHA: Accredited Social Health Activist
- CHW: Community health worker
- FGD: Focus group discussion
- GACD: Global Alliance for Chronic Diseases
- LHW: Lay health worker
- LMICs: Low- and middle-income countries
- MRC: Medical Research Council
- NCD: Non-communicable disease
- RE-AIM: Reach, efficacy, adoption, implementation, maintenance
- SMS: Short message service
- StaRI: Standards for Reporting Implementation Studies

References

Peters DH, Tran NT, Adam T. Implementation research in health: a practical guide. Geneva: Alliance HPSR, WHO; 2013. p. 69. ISBN 978 92 4 150621 2

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, Moore L, O’Cathain A, Tinati T, Wight D, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

Community Matters. Brief introduction to realist evaluation. 2004. Available From: http://www.communitymatters.com.au/gpage1.html. Accessed 16 Dec 2014

Oakley A, Strange V, Bonell C, Allen E, Stephenson J. Process evaluation in randomised controlled trials of complex interventions. BMJ. 2006;332(7538):413–6.

Rycroft-Malone J, Burton CR. Is it time for standards for reporting on research about implementation? Worldviews Evid-Based Nurs. 2011;8(4):189–90.

Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, Rycroft-Malone J, Meissner P, Murray E, Patel A, et al. Standards for reporting implementation studies (StaRI) statement. BMJ. 2017;356:i6795.

Maar MA, Yeates K, Perkins N, Boesch L, Hua-Stewart D, Liu P, Sleeth J, Tobe SW. A framework for the study of complex mHealth interventions in diverse cultural settings. JMIR Mhealth Uhealth. 2017;5(4):e47.

Vedanthan R, Bernabe-Ortiz A, Herasme OI, Joshi R, Lopez-Jaramillo P, Thrift AG, Webster J, Webster R, Yeates K, Gyamfi J, et al. Innovative approaches to hypertension control in low- and middle-income countries. Cardiol Clin. 2017;35(1):99–115.

Saavedra-Garcia L, Bernabe-Ortiz A, Gilman RH, Diez-Canseco F, Cárdenas MK, Sacksteder KA, Miranda JJ. Applying the triangle taste test to assess differences between low sodium salts and common salt: evidence from Peru. PLoS One. 2015;10(7):e0134700.

Medical Research Council United Kingdom. Developing and evaluating complex interventions: new guidance. 2009. Available from: www.mrc.ac.uk/complexinterventionsguidance. Accessed 20 Nov 2015

Better Evaluation. Realist Evaluation. 2014. Available from: https://www.betterevaluation.org/approach/realist_evaluation. Accessed 16 Dec 2014

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7.

Kirkpatrick DL, Kirkpatrick JD. Evaluating training programs: the four levels; 2006.

Gray D. Doing research in the real world - theoretical perspectives and research methodologies. London: Sage Publications Inc; 2018.

Gale NK, Heath G, Cameron E, Rashid S, Redwood S. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med Res Methodol. 2013;13(1):117.

Pawson R, Tilley N. Realistic Evaluation. London: Sage Publications; 1997.

Fletcher A, Jamal F, Moore G, Evans RE, Murphy S, Bonell C. Realist complex intervention science: applying realist principles across all phases of the Medical Research Council framework for developing and evaluating complex interventions. Evaluation (London). 2016;22(3):286–303.

Yu CH. Book review: Creswell, J., & Plano Clark, V. (2007). Designing and conducting mixed methods research. Thousand Oaks, CA: Sage. Organ Res Methods. 2009;12(4):801–4.

Thorogood M, Goudge J, Bertram M, Chirwa T, Eldridge S, Gomez-Olive FX, Limbani F, Musenge E, Myakayaka N, Tollman S, et al. The Nkateko health service trial to improve hypertension management in rural South Africa: study protocol for a randomised controlled trial. Trials. 2014;15:435.

Goudge J, Chirwa T, Eldridge S, Gómez-Olivé FXF, Kabudula C, Limbani F, Musenge E, Thorogood M. Can lay health workers support the management of hypertension? Findings of a cluster randomised trial in South Africa. BMJ Glob Health. 2018;3(1):e000577.

Abdel-All M, Thrift AG, Riddell M, Thankappan KRT, Mini GK, Chow CK, Maulik PK, Mahal A, Guggilla R, Kalyanram K, et al. Evaluation of a training program of hypertension for accredited social health activists (ASHA) in rural India. BMC Health Serv Res. 2018;18(1):320.

Riddell MA, Joshi R, Oldenburg B, Chow C, Thankappan KR, Mahal A, et al. Cluster randomised feasibility trial to improve the control of hypertension in rural India (CHIRI): a study protocol. BMJ Open. 2016;6(10):1–13.

Trieu K, Webster J, Jan S, Hope S, Naseri T, Ieremia M, Bell C, Snowdon W, Moodie M. Process evaluation of Samoa's national salt reduction strategy (MASIMA): what interventions can be successfully replicated in lower-income countries? Implement Sci. 2018;13(1):107.

Webster J, Pillay A, Suku A, Gohil P, Santos JA, Schultz J, Wate J, Trieu K, Hope S, Snowdon W, et al. Process evaluation and costing of a multifaceted population-wide intervention to reduce salt consumption in Fiji. Nutrients. 2018;10(2):155.

Leykum LK, Pugh J, Lawrence V, Parchman M, Noël PH, Cornell J, McDaniel RR. Organizational interventions employing principles of complexity science have improved outcomes for patients with type II diabetes. Implement Sci. 2007;2(1):1–8.

Greene JC. Mixed methods in social inquiry. San Francisco: Wiley; 2007.

Farquhar MC, Ewing G, Booth S. Using mixed methods to develop and evaluate complex interventions in palliative care research. Palliat Med. 2011;25(8):748–57.

Maar M, Yeates K, Barron M, Hua D, Liu P, Lum-Kwong MM, Perkins N, Sleeth J, Tobe J, Wabano MJ, et al. I-RREACH: an engagement and assessment tool for improving implementation readiness of researchers, organizations and communities in complex interventions. Implement Sci. 2015;10:64.

Acknowledgements

We appreciate the contributions and participation of the other members of the GACD Process Evaluation Working Group. These include: Alfonso Fernandez Pozas, Anushka Patel, Arti Pillay, Briana Cotrez, Carlos Aguilar Salinas, Caryl Nowson, Claire Johnson, Clicerio Gonzalez Villalpando, Cristina Garcia-Ulloa, Debra Litzelman, Devarsetty Praveen, Diane Hua, Dimitrios Kakoulis, Ed Fottrell, Elsa Cornejo Vucovich, Francisco Gonzalez Salazar, Hadi Musa, Harriet Chemusto, Hassan Haghparast-Bidgoli, Jean Claude Mutabazi, Jimaima Schultz, Joanne Odenkirchen, Jose Zavala-Loayza, Joyce Gyamfi, Kirsty Bobrow, Leticia Neira, Louise Maple-Brown, Maria Lazo, Meena Daivadanam, Nilmini Wijemanne, Paloma Almeda-Valdes, Paul Camacho-Lopez, Peter Delobelle, Puhong Zhang, Raelle Saulson, Rama Guggilla, Renae Kirkham, Ricardo Angeles, Sailesh Mohan, Sheldon Tobe, Sujeet Jha, Sun Lei, Vilma Irazola, Yuan Ma, Yulia Shenderovich.

Funding

Research reported in this publication was supported by the following GACD program funding agencies: National Health and Medical Research Council of Australia (Grant No. 1040178); Medical Research Council of the United Kingdom (Grant No. MR/JO16020/1); Canadian Institutes of Health Research (Grant No. 120389); Grand Challenges Canada (Grant Nos. 0069–04 and 0070–04); International Development Research Centre; Canadian Stroke Network; National Heart, Lung, and Blood Institute of the National Institutes of Health (Grant No. U01HL114200); National Heart, Lung, and Blood Institute of the National Institutes of Health (Grant No. 5U01HL114180); National Health and Medical Research Council of Australia (Grant No. 1040030); National Health and Medical Research Council of Australia (Grant No. 1040152).

The content is solely the responsibility of the authors and does not necessarily represent the official views of the funders. The authors declare that the funders had no role in the design of the studies; in the collection, analysis, or interpretation of data; or in writing the manuscript.

Author information

Contributions

FL, JW, GP, MM, JG, RV, RJ, JJM, BO, MP, JO, RW, KY, MR, AS, KT and AT were responsible for leading, providing oversight of and developing case studies for the process evaluations of their respective studies. FL, JW, GP and MM produced the initial draft of this manuscript; the other authors reviewed the initial and subsequent drafts. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The studies and their process evaluations described in this manuscript were granted ethics clearance by the following institutions:

- The University of Sydney Human Research Ethics Committee (no. 15359), Deakin University Human Research Ethics Committee (2013–2020), Samoa Health Research Committee and the Fiji National Research Ethics and Review Committee, Suva, Fiji (FNRERC 201307).

- Committee for Research on Human Subjects (Medical), University of the Witwatersrand (certificate number M130964); Mpumalanga Province Research and Ethics Committee; and Biomedical Research Ethics Committee, University of Warwick, UK (certificate number REGO-2013-562).

- The Icahn School of Medicine at Mount Sinai, New York, USA (HS# 12–00129).

- Queen’s University Health Sciences and Affiliated Teaching Hospitals Research Ethics Board, Kingston, Ontario (DMED-1603-13); Sunnybrook Health Sciences Centre Research Ethics Board, Toronto, Ontario (#182–2013); and the National Institute for Medical Research Tanzania (NIMR/HQ/R.8a/Vol.IX/1698).

- Johns Hopkins Bloomberg School of Public Health (IRB No. 00004391) and Universidad Peruana Cayetano Heredia (SIDISI 58563).

- The Centre for Chronic Disease Control, India (CCDC_IEC_09_2012); Christian Medical College, Vellore, India (IRB Min. No. 8313 dated 24.04.2013); Sree Chitra Tirunal Institute for Medical Sciences and Technology, India (IEC/484); Health Ministry Screening Council, India and Indian Council of Medical Research, Delhi, India (58/4/1F/CHR/2013/NCD II); Rishi Valley Ethics Committee, Rishi Valley School, Madanapalle, India (A:37/F – IEC); and Monash University, Australia (CF13/2516–2013001327).

- Ethics Review Committee, Faculty of Medicine, University of Kelaniya, Colombo, Sri Lanka and Royal Prince Alfred Hospital Ethics Review Committee, Sydney, Australia.

All participants gave written informed consent in their local language.

Permission to access data from the GACD hypertension teams was granted by the principal investigators of the respective teams:

- Karen Yeates and Sheldon Tobe - Diagnosing hypertension: Engaging Action and Management in Getting Lower BP in Indigenous communities in Canada and rural communities in Tanzania

- Margaret Thorogood and Jane Goudge - Treating hypertension in rural South Africa: A clinic-based lay health worker to enhance integrated chronic care in Mpumalanga, South Africa

- Amanda Thrift, Rohina Joshi and Brian Oldenburg - Improving control of hypertension in rural India: Overcoming barriers to diagnosis and effective treatment

- Ruth Webster and Anushka Patel - Early use of low-dose triple combination of BP-lowering drugs in improving BP control in Sri Lanka

- Jacqui Webster and Bruce Neal - Cost effectiveness of salt reduction programs in the Pacific Islands (Fiji and Samoa)

- Jaime Miranda - Salt substitute to reduce blood pressure at the population level in northern Peru

- Valentin Fuster, Jemima Kamano and Rajesh Vedanthan - Optimizing linkage and retention to hypertension care to reduce blood pressure in rural western Kenya (Kosirai and Turbo)

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Annexures (case studies) (DOCX 39 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Limbani, F., Goudge, J., Joshi, R. et al. Process evaluation in the field: global learnings from seven implementation research hypertension projects in low-and middle-income countries. BMC Public Health 19, 953 (2019). https://doi.org/10.1186/s12889-019-7261-8

DOI: https://doi.org/10.1186/s12889-019-7261-8