Abstract

Background

While the continued development of high-throughput sequencing has facilitated studies of entire transcriptomes in non-model organisms, the incorporation of an increasing number of RNA-Seq libraries has made de novo transcriptome assembly difficult. Although some algorithms can assemble a large amount of RNA-Seq data, the more accurate algorithms are generally very memory-intensive and can only be used to construct small assemblies.

Results

We develop a divide-and-conquer strategy that allows these memory-intensive algorithms to be utilized by subdividing a large RNA-Seq data set into small libraries. Each individual library is assembled independently by an existing algorithm, and a merging algorithm is developed to combine these assemblies by picking a subset of high quality transcripts to form a large transcriptome. When compared to existing algorithms that return a single assembly directly, this strategy achieves comparable or higher accuracy than memory-efficient algorithms that can be used to process a large amount of RNA-Seq data, and comparable or lower accuracy than memory-intensive algorithms that can only be used to construct small assemblies.

Conclusions

Our divide-and-conquer strategy allows memory-intensive de novo transcriptome assembly algorithms to be utilized to construct large assemblies.


Background

While high-throughput sequencing has made it possible to perform studies of entire transcriptomes in non-model organisms, applying de novo transcriptome assembly algorithms has become increasingly difficult due to the growing number of RNA-Seq libraries, which often include many experimental conditions or developmental stages with replicated experiments.

Although transcriptome assembly algorithms such as SOAPdenovo-Trans [1] and Trans-ABySS [2] can be used to process a large amount of RNA-Seq data, algorithms such as Oases [3] and Trinity [4] that have higher accuracy are generally very memory-intensive, so they can only be used to construct small assemblies. We develop a divide-and-conquer strategy that allows these memory-intensive algorithms to be utilized. A large RNA-Seq data set is subdivided into small libraries, each individual library is assembled independently, and a merging algorithm is employed to combine the small assemblies into a large transcriptome (Fig. 1).

The merging algorithm picks a subset of high quality transcripts to form a transcriptome, preferring longer transcripts, which tend to be more highly expressed and better assembled. A de Bruijn graph is constructed to extend some of these transcripts at the left and right ends when there are no ambiguities. To reduce redundancy, lower ranked transcripts whose corresponding nodes in the de Bruijn graph are all covered by higher ranked transcripts are removed.

We validate our algorithm by performing Schizosaccharomyces pombe, Drosophila melanogaster and Arabidopsis thaliana transcriptome assemblies using publicly available RNA-Seq libraries. We demonstrate our algorithm by assembling a large set of 93 Cochliomyia macellaria RNA-Seq libraries that we have constructed, which is about 298 G in size.

Methods

De Bruijn graph construction

Given a set of reads and a parameter k that denotes the k-mer length, a de Bruijn graph is defined by taking each k-mer that appears in the reads as a vertex, and connecting two k-mers $s_1 s_2 \cdots s_k$ and $s_2 \cdots s_k s_{k+1}$ by a directed edge if the $(k-1)$-suffix of the first k-mer is the same as the $(k-1)$-prefix of the second k-mer and the $(k+1)$-mer $s_1 s_2 \cdots s_k s_{k+1}$ appears in the reads.
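A minimal Python sketch of this definition follows. It is illustrative only, not the kCombine implementation: it considers the forward strand only (real assemblers also handle reverse complements), and the function names `kmers` and `build_de_bruijn` are hypothetical.

```python
from collections import defaultdict


def kmers(seq: str, k: int):
    """Yield every substring of length k of seq."""
    for i in range(len(seq) - k + 1):
        yield seq[i:i + k]


def build_de_bruijn(reads, k):
    """Return (vertices, edges) of a forward-strand de Bruijn graph.

    Vertices are the k-mers occurring in the reads. An edge u -> v is added
    only when the (k+1)-mer u + v[-1] itself occurs in some read, so a bare
    (k-1)-letter overlap between two k-mers is not enough.
    """
    vertices = set()
    edges = defaultdict(set)
    for read in reads:
        vertices.update(kmers(read, k))
        for kp1 in kmers(read, k + 1):
            edges[kp1[:-1]].add(kp1[1:])  # prefix k-mer -> suffix k-mer
    return vertices, edges
```

For example, the reads `ACG` and `CGT` with k = 3 give two vertices that overlap by two letters but no edge, because the 4-mer `ACGT` never appears in a read.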

Since the de Bruijn graph implicitly represents an assembly of the reads, it is employed by short read assembly algorithms [5, 6]. To reduce noise, a k-mer coverage cutoff c is imposed to remove k-mers that appear fewer than c times. Each maximal non-branching linear path is collapsed into a single node, so each node can contain a longer string formed by concatenating successive k-mers, with each adjacent pair overlapping by k−1 letters. After each individual library is assembled independently using an existing algorithm, our algorithm constructs a de Bruijn graph from the set of all predicted transcripts. Note that the k-mer coverage cutoff c is applied only during the individual library assemblies and not during the merging step.
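The sketch below illustrates a coverage cutoff followed by collapsing maximal non-branching paths into single nodes. It is a simplified, hypothetical illustration: it counts forward-strand k-mers only, links any two retained k-mers that overlap by k−1 letters (omitting the (k+1)-mer check), and, as stated above, a cutoff like this would only be used during the individual library assemblies, not in the merging step.

```python
from collections import Counter

BASES = "ACGT"


def kmers_above_cutoff(reads, k, c):
    """Count every k-mer in the reads and keep those seen at least c times."""
    counts = Counter(r[i:i + k] for r in reads for i in range(len(r) - k + 1))
    return {km for km, n in counts.items() if n >= c}


def compact(kmers):
    """Collapse each maximal non-branching path of k-mers into one node string."""
    def succ(km):
        return [km[1:] + b for b in BASES if km[1:] + b in kmers]

    def pred(km):
        return [b + km[:-1] for b in BASES if b + km[:-1] in kmers]

    def glued_to_pred(km):
        # km continues its predecessor's node when the link is unique both ways
        p = pred(km)
        return len(p) == 1 and len(succ(p[0])) == 1

    nodes, used = [], set()
    starts = [km for km in sorted(kmers) if not glued_to_pred(km)]
    # The second pass over all k-mers picks up isolated cycles with no start.
    for start in starts + sorted(kmers):
        if start in used:
            continue
        seq, cur = start, start
        used.add(cur)
        while len(succ(cur)) == 1:
            nxt = succ(cur)[0]
            if nxt in used or not glued_to_pred(nxt):
                break
            seq += nxt[-1]  # successive k-mers overlap by k-1 letters
            used.add(nxt)
            cur = nxt
        nodes.append(seq)
    return nodes
```

With reads `ACGTC`, `ACGTC` and `ACGAA`, k = 3 and c = 2, the singleton k-mers `CGA` and `GAA` are removed and the remaining path collapses into the single node `ACGTC`.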

To construct the de Bruijn graph, we follow the iterative one-letter extension strategy in [7] to identify all k-mers. Given a sorted array that contains all $n$ $k'$-mers in either the forward or the reverse complementary direction for $k' < k$, an array of size $4n$ is created that contains four slots for each $k'$-mer. For each $(k'+1)$-mer, binary search is applied to locate its $k'$-prefix within the array, and the one of the four slots that corresponds to its last nucleotide is updated. Slots with zero counts are then removed to obtain all $(k'+1)$-mers. Edges in the de Bruijn graph are constructed by locating the corresponding k-mers through binary search. Since this step is performed on the transcripts rather than on the reads, it is not time-consuming, and the memory requirement is only a multiplicative constant of four per k-mer.
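The following sketch illustrates the one-letter extension idea on the forward strand only. Canonical forward/reverse-complement handling and the compact memory layout of a real implementation are omitted, sequences are assumed to contain only A, C, G and T, and all names are hypothetical.

```python
from bisect import bisect_left

BASES = "ACGT"


def extend_once(sorted_kmers, seqs, kp):
    """From the sorted k'-mers of seqs, build the sorted (k'+1)-mers."""
    n = len(sorted_kmers)
    slots = [0] * (4 * n)  # four slots per k'-mer, one per last nucleotide
    for s in seqs:
        for i in range(len(s) - kp):
            word = s[i:i + kp + 1]  # a (k'+1)-mer
            j = bisect_left(sorted_kmers, word[:-1])
            # every k'-prefix occurs in seqs, so the search always succeeds
            assert j < n and sorted_kmers[j] == word[:-1]
            slots[4 * j + BASES.index(word[-1])] += 1
    # Dropping zero-count slots in (k'-mer, base) order keeps the result sorted.
    return [sorted_kmers[j] + BASES[b]
            for j in range(n) for b in range(4) if slots[4 * j + b]]


def all_kmers(seqs, k):
    """Grow the k-mer set one letter at a time, from 1-mers up to k-mers."""
    current = sorted({ch for s in seqs for ch in s})
    for kp in range(1, k):
        current = extend_once(current, seqs, kp)
    return current


def find(sorted_kmers, word):
    """Binary search used to locate k-mers when adding edges; -1 if absent."""
    j = bisect_left(sorted_kmers, word)
    return j if j < len(sorted_kmers) and sorted_kmers[j] == word else -1
```

Because the slots for each $k'$-mer are laid out in A, C, G, T order, the surviving $(k'+1)$-mers come out already sorted, so the next round of binary searches needs no extra sort.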

Picking high quality transcripts

Since each transcript corresponds to a path in the de Bruijn graph, and the beginning and ending nodes of the path may contain extra bases that are not included in the transcript, these bases form unambiguous extensions of the transcript and are added to it. To reduce noise, we retain a transcript only if its length divided by the number of nodes in its path is above the average length cutoff $c_1$, where $c_1$ is a given parameter. To form the transcriptome from these extended transcripts, our algorithm picks a subset of high quality transcripts while preferring longer transcripts.
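A hypothetical sketch of the extension and the $c_1$ filter follows. It assumes each transcript's path is given as a list of compacted node strings in which adjacent nodes overlap by k−1 letters, and it assumes the length test is applied to the extended transcript; neither representation detail is specified above.

```python
def spell_path(path, k):
    """Concatenate node strings; adjacent nodes overlap by k-1 letters."""
    seq = path[0]
    for node in path[1:]:
        seq += node[k - 1:]
    return seq


def extend_and_filter(paths, k, c1):
    """Extend each transcript to its whole path, then apply the c1 filter."""
    kept = []
    for path in paths:
        # Spelling the full path adds any extra bases in the first and last nodes.
        extended = spell_path(path, k)
        if len(extended) / len(path) > c1:
            kept.append(extended)
    return kept
```

A path with many short nodes has a low length-per-node ratio, which is the noisy pattern this cutoff is meant to discard.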

Since the longest transcripts are not always correct and may contain translocations, and redundant subsequences of a transcript must not be included multiple times, we rank the transcripts in decreasing order of the number of nodes that form each transcript in the de Bruijn graph. Since transcripts formed from a larger number of nodes in the de Bruijn graph tend to be longer, this strategy favors longer transcripts while also reducing the number of translocated transcripts.
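The ranking rule can be expressed directly as a sort key. In this sketch the `Transcript` type, counting path positions as the "number of nodes", and the tie-break on sequence length and name are all illustrative assumptions rather than details given in the text.

```python
from typing import NamedTuple, Tuple


class Transcript(NamedTuple):
    name: str
    path: Tuple[int, ...]  # node ids along the transcript's de Bruijn graph path
    seq: str


def rank_transcripts(transcripts):
    """Sort by number of path nodes, descending.

    Ties are broken by longer sequence, then by name; this tie-break is a
    hypothetical choice for determinism, not taken from the paper.
    """
    return sorted(transcripts, key=lambda t: (-len(t.path), -len(t.seq), t.name))
```

Under this key, a 900-base transcript that lies on a single node ranks below 300-base and 400-base transcripts that each span three nodes, showing that node count, not raw length, drives the ordering.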

To remove redundant transcripts, we consider long nodes in the de Bruijn graph, which contain a string of length above the node length cutoff $c_2$, where $c_2$ is another given parameter. We retain a lower ranked transcript only when it contains a long node that is not covered by higher ranked transcripts. We group transcripts into a locus when they share at least one long node. This condition is applied transitively to collect all related transcripts, so that each transcript in a locus shares at least one long node with another transcript in the same locus.
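A minimal sketch of the redundancy filter and locus grouping follows. It assumes transcripts arrive already ranked as `(name, path)` pairs, that node lengths are given as a dictionary, and that a transcript with no long node is dropped. Loci are formed with a union-find structure, which is one standard way to take the transitive closure of the "shares a long node" relation; the text does not say how kCombine implements this step, and `pick_and_group` is a hypothetical name.

```python
def pick_and_group(ranked, node_len, c2):
    """Return (kept transcript names, loci as lists of names)."""
    covered, kept = set(), []
    for name, path in ranked:
        long_nodes = {v for v in path if node_len[v] > c2}
        # A dropped transcript's long nodes are already covered, so tracking
        # only the kept ones equals tracking all higher ranked transcripts.
        if long_nodes - covered:
            kept.append((name, long_nodes))
            covered |= long_nodes

    parent = list(range(len(kept)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    owner = {}  # long node -> index of the first kept transcript containing it
    for i, (_, long_nodes) in enumerate(kept):
        for v in long_nodes:
            if v in owner:
                parent[find(i)] = find(owner[v])  # shared long node: same locus
            else:
                owner[v] = i

    loci = {}
    for i, (name, _) in enumerate(kept):
        loci.setdefault(find(i), []).append(name)
    return [name for name, _ in kept], list(loci.values())
```

With $c_2 = 50$, a lower ranked transcript whose only long node already appears in a higher ranked one is discarded, while one that adds a new long node is kept and joins the locus of the transcript it overlaps.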

Results and discussion

Data sets

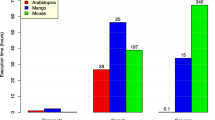

We applied our algorithm kCombine to perform transcriptome assemblies using publicly available RNA-Seq libraries from the sequence read archive [8], including one set of Schizosaccharomyces pombe libraries, two sets of Drosophila melanogaster libraries (one small and one large), and one set of Arabidopsis thaliana libraries (Table 1).

We compare the performance of our algorithm, which uses an existing algorithm to assemble each individual library independently, to that of the same existing algorithm returning a single assembly directly from all libraries. Each library corresponds to one sequencing run of a biological sample, and in our algorithm all biological replicates are combined into a single library for independent assembly. We trimmed each read by removing all bases starting from the first position with a quality score below 15. We applied Oases and Trinity to the small data sets, and SOAPdenovo-Trans and Trans-ABySS to the large data sets.
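The trimming rule can be sketched as follows. The Phred+33 quality encoding is an assumption (the encoding is not stated above), and `trim_read` is a hypothetical helper.

```python
def trim_read(seq, qual, min_q=15, offset=33):
    """Cut the read just before the first base whose Phred score is below min_q.

    Assumes Phred+33 encoding: score = ord(char) - 33.
    """
    for i, ch in enumerate(qual):
        if ord(ch) - offset < min_q:
            return seq[:i], qual[:i]
    return seq, qual
```

A base with score exactly 15 (`0` in Phred+33) is kept, while a base with score 14 (`/`) and everything after it is removed.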

We fixed the k-mer length to 25 and varied the k-mer coverage cutoff c when applying each algorithm. We used the same value of k to construct the de Bruijn graph in our algorithm, and set the average length cutoff $c_1$ to 25 and the node length cutoff $c_2$ to 50. These parameters were determined by trying a few combinations and choosing the values that gave satisfactory performance. Since the performance of each algorithm depends strongly on the k-mer coverage cutoff c, and different values are needed when an existing algorithm is applied within the divide-and-conquer strategy than when it returns a single assembly directly, we report the results that give the most comparable performance.

To assess the extent of translocations in predicted transcripts, we applied GMAP [9] to map the predicted transcripts to the known genome. To investigate whether our algorithm may systematically remove certain types of RNA, we applied eXpress [10] to the reads in each data set against the predicted full-length transcripts in each assembly to obtain FPKM expression estimates.
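For reference, the standard definition of FPKM (fragments per kilobase of transcript per million mapped fragments) is shown below. eXpress estimates the fragment counts probabilistically and applies its own effective-length correction, so this formula describes what the reported values mean rather than how eXpress computes them:

$$\mathrm{FPKM}_i = \frac{X_i}{\tilde{\ell}_i \, N} \times 10^{9}$$

where $X_i$ is the number of fragments assigned to transcript $i$, $\tilde{\ell}_i$ is its effective length in bases, and $N$ is the total number of mapped fragments. For example, 500 fragments on a transcript with an effective length of 2,000 bases, out of $N = 10^7$ mapped fragments, give $500 \times 10^{9} / (2000 \times 10^{7}) = 25$.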

Model organisms

Tables 2 and 3 show that kCombine generally had decreased performance when compared to obtaining single assemblies directly from Oases or Trinity. When compared to Oases, kCombine obtained fewer translocated transcripts in Schizosaccharomyces pombe and a comparable percentage of translocated transcripts in Drosophila melanogaster. When compared to Trinity, kCombine had decreased performance when the percentage of translocated transcripts was about the same, and a higher percentage of translocated transcripts when the other performance measures were about the same. Tables 4 and 5 show that kCombine had improved performance when compared to SOAPdenovo-Trans and Trans-ABySS, at the expense of having more translocated transcripts.

In terms of memory requirement, Oases and Trinity required much more memory than kCombine, while the memory requirement of SOAPdenovo-Trans and Trans-ABySS was comparable to that of kCombine. The merging step of kCombine needed more memory than the independent assembly of each individual library by each algorithm.

Figures 2 and 3 show that kCombine recovered a proportion of full-length transcripts with low expression levels comparable to that of Oases and Trinity, with a slightly higher proportion than Oases in Schizosaccharomyces pombe and a lower proportion than Trinity in Drosophila melanogaster. Figures 4 and 5 show that kCombine recovered a proportion of full-length transcripts with low expression levels comparable to that of SOAPdenovo-Trans and Trans-ABySS, with a slightly lower proportion than SOAPdenovo-Trans and a higher proportion than Trans-ABySS in Arabidopsis thaliana.

Fig. 2 Comparisons of the cumulative distribution of the FPKM expression estimates of predicted full-length transcripts in Schizosaccharomyces pombe transcriptome assemblies of Oases, Trinity and their respective applications of kCombine, with $k=25$ and different values of the k-mer coverage cutoff c. The range of FPKM values in each assembly is divided into 20 intervals of equal width.

Fig. 3 Comparisons of the cumulative distribution of the FPKM expression estimates of predicted full-length transcripts in small Drosophila melanogaster transcriptome assemblies of Oases, Trinity and their respective applications of kCombine, with $k=25$ and different values of the k-mer coverage cutoff c. The range of FPKM values in each assembly is divided into 20 intervals of equal width.

Fig. 4 Comparisons of the cumulative distribution of the FPKM expression estimates of predicted full-length transcripts in Arabidopsis thaliana transcriptome assemblies of SOAPdenovo-Trans, Trans-ABySS and their respective applications of kCombine, with $k=25$ and different values of the k-mer coverage cutoff c. The range of FPKM values in each assembly is divided into 20 intervals of equal width.

Fig. 5 Comparisons of the cumulative distribution of the FPKM expression estimates of predicted full-length transcripts in large Drosophila melanogaster transcriptome assemblies of SOAPdenovo-Trans, Trans-ABySS and their respective applications of kCombine, with $k=25$ and different values of the k-mer coverage cutoff c. The range of FPKM values in each assembly is divided into 20 intervals of equal width.
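The binning described in these figure captions can be sketched as follows. Whether the plotted curves show proportions or counts is not stated, so this hypothetical helper assumes the cumulative proportion of transcripts from the lowest to the highest FPKM interval, with the maximum value placed in the last interval.

```python
def cumulative_distribution(fpkm, bins=20):
    """Cumulative proportion of a non-empty list of FPKM values over equal-width intervals."""
    lo, hi = min(fpkm), max(fpkm)
    width = (hi - lo) / bins
    counts = [0] * bins
    for x in fpkm:
        # The maximum value would fall one past the end, so clamp it into the last bin;
        # if all values are equal (width 0), put everything in the first bin.
        idx = 0 if width == 0 else min(int((x - lo) / width), bins - 1)
        counts[idx] += 1
    out, running = [], 0
    for n in counts:
        running += n
        out.append(running / len(fpkm))
    return out
```

A curve that rises quickly in the first few intervals indicates that many of the recovered full-length transcripts have low expression levels, which is the property compared across assemblers above.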

Non-model organism

We applied our algorithm to assemble the transcriptome of the blow fly Cochliomyia macellaria, for which no full transcriptome was previously available, from a set of RNA-Seq libraries that we constructed (Table 1). The blow fly Cochliomyia macellaria is a primary colonizer of human and animal remains, and is important in nutrient cycling [11, 12] and forensic investigations of deaths [13, 14]. As an agent of myiasis [15, 16] and as a filth-feeding fly, this species can be a veterinary and medical pest by causing direct damage to hosts and by spreading pathogenic bacteria [17]. Genomic tools for this blow fly are expected to help exploit the beneficial aspects of Cochliomyia macellaria biology and to mitigate its negative impacts.

Three biological replicates were generated for each combination of one of four temperatures (20 °C, 25 °C, 30 °C, and fluctuating) and one of seven developmental stages (feeding instar, early post-feeding, late post-feeding, early pupae, early middle pupae, late middle pupae, and late pupae). We also included additional libraries from flies selected for fast and slow development, as well as a control sample.
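As a consistency check on the library counts (our own arithmetic, not a statement of the study design), the factorial design alone gives

$$4 \times 7 \times 3 = 84 \text{ libraries}, \qquad 93 - 84 = 9 \text{ additional libraries}.$$

Combining biological replicates reduces the factorial part to $4 \times 7 = 28$ libraries, so the 31 combined libraries referred to in the assembly results would correspond to $28 + 3$. This is consistent with the nine additional libraries forming three conditions (fast development, slow development and control) of three replicates each; that grouping is an assumption.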

Since our goal is to obtain a transcriptome that is as accurate and complete as possible, we applied kCombine based on Oases and Trinity because of their generally higher accuracy in terms of specificity. Table 6 shows that the assemblies were of high quality. For kCombine based on both Oases and Trinity, the assembly obtained with the middle value of the k-mer coverage cutoff c provides a balance between sensitivity and correctness. In terms of memory requirement, Oases and Trinity required a large amount of memory during the independent assembly of each individual library. Since the total size of the libraries is very large, assembling each individual library remains difficult even when the data set is divided into 31 libraries after combining the biological replicates. In comparison, the merging step of kCombine required little memory.

Conclusions

We have developed a divide-and-conquer strategy that allows memory-intensive de novo transcriptome assembly algorithms to be utilized to construct large assemblies. After the individual libraries are assembled independently, the merging algorithm consumes little computational time and memory. In all our tests, the independent assembly of each individual library can be completed in a few days when performed in parallel on a computing cluster. The merging step then takes up to a few days for the largest data sets.

The choice of which algorithm to use depends on the goal of the assembly. Even after applying the divide-and-conquer strategy, memory-intensive algorithms can still require a large amount of memory on very large data sets, but they are generally more accurate, with Oases returning more and longer transcripts and Trinity returning more transcripts with low expression levels and with fewer translocations. Among the memory-efficient algorithms, SOAPdenovo-Trans returns transcripts with fewer translocations, while Trans-ABySS returns more and longer transcripts with higher specificity.

Since performance of the divide-and-conquer strategy decreases as the number of libraries increases, it is better to subdivide the data into a smaller number of libraries as long as there are enough computational resources to assemble them independently. To optimize performance, different values of the k-mer coverage cutoff c can be used on libraries of different sizes.

References

Xie Y, Wu G, Tang J, Luo R, Patterson J, Liu S, Huang W, He G, Gu S, Li S, Zhou X, Lam TW, Li Y, Xu X, Wong GK-S, Wang J. SOAPdenovo-Trans: de novo transcriptome assembly with short RNA-Seq reads. Bioinformatics. 2014; 30:1660–6.

Robertson G, Schein J, Chiu R, Corbett R, Field M, Jackman SD, Mungall K, Lee S, Okada HM, Qian JQ, Griffith M, Raymond A, Thiessen N, Cezard T, Butterfield YS, Newsome R, Chan SK, She R, Varhol R, Kamoh B, Prabhu AL, Tam A, Zhao Y, Moore RA, Hirst M, Marra MA, Jones SJM, Hoodless PA, Birol I. De novo assembly and analysis of RNA-seq data. Nat Methods. 2010; 7:909–12.

Schulz MH, Zerbino DR, Vingron M, Birney E. Oases: robust de novo RNA-seq assembly across the dynamic range of expression levels. Bioinformatics. 2012; 28:1086–92.

Grabherr MG, Haas BJ, Yassour M, Levin JZ, Thompson DA, Amit I, Adiconis X, Fan L, Raychowdhury R, Zeng Q, Chen Z, Mauceli E, Hacohen N, Gnirke A, Rhind N, di Palma F, Birren BW, Nusbaum C, Lindblad-Toh K, Friedman N, Regev A. Full-length transcriptome assembly from RNA-Seq data without a reference genome. Nat Biotechnol. 2011; 29:644–52.

Pevzner PA. l-tuple DNA sequencing: computer analysis. J Biomol Struct Dyn. 1989; 7:63–73.

Idury RM, Waterman MS. A new algorithm for DNA sequence assembly. J Comput Biol. 1995; 2:291–306.

Sze SH, Pimsler ML, Tomberlin JK, Jones CD, Tarone AM. A scalable and memory-efficient algorithm for de novo transcriptome assembly of non-model organisms. BMC Genomics. 2017; 18(Suppl 4):387.

Sayers EW, Barrett T, Benson DA, Bolton E, Bryant SH, Canese K, Chetvernin V, Church DM, DiCuccio M, Federhen S, Feolo M, Geer LY, Helmberg W, Kapustin Y, Landsman D, Lipman DJ, Lu Z, Madden TL, Madej T, Maglott DR, Marchler-Bauer A, Miller V, Mizrachi I, Ostell J, Panchenko A, Pruitt KD, Schuler GD, Sequeira E, Sherry ST, Shumway M, Sirotkin K, Slotta D, Souvorov A, Starchenko G, Tatusova TA, Wagner L, Wang Y, Wilbur WJ, Yaschenko E, Ye J. Database resources of the National Center for Biotechnology Information. Nucleic Acids Res. 2010; 38:5–16.

Wu TD, Watanabe CK. GMAP: a genomic mapping and alignment program for mRNA and EST sequences. Bioinformatics. 2005; 21:1859–75.

Roberts A, Pachter L. Streaming fragment assignment for real-time analysis of sequencing experiments. Nat Methods. 2013; 10:71–3.

Owings CG, Spiegelman C, Tarone AM, Tomberlin JK. Developmental variation among Cochliomyia macellaria Fabricius (Diptera: Calliphoridae) populations from three ecoregions of Texas, USA. Int J Legal Med. 2014; 128:709–17.

Mohr RM, Tomberlin JK. Development and validation of a new technique for estimating a minimum postmortem interval using adult blow fly (Diptera: Calliphoridae) carcass attendance. Int J Legal Med. 2015; 129:851–9.

Byrd JH, Butler JF. Effects of temperature on Cochliomyia macellaria (Diptera: Calliphoridae) development. J Med Entomol. 1996; 33:901–5.

Boatright SA, Tomberlin JK. Effects of temperature and tissue type on the development of Cochliomyia macellaria (Diptera: Calliphoridae). J Med Entomol. 2010; 47:917–23.

Scott HG. Human myiasis in North America (1952–1962 inclusive). Fla Entomol. 1964; 47:255–61.

Stevens JR. The evolution of myiasis in blowflies (Calliphoridae). Int J Parasitol. 2003; 33:1105–13.

Greenberg B. Flies and Disease. Princeton: Princeton University Press; 1971.

Daines B, Wang H, Wang L, Li Y, Han Y, Emmert D, Gelbart W, Wang X, Li W, Gibbs R, Chen R. The Drosophila melanogaster transcriptome by paired-end RNA sequencing. Genome Res. 2011; 21:315–24.

Marquez Y, Brown JWS, Simpson C, Barta A, Kalyna M. Transcriptome survey reveals increased complexity of the alternative splicing landscape in Arabidopsis. Genome Res. 2012; 22:1184–95.

Graveley BR, Brooks AN, Carlson JW, Duff MO, Landolin JM, Yang L, Artieri CG, van Baren MJ, Boley N, Booth BW, Brown JB, Cherbas L, Davis CA, Dobin A, Li R, Lin W, Malone JH, Mattiuzzo NR, Miller D, Sturgill D, Tuch BB, Zaleski C, Zhang D, Blanchette M, Dudoit S, Eads B, Green RE, Hammonds A, Jiang L, Kapranov P, Langton L, Perrimon N, Sandler JE, Wan KH, Willingham A, Zhang Y, Zou Y, Andrews J, Bickel PJ, Brenner SE, Brent MR, Cherbas P, Gingeras TR, Hoskins RA, Kaufman TC, Oliver B, Celniker SE. The developmental transcriptome of Drosophila melanogaster. Nature. 2011; 471:473–9.

Acknowledgements

We thank the reviewers for invaluable comments that significantly improved the paper. Sequencing was performed at the Genomics and Bioinformatics Services at Texas A&M University. Computations were performed on the Texas A&M Institute for Genome Sciences and Society High-Performance Computing Cluster and the Brazos Cluster at Texas A&M University.

Funding

AMT is supported by start-up funds from the College of Agriculture and Life Sciences at Texas A&M University and Texas AgriLife Research. This work was supported by the National Institute of Justice grants 2012-DN-BX-K024 and 2015-DN-BX-K020. Points of view in this document are those of the authors and do not necessarily represent the official position or policies of the U.S. Department of Justice. Publication costs for this work were funded by the Open Access to Knowledge (OAK) Fund at the Texas A&M University Libraries.

Availability of data and materials

A software program that implements our algorithm (kCombine) is available at http://faculty.cse.tamu.edu/shsze/kcombine.

About this supplement

This article has been published as part of BMC Genomics Volume 18 Supplement 10, 2017: Selected articles from the 6th IEEE International Conference on Computational Advances in Bio and Medical Sciences (ICCABS): genomics. The full contents of the supplement are available online at https://bmcgenomics.biomedcentral.com/articles/supplements/volume-18-supplement-10.

Authors’ contributions

S-HS and AMT designed the computational work. JJP and AMT designed the molecular experiments. JJP performed the molecular experiments. All authors read and approved the final manuscript.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Sze, SH., Parrott, J. & Tarone, A. A divide-and-conquer algorithm for large-scale de novo transcriptome assembly through combining small assemblies from existing algorithms. BMC Genomics 18 (Suppl 10), 895 (2017). https://doi.org/10.1186/s12864-017-4270-9
