Abstract

Background

A framework for high quality in post graduate training has been defined by the World Federation for Medical Education (WFME). The objective of this paper is to perform a systematic review of reviews to find current evidence regarding aspects of quality of post graduate training and to organise the results following the 9 areas of the WFME framework.

Methods

The systematic literature review was conducted in 2009 in Medline Ovid, EMBASE, ERIC and RDRB databases from 1995 onward. The reviews were selected by two independent researchers and a quality appraisal was based on the SIGN tool.

Results

31 reviews met inclusion criteria. The majority of the reviews provided information about the training process (WFME area 2), the assessment of trainees (WFME area 3) and the trainees (WFME area 4). One review covered the area 8 'governance and administration'. No review was found in relation to the mission and outcomes, the evaluation of the training process and the continuous renewal (respectively areas 1, 7 and 9 of the WFME framework).

Conclusions

The majority of the reviews provided information about the training process, the assessment of trainees and the trainees. Indicators used for quality assessment purposes of post graduate training should be based on this evidence, but further research is needed for some areas, in particular to assess the quality of the training process.

Background

The debate on quality improvement in post graduate medical education (PME) is ongoing in many countries [1]. In the UK, the Postgraduate Medical Education and Training Board (PMETB) developed generic quality standards of training in September 2009 [2]. In the U.S., the Accreditation Council for Graduate Medical Education (ACGME), a private professional organisation, is now responsible for the accreditation of more than 8500 residency and fellowship programs [3]. Other countries, for instance the Netherlands and Canada, have also developed quality frameworks in PME [4, 5].

An international framework for quality of PME has been proposed by the World Federation for Medical Education (WFME, a non-governmental organization related to the World Health Organization). Global Standards for undergraduate, post graduate and continuing medical education were developed by three international task forces with a broad representation of experts in medical education from all six WHO/WFME Regions [6]. One of these frameworks aims to introduce a generic and comprehensive approach to quality of PME, providing internationally accepted standards and national or even regional recognition of programs [6].

In the framework above, many quality indicators and rankings are used for assessing the quality of PME. However, they are based on expert consensus, and a further step is to establish to what extent they are supported by evidence. The concrete question is whether the quality found for each area has an impact on training outcomes, e.g. physicians' competencies and, ultimately, quality of care [7]. To date, only a few studies have comprehensively explored the literature on the quality of post graduate medical education [8].

Mapping the best evidence underlying the WFME framework is an ambitious undertaking. In general, individual studies are biased by local factors, which limits the generalisability of their findings. Systematic reviews therefore have an important role in summarizing and synthesizing evidence in medical education on a wider scale [9]. The objective of this review is to identify, appraise and synthesize the best systematic reviews on post graduate medical education using the WFME standards as a blueprint (Figure 1).

Methods

Databases

The systematic literature search was performed from 1995 onwards, using the following data sources: Medline Ovid (April 23, 2009), Embase (Excerpta Medica Database, until June 9, 2009), ERIC (Education Resources Information Center database, until July 30, 2009) and RDRB (Research and Development Resource Base database, until August 1, 2009) [10, 11]. The review included publications in English, French and Dutch.

A complementary manual search was performed in five core journals (The Lancet, JAMA, New England Journal of Medicine, British Medical Journal, Annals of Internal Medicine) and in medical education journals (Academic Medicine, Medical Education, BMC Medical Education, Medical Teacher, Teaching and Learning in Medicine, and Education for Health) from April 1 until October 2009.

Terms used

The MeSH terms (Medline Ovid)/Emtree terms (Embase) used were "Education, Medical, Graduate or Education" OR "Internship and Residency" OR "Family Practice/ed [Education]" as well as a combination of terms relating to the WFME framework items (quality, standards, legislation, education, organization, "organization and administration", *annual reports as topic, "constitution and bylaws", governing board, management audit, management information systems, mandatory programs, organizational innovation, program development, public health administration, total quality management).

In the ERIC database, the search was performed using the Thesaurus descriptors: "Graduate Medical Education" OR "Family Practice" AND keywords quality OR train* OR staff* OR standards OR organization OR legislation.

In the RDRB database, the search was performed using the key words: graduate medical education OR internship OR family practice combined with the key words: quality OR train* OR staff* OR standards OR organization OR legislation (Table 1).
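As an illustration only, the way the two groups of key words are combined for the RDRB search can be sketched in Python. The function name `build_query`, the quoting of multi-word terms and the exact Boolean syntax are assumptions made for this sketch; they do not reproduce the actual interface of the database or the search strings of Table 1.

```python
def build_query(population_terms, quality_terms):
    """Combine two term groups as (A1 OR A2 ...) AND (B1 OR B2 ...).

    Hypothetical helper: the real database syntax may differ.
    """
    def group(terms):
        # Quote multi-word terms so that they are treated as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        return "(" + " OR ".join(quoted) + ")"

    return group(population_terms) + " AND " + group(quality_terms)


# Key words as listed in the text for the RDRB database.
population = ["graduate medical education", "internship", "family practice"]
quality = ["quality", "train*", "staff*", "standards", "organization", "legislation"]

rdrb_query = build_query(population, quality)
print(rdrb_query)
```

Keeping the population terms and the quality terms in separate lists makes it straightforward to reuse the same quality terms across databases while swapping the population descriptors.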

Selection procedure

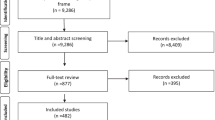

A total of 3022 unique references were identified. A first selection of reviews was performed by two independent researchers (in combinations AD, RR, JW) based on title and abstract using the following inclusion criteria:

- scope: quality of training programs, training practices, trainers;

- description of national, regional or official post graduate programs;

- study design: systematic reviews.

Papers were excluded using the exclusion criteria mentioned in Figure 2. The percentage of agreement between assessors was 95.1 percent.
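The percentage of agreement is the share of references on which both assessors made the same include/exclude decision. A minimal sketch follows; the function name `percent_agreement` and the ten example decisions are hypothetical and are not the study data.

```python
def percent_agreement(decisions_a, decisions_b):
    """Return the percentage of items on which two assessors agree."""
    if len(decisions_a) != len(decisions_b):
        raise ValueError("both assessors must rate the same references")
    if not decisions_a:
        raise ValueError("at least one reference is required")
    matches = sum(a == b for a, b in zip(decisions_a, decisions_b))
    return 100.0 * matches / len(decisions_a)


# Hypothetical decisions for ten references (True = include).
assessor_1 = [True, False, False, True, False, False, True, False, False, False]
assessor_2 = [True, False, False, False, False, False, True, False, False, False]

agreement = percent_agreement(assessor_1, assessor_2)
print(f"{agreement:.1f}%")
```

Raw percentage agreement does not correct for agreement expected by chance, which should be kept in mind when interpreting the figure.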

Disagreements were resolved through discussion within each pair of researchers. No arbitrating intervention by a third researcher was needed. Finally, 188 reviews were selected for full reading and quality appraisal. One systematic review found during the manual search completed the list.

Quality appraisal

The quality appraisal of the selected reviews was performed on the full texts by one author (RR or JW) and was checked by the first author (AD). The researchers used the framework of the Scottish Intercollegiate Guidelines Network (SIGN) for reviews [12]. The maximal quality score was 15. The researchers excluded 3 reviews with low scores (equal to 0 or 1) on three or more items out of a total of 5 items [13–15]. One review was excluded because it used, among other studies in the core curriculum, only one study in PME [16].

Results

After the selection procedure, 31 reviews were selected for further analysis. Figure 2 gives an overview of the selection process. Overall, the quality of the 31 reviews (assessed using the SIGN criteria) was high (≥ 12/15) in 23 reviews and moderate (8/15-12/15) in 8 reviews. The selected reviews [17–47] evaluated 1570 primary studies carried out in the context of PME. The publication dates of the reviews range from 2000 to 2009; 9 reviews were published between January 2008 and October 2009.
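The exclusion rule described in the Methods and the quality bands reported above can be sketched as follows. This is an illustrative reading, not the authors' procedure: it assumes that each of the 5 items is scored from 0 to 3 (inferred from the maximum of 15), that a total of exactly 12 counts as high, and that totals below 8 form a separate band; the function name `classify_review` is hypothetical.

```python
def classify_review(item_scores):
    """Classify a review from its five item scores (assumed range 0-3 each)."""
    if len(item_scores) != 5 or any(not 0 <= s <= 3 for s in item_scores):
        raise ValueError("expected five item scores between 0 and 3")

    # Exclusion rule: a low score (0 or 1) on three or more of the five items.
    low_items = sum(1 for s in item_scores if s <= 1)
    if low_items >= 3:
        return "excluded"

    total = sum(item_scores)
    if total >= 12:        # assumption: a total of 12 is counted as high
        return "high"
    if total >= 8:
        return "moderate"
    return "below moderate"  # assumption: band not described in the text


print(classify_review([3, 3, 3, 2, 2]))  # total 13
print(classify_review([2, 2, 2, 2, 2]))  # total 10
print(classify_review([1, 0, 1, 3, 3]))  # three low items
```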

Many reviews did not focus solely on PME. They also analysed primary studies of undergraduate training or even the education of other health professionals, for instance high-fidelity training in undergraduate medical education and continuing medical education [18].

In 12 reviews the training setting was not specified [19–22, 27, 29, 31, 33, 35, 37, 45, 46]. Others specified hospital settings (n = 7) [17, 18, 34, 41–44] or outpatient settings (n = 2) [24, 47]. Ten papers studied a mixed setting [23, 25, 26, 28, 30, 32, 36, 38–40] (i.e. hospital setting and/or GP setting and/or outpatient setting and/or not specified).

Looking at the first author's affiliations, the large majority of studies came from the US (n = 19) [17, 18, 21, 23, 24, 26, 28–31, 33, 36, 37, 39, 40, 42–44, 47] and the UK (n = 5) [18, 26, 31, 44, 45]. Two first authors resided in Canada [25, 41], 2 in Australia [22, 35], 1 in the Netherlands [38], 1 in Spain [20] and 1 in Bahrain [34]. All papers were written in English.

Practical messages

Additional file 1 gives an overview of the selected reviews: the research questions, the number of studies included in the reviews, the WFME (sub) areas that are covered, the results and the critical appraisal scores. The following paragraphs summarise their findings and highlight interesting and practical avenues to enhance the quality of PME. The following paragraphs use the term "trainee" for generic areas of PME training. The term "resident" is more specifically used when this trainee works in a clinical setting.

WFME areas under study

Most papers studied one particular area of the WFME framework, while three covered more than one area and/or sub-area [21, 24, 26]. The majority of the reviews give detailed information about the training process (WFME area 2) [17–26], the assessment of trainees (WFME area 3) [21, 24, 27–33] and the trainees (WFME area 4) [26, 34–42]. One review covered 'governance and administration' (WFME area 8) [24]. No review focused on the mission and outcomes, the evaluation of the training process or continuous renewal (respectively areas 1, 7 and 9 of the WFME framework).

Importance of a core curriculum

A first step towards quality in post graduate medical training is to describe an effective "core" in-patient curriculum. That was the conclusion of Di Francesco et al. [17], who analysed the effectiveness of training in internal medicine. They found that few data exist to support the quality of this training.

Conditions to facilitate learning in PME: combination of learning approaches

Issenberg et al. found that high-fidelity simulations are educationally effective: they concluded that simulation-based exercises could complement medical education in patient care settings [18]. High-fidelity simulations use realistic materials and equipment to represent the task that the candidate has to perform. The authors also emphasize four other conditions that facilitate learning, i.e. feedback, repetitive practice, opportunities for the trainee to engage in the practice of medical skills across a wide range of difficulties, and multiple learning strategies.

Similarly, another review stresses feedback to trainees as a key to success. It should be provided systematically over several years by an authoritative source. Feedback can change clinical performance, but its effects are influenced by the source and duration of the process [33].

Teamwork: importance of the clinical settings

Working as a doctor implies working in teams. One systematic review has listed some principles (see Additional file 1) to enhance the quality of teamwork among future specialists [23].

Furthermore, it is worthwhile to offer future hospital-based specialists adequate exposure to outpatient and ambulatory settings as an adjunct to training in inpatient settings [24]. This exposure leads to better performance on national Board examinations, Objective Structured Clinical Examinations and tests of clinical reasoning. Another review estimates that residents often lack confidence and competence in common health issues because only 13% of the training takes place in ambulatory care [47].

Growing importance of the portfolio in post graduate medical training

A portfolio is a set of materials collected to represent a person's work and foster reflective learning. The use of portfolios is gaining importance in PME training, and authors also recommend them as a tool for assessment [21].

Selection and assessment of trainees: shortcomings of the academic results

The selection of trainees for PME positions remains a subjective exercise: undergraduate grades and rankings correlate only moderately with performance during internship and residency [34]. A selection of trainees based only on previous academic results in the undergraduate curriculum poorly predicts performance in post graduate training.

The authors of an Australian study propose a mix of traits that could predict the success of a future candidate [35], i.e. communication skills, the ability to adapt to the audience, empathy, understanding of the role of nursing and support staff, and understanding of practice protocol.

Assessment of trainees: a call for a global approach

Most authors emphasize the need for a global assessment of the post graduate trainee. Many tools exist, ranging from the Objective Structured Clinical Examination [24] to the Mini-CEX (Mini Clinical Evaluation Exercise, a method of evaluating residents by directly observing a history and physical examination, followed by feedback) [30]. In the US, the Accreditation Council for Graduate Medical Education considers the portfolio a cornerstone for evaluating competences. A portfolio must have a creative component that is learner driven [21].

Epstein et al. emphasize the need for a multidimensional assessment based on the observation of trainees in real situations, on feedback from peers and patients and on measures of outcomes. The assessment has to target many competencies, e.g. professionalism, time management, learning strategies and teamwork [29]. Strong validity evidence has been identified for the Mini-CEX, but the authors conclude that more work is needed to review the optimal mix and the psychometric characteristics of assessment formats in terms of validity and reliability [24, 30].

Working hours and risk of burnout: no optimal answer

Working conditions of trainees are a matter of debate in the literature. Work hour restrictions may improve the quality of life of trainees, but it is unclear whether the improved quality of life of residents ultimately results in better patient care [26]. Some evidence shows that reducing working hours does not impair clinical training, but it may decrease overall continuity of care [48, 49]. It must be noted that the American rules (an 80-hour work week in the ACGME framework) and the European directive (a maximum of 48 hours per week since August 2009 [49]) differ greatly.

Emotional exhaustion and burnout rates in medical residents are high: authors suggest a prevalence ranging from 18% to 82% [38]. The personal and professional consequences are potentially dramatic [39, 40], but few interventions have been set up to tackle the problem. Support groups and meditation-type practices have shown promising results but are sometimes hard to replicate [40]. Doctor-nurse substitution has the potential to reduce doctors' workload and direct health costs. Trainees welcome these reforms but trainers show reservations [50].

Discussion

Some evidence in reviews to build a post graduate training process

The included reviews show that evidence is available for training processes, assessment of trainees and the trainees themselves. Course organisers, colleges and the stakeholders involved in quality of post graduate medical education should rely on this available evidence to build quality frameworks and assessments. The review suggests pertinent criteria other than undergraduate results for selecting candidates for post graduate medical education. There is a need to define a core curriculum and to combine learning strategies, including simulations and high quality feedback from an authoritative source. The choice of clinical settings is crucial, in particular the opportunity to work in a team and to care for common ailments seen in ambulatory practice. Finally, the papers provide exhaustive reviews of the available assessment instruments; however, they underline the need for a better assessment of their validity and for an evolution towards multidimensional assessment.

Some gaps in the literature have been identified, e.g. in the sub-areas of staffing, training settings and evaluation of the training process. As an illustration, the link between staff performance and training outcome and the relationship between training and service are hardly addressed in reviews. The selected reviews focus on one or several parts of the WFME framework but do not take into account their interactions.

Strengths of this systematic review

This systematic review was comprehensive, based on the most important indexed databases for medical education topics. The selection of papers relied on strict criteria and the quality of the included papers was further assessed [12]. The authors who performed this review therefore came across all the challenges mentioned by Reed and colleagues for performing systematic reviews of educational interventions, i.e. finding reports of educational interventions, assessing the quality of study designs, assessing the scope of interventions, assessing the evaluation of interventions, and synthesizing the results of educational interventions [9].

Limitations of this systematic review

Some limitations inherent to the methodology of this systematic review need to be addressed. An important limitation is linked to the choice of key words and search strings. The concept of quality covers a broad spectrum: the choice and combination of similar MeSH and non-MeSH terms was difficult across all databases.

A second limitation relates to the authors' decision to focus on indexed literature only. Some publications from the grey literature have probably been missed, although there is a risk that these lack scientific rigor [1]. Another source of incomplete data might be the decision to exclude information from reviews that focused on only one specialty or one technical procedure in medicine.

A third limitation is the questionable quality of the primary studies, as reported by many authors of the systematic reviews. They noted that most primary studies relate to single institutions and that the designs of included studies were often of poor quality. (Randomised) controlled trials were seldom included in the reviews. This means that currently the best evidence in the reviews on quality in PME relies on descriptive and cohort studies and before-and-after measurements.

The classification of papers within the appropriate areas and sub-areas was a challenge. Some misclassifications might arise from the subjective assessment of the research team. In particular, some papers apply to more than one (sub) area. For instance, the paper of Carraccio [21] stressed the applicability of portfolios in the assessment of trainees (area 3.1), but because of the formative applications it could also be placed in training content (area 2.3), as it represents what trainees do and reflects upon the content of their work. For reasons of clarity, these three reviews were therefore reported in the two most relevant (sub) areas.

Finally, the mixed populations in the studies are also a possible limitation to the interpretation of the results. Groups other than residents (e.g. medical students, nurses) were often included in the reviews, which makes it hard to identify evidence specific to post graduate trainees. The educational needs of senior trainees are different from those of junior ones. The heterogeneity within reviews is of concern and should be identified more precisely by future reviewers.

Conclusions

This systematic literature review identified and analyzed the available evidence for some areas of post graduate training. The majority of the reviews provided information about the training process, the assessment of trainees and the trainees. Indicators used for quality assessment purposes of post graduate training should be based on this evidence, but further research is needed for some areas, in particular to assess the quality of the training process.

Key Points of this systematic literature review

- This systematic review identified the available good quality reviews for some areas of the WFME framework: training approaches, assessment of trainees and working conditions are the areas most often reviewed;

- Useful criteria to select the candidates for post graduate medical education exist;

- There is a need to combine learning strategies: high quality feedback from an authoritative source is a key to success;

- Special attention is required for the choice of clinical settings: experiences in teams and practice in ambulatory care are essential to develop the trainee's competences;

- Many assessment tools are available but there is a need for a better assessment of their validity and for an evolution towards a multidimensional assessment;

- This review identified a gap in reviewed research for some (sub) areas of the WFME framework: mission and outcomes, the evaluation of the training process and the continuous renewal.

References

Eindrapportage projectgroep "kwaliteitsindicatoren". College voor de Beroepen en Opleidingen in de Gezondheidszorg. [http://www.cbog.nl/uploaded/FILES/htmlcontent/Projecten/Kwaliteitsindicatoren/Eindrapportage%20projectgroep%20Kwaliteitsindicatoren_versie_2.pdf]

Generic standards for specialty including GP training. [http://www.gmc-uk.org/Generic_standards_for_specialty_including_GP_training_Oct_2010.pdf_35788108.pdf_39279982.pdf]

Accreditation Council for Graduate Medical Education. [http://www.acgme.org/]

Kwaliteitsindicatoren voor medische vervolgopleidingen. [http://www.cbog.nl/uploaded/FILES/htmlcontent/Projecten/Kwaliteitsindicatoren/100428%20-%20def%20Kwaliteitsindicatoren%202e%20tranche%20zorgopleidingen.pdf]

The royal college of physicians and surgeons of Canada. The Canmeds project overview. [http://rcpsc.medical.org/canmeds/bestpractices/framework_e.pdf]

Postgraduate Medical Education: WFME standards for quality improvement. [http://www.wfme.org/]

Davis DJ, Ringsted C: Accreditation of undergraduate and graduate medical education: how do the standards contribute to quality?. Adv Health Sci Educ Theory Pract. 2006, 11 (3): 305-313. 10.1007/s10459-005-8555-4.

Adler G, von dem Knesebeck J, Hänle MM: Quality of undergraduate, graduate and continuing medical education. Z Evid Fortbild Qual Gesundhwes. 2008, 102 (4): 235-243. 10.1016/j.zefq.2008.04.004.

Reed D, Price EG, Windish DM, Wright SM, Gozu A, Hsu EB, Beach MC, Kern D, Bass EB: Challenges in Systematic Reviews of Educational Intervention Studies. Annals of Internal Medicine. 2005, 142 (12 Part 2): 1080-1089.

Education Resources Information Center. [http://www.eric.ed.gov/]

Research and Development Resource Base. [http://www.rdrb.utoronto.ca/about.php]

Methodology Checklist 1: Systematic Reviews and Meta-analyses. [http://www.sign.ac.uk/methodology/checklists.html]

Griffith CH, Rich EC, Hillson SD, Wilson JF: Internal medicine residency training and outcomes. J Gen Intern Med. 1997, 12 (6): 390-396. 10.1007/s11606-006-5089-2.

Lee AG, Beaver HA, Boldt HC, Olson R, Oetting TA, Abramoff M, Carter K: Teaching and assessing professionalism in ophthalmology residency training programs. Surv Ophthalmol. 2007, 52 (3): 300-314. 10.1016/j.survophthal.2007.02.003.

Ogrinc G, Headrick LA, Mutha S, Coleman MT, O'Donnell J, Miles PV: A framework for teaching medical students and residents about practice-based learning and improvement, synthesized from a literature review. Acad Med. 2003, 78 (7): 748-756. 10.1097/00001888-200307000-00019.

Byrne AJ, Pugsley L, Hashem MA: Review of comparative studies of clinical skills training. Medical Teacher. 2008, 30 (8): 764-767. 10.1080/01421590802279587.

Di Francesco L, Pistoria MJ, Auerbach AD, Nardino RJ, Holmboe ES: Internal medicine training in the inpatient setting. A review of published educational interventions. J Gen Intern Med. 2005, 20 (12): 1173-1180. 10.1111/j.1525-1497.2005.00250.x.

Issenberg SB, McGaghie WC, Petrusa ER, Gordon DL, Scalese RJ: Features and uses of high-fidelity medical simulations that lead to effective learning: A BEME systematic review. Medical Teacher. 2005, 27 (1): 10-28. 10.1080/01421590500046924.

Coomarasamy A, Khan KS: What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review. BMJ. 2004, 329 (7473): 1017. 10.1136/bmj.329.7473.1017.

Flores-Mateo G, Argimon JM: Evidence based practice in postgraduate healthcare education: a systematic review. BMC Health Services Research. 2007, 7 (119).

Carraccio C, Englander R: Evaluating competence using a portfolio: A literature review and web-based application to the ACGME competencies. Teaching & Learning in Medicine. 2004, 16 (4): 381-387. 10.1207/s15328015tlm1604_13.

Lynagh M, Burton R, Sanson-Fisher R: A systematic review of medical skills laboratory training: Where to from here?. Medical Education. 2007, 41 (9): 879-887. 10.1111/j.1365-2923.2007.02821.x.

Chakraborti C, Boonyasai RT, Wright SM, Kern DE: A systematic review of teamwork training interventions in medical student and resident education. J Gen Intern Med. 2008, 23 (6): 846-853. 10.1007/s11606-008-0600-6.

Bowen JL, Irby DM: Assessing quality and costs of education in the ambulatory setting: a review of the literature. Acad Med. 2002, 77 (7): 621-680. 10.1097/00001888-200207000-00006.

Kennedy TJT, Regehr G, Baker GR, Lingard LA: Progressive independence in clinical training: a tradition worth defending?. Acad Med. 2005, 80 (10 Suppl): S106-111.

Fletcher KE, Underwood W, Davis SQ, Mangrulkar RS, McMahon LF, Saint S: Effects of work hour reduction on residents' lives: a systematic review. JAMA. 2005, 294 (9): 1088-1100. 10.1001/jama.294.9.1088.

McKinley RK, Strand J, Ward L, Gray T, Alun-Jones T, Miller H: Checklists for assessment and certification of clinical procedural skills omit essential competencies: A systematic review. Medical Education. 2008, 42 (4): 338-349. 10.1111/j.1365-2923.2007.02970.x.

Lurie SJ, Mooney CJ, Lyness JM: Measurement of the general competencies of the accreditation council for graduate medical education: a systematic review. Acad Med. 2009, 84 (3): 301-309. 10.1097/ACM.0b013e3181971f08.

Epstein RM, Hundert EM: Defining and assessing professional competence. JAMA. 2002, 287 (2): 226-235. 10.1001/jama.287.2.226.

Kogan JR, Holmboe ES, Hauer KE: Tools for Direct Observation and Assessment of Clinical Skills of Medical Trainees: A Systematic Review. JAMA. 2009, 302 (12): 1316-1326. 10.1001/jama.2009.1365.

Lynch DC, Surdyk PM, Eiser AR: Assessing professionalism: A review of the literature. Med Teach. 2004, 26 (4): 366-373. 10.1080/01421590410001696434.

Hutchinson L, Aitken P, Hayes T: Are medical postgraduate certification processes valid? A systematic review of the published evidence. Med Educ. 2002, 36 (1): 73-91. 10.1046/j.1365-2923.2002.01120.x.

Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DW: Systematic review of the literature on assessment, feedback and physicians' clinical performance*: BEME Guide No. 7. Medical Teacher. 2006, 28 (2): 117-128. 10.1080/01421590600622665.

Hamdy H, Prasad K, Anderson B, Scherpbier A, Williams R, Zwierstra R, Cuddihy H: BEME systematic review: Predictive values of measurements obtained in medical schools and future performance in medical practice. Medical Teacher. 2006, 28 (2): 103-116. 10.1080/01421590600622723.

Pilotto LS, Duncan GF, Anderson-Wurf J: Issues for clinicians training international medical graduates: A systematic review. Medical Journal of Australia. 2007, 187 (4): 225-228.

Mitchell M, Srinivasan M, West DC, Franks P, Keenan C, Henderson M, Wilkes M: Factors affecting resident performance: development of a theoretical model and a focused literature review. Acad Med. 2005, 80 (4): 376-389. 10.1097/00001888-200504000-00016.

Yao DC, Wright SM: The challenge of problem residents. J Gen Intern Med. 2001, 16 (7): 486-492. 10.1046/j.1525-1497.2001.016007486.x.

Prins JT, Gazendam-Donofrio SM, Tubben BJ, van der Heijden FMMA, van de Wiel HBM, Hoekstra-Weebers JEHM: Burnout in medical residents: a review. Med Educ. 2007, 41 (8): 788-800. 10.1111/j.1365-2923.2007.02797.x.

Thomas NK: Resident burnout. JAMA. 2004, 292 (23): 2880-2889. 10.1001/jama.292.23.2880.

McCray LW, Cronholm PF, Bogner HR, Gallo JJ, Neill RA: Resident physician burnout: is there hope?. Fam Med. 2008, 40 (9): 626-632.

Finch SJ: Pregnancy during residency: a literature review. Acad Med. 2003, 78 (4): 418-428. 10.1097/00001888-200304000-00021.

Boex JR, Leahy PJ: Understanding residents' work: moving beyond counting hours to assessing educational value. Acad Med. 2003, 78 (9): 939-944. 10.1097/00001888-200309000-00022.

Post RE, Quattlebaum RG, Benich JJ: Residents-as-teachers curricula: a critical review. Acad Med. 2009, 84 (3): 374-380. 10.1097/ACM.0b013e3181971ffe.

Dewey CM, Coverdale JH, Ismail NJ, Culberson JW, Thompson BM, Patton CS, Friedland JA: Residents-as-teachers programs in psychiatry: a systematic review. Can J Psychiatry. 2008, 53 (2): 77-84.

Jha V, Quinton ND, Bekker HL, Roberts TE: Strategies and interventions for the involvement of real patients in medical education: A systematic review. Medical Education. 2009, 43 (1): 10-20. 10.1111/j.1365-2923.2008.03244.x.

Kilminster SM, Jolly BC: Effective supervision in clinical practice settings: a literature review. Med Educ. 2000, 34 (10): 827-840. 10.1046/j.1365-2923.2000.00758.x.

Bowen JL, Salerno SM, Chamberlain JK, Eckstrom E, Chen HL, Brandenburg S: Changing habits of practice. Transforming internal medicine residency education in ambulatory settings. J Gen Intern Med. 2005, 20 (12): 1181-1187. 10.1111/j.1525-1497.2005.0248.x.

Hutter MM, Kellogg KC, Ferguson CM, Abbott WM, Warshaw AL: The impact of the 80-hour resident workweek on surgical residents and attending surgeons. Ann Surg. 2006, 243 (6): 864-871. 10.1097/01.sla.0000220042.48310.66. discussion 871-865

European Working Time Directive. [http://ec.europa.eu/social/main.jsp?catId=706&langId=en&intPageId=205]

Laurant RD, Hermens R, Braspenning J, Grol R, Sibbald B: Substitution of doctors by nurses in primary care. Cochrane Database of Systematic Reviews. 2005, CD001271-2

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6920/11/80/prepub

Acknowledgements

We thank Patrice Chalon, librarian of the Federal Knowledge Centre of Health Care of Belgium for helping to search the databases.

Funding

Funding was provided by Belgian Health Care Knowledge Centre. Project 2008-27

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

AD, RR and DP designed this study. AD, RR and JW selected and analysed data. AD, RR and JW drafted the manuscript. All authors read and approved the final version of the manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Damen, A., Remmen, R., Wens, J. et al. Evidence based post graduate training. A systematic review of reviews based on the WFME quality framework. BMC Med Educ 11, 80 (2011). https://doi.org/10.1186/1472-6920-11-80

DOI: https://doi.org/10.1186/1472-6920-11-80