Abstract

We consider the problem of identifying the pollution source of a 1D parabolic equation from the initial and the final data. The problem is ill posed and regularization is in order. Using the quasi-boundary method and the truncation Fourier method, we present two regularization methods. Error estimates are given and the methods are illustrated by numerical experiments.

1 Introduction

In this paper, we consider an inverse problem of identifying a pollution source from data measured at some points in a watershed. The pollution source causes water contamination in some region. In all industrial countries, groundwater pollution is a serious environmental problem that puts the whole ecosystem, including humans, in jeopardy. The quality and quantity of groundwater strongly affect human life and may lead to changes in the natural environment (see, e.g., [1]). Most efforts to model pollutant transport rely on mathematical methods. Solute transport in a uniform groundwater flow can be described by the one-dimensional (1D) linear parabolic equation

where Ω is a spatial domain, is the solute concentration, V represents the velocity of watershed movement, R denotes the self-purifying function of the watershed, and is a source term causing the pollution function . Putting

we can transform the latter equation into

where ; we still call it the source function. Motivated by this relationship between equations (1) and (2), in the present paper we seek a pair of functions satisfying (2) subject to the initial and final conditions

and the boundary condition . To consider a more general case, we will replace D in (2) by a given function which is defined later.

This inverse source problem is ill posed. Indeed, a solution corresponding to the given data may not exist, and even if a (unique) solution exists, it may not depend continuously on the data. Because the problem is severely ill posed and difficult, a priori assumptions on the form of the heat source are needed. In fact, let be a basis in . Then the function F can be written as

In the simplest case, one reduces this approximation to its first term , where the function φ is given. Source terms of this form frequently appear, for example, as a control term for the parabolic equation.

In another context, this problem is known as heat source identification; since 1970 it has received considerable attention from researchers in a variety of fields using different methods. When the pollution source has the form , the inverse source problem was studied in [2]. In [3], the authors considered the heat source as a function of both space and time variables, in additive or separable form. Many researchers have treated the source as a function of space or time only. In [4, 5], the authors determined a heat source dependent on one variable in a bounded domain by the boundary-element method and an iterative algorithm. In [6], the authors investigated a heat source that depends only on time by the method of fundamental solutions.

Many authors have studied uniqueness and stability conditions for the determination of a heat source in this separable form. Despite these uniqueness and stability results, regularization in the unstable cases remains difficult. For a long time, the problem has been investigated for a heat source that depends only on time [4, 5, 7] or only on space [1, 3, 8–10]. As regards regularization methods, there are few papers with a rigorous theoretical analysis of identifying the heat source , where φ is a given function. Trong et al. [11, 12] treated this problem by the Fourier transform method. Recently, Qian and Li [13] considered the case and (), in which problem (1) describes a heat process of radioisotope decay with decay rate λ. In [14], Hasanov identified a heat source of the form in the variable-coefficient heat conduction equation using a variational method. However, the general case with a time-dependent coefficient of Δu in the main equation has received little attention and remains open. In this paper, we consider the following generalized equation:

and u satisfies the condition (3). This kind of equation (5) has many applications in groundwater pollution. It is a simple form of advection-convection, which appears in groundwater pollution source identification problems (see [1]). Such a model is related to the detection of the pollution source causing water contamination in some region.

The remainder of the paper is organized as follows. In Section 2, we apply the quasi-boundary value method and the truncation method to solve the problem (2)-(3), and we estimate the error between the exact solution and the regularized solution, obtaining logarithmic and Hölder orders. Finally, some numerical experiments are given in Section 3.

2 Identification and regularization for inhomogeneous source depending on time variable

Let , be the norm and the inner product in . Let be a continuous function on . We set . The problem (5) can be transformed into

By an elementary calculation, we can solve the ordinary differential equation (6) to get

or

where . Note that increases rather quickly when n becomes large. Thus the exact data function must satisfy the property that decays rapidly. In applications, however, the input data can only be measured and are never exact. We assume that the data functions , satisfy

and , , , where the constant ϵ represents a noise level and .
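To make the instability concrete, the following minimal sketch looks at a simplified special case that is assumed here for illustration and is not taken from the formulas above: constant coefficient a(t) = 1, φ(t) = 1, zero initial data, and the spatial domain (0, π) with sine eigenfunctions. Under these assumptions the n-th sine coefficient of the final data is g_n = f_n (1 − e^{−n²T})/n², so recovering f_n multiplies any error in g_n by the factor computed below. The function name `amplification` is hypothetical.

```python
import math


def amplification(n, T=1.0):
    """Factor that maps the n-th sine coefficient of g to that of f.

    Assumed model (illustration only): u_t - u_xx = f(x) on (0, pi),
    u(x, 0) = 0, so that g_n = f_n * (1 - exp(-n^2 T)) / n^2.
    """
    return n**2 / (1.0 - math.exp(-(n**2) * T))


# Error amplification for a few frequencies
modes = (1, 5, 10, 50)
factors = [amplification(n) for n in modes]
for n, c in zip(modes, factors):
    print(f"n = {n:3d}  amplification = {c:.3f}")
```

Even in this simple case the factor grows without bound as n increases, so noise in the high-frequency coefficients dominates a naive reconstruction unless it is damped or cut off.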

Lemma 1 Let , . Then for all and , we have

Proof Case 1. . It is clear that

From the inequality , we get

Case 2. . Set . Then we obtain

We continue to estimate the term .

If then , thus

else if then and due to the assumption . Therefore, . This implies that

Hence, in this case, we get

Set for . Taking the derivative of this function, we get

The function g has a maximum at the point , so that . This implies that . Therefore

From (11) and (14), we have

From (11), we get

□

Lemma 2 Let be a continuous function on . Let , . Then we have

where

Proof (i) Since , we have

(ii) Since , we get . Then using Lemma 1, we get

□

2.1 Regularization by a quasi-boundary value method

Denote by the norm in Sobolev space defined by

where .

We modify the problem (3)-(5) by perturbing the Fourier expansion of the final value g as follows:

where and is a regularization parameter such that . This problem is based on the quasi-boundary value regularization method given in [11], which has been used to solve various types of inverse problems [11, 15]. The solution of this problem is given by

The following theorem gives an error estimate between the regularized solution and the exact solution.

Theorem 1 Suppose that such that and for some . Let be measured data at satisfying (8). Let be the regularized solution given by (20). If we select such that

then and we have the following estimate:

Proof We define

and

We divide the proof into three steps.

Step 1. Estimate . From (20) and (22), we have

Step 2. Estimate . From (22), (23), and (18), we have

On the other hand, we have

Since , we get

Hence

It follows from (25) and (26) that

Since

and , we obtain

Here

Hence

Step 3. Estimate . In fact, using the Fourier expansion of f, we have

Using Lemma 2, we obtain

This implies that

Combining Steps 1, 2, and 3 and using the triangle inequality, we get

□

Remark 1 If we choose , , then (21) holds.

Remark 2 In this theorem, under the assumption , we obtain an error of logarithmic order. In the next section, we introduce a truncation method which improves the order of the error. We obtain Hölder-type error estimates (of order , ) under a weaker assumption on f, i.e., .
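The effect of a quasi-boundary value regularization can be sketched numerically. The block below is only an illustration under assumptions that are not taken from this paper: it uses the simplified model a(t) = 1, φ(t) = 1, zero initial data on (0, π), and the common form of the method in which the final condition u(x, T) = g(x) is replaced by u(x, T) + ε f(x) = g(x). The perturbation actually used in (18)-(20) may differ. The names `forward_multiplier` and `qbv_coefficient` are hypothetical.

```python
import math


def forward_multiplier(n, T=1.0):
    """d_n such that g_n = d_n * f_n in the assumed model
    u_t - u_xx = f(x), u(x, 0) = 0 on (0, pi)."""
    return (1.0 - math.exp(-(n**2) * T)) / n**2


def qbv_coefficient(g_n, n, eps, T=1.0):
    """Regularized coefficient solving d_n * f_n + eps * f_n = g_n,
    i.e. the final condition perturbed to u(x, T) + eps * f(x) = g(x)."""
    return g_n / (forward_multiplier(n, T) + eps)


# A data error of size 1e-3 sitting in the 50th sine coefficient
noise = 1e-3
naive = noise / forward_multiplier(50)            # unregularized inversion
regularized = qbv_coefficient(noise, 50, eps=1e-2)
print(naive, regularized)
```

In this sketch the regularized coefficient is bounded by the data error divided by ε, whatever the frequency, which is the stabilizing effect that a choice of ε depending on the noise level is meant to balance against the approximation error.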

2.2 Regularization by a truncation method

Theorem 2 Suppose that . Let be measured data at satisfying (8). Put

where , . Then the following estimate holds:

where

Proof From (7) and (31), we have

where

and

Step 1. We estimate . In fact, from (34), we get

Using integration by parts, we have

Hence

On the other hand, since is embedded continuously in we can assume that . So, there exists an such that . We have

It follows that

In a similar way, we also obtain . Hence . This implies that

Step 2. We estimate . The term (35) can be rewritten as follows:

Then

Using , we have

In a similar way and using (26), we also obtain

It is easy to see that . It implies that

Therefore

where . Hence

Combining (33), (41), and (46), we obtain

Since , we obtain

where . □
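The truncation idea behind Theorem 2 can be illustrated with a short sketch: only the first N Fourier modes of the data are inverted and the remaining ones are discarded. As before, the block assumes a simplified model that is not taken from this paper (a(t) = 1, φ(t) = 1, zero initial data on (0, π), sine eigenfunctions), and the names `forward_multiplier` and `truncated_source` are hypothetical.

```python
import math


def forward_multiplier(n, T=1.0):
    """d_n such that g_n = d_n * f_n in the assumed model
    u_t - u_xx = f(x), u(x, 0) = 0 on (0, pi)."""
    return (1.0 - math.exp(-(n**2) * T)) / n**2


def truncated_source(g_coeffs, N, x, T=1.0):
    """Evaluate sum_{n=1}^{N} (g_n / d_n) * sin(n x); modes n > N are dropped.

    g_coeffs[n - 1] holds the n-th sine coefficient of the final data g.
    """
    n_max = min(N, len(g_coeffs))
    return sum(
        g_coeffs[n - 1] / forward_multiplier(n, T) * math.sin(n * x)
        for n in range(1, n_max + 1)
    )


# Data for the source f(x) = sin(x) + 0.5 sin(2x), plus an error in mode 40
g = [0.0] * 40
g[0] = forward_multiplier(1)
g[1] = 0.5 * forward_multiplier(2)
g[39] = 1e-3

x = 1.0
exact = math.sin(x) + 0.5 * math.sin(2 * x)
approx = truncated_source(g, N=5, x=x)       # error mode is cut off
unstable = truncated_source(g, N=40, x=x)    # error mode is amplified
print(exact, approx, unstable)
```

Choosing N too small loses genuine content of the source, while choosing it too large lets amplified noise back in, so in practice N is tied to the noise level.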

3 Numerical results

In this section, we present some numerical examples illustrating the theory of Section 2. In the numerical experiments, we measure the error between the exact source and the approximate source by the root mean square error (RMSE):

with , a discretization of function f, .
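As a minimal sketch, the RMSE between two discretized functions can be computed as follows. The block uses the usual definition (square root of the mean squared pointwise difference); the exact normalization in the formula above may differ, and the function name `rmse` is an illustrative choice.

```python
import math


def rmse(exact, approx):
    """Root mean square error between two equally long sequences of samples."""
    if len(exact) != len(approx) or len(exact) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((e - a) ** 2 for e, a in zip(exact, approx))
    return math.sqrt(squared / len(exact))


# Samples of an exact source and a perturbed approximation on a grid in (0, pi)
grid = [k * math.pi / 10 for k in range(1, 10)]
f_exact = [math.sin(x) for x in grid]
f_approx = [math.sin(x) + 0.01 for x in grid]
print(rmse(f_exact, f_approx))
```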

Now, we consider

where

We can see the exact source

Noisy data are generated in FORTRAN 95 with the routine , which returns a random variable with the uniform distribution on . Therefore, we have measurement data with noise

where , with , works as the amplitude of noise.
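A comparable noise model can be sketched in Python. The interval of the uniform distribution is not recoverable from the text above, so the block assumes [-1, 1]; it also uses Python's standard generator in place of the FORTRAN routine. The function name `add_uniform_noise` and the sample data are hypothetical.

```python
import math
import random


def add_uniform_noise(values, eps, seed=0):
    """Return values[i] + eps * r_i with r_i uniform on [-1, 1] (assumed interval)."""
    rng = random.Random(seed)  # fixed seed so the experiment is reproducible
    return [v + eps * rng.uniform(-1.0, 1.0) for v in values]


# Illustrative final data sampled on a grid, perturbed with amplitude eps
grid = [k * math.pi / 20 for k in range(21)]
g_exact = [math.sin(x) for x in grid]
eps = 1e-2
g_noisy = add_uniform_noise(g_exact, eps)
max_dev = max(abs(a - b) for a, b in zip(g_exact, g_noisy))
print(max_dev)
```

With this model the pointwise deviation never exceeds eps, so eps plays the role of the noise amplitude.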

We can easily see

and we have convergence to zero.

Figure 1 compares the exact data with the measured data.

We consider the source approximation obtained with the quasi-boundary value regularization of Section 2.1

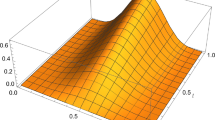

The corresponding errors, with , are reported in Table 1 and illustrated in Figure 2.

On the other hand, we have the source approximation with the truncation Fourier regularization

The corresponding errors are reported in Table 2 and illustrated in Figure 3.

References

Atmadja J, Bagtzoglou AC: Marching-jury backward beam equation and quasi-reversibility methods for hydrologic inversion: application to contaminant plume spatial distribution recovery. Water Resour. Res. 2003, 39: 1038-1047.

Cannon JR, Duchateau P: Structural identification of an unknown source term in a heat equation. Inverse Probl. 1998, 14: 535-551. 10.1088/0266-5611/14/3/010

Savateev EG: On problems of determining the source function in a parabolic equation. J. Inverse Ill-Posed Probl. 1995, 3: 83-102.

Farcas A, Lesnic D: The boundary-element method for the determination of a heat source dependent on one variable. J. Eng. Math. 2006, 54: 375-388. 10.1007/s10665-005-9023-0

Johansson T, Lesnic D: Determination of a spacewise dependent heat source. J. Comput. Appl. Math. 2007, 209: 66-80. 10.1016/j.cam.2006.10.026

Yan L, Fu C-L, Yang F-L: The method of fundamental solutions for the inverse heat source problem. Eng. Anal. Bound. Elem. 2008, 32: 216-222. 10.1016/j.enganabound.2007.08.002

Yang F, Fu C-L: Two regularization methods for identification of the heat source depending only on spatial variable for the heat equation. J. Inverse Ill-Posed Probl. 2009, 17(8): 815-830.

Cheng W, Fu C-L: Identifying an unknown source term in a spherically symmetric parabolic equation. Appl. Math. Lett. 2013, 26: 387-391. 10.1016/j.aml.2012.10.009

Yang F, Fu C-L: A simplified Tikhonov regularization method for determining the heat source. Appl. Math. Model. 2010, 34: 3286-3299. 10.1016/j.apm.2010.02.020

Yang F, Fu C-L: A mollification regularization method for the inverse spatial-dependent heat source problem. J. Comput. Appl. Math. 2014, 255: 555-567.

Trong DD, Tuan NH: A nonhomogeneous backward heat problem: regularization and error estimates. Electron. J. Differ. Equ. 2008, 2008: Article ID 33

Trong DD, Quan PH, Alain PND: Determination of a two dimensional heat source: uniqueness, regularization and error estimate. J. Comput. Appl. Math. 2006, 191: 50-67. 10.1016/j.cam.2005.04.022

Qian A, Li Y: Optimal error bound and generalized Tikhonov regularization for identifying an unknown source in the heat equation. J. Math. Chem. 2011, 49(3): 765-775. 10.1007/s10910-010-9774-3

Hasanov A: Identification of spacewise and time dependent source terms in 1D heat conduction equation from temperature measurement at a final time. Int. J. Heat Mass Transf. 2012, 55: 2069-2080. 10.1016/j.ijheatmasstransfer.2011.12.009

Denche M, Bessila K: A modified quasi-boundary value method for ill-posed problems. J. Math. Anal. Appl. 2005, 301: 419-426. 10.1016/j.jmaa.2004.08.001

Acknowledgements

This research is funded by the Institute for Computational Science and Technology at Ho Chi Minh City (ICST HCMC) under the project name ‘Inverse parabolic equation and application to groundwater pollution source’.


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Tuan, N.H., Trong, D.D., Thong, T.H. et al. Identification of the pollution source of a parabolic equation with the time-dependent heat conduction. J Inequal Appl 2014, 161 (2014). https://doi.org/10.1186/1029-242X-2014-161

DOI: https://doi.org/10.1186/1029-242X-2014-161