Abstract

In this paper, we present the application of a new method for measuring the Hubble parameter H(z) using the anisotropy of the luminosity distance (\(d_{L}\)) of gravitational wave (GW) standard sirens from neutron star (NS) binary systems. The method has not been put into practice so far because GWs could not be detected. However, LIGO's success in detecting GWs from a black hole (BH) binary merger has shown that this new method is potentially feasible. We apply the method to several GW detection projects, including Advanced LIGO (aLIGO), the Einstein Telescope (ET) and DECIGO, and evaluate how well the resulting H(z) constrains cosmological parameters. It turns out that H(z) measured with aLIGO and ET has poor accuracy, while H(z) measured with DECIGO has good accuracy. We simulate H(z) data in redshift bins of width 0.1 using the H(z) error estimates for DECIGO, and use the mock data to forecast constraints on cosmological parameters. Compared with previous data and methods, the mock data give clearly tighter constraints on cosmological parameters and a correspondingly higher Figure of Merit (FoM, the reciprocal of the area enclosed by the \(2\sigma \) confidence region). For a 3-year observation of DECIGO standard sirens, the FoM is as high as 170.82. For a 10-year observation, the FoM could reach 569.42. For comparison, the FoM of the 38 actually observed H(z) data (OHD) is 9.3. We also investigate the undulant universe, for which the constraints on cosmological parameters improve comparably. These improvements indicate that the new method has great potential for future cosmological constraints.

1 Introduction

In the twenty-first century, we have witnessed the bloom of precision cosmology, which even ranked second on the list of "Insights of the decade" published by Science magazine in 2010 [1]. The key to precision cosmology is to constrain cosmological parameters and equations of state accurately, which leads to a better understanding of the evolution of our universe. Four main observational probes have been developed to constrain cosmological parameters so far [2]: Supernovae (SN, [3]), Baryon Acoustic Oscillations (BAO, [4]), Galaxy Clusters (CL, [5]) and Weak Lensing (WL, [6]). In addition, a relatively new tool, observational Hubble parameter data (OHD), has become increasingly popular in recent years because it constrains cosmological parameters effectively. The efficiency of H(z) lies in the fact that it is the only observable that directly traces the expansion history of our universe. Unlike the luminosity distance (\(d_{L}\)) of SNe, H(z) contains no integral and is directly connected with the cosmological parameters. This makes it powerful for constraining them, because an integral can smooth out details and hide important information. As Ma and Zhang [7] reported, H(z) constrains cosmological parameters much more tightly than the same number of SNe. To achieve the same constraint as the SN subset ConstitutionT, one needs only 64 H(z) data points, assuming a Gaussian prior \(H_{0}\pm \sigma _{H_{0}}\) on \(H_{0}\).

There are various ways to measure H(z), which can be classified into three types: (1) the differential age method [8]; (2) the radial BAO method [9]; (3) the standard siren method [10]. The first two techniques have been used in past measurements of H(z), but the number of observed Hubble parameter data (OHD) is still insufficient. Only 38 OHD sets are available so far, and their accuracy is far from desirable. Now, with the development of gravitational wave (GW) detection technology, it is time to look forward to the third method: GW standard sirens. GW standard sirens were first proposed and discussed by Schutz [10], who showed that the Hubble constant can be determined by observing the GWs emitted by the decaying orbit of a neutron star (NS) binary system. In 2015, the second-generation GW detector Advanced LIGO (aLIGO) was not yet operating at its design sensitivity, but it still detected the first GW signal during its first observing run [11]. According to theory, the GW waveform of a compact binary system encodes \(d_{L}\). This allows a direct measurement of \(d_{L}\), the crucial quantity used in this paper. GW detection therefore opens up new possibilities for cosmology.

The detection of GWs not only confirms a prediction of general relativity, but also makes standard sirens a realistic prospect [12,13,14]. Toshiya Namikawa et al. [15] studied GW standard sirens as a cosmological probe without redshift information. However, it is hard to obtain the corresponding redshift without an electromagnetic counterpart, and the absence of redshift impedes further research. If H(z) were available together with its redshift, the scope of research would be widely broadened. In 2006, a new way to reduce the relative error of H(z) using the dipole of \(d_{L}\) was proposed [16], in which the dipole is measured with SNe. The problem is that this method needs a large number of SNe to obtain a relatively accurate H(z), which cannot be achieved in reality. The method also has difficulty measuring H(z) at high redshift, but it is an instructive idea. Atsushi Nishizawa, Atsushi Taruya et al. [17] offered an alternative by pointing out that \(d_{L}\) can be obtained from the gravitational waveform of NS binary systems instead of SNe. We follow their idea and choose NS binary systems as our research subject in this paper, because a rough estimate shows that the number of observed NS binary systems will be much larger than that of SNe. NS binary systems can therefore dramatically reduce the statistical error.

Del Pozzo [18] proposed a general Bayesian framework for cosmological inference, which can conveniently include prior information about the GW source. This framework defines the likelihood from the difference between the strain in each detector and the GW template. The posterior probability distribution of the cosmological parameters is then computed from the quasi-likelihood obtained by marginalizing over the intrinsic parameters of the GW signal. Applying the framework, the author constrained the Hubble constant \(H_0\) to an accuracy of 4–5\(\%\) at \(95\%\) confidence. Nearly all subsequent work on using GW sources for cosmological inference is based on this Bayesian framework. Taylor et al. [19] adopted the same framework, but with a likelihood defined on the assumption that the number of GW events detected by a detector is a Poisson-distributed random variate. They measured the Hubble constant with GW signals of NS binaries by exploiting the narrow mass distribution of the underlying NS population, and determined \(H_0\) to \(\pm 10\%\) using \(\sim 100\) observations. The authors assumed that the NS masses follow a Gaussian distribution and that both masses in a double NS system are equal. Under these assumptions, they found that the chirp masses are approximately normally distributed, and obtained the corresponding mean and standard deviation. Using the same method, they then explored the prospects for constraining cosmology with GW observations of NS binaries by the proposed Einstein Telescope (ET), a third-generation ground-based interferometer. This time they fixed \(H_0\), \(\varOmega _{m,0}\) and \(\varOmega _{\varLambda ,0}\) and constrained the dark-energy equation of state (EOS) parameters [20]. With a catalog of \(10^5\) events, they constrained the dark-energy EOS parameters to an accuracy similar to the forecasted constraints from future CMB + BAO + SNIa measurements. Chen et al. [21] investigated the measurement of the Hubble constant in various cases: with and without electromagnetic counterparts, and for binary NS mergers and binary black hole mergers. They showed that LIGO and Virgo can be expected to constrain the Hubble constant to a precision of \(\sim 2\%\) within 5 years and \(\sim 1\%\) within a decade. Vitale and Chen [22] studied neutron star–black hole mergers and focused on measuring the luminosity distance to the source. They concluded that the \(1\sigma \) statistical uncertainty of the luminosity distance for spinning black hole–neutron star binaries can be up to a factor of \(\sim 10\) smaller than for a non-spinning binary neutron star merger with the same signal-to-noise ratio. Del Pozzo et al. [23] investigated how accurately cosmological parameters can be measured using only gravitational wave observations of binary neutron star systems by the Einstein Telescope. They used the Fisher matrix method to extract the redshift of a source, given that information about the neutron star equation of state is available [24]. By directly simulating \(10^3\) binary neutron star detections, they found that \(H_0\), \(\varOmega _m\), \(\varOmega _\varLambda \), \(w_0\) and \(w_1\) can be measured at the \(95\%\) level with accuracies of \(\sim 8\%\), \(65\%\), \(39\%\), \(80\%\) and \(90\%\), respectively. Unlike these previous studies, which focused on constraining the parameters of specific cosmological models, our work emphasizes a model-independent measurement of H(z). A model-independent approach generally produces a weaker constraint on any particular model than a model-specific analysis. However, it is more flexible if the true model deviates from the assumed one.

Given the current observational status of GWs, different frequency windows are targeted by different detectors. Second-generation detectors such as aLIGO and Virgo mainly target the 10–1000 Hz window, while next-generation detectors plan to reach lower frequencies. DECIGO is designed to be most sensitive at 0.1–10 Hz, and the Einstein Telescope (ET) may reach frequencies of \(\sim 1\) Hz. The space-based eLISA can even detect GWs at \(10^{-4}\)–\(10^{-1}\) Hz. In this paper, we use GW sirens to measure H(z) by estimating the error of \(d_{L}\), which differs slightly from the method proposed by Schutz [10]. The GW sources in this paper are NS binary systems, whose signal frequencies mostly lie in the 10–1000 Hz range. We therefore ignore projects whose optimal sensitivity is far from this range, such as eLISA, and choose those whose optimal sensitivity lies near 10–1000 Hz. Finally, aLIGO, the Einstein Telescope (ET) and DECIGO are chosen as our research subjects.

Most notably, the Advanced LIGO and Advanced Virgo detectors made their first observation of a binary neutron star inspiral, detecting the signal GW170817 with a combined signal-to-noise ratio of 32.4 [13, 14]. This event also provided the first direct evidence of a link between binary neutron star mergers and short \(\gamma \)-ray bursts. Combined analyses of the gravitational-wave data and the electromagnetic emission provide new insights and independent tests of cosmological models, so GW170817 marks the beginning of a new era of cosmology. Using the data of GW170817, a gravitational-wave standard siren measurement gave a Hubble constant of \(70^{+12}_{-8}\;\mathrm {km\;s^{-1}\;Mpc^{-1}}\) [14]. Different from these works, this paper focuses mainly on two aspects: (1) how well the new method performs when applied to specific detector projects; (2) how good the resulting H(z) data are, that is, how tightly they can constrain cosmological parameters. This paper is organized as follows. In Sect. 2, we sketch the idea of the GW standard siren method and apply it to several GW detection projects. In Sect. 3, we simulate H(z) data and analyze how well the mock data constrain \(\varLambda \)CDM and the undulant universe. In Sect. 4, we discuss the results and briefly comment on the corresponding redshifts. Throughout this paper, we adopt natural units, \(c=G=1\).

2 Methods

2.1 Dipole of luminosity distance

Suppose the universe were completely homogeneous and isotropic on large scales, and the observer were at rest with respect to the cosmic microwave background (CMB). The luminosity distance \(d_{L}\) would then have the same form and expression as in standard cosmology. In reality, perturbations around this ideal condition introduce correction terms to \(d_{L}\) [25]. Therefore \(d_{L}\) can be written as follows:

\[ d_{L}=d_{L}^{(0)}+d_{L}^{(1)}, \]

where \(d_{L}^{(0)}\) is the luminosity distance in the usual sense, that is, in an unperturbed Friedmann universe. It is also the average of \(d_{L}\) over all directions. \(d_{L}^{(1)}\) is the dipole of \(d_{L}\). The higher-order terms, which come from weak gravitational lensing, are so small compared with the dipole that we ignore them here [26]. The dipole is dominated by the peculiar velocity of the observer. Details of the derivation can be found in [16]. Here is the final result:

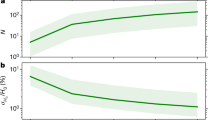

where \(|v_{0}|\), z, H(z) and \(\varDelta H(z)\) denote, respectively, the projection of the observer's peculiar velocity on the line of sight, the redshift of the observed source, the expansion rate at redshift z and the absolute error of H(z). \(\varDelta d_{L}^{(0)}\) and \(\varDelta d_{L}^{(1)}\) denote the errors of \(d_{L}^{(0)}\) and \(d_{L}^{(1)}\), respectively. From the equations above, given \(d_{L}^{(1)}/ d_{L}^{(0)}\) and \(\varDelta d_{L}^{(0)}/d_{L}^{(0)}\), \(\varDelta H(z)/H(z)\) can be calculated easily. The ratio \(d_{L}^{(1)}/d_{L}^{(0)}\) is shown in Fig. 1. To obtain \(\varDelta H(z)/H(z)\), the only remaining task is to find \(\varDelta d_{L}^{(0)}/d_{L}^{(0)}\). This is done in the following subsection by analyzing the observed GW waveform. In addition, the mean error \(\varDelta H(z)\) reduces to \(\varDelta H(z)/\sqrt{N}\) if we observe N independent sources at a given redshift, so the accuracy can be improved by observing a large number of sources.

The value of \(d_{L}^{(1)}/d_{L}^{(0)}\) at different redshifts for \(v_{0}=369 \; \mathrm {km/s}\) [27]. The ratio becomes very large, and eventually diverges, at low redshift, because it approaches \((1+z)|v_{0}|/z\) in the limit \(z\rightarrow 0\).
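As a rough numerical check of the low-redshift behavior described in the caption, the following Python sketch evaluates \(d_{L}^{(1)}/d_{L}^{(0)}\). It rests on two assumptions of ours. First, the dipole amplitude is taken as \(d_{L}^{(1)}=|v_{0}|(1+z)^{2}/H(z)\), which is our reading of the leading-order result of [16] and not a transcription of the equation image above. Second, the background is a flat \(\varLambda \)CDM model with the fiducial values used in Sect. 3 (\(\varOmega _{m}=0.27\), \(\varOmega _{\varLambda }=0.73\), \(H_{0}=74.2\;\mathrm {km\;s^{-1}\;Mpc^{-1}}\)).

```python
import math

C_KM_S = 299792.458            # speed of light [km/s]
V0_KM_S = 369.0                # observer velocity relative to the CMB [27]
H0, OM, OL = 74.2, 0.27, 0.73  # assumed flat LCDM fiducial (Sect. 3 values)


def hubble(z):
    """H(z) in km/s/Mpc for flat LCDM."""
    return H0 * math.sqrt(OM * (1 + z) ** 3 + OL)


def comoving_distance(z, n=2000):
    """D_C(z) = c * int_0^z dz'/H(z') in Mpc, composite Simpson's rule (n even)."""
    h = z / n
    total = 1.0 / hubble(0.0) + 1.0 / hubble(z)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) / hubble(i * h)
    return C_KM_S * total * h / 3.0


def dipole_ratio(z):
    """d_L^(1)/d_L^(0), ASSUMING d_L^(1) = |v_0| (1+z)^2 / H(z)."""
    dl0 = (1 + z) * comoving_distance(z)        # Mpc (flat universe)
    dl1 = V0_KM_S * (1 + z) ** 2 / hubble(z)    # (km/s)/(km/s/Mpc) = Mpc
    return dl1 / dl0


def low_z_limit(z):
    """(1+z)|v_0|/z with v_0 in units of c: the small-z form quoted in the caption."""
    return (1 + z) * (V0_KM_S / C_KM_S) / z


for z in (0.01, 0.1, 1.0, 3.0):
    print(f"z={z:4.2f}  ratio={dipole_ratio(z):.3e}  (1+z)|v0|/z={low_z_limit(z):.3e}")
```

At z = 0.01 the two columns agree to well under a percent, and the ratio falls steadily with redshift. This is why the dipole method is intrinsically harder at high z.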

2.2 GW standard sirens

SNe can be used to illustrate the method of reducing the error of H(z). However, because of their limited number and their redshift distribution, SNe do not work well, especially at high redshift. A larger number of observed sources can dramatically reduce the error of H(z), so we choose NS binary systems as an alternative to SNe. Black hole binaries pose another problem: black holes seldom emit electromagnetic radiation, which has so far made it hard to measure their redshifts. This is another important reason for choosing NS binary systems.

In GW experiments, the properties of the source and cosmological information can be extracted by comparing the detected waveform with theoretical templates. This is what the LIGO team did when they first detected GWs from a binary black hole merger [11]. The typical Fourier transform of the GW waveform can be expressed as

\[ \tilde{h}(f)=\frac{A\,M_{z}^{5/6}}{d_{L}(z)}\,f^{-7/6}\,e^{i\varPsi (f)}, \]

which is based on an average over sky location. Here \(A=(\sqrt{6}\pi ^{2/3})^{-1}\) is a constant geometrically averaged over the inclination angle of the binary system. \(d_{L}(z)\) is the luminosity distance at redshift z, which we can identify with \(d_{L}^{(0)}\) because we observe many sources at a given redshift. \(M_{z}=(1+z)\eta ^{3/5}M_{t}\) is the redshifted chirp mass, with total mass \(M_{t}=m_{1}+m_{2}\) and symmetric mass ratio \(\eta =m_{1}m_{2}/M_{t}^{2}\). The remaining function, \(\varPsi (f)\), is a little intricate: it is the frequency-dependent phase caused by orbital evolution. It is usually treated with the post-Newtonian (PN) approximation, an approximation to general relativity in the weak-field, slow-motion regime [28]. Its concrete expression does not affect the final result, because this term cancels in the following calculation. Here we only need to know that it is a function of the coalescence time \(t_{c}\), the coalescence phase \(\phi _{c}\), \(M_{z}\), f and \(\eta \).

There are five unknown parameters, namely \(M_{z}\), \(\eta \), \(t_{c}\), \(\phi _{c}\) and \(d_{L}\). Of these, \(d_{L}\) is the only one unrelated to the intrinsic properties of the binary system. For computational convenience, we consider only equal-mass NS binaries with \(1.4M_{\bigodot }\) components and set \(t_{c}=0\), \(\phi _{c}=0\). Then \(M_{z}=1.22(1+z)M_{\bigodot }\) and \(\eta =0.25\). GWs may carry some information about the redshift [24, 29], but we have no such data, so a general method for obtaining the redshift is needed. We therefore still rely on electromagnetic observations to determine the corresponding redshift. Cutler and Holz [30] demonstrated the technological viability of this approach.
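The value \(M_{z}=1.22(1+z)M_{\bigodot }\) quoted above follows directly from the definition of \(M_{z}\). The short Python check below reproduces it for two \(1.4M_{\bigodot }\) components; the function name is ours.

```python
def redshifted_chirp_mass(m1, m2, z):
    """Return (M_z, eta) with M_z = (1+z) * eta**(3/5) * M_t; masses in solar masses."""
    mt = m1 + m2                 # total mass M_t
    eta = m1 * m2 / mt ** 2      # symmetric mass ratio
    return (1 + z) * eta ** 0.6 * mt, eta


mz, eta = redshifted_chirp_mass(1.4, 1.4, 0.0)
print(f"eta = {eta:.2f}, M_z/(1+z) = {mz:.4f} M_sun")  # prints: eta = 0.25, M_z/(1+z) = 1.2188 M_sun
```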

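We use the Fisher matrix to estimate the error. The Fisher matrix has its limitations: it gives only the Cramér–Rao lower bound on the parameter errors, and it breaks down at low SNR. The error estimate for \(d_{L}\) is based on the Fisher matrix, given by

\[ \varGamma _{ab}=4\,\mathrm {Re}\int _{f_{min}}^{f_{max}}\frac{\partial _{a}\tilde{h}^{*}(f)\,\partial _{b}\tilde{h}(f)}{P(f)}\,df, \]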

where \(\partial _{a}\) denotes the derivative with respect to parameter \(\theta _{a}\). DECIGO has eight interferometric signals, so for DECIGO \(\varGamma _{ab}\) should be multiplied by 8. We fix all parameters except \(d_{L}\), so \(d_{L}\) is the only parameter in \(\varGamma _{ab}\). P(f) is the noise power spectrum; the curves for DECIGO, ET and aLIGO are shown in Fig. 2. Below we give the noise curves \(P_{1}(f)\), \(P_{2}(f)\) and \(P_{3}(f)\) for DECIGO, ET and aLIGO, respectively.
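Since \(d_{L}\) is the only free parameter, the Fisher matrix simplifies considerably. Taking the amplitude as \(A M_{z}^{5/6}f^{-7/6}/d_{L}\), \(d_{L}\) enters only through the overall factor \(1/d_{L}\), so \(\varGamma _{d_{L}d_{L}}=\rho ^{2}/d_{L}^{2}\). Here \(\rho ^{2}=4\int |\tilde{h}|^{2}/P(f)\,df\) is the squared signal-to-noise ratio. Hence \(\sigma _{instr}/d_{L}=1/\rho \), or \(1/(\sqrt{8}\rho )\) for DECIGO's eight signals. The Python sketch below checks this numerically with a central-difference derivative. The noise curve `toy_psd` is a hypothetical placeholder, not any of \(P_{1}(f)\), \(P_{2}(f)\), \(P_{3}(f)\). The source values are illustrative, and all names are ours.

```python
import math

A = 1.0 / (math.sqrt(6.0) * math.pi ** (2.0 / 3.0))  # inclination-averaged constant
MSUN_S = 4.925490947e-6       # G M_sun / c^3 in seconds (geometric units)
MPC_S = 1.0292712503e14       # 1 Mpc / c in seconds


def amp(f, mz, dl):
    """|h(f)| = A M_z^(5/6) f^(-7/6) / d_L; the phase Psi(f) drops out of |h|^2."""
    return A * mz ** (5.0 / 6.0) * f ** (-7.0 / 6.0) / dl


def toy_psd(f):
    """HYPOTHETICAL noise power spectrum [1/Hz], for illustration only."""
    return 1e-47 * (1.0 + (f / 10.0) ** 2 + (0.3 / f) ** 4)


def integrate_log(func, fmin, fmax, steps=4000):
    """Midpoint rule in ln f: int func(f) df = int func(f) f d(ln f)."""
    lo, hi = math.log(fmin), math.log(fmax)
    d = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        f = math.exp(lo + (k + 0.5) * d)
        total += func(f) * f * d
    return total


def sigma_instr(mz, dl, fmin, fmax, n_signals=1):
    """1/sqrt(Gamma_{dL dL}), with dh/d(d_L) from a central difference."""
    eps = 1e-6 * dl

    def integrand(f):
        dh = (amp(f, mz, dl + eps) - amp(f, mz, dl - eps)) / (2.0 * eps)
        return dh * dh / toy_psd(f)

    gamma = n_signals * 4.0 * integrate_log(integrand, fmin, fmax)
    return 1.0 / math.sqrt(gamma)


def snr(mz, dl, fmin, fmax, n_signals=1):
    """sqrt(n_signals * 4 int |h|^2 / P df) on the same frequency grid."""
    rho2 = 4.0 * integrate_log(lambda f: amp(f, mz, dl) ** 2 / toy_psd(f), fmin, fmax)
    return math.sqrt(n_signals * rho2)


mz = 1.22 * (1 + 1.0) * MSUN_S   # M_z for z = 1, in seconds
dl = 6300.0 * MPC_S              # roughly d_L(z = 1) for the fiducial LCDM (illustrative)
rel = sigma_instr(mz, dl, 0.2, 100.0, n_signals=8) / dl
print(f"sigma_instr/d_L = {rel:.3e},  1/SNR = {1.0 / snr(mz, dl, 0.2, 100.0, 8):.3e}")
```

The identity holds for any noise curve, which is why \(\varPsi (f)\) never needs to be specified for this one-parameter estimate.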

DECIGO The Deci-Hertz Interferometer Gravitational Wave Observatory (DECIGO) is a planned space-based GW observatory aimed at the 0.1–10 Hz frequency window. Its configuration is still to be decided. Here we adopt the following configuration: arm length 1000 km, output laser power 10 W at wavelength \(\lambda =532\) nm, mirror diameter 1 m with mass 100 kg, and Fabry–Pérot (FP) cavity finesse 10. Its noise curve is then [31]

ET The Einstein Telescope (ET) is a third-generation GW detector whose design has not been finalized. Here we consider only the simplest case, with 10 km arms, and adopt the fitting expression given by Keppel and Ajith [32]

aLIGO aLIGO is an operating second-generation detector whose optimal sensitivity band matches the frequency window of GWs from NS binary systems. The first run of aLIGO did not reach its design sensitivity. Here we use the noise curve fitted by Ref. [33]. It is not an exact expression, but an approximation of the original curve given by Ref. [34]

In the expression for \(\varGamma _{ab}\), the lower frequency cutoff \(f_{min}\) is a function of the observation time \(T_{obs}\): \(f_{min}=0.233( 1M_{\bigodot } / M_{z} )^{5/8}( 1\,\mathrm {yr} / T_{obs} )^{3/8}\;{\text {Hz}}\). In our case, for a given \(T_{obs}\), \(f_{min}\) changes little with \(M_{z}\) and always lies in the region of high strain noise. It therefore makes little difference to the integral, and any reasonable value of \(f_{min}\) would work. For prudence, however, we use the full expression for \(f_{min}\) when calculating the integral. The upper cutoff, \(f_{max}\), is the frequency of the innermost stable circular orbit, whose typical value is of order kHz [35]; more specifically, \(f_{max}\simeq 2000\) Hz in our case. In practice, \(f_{max}\) can be set according to the behavior of the integrand. The integrand drops sharply as f increases, so the contribution of high frequencies to \(\varGamma _{ab}\) can be neglected. We therefore set \(f_{max}\) to 100 Hz, 2000 Hz and 2000 Hz for DECIGO, ET and aLIGO, respectively. The one-sigma instrumental error is then

\[ \sigma _{instr}=\sqrt{(\varGamma ^{-1})_{d_{L}d_{L}}}. \]
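The coefficient 0.233 Hz in \(f_{min}\) can be recovered from the leading-order (Newtonian) chirp time, \(\tau =\frac{5}{256}(\pi f)^{-8/3}M_{z}^{-5/3}\) in \(G=c=1\) units. One requires that the source spends \(T_{obs}\) in band before merger. This is our own consistency check, not a derivation given in the text. The Python sketch below compares the two expressions; the function names are ours.

```python
import math

MSUN_S = 4.925490947e-6   # G M_sun / c^3 [s]
YEAR_S = 3.15576e7        # Julian year [s]


def chirp_time(f, mz_msun):
    """Newtonian time [s] from GW frequency f [Hz] to coalescence, chirp mass M_z in M_sun."""
    return 5.0 / 256.0 * (math.pi * f) ** (-8.0 / 3.0) * (mz_msun * MSUN_S) ** (-5.0 / 3.0)


def f_min_from_chirp_time(mz_msun, t_obs_yr):
    """Solve chirp_time(f) = T_obs for f."""
    t = t_obs_yr * YEAR_S
    return (5.0 / (256.0 * t)) ** 0.375 * (mz_msun * MSUN_S) ** (-0.625) / math.pi


def f_min_text(mz_msun, t_obs_yr):
    """Fitting formula quoted in the text."""
    return 0.233 * (1.0 / mz_msun) ** 0.625 * (1.0 / t_obs_yr) ** 0.375


mz = 1.22  # M_z at z = 0, in solar masses
for t_obs in (1.0, 3.0, 10.0):
    print(t_obs, f_min_from_chirp_time(mz, t_obs), f_min_text(mz, t_obs))
```

For \(M_{z}=1.22M_{\bigodot }\) and \(T_{obs}=1\) yr, both expressions give about 0.21 Hz. A longer observation lowers \(f_{min}\) only slowly, as \(T_{obs}^{-3/8}\).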

For a given source, the one-sigma error \(\sigma _{instr}\) of \(d_{L}\) arises from instrumental noise. For a given detector, whether DECIGO, ET or aLIGO, the accuracy of \(d_{L}\) is the same for different observation times, because the error is set by the properties of the detector and the observation is band-limited. The source is visible only while it moves from the low-frequency limit of the detector's sensitivity band to merger. For any observation longer than that, the precision is the same, since the source is no longer observed. The \(\sigma _{instr}\) of the three detectors is shown in Fig. 3. As can be seen, the accuracy is far from desirable, and the H(z) error would increase further if other errors were included. We therefore need to reduce the error, which we do in the next subsection.

2.3 H(z) error

In the last subsection, we calculated the instrumental error \(\sigma _{instr}\) for a given NS binary system and a given detector. Besides the instrumental error, which dominates, there are two main sources of error, namely the lensing error and the peculiar-velocity error. The lensing error is due to weak gravitational lensing along the line of sight. Here we adopt a recent fit by [36],

The peculiar-velocity error is essentially a Doppler effect caused by the motion of the host galaxy. It can be described as [37]

where \(\sigma _{v,gal}= 300\) km/s is the approximate one-dimensional velocity dispersion of galaxies. The relative error of \(d_{L}(z)\) is then:

Before calculating the relative error of H(z), there is one more step that improves the accuracy. The mean error decreases statistically if we have many independent sources, so the error of H(z) may be reduced by observing many NS binary systems at the same redshift. The question is how much the error can be reduced. First we need the number distribution \(\dot{n}(z)\) of NS binary systems as a function of redshift, which can be estimated. Following Cutler and Harms [38], the NS–NS merger rate can be fitted as

\[ \dot{n}(z)=\dot{n}_{0}\,s(z), \]

where s(z) is estimated from the star formation history inferred from UV luminosity, and \(\dot{n}_{0}\) is the present merger rate. The number of NS–NS mergers \(\varDelta N\) in a redshift bin of width \(\varDelta z\) centered on z is then

\[ \varDelta N(z)=T_{obs}\int _{z-\varDelta z/2}^{z+\varDelta z/2} 4\pi \left[\frac{d_{L}(z')}{1+z'}\right]^{2}\frac{\dot{n}(z')}{(1+z')\,H(z')}\,dz'. \]

Recent work does not provide solid evidence for the exact value of \(\dot{n}_{0}\). The latest range inferred from the observation of GW170817 is between \(0.32\times 10^{-6}\) and \(4.7 \times 10^{-6}\;\mathrm {Mpc^{-3}\;yr^{-1}}\) [13]. Moreover, not every merger event will be detected. Here we face a conundrum. Since we aim to evaluate the method rather than to plan an actual observation, we somewhat arbitrarily set \(\dot{n}_{0}=1.0 \times 10^{-6}\;\mathrm {Mpc^{-3}\;yr^{-1}}\). We also assume that all merger events can be detected, and take the redshift bin width \(\varDelta z=0.1\). This gives the estimated number of NS binary mergers observed in 10 years at different redshifts, shown in Fig. 4.
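A minimal Python sketch of the bin-count integral \(\varDelta N(z)\), using \(\dot{n}_{0}=1.0\times 10^{-6}\;\mathrm {Mpc^{-3}\;yr^{-1}}\) and \(\varDelta z=0.1\) as adopted above. Two ingredients are our assumptions. The first is a flat \(\varLambda \)CDM background with the Sect. 3 fiducial values. The second is the piecewise form of s(z): \(1+2z\) for \(z\le 1\), \(3(5-z)/4\) for \(1<z<5\), and zero beyond, which is our reading of Cutler and Harms [38]. Because \(\varDelta N\propto T_{obs}\), the averaged error \(\sigma /\sqrt{\varDelta N}\) falls as \(T_{obs}^{-1/2}\).

```python
import math

C_KM_S = 299792.458
H0, OM, OL = 74.2, 0.27, 0.73    # assumed flat LCDM fiducial
N0 = 1.0e-6                      # present NS-NS merger rate [Mpc^-3 yr^-1], as in the text


def hubble(z):
    return H0 * math.sqrt(OM * (1 + z) ** 3 + OL)


def simpson(func, a, b, n):
    """Composite Simpson's rule with n (even) intervals."""
    h = (b - a) / n
    s = func(a) + func(b)
    for i in range(1, n):
        s += (4.0 if i % 2 else 2.0) * func(a + i * h)
    return s * h / 3.0


def comoving_distance(z):
    """D_C = d_L/(1+z) in Mpc for a flat universe."""
    return simpson(lambda x: C_KM_S / hubble(x), 0.0, z, 200)


def s_rate(z):
    """ASSUMED merger-rate evolution s(z) (our reading of Cutler & Harms [38])."""
    if z <= 1.0:
        return 1.0 + 2.0 * z
    if z < 5.0:
        return 0.75 * (5.0 - z)
    return 0.0


def delta_n(z, t_obs_yr, dz=0.1):
    """Number of mergers in [z - dz/2, z + dz/2] observed over t_obs_yr years."""
    def integrand(x):
        dc = comoving_distance(x)
        return 4.0 * math.pi * dc ** 2 * N0 * s_rate(x) / (1.0 + x) * C_KM_S / hubble(x)
    return t_obs_yr * simpson(integrand, z - dz / 2.0, z + dz / 2.0, 20)


for z in (0.1, 0.5, 1.0, 2.0, 3.0):
    n10 = delta_n(z, 10.0)
    print(f"z={z:.1f}  dN(10 yr)={n10:.3e}  error reduction 1/sqrt(dN)={1 / math.sqrt(n10):.2e}")
```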

Same as Fig. 5, but for ET

Same as Fig. 5, but for aLIGO. For a given observation time, the dashed and solid lines overlap, because the lensing error is small compared with the instrumental error of aLIGO

So far, the total number of observed SNe is only of the order of hundreds. The number of observed NS–NS merger events will be much larger, which gives a tremendous potential for reducing the mean error of H(z). According to the equation above, the number of NS–NS mergers in a fixed redshift bin grows linearly with \(T_{obs}\), so the mean error decreases as \(T_{obs}^{-1/2}\). A longer observation time can thus compensate for limited detector sensitivity.

Combining the information above, we can now calculate the H(z) error for a specific detector and a given observation time. The relative errors of H(z) for DECIGO, ET and aLIGO are shown in Figs. 5, 6 and 7, respectively, each for 1-year, 3-year and 10-year observations. The relative error for aLIGO is far too large to be of practical use in constraining cosmological parameters. The error for ET is somewhat better, especially at low redshift, because ET is more sensitive than aLIGO. DECIGO performs best with this method. When the redshift reaches 3, the relative error of H(z) grows because the number of observed NS–NS merger events decreases with redshift, but it remains quite small. Lengthening the observation time also reduces the error substantially. We stress here that \(\sigma _{lens}(z)\) contributes significantly to the distance error \(\varDelta d_{L}^{(0)}/d_{L}^{(0)}\). Various techniques have been developed to reduce \(\sigma _{lens}(z)\). Stefan Hilbert [39] suggested that deep shear surveys can reduce the lensing error. Hirata [36] found that the distance determination can typically be improved by a factor of 2–3 by exploiting the non-Gaussian nature of the lensing magnification distribution. Shapiro [40] used a "delensing" procedure, estimating the magnification from a weak lensing map and thereby removing it. It may be too optimistic to expect all of the lensing error to be removed. Still, it is reasonable to expect that it can be reduced to a very low level in the near future. Therefore, for simplicity, we ignore \(\sigma _{lens}(z)\) in the following sections.
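To see how the error terms compare, the following Python sketch combines them for a single source. The peculiar-velocity term uses the form \(\sigma _{v}/d_{L}=|1-(1+z)^{2}/(H(z)d_{L})|\,\sigma _{v,gal}\) (with c restored). This is a standard expression in the siren literature and is our assumption for the equation cited from [37]. Adding the terms in quadrature is also our assumption. The lensing term is passed in as an argument and defaults to zero, as in the following sections. The instrumental value in the loop is a hypothetical input, not a number read from Fig. 3.

```python
import math

C_KM_S = 299792.458
H0, OM, OL = 74.2, 0.27, 0.73     # assumed flat LCDM fiducial
SIGMA_V_GAL = 300.0               # 1D galaxy velocity dispersion [km/s]


def hubble(z):
    return H0 * math.sqrt(OM * (1 + z) ** 3 + OL)


def lum_distance(z, n=400):
    """d_L in Mpc for flat LCDM, Simpson's rule (n even)."""
    h = z / n
    s = 1.0 / hubble(0.0) + 1.0 / hubble(z)
    for i in range(1, n):
        s += (4.0 if i % 2 else 2.0) / hubble(i * h)
    return (1 + z) * C_KM_S * s * h / 3.0


def peculiar_velocity_rel_error(z):
    """ASSUMED form: |1 - c (1+z)^2 / (H d_L)| * sigma_v,gal / c."""
    ratio = C_KM_S * (1 + z) ** 2 / (hubble(z) * lum_distance(z))
    return abs(1.0 - ratio) * SIGMA_V_GAL / C_KM_S


def total_rel_error(z, instr_rel, lens_rel=0.0):
    """ASSUMED quadrature sum of instrumental, lensing and peculiar-velocity relative errors."""
    return math.sqrt(instr_rel ** 2 + lens_rel ** 2 + peculiar_velocity_rel_error(z) ** 2)


for z in (0.1, 0.5, 1.0, 2.0):
    print(f"z={z}: sigma_v/d_L={peculiar_velocity_rel_error(z):.2e}, "
          f"total (instr=0.05, hypothetical)={total_rel_error(z, 0.05):.4f}")
```

The peculiar-velocity term is about 1% at z = 0.1 but drops well below the instrumental error at higher redshift.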

3 Evaluation

3.1 Simulating data

The new method for measuring H(z) has been presented and its errors analyzed above. The question now is how well H(z) measured in this way can constrain cosmological parameters. No actual OHD have yet been obtained with this method, but a reasonable simulation can still help us forecast and evaluate its performance. Since H(z) from aLIGO has poor accuracy, we do not simulate or forecast for aLIGO. For ET, simulation and forecasting are possible, but the resulting constraint is weak, even weaker than that from the 38 OHD sets, so we do not show it here either. DECIGO is thus the only detector we discuss in the following sections.

Now that we have the error information of H(z), we can simulate OHD. Following the method of Shuo [41], we generate mock data for \(\varLambda \)CDM as

\[ H_{sim}(z)=H_{\varLambda CDM}(z)+H_{drift}(z). \]

We treat the deviation of \(H_{sim}\) from the theoretical \(\varLambda \)CDM value \(H_{\varLambda CDM}\), caused by the various errors, as a drift \(H_{drift}\). \(H_{drift}\) is a random variable drawn from the Gaussian distribution \(N(0,\varDelta H)\), where \(\varDelta H\) is calculated from the relative error obtained in the last section. Using a short Python script, we generate mock \(H_{sim}\) data for a 3-year observation in every redshift bin of width 0.1, shown in Fig. 8. There are 38 OHD sets so far, obtained by different methods and groups [8, 9, 42,43,44,45,46,47,48,49,50,51]. Figure 9 shows \(H_{\varLambda CDM}\) as a function of redshift together with the 38 OHD sets. As can be seen, the OHD scatter above and below \(H_{\varLambda CDM}\) at the same redshift, which supports our simulation scheme. Compared with our mock data, the accuracy of the actual OHD sets is clearly worse.
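The mock-data step described above can be sketched in a few lines of Python. This is our sketch, not the script used for Fig. 8. The relative-error function `toy_rel_err` is a hypothetical stand-in for the DECIGO curve in Fig. 5. The redshift grid from 0.1 to 3.0 in steps of 0.1 is illustrative, and a fixed seed is used for reproducibility.

```python
import math
import random

H0, OM, OL = 74.2, 0.27, 0.73   # fiducial LCDM values (Sect. 3.2)


def hubble_lcdm(z):
    """H(z) in km/s/Mpc with Omega_k = 1 - Omega_m - Omega_Lambda."""
    ok = 1.0 - OM - OL
    return H0 * math.sqrt(OM * (1 + z) ** 3 + ok * (1 + z) ** 2 + OL)


def toy_rel_err(z):
    """HYPOTHETICAL Delta H / H, growing mildly with redshift (illustration only)."""
    return 0.005 * (1.0 + z)


def simulate_ohd(rel_err, z_grid, seed=0):
    """Return (z, H_sim, Delta H) with H_sim = H_LCDM + H_drift, H_drift ~ N(0, Delta H)."""
    rng = random.Random(seed)
    data = []
    for z in z_grid:
        h_true = hubble_lcdm(z)
        sigma = rel_err(z) * h_true
        data.append((z, h_true + rng.gauss(0.0, sigma), sigma))
    return data


z_grid = [round(0.1 * k, 1) for k in range(1, 31)]
mock = simulate_ohd(toy_rel_err, z_grid, seed=42)
for z, h, s in mock[:3]:
    print(f"z={z:.1f}  H_sim={h:.2f} +/- {s:.2f} km/s/Mpc")
```

Using a dedicated `random.Random` instance keeps the mock catalog reproducible without touching the global random state.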

3.2 Forecasting

Now that we have the 3-year mock data, we can use them for forecasting. First we need a criterion for evaluating the constraining power of a dataset: the Figure of Merit (FoM). FoM can be defined in different ways, as long as its value reflects how tightly the data constrain the parameters. For convenience, we adopt the definition of Albrecht [52]: the reciprocal of the area enclosed by the \(2\sigma \) confidence contour, that is, a specified confidence region under a Gaussian distribution.

We choose \(\varLambda \)CDM as our fiducial model. In a standard \(\varLambda \)CDM universe with curvature \(\varOmega _{k}=1-\varOmega _{m}-\varOmega _{\varLambda }\), the Hubble parameter is

\[ H(z)=H_{0}\sqrt{\varOmega _{m}(1+z)^{3}+\varOmega _{k}(1+z)^{2}+\varOmega _{\varLambda }}. \]

\(H_{0}\) has been measured by a number of projects. From the 7-year WMAP observations, \(H_{0}=73\pm 3 \; \mathrm {km\;s^{-1}\;Mpc^{-1}}\) [53]. In this paper, we adopt the more recent value \(H_{0}=74.2\pm 3.6 \;\mathrm {km\;s^{-1}\;Mpc^{-1}}\) [54]. For \(\varOmega _{m}\) and \(\varOmega _{\varLambda }\) we adopt fiducial values of 0.27 and 0.73, respectively, because they are consistent with observations and because we used these values to generate the simulated data. All three parameters are assumed to be Gaussian distributed. By Bayes' theorem, the posterior probability density function of the parameters is

\[ P(\varOmega _{m},\varOmega _{\varLambda }\,|\,H)\propto \int \ell (H\,|\,\varOmega _{m},\varOmega _{\varLambda },H_{0})\,P(H_{0})\,dH_{0}, \]

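where \(\ell \) is the likelihood and \(P(H_{0})\) is the prior probability density function of \(H_{0}\). Assuming no covariance between the data points, \(\ell \) is given by

\[ \ell \propto \exp \left[-\frac{1}{2}\sum _{i}\frac{\left(H_{i}-H(z_{i};\varOmega _{m},\varOmega _{\varLambda },H_{0})\right)^{2}}{\sigma _{i}^{2}}\right], \]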

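where \(\sigma _{i}\) is the uncertainty of the data point \(H_{i}\). The prior \(P(H_{0})\) is Gaussian:

\[ P(H_{0})=\frac{1}{\sqrt{2\pi }\,\sigma _{H_{0}}}\exp \left[-\frac{(H_{0}-\bar{H}_{0})^{2}}{2\sigma _{H_{0}}^{2}}\right], \]

with \(\bar{H}_{0}=74.2\) and \(\sigma _{H_{0}}=3.6\;\mathrm {km\;s^{-1}\;Mpc^{-1}}\).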

The integral can then be evaluated for the given Gaussian prior \(P(H_{0})\). There is a point in parameter space that maximizes the probability density, \(P_{max}\). Given the choices described above, this point is \(\{0.27, 0.73, 74.2\}\) in this forecast. The formula

\[ -2\ln \frac{P}{P_{max}}=\varDelta \chi ^{2} \]

defines the contour of a given confidence region, corresponding to the value of \(\varDelta \chi ^{2}\). We have three parameters here, \(\varOmega _{m},\varOmega _{\varLambda },H_{0}\). The contours are drawn in the two-dimensional \((\varOmega _{m},\varOmega _{\varLambda })\) plane after marginalizing over \(H_{0}\), so \(\varDelta \chi ^{2}\) is set to 2.3, 6.17 and 11.8 for the \(1\sigma , 2\sigma , 3\sigma \) confidence regions, respectively. For a direct comparison, we use the \(2\sigma \) confidence region, that is, \(\varDelta \chi ^{2}=6.17\), when calculating FoM.
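The \(\varDelta \chi ^{2}\) thresholds quoted above are those of a \(\chi ^{2}\) distribution with two degrees of freedom, appropriate for a two-dimensional contour. The Python sketch below reproduces them from the Gaussian \(1\sigma \), \(2\sigma \), \(3\sigma \) probabilities. For contrast, it also computes the larger thresholds that a joint three-parameter region would require. The function names are ours.

```python
import math


def gaussian_prob(n_sigma):
    """Probability inside +/- n_sigma of a 1D Gaussian."""
    return math.erf(n_sigma / math.sqrt(2.0))


def delta_chi2_2d(n_sigma):
    """2 d.o.f.: CDF(x) = 1 - exp(-x/2), so x = -2 ln(1 - p)."""
    return -2.0 * math.log(1.0 - gaussian_prob(n_sigma))


def chi2_cdf_3d(x):
    """CDF of chi^2 with 3 d.o.f."""
    return math.erf(math.sqrt(x / 2.0)) - math.sqrt(2.0 * x / math.pi) * math.exp(-x / 2.0)


def delta_chi2_3d(n_sigma, lo=0.0, hi=60.0):
    """Invert chi2_cdf_3d by bisection."""
    p = gaussian_prob(n_sigma)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_cdf_3d(mid) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for n in (1, 2, 3):
    print(f"{n} sigma: 2 params {delta_chi2_2d(n):.2f}, 3 params {delta_chi2_3d(n):.2f}")
```

The two-parameter column agrees with 2.3, 6.17 and 11.8 to the quoted precision.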

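To calculate the confidence region and FoM, we use the Fisher matrix forecast technique [55],

\[ F_{ij}=\sum _{k}\frac{1}{\sigma _{k}^{2}}\frac{\partial H(z_{k})}{\partial \theta _{i}}\frac{\partial H(z_{k})}{\partial \theta _{j}}, \]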

where the matrix elements are evaluated at the most likely values of the parameters. Denoting the marginalized Fisher matrix by \(\tilde{F}\), the contour in the parameter subspace is given by

\[ \varDelta \theta ^{T}\,\tilde{F}\,\varDelta \theta =\varDelta \chi ^{2}, \]

where \(\varDelta \theta \) is the deviation from the best-fit values of the parameters. When calculating FoM, we take \(\varDelta \chi ^{2}=6.17\). The enclosed area is \(\pi / \sqrt{\det (\tilde{F}/\varDelta \chi ^{2})}\), so FoM, the reciprocal of the area, is

\[ \mathrm {FoM}=\frac{1}{\pi }\sqrt{\det \left(\frac{\tilde{F}}{\varDelta \chi ^{2}}\right)}. \]
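A compact Python sketch of the Fisher-matrix FoM. It builds \(F_{ij}\) for \((\varOmega _{m},\varOmega _{\varLambda },H_{0})\) from analytic derivatives of the curved \(\varLambda \)CDM H(z). It can optionally add the Gaussian \(H_{0}\) prior to the \(H_{0}H_{0}\) element, which is our assumption about how the prior enters. It then marginalizes over \(H_{0}\) by inverting F and keeping the \((\varOmega _{m},\varOmega _{\varLambda })\) block of the covariance. Finally, it applies the FoM formula with \(\varDelta \chi ^{2}=6.17\). The 2% error bars are hypothetical, so the output is not expected to reproduce the FoM values reported here.

```python
import math

H0, OM, OL = 74.2, 0.27, 0.73   # fiducial point (Omega_m, Omega_Lambda, H_0)
DCHI2 = 6.17                    # 2-sigma threshold for a 2D contour


def grad_h(z):
    """Analytic dH/d(Omega_m, Omega_Lambda, H_0) at the fiducial point, Omega_k = 1 - Om - OL."""
    a2, a3 = (1 + z) ** 2, (1 + z) ** 3
    e = math.sqrt(OM * a3 + (1 - OM - OL) * a2 + OL)
    return (H0 * (a3 - a2) / (2 * e), H0 * (1 - a2) / (2 * e), e)


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]


def fom(z_grid, sigmas, sigma_h0=None):
    """FoM = sqrt(det(F_tilde / dchi2)) / pi in the (Omega_m, Omega_Lambda) plane."""
    f = [[0.0] * 3 for _ in range(3)]
    for z, s in zip(z_grid, sigmas):
        g = grad_h(z)
        for i in range(3):
            for j in range(3):
                f[i][j] += g[i] * g[j] / s ** 2
    if sigma_h0 is not None:          # ASSUMED: Gaussian H_0 prior as an extra Fisher term
        f[2][2] += 1.0 / sigma_h0 ** 2
    cov = inv3(f)                     # marginalize over H_0: keep the 2x2 covariance block
    det_cov2 = cov[0][0] * cov[1][1] - cov[0][1] * cov[1][0]
    det_ftilde = 1.0 / det_cov2       # F_tilde is the inverse of that block
    return math.sqrt(det_ftilde / DCHI2 ** 2) / math.pi


zs = [round(0.1 * k, 1) for k in range(1, 31)]
sig = [0.02 * H0 * math.sqrt(OM * (1 + z) ** 3 + OL) for z in zs]   # HYPOTHETICAL 2% errors
print(f"FoM = {fom(zs, sig, sigma_h0=3.6):.1f}")
```

Halving every error bar multiplies F by 4. Without the prior, this multiplies the FoM by exactly 4, which is a quick sanity check on an implementation.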

The contour is shown in Fig. 10. As expected from the quadratic form in \(\tilde{F}\), the contour is an ellipse. For a more direct comparison, we also derive constraints from the 38 OHD sets; their constraint on \(\varOmega _{m}\) and \(\varOmega _{\varLambda }\) is shown in Fig. 11. The mock data clearly constrain the parameters much more tightly than the available OHD sets, a significant improvement for precision cosmology. The simulation and forecast for a 10-year observation are carried out in the same way. As Fig. 12 shows, the constraint on cosmological parameters is even tighter, implying a correspondingly higher FoM.

Same as Fig. 10, but for 38 actual OHD sets. The FoM here is 9.3

The FoM of the 3-year mock data is about 170.82, while that of the 38 OHD sets is only about 9.3, a remarkable improvement. For the 10-year mock data, the FoM improves further, reaching 569.42. This gives good reason to expect H(z) data from this method to be highly useful.

Same as Fig. 10, but for 10-year-observation simulation. The FoM is 569.42

3.3 Nonstandard model

The \(\varLambda \)CDM universe matches observations quite well. However, it does not explain why matter and vacuum energy should be of the same order of magnitude at the present epoch. Here we consider another model, the undulant universe, which addresses this problem through alternating periods of acceleration and deceleration. In the undulant universe, the equation of state of the vacuum energy is an oscillatory function of the scale factor, \(w(a)=-\cos (\ln a)\), which satisfies \(w(a=1)=-1\) in the current universe. The Hubble parameter is then

\[ H(z)=H_{0}\sqrt{\varOmega _{m}(1+z)^{3}+\varOmega _{k}(1+z)^{2}+\varOmega _{\varLambda }(1+z)^{3}\exp \left[-3\sin (\ln (1+z))\right]}, \]

where \(\varOmega _{\varLambda }+\varOmega _{m}+\varOmega _{k}=1\). The simulation and forecasting are carried out in the same way as above. Here we consider the case of a 3-year observation, for which the FoM is 153.07; the constraint is displayed in Fig. 13.
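The vacuum-energy term of the undulant model follows from the continuity equation \(d\ln \rho _{\varLambda }/d\ln a=-3[1+w(a)]\). With \(w(a)=-\cos (\ln a)\) this gives \(\rho _{\varLambda }/\rho _{\varLambda ,0}=a^{-3}e^{3\sin (\ln a)}=(1+z)^{3}e^{-3\sin [\ln (1+z)]}\). The sketch below checks this closed form against direct numerical integration of the continuity equation and evaluates H(z). The parameter values (\(H_{0}=70\), \(\varOmega _{m}=0.3\), \(\varOmega _{\varLambda }=0.7\)) are placeholders, not the fiducial values of this work, and the function names are illustrative.

```python
import math


def w_undulant(a: float) -> float:
    """Equation of state of the undulant vacuum energy, w(a) = -cos(ln a)."""
    return -math.cos(math.log(a))


def rho_lambda_closed(z: float) -> float:
    """Vacuum-energy density relative to today: (1+z)^3 exp(-3 sin(ln(1+z)))."""
    return (1.0 + z) ** 3 * math.exp(-3.0 * math.sin(math.log1p(z)))


def rho_lambda_numeric(z: float, steps: int = 20000) -> float:
    """Same ratio from trapezoidal integration of d ln(rho)/d ln(a) = -3 (1 + w)."""
    def slope(ln_a: float) -> float:
        return -3.0 * (1.0 + w_undulant(math.exp(ln_a)))

    ln_a_end = -math.log1p(z)  # integrate ln a from 0 (today) down to ln a(z) <= 0
    h = ln_a_end / steps
    total = 0.5 * (slope(0.0) + slope(ln_a_end))
    total += sum(slope(i * h) for i in range(1, steps))
    return math.exp(total * h)


def hubble_undulant(z: float, h0: float = 70.0, om: float = 0.3, ol: float = 0.7) -> float:
    """H(z) of the undulant universe; Omega_k is fixed by Omega_L + Omega_m + Omega_k = 1."""
    ok = 1.0 - om - ol
    e2 = om * (1.0 + z) ** 3 + ok * (1.0 + z) ** 2 + ol * rho_lambda_closed(z)
    return h0 * math.sqrt(e2)


for z in (0.0, 0.5, 1.0, 2.0):
    print(f"z = {z:.1f}: rho ratio closed = {rho_lambda_closed(z):.6f}, "
          f"numeric = {rho_lambda_numeric(z):.6f}, H = {hubble_undulant(z):.2f}")
```

Unlike the constant \(w=-1\) of \(\varLambda \)CDM, the oscillating \(\sin [\ln (1+z)]\) factor lets the vacuum-energy density rise and fall with redshift. This is the feature that direct H(z) measurements can probe.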

4 Discussion and conclusions

In this paper, we mainly evaluate the quality of H(z) data obtained with the GW standard siren method by several GW detection projects whose most sensitive frequencies lie around the GW frequency window of typical NS binary systems. We calculate the relative error of H(z) for three detectors: DECIGO, ET and aLIGO. Although the sensitivities of the three detectors are of roughly the same order of magnitude, the H(z) errors from DECIGO are quite promising, while those from the other two are far from satisfactory. This does not mean, however, that H(z) data from this method are a dead end or meaningless, as the forecast based on DECIGO H(z) data shows. If the sensitivity of aLIGO or ET were slightly improved, or if their most sensitive frequency were simply moved to a lower range, their H(z) errors would become comparable to those of DECIGO. There are two ways to obtain better H(z) data: (1) the noise curve could be moved down, which increases the signal-to-noise ratio of a given source, so that more events pass the threshold and more “bright” events with smaller errors are obtained; (2) the noise curve could be moved to the left, so that more of the inspiral is observed, which helps improve parameter estimation at fixed signal-to-noise ratio.
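The two routes (1) and (2) can be illustrated with a toy calculation. The sketch assumes a Newtonian inspiral amplitude \(|\tilde{h}(f)|\propto f^{-7/6}\) with arbitrary normalization. It also assumes a hypothetical noise power spectral density \(S_{n}(f)\) made of a flat floor plus a steep low-frequency wall; this is not the noise curve of aLIGO, ET or DECIGO. Lowering the whole curve by a factor of 4 doubles the optimal SNR, \(\rho ^{2}=4\int |\tilde{h}(f)|^{2}/S_{n}(f)\,df\). Moving the wall to lower frequencies lets more of the inspiral contribute. A second helper counts the GW cycles a Newtonian inspiral spends between two frequencies, \(N=(f_{1}^{-5/3}-f_{2}^{-5/3})/[32\pi ^{8/3}(G\mathcal {M}/c^{3})^{5/3}]\). It is applied to two \(1.4\,M_{\odot }\) neutron stars (chirp mass \(\mathcal {M}\approx 1.22\,M_{\odot }\)). All function names and the noise model are illustrative assumptions.

```python
import math

G_MSUN_OVER_C3 = 4.925491e-6  # G * M_sun / c^3 in seconds


def optimal_snr(noise_scale: float = 1.0, f_wall: float = 5.0,
                f_low: float = 1.0, f_high: float = 1000.0, n: int = 20000) -> float:
    """Toy optimal SNR, rho^2 = 4 * integral of |h(f)|^2 / S_n(f) df.

    Hypothetical ingredients, for illustration only:
      |h(f)|^2 = f^(-7/3)                            (Newtonian inspiral, arbitrary units)
      S_n(f)   = noise_scale * (1 + (f_wall / f)^4)  (flat floor plus low-frequency wall)
    """
    u0, u1 = math.log(f_low), math.log(f_high)
    du = (u1 - u0) / n
    total = 0.0
    for i in range(n + 1):  # trapezoid rule in u = ln f, using df = f du
        f = math.exp(u0 + i * du)
        weight = 0.5 if i in (0, n) else 1.0
        s_n = noise_scale * (1.0 + (f_wall / f) ** 4)
        total += weight * f ** (-7.0 / 3.0) / s_n * f
    return math.sqrt(4.0 * total * du)


def inspiral_cycles(chirp_mass_msun: float, f_start: float, f_end: float) -> float:
    """Number of GW cycles of a Newtonian inspiral between f_start and f_end (Hz)."""
    t_chirp = G_MSUN_OVER_C3 * chirp_mass_msun
    return (f_start ** (-5.0 / 3.0) - f_end ** (-5.0 / 3.0)) / (
        32.0 * math.pi ** (8.0 / 3.0) * t_chirp ** (5.0 / 3.0))


# Route (1): move the noise curve down by a factor of 4.
snr_ratio = optimal_snr(noise_scale=0.25) / optimal_snr()
# Route (2): move the low-frequency wall to the left, from 5 Hz to 2.5 Hz.
snr_wall_left = optimal_snr(f_wall=2.5)
# Cycles in band for a 1.4 + 1.4 M_sun binary (chirp mass ~ 1.22 M_sun) up to 1 kHz.
mc = (1.4 * 1.4) ** 0.6 / (1.4 + 1.4) ** 0.2
cycles_from_10hz = inspiral_cycles(mc, 10.0, 1000.0)
cycles_from_1hz = inspiral_cycles(mc, 1.0, 1000.0)

print(f"SNR gain from 4x lower noise: {snr_ratio:.3f}")
print(f"SNR with wall at 5 Hz / 2.5 Hz: {optimal_snr():.4f} / {snr_wall_left:.4f}")
print(f"cycles from 10 Hz: {cycles_from_10hz:.0f}, from 1 Hz: {cycles_from_1hz:.0f}")
```

Because \(\rho \propto 1/d_{L}\), doubling the SNR doubles the distance out to which a given source passes a fixed threshold. At low redshift, where the surveyed volume grows as \(d^{3}\), the number of detectable events therefore grows by roughly a factor of 8.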

Because no real DECIGO H(z) data exist yet, we simulate H(z) data, and the simulated data show a promising ability to constrain cosmological parameters. Our aim, after all, is to evaluate the viability and quality of H(z) data from the GW standard siren method, not to put the method into actual operation. We find that, in the \(\varLambda \)CDM universe, the FoM of the mock data is greatly improved compared with that of the 38 actual OHD data points: the FoM is 9.3 for the 38 OHD data points, 170.82 for the 3-year observation and 569.42 for the 10-year observation. The advantage of H(z) is that it directly measures the expansion history, so it can be powerful in constraining nonstandard cosmologies. Beyond the standard model, we also explore its constraining ability in the undulant universe, where H(z) from DECIGO again performs excellently: the FoM of the 3-year-observation simulation is 153.07. The tight constraints from the mock data and the corresponding FoM values indicate a bright future for measuring H(z) with this method.

To extract as much physical information as possible, all known sources of error should be quantified. Apart from the three errors mentioned above, there is another kind of error, the calibration error. In GW detection, the response function is used to convert the electronic output of a GW detector into the measured GW signal, and the calibration error arises from the experimental measurement of this response function [56]. Calibration error in the response function degrades the ability to measure the physical properties of the GW source, so it is worth investigating. Lindblom [56] derived the optimal calibration accuracy: a lower accuracy would reduce the quantity and quality of the scientific information extracted from the data, while a higher accuracy would be made irrelevant by the intrinsic noise level of the detector. Vitale et al. [57] also investigated the effects of calibration error, based on the estimates obtained during LIGO’s fifth and Virgo’s third science runs. They found that calibration error slightly degrades parameter estimation in GW data analysis, but that the system with calibration error included has a better ability to locate the source, which would facilitate the detection of EM counterparts. Since the degradation caused by calibration error is relatively small and the error is hard to quantify, we neglect it in this work, and we expect future studies to provide more precise estimates.

It is worth pointing out that, at the current stage, detecting the electromagnetic (EM) counterpart is still a problem, and we are not yet able to evaluate it reliably or draw conclusions about it. Finding the EM counterpart of a GW event is crucial for GW astronomy, since it can reveal the processes and interactions during the merger [58]. Metzger and Berger [59] showed that a transient EM counterpart may occur within a few seconds after the binary merger, and considerable theoretical and experimental progress has been made [60, 61].

There have also been recent developments in astronomical and computing technologies. A number of low-latency GW trigger-generation pipelines have been proposed and tested, and they are now capable of generating event triggers within minutes of the arrival of a detectable signal [60, 62,63,64]. As more detectors are constructed, they can form a network, which is likely to improve localization [65,66,67]. Methods have also been proposed for identifying GW sources with large sky-localization errors [68, 69]. Since the GW localization error of early detector networks will be of order \(200\;{\text {deg}}^{2}\) [70], such methods would greatly improve the feasibility of EM follow-up. Instruments aimed at prompt EM detection mainly target the high-energy and optical bands, but the radio band is also a good candidate [58]. By the next decade, the Large Synoptic Survey Telescope (LSST) will be conducting its sky survey, which offers great promise for finding prompt EM counterparts. Such EM detection demands multi-wavelength programs with sensitive telescopes capable of covering large areas of the sky. A strong synergy exists between LSST and radio surveys in identifying EM counterparts at optical and radio wavelengths, and the information from both wavelengths about the post-merger physics will be complementary [71]. Here we focus on evaluating H(z) from standard sirens rather than on these technical details. Another problem is that the current detection range for NS binary systems is only 300 Mpc [72], much smaller than the range we assumed above. We therefore explore how the FoM changes with the detection range, as shown in Fig. 14. For the 3-year observation, the FoM becomes comparable to that of the 38 OHD data points when the detection range reaches \(z=0.7\).
For a 10-year observation, this critical value would be smaller. In other words, with a 10-year observation we could do much better than the 38 OHD data points even if the detection range were only \(z=0.7\). Such limited data can be this effective because GW standard sirens measure low-z H(z) with excellent accuracy. This demonstrates that even if high-z H(z) data cannot be obtained with the GW standard siren method, the low-z data are still valuable and powerful. In the future, measuring high-z H(z) in this way will require some improvement, probably a lower strain noise. Nevertheless, H(z) data from this method clearly have great power and potential.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: Observational data have been referenced in text, and simulated data available from the corresponding author upon request.]

References

A. Cho, Science 330, 1615 (2010). https://doi.org/10.1126/science.330.6011.1615

D.H. Weinberg, M.J. Mortonson, D.J. Eisenstein, C. Hirata, A.G. Riess, E. Rozo, Phys. Rep. 530, 87 (2013). https://doi.org/10.1016/j.physrep.2013.05.001

A.G. Riess, A.V. Filippenko, P. Challis, A. Clocchiatti, A. Diercks, P.M. Garnavich, R.L. Gilliland, C.J. Hogan, S. Jha, R.P. Kirshner, B. Leibundgut, M.M. Phillips, D. Reiss, B.P. Schmidt, R.A. Schommer, R.C. Smith, J. Spyromilio, C. Stubbs, N.B. Suntzeff, J. Tonry, Astron. J. 116, 1009 (1998). https://doi.org/10.1086/300499

D.J. Eisenstein, W. Hu, Astrophys. J. 496, 605 (1998). https://doi.org/10.1086/305424

F. Zwicky, Helv. Phys. Acta 6, 110 (1933)

F. Zwicky, Astrophys. J. 86, 217 (1937). https://doi.org/10.1086/143864

C. Ma, T.J. Zhang, Astrophys. J. 730, 74 (2011). https://doi.org/10.1088/0004-637X/730/2/74

D. Stern, R. Jimenez, L. Verde, M. Kamionkowski, S.A. Stanford, J. Cosmol. Astropart. Phys. 2, 008 (2010). https://doi.org/10.1088/1475-7516/2010/02/008

E. Gaztañaga, A. Cabré, L. Hui, Mon. Not. R. Astron. Soc. 399, 1663 (2009). https://doi.org/10.1111/j.1365-2966.2009.15405.x

B.F. Schutz, Nature (London) 323, 310 (1986). https://doi.org/10.1038/323310a0

B.P. Abbott, R. Abbott, T.D. Abbott, M.R. Abernathy, F. Acernese, K. Ackley, C. Adams, T. Adams, P. Addesso, R.X. Adhikari et al., Phys. Rev. Lett. 116(6), 061102 (2016). https://doi.org/10.1103/PhysRevLett.116.061102

B.P. Abbott, R. Abbott, T.D. Abbott, F. Acernese, K. Ackley, C. Adams, T. Adams, P. Addesso, R.X. Adhikari, V.B. Adya et al., Astrophys. J. Lett. 848, L13 (2017). https://doi.org/10.3847/2041-8213/aa920c

B.P. Abbott, R. Abbott, T.D. Abbott, F. Acernese, K. Ackley, C. Adams, T. Adams, P. Addesso, R.X. Adhikari, V.B. Adya et al., Phys. Rev. Lett. 119(16), 161101 (2017). https://doi.org/10.1103/PhysRevLett.119.161101

B.P. Abbott, R. Abbott, T.D. Abbott, F. Acernese, K. Ackley, C. Adams, T. Adams, P. Addesso, R.X. Adhikari, V.B. Adya et al., Nature (London) 551, 85 (2017). https://doi.org/10.1038/nature24471

T. Namikawa, A. Nishizawa, A. Taruya, Phys. Rev. Lett. 116(12), 121302 (2016). https://doi.org/10.1103/PhysRevLett.116.121302

C. Bonvin, R. Durrer, M. Kunz, Phys. Rev. Lett. 96(19), 191302 (2006). https://doi.org/10.1103/PhysRevLett.96.191302

A. Nishizawa, A. Taruya, S. Saito, Phys. Rev. D 83(8), 084045 (2011). https://doi.org/10.1103/PhysRevD.83.084045

W. Del Pozzo, Phys. Rev. D 86(4), 043011 (2012). https://doi.org/10.1103/PhysRevD.86.043011

S.R. Taylor, J.R. Gair, I. Mandel, Phys. Rev. D 85(2), 023535 (2012). https://doi.org/10.1103/PhysRevD.85.023535

S.R. Taylor, J.R. Gair, Phys. Rev. D 86(2), 023502 (2012). https://doi.org/10.1103/PhysRevD.86.023502

H.Y. Chen, M. Fishbach, D.E. Holz, Nature 562, 545 (2018). https://doi.org/10.1038/s41586-018-0606-0

S. Vitale, H.Y. Chen, Phys. Rev. Lett. 121(2), 021303 (2018). https://doi.org/10.1103/PhysRevLett.121.021303

W. Del Pozzo, T.G.F. Li, C. Messenger, Phys. Rev. D 95(4), 043502 (2017). https://doi.org/10.1103/PhysRevD.95.043502

C. Messenger, J. Read, Phys. Rev. Lett. 108(9), 091101 (2012). https://doi.org/10.1103/PhysRevLett.108.091101

M. Sasaki, Mon. Not. R. Astron. Soc. 228, 653 (1987). https://doi.org/10.1093/mnras/228.3.653

C. Bonvin, R. Durrer, M.A. Gasparini, Phys. Rev. D 73(2), 023523 (2006). https://doi.org/10.1103/PhysRevD.73.023523

N. Jarosik, C.L. Bennett, J. Dunkley, B. Gold, M.R. Greason, M. Halpern, R.S. Hill, G. Hinshaw, A. Kogut, E. Komatsu, D. Larson, M. Limon, S.S. Meyer, M.R. Nolta, N. Odegard, L. Page, K.M. Smith, D.N. Spergel, G.S. Tucker, J.L. Weiland, E. Wollack, E.L. Wright, APJS 192, 14 (2011). https://doi.org/10.1088/0067-0049/192/2/14

L.E. Kidder, C.M. Will, A.G. Wiseman, Phys. Rev. D 47, 3281 (1993). https://doi.org/10.1103/PhysRevD.47.3281

C. Messenger, K. Takami, S. Gossan, L. Rezzolla, B.S. Sathyaprakash, Phys. Rev. X 4(4), 041004 (2014). https://doi.org/10.1103/PhysRevX.4.041004

C. Cutler, D.E. Holz, Phys. Rev. D 80(10), 104009 (2009). https://doi.org/10.1103/PhysRevD.80.104009

S. Kawamura, T. Nakamura, M. Ando, N. Seto, K. Tsubono, K. Numata, R. Takahashi, S. Nagano, T. Ishikawa, M. Musha, K.I. Ueda, T. Sato, M. Hosokawa, K. Agatsuma, T. Akutsu, K.s. Aoyanagi, K. Arai, A. Araya, H. Asada, Y. Aso, T. Chiba, T. Ebisuzaki, Y. Eriguchi, M.K. Fujimoto, M. Fukushima, T. Futamase, K. Ganzu, T. Harada, T. Hashimoto, K. Hayama, W. Hikida, Y. Himemoto, H. Hirabayashi, T. Hiramatsu, K. Ichiki, T. Ikegami, K.T. Inoue, K. Ioka, K. Ishidoshiro, Y. Itoh, S. Kamagasako, N. Kanda, N. Kawashima, H. Kirihara, K. Kiuchi, S. Kobayashi, K. Kohri, Y. Kojima, K. Kokeyama, Y. Kozai, H. Kudoh, H. Kunimori, K. Kuroda, K.i. Maeda, H. Matsuhara, Y. Mino, O. Miyakawa, S. Miyoki, H. Mizusawa, T. Morisawa, S. Mukohyama, I. Naito, N. Nakagawa, K. Nakamura, H. Nakano, K. Nakao, A. Nishizawa, Y. Niwa, C. Nozawa, M. Ohashi, N. Ohishi, M. Ohkawa, A. Okutomi, K. Oohara, N. Sago, M. Saijo, M. Sakagami, S. Sakata, M. Sasaki, S. Sato, M. Shibata, H. Shinkai, K. Somiya, H. Sotani, N. Sugiyama, H. Tagoshi, T. Takahashi, H. Takahashi, R. Takahashi, T. Takano, T. Tanaka, K. Taniguchi, A. Taruya, H. Tashiro, M. Tokunari, S. Tsujikawa, Y. Tsunesada, K. Yamamoto, T. Yamazaki, J. Yokoyama, C.M. Yoo, S. Yoshida, T. Yoshino, Class. Quantum Gravity 23, S125 (2006). https://doi.org/10.1088/0264-9381/23/8/S17

D. Keppel, P. Ajith, Phys. Rev. D 82(12), 122001 (2010). https://doi.org/10.1103/PhysRevD.82.122001

K.G. Arun, B.R. Iyer, B.S. Sathyaprakash, P.A. Sundararajan, Phys. Rev. D 71(8), 084008 (2005). https://doi.org/10.1103/PhysRevD.71.084008

C. Cutler, K.S. Thorne, ArXiv General Relativity and Quantum Cosmology e-prints (2002). arXiv:gr-qc/0204090

I. Bartos, P. Brady, S. Márka, Class. Quantum Gravity 30(12), 123001 (2013). https://doi.org/10.1088/0264-9381/30/12/123001

C.M. Hirata, D.E. Holz, C. Cutler, Phys. Rev. D 81(12), 124046 (2010). https://doi.org/10.1103/PhysRevD.81.124046

C. Gordon, K. Land, A. Slosar, Phys. Rev. Lett. 99(8), 081301 (2007). https://doi.org/10.1103/PhysRevLett.99.081301

C. Cutler, J. Harms, Phys. Rev. D 73(4), 042001 (2006). https://doi.org/10.1103/PhysRevD.73.042001

S. Hilbert, J.R. Gair, L.J. King, Mon. Not. R. Astron. Soc. 412, 1023 (2011). https://doi.org/10.1111/j.1365-2966.2010.17963.x

C. Shapiro, D.J. Bacon, M. Hendry, B. Hoyle, Mon. Not. R. Astron. Soc. 404, 858 (2010). https://doi.org/10.1111/j.1365-2966.2010.16317.x

S. Yuan, T.J. Zhang, J. Cosmol. Astropart. Phys. 2, 025 (2015). https://doi.org/10.1088/1475-7516/2015/02/025

R. Jimenez, L. Verde, T. Treu, D. Stern, Astrophys. J. 593, 622 (2003). https://doi.org/10.1086/376595

J. Simon, L. Verde, R. Jimenez, Phys. Rev. D 71(12), 123001 (2005). https://doi.org/10.1103/PhysRevD.71.123001

M. Moresco, L. Verde, L. Pozzetti, R. Jimenez, A. Cimatti, J. Cosmol. Astropart. Phys. 7, 053 (2012). https://doi.org/10.1088/1475-7516/2012/07/053

M. Moresco, L. Pozzetti, A. Cimatti, R. Jimenez, C. Maraston, L. Verde, D. Thomas, A. Citro, R. Tojeiro, D. Wilkinson, J. Cosmol. Astropart. Phys. 5, 014 (2016). https://doi.org/10.1088/1475-7516/2016/05/014

C. Zhang, H. Zhang, S. Yuan, S. Liu, T.J. Zhang, Y.C. Sun, Res. Astron. Astrophys. 14, 1221–1233 (2014). https://doi.org/10.1088/1674-4527/14/10/002

M. Moresco, Mon. Not. R. Astron. Soc. 450, L16 (2015). https://doi.org/10.1093/mnrasl/slv037

C. Blake, S. Brough, M. Colless, C. Contreras, W. Couch, S. Croom, D. Croton, T.M. Davis, M.J. Drinkwater, K. Forster, D. Gilbank, M. Gladders, K. Glazebrook, B. Jelliffe, R.J. Jurek, I.H. Li, B. Madore, D.C. Martin, K. Pimbblet, G.B. Poole, M. Pracy, R. Sharp, E. Wisnioski, D. Woods, T.K. Wyder, H.K.C. Yee, Mon. Not. R. Astron. Soc. 425, 405 (2012). https://doi.org/10.1111/j.1365-2966.2012.21473.x

L. Samushia, B.A. Reid, M. White, W.J. Percival, A.J. Cuesta, L. Lombriser, M. Manera, R.C. Nichol, D.P. Schneider, D. Bizyaev, H. Brewington, E. Malanushenko, V. Malanushenko, D. Oravetz, K. Pan, A. Simmons, A. Shelden, S. Snedden, J.L. Tinker, B.A. Weaver, D.G. York, G.B. Zhao, Mon. Not. R. Astron. Soc. 429, 1514 (2013). https://doi.org/10.1093/mnras/sts443

X. Xu, A.J. Cuesta, N. Padmanabhan, D.J. Eisenstein, C.K. McBride, Mon. Not. R. Astron. Soc. 431, 2834 (2013). https://doi.org/10.1093/mnras/stt379

X.L. Meng, X. Wang, S.Y. Li, T.J. Zhang, ArXiv e-prints (2015). arXiv:1507.02517

A. Albrecht, G. Bernstein, R. Cahn, W.L. Freedman, J. Hewitt, W. Hu, J. Huth, M. Kamionkowski, E.W. Kolb, L. Knox, J.C. Mather, S. Staggs, N.B. Suntzeff, ArXiv Astrophysics e-prints (2006). arXiv:astro-ph/0609591

D.N. Spergel, R. Bean, O. Doré, M.R. Nolta, C.L. Bennett, J. Dunkley, G. Hinshaw, N. Jarosik, E. Komatsu, L. Page, H.V. Peiris, L. Verde, M. Halpern, R.S. Hill, A. Kogut, M. Limon, S.S. Meyer, N. Odegard, G.S. Tucker, J.L. Weiland, E. Wollack, E.L. Wright, APJS 170, 377 (2007). https://doi.org/10.1086/513700

A.G. Riess, L. Macri, S. Casertano, M. Sosey, H. Lampeitl, H.C. Ferguson, A.V. Filippenko, S.W. Jha, W. Li, R. Chornock, D. Sarkar, Astrophys. J. 699, 539 (2009). https://doi.org/10.1088/0004-637X/699/1/539

S. Dodelson, Modern cosmology (Academic Press, Amsterdam, 2003)

L. Lindblom, Phys. Rev. D 80(4), 042005 (2009). https://doi.org/10.1103/PhysRevD.80.042005

S. Vitale, W. Del Pozzo, T.G.F. Li, C. Van Den Broeck, I. Mandel, B. Aylott, J. Veitch, Phys. Rev. D 85(6), 064034 (2012). https://doi.org/10.1103/PhysRevD.85.064034

Q. Chu, E.J. Howell, A. Rowlinson, H. Gao, B. Zhang, S.J. Tingay, M. Boër, L. Wen, Mon. Not. R. Astron. Soc. 459, 121 (2016). https://doi.org/10.1093/mnras/stw576

B.D. Metzger, E. Berger, Astrophys. J. 746, 48 (2012). https://doi.org/10.1088/0004-637X/746/1/48

J. Abadie, B.P. Abbott, R. Abbott, T.D. Abbott, M. Abernathy, T. Accadia, F. Acernese, C. Adams, R. Adhikari, C. Affeldt et al., A&A 541, A155 (2012). https://doi.org/10.1051/0004-6361/201218860

P.S. Shawhan, in Observatory Operations: Strategies, Processes, and Systems IV, Proc. SPIE 8448, 84480Q (2012). https://doi.org/10.1117/12.926372

D. Buskulic, Virgo Collaboration, LIGO Scientific Collaboration, Class. Quantum Gravity 27(19), 194013 (2010). https://doi.org/10.1088/0264-9381/27/19/194013

K. Cannon, R. Cariou, A. Chapman, M. Crispin-Ortuzar, N. Fotopoulos, M. Frei, C. Hanna, E. Kara, D. Keppel, L. Liao, S. Privitera, A. Searle, L. Singer, A. Weinstein, Astrophys. J. 748, 136 (2012). https://doi.org/10.1088/0004-637X/748/2/136

S. Hooper, S.K. Chung, J. Luan, D. Blair, Y. Chen, L. Wen, Phys. Rev. D 86(2), 024012 (2012). https://doi.org/10.1103/PhysRevD.86.024012

S. Fairhurst, Class. Quantum Gravity 28(10), 105021 (2011). https://doi.org/10.1088/0264-9381/28/10/105021

S. Vitale, M. Zanolin, Phys. Rev. D 84(10), 104020 (2011). https://doi.org/10.1103/PhysRevD.84.104020

M. Zanolin, S. Vitale, N. Makris, Phys. Rev. D 81(12), 124048 (2010). https://doi.org/10.1103/PhysRevD.81.124048

S. Nissanke, M. Kasliwal, A. Georgieva, Astrophys. J. 767, 124 (2013). https://doi.org/10.1088/0004-637X/767/2/124

P.S. Cowperthwaite, E. Berger, Astrophys. J. 814, 25 (2015). https://doi.org/10.1088/0004-637X/814/1/25

L.P. Singer, L.R. Price, B. Farr, A.L. Urban, C. Pankow, S. Vitale, J. Veitch, W.M. Farr, C. Hanna, K. Cannon, T. Downes, P. Graff, C.J. Haster, I. Mandel, T. Sidery, A. Vecchio, Astrophys. J. 795, 105 (2014). https://doi.org/10.1088/0004-637X/795/2/105

J.W. Lazio, A. Kimball, A.J. Barger, W.N. Brandt, S. Chatterjee, T.E. Clarke, J.J. Condon, R.L. Dickman, M.T. Hunyh, M.J. Jarvis, M. Jurić, N.E. Kassim, S.T. Myers, S. Nissanke, R. Osten, B.A. Zauderer, Publ. Astron. Soc. Pac. 126, 196 (2014). https://doi.org/10.1086/675262

B.F. Schutz, Class. Quantum Gravity 28(12), 125023 (2011). https://doi.org/10.1088/0264-9381/28/12/125023

Acknowledgements

We sincerely thank the referee for his/her useful comments, which helped us greatly improve our manuscript. This work was supported by National Key R&D Program of China (2017YFA0402600), the National Science Foundation of China (Grants nos. 11573006, 11929301, 61802428) and Shandong Provincial Natural Science Foundation of China (Grant no. ZR2019MA059).


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Zhang, TJ., Liu, Y., Liu, ZE. et al. The constraint ability of Hubble parameter by gravitational wave standard sirens on cosmological parameters. Eur. Phys. J. C 79, 900 (2019). https://doi.org/10.1140/epjc/s10052-019-7434-8


DOI: https://doi.org/10.1140/epjc/s10052-019-7434-8