Abstract

The Matrix Element Method has proven to be a powerful method to optimally exploit the information available in detector data. Its widespread use is nevertheless impeded by its complexity and the associated computing time. MoMEMta, a C++ software package to compute the integrals at the core of the method, provides a versatile implementation of the Matrix Element Method to both the theory and experiment communities. Its modular structure covers the needs of experimental analysis workflows at the LHC without compromising ease of use on simpler and smaller simulated samples used for phenomenological studies. With respect to existing tools, MoMEMta improves on usability and flexibility. In this paper, we present version 1.0 of MoMEMta, together with examples illustrating the wide range of applications at the LHC accessible for the first time with a single tool.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The discovery of the Higgs boson by the ATLAS and CMS experiments in 2012 [1, 2] opened a new era in particle physics. More than just a new particle, a new set of interactions needs to be characterised. The LHC physics program therefore includes precision measurements of standard model (SM) processes (in particular in the top-quark and Higgs sectors) and the search for rare production mechanisms or rare decay channels. The absence so far of any obvious sign of physics beyond the SM further increases the need to look in places where the backgrounds are large and the effect of new physics subtle.

In all these studies, it is of the uttermost importance to fully exploit the potential of the large data set collected. For most of the analyses performed in high energy physics (HEP), obtaining an optimal result implies the treatment of multiple correlated quantities in a multivariate setting. The most popular methods for multivariate analysis in HEP are machine learning techniques, such as boosted decision trees and neural networks. These approaches require large training data sets (usually obtained by Monte Carlo techniques) in order to learn the structure of the data. On the contrary, the Matrix Element Method (MEM) uses directly our theoretical knowledge of a process to assign to each event a probability that measures the compatibility of experimental data with a given hypothesis. There is no training, since the underlying Lagrangian, from which the matrix element of the partonic process is derived, is known.

The MEM, originally designed at the Tevatron experiments DØ and CDF for top quark mass measurements in \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \) production [3,4,5,6,7,8,9], is nowadays a common technique in particle physics. Recent examples of its use at the LHC are searches for \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} \) [10,11,12,13,14,15,16,17] and single top quark production [18], and a measurement of spin correlations in \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \) production [19]. Nevertheless, while it can be used for a wide variety of studies, the practical application of the MEM has been impeded by its complexity and by the associated computing time. In order to evaluate the probability under a given theoretical hypothesis of a given experimental event, a difficult convolution of the theoretical information on the hard scattering (i.e. the matrix element squared) with the experimentally available information on the final state (encoded in the so-called transfer functions) has to be performed. The corresponding integrand varies by several orders of magnitudes in different regions of the phase space, which requires the use of adaptive numerical integration techniques together with a smart choice of integration variables. A general algorithm has been proposed in Ref. [20], which involves optimised phase-space mappings designed to remove as much as possible the peaks in the integrand. However, the corresponding implementation (MadWeight) is not supported anymore and suffers from a lack of flexibility that prevents – or significantly limits – its use in large scale analyses of LHC data by the collaborations, and does not allow the user to implement simplifying assumptions.

In this paper, we present MoMEMta, a modular C++ software package to compute the convolution integrals at the core of the method. Its modular structure covers the needs of experimental analysis workflows at the LHC without compromising the ease of use on simpler and smaller simulated samples used for phenomenological studies. It relies on the same approach as MadWeight to address the parameterisation of the phase space but leaves more freedom to the user. Since it follows the same approach, MoMEMta’s performance in terms of accuracy and CPU time is similar to that of MadWeight. But contrarily to its predecessor, it adapts to any process and can be fitted to any C++ or Python analysis workflow. Modularity and flexibility also open the door to specific optimisations either when designing the integration structure, or when choosing the integration engine. It is also possible to provide a custom (optimised) matrix element when appropriate, without loosing all the advantages of the block decomposition described in Sect. 3.

In the following, we will first briefly review the MEM, with an emphasis on the assumptions made in MoMEMta, before presenting shortly the philosophy of the implementation. We will then concentrate on a few concrete use cases that illustrate the variety of problems that can be tackled using MoMEMta, and how the modularity can best be exploited to adapt to these problems.

2 The matrix element method

The MEM is a technique to calculate the conditional probability density \(P(x | \alpha )\) to observe an experimental event x, given a specific theoretical hypothesis \(\alpha \). Details about the method can be found for example in Ref. [21]. We will here concentrate on the main aspects.

The likelihood for a partonic final state y to be produced in the hard-scattering process is proportional to the differential cross section \(\mathrm{d}\sigma _\alpha \) of the corresponding process, given by

where \(q_1\) and \(q_2\) stand for the initial state parton momentum fractions, s stands for the hadronic centre-of-mass energy and y stands for the kinematics of the final state.

The central element in that expression is the squared matrix element for process \(\alpha \), denoted \(|\mathscr {M}_\alpha \left( q_1,q_2,y\right) |^2\), where the summation over spin and colour states is understood. It can be obtained either analytically or numerically through packages like MG5_aMC@NLO [22] or MCFM [23]. Because of the intrinsic theoretical difficulty to identify final state particles with partons at NLO, the leading order matrix element is used in most applications. The n-body phase space \(\mathrm{d}\varPhi (y)\) must also be considered in the calculation, as it plays an important role in any change of variable needed for the integration of the differential cross section.

To obtain the differential cross section \(\mathrm{d}\sigma _\alpha (y)\) in hadron collisions, (1) is convoluted with the parton density functions (PDF) and summed over all possible flavour compositions of the colliding partons,

where \(f_{a_1}(q_{1})\) and \(f_{a_2}(q_{2})\) are the PDFs for a given flavour \(a_i\) and momentum fraction \(q_i\).

The evolution of the parton-level configuration y into a reconstructed event x in the detector is modelled by a transfer function T(x|y), normalised as a probability density over x, that describes how the partonic final state y is reconstructed as x in the detector. This includes the effects from the parton shower, hadronisation, and the limited detector resolution. The efficiency \(\epsilon (y)\), i.e. the probability to reconstruct and select a specific partonic configuration y, also needs to be taken into account. This includes geometrical acceptance effects.

The transfer function and efficiency are assumed to factorise into contributions from each measured final-state particle, and each of these contributions are often assumed to further factorise into simple direction- and momentum-dependent terms. For most applications, it is realistic to then assume that particle directions are perfectly reconstructed. The transfer function in that case is a Dirac delta function on the angular variables, and takes a non-trivial form only for the energy (or transverse momentum) degree of freedom. All these assumptions may not be valid in cases where objects, especially jets, are close to each other or reconstructed together (e.g. in boosted topologies), which will then result in a less accurate result.

Once the constraints related to the kinematics of observed particles have been taken into account, there may remain unobserved degrees of freedom, such as those pertaining to neutrinos (or any other invisible particles) or to unreconstructed objects outside of detector acceptance, as well as to the initial-state partons. Some of these degrees of freedom can be removed by enforcing the conversation of total 4-momentum in the initial and final states; the remaining ones need to be marginalised, resulting in a potentially large volume of phase space over which to integrate. Additional constraints may then be used to reduce this volume, such as mass constraints from intermediate, narrow resonances in the considered process, assumed to be on their mass shell, or the experimentally measured total transverse momentum \(\mathbf {p}^{\text {miss}}_\text {T} \) of the missing particles in the event. Note that while a resolution function can be built on \(\mathbf {p}^{\text {miss}}_\text {T} \), it is not accurate to trivially factorise the transfer function on the two components of \(\mathbf {p}^{\text {miss}}_\text {T} \) from the terms relative to visible particles in the final state, since the experimental error in measuring \(\mathbf {p}^{\text {miss}}_\text {T} \) is correlated with the error made in measuring all the other particles in the event.

Aspects to be considered in the transfer function and efficiency are the measurement of the momentum of a particle as well as its (mis-)identification. This latter point might be relevant for b quarks and \(\tau \) leptons, and allows in principle to combine different partonic final state hypotheses for the same event.

After convolution, the full expression reads

where \(\sigma _{\alpha }^{\text {vis}}\) is a normalisation factor that ensures \(P(x | \alpha )\) is a probability density over x. While that factor can be computed by explicitly integrating \(P(x|\alpha )\) over the phase space of reconstructed events x, it is often more practical to estimate it using a sample of events simulated under hypothesis \(\alpha \), in which case \(\sigma _{\alpha }^{\text {vis}} = \sigma _{\alpha } \cdot \left\langle \epsilon \right\rangle _{\alpha }\), where \(\sigma _{\alpha } = \int \mathrm{d}\sigma _\alpha (y)\), and \(\left\langle \epsilon \right\rangle _{\alpha }\) is the average reconstruction and selection efficiency of the simulated events. Finally, one has also to take into account the fact that some of the particles measured in the detector cannot be assigned unambiguously to specific final-state partons. Generally, all possible combinations have then to be considered and the resulting values for \(P(x|\alpha )\) averaged.

The information contained in (3b) can be exploited in different ways, from the extraction of the most probable value of theory parameters through a likelihood maximisation method (see e.g. [24]), for which the dependence of the normalisation constant \(\sigma _{\alpha }^{\text {vis}}\) on the considered hypothesis has to be properly taken into account, to the bare use of the integral result without normalisation \(\sigma _{\alpha }^{\text {vis}}\), referred to in the literature as matrix element weight, \(W(x|\alpha )\).

The integral defined in (3b) is typically a small number that varies over several orders of magnitudes from event to event. It is therefore common to use instead the event information defined by \(I_{\alpha } \equiv -\log P(x|\alpha )\). When computed from the weight instead of the probability, the information is only modified by an additive constant, with no consequence in many applications. We will denote this quantity \(I'_\alpha \equiv -\log W(x|\alpha )\).

In the limit where all the quantities and functions in (3b) are known with perfect accuracy, \(P(x|\alpha )\) is a likelihood. By the Neyman–Pearson lemma, the ratio between the likelihoods obtained under two different hypotheses \(\alpha \) and \(\alpha '\) is the most powerful test statistic to discriminate one from the other [25]. Hence, if it can be implemented, the MEM should provide optimal experimental sensitivity. In practice, we are limited by the use of leading-order matrix elements, or by assumptions made in constructing the transfer function and efficiency term. The quantity (3b) is therefore not a true likelihood, and the Neyman–Pearson lemma does not strictly apply. For discrimination purposes, it is then common to use the event information as input of another multivariate method (typically a boosted decision tree or a neural network).

3 Implementation

The MEM is used in HEP by both theoretical and experimental communities with different purposes and levels of complexity ranging from the evaluation of a matrix element on a reconstructed event to the precise evaluation of model parameters (e.g. the top quark mass) through the use of the properly normalised likelihood derived from (3b). Note that in the former case no integration process is required and effects related to parton showering, hadronisation, and finite detector resolution are explicitly neglected.

In order to adapt to these very different use cases, a novel modular design has been adopted for MoMEMta. The core library is written in C++ and provides modules for various purposes: to represent and evaluate the matrix element and parton density functions, to represent and evaluate transfer functions, to perform changes of variables, to handle the combinatorics of the final state, etc. That way, every term of (3b) is treated as a module that can be configured by the user. Weights are computed for a given process by calling and linking the proper set of modules in a configuration file written in the Lua scripting language [26]. Thanks to this modularity, the user is free to substitute any module provided with a custom implementation without loosing the benefits of other parts of the tool. The resulting object can be called from any C++ or Python code, which means that it seamlessly integrates into the complex analysis environment of the large experimental collaborations but can also be used within small programs reading events from files in any format (e.g. a custom text file, or a file in the Root [27], HepMC [28], Lhco [29] or StdHEP [30] format). In case the modules shipped out-of-the-box are not sufficient for a particular application, it is straightforward for the user to extend MoMEMta’s functionalities by adding new modules handling a specific task, still profiting from the existing infrastructure provided by the tool.

The computation of the weights requires, in most cases, the evaluation of multidimensional integrals via adaptive Monte Carlo techniques. The efficiency in computing these integrals depends on the parameterisation of the phase-space measure used in the integration. In order to map in an efficient way all the structures in the integrand, MoMEMta follows the philosophy introduced by MadWeight [20]. In this approach, starting from a standard parameterisation, the phase-space measure is optimised by using a finite number of analytic transformations over subsets of the integration variables, called “blocks”. A list of the blocks available in MoMEMta along with the addressed event topologies, and the integration variables removed and introduced by the changes of variables, is shown in Tables 1 and 2. For consistency, we have adopted the same terminology as in Ref. [20]. Since the considered transformations are nonlinear, specifying the value of the new integration variables typically yields several solutions for the canonical variables. The matrix element, transfer function, efficiencies and PDFs all need to be evaluated on each of those solutions, and summed to define the final integrand. The first table lists “Main Blocks”, i.e. changes of variables that allow to integrate out the four-dimensional Dirac delta function present in the phase-space density term \(\mathrm{d}\varPhi (y)\), that enforces conservation of momentum between the initial and final states. The second table lists “Secondary Blocks”, i.e. simple changes of variables that do not remove any degree of freedom. Main and secondary blocks are implemented as dedicated MoMEMta modules, which can be chained to perform the change of integration variables that is best suited for the problem at hand. These modules also take care of computing the jacobian factors required by the changes of integration variables, to be multiplied with the considered integrand. Examples of using these blocks are given in Sect. 4.

In order to efficiently handle the potentially large combinatorial ambiguity in the assignment between reconstructed final-state objects and partons in the matrix element, we have included a dedicated module to average over all permutations between a given set of particles. This module requires an additional dimension for the integrated phase space, so that the associated variable governs which assignment should be used for the computation of the integrand, and the resulting integral corresponds to the average weight over the considered permutations. However, compared to a naive averaging of the possible assignments, this scheme allows adaptive integration algorithms to concentrate on those yielding the largest contribution to the final result. For a fixed number of evaluations of the computationally expensive parts of the integrand, such as the squared matrix element, the precision on the result is thereby increased.

MoMEMta ships with matrix elements for a few processes, but any leading-order process handled by MG5_aMC @NLO [22] can be added using a Matrix Element Exporter plugin provided [31]. Native support for other matrix element generators is planned for future releases, but the modular structure already enables the user to wrap any C++ code that computes a matrix element to be used with MoMEMta. This novel feature can potentially speed up the computation by a substantial amount, since the evaluation of the matrix element largely dominates the computation time.

Parton density functions are obtained from Lhapdf6 [32] and the integration is done using the Cuba library [33], that offers a choice of four independent routines for multidimensional numerical integration: Vegas [34], Suave [33], Divonne [35], and Cuhre [36, 37].

The MoMEMta implementation [38] is publicly available, is licensed under the GLPv3, and comes together with an online documentation [39] and tutorials [40].

4 MEM use cases

One common application of the MEM is parameter estimation, through which one can extract a parameter of interest by means of likelihood maximisation. Nowadays, applications of the MEM in high-energy physics are most often restricted to computing weights \(W(x|\alpha )\) under several hypotheses, to discriminate a signal from one or several backgrounds. MoMEMta fits the needs for either purpose, since it is specifically designed to efficiently compute integrals as defined in (3b). In complex situations with several reconstructed objects and unconstrained degrees of freedom, there is no unique solution to the problem of efficiently and precisely computing \(W(x|\alpha )\), and the user has to play an active role in defining how to evaluate \(W(x|\alpha )\) and at which accuracy.

In this section we describe a few use cases of the MEM for signal extraction in LHC analyses. The examples illustrate various levels of complexity, from the simplest case with a precisely reconstructed final state for which no integration is needed, to complex final states including six reconstructed and two unobserved objects. The Lua configurations for each of the examples can be found together with the MoMEMta tutorials [40]. For all the examples, simulated events are generated using MG5_aMC@NLO [22], Pythia [41] and Delphes [42].

The computation times vary by several orders of magnitude among the different use cases, and strongly depend on the choice of parameters governing the integration procedure. Indicative performance figures are given in Sect. 5.

4.1 Discovery and characterisation of the Higgs boson

The MEM was instrumental for the CMS collaboration in the discovery of the Higgs boson in the \(\mathrm {H} \rightarrow \mathrm {Z}{}{} \mathrm {Z}{}{} ^* \rightarrow 4 {\ell }{}{} \) channel [2]. Likewise, the characterisation by ATLAS [43, 44] and CMS [45, 46] of the discovered resonance in terms of coupling structure, spin and parity, has relied on matrix-element techniques as suggested in Refs. [47, 48]. In this channel, all final-state particles can be detected and there are no unobserved degrees of freedom over which to integrate. Given the good experimental resolution on muon and electron direction and momentum, it is reasonable to approximate the transfer function in (3b) by \(T(x,y) = \delta (x,y)\). Hence, the integral reduces to a simple evaluation of the matrix element squared and the PDFs using the measured momenta in the event. In this framework, dubbed matrix element likelihood analysis (MELA), it is straightforward to build a discriminating variable between the signal and the \({\mathrm {q}}{\bar{\mathrm{q}}}\rightarrow \mathrm {Z}{}{} \mathrm {Z}{}{}/\mathrm {Z}{}{} \mathrm {\gamma }{}{} ^* \rightarrow 4 {\ell }{}{} \) background by considering the matrix elements of these two hypotheses:

Similar variables can be constructed to discriminate, for instance, a SM Higgs boson (\(J^P=0^+\)) from a resonance of the same mass but different spin and/or opposite parity:

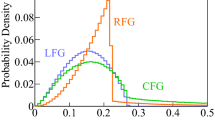

Although MoMEMta was designed to handle more complex final states, its flexibility allows the user to easily implement a MELA-like analysis. To illustrate this fact, we have simulated events for the \(\mathrm {gg} \rightarrow \mathrm {H} \rightarrow \mathrm {Z}{}{} \mathrm {Z}{}{} ^* \rightarrow 4 \mathrm {\mu }\) and \({\mathrm {q}}{\bar{\mathrm{q}}} \rightarrow \mathrm {Z}{}{} \mathrm {Z}{}{}/\mathrm {Z}{}{} \mathrm {\gamma }{}{} ^* \rightarrow 4 \mathrm {\mu }\) processes. The SM Higgs sample, as well as the production and decay of a resonance of spin/parity \(J^P=0^-\) were generated using the Higgs characterisation framework [49]. With MoMEMta’s plugin for MG5_aMC@NLO, the corresponding matrix elements can be exported in a format suitable for MoMEMta. The configuration of MoMEMta in this use case only requires a single module, which returns the product of the matrix element and the PDFs evaluated on a given event. The phase-space density term present in (3b) does not need to be included, since it cancels in the ratios in (4) and (5). With \(P(x|\text {bkg})\), \(P(x|\text {sig})\) and \(P(x|0^-)\) computed by MoMEMta, the discriminant variables \(\mathcal {D}_{\text {bkg}}\) and \(\mathcal {D}_{0^-}\) can be built. The distributions of these variables, for the different processes considered, are shown on Fig. 1 after an event selection closely following the analysis in Ref. [46]. The discrimination power between the competing hypotheses is comparable to what is obtained in Refs. [45, 46].

Distribution of the \(\mathcal {D}_{\text {bkg}}\) (left) and \(\mathcal {D}_{0^-}\) (right) variables for the \(\mathrm {gg} \rightarrow \mathrm {H} \rightarrow \mathrm {ZZ}^* \rightarrow 4 {\mu }\) and \(\mathrm {q}\bar{\mathrm {q}} \rightarrow \mathrm {ZZ}/\mathrm {Z}\gamma ^* \rightarrow 4 {\mu }\) processes, where the resonance H is taken to be the SM Higgs or a pseudoscalar of the same mass (\(0^-\)). For the right-hand figure, we require \(\mathcal {D}_{\text {bkg}} > 0.5\). Note that both the background and the SM Higgs processes have similar distributions of the \(\mathcal {D}_{0^-}\) discriminant. All distributions are normalised to unit area

4.2 Charge identification in \(\mathrm {t}{}{} \mathrm {W}{}{} \) production

The MEM has been extensively used in the study of single top quark production processes at the Tevatron [50, 51], and was instrumental in the most sensitive search for s-channel single top production at the LHC [18]. Incidentally, single top and \(\mathrm {W}{}{} \) boson associated production (\(\mathrm {t}{}{} \mathrm {W}{}{} \)) provides a good opportunity to showcase MoMEMta’s abilities. This process features three propagators in the matrix element and, in the case where both the top quark and \(\mathrm {W}{}{} \) boson decay leptonically (dilepton channel), missing information due to the presence of two neutrinos in the final state.

We consider the charge-conjugate processes, \(\mathrm {t}{}{} \mathrm {W}{}{} ^{-}\) and \(\bar{\mathrm {t}}\,{}{} \mathrm {W}{}{} ^{+}\), which yield the same visible final state and have practically the same rate at the LHC. It has been suggested to measure the CKM matrix element \(|V_{\mathrm {t}{}{} \mathrm {d}{}{}}|\) at the LHC using the charge asymmetry between these two processes [52]. This requires the ability to efficiently disentangle them, a task made difficult by the system not being entirely reconstructible in the dilepton channel.

We thus suggest to construct a MEM-based observable as:

The charge asymmetry can then be defined by counting the number of events for which either \(\mathcal {D}_{\pm }(x) < 0\) or \(\mathcal {D}_{\pm }(x) > 0\).

Computing the weights \(W(x| \bar{\mathrm {t}}\,{}{} \mathrm {W}{}{} _{+})\) and \(W(x| \mathrm {t}{}{} \mathrm {W}{}{} _{-})\) requires a careful consideration of the constraints and degrees of freedom at hand. We start by assuming that the directions of all “visible” objects (\(\mathrm {b}{}{} \) quark, leptons) are perfectly reconstructed, so that the transfer function reduces to factorised parameterisations of the resolution on their energies. Thus, 11 degrees of freedom are present in the system: the longitudinal momentum of the initial-state partons (2), the energies of the visible particles in the final state (3), as well as the directions and energies of the two neutrinos (6). Enforcing conservation of energy and momentum between the initial and final state will remove four of these, so that we end up with seven dimensions over which to integrate.

The numerical integration will be most efficient if the integration variables are mapped to the six peaks in the integrand generated by the top quark and \(\mathrm {W}{}{} \) boson propagators and by the transfer functions on the energies of the visible particles; the remaining degree of freedom can be chosen freely. This can be easily achieved in MoMEMta by pairing the “Secondary Block B” with the “Main Block B”.

We identify the decay chain \(\mathrm {t}{}{} \rightarrow \mathrm {W}{}{} _{\mathrm {t}{}{}} (\rightarrow {\nu }{}{} _{\mathrm {t}{}{}} + {\ell }{}{} _{\mathrm {t}{}{}}) + \mathrm {b}{}{} \) with the notation \(s_{123} \rightarrow s_{12} (\rightarrow p_1 + p_2) + p_3\) for the chosen secondary block in Table 2. The secondary block does not remove any degree of freedom and simply exchanges the energy and polar direction of \({\nu }{}{} _{\mathrm {t}{}{}}\) for the squared invariant masses of \(\mathrm {t}{}{} \) and \(\mathrm {W}{}{} _{\mathrm {t}{}{}}\), which are taken as integration variables. Since the energies of \({\ell }{}{} _{\mathrm {t}{}{}}\) and \(\mathrm {b}{}{} \) are associated with peaks in the transfer function, they should be retained as integration variables, which is straightforward since the chosen block does not affect these quantities. There remains a free variable over which to integrate, the azimuthal direction of \({\nu }{}{} _{\mathrm {t}{}{}}\), which is not directly associated with any peak in the integrand and can be kept as is. Given fixed values for \(s_{123}\), \(s_{12}\) and \(\phi _1\), as well as \(p_2\) and \(p_3\), the block solves the following nonlinear system:

Thus, the block returns up to two solutions for the full kinematics of \(p_1\), as well as the jacobian factor associated with this change of variables. Each of these solutions is used as input for the rest of the computation, described below.

The remaining phase-space variables to be considered are related to the other \(\mathrm {W}{}{} (\rightarrow {\nu }{}{} + {\ell }{}{})\) decay as well as to the initial-state partons. This system can be identified with the topology \((q_1,q_2) \rightarrow s'_{12} (\rightarrow p'_1 + p'_2)\) in Table 1. Again, the energy of the charged lepton \({\ell }{}{} \) is chosen as integration variable. The main block removes four degrees of freedom by enforcing the conservation of total 4-momentum in the initial and final states, which results in a single variable left to integrate over, chosen as the squared invariant mass \(s'_{12}\) of the \(\mathrm {W}{}{} \) boson, conveniently aligned with the last peak in the integrand. The system solved by the main block is:

where \(\mathbf {p}^{\text {miss}}_{x,y}\) are the measured components of the missing transverse momentum along the x and y axes. The block yields up to two solutions for the neutrino \(p'_1\) and evaluates the jacobian factor associated with the integration of a four-dimensional Dirac delta as well as with the change of variables.

Normalised distribution of the \(\mathcal {D}_{\pm }\) asymmetry observable, as defined in (6), for the charge-conjugate processes \(\mathrm {t}{}{} \mathrm {W}{}{} ^{+}\) and \(\bar{\mathrm {t}}\,{}{} \mathrm {W}{}{} ^{-}\). Backgrounds such as \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \) production are expected to be distributed symmetrically around zero

Using the solutions obtained for the final-state partonic systems, the kinematics of the initial-state partons can now be computed using a dedicated module. Since the measured \(\mathbf {p}^{\text {miss}}_{\text {T}}\) has been used to constrain the \(\mathbf {p}_{\text {T}}\) of the pair of neutrinos, the total \(\mathbf {p}_{\text {T}}\) of the system is not guaranteed to vanish. As suggested in Ref. [53], we thus apply a transverse boost on the final-state system to a frame of reference where its total \(\mathbf {p}_{\text {T}}\) is zero, compute the longitudinal components of the two initial-state partons in that frame, and boost the complete system back to the original laboratory frame. This procedure can be understood as a way to correct the effect of initial-state radiation in the observed event. Finally, using the (up to) four obtained solutions for the full partonic system, the transfer functions, Jacobians, PDFs and squared matrix element are evaluated and the results are summed to define the desired integrand function. Note that the enhancements in the matrix elements due to the top quark and \(\mathrm {W}{}{} \) boson propagators can easily be removed by further well-known transformations applied to \(s_{123}\), \(s_{12}\) and \(s'_{12}\). These transformations are handled by specialised modules.

The distribution of the \(\mathcal {D}_{\pm }\) discriminant is shown on Fig. 2. About 75% of events from either process can be retained on each side of \(\mathcal {D}_{\pm } = 0\), which by symmetry leads to a corresponding mistag rate of 25%. Depending on the analysis needs, the purity can be further improved at the cost of efficiency (e.g. we obtain 1.5% mistag rate for 25% efficiency).

The strategy adopted above for the phase-space integration is by no means unique. We stress that thanks to the modularity of MoMEMta, it is easy for the user to quickly test alternate approaches. For instance, working in the narrow-width approximation (NWA) is simply achieved by removing the modules handling the integration over the propagator invariant masses and fixing these to chosen pole masses. Thanks to the changes of variables applied, the kinematic constraints in the system are automatically satisfied. Modifying the assumptions underlying the transfer functions is equally easy, by configuring, adding or removing modules representing the finite resolution on the kinematics of final-state partons. The user might also choose to enforce that the total \(\mathbf {p}_{\text {T}}\) of the partonic system be zero in the laboratory frame, which is achieved by configuring the main block so that the \(\mathbf {p}_{\text {T}}\) of the neutrino represented by \(p'_1\) balances that of all other final-state particles.

4.3 \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} \) production

One of the most successful uses of the MEM at the LHC can be found in the searches for \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} \) production. The ATLAS and CMS collaborations have applied the MEM in final states with \(\mathrm {H}{}{} \rightarrow \mathrm {b}{}{} \bar{\mathrm {b}}\,{}{} \) [10,11,12,13,14], and multi-lepton final states with either \(\mathrm {H}{}{} \rightarrow \mathrm {V} \mathrm {V} ^*\), where \(\mathrm {V} =\mathrm {W}{}{} \) or \(\mathrm {Z}{}{} \), or \(\mathrm {H}{}{} \rightarrow \mathrm {\tau }{}{} \mathrm {\tau }{}{} \) [15,16,17].

Here we demonstrate MoMEMta’s ability to efficiently handle processes as complex as \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} \), featuring a large final-state multiplicity, several propagator enhancements in the matrix element, missing information due to neutrinos, and many possible jet-parton assignments. We consider the channel where the Higgs boson decays to \(\mathrm {b}{}{} \bar{\mathrm {b}}\,{}{} \) and both top quarks decay leptonically, for which the main irreducible background consists of \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {b}{}{} \bar{\mathrm {b}}\,{}{} \) associated production. The relevance of the MEM in this channel was first demonstrated in Ref. [54]. We generate samples for signal and background processes and select events with two opposite-charge leptons and at least four \(\mathrm {b}{}{} \)-tagged jets.

Weights are computed with MoMEMta under two hypotheses, \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} (\mathrm {b}{}{} \bar{\mathrm {b}}\,{}{})\) and \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {b}{}{} \bar{\mathrm {b}}\,{}{} \). The strategy adopted to parameterise the phase space is in many ways similar to that described in Sect. 4.2, and will only be briefly summarised here. The assumptions related to the transfer function are the same as those considered for the previous example, with the exception of what concerns the energy of the two charged leptons, assumed to be perfectly measured. For both hypotheses, the energies of the \(\mathrm {b}{}{} \) quarks coming from the decays of the top quarks are retained as integration variables. In order to reduce the number of dimensions over which to integrate, we work in the narrow-width approximation (NWA), by which the \(\mathrm {W}{}{} \) boson and top quark propagators are approximated by Dirac delta functions.

The momenta of the two unobserved neutrinos (six degrees of freedom) can be fixed using four constraints corresponding to the top quark and W boson invariant masses, as well as by the requirement that their combined transverse momentum equals the observed transverse missing momentum in the event. This solving strategy can be implemented by applying the change of variable “Main Block D” on the standard phase-space parameterisation for the decay products of the top quarks, and fixing the invariants associated with the top quark and W boson propagators (\(s_{134}\), \(s_{256}\), \(s_{13}\) and \(s_{25}\) in Table 1) to their respective pole masses. The remaining degrees of freedom are handled differently, depending on the hypothesis:

-

\(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {b}{}{} \bar{\mathrm {b}}\,{}{} \): The standard polar phase-space parameterisation for the two extra \(\mathrm {b}{}{} \) quarks is retained, i.e. we integrate over both their energies.

-

\(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} \): We integrate over the energy of one of the \(\mathrm {b}{}{} \) quarks from the Higgs boson decay. The other quark’s energy is fixed by the requirement that the pair’s invariant mass be equal to the true Higgs boson mass (NWA). In MoMEMta, this is achieved by applying the transformation of the “Secondary Block C/D”, and fixing \(s_{12}\) to \(m^2_{\mathrm {H}{}{}}\).

Using the above parameterisation, the peaks in the integrand remain mapped to the integration variables, and the unobserved degrees of freedom due to the two neutrinos in the final state are effectively removed. Finally, the integrand needs to be averaged over every one of the \(4!=24\) possible assignments between jets and partons. This task is efficiently handled by a dedicated module that concentrates on the assignments dominating the average, as described in Sect. 3.

Figure 3 shows the normalised distributions of the event information \(I'_{\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{}}\) and a discriminating variable defined as

By applying a requirement on \(\mathcal {D}_{\text {sig}}\) such that 50% of the \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} \) signal is retained, 83% of the \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \) + jets background can be rejected. As a comparison, using the invariant masses of pairs of b-tagged jets in the events, by choosing the pair of jets with mass closest to the true Higgs boson mass, would only reject 65% of the background for the same signal efficiency. The distributions shown in Fig. 3 (right) can be compared to those of Refs. [12, 13, 54].

5 Indicative performance figures

We give approximate performance figures observed when computing weights for the different use cases presented above. It should be clear that those numbers are indicative only, as the computation time strongly depends on the considered hypothesis and the parameters of the numerical integration algorithm. Furthermore, these results were obtained using the functionalities available out-of-the-box in MoMEMta, and with matrix elements generated by our plugin for MG5_aMC@NLO, which means no attempt whatsoever was made towards optimising the computation for these particular cases. MoMEMta has been designed with the aim of being flexible, enabling users to implement simplifications or optimisations fit for their needs. Note that tuning the parameters of the algorithms used for the numerical integration of the weights can have a strong impact on both the precision p of the resulting integrals (which in turns impacts the power of the discriminant built from the weights), and the overall computation time T. Generally, all other things being equal, the evaluation time T scales roughly as \(T \propto p^{-2}\). The computation of the weights used in Sect. 4.3 was carried out using two different integration algorithms available in the Cuba library: Vegas and Divonne. The latter was found to yield substantially shorter completion times, without compromising the discrimination between signal and background with respect to the former.

In Table 3 we give the average per-event computation times for the weights used in the examples of Sect. 4, along with the average relative precision on these weights reported by the integration algorithm. These results were obtained on a computer cluster with an average per-core HS06 scoreFootnote 1 of 9.1. Table 4 shows how much time is spent on the main elements of the computation. These fractions are indicative and vary from event to event, but show that for complex hypotheses such as those considered in Sect. 4.3, the bottleneck in the computation is due to the evaluation of the matrix element.

The memory consumption of MoMEMta is strongly linked to the way the integration algorithm is configured. In practice, for the examples shown here, memory consumption was observed never to exceed 200 MB.

6 Summary

We have presented MoMEMta, a modular software package to compute the convolution integrals at the core of the MEM. Its modular structure covers the needs of experimental analysis workflows at the LHC without compromising the ease of use on simpler and smaller simulated samples used for phenomenological studies.

The MEM has been used in HEP by both theoretical and experimental communities with different purposes and levels of complexity ranging from the evaluation of a matrix element on a reconstructed event to the precise evaluation of model parameters through the use of the properly normalised likelihood. We have described a few use cases of the MEM for signal extraction in LHC analyses showcasing different levels of complexity. From the most simple implementation, for Higgs boson characterisation in the \(\mathrm {H} \rightarrow \mathrm {ZZ}^* \rightarrow 4 {\ell }{}{} \) channel, to complex final states such as \(\mathrm {t}{}{} \bar{\mathrm {t}}\,{}{} \mathrm {H}{}{} \) production, MoMEMta has proven to be sufficiently flexible to properly handle these different situations.

The main advantage of MoMEMta over past and existing tools comes from its modular design, that greatly improves on usability and flexibility. MoMEMta is designed to offer a versatile and reusable framework for a wide range of applications of the MEM. While it is able to cover numerous use cases out of the box, the modular architecture of MoMEMta also enables users to easily extend its functionalities to handle situations we have not considered, while keeping the benefits of the I/O, configuration, and integration framework (a typical example would be the use of an optimised or simplified matrix element implementation). As possible future developments, we are considering adding an interface to other matrix element libraries such as MCFM [23] or Sherpa [55], or enhancing the performance of the integration itself through the use of vector integrand with modified transfer function to evaluate the effect of systematic uncertainties, or through the use of machine-learning inspired integration algorithms [56].

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: All results have been obtained using code available freely at https://doi.org/10.5281/zenodo.855289.]

References

G. Aad et al. (ATLAS Collaboration), Observation of a new particle in the search for the Standard Model Higgs boson with the ATLAS detector at the LHC. Phys. Lett. B 716, 1 (2012). https://doi.org/10.1016/j.physletb.2012.08.020. arXiv:1207.7214 [hep-ex]

S. Chatrchyan et al. (CMS Collaboration), Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC. Phys. Lett. B 716, 30 (2012). https://doi.org/10.1016/j.physletb.2012.08.021. arXiv:1207.7235 [hep-ex]

R.H. Dalitz, G.R. Goldstein, Test of analysis method for top–antitop production and decay events. Proc. R. Soc. Lond. A 455, 2803 (1999). https://doi.org/10.1098/rspa.1999.0428. arXiv:hep-ph/9802249 and references therein

T. Aaltonen et al. (CDF Collaboration), Top quark mass measurement in the \({\rm t}{\bar{\rm t}}\) all hadronic channel using a matrix element technique in \({\rm p}{\bar{\rm p}}\) collisions at \(\sqrt{s} = 1.96\, \text{TeV}\). Phys. Rev. D 79, 072010 (2009). https://doi.org/10.1103/PhysRevD.79.072010. arXiv:0811.1062 [hep-ex]

T. Aaltonen et al. (CDF Collaboration), Measurement of the top quark mass with dilepton events selected using neuroevolution at CDF. Phys. Rev. Lett. 102, 152001 (2009). https://doi.org/10.1103/PhysRevLett.102.152001. arXiv:0807.4652 [hep-ex]

T. Aaltonen et al. (CDF Collaboration), Measurement of the top-quark mass in the lepton+jets channel using a matrix element technique with the CDF II detector. Phys. Rev. D 84, 071105 (2011). https://doi.org/10.1103/PhysRevD.84.071105. arXiv:1108.1601 [hep-ex]

T. Aaltonen et al. (CDF Collaboration), Measurements of the top-quark mass and the \({\rm t}{\bar{\rm t}}\) cross section in the hadronic \(\tau +\) jets decay channel at \(\sqrt{s} = 1.96~\text{ TeV }\). Phys. Rev. Lett. 109, 192001 (2012). https://doi.org/10.1103/PhysRevLett.109.192001. arXiv:1208.5720 [hep-ex]

V.M. Abazov et al. (D0 Collaboration), Precision measurement of the top-quark mass in lepton+jets final states. Phys. Rev. D 91, 112003 (2015). https://doi.org/10.1103/PhysRevD.91.112003. arXiv:1501.07912 [hep-ex]

V.M. Abazov et al. (D0 Collaboration), Measurement of the top quark mass using the matrix element technique in dilepton final states. Phys. Rev. D 94, 032004 (2016). https://doi.org/10.1103/PhysRevD.94.032004. arXiv:1606.02814 [hep-ex]

G. Aad et al. (ATLAS Collaboration), Search for the Standard Model Higgs boson produced in association with top quarks and decaying into \(\text{ b }\bar{\text{ b }}\) in pp collisions at \(\sqrt{s}=8~\text{ TeV }\) with the ATLAS detector. Eur. Phys. J. C 75(7), 349 (2015). https://doi.org/10.1140/epjc/s10052-015-3543-1. arXiv:1503.05066 [hep-ex]

M. Aaboud et al. (ATLAS Collaboration), Search for the Standard Model Higgs boson produced in association with top quarks and decaying into a \(\text{ b }\bar{\text{ b }}\) pair in pp collisions at \(\sqrt{s} = 13~\text{ TeV }\) with the ATLAS detector. Phys. Rev. D 97, 072016 (2018). https://doi.org/10.1103/PhysRevD.97.072016. arXiv:1712.08895 [hep-ex]

V. Khachatryan et al. (CMS Collaboration), Search for a standard model Higgs boson produced in association with a top-quark pair and decaying to bottom quarks using a matrix element method. Eur. Phys. J. C 75(6), 251 (2015). https://doi.org/10.1140/epjc/s10052-015-3454-1. arXiv:1502.02485 [hep-ex]

A.M. Sirunyan et al. (CMS Collaboration), Search for \(\text{ t }\overline{\text{ t }}\text{ H }\) production in the \(\text{ H }\rightarrow \text{ b }\overline{\text{ b }}\) decay channel with leptonic \(\text{ t }\overline{\text{ t }}\) decays in proton-proton collisions at \(\sqrt{s}=13~\text{ TeV }\). JHEP. arXiv:1804.03682 [hep-ex] (submitted)

A.M. Sirunyan et al. (CMS Collaboration), Search for \(\text{ t } \overline{\text{ t }}\text{ H }\) production in the all-jet final state in proton-proton collisions at \(\sqrt{s}= 13~\text{ TeV }\). JHEP 1806, 101 (2018). https://doi.org/10.1007/JHEP06(2018)101. arXiv:1803.06986 [hep-ex]

M. Aaboud et al. (ATLAS Collaboration), Measurement of the Higgs boson coupling properties in the \(\text{ H }\rightarrow \text{ ZZ }^{*} \rightarrow 4\ell \) decay channel at \(\sqrt{s} = 13~\text{ TeV }\) with the ATLAS detector. JHEP. arXiv:1712.02304 (submitted) [hep-ex]

M. Aaboud et al. (ATLAS Collaboration), Evidence for the associated production of the Higgs boson and a top quark pair with the ATLAS detector. Phys. Rev. D 97(7), 072003 (2018). https://doi.org/10.1103/PhysRevD.97.072003. arXiv:1712.08891 [hep-ex]

A.M. Sirunyan et al. (CMS Collaboration), Evidence for associated production of a Higgs boson with a top quark pair in final states with electrons, muons, and hadronically decaying \(\tau \) leptons at \(\sqrt{s} = 13~\text{ TeV }\). JHEP 1808, 066 (2018). https://doi.org/10.1007/JHEP08(2018)066. arXiv:1803.05485 [hep-ex]

G. Aad et al. (ATLAS Collaboration), Evidence for single top-quark production in the \(s\)-channel in proton-proton collisions at \(\sqrt{s}=8~\text{ TeV }\) with the ATLAS detector using the Matrix Element Method. Phys. Lett. B 756, 228–246 (2016). https://doi.org/10.1016/j.physletb.2016.03.017. arXiv:1511.05980 [hep-ex]

V. Khachatryan et al. (CMS Collaboration), Measurement of spin correlations in \({\rm t}{\bar{\rm t}}\) production using the matrix element method in the muon+jets final state in pp collisions at \(\sqrt{s} = 8~\text{ TeV }\). Phys. Lett. B 758, 321 (2016). https://doi.org/10.1016/j.physletb.2016.05.005. arXiv:1511.06170 [hep-ex]

P. Artoisenet, V. Lemaitre, F. Maltoni, O. Mattelaer, Automation of the matrix element reweighting method. JHEP 1012, 068 (2010). https://doi.org/10.1007/JHEP12(2010)068. arXiv:1007.3300 [hep-ph]

F. Fiedler, A. Grohsjean, P. Haefner, P. Schieferdecker, The matrix element method and its application in measurements of the top quark mass. Nucl. Instrum. Methods A 624, 203 (2010). https://doi.org/10.1016/j.nima.2010.09.024. arXiv:1003.1316 [hep-ex]

J. Alwall et al., The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 1407, 079 (2014). https://doi.org/10.1007/JHEP07(2014)079. arXiv:1405.0301 [hep-ph]

J.M. Campbell, R.K. Ellis, W.T. Giele, A multi-threaded version of MCFM. Eur. Phys. J. C 75(6), 246 (2015). https://doi.org/10.1140/epjc/s10052-015-3461-2. arXiv:1503.06182 [physics.comp-ph]

D.E. Ferreira de Lima, O. Mattelaer, M. Spannowsky, Searching for processes with invisible particles using a matrix element-based method. Phys. Lett. B 787, 100 (2018). https://doi.org/10.1016/j.physletb.2018.10.044. arXiv:1712.03266 [hep-ph]

J. Neyman, E.S. Pearson, On the problem of the most efficient tests of statistical hypotheses. Philos. Trans. R. Soc. Lond. A 231, 289–337 (1933). https://doi.org/10.1098/rsta.1933.0009

R. Ierusalimschy, L. Henrique de Figueiredo, W. Celes Filho, Lua—an extensible extension language. Softw. Pract. Exp. 26(6), 635–652 (1996). https://doi.org/10.1002/(SICI)1097-024X(199606)26:6\({<}\)635::AID-SPE26\({>}\)3.0.CO;2-P

R. Brun, F. Rademakers, ROOT—an object oriented data analysis framework, in Proceedings AIHENP’96 Workshop, Lausanne, Sep. 1996, Nuclear Instruments and Methods in Physics Research A, vol. 389 (1997), pp. 81–86. See also http://root.cern.ch/

M. Dobbs, J.B. Hansen, The HepMC C++ Monte Carlo event record for high energy physics. Comput. Phys. Commun. 134, 41 (2001)

J. Thaler, How to read LHC olympics data files (2006). http://madgraph.phys.ucl.ac.be/Manual/lhco.html. Accessed Feb 2018

L. Garren, P. Lebrun, StdHep user manual. http://cepa.fnal.gov/psm/stdhep/. Accessed Feb 2018

S. Brochet, S. Wertz, J. de Favereau. MoMEMta—MadGraph matrix element exporter (2018). https://doi.org/10.5281/zenodo.1250685

A. Buckley, J. Ferrando, S. Lloyd, K. Nordström, B. Page, M. Rüfenacht, M. Schönherr, G. Watt, LHAPDF6: parton density access in the LHC precision era. Eur. Phys. J. C 75, 132 (2015). https://doi.org/10.1140/epjc/s10052-015-3318-8. arXiv:1412.7420 [hep-ph]

T. Hahn, CUBA: a library for multidimensional numerical integration. Comput. Phys. Commun. 168, 78 (2005). https://doi.org/10.1016/j.cpc.2005.01.010. arXiv:hep-ph/0404043 [hep-ph]

G.P. Lepage, A new algorithm for adaptive multidimensional integration. J. Comput. Phys. 27, 192 (1978). https://doi.org/10.1016/0021-9991(78)90004-9

J.H. Friedman, M.H. Wright, A nested partitioning procedure for numerical multiple integration and adaptive importance sampling. ACM Trans. Math. Softw. 7, 76 (1981). https://doi.org/10.1145/355934.355939

J. Berntsen, T.O. Espelid, A. Genz, An adaptive algorithm for the approximate calculation of multiple integrals. ACM Trans. Math. Softw. 17, 437–451 (1991). https://doi.org/10.1145/210232.210233

J. Berntsen, T.O. Espelid, A. Genz, Algorithm 698: DCUHRE: an adaptive multidimensional integration routine for a vector of integrals. ACM Trans. Math. Softw. 17, 452–456 (1991). https://doi.org/10.1145/210232.210234

S. Brochet, S. Wertz, M. Vidal, B. François, A. Saggio, C. Delaere, V. Lemaître, Momemta/momemta: 1.0.0 (2018). https://doi.org/10.5281/zenodo.1250697

S. Brochet, S. Wertz, M. Vidal, B. François, A. Saggio, C. Delaere, V. Lemaître, The MoMEMta project website (2018). https://doi.org/10.5281/zenodo.1250743

S. Brochet, S. Wertz, A. Saggio MoMEMta/tutorials (version v1.0.0) (2018). https://doi.org/10.5281/zenodo.1250682

T. Sjöstrand et al., An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159 (2015). https://doi.org/10.1016/j.cpc.2015.01.024. arXiv:1410.3012 [hep-ph]

J. de Favereau et al., DELPHES 3, a modular framework for fast simulation of a generic collider experiment. JHEP 1402, 057 (2014). https://doi.org/10.1007/JHEP02(2014)057. arXiv:1307.6346 [hep-ex]

G. Aad et al. (ATLAS Collaboration), Measurements of Higgs boson production and couplings in the four-lepton channel in pp collisions at center-of-mass energies of 7 and 8 TeV with the ATLAS detector. Phys. Rev. D 91(1), 012006 (2015). https://doi.org/10.1103/PhysRevD.91.012006. arXiv:1408.5191 [hep-ex]

G. Aad et al. (ATLAS Collaboration), Study of the spin and parity of the Higgs boson in diboson decays with the ATLAS detector. Eur. Phys. J. C 75(10), 476 (2015). https://doi.org/10.1140/epjc/s10052-015-3685-1. arXiv:1506.05669 [hep-ex] [Erratum: Eur. Phys. J. C 76(3), 152 (2016). https://doi.org/10.1140/epjc/s10052-016-3934-y]

A.M. Sirunyan et al. (CMS Collaboration), Measurements of properties of the Higgs boson decaying into the four-lepton final state in pp collisions at \( \sqrt{s}=13~\text{ TeV }\). JHEP 1711, 047 (2017). https://doi.org/10.1007/JHEP11(2017)047. arXiv:1706.09936 [hep-ex]

A.M. Sirunyan et al. (CMS Collaboration), Constraints on anomalous Higgs boson couplings using production and decay information in the four-lepton final state. Phys. Lett. B 775, 1 (2017). https://doi.org/10.1016/j.physletb.2017.10.021. arXiv:1707.00541 [hep-ex]

S. Bolognesi, Y. Gao, A.V. Gritsan, K. Melnikov, M. Schulze, N.V. Tran, A. Whitbeck, On the spin and parity of a single-produced resonance at the LHC. Phys. Rev. D 86, 095031 (2012). https://doi.org/10.1103/PhysRevD.86.095031. arXiv:1208.4018 [hep-ph]

I. Anderson et al., Constraining anomalous HVV interactions at proton and lepton colliders. Phys. Rev. D 89(3), 035007 (2014). https://doi.org/10.1103/PhysRevD.89.035007. arXiv:1309.4819 [hep-ph]

P. Artoisenet et al., A framework for Higgs characterisation. JHEP 1311, 043 (2013). https://doi.org/10.1007/JHEP11(2013)043. arXiv:1306.6464 [hep-ph]

T. Aaltonen et al. (CDF Collaboration), First observation of electroweak single top quark production. Phys. Rev. Lett. 103, 092002 (2009). https://doi.org/10.1103/PhysRevLett.103.092002. arXiv:0903.0885 [hep-ex]

V.M. Abazov et al. (D0 Collaboration), Observation of single top quark production. Phys. Rev. Lett. 103, 092001 (2009). https://doi.org/10.1103/PhysRevLett.103.092001. arXiv:0903.0850 [hep-ex]

E. Alvarez, L. Da Rold, M. Estevez, J.F. Kamenik, Measuring \(|V_{{\rm td}}|\) at the LHC. Phys. Rev. D 97, 033002 (2018). https://doi.org/10.1103/PhysRevD.97.033002. arXiv:1709.07887 [hep-ph]

J. Alwall, A. Freitas, O. Mattelaer, The matrix element method and QCD radiation. Phys. Rev. D 83, 074010 (2011). https://doi.org/10.1103/PhysRevD.83.074010. arXiv:1010.2263 [hep-ph]

P. Artoisenet, P. de Aquino, F. Maltoni, O. Mattelaer, Unravelling \(t\overline{t}h\) via the matrix element method. Phys. Rev. Lett. 111(9), 091802 (2013). https://doi.org/10.1103/PhysRevLett.111.091802. arXiv:1304.6414 [hep-ph]

T. Gleisberg, S. Hoeche, F. Krauss, M. Schonherr, S. Schumann, F. Siegert, J. Winter, Event generation with SHERPA 1.1. JHEP 0902, 007 (2009). https://doi.org/10.1088/1126-6708/2009/02/007. arXiv:0811.4622 [hep-ph]

J. Bendavid, Efficient Monte Carlo integration using boosted decision trees and generative deep neural networks. arXiv:1707.00028 [hep-ph]

Acknowledgements

We warmly thank Andrea Giammanco and Olivier Mattelaer for their valued feedback. This project is funded by FRS-FNRS (Belgian National Scientific Research Fund) IISN projects 4.4503.17 and 4.4503.16. MoMEMta is part of AMVA4NP, a project that has received funding from the European Horizon 2020 research and innovation programme under grant agreement No 675440. SW is supported through a FRIA grant by the F.R.S.-FNRS. Computational resources have been provided by the supercomputing facilities of the Université catholique de Louvain (CISM/UCL) and the Consortium des Équipements de Calcul Intensif en Fédération Wallonie Bruxelles (CÉCI) funded by the Fond de la Recherche Scientifique de Belgique (F.R.S.-FNRS) under convention 2.5020.11. This work would not have been possible without the help of the MadWeight team. Special thanks to Matthias Komm who designed our logo.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Brochet, S., Delaere, C., François, B. et al. MoMEMta, a modular toolkit for the Matrix Element Method at the LHC. Eur. Phys. J. C 79, 126 (2019). https://doi.org/10.1140/epjc/s10052-019-6635-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-019-6635-5