Abstract

The simple Poisson distribution is very popular for modeling the marginal distribution of counts, also in a time series context. If a more complex count distribution is appropriate instead, it is important to have tests available that detect this deviation from Poissonity. One possible approach is to use Fisher’s index of dispersion as a test statistic. For several types of Poisson INARMA process, the generated counts \(X_t\) not only have a marginal Poisson distribution, but the lagged pairs \((X_t,X_{t-k})\) are also bivariately Poisson distributed. So to uncover violations of the Poisson null hypothesis within these INARMA processes, a bivariate dispersion index appears to be a reasonable alternative to the simple univariate Fisher index. We survey several proposals for bivariate dispersion indexes and adapt them to a time series context. For the resulting modified indexes, we derive the asymptotic distribution under the null hypothesis so that they can be used as test statistics. With simulations, we investigate the finite-sample performance of the asymptotic tests, and we analyze whether the novel tests are advantageous compared to the simple univariate dispersion index test.

References

1. Johnson NL, Kotz S, Balakrishnan N (1997) Discrete Multivariate Distributions. Wiley, Hoboken

2. Crockett NG (1979) A quick test of fit of a bivariate distribution. In: McNeil D (ed) Interactive statistics. North-Holland, Amsterdam, pp 185–191

3. Loukas S, Kemp CD (1986) The index of dispersion test for the bivariate Poisson distribution. Biometrics 42(4):941–948

4. Best DJ, Rayner JCW (1997) Crockett’s test of fit for the bivariate Poisson. Biom J 39(4):423–430

5. Rayner JCW, Best DJ (1997) Smooth tests for the bivariate Poisson. Aust N Z J Stat 37(2):233–245

6. Kokonendji CC, Puig P (2018) Fisher dispersion index for multivariate count distributions: a review and a new proposal. J Multivar Anal 165:180–193

7. Alzaid AA, Al-Osh MA (1988) First-order integer-valued autoregressive process: distributional and regression properties. Stat Neerl 42(1):53–61

8. Alzaid AA, Al-Osh MA (1990) An integer-valued \(p\)th-order autoregressive structure (INAR(p)) process. J Appl Probab 27(2):314–324

9. Weiß CH (2018) Goodness-of-fit testing of a count time series’ marginal distribution. Metrika 81(6):619–651

10. Weiß CH (2008) Serial dependence and regression of Poisson INARMA models. J Stat Plan Inference 138(10):2975–2990

11. Doukhan P, Fokianos K, Li X (2012) On weak dependence conditions: the case of discrete valued processes. Stat Probab Lett 82(11):1941–1948

12. Doukhan P, Fokianos K, Li X (2013) Corrigendum to “On weak dependence conditions: the case of discrete valued processes”. Stat Probab Lett 83(2):674–675

13. Ibragimov I (1962) Some limit theorems for stationary processes. Theory Probab Appl 7(4):349–382

14. Cossette H, Marceau E, Toureille F (2011) Risk models based on time series for count random variables. Insur Math Econ 48(1):19–28

15. Zhang L, Hu X, Duan B (2015) Optimal reinsurance under adjustment coefficient measure in a discrete risk model based on Poisson MA(1) process. Scand Actuar J 2015(5):455–467

16. Hu X, Zhang L, Sun W (2018) Risk model based on the first-order integer-valued moving average process with compound Poisson distributed innovations. Scand Actuar J 2018(5):412–425

17. Weiß CH, Schweer S (2015) Detecting overdispersion in INARCH(1) processes. Stat Neerl 69(3):281–297

18. Weiß CH, Schweer S (2016) Bias corrections for moment estimators in Poisson INAR(1) and INARCH(1) processes. Stat Probab Lett 112:124–130

19. Al-Osh MA, Alzaid AA (1988) Integer-valued moving average (INMA) process. Stat Pap 29(1):281–300

20. Aleksandrov B, Weiß CH (2018) Parameter estimation and diagnostic tests for INMA(1) processes. (Submitted)

21. Weiß CH (2012) Process capability analysis for serially dependent processes of Poisson counts. J Stat Comput Simul 82(3):383–404

22. Weiß CH (2018) An Introduction to Discrete-Valued Time Series. Wiley, Chichester

23. Steutel FW, van Harn K (1979) Discrete analogues of self-decomposability and stability. Ann Probab 7(5):893–899

24. McKenzie E (1985) Some simple models for discrete variate time series. Water Resour Bull 21(4):645–650

25. Du J-G, Li Y (1991) The integer-valued autoregressive (INAR(p)) model. J Time Ser Anal 12(2):129–142

26. Ferland R, Latour A, Oraichi D (2006) Integer-valued GARCH processes. J Time Ser Anal 27(6):923–942

27. Weiß CH (2009) Modelling time series of counts with overdispersion. Stat Methods Appl 18(4):507–519

28. Jacobs PA, Lewis PAW (1983) Stationary discrete autoregressive-moving average time series generated by mixtures. J Time Ser Anal 4(1):19–36

Appendices

Appendix: Derivations

1.1 Some Central Limit Theorems

In order to derive asymptotic distributions for bivariate dispersion indexes, the asymptotic distribution of the vectors \(\frac{1}{\sqrt{T}} \sum _{t=1}^T \varvec{Y}_t^{(k)}\) is required, where

In Weiß and Schweer [18], their asymptotic distribution was derived for the case of a Poisson INAR(1) process and for lag \(k=1\). But their approach to derive asymptotic normality for \(\frac{1}{\sqrt{T}} \sum _{t=1}^T \varvec{Y}_t^{(k)}\) holds more generally, provided that the underlying process is strongly mixing with exponentially decreasing weights (this includes q-dependent processes such as INMA(q) processes, but also higher-order INAR(p) processes as shown by Doukhan et al. [11, 12]), and provided that moments of order \(>4\) exist. Only the expressions for mean and variance of the asymptotic normal distribution will change, depending on the underlying process and on the lag k:

For the subsequent central limit theorems (CLTs), we have to derive analytic expressions for the covariance matrix \(\varvec{\Sigma }{}^{(k)}\) in (A.2), having specified the data-generating process (DGP) as well as the time lag k.

1.1.1 CLTs for Poisson INAR(1) Process

Let the DGP follow the Poisson INAR(1) model, see “Appendix B.1.” For lag \(k=1\), Weiß and Schweer [18] explicitly computed the entries of \(\varvec{\Sigma }{}^{(k)}\) in (A.2) as

For lag \(k=2\) and again an underlying Poisson INAR(1) process, obviously, only the expressions for \(\sigma _{13}^{(2)},\sigma _{23}^{(2)},\sigma _{33}^{(2)}\) change. Denoting the joint mixed moments by

with \(s_1,\ldots , s_{r}\in {\mathbb{N}}_0\), \(0\le s_1\le \ldots \le s_{r}\) and \(r\in {\mathbb N}\) [21], (A.2) leads to

Using the expressions for the joint moments in a Poisson INAR(1) process provided by Weiß [21], and after tedious algebra, we finally end up with

1.1.2 CLTs for Poisson INMA(1) Process

Now let the DGP follow the Poisson INMA(1) model from Example B.3. Because of the 1-dependence of \((X_t)_{\mathbb{Z}}\), the infinite sums in (A.2) become finite sums with only a few summands. Aleksandrov and Weiß [20] derived the following formulae for the entries of \(\varvec{\Sigma }{}^{(k)}\):

1.2 Proof of Theorems 3.1 and 3.2 (Sketch)

We define the functions \(g_i: {\mathbb{R}}^3\rightarrow {\mathbb{R}}\), \(i=1,2\), by

since \(g_i\left( \mu ,\mu (0),\mu (k)\right) \) just equals \(f_i\left( \mu ,\sigma ^2,\rho (k)\right) \). Note that \(\left( \mu , \mu (0), \mu (k)\right) = \left( \mu , \mu (1 + \mu ), \mu (\alpha ^k + \mu )\right) \) in the case of the Poisson INAR(1) model.
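The remainder of this proof sketch applies the Delta method to the functions \(g_i\): a gradient gives the asymptotic variance, and a Hessian gives a second-order bias correction. As a hedged illustration of these two steps only, the following Python sketch treats a deliberately simpler case that is not taken from the article: the univariate dispersion index \(g(y_1,y_2)=(y_2-y_1^2)/y_1\) for i. i. d. Poisson counts, with \(\varvec{Y}_t=(X_t,X_t^2)\). All function names are hypothetical, and the values of \(\mu \) and T are arbitrary.

```python
import numpy as np

def poisson_moment_cov(mu):
    """Covariance matrix of (X, X^2) for X ~ Poi(mu), built from raw moments."""
    m1 = mu
    m2 = mu + mu**2
    m3 = mu + 3 * mu**2 + mu**3
    m4 = mu + 7 * mu**2 + 6 * mu**3 + mu**4
    return np.array([[m2 - m1**2, m3 - m1 * m2],
                     [m3 - m1 * m2, m4 - m2**2]])

def g(y1, y2):
    """Univariate dispersion index written in terms of the first two raw moments."""
    return (y2 - y1**2) / y1

def grad_g(y1, y2):
    """Gradient of g (assumption: simple univariate example, not the article's g_i)."""
    return np.array([-y2 / y1**2 - 1.0, 1.0 / y1])

def hess_g(y1, y2):
    """Hessian of g."""
    return np.array([[2.0 * y2 / y1**3, -1.0 / y1**2],
                     [-1.0 / y1**2, 0.0]])

def delta_method(mu, T):
    """Return (asymptotic variance of sqrt(T) * index, second-order mean approximation)."""
    y1, y2 = mu, mu + mu**2              # true moments under Poi(mu)
    Sigma = poisson_moment_cov(mu)       # i.i.d. case: no autocovariance terms
    D = grad_g(y1, y2)
    H = hess_g(y1, y2)
    avar = D @ Sigma @ D                 # first-order Delta method
    mean = g(y1, y2) + np.trace(H @ Sigma) / (2 * T)  # second-order Taylor term
    return avar, mean

avar, mean = delta_method(mu=2.5, T=100)
print(avar, mean)
```

Under these assumptions, the sketch reproduces the familiar approximations for the univariate index under i. i. d. Poisson counts: an asymptotic variance of 2 and an approximate mean of \(1-1/T\). For the dependent processes considered in this appendix, \(\varvec{\Sigma }\) would additionally contain the autocovariance contributions.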

To obtain the asymptotic variance of \(f_i\left( \bar{X},\hat{\sigma }^2,\hat{\rho }(k)\right) \), the gradients of \(g_i\) are required and have to be evaluated in \(\left( \mu ,\mu (0),\mu (k)\right) \). After tedious algebra, it follows that the \({\mathbf{D}}_i^{(k)}:=grad \,g_i\left( \mu ,\mu (0),\mu (k)\right) \) are given by

So for \(k=1\), by using (A.3), we finally obtain the variances \(\tilde{\sigma }_{i,1}^2 := {\mathbf{D}}_i^{(1)} \varvec{\Sigma }^{(1)} ({\mathbf{D}}_i^{(1)})^{\top }\) as

Analogously, for \(k=2\) and using (A.6), we get

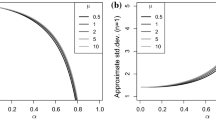

The application of the Delta method also implies that all statistics \(f_i\left( \bar{X},\hat{\sigma }^2,\hat{\rho }(k)\right) \) are asymptotically unbiased, i. e., their means converge to \(f_i(\mu ,\mu ,\alpha ^k)\) as \(T\rightarrow \infty \), the latter being equal to 0. But to obtain a better approximation in samples of finite length T, we use a second-order Taylor expansion for each function \(g_i(y_1,y_2,y_3)\). Tedious algebra leads to closed-form expressions for the Hessians \({\mathbf{H}}_{g_i}(y_1,y_2,y_3)\), which, evaluated in \(\left( \mu ,\mu (0),\mu (k)\right) \), lead to the following formulae for \({\mathbf{H}}_{i}^{(k)} := {\mathbf{H}}_{g_i}\left( \mu ,\mu (0),\mu (k)\right) \):

Then, abbreviating \(\varvec{Z}_T^{(k)} := \frac{1}{\sqrt{T}} \sum _{t=1}^T \varvec{Y}_t^{(k)}\), we compute the bias corrections from

For lag \(k=1\), we finally obtain the following approximate formulae for the means \(\mu _{i,1}:=E\left[ f_i\left( \bar{X},\hat{\sigma }^2,\hat{\rho }(1)\right) \right] \):

Analogously, we have for lag \(k=2\):

1.3 Proof of Theorems 3.3 and 3.4 (Sketch)

We proceed in analogy to “Appendix A.2.” The functions \(g_i: {\mathbb{R}}^3\rightarrow {\mathbb{R}}\), \(i=1,2\), from (A.8) are now evaluated for Poisson INMA(1) processes, where we have \(\mu (0)=\mu (1+\mu )\), \(\mu (1)=\mu \left( \beta /(1+\beta )+\mu \right) \) and \(\mu (k)=\mu ^2\) for \(k\ge 2\). Thus, \({\mathbf{D }}_i^{(k)}:=grad \,g_i\left( \mu ,\mu (0),\mu (k)\right) \), for \(i\in \{1,2\}\), becomes

So for lag 1, we finally obtain the variances \(\tilde{\sigma }_{i,1}^2 := ({\mathbf{D} }_i^{(1)})^{\top }\varvec{\Sigma }^{(1)}{\mathbf{D} }_i^{(1)} \) as

and for lag \(k\ge 2\):

Plugging-in \(\beta =\frac{\rho }{1-\rho }\), we obtain the expressions in Theorems 3.3 and 3.4.

The Hessians \({\mathbf{H} }_{g_i}(y_1,y_2,y_3)\) for \(i=1,2\) are now evaluated in \(\left( \mu ,\mu (0),\mu (k)\right) \) with \(\mu (1)=\mu (\rho +\mu )\) and \(\mu (k)=\mu ^2\) for \(k\ge 2\), so (A.12) immediately implies for \({\mathbf{H }}_{i}^{(k)} := {\mathbf{H} }_{g_i}\left( \mu ,\mu (0),\mu (k)\right) \):

So in analogy to “Appendix A.2,” the approximate mean formulae

and

follow. Plugging-in \(\beta =\frac{\rho }{1-\rho }\), we obtain the expressions in Theorems 3.3 and 3.4.

Finally, we consider the indexes \(\hat{f}_3(k),\hat{f}_4(k)\) in Theorem 3.4, see equation (3.1) for the functions \(f_3,f_4\). We define the following functions \(g_i: {\mathbb{R}}^3\rightarrow {\mathbb{R}}\), \(i=3,4\),

since \(g_i\left( \mu ,\mu (0),\mu (k)\right) \) just equals \(f_i\left( \mu ,\sigma ^2,\rho (k)\right) \) for \(i\in \{3,4\}\). After tedious algebra, it follows for lags \(k\ge 2\) that

So for lag \(k=2\), we finally obtain the variances \(\tilde{\sigma }_{i,2}^2 = ({\mathbf{D }}_i^{(2)})^{\top }\varvec{\Sigma }^{(2)}{\mathbf{D }}_i^{(2)}\) with \(i=3,4\) as

Analogously, for lags \(k>2\), we get

Using that \(\rho =\frac{\beta }{1+\beta }\), we obtain the expressions in Theorem 3.4.

Next, we compute the Hessians \({\mathbf{H }}_{g_i}(y_1,y_2,y_3)\) for \(i=3,4\) and evaluate them in \(\left( \mu ,\mu (0),\mu (k)\right) \) with \(\mu (1)=\mu (\rho +\mu )\) and \(\mu (k)=\mu ^2\) for \(k\ge 2\). This leads to the following expressions for \({\mathbf{H }}_{i}^{(k)} := {\mathbf{H }}_{g_i}\left( \mu ,\mu (0),\mu (k)\right) \):

So like above, the approximate mean formulae

follow. Plugging-in \(\beta =\frac{\rho }{1-\rho }\), we obtain the expressions in Theorem 3.4, and the proof is complete.

Specific Models for Count Processes

This appendix briefly summarizes the definitions of the count time series models considered in this article, together with relevant stochastic properties; more properties, references and further models are presented in the book by Weiß [22].

1.1 INARMA Models

If X is a discrete random variable with range \({\mathbb{N}}_0\) and if \(\alpha \in (0;1)\), then the random variable \(\alpha \circ X := \sum _{i=1}^{X} Z_i\) is said to arise from X by binomial thinning [23]. Here, the \(Z_i\) are i. i. d. binary random variables with \(P(Z_i=1)=\alpha \), which are also independent of X. Hence, \(\alpha \circ X\) has a conditional binomial distribution given the value of X, i. e., \(\alpha \circ X|X\ \sim \text{Bin}(X,\alpha )\). The boundary values \(\alpha =0\) and \(\alpha =1\) are included in this definition by setting \(0\circ X = 0\) and \(1\circ X = X\).
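The thinning operator can be sketched in a few lines of Python. This is a minimal illustration, assuming NumPy's random generator; the function name `binomial_thinning`, the seed and the parameter values are hypothetical choices made for the example.

```python
import numpy as np

def binomial_thinning(alpha, x, rng):
    """Binomial thinning alpha ∘ x: sum of x i.i.d. Bernoulli(alpha) indicators."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # Conditionally on x, the thinned count is Bin(x, alpha); x may be an array.
    return rng.binomial(x, alpha)

rng = np.random.default_rng(12345)       # arbitrary seed
x = rng.poisson(4.0, size=100_000)       # counts to be thinned
y = binomial_thinning(0.3, x, rng)
print(y.mean() / x.mean())               # should be close to alpha = 0.3
```

Drawing directly from the conditional binomial distribution avoids generating the individual indicators \(Z_i\), and the boundary cases \(\alpha =0\) and \(\alpha =1\) return 0 and X, respectively.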

Using the random operator “\(\circ \),” McKenzie [24] defined the INAR(1) model in the following way.

Definition B.1

(INAR(1) Model) Let the innovations \((\epsilon _t)_{\mathbb{Z}}\) be an i. i. d. process with range \({\mathbb{N}}_0\), and denote \(E[\epsilon _t]=\mu _{\epsilon }\), \(V[\epsilon _t]=\sigma _{\epsilon }^2\). Let \(\alpha \in [0;1)\). A process \((X_t)_{\mathbb{Z}}\) of observations, which follows the recursion

is said to be an INAR(1) process if all thinning operations are performed independently of each other and of \((\epsilon _t)_{\mathbb{Z}}\), and if the thinning operations at each time t as well as \(\epsilon _t\) are independent of \((X_s)_{s<t}\).

The ACF of the INAR(1) model equals \(\rho (k)=\alpha ^k\), and the relation \(I=(I_{\epsilon }+\alpha )/(1+\alpha )\) holds between the observations’ and innovations’ dispersion indexes. The most popular instance of the INAR(1) family is the Poisson INAR(1) model [24], which assumes the innovations \((\epsilon _t)_{\mathbb{Z}}\) to be i. i. d. according to the Poisson distribution \(\text{Poi}(\lambda )\). A Poisson INAR(1) process is an irreducible and aperiodic Markov chain with a unique stationary marginal distribution for \((X_t)_{\mathbb{Z}}\), the Poisson distribution \(\text{Poi}(\mu )\) with \(\mu =\frac{\lambda }{1-\alpha }\). The k-step-ahead pairs \((X_t,X_{t-k})\) are bivariately Poisson distributed according to \(\text{BPoi}\left( \alpha ^k\,\mu ;\ (1-\alpha ^k)\,\mu , (1-\alpha ^k)\,\mu \right) \) [7].
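The stated properties of the Poisson INAR(1) model can be checked by simulation. The following sketch assumes the standard INAR(1) recursion \(X_t=\alpha \circ X_{t-1}+\epsilon _t\) with \(\text{Poi}(\lambda )\) innovations; the function names, the burn-in length and the parameter values are hypothetical choices for illustration.

```python
import numpy as np

def simulate_poisson_inar1(T, alpha, lam, rng, burn_in=200):
    """Simulate T observations, assuming X_t = alpha ∘ X_{t-1} + eps_t, eps_t ~ Poi(lam)."""
    n = T + burn_in
    eps = rng.poisson(lam, size=n)
    x = np.empty(n, dtype=np.int64)
    x[0] = rng.poisson(lam / (1.0 - alpha))      # start from the stationary Poi(mu)
    for t in range(1, n):
        # Binomial thinning of the previous count plus a fresh innovation.
        x[t] = rng.binomial(x[t - 1], alpha) + eps[t]
    return x[burn_in:]

def sample_acf(x, k):
    """Sample autocorrelation at lag k >= 1."""
    xc = x - x.mean()
    return np.dot(xc[k:], xc[:-k]) / np.dot(xc, xc)

def dispersion_index(x):
    """Fisher's index of dispersion: sample variance divided by sample mean."""
    return x.var() / x.mean()

rng = np.random.default_rng(2019)                # arbitrary seed
x = simulate_poisson_inar1(T=20_000, alpha=0.5, lam=1.5, rng=rng)
print(x.mean(), sample_acf(x, 1), sample_acf(x, 2), dispersion_index(x))
```

With \(\alpha =0.5\) and \(\lambda =1.5\), the sample mean should be near \(\mu =3\), the sample ACF near \(\alpha ^k\), and the dispersion index near 1, up to sampling error.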

It is also possible to extend the INAR(1) recursion in Definition B.1 to a pth-order autoregression of the form

Due to the stochastic nature of the thinnings involved in (B.1), however, additional assumptions concerning the thinnings \((\alpha _1\circ X_{t},\ldots , \alpha _p\circ X_{t})\) are required. While the INAR(p) model by Du and Li [25] assumes the conditional independence of \((\alpha _1\circ X_{t},\ldots , \alpha _p\circ X_{t})\) given \(X_t\), the one by Alzaid and Al-Osh [8] supposes a conditional multinomial distribution. Since only the latter model continues the INAR(1)’s property that we have Poisson distributed observations in the case of Poisson innovations, we shall focus here on the Poisson INAR(p) model according to Alzaid and Al-Osh [8], where the innovations are i. i. d. \(\text{Poi}(\lambda )\) and, hence, the observations have the stationary marginal distribution \(\text{Poi}(\mu )\) with \(\mu =\lambda /(1-\alpha _{\bullet })\), where \(\alpha _{\bullet } := \sum _{i=1}^{p}\alpha _i\).

Example B.2

(Poisson INAR(2) Model) Solving the Yule–Walker-type equations (3.6) and (3.8) in Alzaid and Al-Osh [8], the ACF of the Poisson INAR(2) model becomes

As shown in Weiß [9], the lagged observations \(X_t\) and \(X_{t-k}\) with \(k\in {\mathbb{N}}\) are bivariately Poisson distributed according to

A result analogous to that in Example B.2 holds for the family of Poisson INMA(q) processes, which are non-Markovian but q-dependent:

where the \(q+1\) thinnings at time t are performed independently of each other. Commonly, one sets \(\beta _0:=1\). Like in the INAR(p) case, different choices are possible for the conditional distribution of \((\beta _0 \circ \epsilon _t, \beta _1\circ \epsilon _{t},\ldots , \beta _{q}\circ \epsilon _{t})\) given \(\epsilon _{t}\), see Weiß [10] for details. Independent of this choice, the marginal distribution is always Poisson with mean \(\mu =\lambda \,\beta _{\bullet }\), where \(\beta _{\bullet } := \sum _{j=0}^{q}\beta _j\), if the innovations are Poisson distributed, \(\epsilon _t\sim \text{Poi}(\lambda )\). Such processes are referred to as Poisson INMA(q) processes, and it was shown by Weiß [10] that again \((X_t,X_{t-k})\, \sim \, \text{BPoi}\left( \rho (k)\,\mu ;\, \left( 1-\rho (k)\right) \,\mu ,\, \left( 1-\rho (k)\right) \,\mu \right) \) holds, where the ACF has to be determined from the specific INMA(q) model.
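For the first-order case with \(\beta _0=1\), these properties can be illustrated by simulation. The sketch below assumes the INMA(1) recursion \(X_t=\epsilon _t+\beta \circ \epsilon _{t-1}\) with \(\text{Poi}(\lambda )\) innovations; the function names and parameter values are hypothetical.

```python
import numpy as np

def simulate_poisson_inma1(T, beta, lam, rng):
    """Simulate T observations, assuming X_t = eps_t + beta ∘ eps_{t-1}, eps_t ~ Poi(lam)."""
    eps = rng.poisson(lam, size=T + 1)
    thinned = rng.binomial(eps[:-1], beta)   # beta ∘ eps_{t-1}, drawn independently
    return eps[1:] + thinned

def sample_acf(x, k):
    """Sample autocorrelation at lag k >= 1."""
    xc = x - x.mean()
    return np.dot(xc[k:], xc[:-k]) / np.dot(xc, xc)

rng = np.random.default_rng(42)              # arbitrary seed
x = simulate_poisson_inma1(T=50_000, beta=0.5, lam=2.0, rng=rng)
print(x.mean(), x.var() / x.mean(), sample_acf(x, 1), sample_acf(x, 2))
```

Here \(\mu =\lambda (1+\beta )=3\), so the sample mean should be near 3 and the dispersion index near 1, while the sample ACF should be near \(\beta /(1+\beta )=1/3\) at lag 1 and near 0 at lag 2, reflecting the 1-dependence.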

Example B.3

(INMA(1) Model) The simplest INMA(1) model is defined by the recursion

In the particular case of the Poisson INMA(1) model, the ACF becomes \(\rho (1)=\beta /(1+\beta )\in [0;0.5]\) and 0 otherwise, see Al-Osh and Alzaid [19]. For general INMA(1) processes, see Aleksandrov and Weiß [20], the following relation holds:

1.2 INGARCH Models for Counts

The INGARCH model, see Ferland et al. [26], can be understood as an integer-valued counterpart to the conventional GARCH model (generalized autoregressive conditional heteroskedasticity process). Conditioned on the past observations, the INGARCH model assumes an ARMA-like recursion for the conditional mean. Depending on the choice of the conditional distribution family, different INGARCH models are obtained. The basic INGARCH model assumes a conditional Poisson distribution.

Definition B.4

(INGARCH Model) Let \(p\ge 1\) and \(q\ge 0\). The count process \((X_t)_{\mathbb{Z}}\) follows the (Poisson) INGARCH(p, q) model if

(i) \(X_t\), conditioned on \(X_{t-1}, X_{t-2},\ldots \), is Poisson distributed according to \(\text{Poi}(M_t)\), where

(ii) the conditional mean \(M_t\,:=\,E[X_t\ |\ X_{t-1},\ldots ]\) satisfies

$$\begin{aligned} \textstyle M_t\ =\ \beta _0\ +\ \sum _{i=1}^{p}\ \alpha _i\, X_{t-i}\ +\ \sum _{j=1}^{q}\ \beta _j\, M_{t-j} \end{aligned}$$

with \(\beta _0>0\) and \(\alpha _1,\ldots ,\alpha _{p},\beta _1,\ldots ,\beta _{q}\ge 0\).

The ACF can be determined from a set of Yule–Walker equations [27]. If \(q=0\), the model of Definition B.4 is referred to as the INARCH(p) model. Other types of INGARCH model are obtained by replacing the conditional Poisson distribution in (i) by another count distribution.

Example B.5

(INARCH(1) Model) The INARCH(1) model constitutes a counterpart to the INAR(1) model of Definition B.1. Denoting its model parameters by \(\beta :=\beta _0>0\) and \(\alpha :=\alpha _1\in (0;1)\), the INARCH(1) model requires \(X_t\), given \(X_{t-1}, \ldots \), to be conditionally Poisson distributed according to \(\text{Poi}(\beta +\alpha \, X_{t-1})\). The marginal mean and ACF equal \(\mu =\beta /(1-\alpha )\) and \(\rho (k) = \alpha ^k\) as in the INAR(1) case, whereas the dispersion index equals \(I=1/(1-\alpha ^2)>1\) [27], so the marginal distribution is overdispersed.
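The overdispersion of the INARCH(1) model can be seen in a short simulation. The sketch below draws \(X_t\) from \(\text{Poi}(\beta +\alpha \, X_{t-1})\) as in this example; the function names, burn-in length and parameter values are hypothetical choices.

```python
import numpy as np

def simulate_inarch1(T, beta, alpha, rng, burn_in=500):
    """Simulate T observations with X_t | past ~ Poi(beta + alpha * X_{t-1})."""
    n = T + burn_in
    x = np.empty(n, dtype=np.int64)
    x[0] = rng.poisson(beta / (1.0 - alpha))     # start near the marginal mean
    for t in range(1, n):
        x[t] = rng.poisson(beta + alpha * x[t - 1])
    return x[burn_in:]                           # drop the burn-in phase

def sample_acf(x, k):
    """Sample autocorrelation at lag k >= 1."""
    xc = x - x.mean()
    return np.dot(xc[k:], xc[:-k]) / np.dot(xc, xc)

rng = np.random.default_rng(2006)                # arbitrary seed
x = simulate_inarch1(T=30_000, beta=1.5, alpha=0.5, rng=rng)
print(x.mean(), x.var() / x.mean(), sample_acf(x, 1))
```

With \(\alpha =0.5\) and \(\beta =1.5\), the sample mean should be near \(\mu =3\) and the lag-1 autocorrelation near 0.5, as for the INAR(1) model with the same \(\alpha \), but the dispersion index should be near \(1/(1-\alpha ^2)=4/3\) rather than 1.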

1.3 NDARMA Models for Counts

The “new” discrete ARMA (NDARMA) models have been proposed by Jacobs and Lewis [28]. They generate an ARMA-like dependence structure through some kind of random mixture.

Definition B.6

(NDARMA Model for Counts) Let the observations \((X_t)_{\mathbb{Z}}\) and the innovations \((\epsilon _t)_{\mathbb{Z}}\) be count processes, where \((\epsilon _t)_{\mathbb{Z}}\) is i. i. d. with \(P(\epsilon _t=i)=p_i\), and where \(\epsilon _t\) is independent of \((X_s)_{s<t}\). The random mixture is obtained through the i. i. d. multinomial random vectors

which are independent of \((\epsilon _t)_{\mathbb{Z}}\) and of \((X_s)_{s<t}\). Then \((X_t)_{\mathbb{Z}}\) is said to be an NDARMA(p, q) process if it follows the recursion

The stationary marginal distribution of \(X_t\) is identical to that of \(\epsilon _{t}\), i. e., \(P(X_t=i)=p_i=P(\epsilon _t=i)\). So, among others, \(\text{Poi}(\mu )\)-innovations lead to \(\text{Poi}(\mu )\)-observations. The lagged bivariate probabilities equal

where \(\delta _{i,j}\) abbreviates the Kronecker delta. In the Poisson case, this is not a bivariate Poisson distribution, because this distribution emerges by inflating the diagonal probabilities of two independent Poissons. The ACF is nonnegative and follows from a set of Yule–Walker equations [28].

Example B.7

(NDARMA(1, 1) Model) For an NDARMA(1, 1) process with dependence parameters \(\phi _1, \varphi _1, \varphi _0=1-\phi _1-\varphi _1\), the ACF equals \(\rho (k) = \phi _1^{k-1}\,(\phi _1 + \varphi _0\varphi _{1})\) for \(k\ge 1\) [28].
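The random-mixture mechanism can be illustrated for the simplest special case, the first-order autoregressive model without a moving-average part (often called DAR(1)). The sketch assumes that \(X_t\) copies \(X_{t-1}\) with probability \(\phi _1\) and otherwise equals the innovation \(\epsilon _t\sim \text{Poi}(\mu )\); the function names and parameter values are hypothetical.

```python
import numpy as np

def simulate_poisson_dar1(T, phi, mu, rng):
    """Simulate T observations: X_t = X_{t-1} with prob. phi, else eps_t ~ Poi(mu)."""
    eps = rng.poisson(mu, size=T)
    keep = rng.random(T) < phi               # True: copy the previous observation
    x = np.empty(T, dtype=np.int64)
    x[0] = eps[0]                            # marginal Poi(mu) from the start
    for t in range(1, T):
        x[t] = x[t - 1] if keep[t] else eps[t]
    return x

def sample_acf(x, k):
    """Sample autocorrelation at lag k >= 1."""
    xc = x - x.mean()
    return np.dot(xc[k:], xc[:-k]) / np.dot(xc, xc)

rng = np.random.default_rng(1983)            # arbitrary seed
x = simulate_poisson_dar1(T=50_000, phi=0.4, mu=3.0, rng=rng)
print(x.mean(), sample_acf(x, 1), np.mean(x[1:] == x[:-1]))
```

In this special case the ACF formula above reduces to \(\rho (k)=\phi _1^k\). The last printed value estimates \(P(X_t=X_{t-1})\), which is at least \(\phi _1\) and thus shows the inflated diagonal of the lagged bivariate distribution.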

Cite this article

Weiß, C.H., Aleksandrov, B. Model Diagnostics for Poisson INARMA Processes Using Bivariate Dispersion Indexes. J Stat Theory Pract 13, 26 (2019). https://doi.org/10.1007/s42519-018-0028-1

DOI: https://doi.org/10.1007/s42519-018-0028-1

Keywords

- Poisson INARMA process

- Bivariate Poisson distribution

- Bivariate dispersion index

- Hypothesis test

- Bias correction