Abstract

Multi-criteria decision analysis (MCDA) is increasingly used to support decisions in healthcare involving multiple and conflicting criteria. Although uncertainty is usually carefully addressed in health economic evaluations, less is known about whether, how, and with which methods the different sources of uncertainty are dealt with in MCDA. The objective of this study is to review how uncertainty can be explicitly taken into account in MCDA and to discuss which approach may be appropriate for healthcare decision makers. A literature review was conducted in the Scopus and PubMed databases. Two reviewers independently categorized studies according to research areas, the type of MCDA used, and the approach used to quantify uncertainty. Selected full-text articles were read for methodological details. The search strategy identified 569 studies. The five approaches identified were, in order of frequency, fuzzy set theory (45 % of studies), deterministic sensitivity analysis (31 %), probabilistic sensitivity analysis (15 %), Bayesian frameworks (6 %), and grey theory (3 %). A large number of papers combined the analytic hierarchy process with fuzzy set theory (31 %). Only 3 % of studies were published in healthcare-related journals. In conclusion, our review identified five different approaches to take uncertainty into account in MCDA. The deterministic approach is most likely sufficient for most healthcare policy decisions because of its low complexity and straightforward implementation. However, more complex approaches may be needed when multiple sources of uncertainty must be considered simultaneously.

Multi-criteria decision analysis is increasingly used in healthcare, but guidance is lacking on how to quantify and incorporate uncertainty.

This review identified five commonly used approaches to quantify and incorporate uncertainty: deterministic sensitivity analyses, probabilistic sensitivity analyses, Bayesian frameworks, fuzzy set theory, and grey theory.

A simple approach that will most likely be sufficient for most decisions is deterministic sensitivity analysis, although more complex approaches may be needed when multiple sources of uncertainty must be considered simultaneously.

1 Introduction

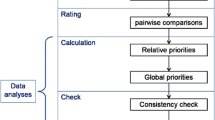

Over the last decade, researchers in outcomes research have increasingly suggested multi-criteria decision analysis (MCDA) as an approach to support healthcare decisions [1]. MCDA is an extension of decision theory that supports decision makers (policy makers, regulators, managers) who have multiple (possibly conflicting) objectives by decomposing the decision objectives into criteria [2]. Each criterion is given a numeric importance weight, and decision alternatives such as drugs or treatments are scored on each of the criteria. The criteria weights and performance scores are then aggregated into an overall score, which is used to rank the alternatives. For a more detailed overview of the subsequent steps in MCDA, the reader is referred to Belton and Stewart [2] and Hummel et al. [3]. MCDA is considered to be a transparent and flexible approach [4–7]. It has been used to support a wide range of decisions, such as portfolio optimization, benefit-risk assessment, health technology assessment, and shared decision making [8–13]. Although the objectives differ, these decisions share three characteristics. First, they involve possibly conflicting decision criteria, so that trade-offs between criteria influence the decision. Second, the criteria can be operationalized qualitatively, quantitatively, or with a combination of both. Finally, these decisions, and the underlying criteria weights and performance scores, are characterized by uncertainty.
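To make the aggregation step concrete, the sketch below assumes an additive (weighted-sum) value model, which is one common value-based choice among many. The criteria, weights, and scores are hypothetical, and `overall_scores` and `rank` are illustrative names rather than functions from any MCDA package.

```python
from typing import Dict, List

def overall_scores(weights: Dict[str, float],
                   scores: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Additive value model: overall score = sum of (weight x performance score)."""
    total = sum(weights.values())
    norm = {c: w / total for c, w in weights.items()}  # weights rescaled to sum to 1
    return {alt: sum(norm[c] * perf[c] for c in norm)
            for alt, perf in scores.items()}

def rank(overall: Dict[str, float]) -> List[str]:
    """Alternatives ordered from highest to lowest overall score."""
    return sorted(overall, key=overall.get, reverse=True)

# Hypothetical example: two treatments scored on a 0-1 scale for three criteria.
weights = {"efficacy": 0.5, "safety": 0.3, "convenience": 0.2}
scores = {
    "Drug A": {"efficacy": 0.8, "safety": 0.4, "convenience": 0.6},
    "Drug B": {"efficacy": 0.6, "safety": 0.7, "convenience": 0.5},
}
overall = overall_scores(weights, scores)
print(rank(overall), overall)
```

Here Drug A scores 0.64 and Drug B 0.61, so A is ranked first; the uncertainty approaches reviewed below ask how robust such a narrow margin is.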

In principle, several sources of uncertainty can be distinguished, and these have been clearly described by different authors [14, 15]. In their comprehensive taskforce report, Briggs et al. [15] define four types of uncertainty: stochastic uncertainty, parameter uncertainty, heterogeneity, and structural uncertainty. Although their report discusses uncertainty in decision analytic models in general, this classification carries over almost unchanged to MCDA. In MCDA, however, the four types of uncertainty must be considered for both the weighting of criteria and the scoring of alternatives. Criteria weights are always elicited from decision makers; stochastic uncertainty in weighting is therefore random variability in the weights assigned by otherwise identical persons. Parameter uncertainty refers to the estimation error of an estimated quantity, for example, the mean weight given by a group of decision makers to a criterion. Heterogeneity is explainable variation in weights, for example owing to a person’s background characteristics. Finally, structural uncertainty occurs when decision makers are unsure whether all relevant decision criteria are included and how these criteria are structured [14].

Like criteria weights, the performance scores of alternatives can be obtained through elicitation. Alternatively, performance scores can be obtained from other data sources such as registries or clinical trials. If performance scores are elicited from decision makers, generally the same sources of uncertainty apply as in the weighting step. If data are obtained from other sources (such as an odds ratio comparing two drugs derived from a clinical trial), stochastic uncertainty, parameter uncertainty, and heterogeneity stem from variation or uncertainty in the source data. Structural uncertainty remains relevant in these instances, as it concerns how the outcomes are measured and how the data are transformed into a performance score in the MCDA. Often, performance scores are assigned based on a structured appraisal of the available evidence. In that case, both elicitation-specific and data-specific uncertainties are relevant, and their relative contribution depends on the amount of available evidence. An overview of the types of uncertainty and their sources in MCDA is presented in Table 1.

A recent systematic review by Marsh et al. identified 41 applications of MCDA in healthcare and found that decision makers are positive about the possibilities of MCDA but that guidance on its application is lacking [16]. Twenty-two studies considered uncertainty, predominantly with deterministic sensitivity analysis. Earlier reviews outside healthcare have examined approaches to take uncertainty into account in MCDA-supported decisions. Durbach and Stewart [17] reviewed approaches to take uncertainty into account in the scoring of alternatives and identified probability-based approaches, fuzzy numbers, risk-based approaches, and scenario analysis. Finally, a review by Kangas and Kangas [18] in the field of forestry identified the frequentist, Bayesian, evidential reasoning, fuzzy set, probabilistic, and possibility theory approaches.

Although the use of MCDA is emerging and uncertainty is clearly a relevant issue in MCDA models, there is currently no guidance on how uncertainty should be taken into account. To account for uncertainty in MCDA, three separate steps are proposed: (1) identifying the sources of uncertainty, (2) assessing the magnitude of the uncertainty, and (3) evaluating whether the uncertainty could lead to a different decision.

The objective of the present study is threefold. First, the study aims to identify common approaches to account for uncertainty. The second objective is to classify the identified approaches according to their mathematical approach and according to how the estimates for uncertainty are derived. Finally, the approaches will be compared, and their applicability for healthcare decisions will be discussed. In this discussion, the focus will be on approaches that deal with elicitation-related uncertainty.

2 Methods

2.1 Identification of Studies

A literature search in the Scopus and PubMed databases was performed for the period between 1960 and 2013 using the following search terms: (MCDA OR multi criteria decision analysis) AND (methodological OR parameter OR structural OR stochastic OR subjective OR *) uncertainty; multi criteria decision analysis AND (sensitivity OR robustness OR scenario) analysis; uncertainty AND X; and sensitivity analysis AND X. In these strings, an asterisk represents a wildcard that can be matched by any word, and X was replaced with each of the individual MCDA method names, written both in full and in abbreviated form (see also Table 3). In addition to the database search, reference lists were also searched. Non-English studies, studies that did not apply or discuss MCDA, and studies applying MCDA without taking uncertainty into account were excluded.
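The expansion of the templated search strings can be sketched as follows. This is a minimal illustration only: the three method names are a hypothetical subset taken from the results reported below, and the database-specific field tags and phrase quoting that Scopus and PubMed would require are deliberately omitted.

```python
# Hypothetical subset of MCDA method names as (full name, abbreviation);
# the review's complete list is given in its Table 3.
METHODS = [
    ("analytic hierarchy process", "AHP"),
    ("technique for order of preference by similarity to ideal solution", "TOPSIS"),
    ("preference ranking organization method for enrichment evaluation", "PROMETHEE"),
]

# The two fixed search strings, as worded in the text ("*" is a wildcard).
FIXED_QUERIES = [
    "(MCDA OR multi criteria decision analysis) AND "
    "(methodological OR parameter OR structural OR stochastic OR subjective OR *) uncertainty",
    "multi criteria decision analysis AND (sensitivity OR robustness OR scenario) analysis",
]

def method_queries(methods):
    """Expand 'uncertainty AND X' and 'sensitivity analysis AND X' for every
    method X, once with its full name and once with its abbreviation."""
    queries = []
    for full, abbrev in methods:
        for name in (full, abbrev):
            queries.append(f"uncertainty AND {name}")
            queries.append(f"sensitivity analysis AND {name}")
    return queries

all_queries = FIXED_QUERIES + method_queries(METHODS)
for query in all_queries:
    print(query)
```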

2.2 Classification

Following identification, all included studies were classified by research area. This was done by coding the publications (journals and conference proceedings) in which the studies appeared with their top-level All Science Journal Classification (ASJC). If a publication was associated with multiple classifications, all were used for the coding. To examine applications in healthcare, sub-level ASJC codes related to healthcare were used (available from the authors on request).

Second, the studies were classified by the MCDA method used. MCDA methods identified twice or more were given separate categories; methods used only once were grouped in an “other” category. MCDA methods were further grouped into value-based, outranking, reference-based, or other/hybrid methods. Value-based methods construct a single overall value for each decision alternative, so low scores on one criterion can be compensated by higher scores on another criterion. In outranking methods, low scores on one criterion may not be compensated by higher scores on another criterion; furthermore, incomparability between the performance scores of alternatives is allowed. Reference-based methods calculate the similarity of alternatives to an ideal and an anti-ideal alternative. The categorization was performed independently by three reviewers (HB, CG, MH), and disagreements were resolved through discussion. Full-text articles were consulted when the MCDA method used could not be identified from the abstract alone or in case of continued disagreement between reviewers.
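As an illustration of a reference-based method, the sketch below computes the closeness coefficient used in TOPSIS. It assumes vector normalization, Euclidean distances, and benefit-type criteria only (higher is better); the data are hypothetical, and the sketch does not guard against the degenerate case in which all alternatives are identical.

```python
import math
from typing import List

def topsis_closeness(matrix: List[List[float]], weights: List[float]) -> List[float]:
    """Relative closeness of each alternative (row) to the ideal alternative.
    Assumes every criterion is a benefit criterion (higher is better)."""
    n = len(weights)
    # Vector-normalize each criterion column, then multiply by its weight.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    v = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in matrix]
    ideal = [max(row[j] for row in v) for j in range(n)]       # best value per criterion
    anti_ideal = [min(row[j] for row in v) for j in range(n)]  # worst value per criterion
    closeness = []
    for row in v:
        d_plus = math.dist(row, ideal)        # distance to the ideal alternative
        d_minus = math.dist(row, anti_ideal)  # distance to the anti-ideal alternative
        closeness.append(d_minus / (d_plus + d_minus))  # 1 = ideal, 0 = anti-ideal
    return closeness

# Hypothetical scores of three alternatives (rows) on two criteria (columns).
matrix = [[0.9, 0.8], [0.5, 0.4], [0.7, 0.6]]
print(topsis_closeness(matrix, [0.6, 0.4]))
```

Because the first alternative is best on every criterion it coincides with the ideal and gets closeness 1, while the second coincides with the anti-ideal and gets 0.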

Third, two reviewers (HB, CG) independently classified the studies by their approach to take uncertainty into account. An initial list of approaches, based on the authors’ past experience, comprised the deterministic, probabilistic, Bayesian, and fuzzy set approaches; newly identified approaches were added to this list. For every unique combination of an MCDA method and an uncertainty approach, the most recent full-text article was read. If needed, the references of these articles were also read to find methodological details or to identify textbooks.

3 Results from the Literature Review

3.1 Identification and Classification of Studies

A total of 569 studies, published between 1986 and 2013, were identified. The number of published studies increased sharply after the year 2000 (Fig. 1). Top-level ASJC research areas that accounted for more than 10 % of the included studies were engineering (21 %), computer science (17 %), and environmental science (12 %), as presented in Table 2. Only 3 % of the included studies were published in an outlet with a healthcare-related ASJC. Most studies (88 %) used one MCDA method, while 11 % used two and 1 % used three. As seen in Table 3, the analytic hierarchy process (AHP) was used most often (52 %), followed by the technique for order of preference by similarity to ideal solution (TOPSIS) (9 %) and the preference ranking organization method for enrichment evaluation (PROMETHEE) (7 %). For 15 % of studies, the MCDA method used could not be identified from the abstract alone and therefore required full-text reading.

3.2 Description of Identified Approaches for Uncertainty Analysis

Five distinct approaches for uncertainty analysis were identified: deterministic sensitivity analysis, probabilistic sensitivity analysis, Bayesian frameworks, fuzzy set theory, and grey theory. Fuzzy set theory was the most common (45 % of studies). Table 2 presents how frequently the approaches were used in the various research and application fields, and Table 3 presents how often they were combined with the various MCDA methods. For a comprehensive demonstration of the different approaches, please refer to the Excel file in the electronic supplementary material.

3.2.1 Deterministic Sensitivity Analysis

Three types of deterministic sensitivity analysis were identified: simple sensitivity analysis, threshold analysis, and analysis of extremes [19]. In simple sensitivity analysis, one model parameter (a criterion weight or a performance score) is varied at a time, and the impact of this variation on the rank order of alternatives is observed. If the induced variation does not change the rank order of alternatives (i.e., the preference of one alternative over another), the decision is considered robust [20, 21]. Both threshold analysis and analysis of extremes aim to determine how much model parameters need to change before a different rank order of alternatives is obtained [22].
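A minimal sketch of simple sensitivity and threshold analysis on the criteria weights follows. It assumes an additive value model and assumes that, when one weight changes, the remaining weights are rescaled proportionally so that all weights still sum to 1 (a common convention, not the only one). The function names and data are hypothetical, and the grid scan trades precision (here 0.001) for simplicity.

```python
from typing import Dict, Optional

Weights = Dict[str, float]
Scores = Dict[str, Dict[str, float]]

def reweight(weights: Weights, criterion: str, new_w: float) -> Weights:
    """Set one criterion's weight; rescale the others proportionally so that all
    weights still sum to 1 (assumes the original weights sum to 1)."""
    rest = sum(w for c, w in weights.items() if c != criterion)
    out = {c: w * (1 - new_w) / rest for c, w in weights.items() if c != criterion}
    out[criterion] = new_w
    return out

def best(weights: Weights, scores: Scores) -> str:
    """Top-ranked alternative under an additive value model."""
    overall = {a: sum(weights[c] * s[c] for c in weights) for a, s in scores.items()}
    return max(overall, key=overall.get)

def weight_threshold(weights: Weights, scores: Scores, criterion: str,
                     steps: int = 1000) -> Optional[float]:
    """Scan one weight over [0, 1] and return the value closest to the base weight
    at which the top-ranked alternative changes (None if it never changes)."""
    base_best, base_w = best(weights, scores), weights[criterion]
    grid = [i / steps for i in range(steps + 1)]
    flips = [w for w in grid if best(reweight(weights, criterion, w), scores) != base_best]
    return min(flips, key=lambda w: abs(w - base_w)) if flips else None

weights = {"efficacy": 0.5, "safety": 0.3, "convenience": 0.2}
scores = {
    "Drug A": {"efficacy": 0.8, "safety": 0.4, "convenience": 0.6},
    "Drug B": {"efficacy": 0.6, "safety": 0.7, "convenience": 0.5},
}
for c in weights:
    print(c, weight_threshold(weights, scores, c))
```

With these numbers, Drug A stays first unless the safety weight rises to about 0.36 or the efficacy weight falls to about 0.41; no value of the convenience weight changes the top-ranked alternative.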

3.2.2 Probabilistic Sensitivity Analysis

Probabilistic sensitivity analysis requires decision makers to define probability distributions for model parameters. To assign probability distributions to performance scores, decision makers can refer to descriptive statistics or patient-level data from patient registries or clinical trials [23, 24]. Methods to formally elicit probability distributions from (clinical) experts are also available [25]. For model parameters for which there is little or no evidence, non-informative distributions such as a uniform distribution can be used [26]. After the uncertainty is propagated through the MCDA model with Monte Carlo simulation, probability distributions are obtained for each alternative’s overall score, and probabilistic statements, such as the probability of a particular rank order of alternatives, can be made [26–28].
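The Monte Carlo propagation can be sketched with the Python standard library alone. The distributional choices are assumptions made for illustration: weights follow a Dirichlet distribution (so that every draw sums to 1), performance scores follow independent normal distributions that are not truncated to the 0-1 scale, and all inputs are hypothetical. The output, the share of simulations in which each alternative ranks first, is similar in spirit to the rank acceptability indices of stochastic multi-criteria acceptability analysis.

```python
import random
from collections import Counter
from typing import Dict, List

def sample_dirichlet(alpha: List[float], rng: random.Random) -> List[float]:
    """One weight vector from a Dirichlet(alpha) distribution, via gamma variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def prob_ranked_first(alpha: List[float],
                      means: Dict[str, List[float]],
                      sds: Dict[str, List[float]],
                      n_sim: int = 10_000, seed: int = 1) -> Dict[str, float]:
    """Monte Carlo estimate of the probability that each alternative ranks first."""
    rng = random.Random(seed)
    wins: Counter = Counter()
    for _ in range(n_sim):
        w = sample_dirichlet(alpha, rng)  # uncertain criteria weights
        overall = {}
        for alt in means:
            # Uncertain performance scores, drawn independently per criterion.
            perf = [rng.gauss(m, s) for m, s in zip(means[alt], sds[alt])]
            overall[alt] = sum(wi * pi for wi, pi in zip(w, perf))
        wins[max(overall, key=overall.get)] += 1
    return {alt: wins[alt] / n_sim for alt in means}

# Hypothetical inputs: weights centred on (0.5, 0.3, 0.2); score SD of 0.1.
alpha = [50.0, 30.0, 20.0]
means = {"Drug A": [0.8, 0.4, 0.6], "Drug B": [0.6, 0.7, 0.5]}
sds = {"Drug A": [0.1, 0.1, 0.1], "Drug B": [0.1, 0.1, 0.1]}
print(prob_ranked_first(alpha, means, sds))
```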

3.2.3 Bayesian Framework: Bayesian Networks and Dempster–Shafer Theory

Both Bayesian networks and Dempster–Shafer theory use the Bayesian framework to estimate the impact of uncertainty on the outcome of an MCDA-supported decision. Bayesian networks allow decision makers to explicitly model the interdependency of decision-related elements (for example, patient characteristics that may affect treatment performance) as a directed acyclic graph. Edges (arrows between nodes) represent conditional dependencies, and each node is associated with a conditional probability distribution given its parents. If there is no information about the conditional probabilities, prior distributions can be assumed. As more evidence becomes available, these prior probabilities can be updated using Bayes’ theorem [29, 30].
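A two-node example illustrates both steps described above: a prior on a conditional probability is updated with evidence, and the network is then queried with Bayes’ theorem. The network (a hypothetical biomarker influencing treatment response), the Beta priors, and the counts are assumptions. Plugging in posterior means is a simplification; a full analysis would propagate the whole posterior distributions, for example by Monte Carlo simulation.

```python
def beta_update(a: float, b: float, successes: int, failures: int):
    """Conjugate update of a Beta(a, b) prior with binomial evidence."""
    return a + successes, b + failures

def beta_mean(a: float, b: float) -> float:
    return a / (a + b)

# Hypothetical two-node network: Biomarker (parent) -> Response (child).
p_pos = 0.3  # P(biomarker positive), assumed known

# Uniform Beta(1, 1) priors on each conditional probability P(response | parent state),
# updated with hypothetical trial counts.
a_pos, b_pos = beta_update(1, 1, successes=14, failures=6)  # Beta(15, 7)
a_neg, b_neg = beta_update(1, 1, successes=8, failures=12)  # Beta(9, 13)
p_resp_given_pos = beta_mean(a_pos, b_pos)  # 15/22
p_resp_given_neg = beta_mean(a_neg, b_neg)  # 9/22

# Marginal probability of response: sum over the states of the parent node.
p_resp = p_pos * p_resp_given_pos + (1 - p_pos) * p_resp_given_neg
# Bayes' theorem: belief in a positive biomarker after observing a response.
p_pos_given_resp = p_pos * p_resp_given_pos / p_resp
print(round(p_resp, 3), round(p_pos_given_resp, 3))
```

With these inputs, the probability of response is about 0.49, and observing a response raises the probability of a positive biomarker from 0.30 to about 0.42.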

Dempster–Shafer theory is an evidential reasoning-based method that extends the Bayesian framework [31–33]. The five basic elements of Dempster–Shafer theory are the frame of discernment, probability mass assignments, belief functions, plausibility functions, and Dempster’s rule of combination. The frame of discernment is a set of hypotheses from which the one with the most evidential support has to be chosen. In the context of MCDA, the choice alternatives can be considered as the hypotheses, and the aim becomes to select the alternative with the most evidential support for being the best choice (either for the whole decision or for a specific criterion) [34]. The first step in applying the theory consists of decision makers assigning probability mass to (sets of) hypotheses in the frame of discernment, for example by indicating that there is evidential support for treatments A and B being the best-performing treatments on a particular criterion. Then, lower and upper bounds of evidential support (termed belief and plausibility, respectively) are calculated per hypothesis, for example ‘the evidential support for this hypothesis is between 50 and 80 %’. Finally, probability mass assignments from different evidence sources (for example, different decision makers) can be combined with Dempster’s rule of combination. An agreement metric between decision makers can also be calculated.
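Dempster’s rule and the belief and plausibility bounds can be sketched in a few lines. The two experts’ mass assignments below are hypothetical, and using the conflict mass K as a disagreement indicator is one simple option assumed here rather than the specific agreement metric referred to above.

```python
from itertools import product
from typing import Dict, FrozenSet, Tuple

Mass = Dict[FrozenSet[str], float]

def combine(m1: Mass, m2: Mass) -> Tuple[Mass, float]:
    """Dempster's rule of combination; also returns the conflict K between the sources."""
    joint: Mass = {}
    conflict = 0.0
    for (s1, v1), (s2, v2) in product(m1.items(), m2.items()):
        common = s1 & s2
        if common:
            joint[common] = joint.get(common, 0.0) + v1 * v2
        else:
            conflict += v1 * v2  # mass on mutually exclusive hypotheses
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict and cannot be combined")
    return {s: v / (1.0 - conflict) for s, v in joint.items()}, conflict

def belief(m: Mass, h: FrozenSet[str]) -> float:
    """Lower bound of support: mass of all sets contained in h."""
    return sum(v for s, v in m.items() if s <= h)

def plausibility(m: Mass, h: FrozenSet[str]) -> float:
    """Upper bound of support: mass of all sets that intersect h."""
    return sum(v for s, v in m.items() if s & h)

# Hypothetical frame of discernment: which treatment performs best on one criterion?
theta = frozenset({"A", "B", "C"})
expert1 = {frozenset({"A", "B"}): 0.6, theta: 0.4}  # 0.4 left uncommitted (ignorance)
expert2 = {frozenset({"A"}): 0.5, frozenset({"C"}): 0.2, theta: 0.3}
m, k = combine(expert1, expert2)
a = frozenset({"A"})
print(f"K = {k:.2f}, Bel(A) = {belief(m, a):.3f}, Pl(A) = {plausibility(m, a):.3f}")
```

Combining the two assignments gives K = 0.12, and the evidential support for treatment A being best lies between about 57 % (belief) and 91 % (plausibility).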

3.2.4 Fuzzy Set Theory

In fuzzy set theory, elements have a degree of membership to a set [35, 36]. The degree of an element’s membership to a fuzzy set is expressed as a number between zero (not a member of the fuzzy set) and one (completely a member of the fuzzy set). Degrees of membership between zero and one indicate ambiguous set membership. Examples of fuzzy sets are the sets of “very important criteria” and of “low criteria weights”. If all memberships are equal to either zero or one, fuzzy set theory reduces to conventional set theory. When applying fuzzy set theory in MCDA, decision makers first have to identify ambiguous elements (such as particular criteria weights) in their decision problem. They then have to define fuzzy sets and membership functions that capture the identified ambiguity. For example, the pairwise comparisons used to establish criteria weights in the AHP are conventionally expressed as numbers between 1 (“equivalence”) and 9 (“extreme preference”) [37]. When decision makers use the AHP in combination with fuzzy sets, they acknowledge that statements such as “extreme preference” can be ambiguous, and this ambiguity can be represented with fuzzy sets [38, 39].
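The sketch below represents ambiguous criteria weights as triangular fuzzy numbers, one common choice of membership function, and aggregates them into a fuzzy overall score. The weights, the component-wise multiplication rule (an approximation valid for non-negative numbers), and the centroid defuzzification are assumptions for illustration. This is not a complete fuzzy AHP procedure, which would also derive the fuzzy weights from fuzzy pairwise comparisons.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class TFN:
    """Triangular fuzzy number (low, mode, high) with low <= mode <= high."""
    low: float
    mode: float
    high: float

    def membership(self, x: float) -> float:
        """Degree of membership: 0 outside [low, high], rising linearly to 1 at mode."""
        if x < self.low or x > self.high:
            return 0.0
        if x == self.mode:
            return 1.0
        if x < self.mode:
            return (x - self.low) / (self.mode - self.low)
        return (self.high - x) / (self.high - self.mode)

    def __add__(self, other: "TFN") -> "TFN":
        return TFN(self.low + other.low, self.mode + other.mode, self.high + other.high)

    def __mul__(self, other: "TFN") -> "TFN":
        # Common approximation for non-negative TFNs: multiply component-wise.
        return TFN(self.low * other.low, self.mode * other.mode, self.high * other.high)

    def centroid(self) -> float:
        """Defuzzify to one crisp value (centroid of the triangle)."""
        return (self.low + self.mode + self.high) / 3

def crisp(x: float) -> TFN:
    """A crisp number as a degenerate fuzzy number."""
    return TFN(x, x, x)

def fuzzy_overall(weights: List[TFN], scores: List[float]) -> TFN:
    """Fuzzy additive overall score from fuzzy weights and crisp performance scores."""
    total = crisp(0.0)
    for w, s in zip(weights, scores):
        total = total + w * crisp(s)
    return total

# Hypothetical fuzzy weights, e.g. translated from verbal judgements of importance.
weights = [TFN(0.4, 0.5, 0.6), TFN(0.3, 0.5, 0.7)]
drug_a = fuzzy_overall(weights, [0.8, 0.4])  # approx. TFN(0.44, 0.6, 0.76)
drug_b = fuzzy_overall(weights, [0.6, 0.7])  # approx. TFN(0.45, 0.65, 0.85)
print(drug_a.centroid(), drug_b.centroid())
```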

3.2.5 Grey Theory

In grey theory, uncertainty can be represented with ranges termed black, white, or grey numbers [40]. The ‘shade’ of a number indicates the magnitude of uncertainty. Black numbers represent a complete lack of knowledge (the range is from minus infinity to plus infinity), whereas white numbers represent complete knowledge (the range is a single number). Grey numbers lie between these extremes, for example a grey number with a lower bound of 1 and an upper bound of 5. Like fuzzy sets, grey numbers can be described by verbal statements: for example, a performance score between 0 and 0.3 on a particular criterion may be defined as “low” [41]. In an MCDA context, grey theory requires decision makers to provide lower and upper bounds for criteria weights or performance scores, from which bounds on the overall treatment scores are derived.
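The bounds on overall scores can be computed with interval arithmetic, sketched below under stated assumptions: an additive value model, non-negative weights and scores, and hypothetical data. Taking the lowest and highest values offered by group members as bounds follows the group-discussion use described in Section 4.2. Because the constraint that weights sum to 1 is ignored, the resulting bounds are wider than strictly necessary.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # a grey number as (lower bound, upper bound)

def from_group(values: List[float]) -> Interval:
    """Grey number spanning the lowest and highest value proposed in a group."""
    return min(values), max(values)

def grey_overall(weights: List[Interval], scores: List[Interval]) -> Interval:
    """Bounds on an additive overall score. Assumes non-negative bounds, so each
    product is smallest at both lower bounds and largest at both upper bounds.
    The sum-to-one constraint on weights is ignored, so the bounds are conservative."""
    lower = sum(w[0] * s[0] for w, s in zip(weights, scores))
    upper = sum(w[1] * s[1] for w, s in zip(weights, scores))
    return lower, upper

def overlaps(a: Interval, b: Interval) -> bool:
    """Overlapping bounds mean the rank order of two alternatives is not settled."""
    return a[0] <= b[1] and b[0] <= a[1]

# Hypothetical group judgements for two criteria weights and two treatments' scores.
weights = [from_group([0.4, 0.5, 0.6]), from_group([0.3, 0.5])]
drug_a = grey_overall(weights, [(0.7, 0.9), (0.3, 0.5)])
drug_b = grey_overall(weights, [(0.5, 0.7), (0.6, 0.8)])
print(drug_a, drug_b, overlaps(drug_a, drug_b))
```

The two treatments’ bounds (about 0.37 to 0.79 and 0.38 to 0.82) overlap, so grey theory alone cannot settle their rank order here, and it says nothing about which values within the bounds are more likely.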

3.3 Applications in Healthcare

Nineteen applications of the approaches in healthcare-related publications were identified, seven of which are related to healthcare policy decisions. Nine studies in healthcare used the deterministic approach [5, 42–49]. Four of these addressed (research) portfolio optimization [42, 46, 47, 49], one of which [46] also concerned early health technology assessment, and one addressed benefit-risk assessment [5]. The other studies applying the deterministic approach concerned emergency management [43] and drinking water systems [44, 45]. Four studies in healthcare were categorized as probabilistic [9, 23, 50, 51], of which two focus on benefit-risk assessment [9, 51], one on infectious diseases [23], and one on water transport (safety) [50]. Four fuzzy set theory studies considered environmental health issues [52–55], while one considered diagnostics [56]. Finally, one study applied a Bayesian framework to diagnostics [57].

4 Discussion

4.1 Comparison of Approaches

The present review was performed to identify and classify the different approaches to quantify uncertainty in MCDA. Five distinct approaches were identified: deterministic sensitivity analysis, probabilistic sensitivity analysis, Bayesian frameworks, fuzzy set theory, and grey theory. To structure our discussion of the advantages and disadvantages of these approaches for healthcare applications, we consider six criteria derived from earlier studies that assessed the applicability of uncertainty approaches in operations research [58], forestry [18], engineering [59], and health economic models [60]. Three criteria concern the extent to which an approach can represent uncertainty: its inputs (how decision makers assign uncertainty to model parameters), its outputs (what additional information the approach yields), and the number of sources of uncertainty that can be taken into account. The other three are practical considerations: the versatility of the approach in combination with MCDA methods, time requirements, and prerequisite knowledge.

In deterministic sensitivity analysis, weights and scores are varied as single values, so the approach is easily applied to both uncertainty in performance scores [20] and uncertainty in criteria weights [61, 62]. If there is heterogeneity in performance scores and/or criteria weights, scenario analysis can be used to compare the outcomes for relevant subgroups [46, 63]. Drawbacks of deterministic sensitivity analysis are that the range over which weights or performance scores are varied is usually chosen arbitrarily and that all parameter values in the range are implicitly assumed to be equally probable. These drawbacks may lead to a biased view of the impact of uncertainty on the decision. Probabilistic sensitivity analysis can address these drawbacks by allowing decision makers to assign probability distributions that represent both stochastic and parameter uncertainty.

Bayesian networks are especially relevant when there are conditional relations in the evidence sources, as is the case, for example, when confounded clinical endpoints from clinical studies are transformed into a performance score. Bayesian networks therefore seem most useful for investigating the evidence before the alternatives are scored in an MCDA. Dempster–Shafer theory is most useful when little or no evidence is available and an elicitation method is used to gather expert opinion on the performance scores of treatments. Because human judgment is often characterized by ambiguity [35, 64], decision makers may accept fuzzy set theory more readily than approaches that describe variation in criteria weights in terms like ‘deviation’ and ‘error’ [35]. In grey theory, ranges can easily be defined for both criteria weights and performance scores. However, this yields information only about the bounds of outcomes such as overall treatment scores and gives no insight into the likelihood of values between the bounds.

4.2 Widening the Application of Uncertainty Analysis in MCDA for Healthcare

In an attempt to develop guidance for practitioners of MCDA, the five approaches can be compared in terms of how the required input is elicited and what additional information the approaches yield about the magnitude and impact of uncertainty. Three input modes are defined: changing values, specifying ranges, and specifying distributions.

In “changing values”, decision makers simply take other values for the criteria weights and performance scores. Although in theory this can be done with any approach, deterministic sensitivity analysis was designed specifically for it. The output of such an analysis gives decision makers more insight into the impact of the induced variation on the overall treatment scores and on the rank order of treatments.

In “specifying ranges”, decision makers specify lower and upper bounds of model parameters. This can be done with the probabilistic, grey, and fuzzy set approaches; grey theory was specifically designed for this input mode. In probabilistic sensitivity analysis, a uniform distribution between the lower and upper bounds can be assigned. In fuzzy set theory, uniform fuzzy sets can be defined between the lower and upper bounds. The outputs of the approaches differ in this input mode: grey theory only provides bounds for the overall treatment scores, whereas the probabilistic and fuzzy approaches also yield the distribution of overall treatment scores between the bounds.

Finally, in “specifying distributions”, decision makers state how values are distributed over a range. Distributions can be specified in probabilistic sensitivity analysis, Bayesian networks, and fuzzy set theory. As output, decision makers gain insight into the distribution of overall treatment scores. The overlap between the distributions of overall treatment scores can be used to assess the impact of uncertainty on the rank order of treatments. In the probabilistic and Bayesian approaches, this is operationalized as the probability of particular rankings occurring.

Deterministic sensitivity analysis is the only approach that cannot take a larger number of uncertain model parameters into account simultaneously, and it therefore does not capture the cumulative impact of uncertainty in multiple model parameters. The probabilistic and Bayesian network approaches can take multiple sources of uncertainty into account simultaneously with Monte Carlo simulation. In Dempster–Shafer theory, Dempster’s rule of combination is used to combine the probability mass assignments of multiple decision makers. Fuzzy sets and grey numbers can be combined using the established mathematical operations on sets and ranges [35, 65].

In this review, approaches to take uncertainty into account were identified and classified according to their ability to capture and represent uncertainty in the elicitation of criteria weights and performance scores. However, the applicability of these approaches sometimes depends strictly on the specific MCDA method used. Some MCDA methods, such as stochastic multi-criteria acceptability analysis, are closely tied to one specific uncertainty approach (in this case, the probabilistic approach). Other MCDA methods can be combined with several uncertainty approaches, and AHP and PROMETHEE can be used with all of them. It is not yet clear whether the remaining gaps in combinations reflect fundamental methodological mismatches or whether these combinations are theoretically possible but await greater familiarity with the MCDA methods and/or uncertainty approaches.

With regard to time, little is required for simple deterministic sensitivity analysis (assuming only one or two parameters are changed simultaneously). Assigning probability distributions in the probabilistic approach can be time consuming for analysts (when a large amount of data has to be modeled) and for decision makers (when distributions are elicited from them). This time can be reduced by assigning distributions only to specific parameters. This is relevant, for example, when clinical data are available but decision makers are unable or unwilling to provide criteria weights [9, 27, 51, 66]. The Bayesian framework is more time demanding because it requires not only probability distributions but also dependence relations in the form of conditional probabilities. Fuzzy set theory requires the definition of fuzzy sets, which takes time; once agreed upon, however, these can be reused across multiple decisions. Grey theory is straightforward to use in a group discussion setting [67]: if group members disagree about the value of a model parameter, the lowest and highest values proposed can be used as the bounds of the grey number.

A final practical consideration is the knowledge required to implement an uncertainty approach or to interpret its results. Deterministic sensitivity analysis requires no additional knowledge apart from knowledge of the MCDA method that is used. Grey theory requires decision makers to be able to give, define, and interpret ranges of values. Probabilistic sensitivity analysis requires decision makers to be familiar with probability distributions. This also holds for Bayesian frameworks, which additionally require knowledge of Bayesian statistics; analysts applying the Bayesian framework also need knowledge of Bayesian software such as WinBUGS. Decision makers should be familiar with set theory to understand and apply fuzzy set theory. When there is a disparity between a decision maker’s current knowledge and the knowledge an approach requires, there is a knowledge gap. This gap may lead to a lack of confidence in the results of an (uncertainty) analysis [68, 69], and bridging it can be time consuming. Apart from the required knowledge, the visual representation of uncertainty is an important factor for ease of interpretation. Decision makers applying deterministic sensitivity analysis can obtain a tornado diagram, which ranks model parameters by their ability to change the overall scores of alternatives. For the probabilistic and Bayesian approaches, scatter plots or density plots can be used, and Bayesian networks can also be shown graphically. Fuzzy sets can be visualized with membership function plots, which resemble probability density plots. Because the outcomes of Dempster–Shafer theory and grey theory are lower and upper bounds on the overall scores of treatments, graphs may be less useful.
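The data behind a tornado diagram can be computed without any plotting library, as in the sketch below. The parameter ranges, the choice of the score gap between two treatments as the outcome, and the renormalization of weights after each change are assumptions for illustration. Each returned row corresponds to one bar, and plotting the rows in order produces the familiar funnel shape.

```python
from typing import Callable, Dict, List, Tuple

Params = Dict[str, float]

def tornado(base: Params, ranges: Dict[str, Tuple[float, float]],
            outcome: Callable[[Params], float]) -> List[Tuple[str, float, float]]:
    """One-way sensitivity results for a tornado diagram: the outcome with each
    parameter at its low and high value (others at base), widest swing first."""
    rows = []
    for name, (low, high) in ranges.items():
        rows.append((name, outcome({**base, name: low}), outcome({**base, name: high})))
    return sorted(rows, key=lambda r: abs(r[2] - r[1]), reverse=True)

SCORES = {
    "Drug A": {"efficacy": 0.8, "safety": 0.4, "convenience": 0.6},
    "Drug B": {"efficacy": 0.6, "safety": 0.7, "convenience": 0.5},
}

def score_gap(w: Params) -> float:
    """Overall score of Drug A minus Drug B; weights are renormalized to sum to 1."""
    total = sum(w.values())
    a, b = (sum(w[c] * SCORES[alt][c] for c in w) / total for alt in ("Drug A", "Drug B"))
    return a - b

base = {"efficacy": 0.5, "safety": 0.3, "convenience": 0.2}
ranges = {"efficacy": (0.4, 0.6), "safety": (0.2, 0.4), "convenience": (0.1, 0.3)}
for name, low, high in tornado(base, ranges, score_gap):
    print(f"{name:12s} {low:+.3f} {high:+.3f}")
```

With these inputs, the safety weight has the widest swing: at its upper value the score gap between the two treatments shrinks to about zero.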

4.3 Limitations

Although the present review identified many applications, the list is unlikely to be exhaustive given the large amount of work on MCDA in different fields. Furthermore, studies that did not mention uncertainty in their title or abstract may have been missed. Despite these potential limitations, the sample of studies provides sufficient information to stimulate a discussion about the use of approaches for uncertainty assessment. Two approaches mentioned in the earlier reviews were not classified as separate approaches in our review: risk-based approaches [17] and possibility theory [18]. In risk-based approaches, an alternative’s performance score on a criterion is lowered when that performance is uncertain (‘more risky’). Possibility theory combines fuzzy set theory and evidential reasoning [70]. Although these approaches were classified as distinct in the earlier reviews, they overlap considerably with our classification.

The aspects on which we compared the uncertainty approaches are based on earlier literature, yet it is important to acknowledge that for real-world decision making other aspects, depending on the specific decision and decision maker(s), may be relevant. Further empirical research with decision makers is needed to better assess the usefulness and specific requirements of the approaches for real-world decision making.

With respect to the sources of uncertainty distinguished in the Introduction, all identified approaches can be used to assess uncertainty in the criteria weights and performance scores assigned by decision makers. However, no approaches were identified to deal with structural uncertainty [2, 71–73]. One possible explanation is that the MCDA process itself facilitates an informed discussion, which may be considered to address structural uncertainty. Nevertheless, further work is recommended to identify approaches to take structural uncertainty into account and to develop MCDA models that incorporate these approaches [74, 75].

5 Conclusions and Recommendations

To the best of our knowledge, our review is the first to give an overview of approaches to take uncertainty into account in MCDA-supported decisions with a focus on their applicability to healthcare decision making. The review identified five such approaches. The deterministic approach seems most appropriate if the criteria weights or performance scores are varied as single values. The grey approach seems most appropriate if only lower and upper bounds are elicited. The other approaches can be used flexibly across all three input modes. In a group decision process where the opinions of several decision makers are combined, the distribution input mode seems most relevant, which argues for the probabilistic and fuzzy set approaches that allow distributions [76]. We conclude that deterministic sensitivity analysis will likely be sufficient for most decisions because of its straightforward implementation. It is also intuitive and applicable to multiple MCDA methods. Although deterministic sensitivity analysis is useful in many applications, the more complex approaches are especially useful when uncertainty in multiple parameters has to be taken into account simultaneously, when dependence relations exist, or when there are no prohibitive time constraints for uncertainty modeling.

References

Diaby V, Campbell K, Goeree R. Multi-criteria decision analysis (MCDA) in health care: a bibliometric analysis. Oper Res Health Care. 2013;2:20–4.

Belton V, Stewart TJ. Multiple criteria decision analysis: an integrated approach. 2nd ed. Dordrecht: Kluwer Academic Publishers; 2002.

Hummel JM, Bridges JFP, IJzerman MJ. Group decision making with the analytic hierarchy process in benefit-risk assessment: a tutorial. Patient. 2014;7:129–40.

Holden W. Benefit-risk analysis: a brief review and proposed quantitative approaches. Drug Saf. 2003;26:853–62.

Mussen F, Salek S, Walker S. A quantitative approach to benefit-risk assessment of medicines—part 1: the development of a new model using multi-criteria decision analysis. Pharmacoepidemiol Drug Saf. 2007;16:S2–15.

Guo JJ, Pandey S, Doyle J, Bian B, Lis Y, Raisch DW. A review of quantitative risk-benefit methodologies for assessing drug safety and efficacy-report of the ISPOR risk-benefit management working group. Value Health. 2010;13:657–66.

Phillips LD, Fasolo B, Zafiropoulos N, Beyer A. Is quantitative benefit–risk modelling of drugs desirable or possible? Drug Discov Today Technol. 2011;8:e3–10.

Levitan BS, Andrews EB, Gilsenan A, Ferguson J, Noel RA, Coplan PM, et al. Application of the BRAT framework to case studies: observations and insights. Clin Pharmacol Ther. 2011;89:217–24.

Van Valkenhoef G, Tervonen T, Zhao J, de Brock B, Hillege HL, Postmus D. Multicriteria benefit–risk assessment using network meta-analysis. J Clin Epidemiol. 2012;65:394–403.

Diaby V, Goeree R. How to use multi-criteria decision analysis methods for reimbursement decision-making in healthcare: a step-by-step guide. Expert Rev Pharmacoecon Outcomes Res. 2014;14:81–99.

Dolan JG. Shared decision-making: transferring research into practice: the analytic hierarchy process (AHP). Patient Educ Couns. 2008;73:418–25.

Thokala P, Duenas A. Multiple criteria decision analysis for health technology assessment. Value Health. 2012;15:1172–81.

Devlin NJ, Sussex J. Incorporating multiple criteria in HTA: methods and processes. London: The Office of Health Economics; 2011.

Bojke L, Claxton K, Sculpher M, Palmer S. Characterizing structural uncertainty in decision analytic models: a review and application of methods. Value Health. 2009;12:739–49.

Briggs AH, Weinstein MC, Fenwick EL, Karnon J, Sculpher MJ, Paltiel AD. Model parameter estimation and uncertainty: a report of the ISPOR-SMDM modeling good research practices task force-6. Value Health. 2012;15:835–42.

Marsh K, Lanitis T, Neasham D, Orfanos P, Caro J. Assessing the value of healthcare interventions using multi-criteria decision analysis: a review of the literature. Pharmacoeconomics. 2014;32(4):345–65.

Durbach IN, Stewart TJ. Modeling uncertainty in multi-criteria decision analysis. Eur J Oper Res. 2012;223:1–14.

Kangas AS, Kangas J. Probability, possibility and evidence: approaches to consider risk and uncertainty in forestry decision analysis. For Policy Econ. 2004;6:169–88.

Felli J, Hazen GB. Sensitivity analysis and the expected value of perfect information. Med Decis Mak. 1998;18:95–109.

Churilov L, Liu D, Ma H, Christensen S, Nagakane Y, Campbell B, et al. Multiattribute selection of acute stroke imaging software platform for extending the time for thrombolysis in emergency neurological deficits (EXTEND) clinical trial. Int J Stroke. 2013;8:204–10.

Parthiban P, Abdul Zubar H. An integrated multi-objective decision making process for the performance evaluation of the vendors. Int J Prod Res. 2013;51:3836–48.

Hyde KM, Maier HR, Colby CB. A distance-based uncertainty analysis approach to multi-criteria decision analysis for water resource decision making. J Environ Manage. 2005;77:278–90.

Cox R, Sanchez J, Revie CW. Multi-criteria decision analysis tools for prioritising emerging or re-emerging infectious diseases associated with climate change in Canada. PLoS One. 2013;8:e68338.

Hopfe CJ, Augenbroe GLM, Hensen JLM. Multi-criteria decision making under uncertainty in building performance assessment. Build Environ. 2013;69:81–90.

Haakma W, Steuten LMG, Bojke L, IJzerman MJ. Belief elicitation to populate health economic models of medical diagnostic devices in development. Appl Health Econ Health Policy. 2014;12:327–34.

Betrie GD, Sadiq R, Morin KA, Tesfamariam S. Selection of remedial alternatives for mine sites: a multicriteria decision analysis approach. J Environ Manage. 2013;119:36–46.

Prado-Lopez V, Seager TP, Chester M, Laurin L, Bernardo M, Tylock S. Stochastic multi-attribute analysis (SMAA) as an interpretation method for comparative life-cycle assessment (LCA). Int J Life Cycle Assess. 2013;19:405–16.

Broekhuizen H, Groothuis-Oudshoorn CGM, Hauber AB, IJzerman MJ. Integrating patient preferences and clinical trial data in a Bayesian model for benefit-risk assessment. 34th Annual Meeting of the Society of Medical Decision Making. Phoenix, AZ; 2012.

Fenton N, Neil M. Making decisions: using Bayesian nets and MCDA. Knowl Based Syst. 2001;14:307–25.

Albert J. Bayesian computation with R. 1st ed. In: Gentleman R, Hornik K, Parmigiani G, editors. New York: Springer Science+Business Media, Inc.; 2007.

Beynon MJ. The role of the DS/AHP in identifying inter-group alliances and majority rule within group decision making. Group Decis Negot. 2005;15:21–42.

Srivastava RP. An introduction to evidential reasoning for decision making under uncertainty: Bayesian and belief function perspectives. Int J Acc Inf Syst. 2011;12:126–35.

Ma W, Xiong W, Luo X. A model for decision making with missing, imprecise, and uncertain evaluations of multiple criteria. Int J Intell Syst. 2013;28:152–84.

Beynon M, Curry B, Morgan P. The Dempster–Shafer theory of evidence: an alternative approach to multicriteria decision modelling. Omega. 2000;28:37–50.

Zadeh L. Fuzzy sets. Inf Control. 1965;8:338–53.

Chen S-J, Hwang C-L. Fuzzy multiple attribute decision making. 1st ed. Berlin: Springer; 1992.

Saaty RW. The analytical hierarchy process: what it is and how it is used. Math Model. 1987;9:161–76.

Montazar A, Gheidari ON, Snyder RL. A fuzzy analytical hierarchy methodology for the performance assessment of irrigation projects. Agric Water Manag. 2013;121:113–23.

Pitchipoo P, Venkumar P, Rajakarunakaran S. Fuzzy hybrid decision model for supplier evaluation and selection. Int J Prod Res. 2013;51:3903–19.

Ju-Long D. Control problems of grey systems. Syst Control Lett. 1982;1:288–94.

Kuang H, Hipel KW, Kilgour DM. Evaluation of source water protection strategies in Waterloo Region based on Grey Systems Theory and PROMETHEE II. In: IEEE International Conference on Systems, Man, and Cybernetics (SMC), Seoul, South Korea; 2012. p. 2775–9.

Franc M, Frangiosa T. Pharmacogenomic study feasibility assessment and pharmaceutical business decision-making. In: Cohen N, editor. Pharmacogenomics and Personalized Medicine. Methods in Pharmacology and Toxicology. 1st ed. New York: Springer; 2008. p. 253–65.

Bertsch V, Geldermann J. Preference elicitation and sensitivity analysis in multicriteria group decision support for industrial risk and emergency management. Int J Emerg Manag. 2008;5:7–24.

Bouchard C, Abi-Zeid I, Beauchamp N, Lamontagne L, Desrosiers J, Rodriguez M. Multicriteria decision analysis for the selection of a small drinking water treatment system. J Water Supply Res Technol. 2010;59:230–42.

Francisque A, Rodriguez MJ, Sadiq R, Miranda LF, Proulx F. Reconciling "actual" risk with "perceived" risk for distributed water quality: a QFD-based approach. J Water Supply Res Technol. 2011;60:321–42.

Hilgerink MP, Hummel MJ, Manohar S, Vaartjes SR, IJzerman MJ. Assessment of the added value of the Twente Photoacoustic Mammoscope in breast cancer diagnosis. Med Devices (Auckl). 2011;4:107–15.

Lee CW, Kwak NK. Strategic enterprise resource planning in a health-care system using a multicriteria decision-making model. J Med Syst. 2011;35:265–75.

Richman MB, Forman EH, Bayazit Y, Einstein DB, Resnick MI, Stovsky MD. A novel computer based expert decision making model for prostate cancer disease management. J Urol. 2005;174:2310–8.

Wilson E, Sussex J, Macleod C, Fordham R. Prioritizing health technologies in a primary care trust. J Health Serv Res Policy. 2007;12:80–5.

Rafiee R, Ataei M, Kamali M. Probabilistic stability analysis of Naien water transporting tunnel and selection of support system using TOPSIS approach. Sci Res Essays. 2011;6:4442–54.

Tervonen T, van Valkenhoef G, Buskens E, Hillege HL, Postmus D. A stochastic multicriteria model for evidence-based decision making in drug benefit-risk analysis. Stat Med. 2011;30:1419–28.

Karimi AR, Mehrdadi N, Hashemian SJ, Nabi Bidhendi GR, Tavakkoli Moghaddam R. Selection of wastewater treatment process based on the analytical hierarchy process and fuzzy analytical hierarchy process methods. Int J Environ Sci Technol. 2011;8:267–80.

Tesfamariam S, Sadiq R, Najjaran H. Decision making under uncertainty: an example for seismic risk management. Risk Anal. 2010;30:78–94.

Yang AL, Huang GH, Qin XS, Fan YR. Evaluation of remedial options for a benzene-contaminated site through a simulation-based fuzzy-MCDA approach. J Hazard Mater. 2012;213–214:421–33.

Zhang K, Achari G, Pei Y. Incorporating linguistic, probabilistic, and possibilistic information in a risk-based approach for ranking contaminated sites. Integr Environ Assess Manag. 2010;6:711–24.

Uzoka FME, Barker K. Expert systems and uncertainty in medical diagnosis: a proposal for fuzzy-ANP hybridisation. Int J Med Eng Inform. 2010;2:329–42.

Castro F, Caccamo LP, Carter KJ, Erickson BA, Johnson W, Kessler E, et al. Sequential test selection in the analysis of abdominal pain. Med Decis Mak. 1996;16:178–83.

Rios Insua D, French S. A framework for sensitivity analysis in discrete multi-objective decision-making. Eur J Oper Res. 1991;54:176–90.

Helton J. Uncertainty and sensitivity analysis techniques for use in performance assessment for radioactive waste disposal. Reliab Eng Syst Saf. 1993;42:327–67.

Claxton K. Exploring uncertainty in cost-effectiveness analysis. PharmacoEconomics. 2008;26:781–98.

Ribeiro F, Ferreira P, Araújo M. Evaluating future scenarios for the power generation sector using a multi-criteria decision analysis (MCDA) tool: the Portuguese case. Energy. 2013;52:126–36.

Srdjevic Z, Samardzic M, Srdjevic B. Robustness of AHP in selecting wastewater treatment method for the coloured metal industry: Serbian case study. Civ Eng Environ Syst. 2012;29:147–61.

Durbach IN. Outranking under uncertainty using scenarios. Eur J Oper Res. 2014;232:98–108.

Vahdani B, Mousavi SM, Tavakkoli-Moghaddam R, Hashemi H. A new design of the elimination and choice translating reality method for multi-criteria group decision-making in an intuitionistic fuzzy environment. Appl Math Model. 2013;37:1781–99.

Yang Y. Extended grey numbers and their operations. In: IEEE International Conference on Systems, Man and Cybernetics, 2007, Montreal, Quebec; 2007. p. 2181–6.

Lahdelma R, Hokkanen J, Salminen P. SMAA-stochastic multiobjective acceptability analysis. Eur J Oper Res. 1998;106:137–43.

Zavadskas EK, Kaklauskas A. Multi-attribute decision-making model by applying grey numbers. Informatica. 2009;20:305–20.

Caro J, Möller J. Decision-analytic models: current methodological challenges. Pharmacoeconomics. 2014;32(10):943–50.

Tuffaha HW, Gordon LG, Scuffham P. Value of information analysis in health care: a review of principles and applications. J Med Econ. 2014;17(6):377–88.

Zadeh LA. Fuzzy sets as a basis for a theory of possibility. Fuzzy Sets Syst. 1978;1:3–28.

Keeney R, Raiffa H. Decisions with multiple objectives. Cambridge: Cambridge University Press; 1976.

Ishizaka A, Nemery P. Multi-criteria decision analysis: methods and software. 1st ed. Chichester: Wiley; 2013.

Bilcke J, Beutels P, Brisson M, Jit M. Accounting for methodological, structural, and parameter uncertainty in decision-analytic models: a practical guide. Med Decis Mak. 2011;31:675–92.

von Winterfeldt D. Structuring decision problems for decision analysis. Acta Psychol (Amst). 1980;45:71–93.

Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–91.

Belton V, Pictet J. A framework for group decision using a MCDA model: sharing, aggregating or comparing individual information? J Decis Syst. 1997;6:283–303.

Liu W. Propositional, probabilistic and evidential reasoning. Heidelberg: Physica-Verlag HD; 2001.

Bouyssou D, Marchant T, Pirlot M, Tsoukias A, Vincke P. Evaluation and decision models with multiple criteria: stepping stones for the analyst. New York: Springer Science+Business Media, Inc.; 2006.

von Winterfeldt D, Edwards W. Decision analysis and behavioral research. Cambridge: Cambridge University Press; 1986.

Opricovic S. Multicriteria optimization of civil engineering systems. Belgrade: Faculty of Civil Engineering; 1998.

Proctor W, Drechsler M. Deliberative multicriteria evaluation. Environ Plan C Gov Policy. 2006;24:169–90.

Autran Monteiro Gomes LF, Duncan Rangel LA. An application of the TODIM method to the multicriteria rental evaluation of residential properties. Eur J Oper Res. 2009;193:204–11.

Acknowledgments

This study was funded by ZonMw Grant No. 152002051. All authors contributed to the study conception and design. HB, CG, and MH reviewed and categorized the abstracts. HB, CG, and MIJ drafted the manuscript. All authors contributed to the critical revision of intellectual content in the final manuscript. MIJ is the guarantor for the overall content. The authors would like to thank the anonymous reviewers for their valuable comments. The authors have no conflicts of interest to declare.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Broekhuizen, H., Groothuis-Oudshoorn, C.G.M., van Til, J.A. et al. A Review and Classification of Approaches for Dealing with Uncertainty in Multi-Criteria Decision Analysis for Healthcare Decisions. PharmacoEconomics 33, 445–455 (2015). https://doi.org/10.1007/s40273-014-0251-x
