Abstract

Background

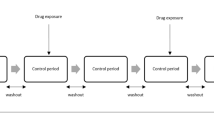

Observational healthcare data offer the potential to identify adverse drug reactions that may be missed by spontaneous reporting. The self-controlled cohort analysis within the Temporal Pattern Discovery framework compares the observed-to-expected ratio of medical outcomes during post-exposure surveillance periods with those during a set of distinct pre-exposure control periods in the same patients. It utilizes an external control group to account for systematic differences between the different time periods, thus combining within- and between-patient confounder adjustment in a single measure.
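As a rough illustration of the comparison described above, the following Python sketch contrasts a log observed-to-expected ratio in a surveillance period with the same quantity in a control period, with expected counts taken from an external comparator group. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the shrinkage constant of 0.5, the base-2 logarithm, and all counts are hypothetical and are not taken from this abstract.

```python
import math


def expected_count(drug_exposures, comparator_outcomes, comparator_exposures):
    """Expected outcome count for the drug of interest in one time period,
    assuming the outcome occurs at the same rate as in the external
    comparator group during that same period."""
    return drug_exposures * comparator_outcomes / comparator_exposures


def log_oe(observed, expected, shrinkage=0.5):
    """Log2 observed-to-expected ratio. The shrinkage constant (assumed
    value) is added to both counts to damp ratios based on few events."""
    return math.log2((observed + shrinkage) / (expected + shrinkage))


def calibrated_contrast(surveillance, control):
    """Difference between the log observed-to-expected ratio after exposure
    and the one before exposure. Each argument is an (observed, expected)
    pair for the same patients in the respective period."""
    return log_oe(*surveillance) - log_oe(*control)


# Hypothetical counts: 1000 new prescriptions of the drug of interest;
# in the comparator group, 500 outcomes among 50000 prescriptions
# fall in each of the two periods.
expected_post = expected_count(1000, 500, 50000)
expected_pre = expected_count(1000, 500, 50000)

# 30 outcomes observed after exposure, 12 before exposure.
contrast = calibrated_contrast((30, expected_post), (12, expected_pre))
print(round(contrast, 3))
```

A positive contrast indicates that the outcome is relatively more frequent after exposure than before it, after adjusting both periods for the rate seen in the comparator group; a contrast near zero indicates no temporal change beyond what the comparator group shows.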

Objectives

To evaluate the performance of the calibrated self-controlled cohort analysis within Temporal Pattern Discovery as a tool for risk identification in observational healthcare data.

Research Design

Different implementations of the calibrated self-controlled cohort analysis were applied to 399 drug-outcome pairs (165 positive and 234 negative test cases across 4 health outcomes of interest) in 5 real observational databases (four with administrative claims and one with electronic health records).

Measures

Performance was evaluated on real data through sensitivity/specificity, the area under the receiver operating characteristic curve (AUC), and bias.
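The sketch below shows one common way to compute these discrimination measures from the scores assigned to positive and negative test cases. It is an illustrative assumption about how such an evaluation can be coded, not the study's evaluation code; the function names, the pairwise AUC formulation, the threshold, and the example scores are all hypothetical.

```python
def auc(scores_pos, scores_neg):
    """AUC as the probability that a randomly chosen positive test case
    scores higher than a randomly chosen negative one (ties count 0.5)."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def sensitivity_specificity(scores_pos, scores_neg, threshold):
    """Sensitivity and specificity when scores strictly above the
    threshold are flagged as signals."""
    true_pos = sum(s > threshold for s in scores_pos)
    true_neg = sum(s <= threshold for s in scores_neg)
    return true_pos / len(scores_pos), true_neg / len(scores_neg)


# Hypothetical scores for three positive and four negative test cases.
positives = [1.2, 0.4, 0.9]
negatives = [0.1, 0.5, -0.3, 0.0]

area = auc(positives, negatives)
sens, spec = sensitivity_specificity(positives, negatives, threshold=0.0)
print(area, sens, spec)
```

The pairwise formulation is quadratic in the number of test cases, which is acceptable for a reference set of a few hundred drug-outcome pairs.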

Results

The calibrated self-controlled cohort analysis achieved good predictive accuracy across the outcomes and databases under study. The optimal design based on this reference set uses a 360-day surveillance period and a single control period 180 days prior to new prescriptions. It achieved an average AUC of 0.75 and an AUC >0.70 in all but one scenario. A design with three separate control periods performed better for the electronic health records database and for acute renal failure across all data sets. The estimates for negative test cases were generally unbiased, but a minor negative bias of up to 0.2 on the RR scale was observed for acute liver injury and upper gastrointestinal bleeding with the configurations using multiple control periods.

Conclusions

The calibrated self-controlled cohort analysis within Temporal Pattern Discovery shows promise as a tool for risk identification; it performs well at discriminating positive from negative test cases. The optimal parameter configuration may vary with the data set and medical outcome of interest.

Acknowledgments

The Observational Medical Outcomes Partnership is funded by the Foundation for the National Institutes of Health (FNIH) through generous contributions from the following: Abbott, Amgen Inc., AstraZeneca, Bayer Healthcare Pharmaceuticals, Inc., Biogen Idec, Bristol-Myers Squibb, Eli Lilly & Company, GlaxoSmithKline, Janssen Research and Development, Lundbeck, Inc., Merck & Co., Inc., Novartis Pharmaceuticals Corporation, Pfizer Inc, Pharmaceutical Research Manufacturers of America (PhRMA), Roche, Sanofi-aventis, Schering-Plough Corporation, and Takeda. Dr. Ryan is an employee of Janssen Research and Development, and a past employee of GlaxoSmithKline, but does not receive compensation for his work with OMOP. Dr. Schuemie has become an employee of Janssen Research and Development since completing the work described here. Dr. Schuemie received a fellowship from the Office of Medical Policy, Center for Drug Evaluation and Research, Food and Drug Administration. Drs. Schuemie and Madigan receive funding from FNIH. Dr. Norén, Mr. Bergvall and Ms. Juhlin were partially funded by FNIH grant NOREN11OMOP. The authors appreciate the thoughtful feedback from I. Ralph Edwards at the Uppsala Monitoring Centre, the OMOP Research Investigators, Executive Board, and Advisory Boards. No compensation was given for the reviews.

This article was published in a supplement sponsored by the Foundation for the National Institutes of Health (FNIH). The supplement was guest edited by Stephen J.W. Evans. It was peer reviewed by Olaf H. Klungel, who received a small honorarium to cover out-of-pocket expenses. S.J.W.E. has received travel funding from the FNIH to travel to the OMOP symposium and received a fee from FNIH for the review of a protocol for OMOP. O.H.K. has received funding for the IMI-PROTECT project from the Innovative Medicines Initiative Joint Undertaking (http://www.imi.europa.eu) under Grant Agreement no. 115004, resources of which are composed of financial contribution from the European Union’s Seventh Framework Programme (FP7/2007–2013) and EFPIA companies' in-kind contribution.

Additional information

The OMOP research used data from Truven Health Analytics (formerly the Health Business of Thomson Reuters), including the MarketScan® Research Databases: MarketScan Lab Supplemental (MSLR, 1.2 m persons), MarketScan Medicare Supplemental Beneficiaries (MDCR, 4.6 m persons), MarketScan Multi-State Medicaid (MDCD, 10.8 m persons), and MarketScan Commercial Claims and Encounters (CCAE, 46.5 m persons). Data were also provided by the Quintiles® Practice Research Database (formerly General Electric’s Electronic Health Record database, GE; 11.2 m persons). GE is an electronic health record database, while the other four databases contain administrative claims data.

Cite this article

Norén, G.N., Bergvall, T., Ryan, P.B. et al. Empirical Performance of the Calibrated Self-Controlled Cohort Analysis Within Temporal Pattern Discovery: Lessons for Developing a Risk Identification and Analysis System. Drug Saf 36 (Suppl 1), 107–121 (2013). https://doi.org/10.1007/s40264-013-0095-x

DOI: https://doi.org/10.1007/s40264-013-0095-x