Abstract

In this paper the recoverable robust spanning tree problem with interval edge costs is considered. The complexity of this problem has remained open to date. By using an iterative relaxation method, it is shown that the problem is polynomially solvable. A generalization of this idea to the recoverable robust matroid basis problem is also presented. Polynomial algorithms for both recoverable robust problems are proposed.

1 Introduction

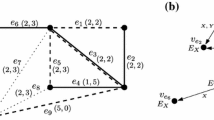

In this paper, we investigate the recoverable robust version of the following minimum spanning tree problem. We are given a connected graph \(G=(V,E)\), where \(|V|=n\) and \(|E|=m\). Let \(\Phi \) be the set of all spanning trees of G. For each edge \(e\in E\) a nonnegative cost \(c_e\) is given. We seek a spanning tree of G of the minimum total cost. The minimum spanning tree problem can be solved in polynomial time by several well-known algorithms (see, e.g. [1]). In this paper we consider the recoverable robust model, previously discussed in [2–6]. We are given first stage edge costs \(C_e\), \(e\in E\), a recovery parameter \(k\in \{0,\dots ,n-1\}\), and uncertain second stage (recovery) edge costs, modeled by scenarios. Namely, each particular realization of the second stage costs \(S=(c_e^S)_{e\in E}\) is called a scenario and the set of all possible scenarios is denoted by \(\mathcal {U}\). In the recoverable robust spanning tree problem (RR ST, for short), we choose an initial spanning tree X in the first stage. The cost of this tree is equal to \(\sum _{e\in X} C_e\). Then, after a scenario \(S\in \mathcal {U}\) is revealed, X can be modified by exchanging up to k edges. This new tree is denoted by Y, where \(|Y{\setminus } X|=|X{\setminus } Y| \le k\). The second stage cost of Y under scenario \(S\in \mathcal {U}\) is equal to \(\sum _{e\in Y} c_e^S\). Our goal is to find a pair of trees X and Y such that \(|X{\setminus } Y|\le k\), which minimize the total first and second stage cost \(\sum _{e\in X} C_e +\sum _{e\in Y} c_e^S\) in the worst case. Hence, the problem RR ST can be formally stated as follows:

$$\text {RR ST}:\; \min _{X\in \Phi }\left( \sum _{e\in X} C_e+\max _{S\in \mathcal {U}}\min _{Y\in \Phi ^k_X}\sum _{e\in Y} c_e^S\right) , \tag{1}$$

where \(\Phi ^k_{X}=\{Y\in \Phi \,:\, |Y{\setminus } X|\le k\}\) is the recovery set, i.e. the set of possible solutions in the second, recovery stage.

The RR ST problem has been recently discussed in a number of papers. It is a special case of the robust spanning tree problem with incremental recourse considered in [7]. Furthermore, if \(k=0\) and \(C_e=0\) for each \(e\in E\), then the problem is equivalent to the robust min-max spanning tree problem investigated in [8, 9]. The complexity of RR ST depends on the way in which scenario set \(\mathcal {U}\) is defined. If \(\mathcal {U}=\{S_1,\dots ,S_K\}\) contains \(K\ge 1\) explicitly listed scenarios, then the problem is known to be NP-hard for \(K=2\) and any constant \(k\in \{0,\dots ,n-1\}\) [10]. Furthermore, it becomes strongly NP-hard and not at all approximable when both K and k are part of the input [10]. Assume now that the second stage cost of each edge \(e\in E\) is known to belong to the closed interval \([c_e, c_e+d_e]\), where \(d_e\ge 0\). Scenario set \(\mathcal {U}^l\) is then the subset of the Cartesian product \(\prod _{e\in E}[c_e, c_e+d_e]\) such that in each scenario in \(\mathcal {U}^l\), the costs of at most l edges are greater than their nominal values \(c_e\), \(l\in \{0,\dots ,m\}\). Scenario set \(\mathcal {U}^l\) has been proposed in [11]. The parameter l allows us to model the degree of uncertainty. Namely, if \(l=0\) then \(\mathcal {U}^0\) contains only one scenario, in which every edge has its nominal cost \(c_e\). The problem RR ST for scenario set \(\mathcal {U}^l\) is known to be strongly NP-hard when l is part of the input [7]. In fact, the inner problem, \(\max _{S\in \mathcal {U}^l}\min _{Y\in \Phi ^k_X} \sum _{e\in Y} {c_e^S}\), called the adversarial problem, is then strongly NP-hard [7]. On the other hand, \(\mathcal {U}^m\) is the Cartesian product of all the uncertainty intervals, and corresponds to the traditional interval uncertainty representation [9].

The complexity of RR ST with scenario set \(\mathcal {U}^m\) has been open to date. In [12] the incremental spanning tree problem was discussed. In this problem we are given an initial spanning tree X and we seek a spanning tree \(Y\in \Phi ^k_X\) whose total cost is minimal. It is easy to see that this problem is the inner one in RR ST, where X is fixed and \(\mathcal {U}\) contains only one scenario. The incremental spanning tree problem can be solved in polynomial time by applying the Lagrangian relaxation technique [12]. In [2] a polynomial algorithm for the more general recoverable robust matroid basis problem (RR MB, for short) with scenario set \(\mathcal {U}^m\) was proposed, provided that the recovery parameter k is constant. Consequently, the same holds for RR ST, since a spanning tree is a basis of a graphic matroid. Unfortunately, the running time of that algorithm is exponential in k. No other result on the problem is known to date. In particular, no polynomial time algorithm has been developed for the case when k is part of the input.

In this paper we show that RR ST for the interval uncertainty representation (i.e. for scenario set \(\mathcal {U}^m\)) is polynomially solvable (Sect. 2). We apply a technique called the iterative relaxation, whose framework was described in [13]. The idea is to construct a linear programming relaxation of the problem and show that at least one variable in each optimum vertex solution is integer. Such a variable allows us to add an edge to the solution built and recursively solve the relaxation of the smaller problem. We also show that this technique allows us to solve the recoverable robust matroid basis problem (RR MB) for the interval uncertainty representation in polynomial time (Sect. 3). We provide polynomial algorithms for RR ST and RR MB.

2 Recoverable robust spanning tree problem

In this section we will use the iterative relaxation method [13] to construct a polynomial algorithm for RR ST under scenario set \(\mathcal {U}^m\). Notice first that, in this case, the formulation (1) can be rewritten as follows:

$$\min _{X\in \Phi }\left( \sum _{e\in X} C_e+\min _{Y\in \Phi ^k_X}\sum _{e\in Y}(c_e+d_e)\right) . \tag{2}$$

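In problem (2) we need to find a pair of spanning trees \(X\in \Phi \) and \(Y\in \Phi ^k_X\). Since \(|X|=|Y|=|V|-1\), the condition \(|Y{\setminus } X|\le k\) holds if and only if \(|X\cap Y|\ge |V|-1-k\), so the problem (2) is equivalent to the following mathematical programming problem:

$$\begin{aligned} \min \;&\sum _{e\in X} C_e+\sum _{e\in Y}(c_e+d_e)\\ \text {s.t. }&|X\cap Y|\ge |V|-1-k,\\&X,Y\in \Phi . \end{aligned} \tag{3}$$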
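
For intuition on very small instances, problem (3) can be solved by plain enumeration of pairs of spanning trees. The sketch below is not the polynomial algorithm developed in this paper; it is an exponential-time check, and the function names (`spanning_trees`, `rrst_bruteforce`) as well as the triangle instance are illustrative assumptions.

```python
from itertools import combinations


def spanning_trees(n, edges):
    """Yield every spanning tree of the graph as a frozenset of edge indices."""
    for subset in combinations(range(len(edges)), n - 1):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                parent[v] = parent[parent[v]]
                v = parent[v]
            return v

        acyclic = True
        for i in subset:
            u, v = edges[i]
            ru, rv = find(u), find(v)
            if ru == rv:
                acyclic = False
                break
            parent[ru] = rv
        if acyclic:  # n - 1 acyclic edges on n vertices form a spanning tree
            yield frozenset(subset)


def rrst_bruteforce(n, edges, C, c, d, k):
    """Enumerate pairs (X, Y) with |X ∩ Y| >= n - 1 - k, as in problem (3)."""
    trees = list(spanning_trees(n, edges))
    best = None
    for X in trees:
        first_stage = sum(C[i] for i in X)
        for Y in trees:
            if len(X & Y) >= n - 1 - k:
                # Second stage costs are taken at their upper bounds c_e + d_e.
                total = first_stage + sum(c[i] + d[i] for i in Y)
                if best is None or total < best[0]:
                    best = (total, X, Y)
    return best


# Hypothetical triangle instance: edge i has first stage cost C[i]
# and second stage cost in the interval [c[i], c[i] + d[i]].
edges = [(0, 1), (1, 2), (0, 2)]
C, c, d = [1, 1, 5], [4, 0, 0], [1, 1, 0]
for k in (0, 1):
    print(k, rrst_bruteforce(3, edges, C, c, d, k))
```

On this instance, allowing one edge exchange (k = 1) lowers the optimal value compared with k = 0, because the cheap first stage tree can be repaired into a cheap second stage tree.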

We now set up some additional notation. Let \(V_X\) and \(V_Y\) be subsets of vertices V, and \(E_X\) and \(E_Y\) be subsets of edges E, which induce connected graphs (multigraphs) \(G_X=(V_X, E_X)\) and \(G_Y=(V_Y,E_Y)\), respectively. Let \(E_Z\) be a subset of E such that \(E_Z\subseteq E_X \cup E_Y\) and \(|E_Z|\ge L\) for some fixed integer L. We will use \(E_X(U)\) (resp. \(E_Y(U)\)) to denote the set of edges that have both endpoints in a given subset of vertices \(U\subseteq V_X\) (resp. \(U\subseteq V_Y\)).

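Let us consider the following linear program, denoted by \(LP_{RRST}(E_{X},V_{X},E_{Y},V_{Y}, E_{Z},L)\), which plays a central role in the algorithm for RR ST:

$$\begin{aligned} \min \;&\sum _{e\in E_X} C_e x_e+\sum _{e\in E_Y}(c_e+d_e) y_e&&&&(4)\\ \text {s.t. }&\sum _{e\in E_X} x_e=|V_X|-1,&&&&(5)\\&\sum _{e\in E_X(U)} x_e\le |U|-1,&&\forall \, U\subset V_X,&&(6)\\&-x_e+z_e\le 0,&&\forall \, e\in E_X\cap E_Z,&&(7)\\&\sum _{e\in E_Z} z_e=L,&&&&(8)\\&z_e-y_e\le 0,&&\forall \, e\in E_Y\cap E_Z,&&(9)\\&\sum _{e\in E_Y} y_e=|V_Y|-1,&&&&(10)\\&\sum _{e\in E_Y(U)} y_e\le |U|-1,&&\forall \, U\subset V_Y,&&(11)\\&x_e\ge 0,&&\forall \, e\in E_X,&&(12)\\&z_e\ge 0,&&\forall \, e\in E_Z,&&(13)\\&y_e\ge 0,&&\forall \, e\in E_Y.&&(14) \end{aligned}$$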

It is easily seen that if we set \(E_{X}= E_{Z}= E_{Y}=E\), \(V_{X}=V_{Y}=V\), \(L=|V|-1-k\), then the linear program \(LP_{RRST}(E_{X},V_{X},E_{Y},V_{Y}, E_{Z},L)\) is a linear programming relaxation of (3). Indeed, the binary variables \(x_e, y_e, z_e\in \{0,1\}\) indicate then the spanning trees X and Y and their common part \(X\cap Y\), respectively. Moreover, the constraint (8) takes the form of an equality, instead of an inequality, since the variables \(z_e\), \(e\in E_Z\), are not present in the objective function (4). Problem \(LP_{RRST}(E_{X},V_{X},E_{Y},V_{Y}, E_{Z},L)\) has exponentially many constraints. However, the constraints (5), (6) and (10), (11) are the spanning tree ones for graphs \(G_X=(V_X,E_X)\) and \(G_Y=(V_Y,E_Y)\), respectively. Fortunately, there exists a polynomial time separation oracle over such constraints [14]. Clearly, separating over the remaining constraints, i.e. (7), (8) and (9), can be done in polynomial time. In consequence, an optimal vertex solution to the problem can be found in polynomial time. It is also worth pointing out that, alternatively, one may rewrite the spanning tree constraints (5), (6) and (10), (11) in an equivalent “compact” form, with a polynomial number of variables and constraints, which can be more attractive from the computational point of view (see, for instance, the directed multicommodity flow model [14]). However, throughout this section we will use the model (4)–(14), since it is easier to use for proving the properties of \(LP_{RRST}(E_{X},V_{X},E_{Y},V_{Y}, E_{Z},L)\).
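
To make the role of a separation oracle concrete, the sketch below checks a candidate vector against the spanning tree constraints (5) and (6) and reports one violated constraint, if any. It enumerates all vertex subsets and is therefore exponential; it only illustrates what an oracle returns and is not the polynomial oracle of [14]. The function name `violated_tree_constraint` and the four-vertex instance are assumptions made for this example.

```python
from itertools import combinations


def violated_tree_constraint(vertices, edges, x, tol=1e-9):
    """Return a violated constraint of type (5) or (6), or None if there is none.

    edges is a list of (u, v) pairs and x holds one value per edge.
    """
    n = len(vertices)
    # Constraint (5): the values must sum to |V| - 1.
    if abs(sum(x) - (n - 1)) > tol:
        return ("(5)", tuple(vertices))
    # Constraints (6): for each proper subset U, the weight inside U is at most |U| - 1.
    # Subsets of size 1 contain no edge, so they can be skipped.
    for size in range(2, n):
        for U in combinations(vertices, size):
            inside = set(U)
            lhs = sum(xe for (u, v), xe in zip(edges, x)
                      if u in inside and v in inside)
            if lhs > size - 1 + tol:
                return ("(6)", U)
    return None


# Hypothetical instance: a triangle 0-1-2 with a pendant vertex 3.
edges = [(0, 1), (1, 2), (0, 2), (2, 3)]
print(violated_tree_constraint([0, 1, 2, 3], edges, [1, 1, 1, 0]))
print(violated_tree_constraint([0, 1, 2, 3], edges, [0.5, 0.5, 1, 1]))
```

The first vector puts weight 3 on the triangle and violates (6) for U = {0, 1, 2}; the second is a fractional point that satisfies all constraints of types (5) and (6).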

Let us focus now on a vertex solution \((\pmb {x},\pmb {z},\pmb {y})\in \mathbb {R}^{|E_X|\times |E_Z|\times |E_Y|}_{\ge 0}\) of the linear programming problem \(LP_{RRST}(E_{X},V_{X},E_{Y},V_{Y}, E_{Z},L)\). If \(E_Z=\emptyset \), then the only constraints being left in (4)–(14) are the spanning tree constraints. Thus \(\pmb {x}\) and \(\pmb {y}\) are 0-1 incidence vectors of the spanning trees X and Y, respectively (see [14, Theorem 3.2]).

We now turn to the more involved case, when \(E_X\not =\emptyset \), \(E_Y\not =\emptyset \) and \(E_Z\not =\emptyset \). We first reduce the sets \(E_X\), \(E_Y\) and \(E_Z\) by removing all edges e with \(x_e=0\), or \(y_e=0\), or \(z_e=0\). Removing these edges does not change the feasibility and the cost of the vertex solution \((\pmb {x},\pmb {z},\pmb {y})\). Note that \(V_X\) and \(V_Y\) remain unaffected. From now on, we can assume that the variables corresponding to all edges from \(E_X\), \(E_Y\) and \(E_Z\) are positive, i.e. \(x_e>0\), \(e\in E_X\), \(y_e>0\), \(e\in E_Y\) and \(z_e>0\), \(e\in E_Z\). Hence the constraints (12), (13) and (14) are not taken into account, since they are not tight with respect to \((\pmb {x},\pmb {z},\pmb {y})\). It is possible, after reducing \(E_X\), \(E_Y\), and \(E_Z\), to characterize \((\pmb {x},\pmb {z},\pmb {y})\) by \(|E_X|+ |E_Z|+|E_Y|\) constraints that are linearly independent and tight with respect to \((\pmb {x},\pmb {z},\pmb {y})\).

Let \(\mathcal {F}(\pmb {x})=\{U\subseteq V_X\,:\, \sum _{e\in E_X(U)}x_e =|U|-1\}\) and \(\mathcal {F}(\pmb {y})=\{U\subseteq V_Y\,:\, \sum _{e\in E_Y(U)}y_e =|U|-1\}\) stand for the sets of subsets of nodes that indicate the tight constraints (5), (6) and (10), (11) for \(\pmb {x}\) and \(\pmb {y}\), respectively. Similarly we define the sets of edges that indicate the tight constraints (7) and (9) with respect to \((\pmb {x},\pmb {z},\pmb {y})\), namely \(\mathcal {E}(\pmb {x},\pmb {z})=\{e\in E_{X}\cap E_{Z}\,:\, -x_e+z_e = 0\}\) and \(\mathcal {E}(\pmb {z},\pmb {y})=\{e\in E_{Y}\cap E_{Z}\,:\, z_e -y_e = 0\}\). Let \(\chi _X(W)\), \(W\subseteq E_X\), (resp. \(\chi _Z(W)\), \(W\subseteq E_Z\), and \(\chi _Y(W)\), \(W\subseteq E_Y\)) denote the characteristic vector in \(\{0,1\}^{|E_X|}\times \{0\}^{|E_Z|}\times \{0\}^{|E_Y|}\) (resp. \(\{0\}^{|E_X|}\times \{0,1\}^{|E_Z|}\times \{0\}^{|E_Y|}\) and \(\{0\}^{|E_X|}\times \{0\}^{|E_Z|}\times \{0,1\}^{|E_Y|}\)) that has 1 if \(e\in W\) and 0 otherwise.

We recall that two sets A and B are intersecting if \(A\cap B\), \(A{\setminus } B\), \(B{\setminus } A\) are nonempty. A family of sets is laminar if no two sets are intersecting (see, e.g., [13]). Observe that the number of subsets in \(\mathcal {F}(\pmb {x})\) and \(\mathcal {F}(\pmb {y})\) can be exponential. Let \(\mathcal {L}(\pmb {x})\) (resp. \(\mathcal {L}(\pmb {y})\)) be a maximal laminar subfamily of \(\mathcal {F}(\pmb {x})\) (resp. \(\mathcal {F}(\pmb {y})\)). The following lemma, which is a slight extension of [13, Lemma 4.1.5], allows us to choose out of \(\mathcal {F}(\pmb {x})\) and \(\mathcal {F}(\pmb {y})\) certain subsets that indicate linearly independent tight constraints.
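
The two definitions recalled above translate directly into a small check. The following sketch is only an illustration of the definitions; the helper names `is_intersecting` and `is_laminar` are assumptions of this example.

```python
def is_intersecting(A, B):
    """A and B are intersecting if A ∩ B, A \\ B and B \\ A are all nonempty."""
    A, B = set(A), set(B)
    return bool(A & B) and bool(A - B) and bool(B - A)


def is_laminar(family):
    """A family is laminar if no two of its sets are intersecting."""
    sets = [set(S) for S in family]
    return not any(
        is_intersecting(sets[i], sets[j])
        for i in range(len(sets))
        for j in range(i + 1, len(sets))
    )


print(is_laminar([{1, 2, 3, 4}, {1, 2}, {3, 4}, {3}]))  # nested or disjoint sets only
print(is_laminar([{1, 2}, {2, 3}]))  # these two sets cross each other
```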

Lemma 1

For \(\mathcal {L}(\pmb {x})\) and \(\mathcal {L}(\pmb {y})\) the following equalities:

$$\begin{aligned} \mathrm {span}(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {L}(\pmb {x})\})&=\mathrm {span}(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {F}(\pmb {x})\}),\\ \mathrm {span}(\{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {L}(\pmb {y})\})&=\mathrm {span}(\{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {F}(\pmb {y})\}) \end{aligned}$$

hold.

Proof

The proof is the same as that for the spanning tree in [13, Lemma 4.1.5]. \(\square \)

A trivial verification shows that the following observation is true:

Observation 1

\(V_X\in \mathcal {L}(\pmb {x})\) and \(V_Y\in \mathcal {L}(\pmb {y})\).

We are now ready to give a characterization of a vertex solution.

Lemma 2

Let \((\pmb {x},\pmb {z},\pmb {y})\) be a vertex solution of \(LP_{RRST}(E_{X},V_{X},E_{Y},V_{Y}, E_{Z},L)\) such that \(x_e>0\), \(e\in E_X\), \(y_e>0\), \(e\in E_Y\) and \(z_e>0\), \(e\in E_Z\). Then there exist laminar families \(\mathcal {L}(\pmb {x})\not =\emptyset \) and \(\mathcal {L}(\pmb {y})\not =\emptyset \) and subsets \(E(\pmb {x},\pmb {z}) \subseteq \mathcal {E}(\pmb {x},\pmb {z})\) and \(E(\pmb {z},\pmb {y}) \subseteq \mathcal {E}(\pmb {z},\pmb {y})\) that must satisfy the following:

(i) \(|E_X|+|E_Z|+|E_Y|=|\mathcal {L}(\pmb {x})|+|E(\pmb {x},\pmb {z})|+|E(\pmb {z},\pmb {y})| +|\mathcal {L}(\pmb {y})|+1\),

(ii) the vectors in \(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {L}(\pmb {x})\}\cup \{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {L}(\pmb {y})\} \cup \{ -\chi _X(\{e\})+ \chi _Z(\{e\}) \,:\, e\in E(\pmb {x},\pmb {z})\} \cup \{ \chi _Z(\{e\})- \chi _Y(\{e\}) \,:\, e\in E(\pmb {z},\pmb {y})\} \cup \{\chi _Z(E_Z)\}\) are linearly independent.

Proof

The vertex \((\pmb {x},\pmb {z},\pmb {y})\) can be uniquely characterized by any set of linearly independent constraints with the cardinality of \(|E_X|+ |E_Z|+|E_Y|\), chosen from among the constraints (5)–(11), tight with respect to \((\pmb {x},\pmb {z},\pmb {y})\). We construct such a set by choosing a maximal subset of linearly independent tight constraints that characterizes \((\pmb {x},\pmb {z},\pmb {y})\). Lemma 1 shows that there exist maximal laminar subfamilies \(\mathcal {L}(\pmb {x})\subseteq \mathcal {F}(\pmb {x})\) and \(\mathcal {L}(\pmb {y})\subseteq \mathcal {F}(\pmb {y})\) such that \(\mathrm {span}(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {L}(\pmb {x})\})= \mathrm {span}(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {F}(\pmb {x})\})\) and \(\mathrm {span}(\{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {L}(\pmb {y})\})= \mathrm {span}(\{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {F}(\pmb {y})\})\). Observation 1 implies \(\mathcal {L}(\pmb {x})\not =\emptyset \) and \(\mathcal {L}(\pmb {y})\not =\emptyset \). Moreover, it is evident that \(\mathrm {span}(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {L}(\pmb {x})\} \cup \{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {L}(\pmb {y})\})= \mathrm {span}(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {F}(\pmb {x})\} \cup \{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {F}(\pmb {y})\})\). Thus \(\mathcal {L}(\pmb {x})\cup \mathcal {L}(\pmb {y})\) indicate certain linearly independent tight constraints, which we include in the set being constructed. We add (8) to this set. Obviously, it still consists of linearly independent constraints, since the vector of (8) is supported on the \(\pmb {z}\) coordinates only. We complete forming the set by choosing a maximal number of tight constraints from among the ones (7) and (9), such that they form a linearly independent set with the constraints previously selected. We characterize these constraints by the sets of edges \(E(\pmb {x},\pmb {z}) \subseteq \mathcal {E}(\pmb {x},\pmb {z})\) and \(E(\pmb {z},\pmb {y}) \subseteq \mathcal {E}(\pmb {z},\pmb {y})\).
Therefore, the vectors in \(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {L}(\pmb {x})\}\cup \{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {L}(\pmb {y})\} \cup \{ -\chi _X(\{e\})+ \chi _Z(\{e\}) \,:\, e\in E(\pmb {x},\pmb {z})\} \cup \{ \chi _Z(\{e\})- \chi _Y(\{e\}) \,:\, e\in E(\pmb {z},\pmb {y})\} \cup \{\chi _Z(E_Z)\}\) are linearly independent and represent the constructed maximal set of independent tight constraints, with the cardinality of \(|\mathcal {L}(\pmb {x})|+|E(\pmb {x},\pmb {z})|+|E(\pmb {z},\pmb {y})| +|\mathcal {L}(\pmb {y})|+1\), that uniquely describe \((\pmb {x},\pmb {z},\pmb {y})\). Hence \(|E_X|+|E_Z|+|E_Y|=|\mathcal {L}(\pmb {x})|+|E(\pmb {x},\pmb {z})|+|E(\pmb {z},\pmb {y})| +|\mathcal {L}(\pmb {y})|+1\), which establishes the lemma. \(\square \)

Lemma 3

Let \((\pmb {x},\pmb {z},\pmb {y})\) be a vertex solution of \(LP_{RRST}(E_{X},V_{X},E_{Y},V_{Y}, E_{Z},L)\) such that \(x_e>0\), \(e\in E_X\), \(y_e>0\), \(e\in E_Y\) and \(z_e>0\), \(e\in E_Z\). Then there is an edge \(e'\in E_X\) with \(x_{e'}=1\) or an edge \(e''\in E_Y\) with \(y_{e''}=1\).

Proof

On the contrary, suppose that \(0<x_e<1\) for every \(e\in E_X\) and \(0<y_e<1\) for every \(e\in E_Y\). Constraints (7) and (9) lead to \(0<z_e<1\) for every \(e\in E_Z\). By Lemma 2, there exist laminar families \(\mathcal {L}(\pmb {x})\not =\emptyset \) and \(\mathcal {L}(\pmb {y})\not =\emptyset \) and subsets \(E(\pmb {x},\pmb {z}) \subseteq \mathcal {E}(\pmb {x},\pmb {z})\) and \(E(\pmb {z},\pmb {y}) \subseteq \mathcal {E}(\pmb {z},\pmb {y})\) indicating linearly independent constraints which uniquely define \((\pmb {x},\pmb {z},\pmb {y})\), namely

$$\begin{aligned} \sum _{e\in E_X(U)} x_e&=|U|-1,&&U\in \mathcal {L}(\pmb {x}),&&(15)\\ -x_e+z_e&=0,&&e\in E(\pmb {x},\pmb {z}),&&(16)\\ \sum _{e\in E_Z} z_e&=L,&&&&(17)\\ z_e-y_e&=0,&&e\in E(\pmb {z},\pmb {y}),&&(18)\\ \sum _{e\in E_Y(U)} y_e&=|U|-1,&&U\in \mathcal {L}(\pmb {y}).&&(19) \end{aligned}$$

We will arrive at a contradiction with Lemma 2(i) by applying a token counting argument, frequently used in [13].

We give exactly two tokens to each edge in \(E_X\), \(E_Z\) and \(E_Y\). Thus we use \(2|E_X|+2|E_Z|+2|E_Y|\) tokens. We then redistribute these tokens to the tight constraints (15)–(19) as follows. For \(e\in E_X\) the first token is assigned to the constraint indicated by the smallest set \(U\in \mathcal {L}(\pmb {x})\) containing both its endpoints (see (15)) and the second one is assigned to the constraint represented by e (see (16)) if \(e\in E(\pmb {x},\pmb {z})\). Similarly, for \(e\in E_Y\) the first token is assigned to the constraint indicated by the smallest set \(U\in \mathcal {L}(\pmb {y})\) containing both its endpoints (see (19)) and the second one is assigned to the constraint represented by e (see (18)) if \(e\in E(\pmb {z},\pmb {y})\). Each \(e\in E_Z\) assigns the first token to the constraint corresponding to e (see (16)) if \(e\in E(\pmb {x},\pmb {z})\); otherwise to the constraint (17). The second token is assigned to the constraint indicated by e (see (18)) if \(e\in E(\pmb {z},\pmb {y})\). A token that is not assigned by these rules is called an extra token. \(\square \)

Claim 1

Each of the constraints (16) and (18) receives exactly two tokens. Each of the constraints (15) and (19) collects at least two tokens.

The first part of Claim 1 is obvious. In order to show the second part we apply the same reasoning as [13, Proof 2 of Lemma 4.2.1]. Consider the constraint represented by any subset \(U\in \mathcal {L}(\pmb {x})\). We say that U is the parent of a subset \(C\in \mathcal {L}(\pmb {x})\) and C is the child of U if U is the smallest set containing C. Let \(C_1,\ldots ,C_{\ell }\) be the children of U. The constraints corresponding to these subsets are as follows

$$\begin{aligned} \sum _{e\in E_X(U)} x_e&=|U|-1,&&&&(20)\\ \sum _{e\in E_X(C_k)} x_e&=|C_k|-1,&&\forall \, k\in [\ell ].&&(21) \end{aligned}$$

Subtracting (21) for every \(k\in [\ell ]\) from (20) yields:

$$\sum _{e\in E_X(U){\setminus } \bigcup _{k\in [\ell ]}E_X(C_k)} x_e=|U|-\sum _{k\in [\ell ]}|C_k|+\ell -1. \tag{22}$$

Equation (22) holds, since the sets \(C_1,\ldots ,C_{\ell }\) are the children of U and all these sets are in laminar family \(\mathcal {L}(\pmb {x})\). Observe that \(E_X(U){\setminus } \bigcup _{k\in [\ell ]}E_X(C_k)\not =\emptyset \). Otherwise, this leads to a contradiction with the linear independence of the constraints. Since the right hand side of (22) is integer and \(0<x_e<1\) for every \(e\in E_X\), \(|E_X(U){\setminus } \bigcup _{k\in [\ell ]}E_X(C_k)|\ge 2\). Hence U receives at least two tokens. The same arguments apply to the constraint represented by any subset \(U\in \mathcal {L}(\pmb {y})\). This proves the claim.

Claim 2

Either constraint (17) collects at least one token and there are at least two extra tokens left or constraint (17) receives no token and there are at least three extra tokens left.

To prove the claim we need to consider several nested cases:

1. Case \(E_Z{\setminus } E(\pmb {x},\pmb {z})\not = \emptyset \). Since \(E_Z{\setminus } E(\pmb {x},\pmb {z})\not = \emptyset \), at least one token is assigned to constraint (17). It remains to show that there are at least two extra tokens left.

   (a) Case \(E_Z{\setminus } E(\pmb {z},\pmb {y})=\emptyset \). Subtracting (18) for every \(e\in E(\pmb {z},\pmb {y})\) from (17) gives:

   $$\begin{aligned} \sum _{e\in E(\pmb {z},\pmb {y})} y_e= L. \end{aligned} \tag{23}$$

      (i) Case \(E_Y{\setminus } E(\pmb {z},\pmb {y})=\emptyset \). Thus \(L=|V_Y|-1\), since \((\pmb {x},\pmb {z},\pmb {y})\) is a feasible solution. By Observation 1, \(V_Y\in \mathcal {L}(\pmb {y})\) and (23) has the form of constraint (19) for \(V_Y\), which contradicts the linear independence of the constraints.

      (ii) Case \(E_Y{\setminus } E(\pmb {z},\pmb {y})\not =\emptyset \). Thus \(L<|V_Y|-1\). Since the right hand side of (23) is integer and \(0<y_e<1\) for every \(e\in E_Y\), \(|E_Y{\setminus } E(\pmb {z},\pmb {y})|\ge 2\). Hence, there are at least two extra tokens left.

   (b) Case \(E_Z{\setminus } E(\pmb {z},\pmb {y})\not =\emptyset \). Consequently, \(|E_Z{\setminus } E(\pmb {z},\pmb {y})|\ge 1\) and thus at least one token is left over, i.e. at least one token is not assigned to the constraints (18). Therefore, one additional token is required.

      (i) Case \(E_Y{\setminus } E(\pmb {z},\pmb {y})=\emptyset \). Consider the constraint (19) corresponding to \(V_Y\). Adding (18) for every \(e\in E(\pmb {z},\pmb {y})\) to this constraint yields:

      $$\begin{aligned} \sum _{e\in E(\pmb {z},\pmb {y})} z_e= |V_Y|-1. \end{aligned} \tag{24}$$

      Obviously \(|V_Y|-1<L\). Since L is integer and \(0<z_e<1\) for every \(e\in E_Z\), \(|E_Z{\setminus } E(\pmb {z},\pmb {y})|\ge 2\). Hence there are at least two extra tokens left.

      (ii) Case \(E_Y{\setminus } E(\pmb {z},\pmb {y})\not =\emptyset \). One can see immediately that at least one more token is left over, i.e. the second token of an edge in \(E_Y{\setminus } E(\pmb {z},\pmb {y})\) is not assigned to the constraints (18).

   Summarizing the above cases, constraint (17) collects at least one token and there are at least two extra tokens left.

2. Case \(E_Z{\setminus } E(\pmb {x},\pmb {z})= \emptyset \). Subtracting (16) for every \(e\in E(\pmb {x},\pmb {z})\) from (17) gives:

   $$\begin{aligned} \sum _{e\in E(\pmb {x},\pmb {z})} x_e= L. \end{aligned} \tag{25}$$

   Thus constraint (17) receives no token. It remains to show that there are at least three extra tokens left.

   (a) Case \(E_X{\setminus } E(\pmb {x},\pmb {z})= \emptyset \). Therefore \(L=|V_X|-1\), since \((\pmb {x},\pmb {z},\pmb {y})\) is a feasible solution. By Observation 1, \(V_X\in \mathcal {L}(\pmb {x})\) and (25) has the form of constraint (15) for \(V_X\), which contradicts the linear independence of the constraints.

   (b) Case \(E_X{\setminus } E(\pmb {x},\pmb {z})\not = \emptyset \). Thus \(L<|V_X|-1\). Since the right hand side of (25) is integer and \(0<x_e<1\) for every \(e\in E_X\), \(|E_X{\setminus } E(\pmb {x},\pmb {z})|\ge 2\). Consequently, there are at least two extra tokens left. At least one more token is still required.

      (i) Case \(E_Z{\setminus } E(\pmb {z},\pmb {y})= \emptyset \). The reasoning is the same as in Case 1a.

      (ii) Case \(E_Z{\setminus } E(\pmb {z},\pmb {y})\not = \emptyset \). The reasoning is the same as in Case 1b.

   Accordingly, constraint (17) receives no token and there are at least three extra tokens left.

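Thus the claim is proved. The method of assigning tokens to constraints (15)–(19) and Claims 1 and 2 now show that either

$$2|E_X|+2|E_Z|+2|E_Y|-2\ge 2|\mathcal {L}(\pmb {x})|+2|E(\pmb {x},\pmb {z})|+2|E(\pmb {z},\pmb {y})|+2|\mathcal {L}(\pmb {y})|+1$$

or

$$2|E_X|+2|E_Z|+2|E_Y|-3\ge 2|\mathcal {L}(\pmb {x})|+2|E(\pmb {x},\pmb {z})|+2|E(\pmb {z},\pmb {y})|+2|\mathcal {L}(\pmb {y})|.$$

The above inequalities lead to \(|E_X|+|E_Z|+|E_Y|>|\mathcal {L}(\pmb {x})|+|E(\pmb {x},\pmb {z})|+|E(\pmb {z},\pmb {y})| +|\mathcal {L}(\pmb {y})|+1\). This contradicts Lemma 2(i).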

It remains to verify two cases: \(E_X=\emptyset \) and \(|V_X|=1\); \(E_Y=\emptyset \) and \(|V_Y|=1\). We consider only the first one, because the second case is symmetrical. The constraints (8), (9) and the inclusion \(E_{Z}\subseteq E_{Y}\) yield

$$\sum _{e\in E_Z} y_e\ge L. \tag{26}$$

Lemma 4

Let \(\pmb {y}\) be a vertex solution of linear program: (4), (10), (11), (14) and (26) such that \(y_e>0\), \(e\in E_Y\). Then there is an edge \(e'\in E_Y\) with \(y_{e'}=1\). Moreover, using \(\pmb {y}\) one can construct a vertex solution of \(LP_{RRST}(\emptyset ,V_{X},E_{Y},V_{Y}, E_{Z},L)\) with \(y_{e'}=1\) and the cost of \(\pmb {y}\).

Proof

As in the proof of Lemma 2, we construct a maximal subset of linearly independent tight constraints that characterize \(\pmb {y}\) and get: \(|E_Y|=|\mathcal {L}(\pmb {y})|\) if (26) is not tight or adding (26) makes the subset dependent; \(|E_Y|=|\mathcal {L}(\pmb {y})|+1\) otherwise. In the first case the spanning tree constraints define \(\pmb {y}\) and, in consequence, \(\pmb {y}\) is integral (see [14, Theorem 3.2]). Consider the second case and assume, on the contrary, that \(0<y_e<1\) for each \(e\in E_Y\). Thus

$$\begin{aligned} \sum _{e\in E_Z} y_e&=L,&&&&(27)\\ \sum _{e\in E_Y(U)} y_e&=|U|-1,&&U\in \mathcal {L}(\pmb {y}).&&(28) \end{aligned}$$

We assign two tokens to each edge in \(E_Y\) and redistribute \(2|E_Y|\) tokens to constraints (27) and (28) in the following way. The first token is given to the constraint indicated by the smallest set \(U\in \mathcal {L}(\pmb {y})\) containing its two endpoints and the second one is assigned to (27). Since \(0<y_e<1\) and L is integer, as in the proof of Lemma 3, one can show that each of the constraints (28) and (27) receives at least two tokens. If \(E_Y{\setminus } E_Z= \emptyset \) then \(L=|V_Y|-1\), since \(\pmb {y}\) is a feasible solution, which contradicts the linear independence of the constraints. Otherwise (\(E_Y{\setminus } E_Z\not = \emptyset \)), at least one token is left. Hence \(2|E_Y|-1\ge 2|\mathcal {L}(\pmb {y})|+2\) and so \(|E_Y|>|\mathcal {L}(\pmb {y})|+1\), a contradiction.

By (26) and the fact that there are no variables \(z_e\), \(e\in E_Z\), in the objective (4), it is obvious that using \(\pmb {y}\) one can construct \(\pmb {z}\) satisfying (8) and, in consequence, a vertex solution of \(LP_{RRST}(\emptyset ,V_{X},E_{Y},V_{Y}, E_{Z},L)\) with \(y_{e'}=1\) and the cost of \(\pmb {y}\). \(\square \)

We are now ready to give the main result of this section.

Theorem 1

Algorithm 1 solves RR ST in polynomial time.

Proof

Lemmas 3 and 4 and the case when \(E_Z=\emptyset \) (see the comments in this section) ensure that Algorithm 1 terminates after performing O(|V|) iterations (steps 3–15). Let \(\mathrm {OPT_{LP}}\) denote the optimal objective function value of \(LP_{RRST}(E_X,V_X,E_Y,V_Y, E_Z,L)\), where \(E_{X}= E_{Z}= E_{Y}=E\), \(V_{X}=V_{Y}=V\), \(L=|V|-1-k\). Since this linear program is a relaxation of (3), \(\mathrm {OPT_{LP}}\) is a lower bound on the optimal objective value of RR ST. It is not difficult to show that after the termination of the algorithm X and Y are two spanning trees in G such that \(\sum _{e\in X} C_e + \sum _{e\in Y} (c_e+d_e)\le \mathrm {OPT_{LP}}\). It remains to show that \(|X\cap Y|\ge |V|-k-1\). By induction on the number of iterations of Algorithm 1 one can easily show that at any iteration the equality \(L+|Z|= |V|-1-k\) is satisfied. Accordingly, if, after the termination of the algorithm, \(L=0\) holds, then we are done. Suppose, on the contrary, that \(L\ge 1\) (L is integer). Since \(\sum _{e\in E_Z}z^{*}_e\ge L\), \(|E_Z|\ge L\ge 1\) and \(E_Z\) is the set of edges not belonging to \( X\cap Y\). Consider any \(e'\in E_Z\). Of course \(z^{*}_{e'}>0\) and it remained positive during the course of Algorithm 1. Moreover, at least one of the constraints \(-x_{e'} +z_{e'}\le 0\) or \(z_{e'} -y_{e'}\le 0\) is still present in the linear program (4)–(14). Otherwise, since \(z^{*}_{e'}>0\), steps 7–9, steps 10–12 and, in consequence, steps 13–15 for \(e'\) must have been executed during the course of Algorithm 1 and \(e'\) must have been included in Z, a contradiction with the fact \(e'\not \in X\cap Y\). Since the above constraints are present, \(0<x^{*}_{e'}<1\) or \(0<y^{*}_{e'}<1\). Thus \(e'\in E_X\) or \(e'\in E_Y\), which contradicts the termination of Algorithm 1. \(\square \)

3 Recoverable robust matroid basis problem

The minimum spanning tree problem can be generalized to the following minimum matroid basis problem. We are given a matroid \(M=(E,\mathcal {I})\) (see [15]), where E is a nonempty ground set, \(|E|=m\), and \(\mathcal {I}\) is a family of subsets of E, called independent sets. The following two axioms must be satisfied: (i) if \(A\subseteq B\) and \(B\in \mathcal {I}\), then \(A\in \mathcal {I}\); (ii) for all \(A,B\in \mathcal {I}\) if \(|A|< |B|\), then there is an element \(e \in B {\setminus } A\) such that \(A \cup \{e\} \in \mathcal {I}\). We make the assumption that checking the independence of a set \(A \subseteq E\) can be done in polynomial time. The rank function of M, \(r_M: 2^E\rightarrow \mathbb {Z}_{\ge 0}\), is defined by \(r_M(U)=\max _{W \subseteq U, W \in \mathcal {I}}|W|\). A basis of M is an inclusion-wise maximal element of \(\mathcal {I}\). The cardinality of each basis equals \(r_M(E)\). Let \(c_e\) be a cost specified for each element \(e\in E\). We wish to find a basis B of M of the minimum total cost \(\sum _{e\in B}c_e\). It is well known that the minimum matroid basis problem is polynomially solvable by a greedy algorithm (see, e.g. [16]). A spanning tree is a basis of a graphic matroid, in which E is the set of edges of a given graph G and \(\mathcal {I}\) is the set of all forests in G.
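
The greedy algorithm mentioned above only needs an independence oracle. The sketch below is one minimal way to write it, with a graphic matroid (forests of a graph) as the example oracle; the names `greedy_min_basis` and `forest_oracle` and the small instance are assumptions made for illustration.

```python
def greedy_min_basis(elements, cost, is_independent):
    """Scan elements by nondecreasing cost and keep those preserving independence."""
    basis = []
    for e in sorted(elements, key=lambda f: cost[f]):
        if is_independent(basis + [e]):
            basis.append(e)
    return basis


def forest_oracle(n, endpoints):
    """Independence oracle of the graphic matroid: a set of edges is independent
    if and only if it contains no cycle (checked with union-find)."""
    def is_independent(subset):
        parent = list(range(n))

        def find(v):
            while parent[v] != v:
                parent[v] = parent[parent[v]]
                v = parent[v]
            return v

        for e in subset:
            u, v = endpoints[e]
            ru, rv = find(u), find(v)
            if ru == rv:
                return False
            parent[ru] = rv
        return True

    return is_independent


# Hypothetical graph on 4 vertices with named edges.
endpoints = {"a": (0, 1), "b": (1, 2), "c": (0, 2), "d": (2, 3)}
cost = {"a": 4, "b": 1, "c": 2, "d": 3}
basis = greedy_min_basis(endpoints, cost, forest_oracle(4, endpoints))
print(sorted(basis), sum(cost[e] for e in basis))
```

For the graphic matroid this is Kruskal's algorithm; replacing `forest_oracle` by another oracle gives a minimum cost basis of the corresponding matroid.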

We now define two operations on matroid \(M=(E,\mathcal {I})\), used in the following (see also [15]). Let \(M\backslash e=(E_{M\backslash e},\mathcal {I}_{M \backslash e})\), the deletion of e from M, be the matroid obtained by deleting \(e\in E\) from M, defined by \(E_{M\backslash e}=E{\setminus }\{e\}\) and \(\mathcal {I}_{M\backslash e}=\{U\subseteq E{\setminus }\{e\}\,:\, U\in \mathcal {I}\}\). The rank function of \(M\backslash e\) is given by \(r_{M\backslash e}(U)=r_{M}(U)\) for all \(U\subseteq E{\setminus }\{e\}\). Let \(M/e=(E_{M/e},\mathcal {I}_{M/e})\), the contraction of e in M, be the matroid obtained by contracting \(e \in E\) in M, defined by \(E_{M/e}=E{\setminus }\{e\}\), and \(\mathcal {I}_{M/e}=\{U\subseteq E{\setminus }\{e\}\,:\, U\cup \{e\}\in \mathcal {I}\}\) if \(\{e\}\) is independent and \(\mathcal {I}_{M/e}=\mathcal {I}\) otherwise. The rank function of M / e is given by \(r_{M/e}(U)=r_{M}(U\cup \{e\})-r_{M}(\{e\})\) for all \(U\subseteq E{\setminus }\{e\}\).
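
Both operations can be expressed as wrappers around an independence oracle. The sketch below is an illustration under the assumption that a matroid is given as a ground list plus an oracle; the helpers `rank`, `delete`, `contract` and the uniform matroid used as an example are not part of the paper.

```python
def rank(subset, is_independent):
    """Rank of subset: in a matroid, greedy extension reaches a maximum independent subset."""
    chosen = []
    for f in subset:
        if is_independent(chosen + [f]):
            chosen.append(f)
    return len(chosen)


def delete(ground, is_independent, e):
    """M \\ e: drop e from the ground set; independence is unchanged on what remains."""
    return [f for f in ground if f != e], is_independent


def contract(ground, is_independent, e):
    """M / e: U is independent iff U ∪ {e} is independent in M (when {e} is independent)."""
    rest = [f for f in ground if f != e]
    if is_independent([e]):
        return rest, lambda subset: is_independent(list(subset) + [e])
    return rest, is_independent  # e is a loop, so contraction coincides with deletion


# Hypothetical example: the uniform matroid on {1, 2, 3, 4} whose independent
# sets are the subsets with at most two elements.
ground = [1, 2, 3, 4]


def uniform(subset):
    return len(set(subset)) <= 2


g_del, ind_del = delete(ground, uniform, 1)
g_con, ind_con = contract(ground, uniform, 1)
print(rank(g_del, ind_del), rank(g_con, ind_con))
# Standard identity for contraction: r_{M/e}(U) = r_M(U ∪ {e}) - r_M({e}).
print(rank([2], ind_con), rank([2, 1], uniform) - rank([1], uniform))
```

Deleting an element keeps the rank of this matroid at 2, whereas contracting an element lowers it to 1.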

Assume now that the first stage cost of element \(e\in E\) equals \(C_e\) and its second stage cost is uncertain and is modeled by interval \([c_e, c_e+d_e]\). The recoverable robust matroid basis problem (RR MB for short) under scenario set \(\mathcal {U}^m\) can be stated similarly to RR ST. Indeed, it suffices to replace the set of spanning trees by the bases of M and \(\mathcal {U}\) by \(\mathcal {U}^m\) in the formulation (1) and, in consequence, in (2). Here and subsequently, \(\Phi \) denotes the set of all bases of M. Likewise, RR MB under \(\mathcal {U}^m\) is equivalent to the following problem:

$$\begin{aligned} \min \;&\sum _{e\in X} C_e+\sum _{e\in Y}(c_e+d_e)\\ \text {s.t. }&|X\cap Y|\ge r_M(E)-k,\\&X,Y\in \Phi . \end{aligned} \tag{29}$$

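Let \(E_{X}, E_{Y}\subseteq E\) and \(\mathcal {I}_{X}\), \(\mathcal {I}_{Y}\) be collections of subsets of \(E_{X}\) and \(E_{Y}\), respectively, (independent sets), that induce matroids \({M}_X=(E_X, \mathcal {I}_X)\) and \({M}_Y=(E_Y, \mathcal {I}_Y)\). Let \(E_Z\) be a subset of E such that \(E_Z \subseteq E_X \cup E_Y\) and \(|E_Z|\ge L\) for some fixed L. The following linear program, denoted by \(LP_{RRMB}(E_{X},\mathcal {I}_{X},E_{Y},\mathcal {I}_{Y},E_{Z},L)\), after setting \(E_X=E_Y=E_Z=E\), \(\mathcal {I}_X=\mathcal {I}_Y=\mathcal {I}\) and \(L=r_{M}(E)-k\) is a relaxation of (29):

$$\begin{aligned} \min \;&\sum _{e\in E_X} C_e x_e+\sum _{e\in E_Y}(c_e+d_e) y_e&&&&(30)\\ \text {s.t. }&\sum _{e\in E_X} x_e=r_{M_X}(E_X),&&&&(31)\\&\sum _{e\in U} x_e\le r_{M_X}(U),&&\forall \, U\subset E_X,&&(32)\\&-x_e+z_e\le 0,&&\forall \, e\in E_X\cap E_Z,&&(33)\\&\sum _{e\in E_Z} z_e=L,&&&&(34)\\&z_e-y_e\le 0,&&\forall \, e\in E_Y\cap E_Z,&&(35)\\&\sum _{e\in E_Y} y_e=r_{M_Y}(E_Y),&&&&(36)\\&\sum _{e\in U} y_e\le r_{M_Y}(U),&&\forall \, U\subset E_Y,&&(37)\\&x_e\ge 0,&&\forall \, e\in E_X,&&(38)\\&z_e\ge 0,&&\forall \, e\in E_Z,&&(39)\\&y_e\ge 0,&&\forall \, e\in E_Y.&&(40) \end{aligned}$$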

The indicator variables \(x_e, y_e, z_e\in \{0,1\}\), \(e\in E\), describing the bases X, Y and their intersection \(X\cap Y\), respectively have been relaxed. Since there are no variables \(z_e\) in the objective function (30), we can use equality constraint (34), instead of the inequality one. The above linear program is solvable in polynomial time. The rank constraints (31), (32) and (36), (37) relate to matroids \({M}_X=(E_X, \mathcal {I}_X)\) and \({M}_Y=(E_Y, \mathcal {I}_Y)\), respectively, and a separation over these constraints can be carried out in polynomial time [17]. Obviously, a separation over (33)–(35) can be done in polynomial time as well.

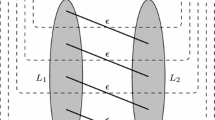

Consider a vertex solution \((\pmb {x},\pmb {z},\pmb {y})\in \mathbb {R}^{|E_X|\times |E_Z|\times |E_Y|}_{\ge 0}\) of \(LP_{RRMB}(E_{X},\mathcal {I}_{X},E_{Y},\mathcal {I}_{Y}, E_{Z},L)\). Note that if \(E_Z=\emptyset \), then only the rank constraints are left in (30)–(40). Consequently, \(\pmb {x}\) and \(\pmb {y}\) are 0-1 incidence vectors of bases X and Y of matroids \({M}_X\) and \({M}_Y\), respectively (see [16]). Let us turn to the other cases. Assume that \(E_X\not =\emptyset \), \(E_Y\not =\emptyset \) and \(E_Z\not =\emptyset \). As in Sect. 2, we first reduce the sets \(E_X\), \(E_Y\) and \(E_Z\) by removing all elements e with \(x_e=0\), or \(y_e=0\), or \(z_e=0\). Let \(\mathcal {F}(\pmb {x})=\{U\subseteq E_X\,:\, \sum _{e\in U}x_e =r_{M_X}(U)\}\) and \(\mathcal {F}(\pmb {y})=\{U\subseteq E_Y\,:\, \sum _{e\in U}y_e =r_{M_Y}(U)\}\) denote the sets of subsets of elements that indicate tight constraints (31), (32) and (36), (37) for \(\pmb {x}\) and \(\pmb {y}\), respectively. Similarly we define the sets of elements that indicate tight constraints (33) and (35) with respect to \((\pmb {x},\pmb {z},\pmb {y})\), namely \(\mathcal {E}(\pmb {x},\pmb {z})=\{e\in E_{X}\cap E_{Z}\,:\, -x_e+z_e = 0\}\) and \(\mathcal {E}(\pmb {z},\pmb {y})=\{e\in E_{Y}\cap E_{Z}\,:\, z_e -y_e = 0\}\). Let \(\chi _X(W)\), \(W\subseteq E_X\), (resp. \(\chi _Z(W)\), \(W\subseteq E_Z\), and \(\chi _Y(W)\), \(W\subseteq E_Y\)) denote the characteristic vector in \(\{0,1\}^{|E_X|}\times \{0\}^{|E_Z|}\times \{0\}^{|E_Y|}\) (resp. \(\{0\}^{|E_X|}\times \{0,1\}^{|E_Z|}\times \{0\}^{|E_Y|}\) and \(\{0\}^{|E_X|}\times \{0\}^{|E_Z|}\times \{0,1\}^{|E_Y|}\)) that has 1 if \(e\in W\) and 0 otherwise.

We recall that a family \(\mathcal {L} \subseteq 2^E\) is a chain if for any \(A, B \in \mathcal {L}\), either \(A \subseteq B\) or \(B \subseteq A\) (see, e.g., [13]). Let \(\mathcal {L}(\pmb {x})\) (resp. \(\mathcal {L}(\pmb {y})\)) be a maximal chain subfamily of \(\mathcal {F}(\pmb {x})\) (resp. \(\mathcal {F}(\pmb {y})\)). The following lemma is a fairly straightforward adaptation of [13, Lemma 5.2.3] to the problem under consideration and its proof may be handled in much the same way.
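The chain property and the notion of a maximal chain subfamily can be illustrated with a short Python sketch. The helper names `is_chain` and `maximal_chain` and the sample family are assumptions made for illustration; the greedy scan by increasing cardinality is one simple way to obtain an inclusion-wise maximal chain and is not claimed to be the construction used in [13].

```python
from itertools import combinations

def is_chain(family):
    """True if every two sets in `family` are comparable by inclusion."""
    sets = [frozenset(s) for s in family]
    return all(a <= b or b <= a for a, b in combinations(sets, 2))

def maximal_chain(family):
    """Greedily select a chain subfamily to which no further set can be added."""
    chain = []
    distinct = {frozenset(s) for s in family}
    # Scan by increasing size; a set rejected once stays incomparable with
    # some chain member, so the final chain is maximal within `family`.
    for s in sorted(distinct, key=lambda t: (len(t), sorted(t))):
        if all(s <= t or t <= s for t in chain):
            chain.append(s)
    return chain

# Hypothetical family of tight sets over the elements a, b, c.
family = [{"a"}, {"b"}, {"a", "b"}, {"a", "b", "c"}]
chain = maximal_chain(family)
print(is_chain(family), is_chain(chain), chain)
```

Here the full family is not a chain because \(\{a\}\) and \(\{b\}\) are incomparable, while the selected subfamily \(\{a\}\subseteq \{a,b\}\subseteq \{a,b,c\}\) is.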

Lemma 5

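For \(\mathcal {L}(\pmb {x})\) and \(\mathcal {L}(\pmb {y})\) the following equalities hold: \(\mathrm {span}(\{\chi _X(U)\,:\, U\in \mathcal {L}(\pmb {x})\})=\mathrm {span}(\{\chi _X(U)\,:\, U\in \mathcal {F}(\pmb {x})\})\) and \(\mathrm {span}(\{\chi _Y(U)\,:\, U\in \mathcal {L}(\pmb {y})\})=\mathrm {span}(\{\chi _Y(U)\,:\, U\in \mathcal {F}(\pmb {y})\})\).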

The next lemma, which characterizes a vertex solution, is analogous to Lemma 2. Its proof is based on Lemma 5 and is similar in spirit to the one of Lemma 2.

Lemma 6

Let \((\pmb {x},\pmb {z},\pmb {y})\) be a vertex solution of \(LP_{RRMB}(E_{X},\mathcal {I}_{X},E_{Y},\mathcal {I}_{Y},E_{Z},L)\) such that \(x_e>0\), \(e\in E_X\), \(y_e>0\), \(e\in E_Y\) and \(z_e>0\), \(e\in E_Z\). Then there exist chain families \(\mathcal {L}(\pmb {x})\not =\emptyset \) and \(\mathcal {L}(\pmb {y})\not =\emptyset \) and subsets \(E(\pmb {x},\pmb {z}) \subseteq \mathcal {E}(\pmb {x},\pmb {z})\) and \(E(\pmb {z},\pmb {y}) \subseteq \mathcal {E}(\pmb {z},\pmb {y})\) that satisfy the following:

(i) \(|E_X|+|E_Z|+|E_Y|=|\mathcal {L}(\pmb {x})|+|E(\pmb {x},\pmb {z})|+|E(\pmb {z},\pmb {y})| +|\mathcal {L}(\pmb {y})|+1\),

(ii) the vectors in \(\{ \chi _X(E_X(U))\,:\, U\in \mathcal {L}(\pmb {x})\}\cup \{ \chi _Y(E_Y(U))\,:\, U\in \mathcal {L}(\pmb {y})\} \cup \{ -\chi _X(\{e\})+ \chi _Z(\{e\}) \,:\, e\in E(\pmb {x},\pmb {z})\} \cup \{ \chi _Z(\{e\})- \chi _Y(\{e\}) \,:\, e\in E(\pmb {z},\pmb {y})\} \cup \{\chi _Z(E_Z)\}\) are linearly independent.
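Conditions (i) and (ii) can be checked mechanically on small instances. The Python sketch below assembles the vectors listed in (ii), reading each chain member U directly as a subset of the ground set, and computes their rank with exact rational arithmetic. The ground sets, the two candidate selections and all function names are hypothetical; the sketch only tests the two conditions and does not verify that the selections arise from an actual vertex solution.

```python
from fractions import Fraction

def matrix_rank(rows):
    """Rank of a list of equal-length rational vectors (Gaussian elimination)."""
    m = [[Fraction(v) for v in row] for row in rows]
    rank = 0
    ncols = len(m[0]) if m else 0
    for col in range(ncols):
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, len(m)):
            factor = m[r][col] / m[rank][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[rank])]
        rank += 1
    return rank

# Hypothetical ground sets after the reduction step (all entries positive).
E_X = E_Z = E_Y = ["a", "b"]

def chi(block, subset):
    """Characteristic vector of `subset` in block 'X', 'Z' or 'Y' (order X, Z, Y)."""
    vec = []
    for name, ground in (("X", E_X), ("Z", E_Z), ("Y", E_Y)):
        vec += [1 if name == block and e in subset else 0 for e in ground]
    return vec

def diff(u, v):
    return [p - q for p, q in zip(u, v)]

def system(chain_x, chain_y, e_xz, e_zy):
    """Vectors of condition (ii) for the given chains and element subsets."""
    rows = [chi("X", U) for U in chain_x]
    rows += [chi("Y", U) for U in chain_y]
    rows += [diff(chi("Z", {e}), chi("X", {e})) for e in e_xz]  # -chi_X + chi_Z
    rows += [diff(chi("Z", {e}), chi("Y", {e})) for e in e_zy]  # chi_Z - chi_Y
    rows.append(chi("Z", set(E_Z)))                             # chi_Z(E_Z)
    return rows

n_vars = len(E_X) + len(E_Z) + len(E_Y)
# Selection 1 meets the count in (i) but its vectors are dependent.
bad = system([{"a", "b"}], [{"a", "b"}], ["a", "b"], ["a"])
# Selection 2 meets the count in (i) and its vectors are independent.
good = system([{"a"}, {"a", "b"}], [{"a", "b"}], ["a"], ["b"])
print(len(bad) == n_vars, matrix_rank(bad) == n_vars)
print(len(good) == n_vars, matrix_rank(good) == n_vars)
```

In the first selection, \(\chi _X(E_X)\) plus the two vectors \(-\chi _X(\{e\})+\chi _Z(\{e\})\) sums to \(\chi _Z(E_Z)\), so six vectors span only a five-dimensional space; this shows why the counting condition (i) alone is not sufficient.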

Lemmas 5 and 6 now lead to the next two lemmas, whose proofs run as the proofs of Lemmas 3 and 4.

Lemma 7

Let \((\pmb {x},\pmb {z},\pmb {y})\) be a vertex solution of \(LP_{RRMB}(E_{X},\mathcal {I}_{X},E_{Y},\mathcal {I}_{Y},E_{Z},L)\) such that \(x_e>0\), \(e\in E_X\), \(y_e>0\), \(e\in E_Y\) and \(z_e>0\), \(e\in E_Z\). Then there is an element \(e'\in E_X\) with \(x_{e'}=1\) or an element \(e''\in E_Y\) with \(y_{e''}=1\).

We now turn to the two remaining cases: \(E_X=\emptyset \) and \(E_Y=\emptyset \). Consider \(E_X=\emptyset \); the second case is symmetric. Observe that (34) and (35) together with \(E_{Z}\subseteq E_{Y}\) imply constraint (26).

Lemma 8

Let \(\pmb {y}\) be a vertex solution of the linear program (30), (36), (37), (40) and (26) such that \(y_e>0\), \(e\in E_Y\). Then there is an element \(e'\in E_Y\) with \(y_{e'}=1\). Moreover, using \(\pmb {y}\) one can construct a vertex solution of \(LP_{RRMB}(\emptyset ,\emptyset ,E_{Y},\mathcal {I}_{Y},E_{Z},L)\) with \(y_{e'}=1\) and the same cost as \(\pmb {y}\).

We are thus led to the main result of this section. Its proof follows by the same arguments as for RR ST.

Theorem 2

Algorithm 2 solves RR MB in polynomial time.

4 Conclusions

In this paper we have shown that the recoverable robust version of the minimum spanning tree problem with interval edge costs is polynomially solvable. We have thus resolved a problem which has been open to date. We have applied a technique called iterative relaxation. It has turned out that the algorithm proposed for the minimum spanning tree can be easily generalized to the recoverable robust version of the matroid basis problem with interval element costs. Our polynomial time algorithm is based on solving linear programs. The next step should therefore be to design a polynomial time combinatorial algorithm for this problem, which is an interesting subject of further research.

References

1. Ahuja, R.K., Magnanti, T.L., Orlin, J.B.: Network flows: theory, algorithms, and applications. Prentice Hall, Englewood Cliffs, New Jersey (1993)

2. Büsing, C.: Recoverable robustness in combinatorial optimization. Ph.D. thesis, Technical University of Berlin, Berlin (2011)

3. Büsing, C.: Recoverable robust shortest path problems. Networks 59, 181–189 (2012)

4. Büsing, C., Koster, A.M.C.A., Kutschka, M.: Recoverable robust knapsacks: the discrete scenario case. Optim. Lett. 5, 379–392 (2011)

5. Chassein, A., Goerigk, M.: On the recoverable robust traveling salesman problem. Optim. Lett. (2015). doi:10.1007/s11590-015-0949-5

6. Liebchen, C., Lübbecke, M.E., Möhring, R.H., Stiller, S.: The concept of recoverable robustness, linear programming recovery, and railway applications. In: Robust and Online Large-Scale Optimization, vol. 5868 of Lecture Notes in Computer Science, pp. 1–27. Springer, New York (2009)

7. Nasrabadi, E., Orlin, J.B.: Robust optimization with incremental recourse. CoRR (2013). arXiv:1312.4075

8. Kasperski, A., Zieliński, P.: On the approximability of robust spanning problems. Theor. Comput. Sci. 412, 365–374 (2011)

9. Kouvelis, P., Yu, G.: Robust discrete optimization and its applications. Kluwer Academic Publishers, Berlin (1997)

10. Kasperski, A., Kurpisz, A., Zieliński, P.: Recoverable robust combinatorial optimization problems. Oper. Res. Proc. 2012, 147–153 (2014)

11. Bertsimas, D., Sim, M.: Robust discrete optimization and network flows. Math. Program. 98, 49–71 (2003)

12. Şeref, O., Ahuja, R.K., Orlin, J.B.: Incremental network optimization: theory and algorithms. Oper. Res. 57, 586–594 (2009)

13. Lau, L.C., Ravi, R., Singh, M.: Iterative methods in combinatorial optimization. Cambridge University Press, Cambridge (2011)

14. Magnanti, T.L., Wolsey, L.A.: Optimal trees. In: Ball, M.O., Magnanti, T.L., Monma, C.L., Nemhauser, G.L. (eds.) Network Models, Handbook in Operations Research and Management Science, vol. 7, pp. 503–615. North-Holland, Amsterdam (1995)

15. Oxley, J.G.: Matroid theory. Oxford University Press, Oxford (1992)

16. Edmonds, J.: Matroids and the greedy algorithm. Math. Program. 1, 127–136 (1971)

17. Cunningham, W.H.: Testing membership in matroid polyhedra. J. Comb. Theory Ser. B 36, 161–188 (1984)

Acknowledgments

Mikita Hradovich was supported by Wrocław University of Technology, Grant S50129/K1102. Adam Kasperski and Pawel Zieliński were supported by the National Center for Science (Narodowe Centrum Nauki), Grant 2013/09/B/ST6/01525.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hradovich, M., Kasperski, A. & Zieliński, P. The recoverable robust spanning tree problem with interval costs is polynomially solvable. Optim Lett 11, 17–30 (2017). https://doi.org/10.1007/s11590-016-1057-x

DOI: https://doi.org/10.1007/s11590-016-1057-x