Abstract

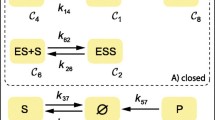

In living organisms, cascades of covalent modification cycles are one of the major intracellular signaling mechanisms, transducing physical or chemical stimuli from the external world into variations in the levels of activated biochemical species within the cell. In this paper, we develop a novel method to study the stimulus–response of signaling cascades and, more generally, the concept of pathway activation profile, which is, for a given stimulus, the sequence of activated proteins at each tier of the cascade. Our approach is based on a correspondence that we establish between the stationary states of a cascade and pieces of orbits of a 2D discrete dynamical system. Studying the possible phase portraits of this discrete map as functions of the biochemical parameters, in particular the contraction/expansion properties around its fixed points and their bifurcations, yields a classification of the cascade tiers into three main types, whose biological impact within a signaling network is examined. In particular, our approach enables us to discuss quantitatively the notion of cascade amplification/attenuation from this new perspective. The method also allows us to study the interplay between forward and “retroactive” signaling, i.e., the upstream influence of an inhibiting drug bound to the last tier of the cascade.



Acknowledgements

We thank Alejandra Ventura for fruitful discussions. SC benefits from a Ph.D. grant funded by the French national agency CNRS and by the program “Emploi Jeunes Doctorants” of the Région Provence-Alpes-Côte d’Azur (PACA).


Appendix: Proofs


1.1 Proof of Theorem 1 (Backward Map of the Activation Profiles)

In particular, we work out Eq. (4c) so as to express it through a single variable of tier i, namely \(Y_i^1\). We substitute, in this order, \(Y_i^0 = \frac{K_{i}^0}{Y_{i-1}^1}\,C_i^0\), \(C_i^0 = \frac{k_i^1}{k_i^0}\,C_i^1\), and \(C_i^1 = E_{iT} \frac{Y_i^1}{K_{i}^1+Y_i^1}\). Then, we divide by the total protein \(Y_{iT}\) to get

By setting \(x_i=Y_i^1/Y_{iT}\) and using definitions (7), it follows

that is,

or equivalently,

Furthermore, for \(i=1\), we rewrite \(Y_i^0 = \frac{K_{i}^0}{Y_{i-1}^1}\,C_i^0\) by using the conservation law \(Y_0^1 = Y_{0T} - C_1^0\) from (3a), then replacing \(C_1^0 = \dfrac{k_1^1}{k_1^0} C_1^1\), and \(C_1^1 = \frac{E_{1T} Y_1^1}{Y_1^1+K_1^1}\).

We normalize by the total concentration of the first protein and use definitions (7) to finally obtain

where we have set

Thus, for \(i=1\), Eq. (26) can be reformulated as

from which it follows (8).

Furthermore, we remark that by definition \(s=1/e_1\) (cf. Eqs. (27) and (7)). Then, multiplying by \(e_1\) both sides of (8) and recalling that \(x_0 = f_1(x_1,x_2)\), we obtain relation (10). \(\square \)
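Theorem 1 turns a stationary cascade into an orbit of the 2D backward map \({\mathbf {z}}_{i-1} = {\mathbf {F}}_i({\mathbf {z}}_i)\), which can be iterated numerically from \({\mathbf {z}}_n=(x_n,0)^T\). The sketch below is illustrative only: it assumes that \(f_i(x,y)=b_i e_i x/\big[(x+a_i)(1-x-e_{i+1}y/(y+a_{i+1}))-c_i x\big]\), a form read off from the fixed-point equation quoted in Appendix 1.9 rather than copied from Eq. (6); it uses the generic \(f_1\) (not \(\check{f}_1\)) for the first tier, and the names `f` and `backward_profile` are hypothetical.

```python
def f(x, y, a, b, c, e, a_next, e_next):
    """Assumed backward map x_{i-1} = f_i(x_i, x_{i+1}).

    Form read off from the fixed-point equation of Appendix 1.9 (hypothetical
    helper, not a verbatim transcription of Eq. (6)).
    """
    sequestration = e_next * y / (y + a_next)  # term due to the downstream tier
    denom = (x + a) * (1.0 - x - sequestration) - c * x
    return b * e * x / denom


def backward_profile(x_n, tiers):
    """Iterate z_{i-1} = (f_i(x_i, y_i), x_i) starting from z_n = (x_n, 0).

    tiers[i - 1] = (a_i, b_i, c_i, e_i, a_{i+1}, e_{i+1}) for i = 1..n.
    Returns the activation profile [x_0, x_1, ..., x_n].
    """
    n = len(tiers)
    profile = [0.0] * (n + 1)
    profile[n] = x_n
    x, y = x_n, 0.0  # no tier n+1 downstream, hence x_{n+1} = 0
    for i in range(n, 0, -1):
        x, y = f(x, y, *tiers[i - 1]), x
        profile[i - 1] = x
    return profile


# Illustrative parameter values for three identical tiers.
tier = (0.5, 0.2, 0.1, 0.2, 0.5, 0.2)
profile = backward_profile(0.3, [tier, tier, tier])
```

An orbit is biologically admissible only if every \(x_i\) stays in [0, 1] and every denominator stays positive, which constrains the values of \(x_n\) that can be used as a starting point.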

For the case of kinase inhibition, function \(f_n\) is slightly different, as claimed in Remark 3. Indeed, the conservation Eq. (3b) contains \(C_D\) in place of \(C_{n+1}^0\), where \(C_D = \frac{D_T Y_{n}^1}{K_{D} + Y_n^1}\) (as derived in Sect. 2.1). Hence, the term \(\frac{e_{i+1} x_{i+1}}{a_{i+1}+x_{i+1}}\) in (26) is replaced by \(\frac{d_T x_n}{x_n+a_d}\), where \(d_T=\frac{D_T}{Y_{nT}}\) and \(a_d = \frac{K_D}{Y_{nT}}\). As a result, we obtain

The right-hand side of this latter equality will be denoted by \(\hat{f}_n(x_n,d_T)\).

Notably, \(\hat{f}_n(x_n,d_T)\) reduces to \(f_n(x_n,x_{n+1}=0)\) when \(d_T=0\).
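The substitution above can be checked numerically: replacing the downstream-sequestration term by the drug-binding term \(d_T x_n/(x_n+a_d)\) gives \(\hat{f}_n\), which coincides with \(f_n(x_n,0)\) when \(d_T=0\). This is a minimal sketch that again assumes the form of \(f_i\) read off from the fixed-point equation of Appendix 1.9; the function names and parameter values are hypothetical.

```python
def f_n(x, y, a, b, c, e, a_next, e_next):
    """Assumed f_n(x_n, x_{n+1}) (form read off from Appendix 1.9)."""
    denom = (x + a) * (1.0 - x - e_next * y / (y + a_next)) - c * x
    return b * e * x / denom


def f_hat_n(x, d_T, a, b, c, e, a_d):
    """Last-tier map with an inhibitor bound to tier n.

    The sequestration term e_{n+1} x_{n+1} / (a_{n+1} + x_{n+1}) is replaced
    by d_T x_n / (x_n + a_d), with d_T = D_T / Y_nT and a_d = K_D / Y_nT.
    """
    denom = (x + a) * (1.0 - x - d_T * x / (x + a_d)) - c * x
    return b * e * x / denom
```

With \(d_T>0\) the denominator shrinks, so (as long as it stays positive) a larger upstream activity \(x_{n-1}\) is needed to sustain the same \(x_n\).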

Lemma 1

For a given \(1\le i\le n\), the fixed point \(x^+\) of function \(f_i\) is such that \( x^+<1\).

Proof

We recall that the fixed point of the map \(f_i\) [from Eq. (13)] is given by

We only need to verify that it is smaller than 1, namely that

The latter inequality reduces to

which always holds, since b, c and e are strictly positive by definition (7). The only exception is \(e_1\), which is merely non-negative: \(e_1=1/s\) is defined for any \(s>0\) and tends to zero only in the limit of infinite stimulus. \(\square \)
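For readability, the algebra behind Lemma 1 can be spelled out in the homogeneous notation a, b, c, e used in the proof of Proposition 1 (i.e., assuming \(a_{i+1}=a_i\) and \(e_{i+1}=e_i\), and taking the denominator of \(f_i\) from the fixed-point equation of Appendix 1.9). This is a reconstruction from the quantities \(\varDelta\), \(q\) and \(p\) defined there, not a copy of the omitted displays.

```latex
x = f(x,x) \iff x\Big[(x+a)(1-x) - e\,x - c\,x - b\,e\Big] = 0
           \iff x\Big[-x^2 + (1-a-c-e)\,x + (a - b\,e)\Big] = 0,

x^{\pm} = \frac{(1-a-c-e) \pm \sqrt{\varDelta}}{2}, \qquad
\varDelta = (1-a-c-e)^2 + 4\,(a - b\,e),

x^{+} < 1 \iff \sqrt{\varDelta} < 1 + a + c + e
          \iff (1-a-c-e)^2 + 4\,(a - b\,e) < (1+a+c+e)^2
          \iff 0 < b\,e + c + e .
```

Squaring is legitimate because both sides are non-negative, and the last step uses \((1+u)^2-(1-u)^2=4u\) with \(u=a+c+e\). The same quadratic gives \(q=x^++x^-=1-a-c-e\) and \(p=x^+x^-=b\,e-a\).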

1.2 Proof of Proposition 1 (Fixed Points)

We solve the fixed-point equation

with \(f_i\) defined in (6), for any \(1\le i \le n\), and we have

which always admits

as a solution, as well as two other roots (possibly complex)

Moreover, we observe that

We set \(q = x^+ + x^-\), \(p = x^+ \, x^-\), and \(\varDelta = (1-a-c-e)^2 + 4 (a-b e)\).

For case (C1), we require that \(x^+\) and \(x^-\) are either complex conjugates (namely \(\varDelta <0\)) or non-positive (i.e., their sum has to be \(q\le 0\) and their product \(p \ge 0\)).

For case (C2), a negative product p implies that \(x^+\) and \(x^-\) are real (\(\varDelta >0\)), with \(x^+>0\) and \(x^-<0\). Moreover, in Lemma 1 we already proved that \(x^+<1\), for any \(1\le i\le n\).

For case (C3), we ask \(x^+\) and \(x^-\) to be real (\(\varDelta >0\)) and both strictly positive; thus, their sum and product have to be strictly positive. It is trivial to verify that \(x^-<x^+\).
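The sign rules of cases (C1) to (C3) translate directly into a small classifier. The Python sketch below (hypothetical helper names, homogeneous parameters a, b, c, e) uses \(q=1-a-c-e\), \(p=b\,e-a\) and \(\varDelta=q^2-4p\), which equals \((1-a-c-e)^2+4(a-be)\), and also returns the eigenvalue \(\lambda^+=b\,e/a\) of the trivial fixed point discussed below.

```python
import math


def fixed_points(a, b, c, e):
    """Nonzero fixed points (x-, x+) of the homogeneous map, or None if complex."""
    q = 1.0 - a - c - e          # sum x+ + x-
    p = b * e - a                # product x+ * x-
    delta = q * q - 4.0 * p      # equals (1 - a - c - e)^2 + 4 (a - b e)
    if delta < 0:
        return None
    r = math.sqrt(delta)
    return (q - r) / 2.0, (q + r) / 2.0


def classify_tier(a, b, c, e):
    """Tier type from the sign rules used in the proof of Proposition 1."""
    q = 1.0 - a - c - e
    p = b * e - a
    delta = q * q - 4.0 * p
    if p < 0:
        return "C2"        # real roots of opposite sign: x- < 0 < x+ < 1
    if delta < 0 or (q <= 0 and p >= 0):
        return "C1"        # no positive fixed point besides 0
    if delta > 0 and q > 0 and p > 0:
        return "C3"        # 0 < x- < x+ < 1
    return "boundary"      # delta == 0, or p == 0 with q > 0 (Proposition 4)


def lambda_plus_at_origin(a, b, e):
    """Eigenvalue lambda+ = b e / a of the trivial fixed point (0, 0)."""
    return b * e / a
```

For example, \((a,b,c,e)=(0.1,0.2,0.1,0.2)\) is of type (C2) and \((0.01,0.2,0.1,0.1)\) of type (C3); these values are illustrative only.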

We recall that \(\lambda ^\pm = \frac{f_x \pm \sqrt{f_x^2 + 4f_y}}{2}\) (derived in the following proof). In particular, the partial derivatives \(f_x\) and \(f_y\) given in (39), evaluated at (0,0), give \(f_x = \frac{b\,e}{a}\) and \(f_y = 0\), hence \(\lambda ^+ = f_x=\frac{b\,e}{a}\) and \(\lambda ^- = 0\).

This eigenvalue \(\lambda ^+\) of (0,0) is \(>1\) in cases (C1) and (C3), since \(p=b e - a>0\), and \(<1\) in case (C2), since \(p<0\).

The existence of the fixed points in the unit square \(I^2=[0,1]\times [0,1]\) depends on the intersection of the invariant curve \({\mathcal {C}}\) (derived in Proposition 5) with the bisectrix of the first quadrant. On the one hand, the number of intersections determines the shape of \({\mathcal {C}}\) (i.e., hyperbolic or sigmoidal, according to the initial curvature); on the other hand, the directions \(\mathbf {v}^+\) represent the tangent to \({\mathcal {C}}\) in the fixed points.

Then, if we are in case (C2), the invariant curve must be hyperbolic, and \({\mathbf {v}^+ = (\lambda ^+,1)^T}\) is such that \(\lambda ^+>1\).

For case (C3), curve \({\mathcal {C}}\) must be sigmoidal to intersect the bisectrix three times, and a similar reasoning reconciles the number of fixed points with the slope of \({\mathcal {C}}\). As a result, the \(\lambda ^+\) associated with \(x^-\) must be \(<1\) and the \(\lambda ^+\) associated with \(x^+\) must be \(>1\).

As a consequence, this determines the stability of the fixed points in all scenarios (C1)–(C2)–(C3).

The contracting property of eigenvalue \(\lambda ^-\) associated with the fixed points is derived in the following proof (point 2). \(\square \)

1.3 Proof of Proposition 2 (Properties of the Eigenvalues)

The eigenvalues and eigenvectors of the Jacobian matrix \({\mathbf {J}}_i\) of the map \({\mathbf {F}}_i\) are obtained from the following equation:

This implies that the determinant of \({\mathbf {J}}_i - \lambda _i {\mathbf {I}}\) must vanish. Matrix \({\mathbf {J}}_i\) is derived in the Proof of Theorem 2 (below), and \({\mathbf {I}}\) is the 2\(\times \)2 identity matrix. By setting \(f_x =\frac{\partial f_i}{\partial x_i}\) and \(f_y =\frac{\partial f_i}{\partial y_i}\), we solve

which gives us the solutions

Moreover, solving equation \({\mathbf {J}}_i \mathbf {v}_i = \lambda _i \mathbf {v}_i\) one finds \(\mathbf {v}_i = (\lambda _i,1)^T\).

1. From the definitions of \(\lambda _i^+\) and \(\lambda _i^-\), it is immediate that \(f_x = \lambda _i^+ + \lambda _i^-\) and \(f_y = -\lambda _i^+ \lambda _i^-\), i.e., \(f_x\) is their sum and \(f_y\) is minus their product.

2. Since \(\lambda ^+_i = \frac{f_x+\sqrt{f_x^2 + 4f_y}}{2}\) and \(f_x>0\) and \(f_y\ge 0\) for any point (x, y) (shown in the Proof of Proposition 3), \(\lambda ^+_i>0\). It follows that \(\lambda ^-_i = - f_y / \lambda ^+_i\) is non-positive.

Moreover, by using its definition, we still have to verify that \(\frac{f_x-\sqrt{f_x^2 + 4f_y}}{2} > -1\), namely \(f_x+1>f_y\). This latter can be expressed as a function of the eigenvalues as \(\lambda ^+_i +\lambda ^-_i +1> - \lambda ^+_i \lambda ^-_i\), that is \((1+\lambda ^+_i)(1+\lambda ^-_i) >0\). As \(\lambda ^+_i>0\), the product is positive if and only if \(1+\lambda ^-_i>0\). Hence, we have \(-1<\lambda ^-_i<0\).

This result is valid for any \(i=1,\ldots ,n\), based on the positiveness of the partial derivatives \(f_x\) and \(f_y\).

3. Finally, \(f_x= \lambda _i^+ + \lambda _i^- >0\) implies \(\lambda _i^+ > -\lambda _i^- = |\lambda _i^-|\). \(\square \)
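The eigen-structure of Proposition 2 is easy to check numerically. The sketch below (hypothetical helper names) computes \(\lambda^+\) from the closed form and \(\lambda^-\) as \(-f_y/\lambda^+\), the product relation of point 2, which avoids the cancellation in \(f_x-\sqrt{f_x^2+4f_y}\) when \(f_y\) is small. It assumes \(f_x>0\) and \(f_y\ge 0\), so that \(\lambda^+>0\).

```python
import math


def eigenpairs(f_x, f_y):
    """Eigenvalues of J = [[f_x, f_y], [1, 0]] with eigenvectors (lambda, 1)."""
    lam_p = 0.5 * (f_x + math.sqrt(f_x * f_x + 4.0 * f_y))
    # lambda- = -f_y / lambda+ (product rule), numerically stable for small f_y
    lam_m = -f_y / lam_p
    return (lam_p, (lam_p, 1.0)), (lam_m, (lam_m, 1.0))


def apply_J(f_x, f_y, v):
    """Matrix-vector product J v for J = [[f_x, f_y], [1, 0]]."""
    return (f_x * v[0] + f_y * v[1], v[0])
```

For \(f_x=1.5\) and \(f_y=0.4\) (illustrative values satisfying \(f_x+1>f_y\)), this gives \(\lambda^+\approx 1.731\) and \(\lambda^-\approx -0.231\), consistent with points 1 to 3.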

1.4 Proof of Proposition 3 (Lower Bound of \(\chi _n\))

We demonstrate here that, for homogeneous cascades of arbitrary length n, the value of the maximum response \(\chi _n\) for very large stimulus s is lower bounded by the strictly positive fixed point \(x^*\) satisfying the equation \(x^* = f(x^*,x^*)\), whenever it exists, with f being defined in (6).

We work in the limit \(s\rightarrow +\infty \), so that \(\chi _i=\lim _{s\rightarrow +\infty } x_i(s)\) for all \(0\le i \le n\), and \(\chi _0 =1\) (from (10)). We first rewrite system (6) as

Let us now suppose the claim is false, that is \(\chi _n < x^*\).

By considering the partial derivatives of f(x, y), one can prove that f is increasing in y for all x, and increasing in x for \(y=0\) or \(y=x^*\).

In fact, from (6) one calculates

which is always positive (except in (0,0) where it is null), and

which is positive if and only if \(a (1- e y/(y+a)) + x^2 > 0\).

For \(y=0\), the proof is immediate. For \(y=x^*\), we consider the fixed-point equation

which implies that the denominator must be positive. Thus, in particular \(1-x^*-e x^*/(x^*+a) > 0\) is sufficient for the positiveness of \(a (1- e y/(y+a)) + x^2\) and thus of \(\frac{\partial f}{\partial x}(x,x^*)\).

Hence, since \(\chi _{n-1} = f(\chi _n,0)\), we obtain \(f(\chi _n,0)< f(\chi _n,x^*) < f(x^*,x^*) = x^*\), namely \(\chi _{n-1} < x^*\). Then, \(\chi _{n-2} = f(\chi _{n-1},\chi _n)< f(\chi _{n-1},x^*) < f(x^*,x^*) = x^*\), i.e., \(\chi _{n-2} < x^*\). Iterating this argument, we eventually obtain \({1=\chi _0 < x^*}\). However, Lemma 1 proves that \(x^* < 1\), a contradiction. Therefore, \(x^* \le \chi _n\). \(\square \)
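The monotonicity facts used above can be cross-checked numerically. Under the same assumed homogeneous form of f (read off from the fixed-point equation of Appendix 1.9, not copied from Eq. (6)), the quotient rule gives \(\partial f/\partial x = b e\,[a(1-ey/(y+a))+x^2]/D^2\) and \(\partial f/\partial y = b\,e^2 a\,x(x+a)/[(y+a)^2 D^2]\), with \(D=(x+a)(1-x-ey/(y+a))-cx\). The sketch compares these closed forms with central finite differences; the function names are hypothetical.

```python
def f(x, y, a, b, c, e):
    """Assumed homogeneous backward map f(x, y) = b e x / D(x, y)."""
    denom = (x + a) * (1.0 - x - e * y / (y + a)) - c * x
    return b * e * x / denom


def df_dx(x, y, a, b, c, e):
    """Closed form b e [a (1 - e y/(y+a)) + x^2] / D^2."""
    denom = (x + a) * (1.0 - x - e * y / (y + a)) - c * x
    return b * e * (a * (1.0 - e * y / (y + a)) + x * x) / denom ** 2


def df_dy(x, y, a, b, c, e):
    """Closed form b e^2 a x (x + a) / ((y + a)^2 D^2)."""
    denom = (x + a) * (1.0 - x - e * y / (y + a)) - c * x
    return b * e * x * (x + a) * e * a / ((y + a) ** 2 * denom ** 2)
```

The numerator of \(\partial f/\partial x\) is exactly the quantity \(a(1-ey/(y+a))+x^2\) whose sign is discussed above, while the factor \(D^2\) only requires \(D\neq 0\).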

1.5 Proof of Proposition 4 (Bifurcations)

(I) A dynamical system typically undergoes a saddle-node bifurcation when new fixed points appear in the phase portrait under a small parameter variation. In our case, this happens when \(x^+\) and \(x^-\) pass from \(\mathbb {C}\) to \(\mathbb {R}\), namely when the discriminant \(\varDelta = (1-a-c-e)^2 + 4(a-be)\) changes sign from negative to positive. In addition, we pass from (C1) to (C3) if both \(x^+\) and \(x^-\) are strictly positive, which is guaranteed if their sum satisfies \((1-a-c-e)>0\).

(II) A transcritical bifurcation occurs when fixed points exchange stability as a parameter passes through a bifurcation value. In our case, when \(x^+\) and \(x^-\) are real, a parameter variation causes an exchange of stability between 0 and one of the other fixed points (which are ordered, \(x^- < x^+\)). This happens when either \(x^-\) or \(x^+\) changes sign, i.e., passes through the fixed point 0. The bifurcation point is thus given by either \(x^+=0\) or \(x^-=0\), which translates into a condition on the product of \(x^+\) and \(x^-\), namely \((b e - a)=0\). When the product is tuned from negative to positive, we pass from case (C2) to case (C1) or (C3), depending on which fixed point passes through the bifurcation: (C1) results from \(x^+\) becoming negative, and (C3) from \(x^-\) becoming positive. These two situations correspond to the conditions \((1-a-c-e)<0\) and \((1-a-c-e)>0\), respectively, on the sum of \(x^+\) and \(x^-\).

If the sum is null, i.e., \( x^+ = - x^-\), then the product \((b e - a)\) will be negative and no bifurcation will appear. \(\square \)
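Proposition 4 can also be read as thresholds on a single parameter. Holding a, c and e fixed and varying b (an illustrative choice), the transcritical bifurcation sits at \(b=a/e\), where \(p=be-a\) vanishes, and, when \(q=1-a-c-e>0\), the saddle-node bifurcation sits at \(b=(q^2+4a)/(4e)\), where \(\varDelta=0\). The hypothetical helpers below compute these values and the resulting tier type; exact threshold values are lumped with the adjacent regime.

```python
def critical_b(a, c, e):
    """Bifurcation values of b for a homogeneous tier with a, c, e fixed.

    Transcritical: p = b e - a = 0.  Saddle-node: Delta = q^2 + 4 (a - b e) = 0,
    relevant only when q = 1 - a - c - e > 0 (otherwise returned as None).
    """
    q = 1.0 - a - c - e
    b_tc = a / e
    b_sn = (q * q + 4.0 * a) / (4.0 * e) if q > 0 else None
    return b_tc, b_sn


def tier_type_along_b(a, b, c, e):
    """Tier type obtained by locating b relative to the bifurcation values."""
    b_tc, b_sn = critical_b(a, c, e)
    if b < b_tc:
        return "C2"
    if b_sn is not None and b < b_sn:
        return "C3"
    return "C1"
```

For \(q>0\), increasing b therefore takes a tier from (C2) through (C3) to (C1); for \(q\le 0\) it passes directly from (C2) to (C1).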

1.6 Proof of Theorem 2 (Response Derivatives)

By using Theorem 1 we can associate a signaling cascade to a two-variable dynamical system defined by the backward map:

or, more compactly, we can write

where \({\mathbf {z}}_i = \begin{pmatrix} x_i \\ y_i \end{pmatrix} \) and \({\mathbf {F}}_i = \begin{pmatrix} f_i \\ g_i \end{pmatrix}\), with \(f_i(x_i,y_i)\) as defined in (6) and \({g_i(x_i,y_i)=x_i}\).

Here, for \(i=1\), we may use \(\check{f}_1\) instead of \(f_1\), i.e., \(s=\check{f}_1(x_1,x_2)\) instead of \(x_0=f_1(x_1,x_2)\). By iterative application, we can obtain \({\mathbf {z}}_0\) as a function of \({\mathbf {z}}_n\), i.e., \( {\mathbf {z}}_0 = {\mathbf {F}}_1\circ {\mathbf {F}}_2 \circ \ldots \circ {\mathbf {F}}_n ({\mathbf {z}}_n)\,, \) and in particular calculate its first derivative with respect to variable \(x_n\), \({\mathbf {z}}'_i = \dfrac{d {\mathbf {z}}_i}{d x_n}\), according to the chain rule:

where

is the Jacobian matrix associated with the general dynamical system (28), with \(\lambda _i^\pm \) the eigenvalues of \({\mathbf {J}}_i\) defined in (15).

Hence, in (29) we have \({\mathbf {z}}'_0 = ( s', x'_1)^T \) and \({\mathbf {z}}'_n = (1, 0)^T \), so the first component of \({\mathbf {z}}'_0\), denoted \(s'(x_n) = \frac{d s}{d x_n}\), is given by

Multiplying by a row vector on the left and a column vector on the right amounts to selecting the (1,1) element of the product of the Jacobians, which gives \(s'(x_n)\) as a function of the \(\lambda _i^+\)’s and \(\lambda _i^-\)’s.

For arbitrary \(n\ge 2\), the linear development in the \(\lambda _i^-\)’s can be formulated as follows:

Given that \(x'_n(s)=1/s'(x_n)\), we obtain

which is equivalent to (19). It is interesting to distinguish the first term in \(\check{\lambda }_1\) because it is the only one which remains if the \(\lambda _i^-\)’s are equal. Furthermore,

if the other terms are negligible, i.e., if \(\frac{\check{\lambda }_1^-}{\check{\lambda }_1^+} + \sum _{i=2}^n \frac{\lambda _i^--\lambda _{i-1}^-}{\lambda _i^+} \ll 1\).

In particular, this approximation holds when for all i, \(|\lambda _i^-| \simeq 0\), as in the case of a dose–response curve which is overall flat (see e.g., the invariant curve associated with an inefficient signaling cascade, namely showing a low activation profile), but more generally also for \(|\lambda _i^-|\ll \lambda _i^+\).
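The chain rule above can be evaluated directly once the partial derivatives along the stationary orbit are known. The sketch below multiplies the 2\(\times \)2 Jacobians \({\mathbf {J}}_i=\begin{pmatrix} f_x & f_y \\ 1 & 0\end{pmatrix}\) in the order \({\mathbf {J}}_1{\mathbf {J}}_2\cdots {\mathbf {J}}_n\) and returns the (1,1) element \(s'(x_n)\). The input format (a list of \((f_x,f_y)\) pairs, with the pair for tier 1 taken from \(\check{f}_1\)) and the function names are assumptions made for illustration.

```python
def matmul2(A, B):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[A[i][0] * B[0][j] + A[i][1] * B[1][j] for j in range(2)] for i in range(2)]


def ds_dxn(derivs):
    """s'(x_n): (1,1) element of J_1 J_2 ... J_n with J_i = [[f_x, f_y], [1, 0]].

    derivs[i - 1] = (f_x, f_y) for tier i, evaluated along the stationary orbit.
    """
    P = [[1.0, 0.0], [0.0, 1.0]]
    for f_x, f_y in derivs:       # right-multiplication builds J_1 J_2 ... J_n
        P = matmul2(P, [[f_x, f_y], [1.0, 0.0]])
    return P[0][0]
```

When every \(f_y\) vanishes (so that every \(\lambda _i^-=0\)), the (1,1) element reduces exactly to \(\prod _i f_x=\prod _i\lambda _i^+\); for two tiers with nonzero \(f_y\) it equals \(f_x^{(1)}f_x^{(2)}+f_y^{(1)}\). The dose-response slope is then \(x'_n(s)=1/s'(x_n)\).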

In order to prove the second part of the theorem, let us consider the forward map:

where

By setting \(x_0 = 1 - \frac{x_1}{s(x_1+a_1)}\), we can write \(\check{h}_1(s,x_1)= h_1(x_0,x_1)\).

Let us consider the equations describing the inverse of system (5), formulating the steady states with increasing indices:

For uniformity of notation, we write \(h_i\) for every i, keeping in mind that for \(i=1\) and \(i=n\) we actually mean \(\check{h}_1\) and \(\hat{h}_n\), respectively:

We reformulate Eq. (34) in terms of dynamical systems:

or, more compactly,

where \( {\mathbf {z}}_i = \begin{pmatrix} x_i \\ y_i \end{pmatrix} \) and \(\mathbf H_i = \begin{pmatrix} g_i \\ h_i \end{pmatrix}\), with \(g_{i+1}(x_i,y_i)=y_i\) and \(h_{i+1}(x_i,y_i)\) as defined in (33).

By iterative application, we can write \({\mathbf {z}}_n\) as a function of \({\mathbf {z}}_0\), i.e., \( {\mathbf {z}}_n = \mathbf H_n\circ \mathbf H_{n-1} \circ \ldots \circ \mathbf H_1 ({\mathbf {z}}_0)\,, \) and in particular calculate its first derivative with respect to variable \(x_1\), denoted \({\mathbf {z}}'_i = \frac{d {\mathbf {z}}_i}{d x_1}\), according to the chain rule:

where \(\mathbf {L}_i = \begin{pmatrix} \frac{\partial g_i}{\partial x_i} \quad \frac{\partial g_i}{\partial y_i} \\ \frac{\partial h_i}{\partial x_i} \quad \frac{\partial h_i}{\partial y_i} \end{pmatrix} \) is the jacobian matrix associated with system (35) and such that \(\mathbf {L}_i = {\mathbf {J}}_i^{-1}\), that is:

with \(\mu _i^\pm = 1/\lambda _i^\pm \) as defined in (15). From Proposition 2, it follows that \(0<\mu _i^+<1\) and \(\mu _i^-< -1\).

Hence, in (36) we have \({\mathbf {z}}'_n = ( x'_n, d'_T)^T \) and \({\mathbf {z}}'_0 = (0, 1)^T \), and the second component of \({\mathbf {z}}'_n\), i.e., \(d_T'(x_1) = \frac{\mathrm {d} d_T}{\mathrm {d} x_1}\), is given by

The (2,2) element of the product \( \prod _{0\le i< n} {\mathbf {J}}_{n-i}^{-1}(x_{n-i-1},x_{n-i}) \) equals \(d'_T(x_1)\). Expressed as a function of the \(\mu _i^+\)’s and \(\mu _i^-\)’s, the general expansion in the \(\mu ^-_i\)’s truncated at second order, for \(n\ge 2\), is

Knowing that \(x'_1(d_T) = 1/d'_T(x_1)\), it follows

In particular, by expanding and rearranging the term at the denominator of (38), we get

Then, by replacing \(\mu _i^\pm = 1/\lambda _i^\pm \) in (38), it results

which is equivalent to (20).

Under the hypothesis \(\frac{\check{\lambda }_1^-}{\check{\lambda }_1^+} + \sum _{i=2}^{n} \left( \frac{\lambda _i^- - \lambda _{i-1}^-}{\lambda _i^+}\right) \ll 1\), some terms at the denominator of the latter equation are negligible, and since \(\lambda _i^\pm = 1/\mu _i^\pm \), that expression reduces to

This approximation is valid especially when \(|\lambda _i^-| \ll \lambda _i^+\) for each i, which is clearly satisfied if \(\lambda _i^+ \gg 1\) (as \(-1<\lambda _i^-<0\)). \(\square \)

We stress that \(|x'_1(d_T)|<1\), as each factor satisfies \(-1<\lambda _i^-<0\). This gives evidence for why long cascades attenuate retroactivity: the drug-response curves become less “efficient” (either with a slower increase or a lower amplitude) as n increases. This product of \(\lambda _i^-\)’s also reflects the typical sign reversal of retroactive propagation.

Secondly, as \(x'_2(d_T) \sim \lambda _2^- \lambda _3^- \cdots \hat{\lambda }_n^-\), it follows that \(|x'_1(d_T)|< |x'_2(d_T)|< \cdots < |x'_n(d_T)|\).

Furthermore, if the \(\lambda _i^-\)’s are close to 0 or to −1, the magnitude of the slope of the drug-response function, \(|x'_1(d_T)|\), is, respectively, minimized or maximized.
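The retroactive slope can likewise be computed from the product of inverse Jacobians. With \(f_x=\lambda ^++\lambda ^-\) and \(f_y=-\lambda ^+\lambda ^-\) (Proposition 2), \({\mathbf {J}}_i^{-1}=\begin{pmatrix}0 & 1\\ 1/f_y & -f_x/f_y\end{pmatrix}\), whose eigenvalues are \(\mu _i^\pm =1/\lambda _i^\pm \). The sketch below (hypothetical names; it requires every \(\lambda _i^-\neq 0\)) builds \({\mathbf {J}}_n^{-1}\cdots {\mathbf {J}}_1^{-1}\), takes the (2,2) element \(d'_T(x_1)\) and returns \(x'_1(d_T)=1/d'_T(x_1)\), which can be compared with the leading-order product of the \(\lambda _i^-\)’s.

```python
def matmul2(A, B):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[A[i][0] * B[0][j] + A[i][1] * B[1][j] for j in range(2)] for i in range(2)]


def inv_jacobian(lam_p, lam_m):
    """J^{-1} = [[0, 1], [1/f_y, -f_x/f_y]] with f_x = lam_p + lam_m, f_y = -lam_p * lam_m."""
    f_x, f_y = lam_p + lam_m, -lam_p * lam_m
    return [[0.0, 1.0], [1.0 / f_y, -f_x / f_y]]


def dx1_ddT(eigs):
    """x_1'(d_T) = 1 / (2,2) element of J_n^{-1} ... J_1^{-1}.

    eigs[i - 1] = (lambda+_i, lambda-_i) for tier i = 1..n.
    """
    P = [[1.0, 0.0], [0.0, 1.0]]
    for lam_p, lam_m in eigs:     # left-multiplication builds J_n^{-1} ... J_1^{-1}
        P = matmul2(inv_jacobian(lam_p, lam_m), P)
    return 1.0 / P[1][1]
```

With \(\lambda _i^+=50\) and \(\lambda _i^-=-0.3\) for three identical tiers (illustrative values), the exact slope is negative and within about one percent of \((-0.3)^3\); with two tiers it is positive, reflecting the sign alternation of retroactive propagation.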

1.7 Proof of Proposition 6

Let us assume that the polynomial \((x+a_{i+1}) \big ( (x+a_i) (1-x) - c_i x - b_i e_i \big ) - e_{i+1} x (x+a_i) =0\) from Eq. (21) has three real strictly positive roots, so that it may be rewritten as \( (x-\alpha ^*) (x-\beta ^*) (x-\gamma ^*) = 0 \,\) with \(\alpha ^*,\beta ^*,\gamma ^* >0\) being the three roots.

By expanding the two equivalent polynomials above, we obtain, respectively,

and

We equate the constant terms in these polynomials, deducing that \( a_{i+1} (a_i-b_i e_i) = \alpha ^*\beta ^*\gamma ^* >0\). It follows that \((a_i-b_i e_i) > 0\), that is \(b_i e_i / a_i = \lambda _i^+(0) < 1\).

Indeed, this last condition makes (0,0) a stable fixed point whenever three other positive fixed points exist. So, as explained in the Proof of Proposition 1, by the argument of alternating stability for the fixed points ordered as \(0<\alpha ^*<\beta ^*<\gamma ^*\), the points (0,0) and \((\beta ^*,\beta ^*)\) are attractors, while \((\alpha ^*,\alpha ^*)\) and \((\gamma ^*,\gamma ^*)\) are saddle points.

Furthermore, this reasoning can be extended to all the other cases where one or more roots of polynomial (21) do not belong to the interval \(I^2=[0,1]\times [0,1]\). Again, the study of the sign of term \((a_i-b_i e_i)\) proves that the largest fixed point in \(I^2\) is always a saddle point. This result holds for every class, from (C\('\)1) to (C\('\)3). \(\square \)
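The coefficient matching in Proposition 6 can be checked mechanically. The helpers below (hypothetical names; coefficient lists stored from lowest to highest degree) expand the polynomial from Eq. (21). Its leading coefficient is −1 and its constant term is \(a_{i+1}(a_i-b_i e_i)\), so the constant term equals the product \(\alpha ^*\beta ^*\gamma ^*\) of its three roots.

```python
def poly_mul(p, q):
    """Multiply polynomials stored as coefficient lists (lowest degree first)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out


def poly_add(p, q):
    """Add two coefficient lists of possibly different lengths."""
    n = max(len(p), len(q))
    return [(p[k] if k < len(p) else 0.0) + (q[k] if k < len(q) else 0.0) for k in range(n)]


def poly_eval(p, x):
    """Evaluate a coefficient list at x."""
    return sum(coef * x ** k for k, coef in enumerate(p))


def prop6_cubic(a_i, b_i, c_i, e_i, a_next, e_next):
    """(x + a_{i+1}) ((x + a_i)(1 - x) - c_i x - b_i e_i) - e_{i+1} x (x + a_i)."""
    inner = poly_add(poly_mul([a_i, 1.0], [1.0, -1.0]), [-b_i * e_i, -c_i])
    return poly_add(poly_mul([a_next, 1.0], inner),
                    poly_mul([0.0, -e_next], [a_i, 1.0]))
```

When \(a_{i+1}=a_i\) and \(e_{i+1}=e_i\), the cubic factors as \((x+a_i)\big[-x^2+(1-a_i-c_i-e_i)x+(a_i-b_i e_i)\big]\), recovering the quadratic of Proposition 1 together with the root \(x=-a_i\), which lies outside \(I^2\).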

1.8 Partial Derivatives of \(f_i\)

We report below the partial derivatives of \(f_i\) (\(1<i<n\)), \(\check{f}_1\) and \(\hat{f}_n\), respectively.

1.9 Partial Derivatives of \(f_i\) Evaluated at Fixed Points

The fixed-point equation \(x=f_i(x,x)\) allows us to simplify the expressions of the partial derivatives evaluated at a fixed point (x, x). Firstly,

which we rewrite by multiplying numerator and denominator by \(b_i\, e_i \, x^2\) and then simplify by using the fixed-point equation \(x=\frac{b_i\, e_i \,x}{(x+a_i) (1-x-e_{i+1} x/(x+a_{i+1})) - c_i x } \). Hence,

With the same reasoning on

we can similarly recover an equivalent formulation.

The two simplified expressions are given by
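One way to see the simplification: writing \(f_i(x,y)=b_i e_i x/D_i(x,y)\) with \(D_i(x,y)=(x+a_i)(1-x-e_{i+1}y/(y+a_{i+1}))-c_i x\) (the form read off from the fixed-point equation above, an assumption), a nonzero fixed point satisfies \(D_i(x,x)=b_i e_i\). This yields \(\partial _x f_i(x,x)=\big[a_i\big(1-\frac{e_{i+1}x}{x+a_{i+1}}\big)+x^2\big]/(b_i e_i)\) and \(\partial _y f_i(x,x)=\frac{e_{i+1}a_{i+1}\,x(x+a_i)}{(x+a_{i+1})^2\,b_i e_i}\). These closed forms need not coincide term by term with the expressions printed in the paper; the sketch below (hypothetical names) checks them against finite differences at a homogeneous fixed point.

```python
import math


def f(x, y, a, b, c, e, a_next, e_next):
    """Assumed f_i(x, y) = b e x / D(x, y) (form read off from the fixed-point equation)."""
    denom = (x + a) * (1.0 - x - e_next * y / (y + a_next)) - c * x
    return b * e * x / denom


def homogeneous_fixed_point(a, b, c, e):
    """Largest root x+ of x^2 - (1 - a - c - e) x - (a - b e) = 0."""
    q = 1.0 - a - c - e
    return 0.5 * (q + math.sqrt(q * q + 4.0 * (a - b * e)))


def partials_at_fixed_point(x, a, b, c, e, a_next, e_next):
    """(f_x, f_y) at a nonzero fixed point (x, x), using D(x, x) = b e there."""
    seq = e_next * x / (x + a_next)
    f_x = (a * (1.0 - seq) + x * x) / (b * e)
    f_y = x * (x + a) * e_next * a_next / ((x + a_next) ** 2 * (b * e))
    return f_x, f_y
```

Dividing by the constant \(b_i e_i\) instead of \(D_i^2\) is what makes these fixed-point expressions convenient for evaluating the eigenvalues \(\lambda _i^\pm \) of Proposition 2.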


Cite this article

Catozzi, S., Sepulchre, JA. A Discrete Dynamical System Approach to Pathway Activation Profiles of Signaling Cascades. Bull Math Biol 79, 1691–1735 (2017). https://doi.org/10.1007/s11538-017-0296-z


DOI: https://doi.org/10.1007/s11538-017-0296-z