Abstract

In this paper, we consider the Rasch model and propose novel point estimators and confidence intervals for the ability parameter. They are based on a confidence distribution (CD) whose construction required overcoming some difficulties, essentially due to the discrete nature of the model. When the number of items is large, the combinatorial computations involved become heavy, and we therefore provide first- and second-order approximations of the CD. Simulation studies show that our estimators and intervals perform well compared with those obtained through other standard frequentist and weakly informative Bayesian procedures. Finally, using the expansion of the expected length of the proposed interval, we identify reasonable values of the sample size that lead to a desired interval length.
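As a rough illustration of where the combinatorics come from, the sketch below computes the distribution of the total score \(S_n\). Under the Rasch model this score is a sum of independent Bernoulli variables with success probabilities \(P_i(\theta)=\exp\{\theta-b_i\}/(1+\exp\{\theta-b_i\})\), that is, a Poisson-binomial variable. From that distribution the sketch builds two step-type CDs. For illustration only, we assume the right and left CDs are \(H^r_{n,s}(\theta)=P_\theta(S_n>s)\) and \(H^{\ell}_{n,s}(\theta)=P_\theta(S_n\ge s)\). This choice agrees with the identity \(H^{\ell}_{n,s}=H^r_{n,s-1}\) used in Appendix A, but the paper's own definition is not reproduced here. The O(n²) recursion is a standard device and not necessarily what the authors use. All function names are hypothetical.

```python
import numpy as np

def rasch_probs(theta, b):
    """P_i(theta) = exp(theta - b_i) / (1 + exp(theta - b_i)) for each item difficulty b_i."""
    return 1.0 / (1.0 + np.exp(-(theta - np.asarray(b, dtype=float))))

def score_pmf(theta, b):
    """Distribution of the score S_n = X_1 + ... + X_n (Poisson-binomial).

    Items are added one at a time, so the cost is O(n^2) rather than a sum
    over all subsets of items that produce a given score."""
    pmf = np.zeros(len(b) + 1)
    pmf[0] = 1.0
    for m, p in enumerate(rasch_probs(theta, b), start=1):
        # the right-hand side is evaluated first, so pmf[:m] still holds old values
        pmf[1:m + 1] = pmf[1:m + 1] * (1 - p) + pmf[:m] * p
        pmf[0] *= 1 - p
    return pmf

def cd_right(theta, b, s):
    """Assumed right CD: P_theta(S_n > s), nondecreasing in theta."""
    return float(score_pmf(theta, b)[s + 1:].sum())

def cd_left(theta, b, s):
    """Assumed left CD: P_theta(S_n >= s), equal to cd_right(theta, b, s - 1)."""
    return float(score_pmf(theta, b)[s:].sum())

b = np.linspace(-2.0, 2.0, 20)   # hypothetical item difficulties
s = 12                            # hypothetical observed score
for theta in (-1.0, 0.0, 1.0):
    print(theta, cd_right(theta, b, s), cd_left(theta, b, s))
```

Both functions increase in \(\theta\), and the right CD never exceeds the left one. The gap between them is the probability of the observed score, which is the discreteness the paper has to deal with.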


References

Andrich, D. (2010). Sufficiency and conditional estimation of person parameters in the polytomous Rasch model. Psychometrika, 75, 292–308.

Baker, F. B., & Kim, S. H. (2004). Item Response Theory: Parameter Estimation Techniques. New York: Dekker.

Berger, J. O. (2006). The case for objective Bayesian analysis. Bayesian Analysis, 1, 385–402.

Bond, T. G., & Fox, C. M. (2015). Applying the Rasch model: Fundamental measurement in the human sciences (3rd ed.). New York: Routledge.

Brown, L. D. (1986). Fundamentals of Statistical Exponential Families with Applications in Statistical Decision Theory. Lecture Notes—Monograph Series, vol. 9. Hayward, CA: Institute of Mathematical Statistics.

Brown, L. D., Cai, T. T., & DasGupta, A. (2001). Interval estimation for a binomial proportion. Statistical Science, 16, 101–117.

Christensen, K. B., Kreiner, S., & Mesbah, M. (2013). Rasch Models in Health. Hoboken: Wiley.

Cohen, J., Chan, T., Jiang, T., & Seburn, M. (2008). Consistent estimation of Rasch item parameters and their standard errors under complex sample designs. Applied Psychological Measurement, 32, 289–310.

Deheuvels, P., Puri, M. L., & Ralescu, S. S. (1989). Asymptotic expansions for sums of nonidentically distributed Bernoulli random variables. Journal of Multivariate Analysis, 28, 282–303.

Doebler, A., Doebler, P., & Holling, H. (2013). Optimal and most exact confidence intervals for person parameters in item response theory models. Psychometrika, 78, 98–115.

Finch, H., & Edwards, J. M. (2016). Rasch model parameter estimation in the presence of a nonnormal latent trait using a nonparametric Bayesian approach. Educational and Psychological Measurement, 76, 662–684.

Fischer, G. H., & Molenaar, I. W. (2012). Rasch Models: Foundations, Recent Developments, and Applications. New York: Springer.

Fisher, R. A. (1930). Inverse probability. Mathematical Proceedings of the Cambridge Philosophical Society, 26, 528–535.

Fox, J.-P. (2010). Bayesian Item Response Modeling: Theory and Applications. Berlin: Springer.

Fraser, D. A. S. (2011). Is Bayes posterior just quick and dirty confidence? Statistical Science, 26, 299–316.

Gelman, A., Carlin, J. B., Stern, H. S., Dunson, D. B., Vehtari, A., & Rubin, D. B. (2013). Bayesian Data Analysis. Boca Raton: CRC Press.

Hall, P. (2013). The Bootstrap and Edgeworth Expansion. New York: Springer.

Hambleton, R. K., Swaminathan, H., & Rogers, H. J. (1991). Fundamentals of Item Response Theory. Newbury Park: Sage Publications.

Hannig, J. (2009). On generalized fiducial inference. Statistica Sinica, 19, 491–544.

Hannig, J., Iyer, H. K., Lai, R. C. S., & Lee, T. C. M. (2016). Generalized fiducial inference: A review and new results. Journal of the American Statistical Association, 111, 1346–1361.

Hoijtink, H., & Boomsma, A. (1995). On person parameter estimation in the dichotomous Rasch model. In Rasch Models (pp. 53–68). Springer.

Jannarone, R. J., Kai, F. Y., & Laughlin, J. E. (1990). Easy Bayes estimation for Rasch-type models. Psychometrika, 55, 449–460.

Johnson, R. A. (1967). An asymptotic expansion for posterior distributions. The Annals of Mathematical Statistics, 38, 1899–1906.

Klauer, K. C. (1991). Exact and best confidence intervals for the ability parameter of the Rasch model. Psychometrika, 56, 535–547.

Kolassa, J. E. (2006). Series Approximation Methods in Statistics. New York: Springer.

Liu, Y., & Hannig, J. (2016). Generalized fiducial inference for binary logistic item response models. Psychometrika, 81, 290–324.

Lord, F. M. (1975). Evaluation with Artificial Data of a Procedure for Estimating Ability and Item Characteristic Curve Parameters. Princeton: ETS Research Bulletin Series.

Lord, F. M. (1980). Application of Item Response Theory to Practical Testing Problems. Hillsdale: Lawrence Erlbaum Associates.

Lord, F. M. (1983). Unbiased estimators of ability parameters, of their variance, and of their parallel-forms reliability. Psychometrika, 48, 233–245.

Mair, P., & Hatzinger, R. (2007). Extended Rasch modeling: The eRm package for the application of IRT models in R. Journal of Statistical Software, 20, 1–20.

Mair, P., & Strasser, H. (2018). Large-scale estimation in Rasch models: asymptotic results, approximations of the variance-covariance matrix of item parameters, and divide-and-conquer estimation. Behaviormetrika, 45, 189–209.

Ogasawara, H. (2012). Asymptotic expansions for the ability estimator in item response theory. Computational Statistics, 27, 661–683.

Rasch, G. (1960). Probabilistic Models for Some Intelligence and Attainment Tests. Copenhagen: The Danish Institute of Educational Research.

Schweder, T., & Hjort, N. L. (2002). Confidence and likelihood. Scandinavian Journal of Statistics, 29, 309–332.

Schweder, T., & Hjort, N. L. (2016). Confidence, Likelihood and Probability. London: Cambridge University Press.

Serfling, R. J. (1980). Approximation Theorems of Mathematical Statistics. New York: Wiley.

Severini, T. A. (2000). Likelihood Methods in Statistics. Oxford: Oxford University Press.

Shen, J., Liu, R. Y., & Xie, M. (2018). Prediction with confidence—A general framework for predictive inference. Journal of Statistical Planning and Inference, 195, 126–140.

Sheng, Y. (2012). An empirical investigation of Bayesian hierarchical modeling with unidimensional IRT models. Behaviormetrika, 40, 19–40.

Singh, K., Xie, M., & Strawderman, W. E. (2005). Combining information from independent sources through confidence distributions. The Annals of Statistics, 33, 159–183.

Singh, K., Xie, M., & Strawderman, W. E. (2007). Confidence distribution (CD)—Distribution estimator of a parameter. Complex Datasets and Inverse Problems: Tomography, Networks and Beyond (pp. 132–150). Hayward: Institute of Mathematical Statistics.

Swaminathan, H., & Gifford, J. A. (1982). Bayesian estimation in the Rasch model. Journal of Educational Statistics, 7, 175–191.

Swaminathan, H., & Gifford, J. A. (1985). Bayesian estimation in the two-parameter logistic model. Psychometrika, 50, 349–364.

Thissen, D., & Wainer, H. (1982). Some standard errors in item response theory. Psychometrika, 47, 397–412.

Veronese, P., & Melilli, E. (2015). Fiducial and confidence distributions for real exponential families. Scandinavian Journal of Statistics, 42, 471–484.

Veronese, P., & Melilli, E. (2018). Fiducial, confidence and objective Bayesian posterior distributions for a multidimensional parameter. Journal of Statistical Planning and Inference, 195, 153–173.

Warm, T. A. (1989). Weighted likelihood estimation of ability in item response theory. Psychometrika, 54, 427–450.

Wright, B. (1998). Estimating measures for extreme scores. Rasch Measurement Transactions, 12, 632–633.

Wright, B. D., & Stone, M. H. (1979). Best Test Design. Chicago: MESA Press.

Xie, M., & Singh, K. (2013). Confidence distribution, the frequentist distribution estimator of a parameter: A review. International Statistical Review, 81, 3–39.

Acknowledgements

The research was supported by grants from Bocconi University. The authors thank the editor, the associate editor and a reviewer for their helpful comments.


Appendices

Appendix A

Proofs

Proof of Proposition 1

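Consider first the case \(s=1,\ldots ,n-1\). The density \(h_{\,n,s}\) for \(\theta \) is well defined and admits all moments if

\(I_{n,s}=\int _{-\infty }^{\infty }|\theta |^k \sqrt{h_{\,n,s}^r(\theta )\,h_{\,n,s}^{\ell }(\theta )}\, d\theta <\infty , \quad k=0,1,2,\ldots \)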

Since the geometric mean is not greater than the arithmetic mean, it follows that \(I_{n,s}\le \int _{-\infty }^{\infty }|\theta |^k \frac{1}{2}(h_{\,n,s}^r(\theta )+h_{\,n,s}^{\ell }(\theta ))\, d\theta \), and thus it suffices to show that the k-th moments of \(h_{\,n,s}^r\) and \(h_{\,n,s}^{\ell }\) exist, \(k=1,2,\ldots \). Now, using the density given in (7), we have

which is finite if for each \(t=0,1, \ldots ,s\)

Since the integrand in (A.2) is asymptotic to \(n|\theta |^k e^{\theta }\) when \(t=0\) and to \(-t|\theta |^k e^{\theta t}\) when \(t=1,2,\ldots \) as \(\theta \rightarrow -\infty \), and to \((n-t)|\theta |^k(1+e^{\theta })^{-(n-t)}\) as \(\theta \rightarrow \infty \), each integral is finite and the result follows.
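The arithmetic-geometric mean bound used at the start of this proof can be checked numerically. For illustration, assume that \(h^r_{n,s}\) and \(h^{\ell}_{n,s}\) are the \(\theta\)-derivatives of \(P_\theta(S_n>s)\) and \(P_\theta(S_n\ge s)\); the paper's density (7) is not reproduced here. The sketch then relies on the natural-exponential-family identity \(\frac{d}{d\theta}P_\theta(S_n=k)=P_\theta(S_n=k)\,(k-n\bar{P}_n(\theta))\). On a truncated grid, it compares the geometric-mean integral \(I_{n,s}\) with its arithmetic-mean bound. The item difficulties, the score s and the moment order are hypothetical.

```python
import numpy as np

def score_pmf(theta, b):
    """Poisson-binomial pmf of S_n under the Rasch model (O(n^2) recursion)."""
    pmf = np.zeros(len(b) + 1)
    pmf[0] = 1.0
    for m, bi in enumerate(b, start=1):
        p = 1.0 / (1.0 + np.exp(-(theta - bi)))
        pmf[1:m + 1] = pmf[1:m + 1] * (1 - p) + pmf[:m] * p
        pmf[0] *= 1 - p
    return pmf

def cd_densities(theta, b, s):
    """Densities of the assumed CDs P_theta(S>s) and P_theta(S>=s).

    In a NEF, d/dtheta P_theta(S=k) = P_theta(S=k) * (k - n*Pbar_n(theta))."""
    pmf = score_pmf(theta, b)
    k = np.arange(len(pmf))
    mean = np.dot(k, pmf)                     # n * Pbar_n(theta)
    dens = pmf * (k - mean)                   # derivative of each P_theta(S=k)
    h_r = max(dens[s + 1:].sum(), 0.0)        # clip tiny negative round-off
    h_l = max(dens[s:].sum(), 0.0)
    return h_r, h_l

def trapezoid(y, x):
    """Composite trapezoidal rule."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2)

b = np.linspace(-1.0, 1.0, 10)   # hypothetical item difficulties
s, order = 4, 2                   # observed score and moment order k
grid = np.linspace(-25.0, 25.0, 2001)
hr, hl = np.array([cd_densities(t, b, s) for t in grid]).T
I_gm = trapezoid(np.abs(grid) ** order * np.sqrt(hr * hl), grid)     # I_{n,s}
I_am = trapezoid(np.abs(grid) ** order * 0.5 * (hr + hl), grid)      # AM-GM bound
print(I_gm, I_am)
```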

When \(s=0\), the density \(h_{n,s}^\beta (\theta )\) is well defined and all its moments exist if \(\int _{-\infty }^{\infty }|\theta |^k h_{n,s}^\beta (\theta ) d\theta \) is finite for \(k=0,1,\ldots \). Using (7), we have to show that

Because the integrand is asymptotic to \(|\theta |^k n^\beta e^{\theta \beta }\) for \(\theta \rightarrow -\infty \), and to \(|\theta |^k ne^{-n\theta \beta }\) for \(\theta \rightarrow \infty \), the result follows recalling that \(\beta \in (0,1]\). The proof is similar for the case \(s=n\), observing that \(h_{n,n}^\beta (\theta )=(n-\bar{P}_n(\theta ))\exp \{\theta n - M_n(\theta )\}\). \(\square \)

Proof of Theorem 1

Let \(\theta _0\) be the true value of the parameter \(\theta \), and let \(P_0(\theta _0)=\exp \{\theta _0-b_0\}/(1+\exp \{\theta _0-b_0\})\). Then, from the Cesàro mean theorem, \(\bar{P}_n(\theta _0)= \sum _{i=1}^n P_i(\theta _0)/n \rightarrow P_0(\theta _0)\), (\(n\rightarrow \infty \)). From (6), we have \(E_{\theta }(\bar{X}_n)=\bar{P}_n(\theta )\), and because

\(\sum _{i=1}^{\infty }\frac{Var_{\theta _0}(X_i)}{i^2}=\sum _{i=1}^{\infty }\frac{P_i(\theta _0)(1-P_i(\theta _0))}{i^2}\le \sum _{i=1}^{\infty }\frac{1}{4i^2}<\infty ,\)

the strong law of large numbers holds, i.e., \(\bar{X}_n{\mathop {\rightarrow }\limits ^{a.s.}}P_0(\theta _0)\) (see Serfling 1980, Sect. 1.8). In conclusion, \(\bar{x}_n\) converges to \(P_0(\theta _0)\) for almost all sequences \((x_1, x_2, \ldots )\).
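A small simulation can illustrate this step. It uses a hypothetical bounded sequence \(b_i=b_0+1/i\) converging to \(b_0\). The sketch shows that Kolmogorov's summability condition \(\sum_i Var(X_i)/i^2<\infty\), one standard sufficient condition for the strong law with independent, non-identically distributed variables, holds automatically for Bernoulli responses: each variance is at most 1/4, so the sum is at most \(\pi^2/24\). It also shows that \(\bar{x}_n\) settles near \(P_0(\theta_0)\). The values of \(\theta_0\), \(b_0\), n and the seed are arbitrary.

```python
import math
import numpy as np

rng = np.random.default_rng(2021)
theta0, b0 = 0.5, 0.2                     # hypothetical true ability and limit of the b_i
n = 200_000
i = np.arange(1, n + 1)
b = b0 + 1.0 / i                          # hypothetical bounded difficulties with b_i -> b0
p = 1.0 / (1.0 + np.exp(-(theta0 - b)))   # P_i(theta0)

# Kolmogorov's condition: sum Var(X_i)/i^2 <= sum 1/(4 i^2) = pi^2/24
kolmogorov_sum = float(np.sum(p * (1 - p) / i**2))

x = rng.random(n) < p                     # independent Bernoulli(P_i(theta0)) responses
xbar = float(x.mean())
P0 = 1.0 / (1.0 + math.exp(-(theta0 - b0)))
print(kolmogorov_sum, math.pi**2 / 24, xbar, P0)
```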

Now, \(Var_{\theta }(S_n)=\sum _{i=1}^n \exp \{\theta -b_i\}/(1+\exp \{\theta -b_i\})^2 \rightarrow \infty \) as \(n\rightarrow \infty \), because the general term of the series does not tend to 0 (the \(b_i\)'s being bounded), and thus, from Deheuvels et al. (1989, Theorem 1.1), we have

If \(\theta \) is distributed according to the CD defined in (2), we can write

where \(\theta _n=\hat{\theta }+z/\sqrt{n}\) and \(\bar{X}^*_n\) is the sample mean of n independent Bernoulli random variables with success probabilities \(P_i(\theta _n)\), \(i=1, \ldots ,n\). Notice that, for every \(z \in \mathbb {R}\), \(\theta _n\) belongs to the natural parameter space, because \(\Theta =\mathbb {R}\), and that \(\theta _n\) converges to \(\theta _0\). The latter fact follows from the convergence of the sample mean stated above and the continuous one-to-one relationship between the natural and the mean parameter of a natural exponential family (NEF).
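Two ingredients of the appeal to Deheuvels et al. (1989, Theorem 1.1) can be seen numerically. First, when the \(b_i\)'s are bounded, the score variance \(\sum_i P_i(\theta)(1-P_i(\theta))\) grows linearly. Second, the exact Kolmogorov distance between the standardized score and the standard normal shrinks as that variance grows. The sketch uses hypothetical difficulties \(b_i=\sin(i)\) and \(\theta=0\). It illustrates the normal limit only, not the precise statement of the cited theorem.

```python
import math
import numpy as np

def score_pmf(theta, b):
    """Poisson-binomial pmf of S_n under the Rasch model (O(n^2) recursion)."""
    pmf = np.zeros(len(b) + 1)
    pmf[0] = 1.0
    for m, bi in enumerate(b, start=1):
        p = 1.0 / (1.0 + np.exp(-(theta - bi)))
        pmf[1:m + 1] = pmf[1:m + 1] * (1 - p) + pmf[:m] * p
        pmf[0] *= 1 - p
    return pmf

def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def kolmogorov_distance(theta, b):
    """Return (Var(S_n), sup_t |P((S_n - E S_n)/sd <= t) - Phi(t)|).

    The CDF of S_n is a step function, so the supremum is reached at a jump,
    approached either from the left or from the right."""
    pmf = score_pmf(theta, b)
    k = np.arange(len(pmf))
    mean = float(np.dot(k, pmf))
    var = float(np.dot((k - mean) ** 2, pmf))
    cdf = np.cumsum(pmf)
    dist = 0.0
    for kk in k:
        phi_t = Phi((kk - mean) / math.sqrt(var))
        left = cdf[kk - 1] if kk > 0 else 0.0
        dist = max(dist, abs(cdf[kk] - phi_t), abs(left - phi_t))
    return var, dist

results = {}
for n in (20, 80, 320):
    b = np.sin(np.arange(1, n + 1))       # hypothetical bounded difficulties in [-1, 1]
    results[n] = kolmogorov_distance(0.0, b)
    print(n, results[n])
```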

Using (A.3), we can write, for each \(\epsilon >0\) and n large enough,

The term \((\bar{x}_n-\bar{P}_n(\theta _n))\bar{P}^{\prime }_n(\theta _n)^{-1/2}\), appearing in (A.5), admits a standard Taylor expansion because it is equidifferentiable thanks to the assumption of boundedness of the sequence of the \(b_i\)’s. Recalling that \(\bar{P}_n(\hat{\theta })=\bar{x}_n\), we have

Thus, (A.5) can be written as

where the last equality follows from (A.4). As a consequence, the convergence stated in Theorem 1 is proved for \(\theta \) distributed according to \(H^r_{n,s}(\theta )\).
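The Taylor step in this proof relies on \(\hat{\theta}\) solving \(\bar{P}_n(\hat{\theta})=\bar{x}_n\). The minimal sketch below computes \(\hat{\theta}\) by bisection, which is a choice of ours. Because \(\bar{P}_n\) is strictly increasing, a finite root exists exactly when \(0<s<n\). The sketch then sets \(\theta_n=\hat{\theta}+z/\sqrt{n}\) and checks that \(\sqrt{n}(\bar{x}_n-\bar{P}_n(\theta_n))\bar{P}^{\prime}_n(\theta_n)^{-1/2}\) differs from \(-z\bar{P}^{\prime}_n(\hat{\theta})^{1/2}\) by a term of order \(n^{-1}\). To isolate the effect of n, a hypothetical set of five difficulties is replicated, so that \(\bar{P}_n\) and \(\bar{x}_n=0.6\) stay fixed while n grows. A ratio near 1/4 when n is multiplied by 4 is what an \(O(n^{-1})\) remainder predicts.

```python
import numpy as np

def pbar(theta, b):
    """Pbar_n(theta): average Rasch success probability over the items."""
    return float(np.mean(1.0 / (1.0 + np.exp(-(theta - np.asarray(b, dtype=float))))))

def pbar_prime(theta, b):
    """Derivative of Pbar_n: mean of P_i(1 - P_i), i.e. Var(S_n)/n."""
    p = 1.0 / (1.0 + np.exp(-(theta - np.asarray(b, dtype=float))))
    return float(np.mean(p * (1 - p)))

def mle(s, b, lo=-50.0, hi=50.0, iters=200):
    """Solve Pbar_n(theta) = s/n by bisection (finite only for 0 < s < n)."""
    n = len(b)
    if not 0 < s < n:
        raise ValueError("a finite MLE requires 0 < s < n")
    target = s / n
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if pbar(mid, b) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def linearization_error(s, b, z):
    """sqrt(n)(xbar - Pbar(theta_n)) / Pbar'(theta_n)^{1/2} + z * Pbar'(theta_hat)^{1/2}."""
    n = len(b)
    th = mle(s, b)
    th_n = th + z / np.sqrt(n)
    lhs = np.sqrt(n) * (s / n - pbar(th_n, b)) / np.sqrt(pbar_prime(th_n, b))
    return float(lhs + z * np.sqrt(pbar_prime(th, b)))

base = [-1.0, -0.5, 0.0, 0.5, 1.0]                   # hypothetical difficulties
e100 = linearization_error(60, base * 20, z=0.5)     # n = 100, xbar = 0.6
e400 = linearization_error(240, base * 80, z=0.5)    # n = 400, same xbar and Pbar_n
print(e100, e400, e400 / e100)
```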

Because \(H^{\ell }_{n,s}(\theta )=H^r_{n,s-1}(\theta )\), the previous result holds also for \(H^{\ell }_{n,s}(\theta )\) provided the following conditions for the asymptotic normality are satisfied (see Serfling 1980, Sect. 1.5.5),

where \({\hat{\theta }}^{-}\) is the MLE corresponding to the score \(s - 1\). The first condition is true because all functions involved are continuous and admit finite limits, while the second holds because

Finally, the result for \(H_{n,s}(\theta )\) follows from (3). \(\square \)
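The first-order behaviour established in this proof can be checked numerically. The check uses the illustrative assumption \(H^r_{n,s}(\theta)=P_\theta(S_n>s)\), since definition (2) is not shown in this section. With \(\theta=\hat{\theta}+z/\sqrt{n}\), the right CD should approach \(\Phi(z\bar{P}^{\prime}_n(\hat{\theta})^{1/2})\) as n grows. The statement of Theorem 1 itself is not reproduced here, so this only checks the normal limit suggested by the argument. The items are hypothetical and replicated, so that \(\bar{x}_n=0.6\) and \(\bar{P}_n\) stay fixed. The remaining gap is dominated by the lattice term, which decays like \(n^{-1/2}\).

```python
import math
import numpy as np

def probs(theta, b):
    """Rasch success probabilities P_i(theta)."""
    return 1.0 / (1.0 + np.exp(-(theta - np.asarray(b, dtype=float))))

def score_pmf(theta, b):
    """Poisson-binomial pmf of S_n by an O(n^2) recursion."""
    pmf = np.zeros(len(b) + 1)
    pmf[0] = 1.0
    for m, p in enumerate(probs(theta, b), start=1):
        pmf[1:m + 1] = pmf[1:m + 1] * (1 - p) + pmf[:m] * p
        pmf[0] *= 1 - p
    return pmf

def pbar_prime(theta, b):
    """Pbar'_n(theta) = mean of P_i(1 - P_i)."""
    p = probs(theta, b)
    return float(np.mean(p * (1 - p)))

def mle(s, b, lo=-50.0, hi=50.0, iters=200):
    """Bisection for Pbar_n(theta) = s/n (finite only for 0 < s < n)."""
    target = s / len(b)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if np.mean(probs(mid, b)) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def cd_gap(s, b, z):
    """|H^r_{n,s}(theta_hat + z/sqrt(n)) - Phi(z Pbar'_n(theta_hat)^{1/2})|,
    with H^r_{n,s}(theta) assumed to be P_theta(S_n > s)."""
    n = len(b)
    th = mle(s, b)
    exact = float(score_pmf(th + z / math.sqrt(n), b)[s + 1:].sum())
    approx = Phi(z * math.sqrt(pbar_prime(th, b)))
    return abs(exact - approx)

base = [-1.0, -0.5, 0.0, 0.5, 1.0]          # hypothetical difficulties, replicated below
gap50 = cd_gap(30, base * 10, z=0.5)        # n = 50,  xbar = 0.6
gap800 = cd_gap(480, base * 160, z=0.5)     # n = 800, xbar = 0.6
print(gap50, gap800)
```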

The following proposition details the smoothing effect of the geometric mean on the asymptotic CD, described before Theorem 2, when observations are lattice random variables.

Proposition 2. Assume that the distribution functions \(\tilde{H}_{n,s}^r\) and \(\tilde{H}_{n,s}^{\ell }\) of \(\sqrt{n}(\theta -\hat{\theta })\), with \(\theta \) distributed according to \(H_{n,s}^r\) and \(H_{n,s}^{\ell }\), respectively, have the following Edgeworth expansions

where \(d \in \{r,\ell \}\), \(\gamma _r=1\), \(\gamma _{\ell }=-1\) and A(z) is a polynomial of second degree in z. Then, the expansion of \(\tilde{H}_{n,s}\) is

and thus does not depend on the term \(\gamma _d k \hat{\sigma }/2\).

Proof of Proposition 2

Recalling that \(H_{n,s}\) is the distribution function of \(\theta \) corresponding to the (normalized) geometric mean \(h_{\,n,s}\) of the densities \(h_{\,n,s}^r\) and \(h_{\,n,s}^{\ell }\), it follows that the density of a linear transformation of the form \(T=a\theta +b\), for constants \(a\ne 0\) and b, can be obtained as the (normalized) geometric mean of \(h_{\,n,s}^r((t-b)/a)\,|1/a|\) and \(h_{\,n,s}^\ell ((t-b)/a)\,|1/a|\). Using (A.7), the expansion of the density \(\tilde{h}_{n,s}^d(z)\) is given by

where for a function f, as usual, \(f^\prime \) denotes its derivative.

Because \(\tilde{h}_{n,s}(z)=k_n^{-1}\sqrt{\tilde{h}_{n,s}^r(z) \tilde{h}_{n,s}^\ell (z)}\), where \(k_n=\int \sqrt{\tilde{h}^r_{n,s} (z) \tilde{h}_{n,s}^\ell (z)} dz\), from

we obtain

We now derive the expansion of \(k_n\) by integrating (A.9). Using standard properties of the normal density and recalling that A(z) is a polynomial of second degree in z, we have

As a consequence, the expansion of \(\tilde{h}_{n,s}(z)\) coincides with that given in (A.9). Finally, from the expansion of the density given in (A.9), we derive the expansion (A.8) of the corresponding CD by term-by-term integration. \(\square \)
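The following is a toy version of the cancellation behind Proposition 2, not the paper's expansion (A.7). Suppose two densities differ only through opposite shifts \(\pm c\), which mimics the terms weighted by \(\gamma_r=1\) and \(\gamma_{\ell}=-1\); to first order, \(\phi(z\mp c)\approx \phi(z)(1\pm cz)\). Because \((z-c)^2+(z+c)^2=2z^2+2c^2\), the normalized geometric mean is then exactly the unshifted normal density. The arithmetic mean, by contrast, is a two-component mixture whose variance is inflated to \(1+c^2\). The shift c is hypothetical.

```python
import numpy as np

def normal_pdf(x):
    """Standard normal density."""
    return np.exp(-0.5 * x**2) / np.sqrt(2.0 * np.pi)

z = np.linspace(-10.0, 10.0, 4001)
dz = z[1] - z[0]
c = 0.3                                   # hypothetical shift; gamma_r = +1, gamma_l = -1
f_r = normal_pdf(z - c)                   # stands in for the "right" density
f_l = normal_pdf(z + c)                   # stands in for the "left" density

gm = np.sqrt(f_r * f_l)
gm = gm / (gm.sum() * dz)                 # divide by the normalizing constant k_n
am = 0.5 * (f_r + f_l)

max_gap = float(np.max(np.abs(gm - normal_pdf(z))))   # ~0: the +c and -c shifts cancel
var_am = float(np.sum(z**2 * am) * dz)                # 1 + c^2: the shifts do not cancel here
print(max_gap, var_am)
```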

The previous proposition is crucial for the following proof.

Proof of Theorem 2

Consider first the distribution function \(\tilde{H}_{n,s}^r\) of \(\sqrt{n}(\theta -\hat{\theta })\) with \(\theta \) distributed according to \(H_{n,s}^r\). Following the proof of Theorem 1, we can write, using (A.4) and the fact that \(\bar{x}_n=\bar{P}_n(\hat{\theta })\), with \(\bar{P}_n(\theta )\) increasing in \(\theta \),

where \(\tilde{F}_n\) is implicitly defined in (A.10).

Notice that \(\{X^*_{nj}, \, 1 \le j \le n\}\) is a triangular array of random variables such that: a) for all \(n\ge 1\), \(X^*_{n1}, \dots , X^*_{nn}\) are independent and b) for all \(j\in \{1,2,\ldots , n\}\), \(X^*_{nj}\) follows a Bernoulli distribution with success probability \(P_j(\theta _n)\), so that the expansion of

can be derived using the result in Deheuvels et al. (1989, Theorem 1.3). Specifically, they consider a smooth version \(\breve{F}_n\) of \(\tilde{F}_n\), which in our context is defined by the following two conditions:

(i) \(\breve{F}_{n}(t_{nk})=\tilde{F}_n(t_{nk})\) for \(t_{nk}=(k-n\bar{P}_n(\theta _n)+1/2)/(\sqrt{n}\bar{P}^{\prime }_n(\theta _n)^{1/2})\), \(k=0,\pm 1,\pm 2,\ldots \);

(ii) \(\breve{F}_{n}(t)\) is continuous in t, and linear on all intervals \((t_{n(k-1)},t_{nk})\), \(k=0,\pm 1,\pm 2,\ldots \).
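Conditions (i) and (ii) determine \(\breve{F}_n\) completely. It is the piecewise-linear interpolation of \(\tilde{F}_n\) through the points \(t_{nk}\), each of which sits half a lattice step to the right of an attainable value of the standardized score. The sketch below makes an illustrative assumption: that \(\tilde{F}_n\) is the distribution function of \((S^*_n-n\bar{P}_n(\theta_n))/(\sqrt{n}\bar{P}^{\prime}_n(\theta_n)^{1/2})\), since the exact form of (A.10) is not reproduced here. With hypothetical difficulties \(b_i=\sin(i)\) and a hypothetical value of \(\theta_n\), it builds \(\breve{F}_n\) with `numpy.interp`. It then compares both the step function and its smoothed version with the standard normal distribution function.

```python
import math
import numpy as np

def score_pmf(theta, b):
    """Poisson-binomial pmf of S_n under the Rasch model (O(n^2) recursion)."""
    pmf = np.zeros(len(b) + 1)
    pmf[0] = 1.0
    for m, bi in enumerate(b, start=1):
        p = 1.0 / (1.0 + np.exp(-(theta - bi)))
        pmf[1:m + 1] = pmf[1:m + 1] * (1 - p) + pmf[:m] * p
        pmf[0] *= 1 - p
    return pmf

n, theta_n = 40, 0.3                               # hypothetical size and parameter value
b = np.sin(np.arange(1, n + 1))                    # hypothetical difficulties
pmf = score_pmf(theta_n, b)
k = np.arange(n + 1)
mean = float(np.dot(k, pmf))                       # n * Pbar_n(theta_n)
sd = math.sqrt(float(np.dot((k - mean) ** 2, pmf)))  # sqrt(n) * Pbar'_n(theta_n)^{1/2}
cdf = np.cumsum(pmf)

# knots t_{nk} for k = -1, 0, ..., n; the step CDF is 0 at the first knot and 1 at the last
knots = (np.arange(-1, n + 1) - mean + 0.5) / sd
values = np.concatenate(([0.0], cdf))

def F_tilde(t):
    """Step CDF of the standardized score: P(S <= floor(mean + sd * t))."""
    idx = np.floor(mean + sd * np.asarray(t)).astype(int)
    return np.where(idx < 0, 0.0, cdf[np.clip(idx, 0, n)])

def F_breve(t):
    """Continuous, piecewise-linear version satisfying conditions (i) and (ii)."""
    return np.interp(t, knots, values)

grid = np.linspace(-6.0, 6.0, 24001)
Phi = np.array([0.5 * (1.0 + math.erf(x / math.sqrt(2.0))) for x in grid])
gap_step = float(np.max(np.abs(F_tilde(grid) - Phi)))
gap_smooth = float(np.max(np.abs(F_breve(grid) - Phi)))
print(gap_step, gap_smooth)
```

The smoothed version is much closer to the normal limit, because interpolating at the half-step points removes the jumps responsible for the extra lattice term.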

The expansion of \(\breve{F}_n\) is

If t is an observed point, i.e., \(t=(s-n\bar{P}_n(\theta _n))/(\sqrt{n}\bar{P}^{\prime }_n(\theta _n)^{1/2})\), using the previous condition (i) and (A.11), we have

A further expansion in t of the last expression gives

which depends only on the Taylor expansion of \(\Phi (t+1/(2\sqrt{n}\bar{P}^{\prime }_n(\theta _n)^{1/2}))\) appearing in \(\tilde{F}_n(t)\), because the higher-order terms of the expansions of the other components of \(\tilde{F}_n(t)\) are absorbed in \(O(n^{-1})\). Note that the extra term characterizing the Edgeworth expansion of lattice random variables appears in (A.12).

Recalling the expression for t and that \(s=n\bar{x}_n=n\bar{P}_n(\hat{\theta })\), from (A.10) we have

Now, using (A.6), it is possible to show that the expansions of \(\Phi (-t)\), \(\phi (t)\) and \(t^2\) are \(\Phi (z\bar{P}^{\prime }_n(\hat{\theta })^{1/2})+ O(n^{-1})\), \(\phi (z\bar{P}^{\prime }_n(\hat{\theta })^{1/2})+ O(n^{-1})\) and \(z^2\bar{P}^{\prime }_n(\hat{\theta })+ O(n^{-1})\), respectively. Thus,

Consider now \(H^{\ell }_{n,s}\) and recall that \(H^{\ell }_{n,s}(\theta )=H^r_{n,s-1}(\theta )\). Thus, the expansion of the CD for \(\sqrt{n}(\theta -\hat{\theta }^-)\), where \(\hat{\theta }^-=\bar{P}_n^{-1}(\bar{x}_n-1/n)\), can be obtained directly from (A.13). To obtain the expansion of \(\sqrt{n}(\theta -\hat{\theta })\) (with \(\theta \) distributed according to \(H^{\ell }_{n,s}(\theta )\)), we can write

Observe that the argument of \(\Phi \) and \(\phi \)

can be seen as a function g of \(\bar{x}_n-1/n\), whose Taylor expansion around the point \(\bar{x}_n\) is

As a consequence, we have

Finally, substituting (A.16) and (A.17) into (A.14), we obtain

Applying Proposition 2 to (A.13) and (A.18), the result follows. \(\square \)
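A basic ingredient of the left-CD part of this proof is the relation between \(\hat{\theta}^-=\bar{P}_n^{-1}(\bar{x}_n-1/n)\) and \(\hat{\theta}\). Since the derivative of \(\bar{P}_n^{-1}\) at \(\bar{x}_n\) is \(1/\bar{P}^{\prime}_n(\hat{\theta})\), a first-order Taylor step gives \(\hat{\theta}^-\approx\hat{\theta}-1/(n\bar{P}^{\prime}_n(\hat{\theta}))\). The sketch below computes \(\hat{\theta}^-\) as the MLE for the score \(s-1\), which is exactly \(\bar{P}_n^{-1}(\bar{x}_n-1/n)\). It then checks that the remainder \(n(\hat{\theta}-\hat{\theta}^-)-1/\bar{P}^{\prime}_n(\hat{\theta})\) shrinks like \(n^{-1}\). The hypothetical items are replicated so that \(\bar{x}_n=0.6\) and \(\bar{P}_n\) stay fixed.

```python
import numpy as np

def pbar(theta, b):
    """Pbar_n(theta): average Rasch success probability."""
    return float(np.mean(1.0 / (1.0 + np.exp(-(theta - np.asarray(b, dtype=float))))))

def pbar_prime(theta, b):
    """Pbar'_n(theta) = mean of P_i(1 - P_i)."""
    p = 1.0 / (1.0 + np.exp(-(theta - np.asarray(b, dtype=float))))
    return float(np.mean(p * (1 - p)))

def mle(s, b, lo=-50.0, hi=50.0, iters=200):
    """Bisection for Pbar_n(theta) = s/n (finite only for 0 < s < n)."""
    target = s / len(b)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if pbar(mid, b) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def shift_error(s, b):
    """n * (theta_hat - theta_hat_minus) - 1 / Pbar'_n(theta_hat), expected to be O(1/n)."""
    n = len(b)
    th = mle(s, b)
    th_minus = mle(s - 1, b)          # Pbar_n^{-1}(xbar_n - 1/n)
    return n * (th - th_minus) - 1.0 / pbar_prime(th, b)

base = [-1.0, -0.5, 0.0, 0.5, 1.0]    # hypothetical difficulties
e100 = shift_error(60, base * 20)     # n = 100
e400 = shift_error(240, base * 80)    # n = 400
print(e100, e400, e400 / e100)
```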

Proof of Theorem 3

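First, we provide a stochastic expansion of the MLE \(\hat{\theta }\). Notice that the log likelihood of the Rasch model

\(\ell (\theta )=\sum _{i=1}^n \left[ x_i(\theta -b_i)-\log (1+\exp \{\theta -b_i\})\right] =s\theta -\sum _{i=1}^n x_ib_i-\sum _{i=1}^n \log (1+\exp \{\theta -b_i\}) \qquad \mathrm{(A.19)}\)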

satisfies the regularity conditions assumed by Severini (2000, Sect. 3.4), as can be seen by arguing as in his Example 3.8. Furthermore, from expression (A.19), it follows immediately that the derivatives of \(\ell (\theta )\) with respect to \(\theta \) of order two and higher do not depend on s, so that all the standardized log-likelihood derivatives are equal to 0, with the exception of \(Z_1=(\ell _\theta (\theta )- E_\theta (\ell _\theta (\theta )))/\sqrt{n}=\ell _\theta (\theta )/\sqrt{n}\), where \(\ell _\theta (\theta )\) denotes the first derivative of \(\ell (\theta )\). As a consequence, the stochastic expansion of the MLE \(\hat{\theta }\), obtained using formula (5.7) in Severini (2000), is

To provide an expansion of

we first develop a Taylor expansion of \(h(\hat{\theta })=\bar{P}^{\prime }_n(\hat{\theta })^{-1/2}\) around \(\hat{\theta }=\theta \), where the increment \(\hat{\theta }-\theta \) is taken from (A.20), and then compute the expectation with respect to \(Z_1\). More formally, because \(E_\theta (Z_1)=0\) and \(E_\theta (Z_1^2)=\bar{P}^{\prime }_n(\theta )\), we have

so that

Finally, substituting (A.22) in (A.21), the result given in (14) follows. \(\square \)
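Two facts used in this proof can be verified numerically. First, only the first derivative of \(\ell(\theta)\) depends on the data: \(\ell_\theta(\theta)=s-n\bar{P}_n(\theta)\), while \(\ell_{\theta\theta}(\theta)=-n\bar{P}^{\prime}_n(\theta)\) is the same for every score. Second, \(E_\theta(Z_1)=0\) and \(E_\theta(Z_1^2)=\bar{P}^{\prime}_n(\theta)\). The sketch writes the Rasch log likelihood in the standard form \(\sum_i[x_i(\theta-b_i)-\log(1+\exp\{\theta-b_i\})]\). It compares finite-difference derivatives for two response patterns with different scores. It computes the moments of \(Z_1=(S_n-n\bar{P}_n(\theta))/\sqrt{n}\) exactly from the score distribution. The difficulties, response patterns and \(\theta\) are hypothetical.

```python
import math
import numpy as np

def score_pmf(theta, b):
    """Poisson-binomial pmf of S_n under the Rasch model (O(n^2) recursion)."""
    pmf = np.zeros(len(b) + 1)
    pmf[0] = 1.0
    for m, bi in enumerate(b, start=1):
        p = 1.0 / (1.0 + np.exp(-(theta - bi)))
        pmf[1:m + 1] = pmf[1:m + 1] * (1 - p) + pmf[:m] * p
        pmf[0] *= 1 - p
    return pmf

def loglik(theta, x, b):
    """Rasch log likelihood: sum_i [x_i (theta - b_i) - log(1 + exp(theta - b_i))]."""
    x = np.asarray(x, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sum(x * (theta - b) - np.logaddexp(0.0, theta - b)))

def derivs(theta, x, b, h=1e-3):
    """Central finite differences for the first and second derivative in theta."""
    f0, fp, fm = loglik(theta, x, b), loglik(theta + h, x, b), loglik(theta - h, x, b)
    return (fp - fm) / (2 * h), (fp - 2 * f0 + fm) / h**2

b = np.linspace(-1.5, 1.5, 10)                       # hypothetical difficulties
n, theta = len(b), 0.4
p = 1.0 / (1.0 + np.exp(-(theta - b)))               # P_i(theta)
x_low = np.array([1, 1, 1, 0, 0, 0, 0, 0, 0, 0])     # score s = 3
x_high = np.array([1, 1, 1, 1, 1, 1, 0, 1, 0, 0])    # score s = 7
d1_low, d2_low = derivs(theta, x_low, b)
d1_high, d2_high = derivs(theta, x_high, b)

# Z_1 = l_theta(theta)/sqrt(n) = (S_n - n Pbar_n(theta))/sqrt(n), a function of the score only
pmf = score_pmf(theta, b)
z1 = (np.arange(n + 1) - p.sum()) / math.sqrt(n)
mean_z1 = float(np.dot(pmf, z1))
second_moment_z1 = float(np.dot(pmf, z1**2))
pbar_prime = float(np.mean(p * (1 - p)))
print(d2_low, d2_high, -n * pbar_prime)
print(mean_z1, second_moment_z1, pbar_prime)
```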

Appendix B

Further Figures for Example 1 ctd. and Example 2

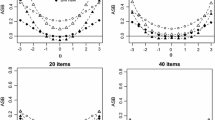

Example 1 ctd. Coverage (left) and expected length (right) of \(I_{CD}\) (solid black) and \(I_{WLE}\) (dashed blue), for \(\alpha =0.2\) (first row) and \(\alpha =0.05\) (second row).

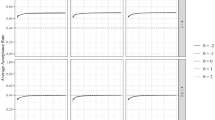

Example 2. First row: bias and MSE of CD-median (solid black), MLE (dashed blue) and WLE (dotted green), for \(\theta \in (-4,6)\). Second row: coverage and expected length of \(I_{CD}\) (solid black), \(I_W\) (dashed blue) and \(I_{WLE}\) (dotted green), for \(\alpha =0.05\).


About this article

Cite this article

Veronese, P., Melilli, E. Confidence Distribution for the Ability Parameter of the Rasch Model. Psychometrika 86, 131–166 (2021). https://doi.org/10.1007/s11336-021-09747-4


DOI: https://doi.org/10.1007/s11336-021-09747-4